Comments

@retoor it's because I haven't been doing the usual thing and going through others' posts to read and upvote good content. People are very mutual like that.

If you aren't engaging with what they're making (looking at it, commenting, keeping up with the people who make the content you like), most people won't give you the time of day.

Just is what it is -

Also there's a bit of "engrish" going on because I used voice-to-text and was half asleep when I posted it.

I was like "time to sleeeeep", and my brain all of a sudden was like "hey...hey..

Hey....you know what would be a cool idea...hey wake up i want to code....what if we did this thing....hey get up." Like a golden retriever with ADHD. -

@Wisecrack wow, your upvote ratio (votes to upvotes) is way better than mine, and I have the feeling I upvote anything.

How are things going? -

@retoor not bad actually. suffering from the usual savior complex but coding a bunch again. I struggle with watching the world burn and not being in a position to immediately do anything about it.

Got a tooth pulled without any painkillers because fuck getting addicted.

Loosely working on a couple projects I'd let fall by the wayside.

How about you on your end? -

@Wisecrack I'm fine, but pissed that I accidentally fucked my gh code streak of 40 days. Wanted to reach a year. Besides that, I'm working on a rewrite of my sudoku solver so it can remember more data to apply to the algorithm. Discovering the best algorithm could be interesting for you to work on too. For 99% of puzzles you can brute force, but in some situations that'll continue endlessly. My algorithm has brute force as a fallback, but it will do that from the best position and the most optimal starting digit, so I did a bit of damage control.
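For anyone following along, here is a minimal sketch of what "brute force from the best position" could look like. It is not retoor's actual solver: it assumes "best position" means the empty cell with the fewest legal digits (the usual minimum-remaining-values heuristic), and the names `candidates`, `best_cell`, and `solve` are made up for illustration. Digit ordering is left as plain ascending order.

```python
from typing import List, Optional, Tuple

Grid = List[List[int]]  # 9x9 grid, 0 marks an empty cell


def candidates(grid: Grid, r: int, c: int) -> List[int]:
    """Digits not already used in the row, column, or 3x3 box of (r, c)."""
    used = set(grid[r]) | {grid[i][c] for i in range(9)}
    br, bc = 3 * (r // 3), 3 * (c // 3)
    used |= {grid[i][j] for i in range(br, br + 3) for j in range(bc, bc + 3)}
    return [d for d in range(1, 10) if d not in used]


def best_cell(grid: Grid) -> Optional[Tuple[int, int, List[int]]]:
    """Pick the empty cell with the fewest candidates (assumed 'best position')."""
    best = None
    for r in range(9):
        for c in range(9):
            if grid[r][c] == 0:
                opts = candidates(grid, r, c)
                if best is None or len(opts) < len(best[2]):
                    best = (r, c, opts)
    return best


def solve(grid: Grid) -> bool:
    """Backtracking fallback: fills the grid in place, returns True on success."""
    cell = best_cell(grid)
    if cell is None:
        return True  # no empty cells left
    r, c, opts = cell
    for d in opts:
        grid[r][c] = d
        if solve(grid):
            return True
    grid[r][c] = 0  # undo before backtracking
    return False
```

Starting from the most constrained cell keeps the search tree narrow, which is usually what stops plain brute force from running away on hard puzzles.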

-

@retoor if you can characterize it as a formula you may be able to find closed form solutions that speed up the process significantly.

While I'm familiar enough to tell you this, I'm not familiar enough to actually do it myself otherwise I would.

So much room in sudoku for new and interesting solvers.

I wonder how a reinforcement learner would do?

Not really what you're working on but it'd be fascinating to see considering AlphaGo was built on the same premise. -

I haven't upvoted because I'm still digesting it.

Don't take it as a jab please. I love your posts, but my math skills are just so limited in comparison that I'm really slow in digesting it all XD -

@CoreFusionX no worries, I barely understand it myself.

The gist is that

* 'keys' in CRT are partial, and each part is distributed to a different user.

* We dogfood the data into the keys, so having the keys is equivalent to having the data.

* Critically you don't need ALL keys to decrypt or encrypt, as long as a majority of user keys are present when attempting encryption/decryption

* Two sets of users: Verified and unverified users

* Verification consists of being given part of a decryption key

* This way unverified users can post (and maintain a copy on their end), but they can't push malicious or unwanted content to the group, the kind of content that becomes permanent on other distributed platforms or requires a centralized server to manage or remove.

[continued] -

* Verification of contents consists of three stages: 1. Posting content encrypted with a partial key, 2. others confirming it is a partial key by decrypting it, 3. others adding their own partial key to it by 'liking' it, which syncs it to their own local storage.

* Each user or a master node automatically maintains a text index of all content available to the group, based on how many members of the group have it synced. The index itself is automatically synced between users, and automatic majority vote is used to resolve conflicts.

* Because unverified users can post but not encrypt, once enough users have synced/liked the content individually, sufficient data now exists between the encrypted data and the unencrypted data that the unverified user posted, for them to derive the key partials automatically from each individual user that synced or 'verified' it. Thus content being liked/shared/synced also serves as the verification process for a user.

[continued] -

* On the other hand, the index will automatically prune links to content that hasn't been shared/liked/synced by a sufficient number of other users. This pruning can happen on a periodic, preconfigured timer, or it can happen whenever enough dogfooding of group data into the shared keys mutates the keys enough that unverified users with sufficiently low ratings/syncs/shares essentially have whatever keys they *did* manage to derive go 'stale'.

* Thus verification is not an either/or status but a continuous value representing how similar the interests of the group are to the content and interests of any one member of the group, verified or otherwise. -

* The combination of a text-only index, automatic pruning, and distributed ledgers *with* consent (nothing gets copied or synced to local except the index w/o user permission or 'likes/shares') means malicious actors (very frequently spoilers, trolls, NGOs, and even government bots) posting terrorism, child pornography, or other shit designed to discredit/shut down/censor a platform are automatically pushed out and gradually prevented from poisoning the well.

* the entire system is therefore likewise resistant to manufactured reasons for shutdowns/censorship and outside actors taking over as mods.

* indexes need not be plain text readable, but could be done as json or another format for frontends to be extended/modded to interpret as they see fit.
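To make the index syncing and pruning bullets above a bit more concrete, here is a rough sketch, assuming each user's index is just a mapping from a content id to a text title. The `Index` type and `merge_indexes` function are hypothetical names, not part of any existing design. Conflicts are resolved by strict majority, and entries that fail to reach a majority are dropped, which doubles as a crude form of pruning.

```python
from collections import Counter
from typing import Dict, List

Index = Dict[str, str]  # content id -> title (text-only entry, assumed shape)


def merge_indexes(copies: List[Index]) -> Index:
    """Merge per-user index copies, keeping entries a strict majority agrees on."""
    quorum = len(copies) // 2 + 1
    merged: Index = {}
    for cid in sorted(set().union(*copies)):
        # Count how many users hold each version of this entry.
        votes = Counter(copy[cid] for copy in copies if cid in copy)
        title, count = votes.most_common(1)[0]
        if count >= quorum:
            merged[cid] = title  # majority agrees: keep it
        # otherwise the link is pruned from the merged index
    return merged
```

A real version would need timestamps or generations so that new content isn't pruned before anyone has had a chance to sync it.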

Related Rants

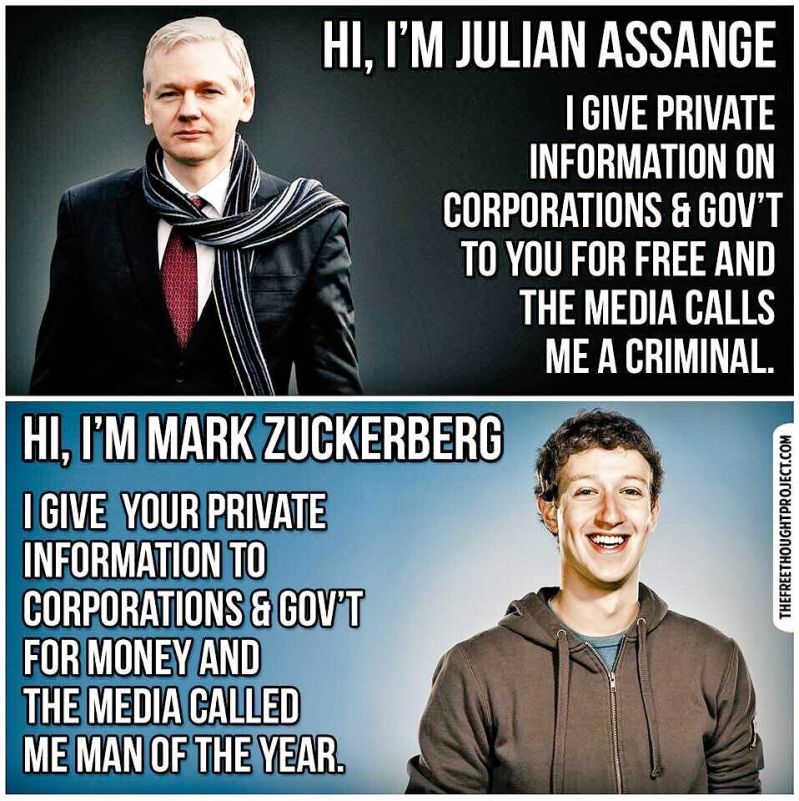

Find the difference...

Find the difference...

Chinese remainder theorem

So the idea is that a partial or zero-knowledge proof is used not just for encryption but also as a sort of distributed ledger or proof of membership. It is also used to add new members, with additional layers of distributed proofs on top, so that rollbacks can be performed on a network to remove members or revoke content.

Data is NOT automatically distributed throughout a network, rather sharing is the equivalent of replicating and syncing data to your instance.

Therefore if you don't like something on a network or think it's a liability (hate speech for the left, violent content for the right for example), the degree to which it is not shared is the degree to which it is censored.

By automatically not showing images posted by people you're not subscribed to or following, infiltrators or state-level actors who post things like calls to terrorism or csam to open platforms in order to justify shutting down platforms they don't control are cut off at the knees. There may also be a case for tools built on AI that automatically determine if something like a thumbnail should be censored, or give the user an NSFW warning before clicking a link that may appear innocuous but is actually malicious.

Server nodes may be virtual in that they are merely a graph of people connected in a group by each person in the group having a piece of a shared key.

Because the Chinese remainder theorem only requires a subset of all the info in the original key, it also acts as a voting mechanism to decide whether a piece of content is allowed to be synced to an entire group or remain permanently.
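As a rough illustration of the "only a subset of the key parts is needed" property, here is a sketch of Mignotte-style threshold sharing built on the Chinese remainder theorem. This is a swapped-in textbook scheme, not necessarily what the post has in mind, and the moduli, the threshold of 3 out of 5, and the function names are all assumptions. It is also not secure as written (fewer than the threshold number of shares still leak information), so treat it as a toy. Requires Python 3.8+ for `math.prod` and the modular-inverse form of `pow`.

```python
from math import prod
from typing import List, Tuple

Share = Tuple[int, int]  # (residue, modulus)

MODULI = [11, 13, 17, 19, 23]  # hypothetical: pairwise coprime, one per user
THRESHOLD = 3                  # hypothetical: a majority of the 5 users


def secret_range(moduli: List[int], k: int) -> Tuple[int, int]:
    """The secret must lie strictly between these bounds for k-of-n to work."""
    ms = sorted(moduli)
    low = prod(ms[-(k - 1):]) if k > 1 else 1  # product of the k-1 largest
    high = prod(ms[:k])                        # product of the k smallest
    return low, high


def split(secret: int, moduli: List[int], k: int) -> List[Share]:
    """Each user's partial key is the secret reduced modulo their modulus."""
    low, high = secret_range(moduli, k)
    if not low < secret < high:
        raise ValueError(f"secret must be in ({low}, {high})")
    return [(secret % m, m) for m in moduli]


def combine(shares: List[Share]) -> int:
    """Chinese remainder theorem: the unique x below the product of the moduli."""
    x, n = 0, 1
    for r, m in shares:
        # Find t such that x + n*t is congruent to r modulo m.
        t = ((r - x) * pow(n, -1, m)) % m
        x += n * t
        n *= m
    return x
```

With these numbers the secret has to sit between 437 and 2431. Any three shares rebuild it exactly, while any two shares produce a value below 437, so a minority of users "voting" together cannot recover the key.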

Data that hasn't been verified yet may go into a cache for a given cluster of users who are mutually subscribed or following in a small-world graph, but it doesn't get shared out of that subgraph, and it may expire if not enough users hit a like, retain, share, or "verify" button.
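A minimal sketch of that cache behaviour, assuming each cached item tracks when it was posted and which users have hit like/retain/share/"verify" on it. The `CachedItem` class, the `sweep` function, and the `ttl` and `needed` parameters are all hypothetical.

```python
from dataclasses import dataclass, field
from typing import Dict, Set, Tuple


@dataclass
class CachedItem:
    posted_at: float                                   # timestamp in seconds
    verifiers: Set[str] = field(default_factory=set)   # users who liked/retained it


def sweep(
    cache: Dict[str, CachedItem], now: float, ttl: float, needed: int
) -> Tuple[Dict[str, CachedItem], Dict[str, CachedItem]]:
    """Split the cache into (promoted, still pending); everything else expires."""
    promoted: Dict[str, CachedItem] = {}
    pending: Dict[str, CachedItem] = {}
    for cid, item in cache.items():
        if len(item.verifiers) >= needed:
            promoted[cid] = item   # enough users verified it: sync to the group
        elif now - item.posted_at < ttl:
            pending[cid] = item    # still inside its window, stays in the subgraph cache
        # otherwise it expired without enough verification and is dropped
    return promoted, pending
```

Nothing here involves a ranking algorithm: an item lives or dies purely on how many members chose to keep it.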

The algorithm here then is no algorithm at all but merely the natural association process between people and their likes and dislikes directly affecting the outcome of what they see via that process of association to begin with.

We can even go so far as to dogfood content that's already been synced to a graph into evolutions of the existing key, such that the retention of new generations of key, each dependent on the previous key, also acts as a store of the data that's been synced to the members of the node.
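One way to picture key generations that depend on both the previous key and the synced content is a simple hash chain, sketched below. This is an assumption about mechanism, not something the post specifies, and it is weaker than what the post describes: a hash commits to the content but does not let you recover it, so it shows the dependency between generations rather than the "store of the data" part. The names `evolve` and `replay` are hypothetical.

```python
import hashlib
from typing import Iterable


def evolve(key: bytes, content: bytes) -> bytes:
    """Next key generation, derived from the previous key and one synced item."""
    digest = hashlib.sha256(content).digest()
    return hashlib.sha256(key + digest).digest()


def replay(genesis: bytes, contents: Iterable[bytes]) -> bytes:
    """Derive the current key by folding every synced item into the chain, in order."""
    key = genesis
    for content in contents:
        key = evolve(key, content)
    return key
```

A member who holds an old generation but missed some of the content synced since then cannot compute the current key, which is one way keys could go "stale" for users who aren't keeping up with the group.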

Therefore members that continually post content that doesn't get verified slowly fall out of the node, such that eventually their content becomes merely temporary in the caches or index of the node members, driving index and node subgraph membership in an organic and natural process based purely on affiliation and identification.

Here I've sort of butchered the idea of the Chinese remainder theorem and shoehorned it into the idea of zero-knowledge proofs, but you can see where I'm going with this if you squint at the idea mentally and look at it at just the right angle.

The big idea was to remove the influence of centralized algorithms to begin with, and implement mechanisms such that third-party organizations that exist to discredit or shut down small platforms are hindered by the design of the platform itself.

I think if you look over the ideas here you'll see that's what the general design thrust achieves or could achieve if implemented into a platform.

The addition of indexes in a node or "server" or "room" (being a set of users mutually subscribed to a particular tag or topic or each other) would also be useful. The index would cover the text, audio, video, and other media, including user posts, that are available on the given node, with entries being titled but blind links (no pictures/media, or only media verified as safe through an automatic tool).

random

open platforms

social media

rtd

distributed ledgers