
Comments


Isn't xor a single instruction in x86?

Why are you using a multi-layer model to guess at the answer?

@lungdart And why build robots? You can buy Furbies in the store.

@Wisecrack tsoding shows exactly how to train one neuron to be a 99.9%-sure boolean 😂 He also said that you can't do xor with it. His video is a great resource, the only real basics video I've seen. Probably too easy for you, but he does everything from scratch, which can still be interesting.
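The "one neuron can't do xor" point is the classic linear-separability result. A minimal sketch (not taken from the video) using the textbook perceptron learning rule: it reaches zero errors on AND within a few epochs, but can never do so on xor, because no single line separates xor's 1s from its 0s. `train_perceptron` and its `epochs`/`lr` defaults are illustrative choices.

```python
from itertools import product


def train_perceptron(target, epochs=100, lr=1.0):
    """Classic perceptron learning rule on a 2-input truth table.

    Returns (weights, bias, converged); converged is True only if an
    entire epoch passed with every input classified correctly.
    """
    w = [0.0, 0.0]
    bias = 0.0
    for _ in range(epochs):
        errors = 0
        for x1, x2 in product((0, 1), repeat=2):
            pred = 1 if w[0] * x1 + w[1] * x2 + bias > 0 else 0
            err = target(x1, x2) - pred
            if err:
                errors += 1
                # Nudge the decision line toward the misclassified point.
                w[0] += lr * err * x1
                w[1] += lr * err * x2
                bias += lr * err
        if errors == 0:
            return w, bias, True
    return w, bias, False


and_w, and_b, and_ok = train_perceptron(lambda a, b: a & b)
_, _, xor_ok = train_perceptron(lambda a, b: a ^ b)
print(and_ok, xor_ok)  # True False
```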

@atheist He's probably figuring it out by himself. That's the fun part, I guess. Same as when I spent a long time writing a regex parser myself. Later, when I actually looked at the "official" way to do it, I discovered that almost all resources are fake and could never become a full-featured regex validator like the well-known ones, so I had actually achieved something. The journey was amazing. It's so amazing that the story of the devs behind it is told in an essay in the book "Beautiful Code". Regex was invented in the '50s and patented in '71. While a decent parser is around a thousand lines, one dev got so fed up with complaints about code length that he wrote the most basic regex parser ever, handling the most common operations in 30 lines. It's beautiful indeed. 80% of the work really is in 20% of the parser, maybe even less. A half parser is not a parser. I'm writing a general-purpose language too, with some advice from lorentz. My previous one got stuck on garbage collection before the source got lost.
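The essay referred to here is presumably Brian Kernighan's chapter on a regular expression matcher in *Beautiful Code*, which presents Rob Pike's roughly 30-line C matcher. Below is a Python port of that design, offered as a sketch: it supports only literal characters, `.`, `^`, `$` and `*`, and the function names mirror Pike's `match`/`matchhere`/`matchstar`.

```python
def match(regexp: str, text: str) -> bool:
    """Search for regexp anywhere in text."""
    if regexp.startswith("^"):
        return match_here(regexp[1:], text)
    # Try every starting position, including the empty suffix.
    return any(match_here(regexp, text[i:]) for i in range(len(text) + 1))


def match_here(regexp: str, text: str) -> bool:
    """Match regexp at the beginning of text."""
    if not regexp:
        return True
    if len(regexp) >= 2 and regexp[1] == "*":
        return match_star(regexp[0], regexp[2:], text)
    if regexp == "$":
        return text == ""
    if text and (regexp[0] == "." or regexp[0] == text[0]):
        return match_here(regexp[1:], text[1:])
    return False


def match_star(c: str, regexp: str, text: str) -> bool:
    """Match c* followed by regexp, trying the shortest run of c first."""
    i = 0
    while True:
        if match_here(regexp, text[i:]):
            return True
        if i < len(text) and (text[i] == c or c == "."):
            i += 1
        else:
            return False


print(match("ab*c", "xabbbcx"), match("^ab$", "abc"))  # True False
```

Recursion keeps each operator to a few lines; the cost is that pathological patterns can backtrack heavily, which the full-featured engines handle differently.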


@atheist Ah. But there is something special about xor; tsoding also mentioned that his neuron couldn't learn it. I don't know if that was related to having only one layer. I don't remember AI/ML well; it's too complex for my minor interest. My lack of interest comes from not having a goal to build with it. I would really like to make a bot that behaves like me and let it talk to the people around me through communication apps, but I haven't found a decent way to do that. There are social chat models, but they're quite limited and their assistant role is baked in. I can't bake in a retoor role. Do you know any way to achieve that? Or is making the bot social the hardest part? Is that why Replika, which is quite good, stays on top? Do they actually do something special? A bot with empathy and feelings, quite nicely simulated. So yes, I want a self-hosted Replika of myself.


@lungdart It's a proof of concept. It's not multi-layer as far as hidden layers go: it has a single hidden layer, and that's the goal.

I started out trying to answer a few different questions:

1. Can we train a network to perform complex functions under circumstances traditionally considered impossible? The answer, it turns out, is yes (using multiple biases per node).

2. Can we train a network without backprop? In the single-hidden-layer example, it turns out the answer is yes. The jury is still out on whether it works across multiple hidden layers.

3. Can we train a model to perform even more complex functions in a hidden layer? That's what I'm working on now.

@atheist The hidden layer in the model has one node instead of two. Giving each node multiple biases instead of one (essentially acting as free parameters) and using a nonstandard alternative to backprop makes xor separable even with a single hidden layer containing only one node.
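The code isn't published, so this is only one hypothetical reading of "multiple biases per node", not the author's model: a single node computes one weighted sum and compares it against two biases (thresholds) `b1` and `b2`, firing only when `b1 < sum <= b2`. That non-monotonic response is enough for xor. The parameters are found by exhaustive grid search, the crudest gradient-free substitute for backprop and not the author's training method; `two_bias_node`, `grid_search` and the parameter grids are all assumptions for illustration.

```python
from itertools import product

# Every input of a 2-input binary function.
INPUTS = list(product((0, 1), repeat=2))


def step(z):
    return 1 if z > 0 else 0


def two_bias_node(x, w, b1, b2):
    # Hypothetical single node: one weighted sum, two biases.
    # Outputs 1 only when b1 < s <= b2 (a "band" instead of a half-plane).
    s = w[0] * x[0] + w[1] * x[1]
    return step(s - b1) - step(s - b2)


def grid_search(target, weights=(-1, 0, 1), biases=(-1.5, -0.5, 0.5, 1.5)):
    # Gradient-free "training": try every parameter combination on the grid
    # and return the first one that reproduces the target truth table.
    for w1, w2, b1, b2 in product(weights, weights, biases, biases):
        if all(two_bias_node(x, (w1, w2), b1, b2) == target(*x) for x in INPUTS):
            return (w1, w2), b1, b2
    return None


params = grid_search(lambda a, b: a ^ b)
print(params)

# Hand-picked parameters also work: sums 0, 1, 1, 2 fall in the band (0.5, 1.5] only for 1.
print([two_bias_node(x, (1, 1), 0.5, 1.5) for x in INPUTS])  # [0, 1, 1, 0]
```

A node with a single bias can only split the inputs with one line, which is exactly what fails on xor; the second bias is what turns the half-plane into a band.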


@atheist No, I haven't published the code yet.

I'm focused on general improvements, training the model to perform more complex functions in the hidden layer, and handling inputs that aren't just binary.

After that, I want to train it to do something non-trivial, clean up the code, write a proper article (maybe on Substack or something), and seek a proper venue to present it and the backprop alternative.

I'm seriously tired of toiling in obscurity, broke as a joke. I'm not after riches, just enough to continue the research. It's hard to hold down a 9-5, find time for deep work, and keep a family. Pick two, as they say.

I spent a lot of time trying to find others willing to assist, but mostly concluded I'd have to either go work at a research lab (fat chance without a background and a degree) or get to a place where I could pay others to work with me.

There's a lot of low-hanging fruit right now, more than I can handle by myself, frankly.

Related Rants

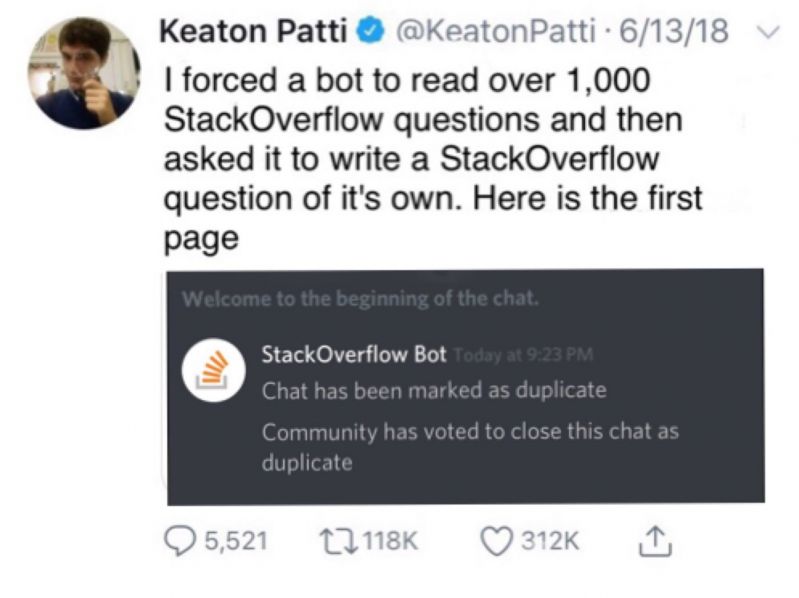

Machine Learning messed up!

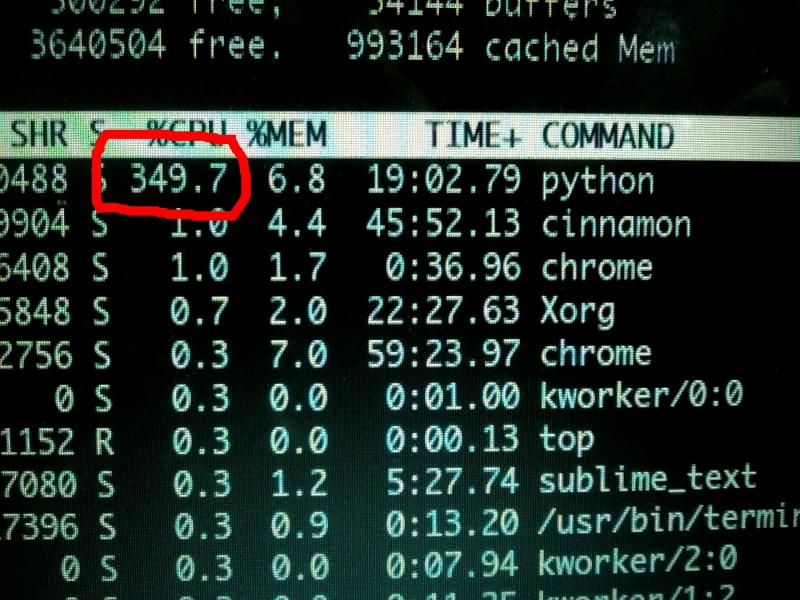

Machine Learning messed up! When your CPU is motivated and gives more than his 100%

When your CPU is motivated and gives more than his 100% What is machine learning?

What is machine learning?

research 10.09.2024

I successfully wrote a model verifier for xor. So now I know it is in fact working, and the thing is doing what was previously deemed impossible: calculating xor with a single hidden layer.

I also made it generalized, so I can verify it for any type of binary function.
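Because a binary function of n inputs has only 2^n input combinations, a generalized verifier can simply enumerate the whole truth table and compare. A sketch of that idea; the model interface isn't published, so `verify` and the stand-in candidate models are hypothetical.

```python
from itertools import product
from typing import Callable, List, Tuple


def verify(model: Callable[..., int], reference: Callable[..., int],
           n_inputs: int) -> List[Tuple[int, ...]]:
    """Compare model with reference on every binary input of length n_inputs.

    Returns the input tuples where they disagree; an empty list means the
    model computes exactly the reference function.
    """
    failures = []
    for x in product((0, 1), repeat=n_inputs):
        if model(*x) != reference(*x):
            failures.append(x)
    return failures


# Hypothetical stand-ins for trained models.
xor_candidate = lambda a, b: (a + b) % 2
or_candidate = lambda a, b: int(a + b > 0)

print(verify(xor_candidate, lambda a, b: a ^ b, 2))  # []
print(verify(or_candidate, lambda a, b: a ^ b, 2))   # [(1, 1)]
```

Exhaustive checking is a proof for binary inputs, but the table doubles with every input bit, so it stays practical only for small n.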

The next step would be to see whether I can either train for combinations of logical operators (or+xor, and+not, or+not, xor+and+..., etc.) or chain the verifiers.

If I can, it means I can train models that perform combinations of logical operations with only one hidden layer.
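One reading of chaining, sketched with hypothetical stand-in models: if one model is verified to equal or, and another to equal xor, on every binary input, then feeding the first one's output into the second must compute `(a or b) xor c`, and the three-input truth table confirms it. Chaining like this places two units in sequence, so it checks the compound function rather than keeping it inside one hidden layer; the same table is also the target a single compound model would have to match.

```python
from itertools import product


def truth_table(fn, n):
    # Full truth table of an n-input binary function, in input order.
    return [fn(*x) for x in product((0, 1), repeat=n)]


# Hypothetical stand-ins for two separately verified single-operation models.
or_model = lambda a, b: int(a + b > 0)
xor_model = lambda a, b: (a + b) % 2

# Chain them: the or model's output feeds the xor model.
chained = lambda a, b, c: xor_model(or_model(a, b), c)

# Reference compound operator (or+xor).
reference = lambda a, b, c: (a | b) ^ c

print(truth_table(chained, 3) == truth_table(reference, 3))  # True
```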

I also wrote a version that can sum a binary vector every time, but I still have to write a verification table for that.
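The verification table for the binary-vector sum can be generated rather than written by hand: enumerate all 2^n vectors and record the number of ones as the expected output. A sketch; `sum_verification_table` and `check_sum_model` are hypothetical names, and the candidate model is a stand-in.

```python
from itertools import product


def sum_verification_table(n):
    """Map every n-bit binary vector to its expected sum (the number of ones)."""
    return {bits: sum(bits) for bits in product((0, 1), repeat=n)}


def check_sum_model(model, n):
    # Return the vectors the candidate model gets wrong (empty list = verified).
    return [bits for bits, expected in sum_verification_table(n).items()
            if model(bits) != expected]


table = sum_verification_table(3)
print(len(table))        # 8
print(table[(1, 0, 1)])  # 2
print(check_sum_model(lambda v: sum(v), 3))  # []
```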

If chaining verifiers or training a model to perform compound functions of multiple operations is possible, I want to see about writing models that can do neighborhood max pooling themselves in the hidden layer, or other nontrivial operations.
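Training a model to do neighborhood max pooling first needs a reference to verify against. For binary inputs the target is familiar: the max of a window is the or of its elements, so max pooling is a bank of ors over overlapping windows. A 1-D sketch; the function names, `window` and `stride` are hypothetical.

```python
from itertools import product


def max_pool_1d(x, window, stride):
    # Reference neighborhood max pooling over a 1-D vector.
    return tuple(max(x[i:i + window]) for i in range(0, len(x) - window + 1, stride))


def or_pool_1d(x, window, stride):
    # For 0/1 inputs, the max of a window is just the or of its elements.
    return tuple(int(any(x[i:i + window])) for i in range(0, len(x) - window + 1, stride))


# Exhaustive check over all 4-bit vectors: on binary data the two agree.
agree = all(max_pool_1d(x, 2, 1) == or_pool_1d(x, 2, 1)
            for x in product((0, 1), repeat=4))
print(agree)                            # True
print(max_pool_1d((0, 1, 0, 0), 2, 1))  # (1, 1, 0)
```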

Lastly, I need to adapt the algorithm to work with values other than binary, which means divorcing the clamp function from the entire system. In fact, I want to turn the clamp and activation into a type of bias, so a network that can learn to do binary operations can also automatically learn non-binary functions.

random

machine learning

ml