Comments

Hazarth 9181 1y
But a hash function doesn't really capture latent properties of the input. Hash functions are highly destructive, with high-entropy output. The whole point of an autoencoder is the compression of the entire feature space, including latent properties.

A token dictionary is an embedding layer. It captures no features; it only acts as a representation (except maybe position, if you use positional embedding). You can't compare those two at all. The only difference is using a trained embedding function, which very likely started as an autoencoder.

The real question is what the purpose of each layer is in any given architecture. You can't really do much with an autoencoder when working token-wise: the information space of one token is so slim that it would be a waste. Using it on groups of tokens might make much more sense, because it will end up being much more space-efficient (and thus faster) than making a dict for groups of tokens, whose size can approach infinity quickly.
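To put a rough number on how quickly a dictionary over token groups grows, here is a minimal sketch. The vocabulary size of 50,000 is an assumed figure for illustration only; it does not come from the thread.

```python
# Hypothetical vocabulary size, chosen only for illustration.
VOCAB_SIZE = 50_000


def group_dictionary_size(vocab_size: int, group_len: int) -> int:
    """Number of distinct ordered groups of `group_len` tokens."""
    return vocab_size ** group_len


# A dictionary keyed on token groups needs one entry per possible group,
# so its worst-case size grows exponentially with the group length.
for n in range(1, 5):
    print(f"groups of {n}: {group_dictionary_size(VOCAB_SIZE, n):,} possible entries")
```

A learned encoder over groups of tokens has a fixed parameter count instead, which is the space argument being made here.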

@Hazarth Assuming you hash individual tokens before training, and the hash is only dependent on plaintext, and you train on input-output pairs, the training becomes a projection between representations. You lose nothing to entropy as a result, no?

The encoder encodes input hashes; the decoder trains to decode them into the respective plaintext.

Not trivial, granted, unless you have a non-standard training regime.

Hazarth 9181 1y
@Wisecrack I can't claim that I tried it, so you'd have to test it out. But I see a couple of potential issues:

1. If you're talking about token generation, this has one huge downside: hash functions aren't position-encoded, so you'd have to do that as an extra step, which would lose you the hashable property of the input anyway.

2. Hash functions can lead to collisions, and that happening in even one token would be a problem.

3. Not all words are created equal, and trainable embeddings take that into consideration. Stop words might be clustered close together after training in a trained embedding such as word2vec; that doesn't happen if you use a dictionary or a hash function.

4. Hash functions are not easily scalable. If you want to tune the embedding, you largely have to move within the sizes of pre-made hash functions or implement your own, for probably very little benefit.

5. Hashes are not decimals: a 256-bit hash != tensor(256).
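Points 2 and 5 above can be sketched with the standard library. This is only an illustration under assumptions: SHA-256 stands in for whatever hash would actually be used, and the helper names (`sha256_bits`, `bucket`, `count_collisions`) are hypothetical.

```python
import hashlib


def sha256_bits(token: str) -> list[float]:
    """Unpack a SHA-256 digest into 256 floats (0.0 or 1.0), one per bit.

    A digest is 32 raw bytes, not a ready-made 256-dimensional vector;
    some explicit conversion like this is needed before a network can use it.
    """
    digest = hashlib.sha256(token.encode("utf-8")).digest()
    return [float((byte >> shift) & 1) for byte in digest for shift in range(7, -1, -1)]


def bucket(token: str, num_buckets: int) -> int:
    """Map a token to one of `num_buckets` slots by reducing its digest."""
    digest = hashlib.sha256(token.encode("utf-8")).digest()
    return int.from_bytes(digest, "big") % num_buckets


def count_collisions(tokens: list[str], num_buckets: int) -> int:
    """Count tokens that land in a bucket already taken by an earlier token."""
    seen: set[int] = set()
    collisions = 0
    for tok in tokens:
        b = bucket(tok, num_buckets)
        if b in seen:
            collisions += 1
        else:
            seen.add(b)
    return collisions


tokens = [f"token{i}" for i in range(1000)]
print(len(sha256_bits("hello")))     # 256 values, each 0.0 or 1.0
print(count_collisions(tokens, 64))  # at least 936: 1000 tokens cannot fit in 64 buckets
```

Shrinking the hash output to a convenient size is what makes collisions likely; keeping the output large avoids them but leaves a wide, structureless input.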

Hazarth 9181 1y
Around the time of the "Attention Is All You Need" paper, it was shown that you do want position-aware embeddings, so static dictionaries are no longer really used for sequence inputs/outputs...

And even if you were going to work with a more suitable network, like a recurrent one, you'd be more limited using a hash dictionary than a tunable, trainable embedding layer. You could probably still achieve some sort of symmetry without too much data being lost to entropy (given you choose a sufficiently large hash network), but I think it'd end up being inefficient. You'd also have to precompute the dictionary every time you change the source hash function, since you don't want to be hashing each token online every time. That's lost cycles.

Hazarth 9181 1y
It could be an interesting experiment. But autoencoders do come with a bunch of benefits. Word2Vec was SotA for a long time, and it's essentially an autoencoder, though you could call it a semantic autoencoder rather than a traditional one. That's something that hashes or static dictionaries will never give you, because hashes don't have any relation to one another. They are supposed to increase entropy to a certain level. So your network would only be able to use a hash literally as a random embedding without any latent information, while a trained autoencoder actually contains those latent bits of information that allow the network to relate one token to others.
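The "hashes don't have any relation" point can be checked with a small experiment. This is a sketch under assumptions: SHA-256 is used as a representative hash, and the synthetic word pairs are made up for the test. For a well-mixed hash, the number of differing bits between two digests should hover around half the digest width (about 128 of 256) whether or not the inputs look alike.

```python
import hashlib


def sha256_int(token: str) -> int:
    """SHA-256 digest of a token, as one big integer."""
    return int.from_bytes(hashlib.sha256(token.encode("utf-8")).digest(), "big")


def hamming_distance(a: str, b: str) -> int:
    """Number of differing bits between the SHA-256 digests of two tokens."""
    return bin(sha256_int(a) ^ sha256_int(b)).count("1")


def mean_distance(pairs: list[tuple[str, str]]) -> float:
    """Average bit distance over a list of token pairs."""
    return sum(hamming_distance(a, b) for a, b in pairs) / len(pairs)


# Synthetic pairs: near-identical spellings vs. unrelated strings.
similar = [(f"word{i}", f"word{i}s") for i in range(500)]
unrelated = [(f"word{i}", f"other{i * 7 + 3}") for i in range(500)]

print(f"similar spellings: {mean_distance(similar):.1f} bits differ on average")
print(f"unrelated strings: {mean_distance(unrelated):.1f} bits differ on average")
```

Both averages should come out close to each other, which is the sense in which a hash acts as a random embedding: closeness of inputs tells you nothing about closeness of outputs.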

@Hazarth I think we're actually just talking past each other here.

The problem I encountered was: how do you train a standard NN on text without storing a dictionary beforehand?

That's where I was coming from.

A custom hash function that only outputs numbers to train on was the first idea that came up.

The current network has N additional biases per node in a hidden layer.

It was trivial to find functions like XOR with, say, 4 biases per node. The problem was when you go to run the model: how do you decide which bias to select for a known (trained) input to produce the correct output?

So I could train a model but couldn't use it.

I had to do some additional work to get something I think will work, but essentially we use a dual-decoder regime that dogfoods the input.

I'm building the whiteboard version today to see if it works.

Related Rants

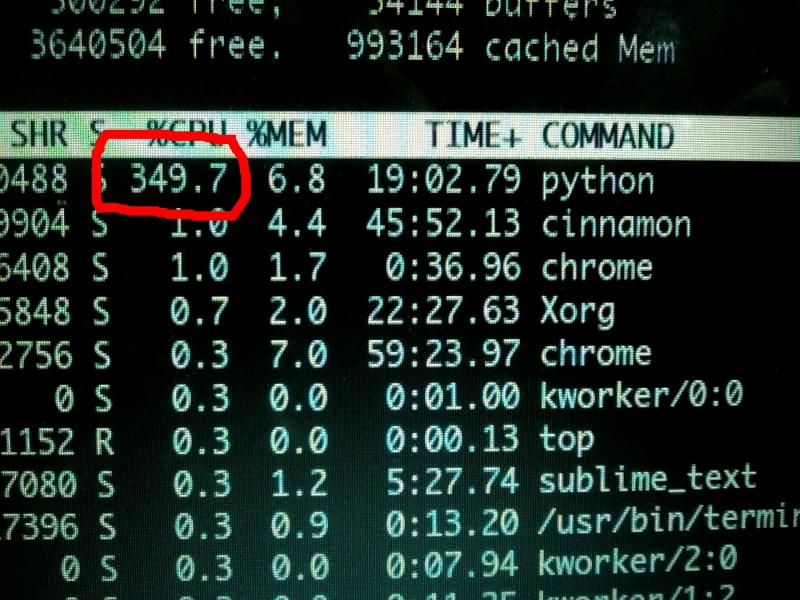

Machine Learning messed up!

Machine Learning messed up! When your CPU is motivated and gives more than his 100%

When your CPU is motivated and gives more than his 100% What is machine learning?

What is machine learning?

So I realized if done correctly, an autoencoder is really just a bootleg token dictionary.

If we take some input and pass it through a custom hash function that strictly produces digit-only hashes, then we can train a network on those hashes, store the weights and biases, and then train a decoder on top of that.
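The custom hash function itself isn't shown, so the following is only a guess at what a digits-only hash could look like: a SHA-256 digest reduced to a fixed number of decimal digits, then scaled to floats for a network input. The names `digit_hash` and `digits_to_features`, and the 12-digit width, are assumptions.

```python
import hashlib


def digit_hash(text: str, num_digits: int = 12) -> str:
    """Hash `text` to a fixed-width string containing only decimal digits."""
    digest = hashlib.sha256(text.encode("utf-8")).digest()
    value = int.from_bytes(digest, "big") % (10 ** num_digits)
    # Left-pad with zeros so every hash has the same length.
    return str(value).zfill(num_digits)


def digits_to_features(digits: str) -> list[float]:
    """Scale each digit to [0, 1] so it can be fed to a network as a float."""
    return [int(d) / 9.0 for d in digits]


code = digit_hash("hello")
print(code)
print(digits_to_features(code))
```

The mapping is deterministic and depends only on the plaintext, so no dictionary has to be stored; the trade-off is that truncating to a few digits makes collisions possible.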

Using random dropout on the input-output pairs, we can distill the weights and biases to find subgraphs that further condense this embedding.

Why have a token dictionary at all?

random

machine learning

neural networks