Comments


typosaurus (10598) · 358d: Great, I've been on his page before, but for some reason I never tried it. Don't remember why. I will try your GitHub thingy tho, probably tomorrow. I'm currently playing with Ollama models. The 0.5B and 1.5B ones are quite useful. I need those since my VPS can't handle anything bigger.

trekhleb (210) · 358d: @retoor So far I've trained a GPT with ~80M parameters on a single GPU in the browser. Pretty heavy. It would be interesting to see how a 1.5B-parameter model behaves...
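The jump from ~80M to 1.5B parameters is easier to appreciate with a back-of-the-envelope estimate. This sketch assumes a GPT-2-style model (about 12·d² weights per transformer block plus a tied token embedding, biases and LayerNorms ignored) and fp32 training with Adam at 16 bytes per parameter; activations are not counted. The function names are hypothetical and the figures are rough, not measurements from the project.

```typescript
// Approximate GPT-2-style parameter count (an assumption, not an exact formula):
// each block has ~4*d^2 attention weights + ~8*d^2 MLP weights = 12*d^2,
// plus one token-embedding matrix (tied with the output head).
function approxGptParams(nLayer: number, dModel: number, vocabSize: number): number {
  return 12 * nLayer * dModel * dModel + vocabSize * dModel;
}

// Assumed fp32 + Adam training footprint: weights, gradients and two Adam
// moments = 4 values * 4 bytes = 16 bytes per parameter. Activations excluded.
function approxAdamTrainingBytes(params: number): number {
  return params * 16;
}

const gb = (bytes: number): string => (bytes / 1e9).toFixed(2) + " GB";

// GPT-2 XL configuration: 48 layers, d_model 1600, vocab 50257.
const xl = approxGptParams(48, 1600, 50257);
console.log(xl, gb(approxAdamTrainingBytes(xl))); // 1554971200 24.88 GB
console.log(gb(approxAdamTrainingBytes(80e6)));   // 1.28 GB
```

Activations add to these numbers, so treat them as a lower bound on training memory.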

typosaurus (10598) · 358d: @trekhleb that's the thing, I only have a CPU, so I can prolly forget about training myself. It will never get anywhere near 1.5B?

typosaurus (10598) · 358d: I've already been training an RNN on a spare laptop for four days. It's training on eight books and can already produce some sentences. The method I use has its limits: if you train too long, it makes up its own language; it gets too creative. This RNN was also written from scratch by someone else. It's very small and written in C, but a Python version exists too.
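For readers unfamiliar with this kind of model, a vanilla character-level RNN keeps a hidden state, updates it once per character, and samples the next character from a softmax over the vocabulary. The comment doesn't name the project, so this is a generic, dependency-free sketch, not that C program. The temperature parameter is an added assumption, shown as one common knob for how "creative" the samples look; all names here are hypothetical.

```typescript
type Matrix = number[][];
type Vector = number[];

// Matrix-vector product.
function matVec(m: Matrix, v: Vector): Vector {
  return m.map((row) => row.reduce((sum, w, j) => sum + w * v[j], 0));
}

// Element-wise vector addition.
function add(a: Vector, b: Vector): Vector {
  return a.map((x, i) => x + b[i]);
}

interface RnnParams {
  Wxh: Matrix; // input -> hidden
  Whh: Matrix; // hidden -> hidden
  Why: Matrix; // hidden -> output logits
  bh: Vector;
  by: Vector;
}

// One time step: h_t = tanh(Wxh*x_t + Whh*h_{t-1} + bh), logits = Why*h_t + by.
// x is a one-hot encoding of the current character.
function rnnStep(p: RnnParams, x: Vector, h: Vector): { h: Vector; logits: Vector } {
  const hNew = add(add(matVec(p.Wxh, x), matVec(p.Whh, h)), p.bh).map(Math.tanh);
  const logits = add(matVec(p.Why, hNew), p.by);
  return { h: hNew, logits };
}

// Softmax with temperature: T < 1 sharpens the distribution (safer text),
// T > 1 flattens it (more unusual, made-up words).
function softmax(logits: Vector, temperature = 1): Vector {
  const scaled = logits.map((z) => z / temperature);
  const max = Math.max(...scaled); // subtract max for numerical stability
  const exps = scaled.map((z) => Math.exp(z - max));
  const total = exps.reduce((a, b) => a + b, 0);
  return exps.map((e) => e / total);
}

// Draw an index from a probability vector; rand is injectable for reproducibility.
function sample(probs: Vector, rand: () => number = Math.random): number {
  let r = rand();
  for (let i = 0; i < probs.length; i++) {
    r -= probs[i];
    if (r < 0) return i;
  }
  return probs.length - 1; // guard against floating-point round-off
}
```

Generating text is a loop: feed the sampled character back in as the next one-hot input and carry the hidden state forward.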

trekhleb (210) · 357d: @retoor I'm not sure; it probably depends on the model configuration/implementation and the hardware. But in the browser, for that "homemade GPT", I see training on WebGPU being roughly 100x to 1000x faster than on the CPU.

typosaurus (10598) · 357d: @trekhleb is there anything specific you want to make? I'm trying to make a chatbot clone of myself. It's working out nicely with a 1B model. It's called retoor1b :D I chose a weaker model for three reasons:

- hardware limitations (laptop / VPS; the VPS is faster)

- easier to train than a model that already has a 'full personality'

- it only has to do creative question answering, not solve complex problems. It can code quite well tho; maybe I'll remove that in the future

Related Rants

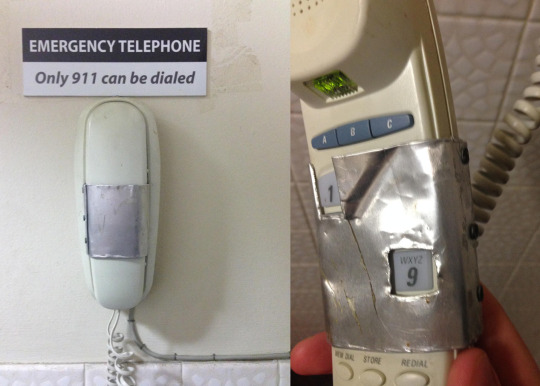

What only relying on JavaScript for HTML form input validation looks like

What only relying on JavaScript for HTML form input validation looks like Found something true as 1 == 1

Found something true as 1 == 1

For learning purposes, I made a minimal TensorFlow.js re-implementation of Karpathy's minGPT (Generative Pre-trained Transformer). One nice side effect of having the ~300-line model in a .ts file is that you can train it on a GPU in the browser.

https://github.com/trekhleb/...

The Python/PyTorch version still seems much more elegant and easier to read, though...
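The heart of a minGPT-style model is causal (masked) self-attention: each position mixes in information only from itself and earlier positions. Below is a dependency-free TypeScript sketch of a single attention head on plain arrays. It is not code from the linked repo and does not use TensorFlow.js; the function and parameter names (causalSelfAttention, wq, wk, wv) are hypothetical.

```typescript
type Matrix = number[][];

// Plain matrix product: [n, m] x [m, p] -> [n, p].
function matMul(a: Matrix, b: Matrix): Matrix {
  return a.map((row) =>
    b[0].map((_, j) => row.reduce((sum, v, k) => sum + v * b[k][j], 0)),
  );
}

function transpose(m: Matrix): Matrix {
  return m[0].map((_, j) => m.map((row) => row[j]));
}

// Row-wise softmax; -Infinity entries (masked positions) become exactly 0.
function softmaxRows(m: Matrix): Matrix {
  return m.map((row) => {
    const max = Math.max(...row);
    const exps = row.map((v) => Math.exp(v - max));
    const sum = exps.reduce((a, b) => a + b, 0);
    return exps.map((e) => e / sum);
  });
}

// Single-head causal self-attention for one sequence.
// x: [T, C] token embeddings; wq, wk, wv: [C, H] projections. Returns [T, H].
function causalSelfAttention(x: Matrix, wq: Matrix, wk: Matrix, wv: Matrix): Matrix {
  const q = matMul(x, wq); // queries [T, H]
  const k = matMul(x, wk); // keys    [T, H]
  const v = matMul(x, wv); // values  [T, H]
  const scale = 1 / Math.sqrt(q[0].length);
  // Scaled dot-product scores; future positions (j > i) are masked with -Infinity.
  const scores = matMul(q, transpose(k)).map((row, i) =>
    row.map((s, j) => (j <= i ? s * scale : -Infinity)),
  );
  return matMul(softmaxRows(scores), v);
}
```

In a full GPT block this runs for several heads in parallel and is followed by an output projection, residual connections, LayerNorm and an MLP; a TensorFlow.js version would express the same steps with batched tensor ops instead of nested arrays.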

devrant

gpt

ml

transformers

js

ai