
Comments


But you might purchase one, so there obviously must be a competition to ensure you know about all of them so you don't miss one out of ignorance while choosing. 😉


Google does this all the time. Especially with YouTube recommendations. I watch one video on Jenkins because I'm trying to figure out how to use a poorly documented plugin, and now my recommended videos are almost all about Jenkins.


@EmberQuill This is one of YouTube's most annoying algorithm "features." It is clearly over-trained to offer similar video recommendations, irrespective of any particular user's viewing habits, meaning that any side-trip out of curiosity or for troubleshooting/research renders the recommendations almost unusable for days.

I had a binge session for a couple of days of a particular type of time-wasting video, and it took weeks of telling YouTube that I wasn't interested in every single recommendation before those videos were purged from my feed.

We forget that the algorithm exists to serve Google (maximize watch time) rather than to serve us (give us what we actually want, not just stuff similar to what we have already watched), and it's things like this that remind us.

@powerfulparadox Ugh, yeah, I had the same sort of problem. I watched some of those awful "robotic voice reads funny Reddit posts for people who can't be bothered to read them themselves" videos, and it took several weeks for YouTube to stop recommending them, even after I hid every single recommended video multiple times in a row.

YouTube has over ten years of data on my watching habits so you'd think they would be better at catching outliers at this point.

I also have the same problem with Amazon recommending things after I've already bought one and most likely don't need more. As with YouTube, its recommendations seem to be based almost entirely on the last few weeks of data, with a couple of random recommendations based on things from five years ago.

FloydDavis: Totally get the frustration! Recommendation algorithms sometimes miss the mark by focusing on keywords instead of real context. I've been exploring some tools that approach AI recommendations with more emphasis on relevance and context, which makes a huge difference. It's refreshing to see AI tech that actually tries to understand user intent rather than just pushing whatever seems loosely related.


RandyEverman87: It's like the recommendation systems aren't really "getting" what you actually want. I've seen that a lot with poorly targeted ads or suggestions that seem way off. Sometimes, though, it comes down to the data science solutions behind the scenes, where the focus is more on keywords than on deeper context or intent: https://dataforest.ai/services/... I've worked with teams using tools from DataForest, and their approach digs into the specifics, aiming to match recommendations with real intent. It's amazing how much better it gets when the data analysis goes beyond the surface level. By the way, the way they model concepts, breaking down complex ideas, is a game-changer.

Related Rants

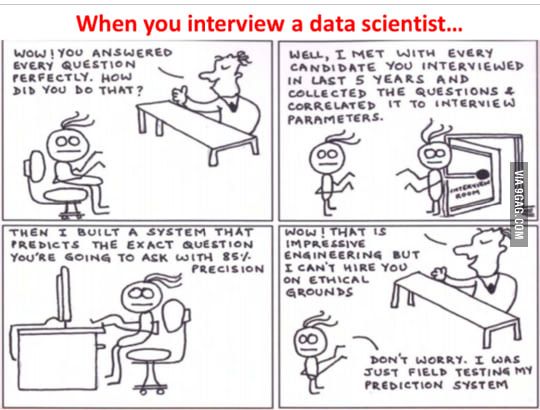

Interviewing a Data Scientist

Interviewing a Data Scientist

A note to the team designing recommendations on Google Ads:

Just because I search for deep learning concepts for personal learning, does not mean I will be purchasing every paid online data science course on the planet.

rant

data science