
Comments

-

Promising, but nowhere near there yet - either in terms of performance or app compatibility.

I'm going against the general YouTubers' trend on this - if you're upsetting the ecosystem this much with an architectural change, I'd kinda expect more tbh. At the moment we've just got a bit more battery life and comparable real-world performance to last-gen Macs - bad trade-off given the headache of an architecture change imho. -

Very surprising. I can't explain how a RISC arch with a significantly lower frequency can rival the best of the best 5 GHz CISC chips.

I don't get it -

@12bitfloat The point is that the M1 at 10W scores about 7500 in Geekbench multi-thread while the 5950X at 140W package power earns around 16000.

That means the 5950X needs 14 times as much power to be only a little more than twice as fast. -
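
To put numbers on that, here is a quick sketch using only the figures quoted above (approximate scores and wattages from the comment, not measurements of mine). The dictionary layout and the helper name `points_per_watt` are just for illustration.

```python
# Figures as quoted in the comment above; treat them as rough values.
chips = {
    "M1": {"score": 7500, "watts": 10},
    "5950X": {"score": 16000, "watts": 140},
}

def points_per_watt(chip):
    """Geekbench multi-thread points per watt of package power."""
    return chip["score"] / chip["watts"]

m1_eff = points_per_watt(chips["M1"])
r9_eff = points_per_watt(chips["5950X"])

power_ratio = chips["5950X"]["watts"] / chips["M1"]["watts"]
speed_ratio = chips["5950X"]["score"] / chips["M1"]["score"]
efficiency_ratio = m1_eff / r9_eff

print(f"power ratio:      {power_ratio:.1f}x")
print(f"speed ratio:      {speed_ratio:.2f}x")
print(f"M1 points/W lead: {efficiency_ratio:.2f}x")
```

With these inputs the 5950X draws 14 times the power for roughly 2.1 times the score, so the M1 comes out about 6.6 times ahead in points per watt.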

@iiii Well, these aren't the best 5 GHz CISC chips that @12bitfloat mentioned. For laptops, I think we can drop Intel from the list entirely and look at AMD only.

The 4800U is the fastest low-power chip that is available (at least in theory), and some 4800U machines can compete with the M1 while others are way below that.

However, a lot depends on the RAM being used, and far from all AMD laptops offer LPDDR4-4266 like the M1. DDR4-3200 is more common, and you even see DDR4-2666 used. You can even find single-channel RAM configs in AMD laptops, which take another performance hit.

Also, the 4800U isn't 10W and does need a fan. The faster 4800U machines likely have their cTDP set to 25W. -
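
For a rough feel of what the RAM configs mentioned above mean, this sketch computes theoretical peak bandwidth as transfers per second times bytes per transfer. The bus widths are my assumptions: 128 bits total for the LPDDR4-4266 and dual-channel DDR4 setups, 64 bits for single channel. Real-world throughput will be lower than these peaks.

```python
def peak_bandwidth_gb_s(mega_transfers_per_s, bus_width_bits):
    """Theoretical peak in GB/s: transfers per second times bytes per transfer."""
    return mega_transfers_per_s * 1e6 * (bus_width_bits / 8) / 1e9

# Bus widths are assumptions (128-bit total, or 64-bit for single channel).
configs = {
    "LPDDR4-4266, 128-bit": peak_bandwidth_gb_s(4266, 128),
    "DDR4-3200, dual channel": peak_bandwidth_gb_s(3200, 128),
    "DDR4-2666, dual channel": peak_bandwidth_gb_s(2666, 128),
    "DDR4-2666, single channel": peak_bandwidth_gb_s(2666, 64),
}

for name, gb_s in configs.items():
    print(f"{name:27s} {gb_s:6.1f} GB/s")
```

Under those assumptions, a single-channel DDR4-2666 laptop has less than a third of the peak bandwidth of the LPDDR4-4266 setup, which is why the same chip can land so differently across machines.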

@iiii What exactly is "castrated" about the M1 as a CPU? Obviously, the ecosystem Apple builds around it is, yeah, but that's not a problem of the M1 itself.

ARM Linux does exist, after all, though of course there won't be M1 drivers for now. But that's a SW problem, not a HW one. With the drivers, you could have ARM Linux running and just recompile from source. -

@iiii SW compatibility is not an issue with Rosetta 2, and no, Rosetta 2 is not a runtime emulator. On top of that, application SW is supposed to be just re-compiled anyway. Well, mostly, except e.g. for hidden bugs that didn't manifest on x86 but can on ARM because of its weak memory ordering.

The instruction set is of course smaller, that's what the R in RISC means. So what? Nobody writes large amounts of code in assembly, but even then, ARM assembly is so much nicer to write than the pretty insane x86 ISA.

And the RAM - you can't extend LPDDR4 in ANY laptop, Apple or non-Apple, because it has to be soldered. With RAM sockets, you get neither that RAM speed nor the low power consumption of LPDDR (LP = low power). -

@RocketSurgeon The RAM is not counted in the transistor count. I can prove that:

The count is said to be 16 billion. Even at just 1 transistor per bit, 16GB of memory is roughly 137 billion bits, so the RAM alone would need far more than 16 billion transistors.

If memory were counted, then there'd be no transistors left for any other part. -
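
Checking the arithmetic behind that argument: at one transistor per bit, 16GB works out to well over 16 billion transistors, so the conclusion holds with room to spare. This is a sketch that treats 16GB as 16 GiB; with decimal gigabytes the bit count is 128 billion, which changes nothing about the outcome. The constant names are mine.

```python
TRANSISTOR_COUNT = 16_000_000_000  # figure quoted in the comment
RAM_BYTES = 16 * 2**30             # 16GB, taken here as 16 GiB (assumption)

ram_bits = RAM_BYTES * 8           # one transistor per bit as a lower bound
shortfall = ram_bits / TRANSISTOR_COUNT

print(f"bits in RAM:       {ram_bits:,}")
print(f"quoted transistors: {TRANSISTOR_COUNT:,}")
print(f"RAM alone would need {shortfall:.1f}x the quoted count")
```

So the RAM by itself would need more than eight times the quoted transistor count, before counting a single CPU or GPU core.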

@iiii That's not the problem of the M1 itself, but of Macs being a proprietary platform with only one vendor. Also, the M1 laptops are being offered with 8GB or 16GB RAM, depending on your choice.

-

@iiii That's the point of RISC - having fewer instructions, but executing them faster because there's less complexity.

Actually, x86 CPUs are also RISC-like machines internally because they decode the complex x86 instructions into micro-ops and execute only the latter. -

@iiii Mac users do, and anyone who develops for iOS of course. Not least because the M1 can also execute iOS apps natively, which should be pretty nice for iOS app devs.

I might have given this machine a closer look if it were able to run Linux easily, and if it had a standard M.2 SSD slot instead of Apple's insanely overpriced soldered SSDs. With that Apple SSD crap, 256 GB costs much more than a 1 TB Samsung NVMe SSD. That's why Apple is also soldering the SSD, to shut out market competition. -

@iiii So my general verdict is: the M1 doesn't suck, but Apple does, and since the M1 can only be bought in Apple devices, the M1 actually does suck. ^^

Also, the M1 isn't an x86 killer because the M1 isn't some standard ARM. Apple is quite ahead of the industry in that regard, I'll give them that, and by the time others (e.g. Qualcomm) catch up with their ARM designs, AMD will be on 5nm too, and have Zen4 on the market. -

@12bitfloat Because the CISC vs RISC distinction is only in the fetch and decode stages. x86 CISC CPUs convert to RISC micro-ops like @Fast-Nop said, so the actual execution "backend" is similar. Modern instruction caches are large enough and have high enough bandwidth for large parallel fetches, and modern branch predictors can predict those fetches with insane accuracy. So it doesn't actually matter that CISC can encode more in less memory, because the other advantages that RISC gives you, mostly superscalar speculative out-of-order processing, completely overshadow that.

In practice for everyday code @iiii CISC compiler optimizations don't work all that well compared to the benefits of superscalar OOO. It's the same problem as with VLIW - great for static optimization, meh for dynamic. Yes, you can stuff more into less code, but the true bottleneck is the degree of instruction-level parallelism, which is like 2.4 on average (so you can schedule on average 2.4 instructions for execution in one go). That really doesn't have much to do with CISC or RISC, because the reorder buffers of most RISC processors are easily large enough to expose that ILP.
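
To make the ILP number concrete, here is a toy sketch: take a made-up instruction stream with its data dependencies, find the longest dependency chain, and divide the instruction count by that length. The instruction names and the dependency graph are hypothetical, and the model assumes unit latency and unlimited execution units, which no real core has.

```python
from functools import lru_cache

# Hypothetical instruction stream: name -> instructions it depends on.
deps = {
    "load_a": [],
    "load_b": [],
    "load_c": [],
    "add_ab": ["load_a", "load_b"],
    "mul_c": ["add_ab", "load_c"],
    "store": ["mul_c"],
    "inc_i": [],
    "cmp_i": ["inc_i"],
}

@lru_cache(maxsize=None)
def depth(instr):
    """Length of the longest dependency chain ending at instr (unit latency)."""
    return 1 + max((depth(d) for d in deps[instr]), default=0)

# The longest chain bounds how fast the stream can finish, however wide the core.
critical_path = max(depth(i) for i in deps)
ilp = len(deps) / critical_path

print(f"{len(deps)} instructions, critical path {critical_path}, ILP {ilp:.1f}")
```

Here eight instructions sit on a critical path of four, so the ideal ILP is 2.0. The dependency chain is a property of the computation, not of how the ISA happens to encode it.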

Also, ARM RISC processors can do something called macro-op fusion which sticks a bunch of ops together that is then treated as a single instruction for better instruction bandwidth, so it *is* doing the CISC optimization when there actually is a benefit, but dynamically in hardware.
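
As a rough software analogy for what fusion does, this sketch scans a stream of opcodes and merges adjacent pairs from a fusable set into one op. The pair list and the function name `fuse` are invented for illustration; real cores have their own vendor-specific fusion rules and do this in the decoder hardware, not in software.

```python
# Hypothetical fusable pairs; actual rules differ per core and vendor.
FUSABLE = {("cmp", "b.ne"), ("cmp", "b.eq")}

def fuse(instrs):
    """Merge adjacent fusable opcode pairs into a single fused op."""
    out = []
    i = 0
    while i < len(instrs):
        if i + 1 < len(instrs) and (instrs[i], instrs[i + 1]) in FUSABLE:
            out.append(instrs[i] + "+" + instrs[i + 1])
            i += 2  # both halves of the pair are consumed
        else:
            out.append(instrs[i])
            i += 1
    return out

stream = ["ldr", "add", "cmp", "b.ne", "str", "cmp", "b.eq"]
fused = fuse(stream)
print(fused)
print(f"{len(stream)} ops in, {len(fused)} ops tracked after fusion")
```

Seven ops go in and five come out, so the backend has fewer entries to track for the same work.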

The job of a modern instruction set is to get the computation graph to the processor, not to specify in low-level detail how it'll actually be executed (e.g. I'm sure you know that the registers mentioned in the instruction set usually don't exist as such in hardware). After a point most ISAs do that roughly equally well, so you might as well use the representation that's easier for hardware to work with.

Related Rants

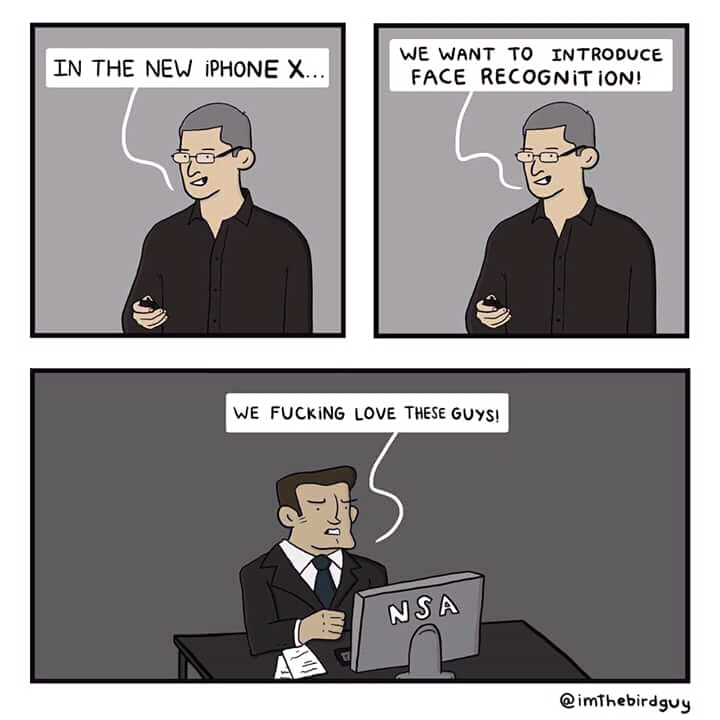

Why not! 😂

Why not! 😂 Can't wait for this to happen

Can't wait for this to happen As a long-time iPhone user, I am really sorry to say it but I think Apple has completed their transition to be...

As a long-time iPhone user, I am really sorry to say it but I think Apple has completed their transition to be...

What are people's unfiltered thoughts on Apple's new ARM processor? Especially if you are a Mac user?

(I'm an OS hopper, though my main machine is running Ubuntu right now, and work machines are usually Mac with Windows with WSL)

question

apple macbook

apple

arm cpu

new cpu