Comments

-

hitko (4y ago): @Demolishun XML is fucking ambiguous. Attribute and child with the same name? Check. The same characters can be escaped in 3+ ways? Check. Numbers and booleans as strings? Check. Want to use the type Person for multiple properties? Create dummy Sender and Recipient wrapper elements. Representing a list of plain values? Concatenate them, since writing each one as <item>value</item> will essentially double the file size. Parsing? Here are 213412312 different parsers, each supporting a different flavour and returning differently structured data for the same input document, since XML's structure is way more complex than the way most OO languages structure data. -
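The escaping and typing complaints are easy to reproduce with Python's standard-library `xml.etree.ElementTree`. In this small sketch (element and attribute names are made up), a named entity, decimal and hex character references, and a CDATA section all decode to the same `<`, and attribute values come back as plain strings with no number or boolean type:

```python
import xml.etree.ElementTree as ET

# Four spellings of "<": named entity, decimal and hex character
# references, and a CDATA section.
doc = """<root>
  <a>&lt;</a>
  <a>&#60;</a>
  <a>&#x3C;</a>
  <a><![CDATA[<]]></a>
</root>"""
texts = [a.text for a in ET.fromstring(doc).findall("a")]
print(texts)  # ['<', '<', '<', '<']

# Attribute values carry no type information: numbers and booleans are strings.
attrs = ET.fromstring('<item count="42" active="true"/>').attrib
print(attrs)  # {'count': '42', 'active': 'true'}
```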

The data structure of XML is actually very similar to that of code in a language that supports named arguments. Consider:

<document>
  <ns:tag>
    <entry>value</entry>
    <entry attr="metadata">value</entry>
    <simple />
  </ns:tag>
</document>

But written as:

document(
  ns.tag(
    entry("value"),
    entry(attr: "metadata", "value"),
    simple()
  )
)
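A rough Python sketch of that mapping, using a hypothetical `to_call` helper on top of `xml.etree.ElementTree`. It leaves out the `ns:` prefix (an undeclared prefix would make the parser reject the document) and ignores mixed content such as text following a child element, so it illustrates the correspondence rather than being a complete converter:

```python
import xml.etree.ElementTree as ET

def to_call(elem: ET.Element) -> str:
    """Render an element as name(attr: "v", ..., "text", children...)."""
    # Attributes become named arguments.
    args = [f'{k}: "{v}"' for k, v in elem.attrib.items()]
    # Non-blank text content becomes a positional string argument.
    if elem.text and elem.text.strip():
        args.append(f'"{elem.text.strip()}"')
    # Child elements become nested positional calls.
    args.extend(to_call(child) for child in elem)
    return f"{elem.tag}({', '.join(args)})"

root = ET.fromstring(
    '<document><entry>value</entry>'
    '<entry attr="metadata">value</entry><simple /></document>'
)
print(to_call(root))
# document(entry("value"), entry(attr: "metadata", "value"), simple())
```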

Hm, this could be an excellent markup language with eval parsing (the thing that made JSON popular) in tons of languages. -

XML has a feature that JSON lacks, and JSON suffers a lot from not having it: XML can express node types directly. A good config language should have no problem with arrays of typed nodes (e.g. rule chains), but JSON has to resort to ["name", ...details] tuples, which trip up autoformatters because they're actually arrays and not named nodes.
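To make the contrast concrete, here is a hypothetical two-rule chain (rule names and values are invented) decoded from both encodings in Python. In JSON the node type is just the first slot of a positional array; in XML it is the element name:

```python
import json
import xml.etree.ElementTree as ET

# The same hypothetical rule chain, encoded two ways.
as_json = '[["allow", "10.0.0.0/8"], ["deny", "*"]]'
as_xml = "<rules><allow>10.0.0.0/8</allow><deny>*</deny></rules>"

# JSON: the type is whatever sits in position 0 of each array.
from_json = [(name, *details) for name, *details in json.loads(as_json)]
# XML: the type is the element's tag.
from_xml = [(el.tag, el.text) for el in ET.fromstring(as_xml)]

print(from_json == from_xml)  # True
```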

-

hitko (4y ago): @lbfalvy Until you get to

<entry title="Simple title">
  <title>
    <![CDATA[Grandparent > ]]>
    <![CDATA[Parent > ]]>
    <![CDATA[Title]]>
  </title>
</entry>

and your code looks like

entry(
  title: "Simple title",
  title("Grandparent > ", "Parent > ", "Title")
)

Which is which? How do you map that to an object?
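A short Python illustration of the collision using `xml.etree.ElementTree`, with the CDATA sections written back to back so no whitespace gets mixed in. Flattening attributes and children into one dict silently drops the attribute. Keeping both requires separate containers, which is the `attrs`/`children` split shown at the end (the key names are arbitrary):

```python
import xml.etree.ElementTree as ET

doc = ('<entry title="Simple title"><title>'
       '<![CDATA[Grandparent > ]]><![CDATA[Parent > ]]><![CDATA[Title]]>'
       '</title></entry>')
entry = ET.fromstring(doc)

# Naive flattening: the child element overwrites the attribute of the same name.
naive = dict(entry.attrib)
for child in entry:
    naive[child.tag] = child.text
print(naive)  # {'title': 'Grandparent > Parent > Title'}

# Keeping both means introducing separate containers.
both = {"attrs": dict(entry.attrib),
        "children": {c.tag: c.text for c in entry}}
print(both["attrs"]["title"])  # Simple title
```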

Also, regarding your other comment: in JSON, just like everywhere in OOP, you define

abstract type Rule {type: RuleType}
type BaseRule extends Rule {type: "base"}
type OtherRule extends Rule {type: "other"}

Then just serialise a chain of rules as [{Rule1}, {Rule2}, ...] and switch on rule.type. Autoformatters and code intelligence work perfectly with that; no tuples needed. -
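A minimal Python sketch of that pattern, assuming invented fields (`pattern`, `limit`) for the two rule types. The discriminator is an ordinary `type` field, and a lookup table maps it to a class:

```python
import json
from dataclasses import dataclass

# Hypothetical rule types; "type" is the discriminator field.
@dataclass
class BaseRule:
    pattern: str
    type: str = "base"

@dataclass
class OtherRule:
    limit: int
    type: str = "other"

RULE_TYPES = {"base": BaseRule, "other": OtherRule}

chain = json.loads(
    '[{"type": "base", "pattern": "*.json"}, {"type": "other", "limit": 3}]')
# Switch on rule["type"] to pick the class, then pass all fields through.
rules = [RULE_TYPES[rule["type"]](**rule) for rule in chain]
print(rules)
# [BaseRule(pattern='*.json', type='base'), OtherRule(limit=3, type='other')]
```

Note that `RULE_TYPES` has to know every subtype up front, which is the trade-off raised further down the thread.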

hitko (4y ago): @Demolishun The JSON specification consists of 5 grammar expressions, and that's it. No extensibility rules, no "what if" use cases, no ambiguity. The only change which happened to JSON was back in 2006, when the specification added support for exponent notation (writing 1.2e6 instead of 1200000). I know, people are building their own tools on top of JSON for data validation, traversal and referencing, just like they did with XML. But unlike XML, JSON doesn't have some complicated standard for integrating such modifications. Case in point: it's perfectly okay to consume JSON data from an API and ignore its schema, but it's near impossible to correctly parse XML data from an API without the appropriate schema, since "1 2 3" might be a plain string, a list of strings ["1", "2", "3"], or a list of numbers [1, 2, 3]. -
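A small Python illustration of that last point, using only the standard library. JSON's syntax already distinguishes a string from a list of numbers. The text of an XML element is always a string, and which of the three readings is correct has to come from somewhere else, such as a schema:

```python
import json
import xml.etree.ElementTree as ET

# JSON carries the distinction in the syntax itself.
as_list = json.loads('{"v": [1, 2, 3]}')["v"]
as_text = json.loads('{"v": "1 2 3"}')["v"]

# XML text content is always a string; its meaning has to come from elsewhere.
text = ET.fromstring("<v>1 2 3</v>").text
readings = {
    "string": text,
    "list of strings": text.split(),
    "list of numbers": [int(t) for t in text.split()],
}
print(readings)
```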

Too bad YAML is that abomination of featuritis it is.

JSON is crap. And XML is even more crap.

In the end, JSON sadly is the least horrible standard. -

@hitko There's no ambiguity: the first title is a parameter name, the second is the type of the first positional parameter. If it's confusing, you can namespace them to indicate the source. If they both come from the same source, then I don't understand why the author would use the same word for both a datatype and a metadata key; that's a recipe for chaos in every language.
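A Python sketch of the namespacing suggestion, with a made-up namespace URI (`urn:example:meta`). Once the attribute is namespaced, `xml.etree.ElementTree` reports it under a different key from the child element, so the two no longer collide even when flattened into one dict:

```python
import xml.etree.ElementTree as ET

# "urn:example:meta" is a placeholder namespace URI for illustration only.
doc = ('<entry xmlns:meta="urn:example:meta" meta:title="Simple title">'
       '<title>Grandparent &gt; Parent &gt; Title</title></entry>')
entry = ET.fromstring(doc)

# ElementTree spells namespaced names as "{uri}local", so the keys differ.
flat = {**entry.attrib, **{child.tag: child.text for child in entry}}
print(flat)
# {'{urn:example:meta}title': 'Simple title', 'title': 'Grandparent > Parent > Title'}
```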

-

How can anyone mess up a darn JSON schema? In a finger snap! It doesn't take some low-life product that everyone forgets an hour later to fuck up something this simple. Lads keep ignoring style guides and other documentation, even if it's just a 15-minute thing.

-

@Oktokolo If anyone used YAML as a serialisation format, I think I'd scream even more.....

-

@AlmondSauce

If you don't use references and ignore the awkward multiline string syntaxes, it actually is a pretty human-readable and well-defined format.

Whether indenting should carry meaning or not seems to be a highly controversial topic, but I am in the death-sentence-for-people-who-don't-indent-properly camp anyway, so naturally I have no problem with that. -

hitko (4y ago): @lbfalvy Oh, so you mean I have to access them separately, despite them having the same name? Like my parser and code must actually know which one is a parameter and which one is a child? I dunno man, sounds like someone is trying to use hierarchical markup and complex query syntax to make it look like both of those belong to the same object, even though there must exist at least one intermediate "container" object, like element.params and element.children. Which is exactly my fucking point: instead of avoiding unnecessary markup and explicitly specifying "params": {...} or "children": [...] only when needed (with the ability to give each of those a more meaningful name), the XML specification implicitly adds these wrapper objects everywhere to avoid potential collisions. Collisions which wouldn't be possible without ambiguity. -

@hitko

> I have to access them separately, despite them having the same name?

Yes, they're both called parameters because they are details - parameters - of the node above. Sounds like an obvious enough name to me.

> My parser and code have to know which one's a parameter and which one's a child

The parser relies on the syntax; read the example again, it's unambiguous. Your code has to know exactly as much as it does about XML; the structures are isomorphic.

> make it look like both of those belong to the same object, like element.params and element.children

Now you're using language-specific terminology and arguing from limitations only some languages have. In JavaScript, Python, Lua, C# and, I believe, C++ you can define structures indexable by both integers and strings, and make them work like the most natural thing. The difference with named parameters is that they can be assigned selectively and in any order. -
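For instance, a hypothetical Python `Node` class (not from any library) can keep positional children and named parameters apart while letting both be read with `node[...]`, dispatching on the key's type:

```python
from typing import Any, Union

class Node:
    """Hypothetical node: positional children plus named parameters."""

    def __init__(self, tag: str, *children: Any, **params: Any) -> None:
        self.tag = tag
        self.children = list(children)
        self.params = params

    def __getitem__(self, key: Union[int, str]) -> Any:
        # Integers index positional children; strings look up named parameters.
        if isinstance(key, int):
            return self.children[key]
        return self.params[key]

entry = Node(
    "entry",
    Node("title", "Grandparent > ", "Parent > ", "Title"),
    title="Simple title",
)
print(entry["title"])  # Simple title
print(entry[0].tag)    # title
print(entry[0][2])     # Title
```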

@hitko In response to your earlier reply about type attributes: that's absolutely not what you do in an object-oriented language. It's what the object-oriented language does in the underlying procedural language, except it's actually useful there because the type name is replaced by the vtable. In an object-oriented language I instantiate objects with a class, which is separate, immutable metadata and is optimized away whenever the object isn't expected to represent an interface or a superclass. It's definitely not a property and it has no place in the property list (and querying the property list by type is O(n), if it's possible at all with the available query options).
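In Python, for example, an instance's class is kept outside its attribute dictionary. `BaseRule` here is a made-up class:

```python
class BaseRule:
    def __init__(self, pattern: str) -> None:
        self.pattern = pattern

rule = BaseRule("*.json")
print(vars(rule))                  # {'pattern': '*.json'}: no type entry
print(type(rule).__name__)         # BaseRule
print(isinstance(rule, BaseRule))  # True
```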

-

@hitko And by the way, you just made Rule reference the complete list of subtypes. That's probably not a big deal considering we're talking about a config grammar, but it blows up the moment you have externally provided rules, and it neatly demonstrates why the type of an object isn't its property.

-

@hitko Serializers use a type property in JSON, but the only way to parse such objects correctly is to resolve the type separately from the other properties before decoding them with the type-specific runtime call. So this logic is generally part of the serializing library, or of a dedicated module whose sole purpose is to make up for a shortcoming in JSON.
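A minimal sketch of that two-step decoding in Python. It uses a hypothetical `register` decorator so that externally provided rule types can add themselves instead of being listed in one place. The `type` tag is popped and resolved first, and only the remaining properties are passed to the type-specific constructor:

```python
import json
from typing import Any, Callable, Dict

# Hypothetical registry mapping a "type" tag to a constructor.
DECODERS: Dict[str, Callable[..., Any]] = {}

def register(tag: str) -> Callable[[type], type]:
    """Class decorator: lets any module add a rule type under `tag`."""
    def wrap(cls: type) -> type:
        DECODERS[tag] = cls
        return cls
    return wrap

@register("base")
class BaseRule:
    def __init__(self, pattern: str) -> None:
        self.pattern = pattern

# This class could live in a plugin module the core never imports directly.
@register("other")
class OtherRule:
    def __init__(self, limit: int) -> None:
        self.limit = limit

def decode(obj: Dict[str, Any]) -> Any:
    props = dict(obj)
    # Step 1: resolve the type tag on its own...
    cls = DECODERS[props.pop("type")]
    # Step 2: ...then hand the remaining properties to that type's constructor.
    return cls(**props)

rules = [decode(o) for o in json.loads(
    '[{"type": "base", "pattern": "*.json"}, {"type": "other", "limit": 3}]')]
print([type(r).__name__ for r in rules])  # ['BaseRule', 'OtherRule']
```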

-

hjk101 (4y ago): It's very simple: what's best depends on the situation. The OP's point is valid; manually checking the input when a schema is available is just stupid.

We generally use OpenAPI, with a library that generates the incoming types, integrates with the HTTP server and returns appropriate errors to the client. Makes the code so much cleaner.

Related Rants

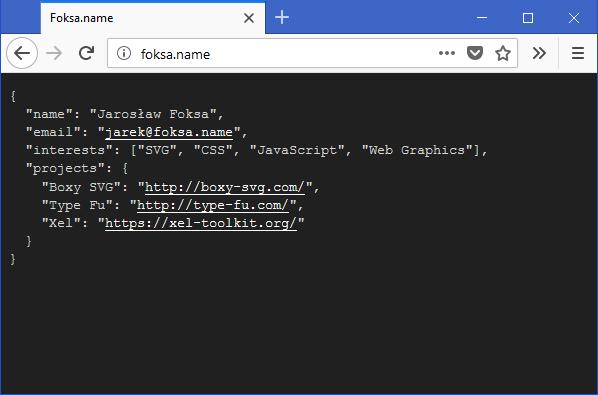

Man, this dude knows webdesign

Man, this dude knows webdesign JSON Bourne

JSON Bourne

Come on guys, use those JSON schemas properly. The number of times I see people going "err, few strings here, any other properties ok, no properties required, job done." Dahhh, that's pointless. Lock that bloody thing down as much as you possibly can.

I mean, the damn things can be used to fail fast whenever you misspell properties, miss required properties, format dates wrong - heck, even when you want to enforce a set format for an array - and then libraries will throw an error back to your client (or to your logs if you're just on the backend) and tell you *exactly what's wrong.* It's immensely powerful, and all you have to do is craft a decent schema to get it for free.

If I see one more person trying to validate their JSON manually in 500 lines of buggy code and throwing ambiguous error messages when it could have been trivially handled by a schema, I'm going to scream.
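A sketch of the fail-fast behaviour described above, under loud assumptions. The record and its fields are invented, the date is checked with a `pattern` rather than `format`, and `check` is a toy interpreter for a handful of JSON Schema keywords (`type`, `required`, `properties`, `additionalProperties`, `pattern`, `minItems`, `items`). It exists only so the example runs with the standard library; in practice you would hand the same schema to an established validator rather than maintain something like this:

```python
import re
from typing import Any, Dict, List

# Hypothetical, locked-down schema for a user record (JSON Schema keywords).
SCHEMA: Dict[str, Any] = {
    "type": "object",
    "required": ["name", "born", "tags"],
    "additionalProperties": False,
    "properties": {
        "name": {"type": "string"},
        "born": {"type": "string", "pattern": r"^\d{4}-\d{2}-\d{2}$"},
        "tags": {"type": "array", "minItems": 1, "items": {"type": "string"}},
    },
}

PY_TYPES = {"object": dict, "array": list, "string": str}

def check(value: Any, schema: Dict[str, Any], path: str = "$") -> List[str]:
    """Toy interpreter for a few JSON Schema keywords; returns error messages."""
    expected = schema.get("type")
    if expected and not isinstance(value, PY_TYPES[expected]):
        return [f"{path}: expected {expected}"]
    errors: List[str] = []
    if expected == "object":
        props = schema.get("properties", {})
        errors += [f"{path}: missing required property '{k}'"
                   for k in schema.get("required", []) if k not in value]
        if schema.get("additionalProperties") is False:
            errors += [f"{path}: unexpected property '{k}'"
                       for k in value if k not in props]
        for key, sub in props.items():
            if key in value:
                errors += check(value[key], sub, f"{path}.{key}")
    elif expected == "string" and "pattern" in schema:
        if not re.search(schema["pattern"], value):
            errors.append(f"{path}: does not match {schema['pattern']}")
    elif expected == "array":
        if len(value) < schema.get("minItems", 0):
            errors.append(f"{path}: needs at least {schema['minItems']} item(s)")
        for i, item in enumerate(value):
            errors += check(item, schema.get("items", {}), f"{path}[{i}]")
    return errors

# A misspelled key, a wrongly formatted date and an empty array are all reported.
for err in check({"nmae": "Ada", "born": "10/12/1815", "tags": []}, SCHEMA):
    print(err)
```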

rant

json