Join devRant

Do all the things like

++ or -- rants, post your own rants, comment on others' rants and build your customized dev avatar

Sign Up

Pipeless API

From the creators of devRant, Pipeless lets you power real-time personalized recommendations and activity feeds using a simple API

Learn More

Related Rants

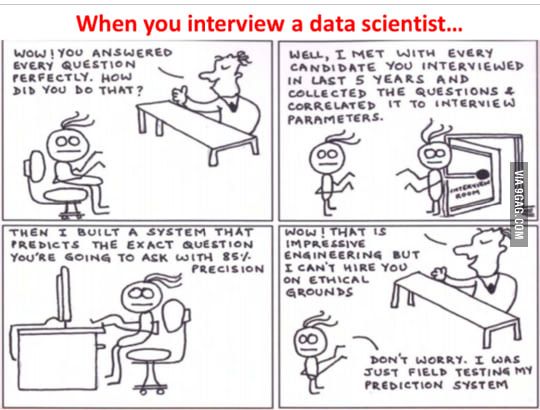

Interviewing a Data Scientist


We specified a very optimistic setup for a client's data science platform...

Minimum: one machine with a 16-core CPU and 64GB of RAM to process the data.

Client's IT department: Best we can do is an 8-core, 16GB server.

Literally what I have on my laptop.

Data scientist doesn't use an out-of-core (larger-than-memory) data processing framework such as Dask, despite us telling him it's the best way to economize on memory. So the ipykernel runs out of memory and the computation gets killed anyway.

Data scientist has a 64GB machine himself, so he says it's fine.

Purpose of the server: rendered pointless.
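For context on the out-of-core approach the data scientist skipped: frameworks like Dask split a dataset into partitions and combine per-partition partial results, so peak memory tracks the partition size rather than the dataset size. The following is a minimal stdlib-only sketch of that idea, not Dask's API. The function names `iter_chunks` and `out_of_core_mean` are hypothetical, and it assumes the data is a CSV with a numeric column.

```python
import csv
import io
from itertools import islice
from typing import Iterator, TextIO


def iter_chunks(rows: Iterator, chunk_size: int) -> Iterator[list]:
    """Yield lists of at most chunk_size rows; only one chunk is held at a time."""
    while True:
        chunk = list(islice(rows, chunk_size))
        if not chunk:
            return
        yield chunk


def out_of_core_mean(source: TextIO, column: str, chunk_size: int = 100_000) -> float:
    """Mean of a numeric CSV column, computed chunk by chunk.

    Each chunk contributes a partial (sum, count); the partials are combined
    at the end, so peak memory is bounded by chunk_size, not by file size.
    """
    total, count = 0.0, 0
    for chunk in iter_chunks(csv.DictReader(source), chunk_size):
        values = [float(row[column]) for row in chunk]
        total += sum(values)
        count += len(values)
    if count == 0:
        raise ValueError(f"no rows found for column {column!r}")
    return total / count


if __name__ == "__main__":
    data = io.StringIO("id,value\n1,2.0\n2,4.0\n3,6.0\n4,8.0\n5,10.0\n")
    print(out_of_core_mean(data, "value", chunk_size=2))  # 6.0
```

On a real file you would pass `open(path, newline="")` instead of the `StringIO`; the 16GB server then only ever holds one chunk of rows at a time.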

rant

data science