Comments

-

essi3073yWell... Have you confirmed that Snyk is picking up both front-end and back-end code? --debug is a good friend 😄

-

“Problems in dependencies I have no control over” — what kinds of problems? Do you have vulnerabilities upstream of your project?

-

If you add static analysis to a project and don't get some real bug reports out of it, the tool is crap or misconfigured. Nobody is that good.

-

@devphobe it has found usages of weak hashing functions like md5.

The message was that I should check if it’s being used for sensitive data. Without digging deep inside that dependency, I can’t tell.

-
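To illustrate why that "check if it's sensitive" message is hard to act on from the outside: the same md5 call can be harmless or dangerous depending on what it protects. A rough Python sketch (the project here is Swift, so this is only an illustration; `cache_key`, `hash_password` and the iteration count are made-up names and values, not anything from the dependency in question):

```python
import hashlib


def cache_key(payload: bytes) -> str:
    # Non-security use: a fingerprint for a cache lookup.
    # usedforsecurity=False (Python 3.9+) documents that intent.
    return hashlib.md5(payload, usedforsecurity=False).hexdigest()


def hash_password(password: str, salt: bytes) -> bytes:
    # Security-sensitive use: a salted, deliberately slow key derivation
    # function instead of a fast hash. The iteration count is an assumption.
    return hashlib.pbkdf2_hmac("sha256", password.encode("utf-8"), salt, 200_000)
```

A scanner that only matches on the md5 call cannot tell the first case from a password hash, which is why it hands the question back to you.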

@Oktokolo I don’t know if it’s crap (that’s my question) but it doesn’t seem misconfigured.

It’s the same configuration that other projects use and the other projects are getting more reports.

-

@Lensflare A common problem with static analysis is too broad data types in signatures. Especially in dynamic languages, the analyzer might just assume "any" and skip checks when the code lacks annotations. So if this is TypeScript (or even worse, JavaScript), turn on reporting of the any "type" and missing type annotations for signatures.
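The same effect can be shown in Python, where `typing.Any` plays the role of the any "type". This is a small sketch with made-up function names; with a checker such as mypy, the `--disallow-any-explicit` and `--disallow-untyped-defs` options are the kind of reporting meant above.

```python
from typing import Any


def total_loose(prices: Any) -> Any:
    # With Any in the signature, a type checker accepts every call site,
    # so a call like total_loose("12") only fails at runtime.
    return sum(prices)


def total_strict(prices: list[float]) -> float:
    # With a precise signature, total_strict("12") would be reported
    # by the checker before the code ever runs.
    return sum(prices)
```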

-

@Oktokolo yes, that’s definitely a good idea.

The language of this project is Swift, though. It’s very strict.

Thinking about it, it also relies heavily on type inference, so this might be a problem for static analysis tools. In principle, it should work because type inference is static. But it’s also hard. Even the standard Swift compiler fails to check the type sometimes if the expression gets extremely complex.

I would assume that the static analysis tools that I’m using won’t just fail silently whenever they fail to analyze the code, but if they do, that could explain the lack of more extensive reports, maybe.

-

From my experience with Java at our company, SonarQube can be extremely pedantic, at least with minor code smells.

If you don't even have many of those, check whether your quality profile fits and whether all relevant code is picked up.

-

Static tools are like a test box setup.

Break them to figure out how resilient and hence good they are.

Breaking a test box means doing all kinds of stupid things devs would do: adding invalid environment files, throwing in wrong line endings, trying symlinks, etc.

Same can be done with static tools.

Run more than one static tool and compare the results. Check whether the tools' own example violations produce warnings when injected into production code. Make each static-analysis run verifiable, e.g. by recording commit / version information, dependencies with hashes of their source tarballs, an export of the profile settings, etc.
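A minimal sketch of the compare-and-inject idea, assuming each tool's report has already been parsed into (path, line, rule) records. `Finding`, `missed_canaries` and `only_in_first` are hypothetical names, and the parsing of real SonarQube or Snyk output is deliberately left out:

```python
from typing import NamedTuple


class Finding(NamedTuple):
    path: str
    line: int
    rule: str


def missed_canaries(reported: set[Finding], canaries: set[Finding]) -> set[Finding]:
    # Known-bad snippets were injected on purpose; anything returned here
    # is a canary the tool failed to report.
    return canaries - reported


def only_in_first(first: set[Finding], second: set[Finding]) -> set[tuple[str, int]]:
    # Rule names differ between tools, so compare by location only.
    return {(f.path, f.line) for f in first} - {(f.path, f.line) for f in second}


# Made-up example data for two tools and one injected canary.
canary = Finding("Sources/Canary.swift", 3, "weak-hash")
tool_a = {canary, Finding("Sources/Login.swift", 40, "hardcoded-secret")}
tool_b = {Finding("Sources/Canary.swift", 3, "insecure-md5")}

assert missed_canaries(tool_a, {canary}) == set()
assert only_in_first(tool_a, tool_b) == {("Sources/Login.swift", 40)}
```

An empty result from `missed_canaries` is the minimum bar; a non-empty one means the tool is not seeing the code or the rule is switched off.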

Many, many, many times, the most dangerous assumption is that it works.

So try to break it, shake it, throw shit at it.

If it gives you warnings, good. If not, find out why.

My most beloved breakage test by the way is to remove configuration files...

When the static tool runs without any configuration, figure out how to print the active / used configuration and make absolutely sure to include this as an artifact / export file.

The number of times a tool said "we will use the configuration provided via X" and then had some undocumented behaviour / knob / setting that needed to be enabled beforehand... Too many times. Too many hours crying and ripping my hair out.
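One way to make the configuration part checkable is to refuse to run without a config file and to write a small manifest next to the report. This is a sketch under assumptions: `write_run_manifest` and the manifest fields are invented for illustration, and it only records the file that was passed in. If the tool can dump its effective configuration, that dump should be archived as well.

```python
import hashlib
import json
from pathlib import Path


def write_run_manifest(config_path: Path, tool_version: str, commit: str, out_path: Path) -> dict:
    # Fail loudly instead of letting the tool fall back to silent defaults.
    if not config_path.is_file():
        raise FileNotFoundError(f"analysis config missing: {config_path}")

    manifest = {
        "tool_version": tool_version,
        "commit": commit,
        "config_file": str(config_path),
        # The hash makes it possible to prove later which config was used.
        "config_sha256": hashlib.sha256(config_path.read_bytes()).hexdigest(),
    }
    out_path.write_text(json.dumps(manifest, indent=2, sort_keys=True), encoding="utf-8")
    return manifest
```

Archiving the manifest as a CI artifact gives every report a commit, a tool version and a config hash to compare against the next run.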

Related Rants

Did you say security?

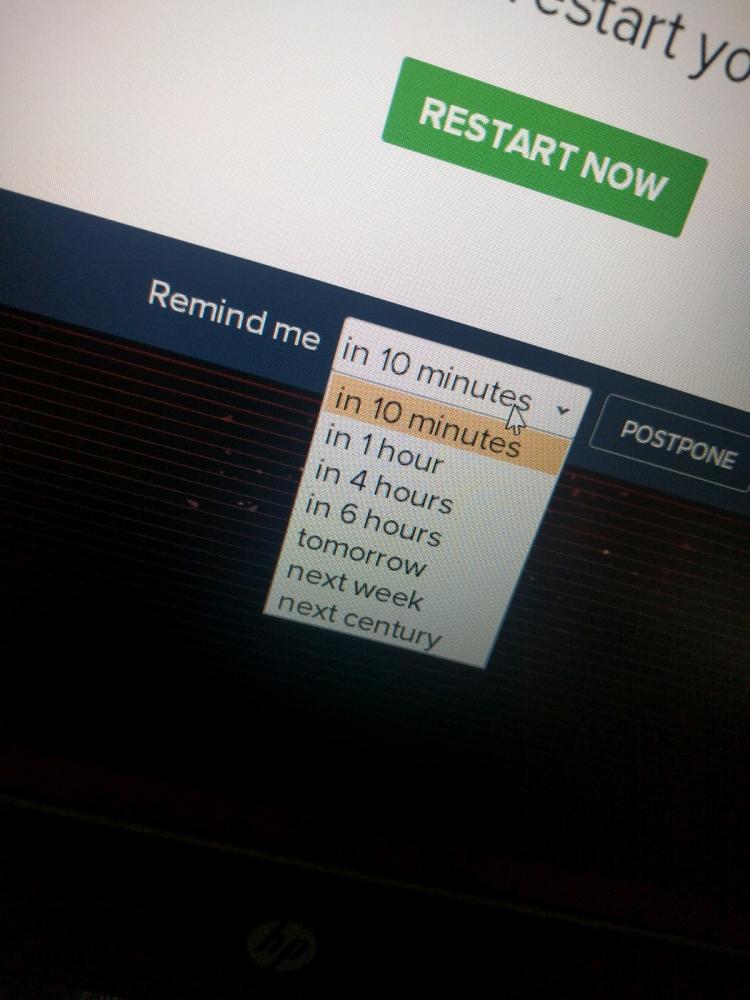

Did you say security? 10 points for next century option.

10 points for next century option.

Installed SonarQube and Snyk on the CI/CD of a 2.5-year-old project that only had a linter enabled previously.

Practically zero problems found. One minor problem (same code in different branches), a few false positives, and a few possible problems in dependencies that I have no control over.

Now wondering:

Am I really that good or are those tools just shit?

rant

sonarqube

security

quality

snyk