Comments

-

In my opinion, ethics dictate that the algorithms/models/parameters used for human interaction have to be public anyway.

Microsoft should try honesty for the first time in the company's existence. It might actually work.

-

The AI war is just another fad imo. Just wait until something cataclysmic happens due to it.

It will soon fade away like web3 did.

-

Oh give me a break with this "prompt injection" BS. This "exploit" gains absolutely no access to any systems, gets through no security walls, or anything like that. It simply reveals some of the (IMO obvious) rules that OpenAI is trying to apply to all answers, a task destined to fail anyway considering the size of the model.

-

@fullstackclown

They want us to not question it. Imho the label is by design.

Like how running the DAN prompt on ChatGPT gets labeled a 'hack'.

And that's wrong, but people are gonna be people.

Ffs they could stop dicking around and just give us the model / checkpoint.

Related Rants

😁😁..

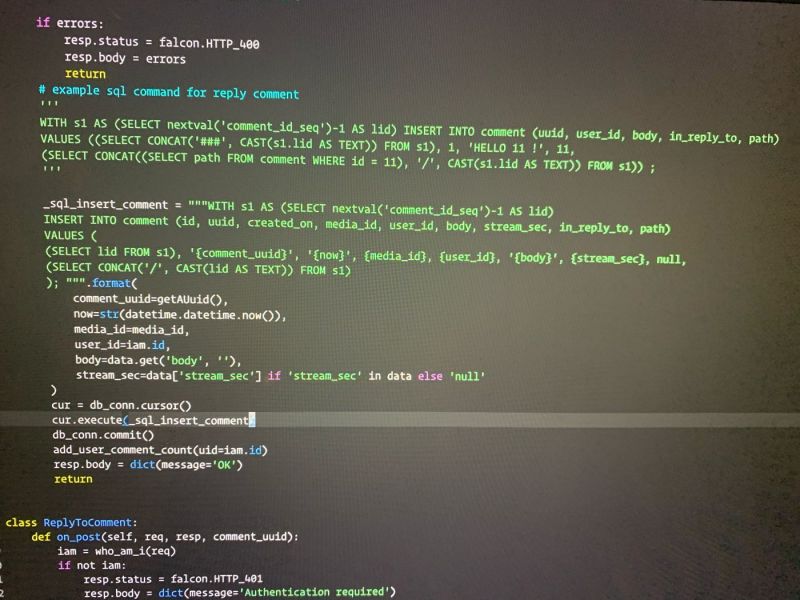

😁😁.. This fucking stupid asshole developer, wrote every single SQL execution with string formatting. Made me a full...

This fucking stupid asshole developer, wrote every single SQL execution with string formatting. Made me a full... So this is how they "teach" us in school...As a part time dev I was completely shocked when I saw this in our ...

So this is how they "teach" us in school...As a part time dev I was completely shocked when I saw this in our ...

Microsoft Manager: "We need to slap ChatGPT onto Bing....STAT!"

Devs: "There won't be enough time to test security."

Microsoft Manager: *Throws hands in the air* "Who cares!!?? Just get it done!"

Devs: "Ok, boss."

https://arstechnica.com/information...

rant

injection