Comments

-

levi27071023ySo fucking true....No one ever teaches the real issues of data extraction, cleaning, fitting issues etc.

-

The irreconcilability of logicians and statisticians is best shown by the fact that data science still doesn't use type inference, type checking is done only by tools that need to translate to C++ or GLSL (and only during translation), and generic programming is just not a thing at all.

-

These things were commonplace and their benefits understood before data science had a name.

-

@Fast-Nop Yeah, but they could really start coming up with new ways to write terrible code instead of repeating the ones we already solved

-

@lorentz To be fair, I guess that's the point of using Python - to interface between libraries with the usual impedance mismatch. A language that performs auto-glue is quicker than making proper glue code.

-

onslaught153yjust watching another tutorial since i have a project due tomorrow, and this dude just created a variable for storing the hidden size called "h_size" and a variable for the input called "inp"

let's throw human interpretability out the window

kill me

-

qwwerty11933yplenty of stuff you've listed is a lecturer/teacher failure - bad/inconsistent naming, no explanations, overly complex one-liners, etc. I had a 40+ hour AI/ML course last month and haven't encountered most of the issues you've mentioned.

"python having no data types": not true. Running Jupyter notebooks without autocompletion, docs, debugging, highlighting, autosave, etc. is a failure of your environment setup, and it's not true that notebooks aren't git compatible.

Nobody is stopping you from learning, e.g., TensorFlow in C++. But AFAIK the point of such a course is usually to explain the core AI/ML concepts instead of spending focus and time on programming and compiling. Now tell me a story about how setting up a C++ environment where everything compiles successfully is simpler and easier than Python.

-
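On the git point: an `.ipynb` file is a JSON document (the nbformat layout), and most of the diff noise comes from stored outputs and execution counters rather than from the code itself. Tools such as nbstripout exist for exactly this; the block below is only a minimal stdlib sketch of the same idea, assuming the nbformat v4 structure (a top-level `cells` list whose code cells carry `outputs` and `execution_count`). `strip_outputs` is a hypothetical helper name and the sample notebook is made up.

```python
import json


def strip_outputs(notebook_json: str) -> str:
    """Return notebook JSON with code-cell outputs and execution counts cleared.

    Assumes the nbformat v4 layout: a top-level "cells" list in which code
    cells carry "outputs" and "execution_count" keys.
    """
    nb = json.loads(notebook_json)
    for cell in nb.get("cells", []):
        if cell.get("cell_type") == "code":
            cell["outputs"] = []            # rendered results, images, tracebacks
            cell["execution_count"] = None  # the [n] counter that changes on every run
    # Sorted keys and fixed indentation keep the serialized text stable between runs.
    return json.dumps(nb, indent=1, sort_keys=True, ensure_ascii=False) + "\n"


# A tiny made-up notebook: one executed code cell and one markdown cell.
raw = json.dumps({
    "nbformat": 4,
    "nbformat_minor": 5,
    "metadata": {},
    "cells": [
        {"cell_type": "code", "execution_count": 7, "metadata": {},
         "source": ["print('hi')"],
         "outputs": [{"output_type": "stream", "name": "stdout", "text": ["hi\n"]}]},
        {"cell_type": "markdown", "metadata": {}, "source": ["# Notes"]},
    ],
})

cleaned = json.loads(strip_outputs(raw))
print(cleaned["cells"][0]["outputs"], cleaned["cells"][0]["execution_count"])  # [] None
```

A function like this could be hooked into git as a clean filter, so commits never contain outputs while the notebook on disk keeps them.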

qwwerty11933y@levi2707 pick a better teacher next time.

Mine essentially started with "we need to clean up data first, because this is the real world". One of the topics was "properly define a data collection methodology to ensure you start with something useful", and there was an exercise with real-life data just to demonstrate that sometimes it's not possible to extract anything useful.

-

qwwerty11933y@levi2707 Sorry, I don't know any online courses for ML.

I've attended a course led by an ML Prague conference organizer.

He also recommended Coursera as a good source, but you'll have to filter by who provides the courses (university, lecturers, format such as hands-on homework, etc.) and by reviews. Some universities also offer "specializations".

To be honest, picking a valuable course is a mix of prep work and luck, and there's no "golden approach to picking the best".

Related Rants

No questions asked

No questions asked As a Python user and the fucking unicode mess, this is sooooo mean!

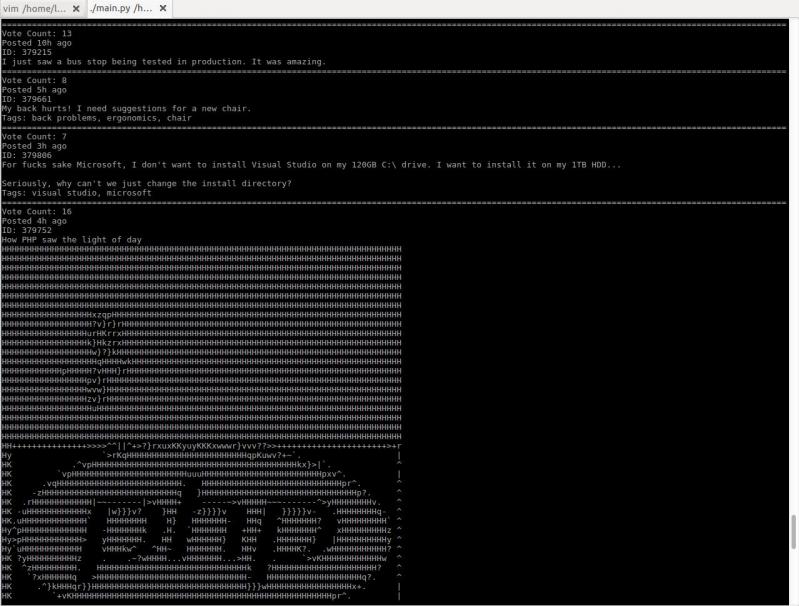

As a Python user and the fucking unicode mess, this is sooooo mean! I just started working on a little project to browse devrant from terminal. It converts images to ascii art!

I just started working on a little project to browse devrant from terminal. It converts images to ascii art!

python machine learning tutorials:

- import a preprocessed dataset in a perfect format, specially crafted to match the model, instead of reading from a file like you would in real life

- use image data for a recurrent neural network and see no problem

- use Conv1D for 2D input data like images

- use two-letter variable names whose meaning only the tutorial creator knows

- do 10 data transformations in 1 line with no explanation of what is going on

- just enter these magic words

- okey guys thanks for watching make sure to hit that subscribe button
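The first complaint is about tutorials that start from an already-cleaned dataset. The step they skip usually looks something like the sketch below: parse raw CSV, drop rows with missing or malformed values, and encode string labels as integers. The column names and sample rows are invented for illustration, `load_clean_rows` and `encode_labels` are hypothetical helpers, and dropping bad rows is only one policy (imputation is another). A real script would pass an open file instead of `io.StringIO`.

```python
import csv
import io
from typing import Dict, List, Tuple

# Made-up raw data with the usual problems: a blank cell, a text placeholder, stray spaces.
RAW = """sepal_length,sepal_width,label
5.1,3.5,setosa
4.9,,setosa
6.2,2.9,versicolor
n/a,3.0,virginica
 5.9 ,3.0,virginica
"""


def load_clean_rows(text: str, feature_cols: List[str],
                    label_col: str) -> Tuple[List[List[float]], List[str], int]:
    """Parse CSV text and keep only rows whose features parse as floats and whose label is present."""
    X: List[List[float]] = []
    y: List[str] = []
    skipped = 0
    for row in csv.DictReader(io.StringIO(text)):
        try:
            # float() raises ValueError on "" or "n/a"; a short row gives None, so .strip() raises AttributeError.
            features = [float(row[col].strip()) for col in feature_cols]
            label = row[label_col].strip()
            if not label:
                raise ValueError("missing label")
        except (ValueError, AttributeError):
            skipped += 1
            continue
        X.append(features)
        y.append(label)
    return X, y, skipped


def encode_labels(labels: List[str]) -> Tuple[List[int], Dict[str, int]]:
    """Map each distinct label to an integer id (sorted, so the mapping is deterministic)."""
    mapping = {label: i for i, label in enumerate(sorted(set(labels)))}
    return [mapping[label] for label in labels], mapping


X, y, skipped = load_clean_rows(RAW, ["sepal_length", "sepal_width"], "label")
codes, mapping = encode_labels(y)
print(X, codes, skipped)  # [[5.1, 3.5], [6.2, 2.9], [5.9, 3.0]] [0, 1, 2] 2
```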

ehh, the machine learning ecosystem is a burning pile of shit, let me give you some examples:

- thanks to years of object-oriented programming research and the most wonderful abstractions, we have "loss.backward()", which has no apparent connection to the model but still affects the model, good to know

- cannot install the python packages because python must be >= 3.9 and at the same time < 3.9

- runtime errors with bullshit cryptic messages

- python having no data types but pytorch forces you to specify float32

- let's throw away the module name of a function with these simple tricks:

"import torch.nn.functional as F"

"import torch_geometric.transforms as T"

- tensor.detach().cpu().numpy() ???

- class NeuralNetwork(torch.nn.Module):
      def __init__(self):
          super(NeuralNetwork, self).__init__() ????

- let's name a function that just switches the model into training mode (changing how layers like dropout and batch norm behave) "model.train()" instead of something more indicative of its actual effect like "model.set_mode_to_train()"

- what the fuck is ".iloc" ?

- solving environment -/- brings back memories of when you could make breakfast while the computer was turning on

- hey, let's choose the slowest, sloppiest and most inconsistent language ever created for the high-performance computing task called "data sCieNcE". but.. but.. you can use numpy! I DON'T GIVE A SHIT about numpy. Why don't you motherfuckers create a language that is inherently performant instead of calling some convoluted C++ library that requires tens of dependencies? Why don't you create a package management system that works without me having to try random bullshit for 3 hours???

- let's make the Jupyter notebook the industry standard, even though it's not git compatible and has either 2-second tab-completion latency or no tab completion at all, either no documentation on hover or useless documentation on hover, no easy way to redo changes, no autosave, no error highlighting, and the possibility to use a variable defined in a cell below in the cell above it

- let's use inconsistently styled function names like "read_csv" and "isfile"

- let's pass a boolean value as the string "true"

- let's contribute to tech-enabled authoritarianism and create the face recognition and object detection models that China uses to destroy the Uyghur minority

- let's create a license plate computer vision system that will help the government surveil everyone, guys, what a great idea
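On "loss.backward()": the connection to the model is real but invisible. During the forward pass the framework records which operations turned the parameters into the loss, and `backward()` walks that record in reverse, applying the chain rule and storing each parameter's gradient in its `.grad` attribute, which the optimizer then reads. The sketch below shows that mechanism for scalars only; `Scalar` is a hypothetical teaching class, not PyTorch's autograd, which handles tensors, many more operations and a lot of edge cases.

```python
class Scalar:
    """Hypothetical scalar that remembers how it was computed (not PyTorch's class)."""

    def __init__(self, value, parents=()):
        self.value = value
        self.grad = 0.0
        # Each parent is stored with the local derivative d(self)/d(parent).
        self._parents = parents

    @staticmethod
    def _wrap(other):
        return other if isinstance(other, Scalar) else Scalar(other)

    def __add__(self, other):
        other = Scalar._wrap(other)
        return Scalar(self.value + other.value, ((self, 1.0), (other, 1.0)))

    def __sub__(self, other):
        other = Scalar._wrap(other)
        return Scalar(self.value - other.value, ((self, 1.0), (other, -1.0)))

    def __mul__(self, other):
        other = Scalar._wrap(other)
        return Scalar(self.value * other.value, ((self, other.value), (other, self.value)))

    def backward(self):
        """Reverse-mode pass: accumulate d(self)/d(node) into node.grad for every node in the graph."""
        # Topological order guarantees a node's grad is complete before it is propagated further.
        order, seen = [], set()

        def visit(node):
            if node not in seen:
                seen.add(node)
                for parent, _ in node._parents:
                    visit(parent)
                order.append(node)

        visit(self)
        self.grad = 1.0
        for node in reversed(order):
            for parent, local in node._parents:
                parent.grad += local * node.grad  # chain rule; gradients accumulate


# "Model" y = w * x + b with a squared-error loss.
w, b = Scalar(2.0), Scalar(0.5)
x, target = 3.0, 5.0
prediction = w * x + b
err = prediction - target
loss = err * err
loss.backward()
print(loss.value, w.grad, b.grad)  # 2.25 9.0 3.0
```

Because `.grad` is accumulated with `+=` here, calling `backward()` twice would add the gradients together; PyTorch also accumulates gradients, which is why training loops zero them between steps.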

I don't want to deal with this bullshit language, bullshit ecosystem and bullshit unethical tech anymore.
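Two of the complaints above concern the same bit of machinery. `super(NeuralNetwork, self).__init__()` runs the base class's constructor, which sets up the bookkeeping a framework module needs (in Python 3 the zero-argument `super().__init__()` does the same thing inside a class body). And `model.train()` does not train anything: it flips a mode flag on the module and its children, which layers such as dropout consult, while gradient tracking is controlled separately by autograd (for example with `torch.no_grad()`). The sketch below imitates both ideas with a made-up base class; `Module`, `Dropout`, `TinyNet`, `Broken` and `_children` are hypothetical names, and real frameworks do much more.

```python
import random


class Module:
    """Hypothetical, heavily simplified stand-in for a framework's module base class."""

    def __init__(self):
        # Bookkeeping every subclass relies on; skipping super().__init__() skips it.
        self._children = {}
        self.training = True

    def __setattr__(self, name, value):
        # Register sub-modules automatically so that mode changes can reach them.
        if isinstance(value, Module):
            self._children[name] = value  # AttributeError if Module.__init__ never ran
        object.__setattr__(self, name, value)

    def train(self, mode=True):
        """Set the training flag here and on every registered child; no training happens."""
        self.training = mode
        for child in self._children.values():
            child.train(mode)
        return self

    def eval(self):
        return self.train(False)


class Dropout(Module):
    def __init__(self, p=0.5):
        super().__init__()  # same as super(Dropout, self).__init__() in Python 3
        self.p = p

    def __call__(self, xs):
        if not self.training:
            return list(xs)  # evaluation mode: pass values through unchanged
        keep = 1.0 - self.p
        # Inverted dropout: zero some values at random and rescale the survivors.
        return [x / keep if random.random() < keep else 0.0 for x in xs]


class TinyNet(Module):
    def __init__(self):
        super().__init__()
        self.drop = Dropout(p=0.5)  # registered in self._children by __setattr__

    def __call__(self, xs):
        return self.drop(xs)


class Broken(Module):
    def __init__(self):
        # Forgot super().__init__(), so self._children was never created.
        self.drop = Dropout()


net = TinyNet()
net.eval()
print(net.drop.training, net([1.0, 2.0, 3.0]))  # False [1.0, 2.0, 3.0]

try:
    Broken()
except AttributeError as exc:
    print("AttributeError:", exc)
```

In PyTorch, forgetting the base-class call fails in a similar way, with an AttributeError as soon as the first sub-module is assigned.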

rant

python

machine learning