Comments

-

And if these advanced animals alter their environment in order to create a simulation of their world, and that simulation also starts to have advanced animals, does the “first order” have more importance than the “second order”?

I’ve seen that episode of Rick and Morty too. -

That's the question that is going to be asked when people can be digitised. If you clone somebody's brain into a computer and it acts like them, is it them? Or if you kill the human body and transfer their mind, did you kill them or are they still alive?

-

cursee (16438) 8y

1. @JaegerStein I saw you were a few ++ short of 1000. So I did what I had to do.

2. @irene definitely not stalking you. Blame it on the algo. I subscribed to both you and @Bitwise though.

3. Favorited this. Very amusing and interesting topic, but my philosophical engine needs booze to function. Hope to share my take on this soon. -

cursee (16438) 8y

Btw, I'm sure everyone in this rant thread is a Westworld fan 😁 If you haven't watched it yet, do it over these Xmas holidays. You won't regret it. -

cursee (16438) 8y

@Bitwise please do. It is centered on the "consciousness" discussed in this topic and the OR's question about "how do we decide about pulling the plug". -

cursee (16438) 8y

Alright. Got two glasses of fuel. So here is my take on this.

I'm from a Buddhist country. Although I'm not really religious (occasionally more like an atheist), I do find the Buddha's teachings very meaningful, reasonable and logical. And I'm gonna answer this with his help.

Buddhists believe that consciousness is the ability to tell a good deed from a bad deed by oneself. (Different from having a will 😉)

So according to that, not all living things have consciousness; plants and trees, for example, do not.

How each living creature learns what's a good deed and what's a bad deed, well, that differs and that depends. I'm sure you can all accept, to a certain degree, that animals also have consciousness. How can an animal decide what's a good deed or a bad deed? Some by nature: most know they have to protect their kids and teach them how to survive. Some by nurture: some know that certain acts will give bad results and refrain from committing them. -

cursee (16438) 8y

So let's get back to OR's topic.

Consciousness of machine 😀 simulated consciousness.

I would say yes, we shouldn't unplug them just because we can. According to your example, those objects are surely doing more than what you described. 😉 Yes, the actions may be rooted in your main instructions, but they definitely do things beyond those.

@Bitwise and @irene your game characters definitely don't have consciousness. 😝 -

cursee (16438) 8y

@irene dude, Westworld is about artificially intelligent robots. Don't judge it by the cowboy outfits.

And about the other arguments, politics and religion are the two topics I have learned from past experience not to discuss very deeply 😁 I was just sharing my point of view. The rest is up to you guys. -

cursee (16438) 8y

@irene this. No? 🙄 https://en.m.wikipedia.org/wiki/...

You pretty dumb monkey. Eh.

I am on 5 or 6 glasses and still functioning well. 😝

Related Rants

No, thank you.😆

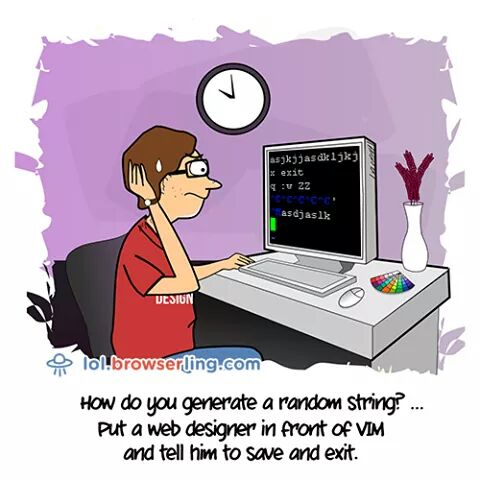

No, thank you.😆 The real random generators!

The real random generators!

Very long, random and pretentiously philosophical, beware:

Imagine you have an all-powerful computer, a lot of spare time and infinite curiosity.

You decide to develop an evolutionary simulation, out of pure interest and to see where things will go. You start writing your foundation: basic rules for your own "universe" which each and every thing in this simulation has to obey. You implement all kinds of objects, with different attributes and behaviour, but without any clear goal. To make things more interesting you give this newly created world a spoonful of coincidence, which can randomly alter objects at any given time, at least to some degree. To speed things up you tell some of these objects to form bonds and define an end goal for these bonds:

Make as many copies of yourself as possible.

Unlike the normal objects, these bonds now have a purpose and can actively use and alter their environment. Since these bonds can change randomly, their variety is kept high enough to not end in a single type multiplying endlessly. After setting up all these rules, you hit run, sit back in your comfy chair and watch.
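For anyone who wants to poke at the setup so far, here is a minimal Python sketch of it. It is only an illustration under my own assumptions, not the simulation from the rant: each bond is reduced to one made-up number (`efficiency`), the "universe" is a fixed food budget per tick, and `run_world`, `food_per_tick` and `mutation_rate` are hypothetical names invented for this example.

```python
import random


def run_world(ticks, food_per_tick=50.0, mutation_rate=0.2, seed=0):
    """Toy replicator world (illustrative assumptions only).

    Returns the surviving bonds and the population size after each tick.
    """
    rng = random.Random(seed)  # seeded, so a run can be repeated exactly
    bonds = [1.0]              # assumption: a bond is just its food efficiency
    history = []

    for _ in range(ticks):
        food = food_per_tick   # the only rule of this universe: limited food
        rng.shuffle(bonds)     # random order decides who gets to eat first
        next_bonds = []

        for efficiency in bonds:
            cost = 1.0 / efficiency      # efficient bonds need less food
            if food < cost:
                continue                 # starved: the bond disintegrates
            food -= cost
            next_bonds.append(efficiency)

            # The end goal: make a copy of yourself ...
            child = efficiency
            # ... with a spoonful of coincidence.
            if rng.random() < mutation_rate:
                child = max(0.1, efficiency + rng.uniform(-0.2, 0.2))
            next_bonds.append(child)

        bonds = next_bonds
        history.append(len(bonds))

    return bonds, history


if __name__ == "__main__":
    bonds, history = run_world(30)
    print("population per tick:", history)
    print("mean efficiency:", sum(bonds) / len(bonds))
```

Nothing in here gets anywhere near "animals", of course. It only shows the three ingredients named above (a rule everyone has to obey, random change, and copying as the goal) in the smallest form I could think of.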

You see your creation struggle: a lot of the formed bonds die and disintegrate into their individual parts. Others seem to do fine. They adapt to the rules imposed on them by your universe; they consume the inanimate objects around them, as well as the leftovers of bonds which didn't make it. They grow, split and create duplicates of themselves. Content, you watch your simulation develop. Everything seems stable for now and your newly created life won't collapse anytime soon, so you speed up the time and get yourself a cup of coffee.

A few minutes later you check back in and are happy with the results. The bonds are thriving, much more active than before, and some of them have even joined together, creating even larger bonds. These new bonds, let's just call them animals (because that's obviously where we're going), consist of multiple different types of bonds, sometimes even dozens, which work together, help each other and seem to grow as a whole. Intrigued by what will happen in the future, you speed the simulation up again and binge-watch the entire Lord of the Rings trilogy.

Nine hours have passed and your world has become a truly mesmerizing place. The animals have grown to an insane size, consisting of millions and billions of bonds, and their original makeup has become opaque and confusing. Apparently the rules you set up for this universe encourage working together more than fighting each other, although fights between animals do happen.

The initial tools you created to observe this world are no longer sufficient to study the inner workings of these animals. They have become a black box to you, but that's not a problem; one of the species has caught your attention. They behave unlike any other animal. While most of the species adapt their behaviour to fit their environment, or travel to another environment which fits their behaviour, these special animals have started to alter the existing environment to help their survival. They have even begun to use other animals in a way that benefits themselves, which is different from the usual bonds, since this newly created symbiosis is not permanent. You watch these strange, yet fascinating animals develop, without even changing the general composition of their bonds, and are amazed at the complexity of the changes they have made to their environment and their behaviour towards each other.

As you observe them build unique structures to protect themselves from their environment and listen to their complex way of communicating (at least compared to other animals in your simulation), you start to wonder:

This might be a pretty basic simulation. These "animals" are nothing more than a few blobs on a screen, obeying their programming and sometimes getting lucky. All this complexity you created is actually nothing compared to a single insect in the real world, but at what point do you draw the line? At what point does a program become an organism?

At what point is it morally wrong to pull the plug?

random

shower thoughts