
Comments

iSwimInTheC: What about capturing the actual network traffic that's loaded in? Is there any GraphQL stuff you could glean?

Nmeri17: @iSwimInTheC I don't know how to intercept network requests. I tried inspecting the requests and reading them manually, but the structure is way too arcane; it was easier to just read the UI.

iSwimInTheC: @Nmeri17 You can do it in Chrome or Edge. Press F12 to open the developer tools, then go to the Network tab. There you can see the requests and the payloads being sent and received.

Right-click a request and choose Copy > Copy as cURL to get the curl command for it.

From there it should be relatively easy to decipher, and maybe reverse engineer, the requests.
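
To make the capture automatic rather than manual, one option is to tap responses from inside the page instead of reading them in the Network tab. The following is a minimal sketch. It assumes the page loads its data with `window.fetch` and looks `fetch` up again after the tap is installed. Requests made with `XMLHttpRequest`, or through a reference to `fetch` cached at load time, will not be seen. `installFetchTap` is a hypothetical helper name, not part of any library.

```javascript
// Hypothetical helper: wraps target.fetch so each matching response body is
// parsed as JSON and handed to onJson(url, data). It only sees traffic that
// goes through target.fetch; XMLHttpRequest traffic is not captured.
function installFetchTap(target, onJson, urlFilter = () => true) {
  const originalFetch = target.fetch;
  target.fetch = async (...args) => {
    const response = await originalFetch.apply(target, args);
    // The first argument may be a string, a URL object or a Request object.
    const input = args[0];
    const url = typeof input === "string" ? input : input.url ?? String(input);
    if (urlFilter(url)) {
      // Read a clone so the page can still consume the original body.
      response
        .clone()
        .json()
        .then((data) => onJson(url, data))
        .catch(() => {}); // ignore non-JSON bodies (and errors thrown by onJson)
    }
    return response;
  };
  // Returns a function that restores the original fetch.
  return () => {
    target.fetch = originalFetch;
  };
}
```

In the DevTools console, you could call `installFetchTap(window, (url, data) => console.log(url, data), (url) => url.includes("graphql"))` and then scroll to log matching bodies. The `"graphql"` filter is only a guess based on the question above.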

Nmeri17: @iSwimInTheC I can see the response body, but it's too complex to decipher. There are a lot of unwieldy keys and entries. Locating the follow status and user ID, or working out what parameters such as the page number and next URL mean, is a recipe for hysteria. That's one problem.
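
To cut through deeply nested response bodies, a small recursive walker can list every place a given key appears, along with its path. This is a sketch. The key names used in the test data (`handle`, `follows_you`) are hypothetical placeholders, not confirmed field names from Twitter's responses.

```javascript
// Recursively collect { path, value } for every property named `key`
// anywhere inside a parsed JSON value (objects and arrays alike).
function findKey(node, key, path = "$", out = []) {
  if (Array.isArray(node)) {
    node.forEach((item, i) => findKey(item, key, `${path}[${i}]`, out));
  } else if (node !== null && typeof node === "object") {
    for (const [k, v] of Object.entries(node)) {
      const childPath = `${path}.${k}`;
      if (k === key) out.push({ path: childPath, value: v });
      findKey(v, key, childPath, out); // keep descending into the value
    }
  }
  return out;
}
```

Mapping the hits to their `path` values on a captured body shows where a field actually lives. That is often quicker than reading the raw JSON.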

Secondly, that isn't automatic: I need a solution that can run in a script without me having to inspect network requests by hand. Picture thousands of followers; there's no way their API will let you fetch that many in one go. It was deliberately made that way to deter devs from doing exactly that.
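
The "next URL" mentioned above suggests cursor-based paging. If so, a script would fetch one page at a time and pause between requests rather than asking for everything at once. This is a sketch under that assumption. `fetchPage` is a hypothetical function you would supply; it takes a cursor and resolves to `{ items, nextCursor }`. Nothing here refers to a real Twitter endpoint.

```javascript
const sleep = (ms) => new Promise((resolve) => setTimeout(resolve, ms));

// Walk a cursor-paginated source one page at a time.
// fetchPage(cursor) is hypothetical: it must resolve to { items, nextCursor },
// with nextCursor null/undefined on the last page. The first call gets null.
async function collectAllPages(fetchPage, { delayMs = 2000, maxPages = Infinity } = {}) {
  const all = [];
  let cursor = null;
  for (let page = 0; page < maxPages; page++) {
    const { items, nextCursor } = await fetchPage(cursor);
    all.push(...items);
    if (!nextCursor) break; // no more pages
    cursor = nextCursor;
    await sleep(delayMs); // pause between requests to stay under rate limits
  }
  return all;
}
```

`maxPages` lets a run stop early and be resumed later from a saved cursor. That fits better than a single long pass when rate limits are the bottleneck.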

Going through the UI (or a terminal) seems like the best bet for now.

Related Rants

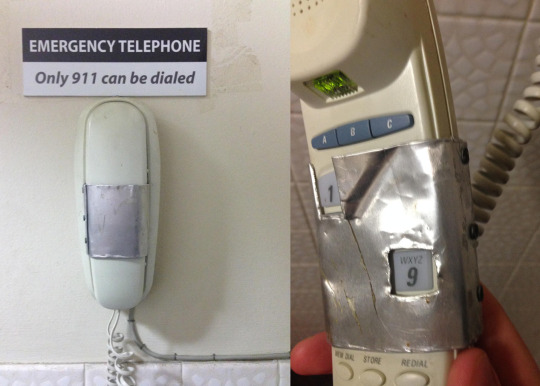

What only relying on JavaScript for HTML form input validation looks like

What only relying on JavaScript for HTML form input validation looks like Found something true as 1 == 1

Found something true as 1 == 1

I have never seen core coding questions here, so this is a shot in the dark. This time it's because I have a phobia of Stack Overflow, and specifically of discussing this objective in front of a wider audience.

Here it goes: ever since Elon Musk overpriced the Twitter APIs, the third-party app I used to unfollow non-followers has been broken. So I wrote a nifty crawler that cycles through the accounts I follow and fishes out the traitors who found me unpleasant enough to unfollow. The script works fine, I suspect, only because I follow a small number of accounts.
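
Once the scraping is separated out, the core check is a set difference: accounts you follow minus accounts that follow you. This is a sketch. It assumes both lists have already been collected as arrays of handles; the function name and sample handles are illustrative.

```javascript
// Return the handles you follow that do not follow you back.
// Comparison is case-insensitive, since Twitter handles are case-insensitive.
function nonFollowers(following, followers) {
  const followerSet = new Set(followers.map((h) => h.toLowerCase()));
  return following.filter((h) => !followerSet.has(h.toLowerCase()));
}
```

Using a `Set` keeps each lookup constant-time on average. This matters when both lists run into the thousands.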

The challenge lies in preemptively deleting some of the elements before the DOM overflows. Realistically, you want to do this every 1,000 rows or so. The problem is that tampering with the rows breaks the page's lazy loader. Apparently, it has an indicator somewhere that uses information from one of the rows to determine the details of the next fetch.
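
One way around the lazy-loader problem is to stop deleting rows altogether. Instead, make the crawler idempotent: record each row under a stable key, such as the account handle, the first time it is seen, and skip it afterwards. Suppose the list is virtualized, meaning the page itself drops rows that scroll out of view. That is an assumption, but it would also fit the `.length` resets described further down. In that case the DOM may never overflow to begin with. This is a sketch; `createRowCollector` is a hypothetical name.

```javascript
// Hypothetical collector: remembers every row by handle, so rows never
// have to be removed from the DOM to avoid double-counting.
function createRowCollector() {
  const seen = new Map(); // handle -> followsYou
  return {
    // Takes already-extracted rows ({ handle, followsYou } or null)
    // and returns only those not seen before.
    ingest(rows) {
      const fresh = [];
      for (const row of rows) {
        if (!row || seen.has(row.handle)) continue;
        seen.set(row.handle, row.followsYou);
        fresh.push(row);
      }
      return fresh;
    },
    // Handles recorded as not following back, in first-seen order.
    nonFollowers() {
      return [...seen].filter(([, followsYou]) => !followsYou).map(([handle]) => handle);
    },
    get size() {
      return seen.size;
    },
  };
}
```

In the page, each pass might look like `collector.ingest(Array.from(document.querySelectorAll(ROW_SELECTOR), extractRow))`. Here `ROW_SELECTOR` and `extractRow` are placeholders for whatever the page's markup requires.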

I've tried many things once we reach that batch limit:

1) Wiping either the first or the last rows

2) Wiping only the even rows

3) Logging the rows already read and wiping them when the batch limit is reached

4) Emptying or hiding them

5) Accessing siblings of the last element and wiping them
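
As an alternative to any of these deletions, the crawler can keep the DOM untouched. It scrolls, reads whatever rows are currently rendered, and stops once several scrolls in a row turn up nothing new. This is a sketch with injected dependencies, so the loop can be tested outside the browser. `crawlByScrolling`, `readRows`, `scrollDown`, `stableRounds` and `delayMs` are all hypothetical names. In the page, `scrollDown` could be `() => window.scrollBy(0, window.innerHeight)`.

```javascript
const wait = (ms) => new Promise((resolve) => setTimeout(resolve, ms));

// Scroll until `stableRounds` consecutive scrolls produce no unseen rows.
// readRows() returns the currently rendered rows as [{ handle, followsYou }].
// Nothing is removed from the DOM, so the page's lazy loader is left alone.
async function crawlByScrolling({ readRows, scrollDown, delayMs = 1500, stableRounds = 3 }) {
  const seen = new Map(); // handle -> followsYou
  let idle = 0;
  while (idle < stableRounds) {
    let added = 0;
    for (const { handle, followsYou } of readRows()) {
      if (!seen.has(handle)) {
        seen.set(handle, followsYou);
        added++;
      }
    }
    idle = added === 0 ? idle + 1 : 0; // reset the counter whenever progress is made
    scrollDown();
    await wait(delayMs); // give the lazy loader time to fetch the next batch
  }
  return seen;
}
```

`stableRounds` trades speed for safety. A higher value tolerates slow fetches at the cost of a few extra idle scrolls at the end.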

I've tried adding custom selectors to the incoming nodes, but something funny happens. During each iteration, at some point, their `.length` gets reset, implying that those selectors were removed or that the contents were moved to another element. I set up a MutationObserver to track the changes, but it picks up nothing.
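
On the MutationObserver finding nothing, there are a few common causes. The observer may be attached to a node that is not an ancestor of the rows. Or it may be observing without `subtree: true`, so insertions deeper in the tree are never reported. Added nodes are also only reported when `childList: true` is set. This is a sketch in which `matchingAddedElements` and `watchRows` are hypothetical names and the row selector is a placeholder. The record-filtering logic is split into a pure function so it can be checked outside the browser.

```javascript
// Pure helper: from a batch of MutationRecords, collect elements matching
// `selector`, whether it is the added node itself or one of its descendants.
function matchingAddedElements(mutations, selector) {
  const found = new Set();
  for (const mutation of mutations) {
    for (const node of mutation.addedNodes) {
      if (node.nodeType !== 1) continue; // 1 = ELEMENT_NODE; skip text/comment nodes
      if (node.matches(selector)) found.add(node);
      for (const el of node.querySelectorAll(selector)) found.add(el);
    }
  }
  return [...found];
}

// Browser-only wiring (MutationObserver does not exist in Node).
// `container` should be an ancestor that stays in the DOM while rows come and go.
function watchRows(container, selector, onRows) {
  const observer = new MutationObserver((mutations) => {
    const rows = matchingAddedElements(mutations, selector);
    if (rows.length > 0) onRows(rows);
  });
  // childList reports added/removed children; subtree extends that to all descendants.
  observer.observe(container, { childList: true, subtree: true });
  return observer; // call observer.disconnect() to stop watching
}
```

Observing a stable ancestor rather than the rows themselves also means the observer keeps working if the page recycles or replaces individual row elements.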

I hope there are no Twitter devs here, because I went to great pains to decipher their classes, and I don't want them throwing another wrench into the works that would disrupt the crawler. So post any suggestions you think could work and I will try them out. If it's impossible to help without running the code, I will have no choice but to post it here.

question

js

javascript

crawler