Comments

-

@Demolishun Default. I use AI autocompletion myself. It's a blast to work with (most of the time). It's the default completion, plus 90% of what you would type anyway, and it becomes 100% if you keep typing. There's no reason not to use AI autocomplete. How can people hate autocomplete? Because it can be wrong? Just keep typing so it selects the next element in the list. AI autocomplete works the same way but doesn't show a list. The idea that it's something different is all in people's heads. And no, it's not stealing someone else's code if you would've typed it anyway. Everything you know is stolen, just like the AI's knowledge, so it often matches.

-

@retoor

Jeez, we are using AI now for autocomplete? AUTOCOMPLETE?!

Just use a good language with a proper type system and you can have a perfectly capable autocomplete.

This shit has been around for decades.

I guess this concept is new to folks who use dynamically typed languages.

@Lensflare It's not the same, dude. Normal autocomplete completes a word. This completes a whole line. It's a huge upgrade: an autocomplete that uses your coding style and naming conventions. That's the difference. And yes, I know I kill a tree or a squirrel every time I let it predict something. I have 700 autocompletes a week. I'd say that's not nothing.

Edit: you're right! And it actually does autocomplete for dynamic languages too. It's even better than I thought.

cprn: @retoor AI autocomplete doesn't understand the context, though. You ask it to work on your code, and it provides exactly what you asked for… but for one of the projects it was trained on. Sometimes it even messes up variable names. It's like a very, very annoying intern goldfish: it never learns, can't remember what you talked about yesterday, and lies when asked if it understands. If you want whole lines, or even paragraphs, snippets are way better.

@cprn It often even autocompletes variables that don't exist. It's crazy. The art is to work with AI without becoming lazy. Stay sharp, I guess. Do you use AI autocomplete?

-

@cprn Ollama is cool. Not compatible with Codeium, I guess. I have a lot of fun with Ollama. Did you try the Qwen models? So light!

-

cprn: @retoor I switched from Deepseek Coder v2 to Qwen 2.5 Coder yesterday. 😂 I still don't use it, but if I ever feel like it, my configs are ready.

I have a separate Codeium plugin (it talks to an online service, so it's kind of slow), but I keep it disabled because it doesn't use Neovim's LSP capabilities and provides its own API instead, so I have to invoke commands differently, use hardcoded key bindings, etc. I was thinking about wrapping the plugin in a thin facade to make it “native”, but after playing with other LLMs I kind of gave up on AI in general for now. Is Codeium worth it?

@cprn I'm a huge fan of Codeium. I noticed that its predictions work better for stricter languages like C than for dynamic ones like Python. For C I'd really recommend Codeium. I once had 800 completions in a week or so. That's sick, but C is a lot of typing.

Codeium is getting better and better. At first it didn't handle pointers well and made so freaking many mistakes that I quit for a while too. Now I'm going hardcore on it. I have no idea how comfortable it is in Neovim. In VS Code it's quite fast. I'd say it's worth a try.

How many billion parameters do the models you're using have? I'm playing with 0.5b to 3b models locally and on a VPS. I'm working on retoor1b 😁 A retoor clone of me 😁 1b is enough. It contains coding knowledge as well.

cprn: @retoor “A retoor clone of me … 1b is enough” 😂

The `deepseek-coder-v2` I used was 16b, but `qwen2.5-coder` I use now is 7b and yet seems to be handling difficult cases better, at least according to my crude 2 hours of testing and some online comparison videos. Qwen also has 0.5b, 1.5b, 3b, 14b and 32b versions available.

Related Rants

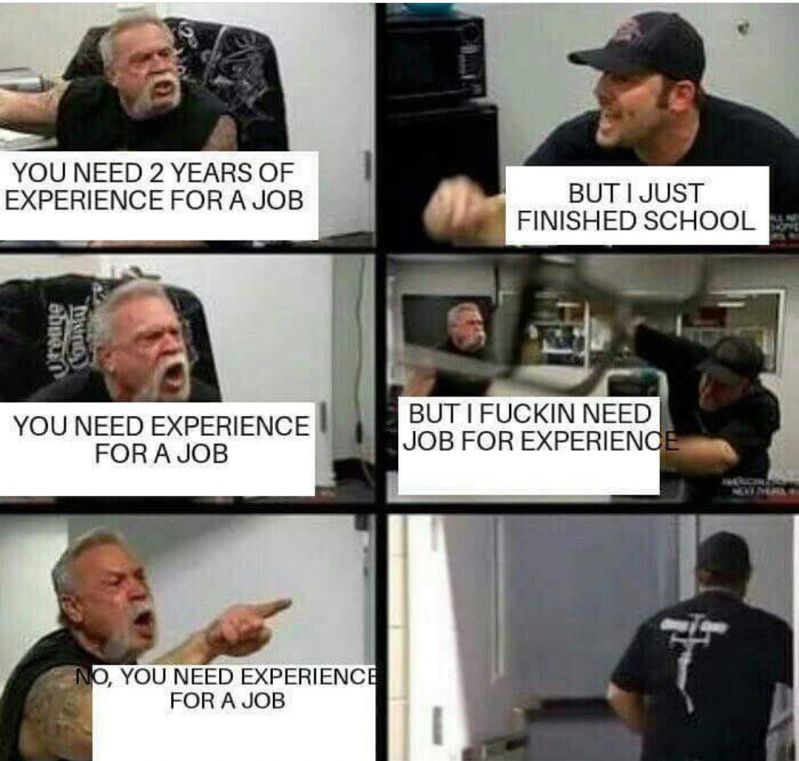

The truth, whole truth, and nothing but there truth.

The truth, whole truth, and nothing but there truth. Job requirements nowadays...

Job requirements nowadays... I don't really like memes but this one is way too relatable...

I don't really like memes but this one is way too relatable...

I rarely post memes because I guess we all know them already, but this one is so good: https://reddit.com/r/VisualStudio/...

(Link cuz it's animated)

Tags: joke/meme, vscode, autocomplete, link, animated, experience