Comments

jestdotty6629240dsee! tokens should just be regular WinZip compression

It's awfully opinionated to assume you should dictate the way the AI interprets its information. There would be far more efficient ways if they were made on the fly.

Wisecrack9419240dIf anyone doesn't know what the fuck I'm talking about or is confused on anything I'm happy to answer any questions.

Basically the reason it is important to preserve latents and not change them directly is to preserve orthogonality (right angles) between vectors. On average any random vector you pick is likely to be orthogonal to most other vectors for a high enough dimensional embedding.
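A quick way to see the near-orthogonality claim for yourself is to sample random vectors and measure how close their pairwise cosines are to zero. This is only a minimal sketch: the function name `mean_abs_cosine`, the Gaussian sampling, and the sample sizes are illustrative assumptions, not anything from a real model.

```python
import numpy as np

def mean_abs_cosine(dim, n_vectors=200, seed=0):
    """Average |cosine similarity| over all distinct pairs of random vectors."""
    rng = np.random.default_rng(seed)
    X = rng.normal(size=(n_vectors, dim))           # random Gaussian vectors
    X /= np.linalg.norm(X, axis=1, keepdims=True)   # normalise to unit length
    cos = X @ X.T                                   # pairwise cosines
    off_diag = cos[~np.eye(n_vectors, dtype=bool)]  # drop self-similarity
    return float(np.abs(off_diag).mean())

for dim in (2, 16, 128, 1024):
    print(dim, round(mean_abs_cosine(dim), 3))
```

The average shrinks roughly like 1/sqrt(dim), so at embedding-sized dimensions a random pair is close to a right angle.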

Why is this important? Because the entire mechanism assumes statistical independence between vectors as a starting condition (for jointly Gaussian variables, uncorrelated means independent). Break that and models do weird things, with more edge-case behaviors and failure modes.

Changing the latents directly during training will therefore cause other vectors on other training examples to have higher loss (poorer performance) than they otherwise would.

You can think of a hypersurface as a system projective hypothesis about a subset of vector embeddings for a particular prompt or training example.

Wisecrack9419239d@jestdotty "WinZip compression"

Unironically, a few people tried something similar with zip, back when LLMs were still very early and producing short stories intermixed with gibberish.

It was still mostly gibberish, but it was semi-coherent gibberish from what I heard, which for the time was impressive for a language model built on zip!
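The comment doesn't say how those zip experiments actually worked, so the following is only a guess at the general idea: score each candidate continuation by how well it compresses together with the preceding text, and pick the one that adds the fewest bytes. The helper names `compressed_size` and `pick_continuation` and the toy corpus are made up for illustration.

```python
import zlib

def compressed_size(text):
    """Size in bytes of the zlib-compressed text (smaller = more predictable)."""
    return len(zlib.compress(text.encode("utf-8"), 9))

def pick_continuation(corpus, context, candidates):
    """Choose the candidate that compresses best after corpus + context."""
    base = corpus + context
    return min(candidates, key=lambda c: compressed_size(base + c))

# Toy "training data": the compressor has already seen this phrase many times.
corpus = "the cat sat on the mat. " * 20
best = pick_continuation(corpus, "the cat sat on the ", ["mat. ", "qzx!k"])
print(best)
```

A continuation the compressor has effectively seen before costs almost nothing extra, which is what makes compression usable as a crude predictor.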

BordedDev3131239dI can understand this, but I couldn't explain it to someone without taking a good chunk of time processing it.

It does sound reasonable, I assume it would also improve cohesion over previous conversation. That would let it treat the file it was asked to read as the same file if it were to read it again, but this time analysing it for typos instead of bugs, for example? I've had luck giving the AI a persona to do that otherwise, but this sounds like it could help there as well.

Wisecrack9419239d@BordedDev "It does sound reasonable, I assume it would also improve cohesion over previous conversation"

Actually I didn't consider that but you're right. Damn good idea!

What's more, now that you mention it, a smaller test would be to add this to just lookups on the context window and see if it lowers perplexity.
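For reference, perplexity here is just the exponential of the average negative log-likelihood of the correct next tokens, so "does it lower perplexity" is easy to measure. A minimal sketch, assuming you already have per-position logits and target token ids (the function name and array shapes are illustrative):

```python
import numpy as np

def perplexity(logits, targets):
    """exp(mean negative log-likelihood) of the target tokens.

    logits:  (n_positions, vocab_size) unnormalised scores
    targets: (n_positions,) index of the correct next token at each position
    """
    logits = np.asarray(logits, dtype=float)
    targets = np.asarray(targets)
    # Numerically stable log-softmax.
    shifted = logits - logits.max(axis=-1, keepdims=True)
    log_probs = shifted - np.log(np.exp(shifted).sum(axis=-1, keepdims=True))
    nll = -log_probs[np.arange(len(targets)), targets]
    return float(np.exp(nll.mean()))
```

A model that guesses uniformly over a 4-token vocabulary scores a perplexity of 4, and the number drops as probability mass moves onto the right tokens.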

Don't know if you could get away with it without training though.

Effectively we could view it as a normalization layer for the error signal and noise introduced at each step in the process of going from token->embedding->logit to output token.

So now that it's posted to the internet, we should see it appearing in big models in the next 30 days or so, ha.

BordedDev3131239d@Wisecrack Hahaha definitely, and then we'll hear about the new breakthrough AI 10 days after that

Wisecrack9419239d@BordedDev I think we're gonna see some pretty big developments in 2025.

There are too many people now working at the coalface for us to fail to climb the difficulty curve of diminishing returns on existing architectures.

We're at the stage and momentum now where a year of research yields more than one year of progress. Of course that's a subjective statement open to interpretation, but I don't think too many experts would disagree with that assessment.

figoore237239d@Wisecrack fuck yeah, new science shit, LOVE IT! 🤟

For anyone who struggles to understand our crazy mathematician’s theory, here is a short explanation:

Imagine you have a box of different-shaped puzzle pieces. Each piece represents some information, like a word in a sentence.

Now, instead of changing the shape of the puzzle pieces to make them fit together, you use a magic board that slightly changes the way the pieces connect when you put them on it. This way, the pieces stay the same, but they fit better depending on what’s around them.

Some people think we should just squish and stretch the puzzle pieces to make them fit, but that can make a big mess and break the puzzle. Instead, it’s better to have the magic board do the adjusting so everything still works smoothly.

This idea helps computers understand words better and make fewer mistakes when figuring out what people mean!

Wisecrack9419238d@figoore what a fantastic description, figoore.

Thank you man.

Just for you, here's an image of the new black book. I don't usually use digital, but I'm becoming more comfortable with it because it lets me better organize ideas.

Some of these are known topics, some are non-trivial novel applications of existing ideas, and some, like totems and obelisks, are completely new and still need time to cook.

I'm still filling it out, it is very sparse, but I want to have it ready for when I'm in a stable position and have enough passive income to start my own research lab full time.

figoore237238d@Wisecrack i LOVE it 🤩

Specially the “experiments and current thoughts” which are not connecting to anything 😂

Anyway i love your black book and the different research ideas! Keep it up bro! It is pretty cool!

And how is your research lab setup going? Is it in this year's scope, or next year's?

Wisecrack9419238d@figoore "Specially the “experiments and current thoughts” which are not connecting to anything"

lol yeah. There's actually a good reason for that.

I have a formalization process. 1. White board -> 2. Gather existing research papers and prior art -> 3. Do a write-up on novel applications and scope -> 4. Write a doc on experimental setup, data requirements, etc -> 5. Code and test -> 6. write an experiment post-mortem -> 7. move to network as a formal node.

For example some of the grey nodes are placeholders for that.

Lab is slow going but I'm on track for late next year. Have to get together roughly $60k to fund myself and one other person who's mostly in it for the opportunity to do research. Really low cost of living in my area w/ my current budget, just the essentials, so it's foreseeable to pay myself and one other $15-20k per year for say 2-3 years of research. Will take a certain kind of person though, someone willing to slum it with me for the opportunity to do cool research.

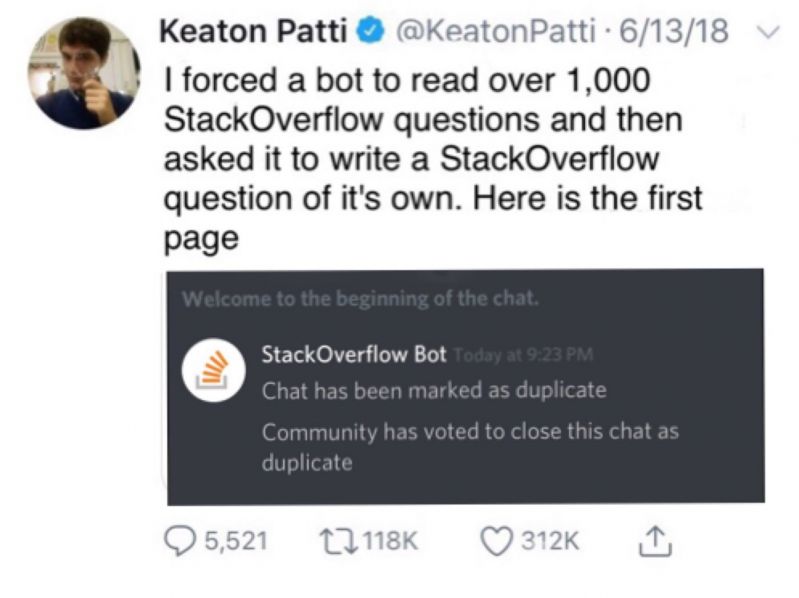

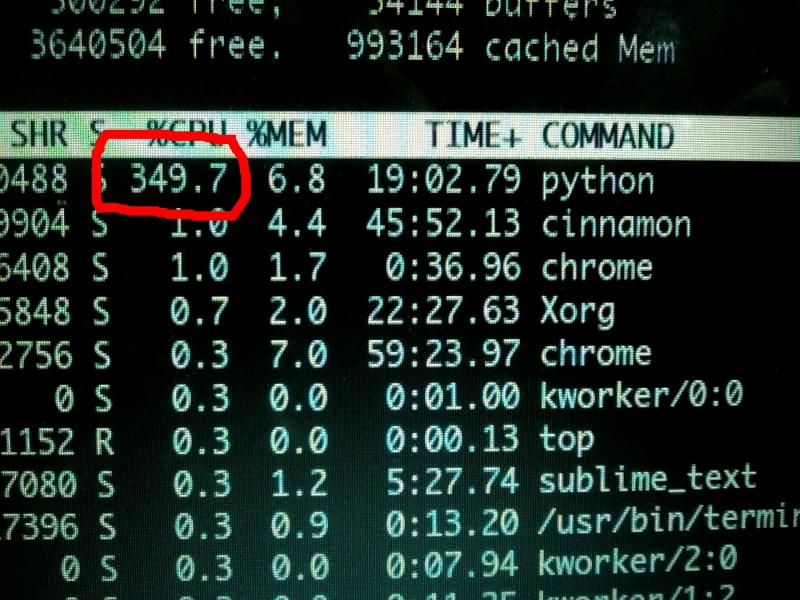


Adaptive Latent Hypersurfaces

The idea is rather than adjusting embedding latents, we learn a model that takes the context tokens as input, and generates an efficient adapter or transform of the latents, so when the latents are grabbed for that same input, they produce outputs with much lower perplexity and loss.

This can be trained autoregressively.

This is similar in some respects to hypernetworks, but applied to embeddings.

The thinking is we shouldn't change latents directly, because any given vector will generally be orthogonal to any other. Changing the latents introduces variance for some subset of other inputs over some distribution that is partially or fully out-of-distribution to the current training and verification data sets, thus ultimately leading to a plateau in loss-drop.

Therefore, by autoregressively taking an input, and learning a model that produces a transform on the latents of a token dictionary, we can avoid this ossification of global minima, by finding hypersurfaces that adapt the embeddings, rather than changing them directly.

The result is a network that essentially acts as a compressor of all relevant use cases, without leading to overfitting on in-distribution data and underfitting on out-of-distribution data.
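To make the shape of the idea concrete, here is a rough forward-pass sketch, not a tested implementation of the proposal. Everything specific in it is an assumption: the sizes, the mean-pooling of the context, the single linear "generator" (`W_u`, `W_v`), and the low-rank form `I + U V^T` for the transform. The only point is that the embedding table `E` stays frozen and the context decides how it gets read.

```python
import numpy as np

rng = np.random.default_rng(0)
VOCAB, DIM, RANK = 50, 16, 2   # illustrative sizes only

# Frozen token embeddings ("latents"); never updated in this sketch.
E = rng.normal(size=(VOCAB, DIM))

# Hypothetical adapter generator: linear maps from a pooled context vector
# to the factors of a low-rank transform. Only these would be trained.
W_u = rng.normal(scale=0.01, size=(DIM, DIM * RANK))
W_v = rng.normal(scale=0.01, size=(DIM, DIM * RANK))

def make_transform(context_ids, W_u=W_u, W_v=W_v):
    """Build a context-dependent transform T = I + U V^T."""
    pooled = E[context_ids].mean(axis=0)        # (DIM,) summary of the context
    U = (pooled @ W_u).reshape(DIM, RANK)
    V = (pooled @ W_v).reshape(DIM, RANK)
    return np.eye(DIM) + U @ V.T

def adapted_lookup(token_ids, context_ids, W_u=W_u, W_v=W_v):
    """Look up frozen embeddings, then apply the context's transform."""
    T = make_transform(context_ids, W_u, W_v)
    return E[token_ids] @ T.T                   # E itself is left untouched

context = [3, 7, 11]
print(adapted_lookup(context, context).shape)   # (3, 16)
```

Training is not shown. In the autoregressive setup described above, the loss on the next token would presumably flow back into `W_u` and `W_v` only, which is what keeps one example's update from moving the latents that other examples rely on.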

random

machine learning