Ranter

Join devRant

Do all the things like

++ or -- rants, post your own rants, comment on others' rants and build your customized dev avatar

Sign Up

Pipeless API

From the creators of devRant, Pipeless lets you power real-time personalized recommendations and activity feeds using a simple API

Learn More

Comments

-

I am too dumb to understand this

edit: so you have i-1 numbers and you have the average of them, and you want to add a new number to the average using the old average? -

7Raiden8647ySo when you tag it "fast average" you mean it's faster to calculate? As in, it's more intuitive? I totally agree!

7Raiden8647ySo when you tag it "fast average" you mean it's faster to calculate? As in, it's more intuitive? I totally agree! -

mundo0348297y@iZeroCool agree, it is just simplified

mundo0348297y@iZeroCool agree, it is just simplified

The question here is, why is the not simplified one the standard now? Is it really? -

Root772327yThe first one doesn't make sense to me: too much overlap. The second is how I'd calculate it.

Root772327yThe first one doesn't make sense to me: too much overlap. The second is how I'd calculate it. -

If i is very big the second one can be rounded as o + (n-o)/i = o

This especially happens using low precision types, so I would discourage using it if you think the number of elements is going to be really big.

The standard one doesn't face this problem -

@bigworld12 Yes, but I went about it differently.

@kleinerKobold You're right. The removal of the multiplication also removes risk of overflow for large numbers or large amounts of numbers.

@michezio I had noticed that too!

(o(i - 1) + n) / i

= (oi - o + n) / i

= o + (n - o) / i -

If the calculation of n is heavy, utilizing Kahan summation algorithm should alleviate the pain of low precision. You might also be able to rewrite whatever loop you're adding to the average with for pairwise summation.

-

@michezio if I is very big, new numbers won't affect the average that much, so it would make sense that the new average rounds off to old average

-

@bigworld12 I agree, but it still won't be the same. I had run some tests checking the difference with lots of random numbers; I'll find (or rewrite) it and post results.

-

I've done some testing, and at the end of the day both methods suffer from some kind of precision error. So it depends only on the precision of type used, and if efficiency is an issue, the simplified version is actually better.

Anyway a better way to deal with running averages would be to store an unaveraged sum and doing the division only when you actually need the average value. So you have less precision issues and more efficiency, but this is not always applicable... -

@michezio Agreed, but the general use case (sum) is not one we're talking about (mostly). The need for running average is generally when the average is needed in each cycle.

By the easy, what were the actual results and errors that you found, compared to sum then average? -

@anArchLinuxer a quick test over 340 millions randomly generated numbers (floats) between 0 and 1 using java doubles to calculate the averages. As you can see errors are really small.

Related Rants

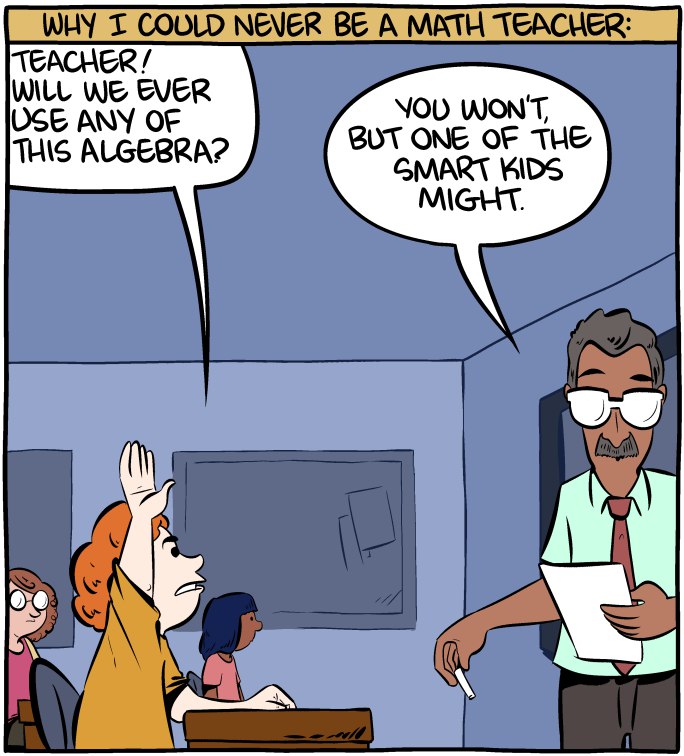

Math is hard.

Math is hard. When you wanted to know deep learning immediately

When you wanted to know deep learning immediately :)) finally a good answer

:)) finally a good answer

Devrant::Mathematicians, unite!

I found a new way to calculate running average.

Old:

a(o, n, i) = (o(i - 1) + n) / i

New:

a(o, n, i) = o + (n - o) / i

a: New average function

o: Old average

n: Element to add to average

i: New number of elements

random

math

so bored

fast average