Related Rants

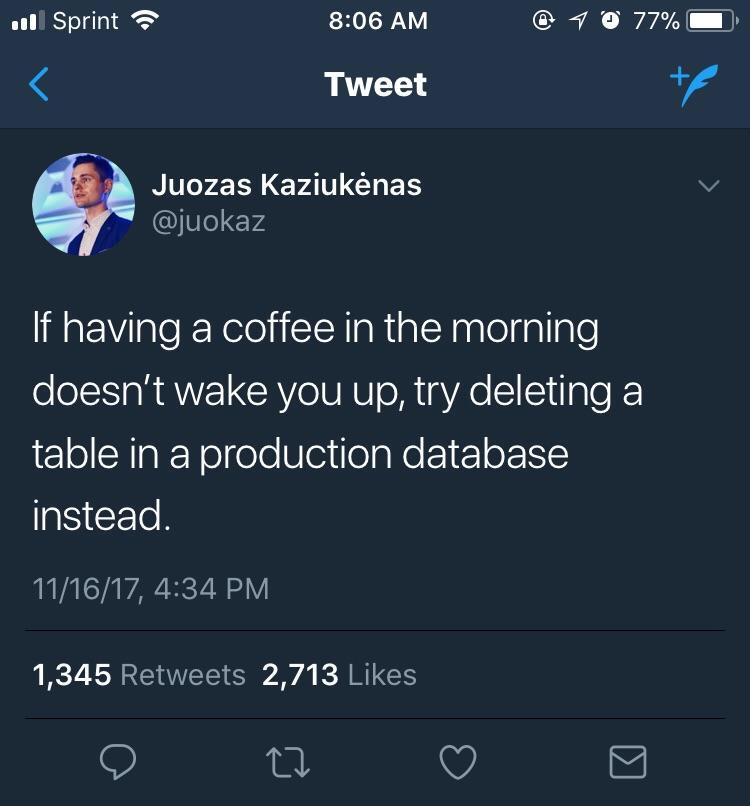

Oh sh*t

Oh sh*t Hacking yourself.

Hacking yourself. I am so glad that i learned at middleschool how to use excel😂

I am so glad that i learned at middleschool how to use excel😂

My Spark program that applies changes from a Kafka topic to a DB table works end-to-end. But if it is manually terminated while an insert/update/delete is in progress, the whole table's data gets dropped. How can I handle this safely?
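One direction that might help, sketched under stated assumptions: the post does not say how the write is done, so this only illustrates the general idea of applying each batch of changes inside a single database transaction. A termination partway through then rolls the batch back instead of leaving the table half-written. Python's built-in `sqlite3` stands in for the real database here, and the `items` table, the `(op, id, value)` change format, and the `apply_changes` helper are all hypothetical names made up for the illustration.

```python
import sqlite3


def apply_changes(conn, changes):
    """Apply a batch of (op, id, value) change events as one transaction.

    Hypothetical helper: either every change in the batch is committed,
    or none of them are.
    """
    # `with conn` commits if the block finishes and rolls back if an
    # exception escapes it.
    with conn:
        for op, key, value in changes:
            if op == "insert":
                conn.execute(
                    "INSERT INTO items (id, value) VALUES (?, ?)", (key, value)
                )
            elif op == "update":
                conn.execute(
                    "UPDATE items SET value = ? WHERE id = ?", (value, key)
                )
            elif op == "delete":
                conn.execute("DELETE FROM items WHERE id = ?", (key,))
            else:
                raise ValueError(f"unknown op: {op}")


# In-memory stand-in for the real target table (assumption for the demo).
conn = sqlite3.connect(":memory:")
conn.execute("CREATE TABLE items (id INTEGER PRIMARY KEY, value TEXT)")
conn.execute("INSERT INTO items VALUES (1, 'a'), (2, 'b')")
conn.commit()

# Simulate an interruption: the third event fails after a delete and an
# update have already been issued in the same batch.
try:
    apply_changes(
        conn, [("delete", 1, None), ("update", 2, "B"), ("boom", 0, None)]
    )
except ValueError:
    pass

rows_after_failure = conn.execute(
    "SELECT id, value FROM items ORDER BY id"
).fetchall()

# A batch that completes is committed as a whole.
apply_changes(conn, [("insert", 3, "c"), ("delete", 1, None)])
rows_after_success = conn.execute(
    "SELECT id, value FROM items ORDER BY id"
).fetchall()
```

After the failed batch the table still holds its original two rows, because the delete and update were rolled back together. Whether this maps onto the real job depends on how it writes. If the writer truncates or overwrites the table before reloading it, that step would also need to sit inside the same transaction, or be replaced by a write to a staging table.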

question

kafka

database

spark