

Comments

-

@iiii exploring simulations of entanglement.

If Jb and K are uncorrelated, and Ja and K *are* correlated, then it stands to reason that even though K was generated from a completely separate stream of random numbers, it is in some way intimately related to Ja. We also measure Jb's correlation with K to make sure there are no *incidental* correlations between J and K that might exist *before* additional data is encoded into J.

Basically, at each step we randomly replace elements of K and remeasure its correlation with Ja and Jb, keeping the transformations of K that increase the magnitude of its correlation with Ja (positive or negative), in other words, that drive it further from 0.
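A minimal sketch of that search loop, under stated assumptions: J and K are flattened to plain lists of floats in [0, 1), one element of K is replaced per step, and a change is kept only if it raises |r(K, Ja)| - |r(K, Jb)|. That combined score, the helper names `pearson` and `search_k`, and the default thresholds are all hypothetical choices for illustration, not the author's code.

```python
import random
import secrets


def pearson(xs, ys):
    """Pearson correlation coefficient of two equal-length sequences."""
    n = len(xs)
    mx = sum(xs) / n
    my = sum(ys) / n
    cov = sum((x - mx) * (y - my) for x, y in zip(xs, ys))
    vx = sum((x - mx) ** 2 for x in xs)
    vy = sum((y - my) ** 2 for y in ys)
    return cov / (vx * vy) ** 0.5


def search_k(ja, jb, steps=20000, target=0.5, tol=0.1, rng=None):
    """Hypothetical hill-climb: randomly replace one element of K per step and
    keep the change only if it raises |r(K, Ja)| - |r(K, Jb)| (assumed score).

    Stops early once |r(K, Ja)| > target and |r(K, Jb)| < tol.
    """
    rng = rng if rng is not None else secrets.SystemRandom()  # OS entropy by default
    k = [rng.random() for _ in ja]  # fresh, independently drawn K
    r_a, r_b = abs(pearson(k, ja)), abs(pearson(k, jb))
    for _ in range(steps):
        if r_a > target and r_b < tol:
            break  # predetermined satisfactory level reached
        i = rng.randrange(len(k))
        old = k[i]
        k[i] = rng.random()  # random replacement of one element
        new_a, new_b = abs(pearson(k, ja)), abs(pearson(k, jb))
        if new_a - new_b > r_a - r_b:
            r_a, r_b = new_a, new_b  # keep the transformation
        else:
            k[i] = old  # revert it
    return k, r_a, r_b


if __name__ == "__main__":
    gen = random.Random(1)  # seeded only so the demo data is repeatable
    jb = [gen.random() for _ in range(64)]  # J before encoding
    data = [gen.choice([0.0, 1.0]) for _ in range(64)]  # hypothetical payload
    ja = [b + d for b, d in zip(jb, data)]  # J after encoding
    k, r_a, r_b = search_k(ja, jb, steps=5000)
    print(f"|r(K, Ja)| = {r_a:.3f}, |r(K, Jb)| = {r_b:.3f}")
```

One design consequence worth keeping in mind: since every accepted change is chosen by looking at Ja, the final K is shaped by Ja through the selection step itself.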

This is also why it's important to use hardware entropy sources (or other high-entropy number generators): I suspect, though I haven't confirmed, that with things like pseudorandom number generators, J and K would turn up with a higher rate of incidental correlation than with high-entropy sources. -
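That suspicion can be checked directly by measuring the baseline: draw many independent J/K pairs from each generator and compare the average |r|. This sketch assumes Python's `secrets.SystemRandom` (which reads the operating system's randomness source via `os.urandom`) as the high-entropy side and `random.Random` (Mersenne Twister in CPython) as the PRNG side; the helper names and sizes are illustrative. For truly independent vectors of length n, |r| is typically on the order of 1/sqrt(n), which gives a rough reference point.

```python
import random
import secrets
import statistics


def pearson(xs, ys):
    """Pearson correlation coefficient of two equal-length sequences."""
    n = len(xs)
    mx = sum(xs) / n
    my = sum(ys) / n
    cov = sum((x - mx) * (y - my) for x, y in zip(xs, ys))
    vx = sum((x - mx) ** 2 for x in xs)
    vy = sum((y - my) ** 2 for y in ys)
    return cov / (vx * vy) ** 0.5


def incidental_correlation(rng, n=256, trials=200):
    """Mean |r| between independently drawn length-n vectors J and K."""
    rs = []
    for _ in range(trials):
        j = [rng.random() for _ in range(n)]
        k = [rng.random() for _ in range(n)]
        rs.append(abs(pearson(j, k)))
    return statistics.mean(rs)


if __name__ == "__main__":
    print("OS entropy (secrets.SystemRandom):", incidental_correlation(secrets.SystemRandom()))
    print("Mersenne Twister (random.Random):", incidental_correlation(random.Random(42)))
    print("Rough null scale, 1/sqrt(n):", 1 / 256 ** 0.5)
```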

The first purpose is to see whether "work", or operations, can be represented as mappings from high- to low-entropy states.

The second is to see whether the reverse process of observing, or decoding, K can be represented as a solution, or a space of solutions, smaller than J. -

It's interesting because I'm pretty sure it can be modelled as a system dynamics problem:

Take the bathtub problem from SDM: you have an inflow pipe, an outflow drain, and the tub itself, plus the flow, which is the throughput of the stock (in this case, water) through the system, based only on the pipe-in rate and the drain-out rate.

J represents the inflow pipe. K represents the tub. L would be the drain, or decoder.
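A minimal stock-and-flow sketch of the bathtub, using simple Euler integration: each step the stock changes by (inflow - outflow) * dt. Under the mapping above, J would supply `inflow`, K is the stock, and L would be `outflow`; the function name and its arguments are hypothetical.

```python
def simulate_stock(stock0, inflow, outflow, t_end, dt=1.0):
    """Euler-integrate one stock: d(stock)/dt = inflow(t) - outflow(t).

    inflow and outflow are functions of time; returns a list of (t, stock).
    """
    t, stock = 0.0, stock0
    history = [(t, stock)]
    for _ in range(round(t_end / dt)):
        stock += (inflow(t) - outflow(t)) * dt  # net flow accumulates in the stock
        t += dt
        history.append((t, stock))
    return history


# Constant inflow of 5 and outflow of 3 units per period, starting empty.
print(simulate_stock(0, lambda t: 5, lambda t: 3, t_end=10)[-1])  # (10.0, 20.0)
```

The stock depends only on the accumulated difference between the two rates, which is the property the bathtub tasks below are testing.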

If you read through "Bathtub Dynamics: Initial Results of a Systems Thinking Inventory" by Linda Booth Sweeney over at Harvard (she's WICKED SMAAAT!), check out pages six and seven... -

"In the square wave pattern used in task 1 both inflow and outflow are constant during each segment. This is among the simplest possible graphical integration task—if the net flow into a stock is a constant, the stock increases linearly. The different segments are symmetrical, so solving the first (or, at most, first two) segments gives the solution to the remaining segments. We expected that performance on this task would be extremely good, so we also tested performance for the case where the inflow is varying: In BT/CF task 2 the outflow is again constant and the inflow follows a sawtooth wave, rising and falling linearly. Task 2, though still elementary, provides a slightly more difficult test of the subjects’ understanding of accumulations, in particular, their ability to relate the net rate of flow into a stock to the slope of the stock trajectory."

-

"Solution to Task 1: The correct answer to BT/CF task 1 is shown in Figure 3 (this is an actual subject response). Note the following features:

1. When the inflow exceeds the outflow, the stock is rising.

2. When the outflow exceeds the inflow, the stock is falling.

3. The peaks and troughs of the stock occur when the net flow crosses zero (i.e., at t = 4, 8, 12, 16).

4. The stock should not show any discontinuous jumps (it is continuous).

5. During each segment the net flow is constant so the stock must be rising (falling) linearly.

6. The slope of the stock during each segment is the net rate (i.e., ±25 units/time period).

7. The quantity added to (removed from) the stock during each segment is the area enclosed by the net rate (i.e., 25 units/time period * 4 time periods = 100 units, so the stock peaks at 200 units and falls to a minimum of 100 units)." -
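The Task 1 answer quoted above can be reproduced numerically. Assumptions, since the excerpt gives only the net rate: the net flow is +25 units/period for t in [0, 4), -25 for [4, 8), alternating after that, and the stock starts at 100 units (consistent with the quoted peak of 200 and minimum of 100). Because the net flow is constant within each unit period, stepping one period at a time is exact here.

```python
def net_flow(t):
    """Assumed Task 1 net flow (inflow - outflow), in units per time period.

    Square wave: +25 for t in [0, 4), -25 for [4, 8), and so on.
    """
    return 25 if (t // 4) % 2 == 0 else -25


def integrate(stock0, flow, periods):
    """Step the stock one time period at a time."""
    stocks = [stock0]
    for t in range(periods):
        stocks.append(stocks[-1] + flow(t))
    return stocks


stock = integrate(100, net_flow, 16)  # assumed initial stock of 100 units
for t in (4, 8, 12, 16):
    print(t, stock[t])  # 200 at t = 4 and 12; 100 at t = 8 and 16
```

This matches features 3, 5, 6, and 7 of the list: turning points where the net flow changes sign, linear segments with slope ±25, and 100 units added or removed per segment.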

Look at this: unless I've misunderstood something, with a few modifications to the system in question (namely, complex time delays), any system that can be represented through system dynamics can *also* be represented by one or more functions with nontrivial output.

And that's our system with Ja, Jb, K, and the addition of L. -

I know it's kind of out in left field. I'm trying to cross-pollinate, or think laterally, between statistics, matrix math, and information theory, but it's not that far-fetched a concept.

Look at machine learning, for example, namely autoencoders: three layers, where the middle layer, being narrower than the input, essentially forces the system to learn more general relations rather than simply memorize. It's the same principle here: high entropy is noise, and K represents low entropy *between* J and L. In other words, K is like the middle layer of an autoencoder.
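A minimal sketch of the bottleneck idea, assuming NumPy. For a purely linear autoencoder trained with squared error, the optimal k-unit middle layer spans the same subspace as the top k principal components, so an SVD stands in for training here. The data (6 observed columns driven by 2 hidden factors plus small noise) is made up for illustration, and `linear_bottleneck` is a hypothetical name.

```python
import numpy as np


def linear_bottleneck(x, k):
    """Encode rows of x into k dims and decode back, using the top-k principal
    directions (the optimum of a linear autoencoder under squared error)."""
    mean = x.mean(axis=0)
    centered = x - mean
    # Rows of vt are principal directions, ordered by singular value.
    _, _, vt = np.linalg.svd(centered, full_matrices=False)
    w = vt[:k]                # encoder weights: d -> k
    codes = centered @ w.T    # the narrow "middle layer"
    recon = codes @ w + mean  # decoder: k -> d
    return codes, recon


rng = np.random.default_rng(0)
latent = rng.normal(size=(200, 2))  # 2 hidden factors
mix = rng.normal(size=(2, 6))       # spread across 6 observed columns
x = latent @ mix + 0.01 * rng.normal(size=(200, 6))

codes, recon = linear_bottleneck(x, 2)
print(codes.shape, np.abs(x - recon).max())  # 2 codes per row, small error
```

With a 2-unit middle layer the reconstruction keeps the structure and drops most of the noise; shrinking it to 1 unit loses one of the real factors.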

If a function can be extracted from L that approximates Ja for input K (where Ja is J with the data added in some manner, such as a bias), then L could potentially, in some form, be a function that produces numbers like the data in Ja for some variable n. And if L is reversible, then inputting Ja rather than K should give the values of the variables that produced Ja, assuming Ja is the result of some black-box function that's hard to reverse. -

Think of the knapsack problem. For a ridiculous analogy, imagine heating that knapsack to a high temperature in an electromagnetic field (generating a K with a high correlation to Ja). Its contents are now "magnetized".

You then take a magnet, L (magnets, how the fuck do they work?), and instead of working NP-hard to get at the contents, you "fish them out" with relative ease (inverting L by inputting Ja instead of K, assuming L is some function approximating a more complex function, with K and Ja as points on its surface).
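For contrast with the "magnet", here is what working the problem directly looks like. The 0/1 knapsack problem is NP-hard in general; the textbook exact method is dynamic programming over capacities, which runs in O(n·W) time for n items and capacity W (pseudo-polynomial, since W can be exponential in its bit length). This is the conventional algorithm, not the author's idea; the function name and example numbers are illustrative.

```python
def knapsack(values, weights, capacity):
    """0/1 knapsack by dynamic programming; returns (best value, chosen indices)."""
    n = len(values)
    # best[i][c] = best value using the first i items with capacity c.
    best = [[0] * (capacity + 1) for _ in range(n + 1)]
    for i in range(1, n + 1):
        v, w = values[i - 1], weights[i - 1]
        for c in range(capacity + 1):
            best[i][c] = best[i - 1][c]  # skip item i - 1
            if w <= c and best[i - 1][c - w] + v > best[i][c]:
                best[i][c] = best[i - 1][c - w] + v  # take item i - 1
    # Walk back through the table to recover which items were packed.
    chosen, c = [], capacity
    for i in range(n, 0, -1):
        if best[i][c] != best[i - 1][c]:
            chosen.append(i - 1)
            c -= weights[i - 1]
    return best[n][capacity], sorted(chosen)


print(knapsack([60, 100, 120], [10, 20, 30], 50))  # (220, [1, 2])
```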

That's the idea in a nutshell.

Or maybe I'm just a lunatic. -

@rEaL-jAsE Quick, call Neo. I THINK I OPENED A WORMHOLE!

Oh no, I'm being sucked in.

Tell my I Love Lucy body pillow that I love her.

I leave my rare fake mustache collection to my dog Lucky. I also leave my rare hat collection to her. May she be the most dapper dog to have ever doodled on anyone's lawn.

Lastly, in place of my body, bury my collection of antique gold and silver coins at the spot I told you about that one time, so that future generations may carry on the adventurous-spirited tradition of exploration and discovery, also known as graverobbing.

Related Rants

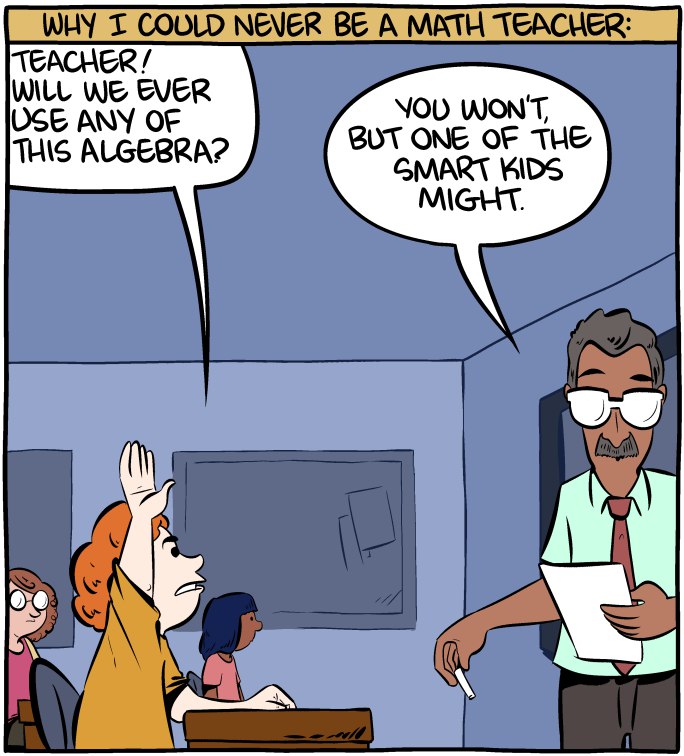

Math is hard.

Math is hard. When you wanted to know deep learning immediately

When you wanted to know deep learning immediately :)) finally a good answer

:)) finally a good answer

Let's say I take a matrix of high entropy random numbers (call it matrix J), and encode a problem into those numbers (represented as some integers which in turn represent some operations and data).

And then I generate *another* high entropy random number matrix (call it matrix K). As I do this, I measure the Pearson correlation coefficient between K and J before the problem is encoded into it (call this Jb), and between K and J after encoding (call this Ja).
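The rant doesn't say how a correlation between two matrices is computed. A common choice, assumed here, is to flatten both matrices into N-element vectors j and k and take the ordinary Pearson coefficient:

$$
r_{JK} = \frac{\sum_{i=1}^{N} (j_i - \bar{j})(k_i - \bar{k})}{\sqrt{\sum_{i=1}^{N} (j_i - \bar{j})^2}\,\sqrt{\sum_{i=1}^{N} (k_i - \bar{k})^2}}
$$

where $\bar{j}$ and $\bar{k}$ are the means of the flattened entries. Since $r_{JK} \in [-1, 1]$, a threshold of "> 0.5 or < -0.5" is the same as $|r_{JK}| > 0.5$.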

I stop at some predetermined satisfactory correlation level, let's say > 0.5 or < -0.5.

I do this till Ja is highly correlated with some sample of K, and Jb's correlation with K is close to 0.

Would the random numbers in K then represent, in some way, the data/problem encoded in Ja? Or is it merely a correlation?

Keep in mind K has no direct connection to J, Ja, or Jb; we're only looking for a matrix of high entropy random numbers that shows a correlation to J and its data.

I say "high entropy" because it would be trivial to generate random numbers with a PRNG that are highly correlated simply by virtue of the algorithm that generated them.
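The most trivial version of that PRNG problem is reusing a seed: the same deterministic algorithm started from the same state emits the same numbers, so J and K are perfectly correlated. This sketch uses Python's `random.Random` (Mersenne Twister in CPython); the `stream` helper and the seeds are illustrative.

```python
import random


def pearson(xs, ys):
    """Pearson correlation coefficient of two equal-length sequences."""
    n = len(xs)
    mx = sum(xs) / n
    my = sum(ys) / n
    cov = sum((x - mx) * (y - my) for x, y in zip(xs, ys))
    vx = sum((x - mx) ** 2 for x in xs)
    vy = sum((y - my) ** 2 for y in ys)
    return cov / (vx * vy) ** 0.5


def stream(seed, n=1000):
    """n floats in [0, 1) from a freshly seeded Mersenne Twister."""
    rng = random.Random(seed)
    return [rng.random() for _ in range(n)]


j = stream(seed=7)
k_same = stream(seed=7)    # same algorithm, same seed: identical numbers
k_other = stream(seed=8)   # same algorithm, different seed
print(pearson(j, k_same))  # 1.0 up to floating-point rounding
print(pearson(j, k_other)) # typically small, on the order of 1/sqrt(n)
```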

question

random numbers

statistics

math