
Comments

-

@Fast-Nop But HTTPS is more secure than HTTP and JSON has an S but XML doesn't? This doesn't make any sense!

-

@ScriptCoded Well, if you run out of X because AMD has gobbled up all of them in their product names, an S is the next best thing.

-

@ScriptCoded it's so confusing!

XML also has one letter fewer than JSON, which automatically makes it more efficient. -

JSON has no validation per se.

You can tell a serializer that "this" (an input JSON stream) should be converted to class X.

XML has schema validation.

Plus, in XML you can define your own types / properties.

That's why JSON isn't equal to XML.

Though I find the wording of the site very clickbaity -


hitko: @IntrusionCM XML has about as much validation as JSON - unless you specify a specific schema and actually run schema validation, both will just consume whatever data you throw in. -

This list is garbage.

Second point: Who the fuck needs namespaces in a data exchange format?

Third point: display data. What?

Last point: Is that supposed to be a benefit? Fuck everything that’s not UTF-8! -

@Lensflare maybe he means something like the XML in Android Studio for viewing your layouts

-


Grumm: @hitko This was the point of that list, I guess.

Sure, you can validate XML with an XSD.

But any dev could also write a JSON validator to check the data.

If you use a schema with wildcards all over the place, it isn't more secure, I would think. -

@joewilliams007 maybe. But that’s a viewer for xml then. Json has viewers, too. It’s not part of the language/format.

-

Or let me put it that way:

Json rarely needs a viewer because the pure text form (when indented correctly) is already perfectly readable.

Unlike xml where you apparently need a viewer to view your data. Because let’s face it: Xml is unreadable regardless of how you indent it.

Again, a plus for json. -

@hitko you will need a schema for any custom object you specify.

That's the difference most people ignore in the JSON vs XML debate:

JSON - as the name says - is a generic object notation.

XML is a markup language to define objects / types etc. (too lazy to properly write it down).

Which is the reason one can't just convert between JSON and XML.

They serve entirely different purposes. -

@IntrusionCM they are *meant* to serve different purposes. But in practice, nobody seems to care.

-


hitko: @IntrusionCM The only way to define types in XML is using DTD, and those aren't even proper types since they only support regular grammar and the most basic entity - child - attribute relationships. There's not even a way to tell whether a value is a number or a boolean rather than a string if you're only using DTD. And nobody uses DTD anymore; it doesn't even seem like you knew about it before I mentioned it.

For everything else you need to specify a schema in XSD format. Again, that's not a part of XML (you can try searching for "schema" in https://www.w3.org/TR/xml/), just like JSON schema is not a part of JSON.

Any XML document can be directly converted to JSON. Some JSON data cannot be directly converted to XML without losing information because XML doesn't support boolean, numeric, or nullable values (which have to be encoded as strings and then interpreted along a known schema), and because it restricts what element and attribute names are available. -
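To make the type-loss point concrete, here is a minimal Python sketch. It assumes the most naive mapping (one child element per JSON key, value stored as element text); `dict_to_xml` and `xml_to_dict` are hypothetical helpers, not a standard converter.

```python
import json
import xml.etree.ElementTree as ET


def dict_to_xml(tag: str, data: dict) -> ET.Element:
    """Hypothetical naive mapping: one child element per key, value as text."""
    root = ET.Element(tag)
    for key, value in data.items():
        child = ET.SubElement(root, key)
        if value is None:
            child.text = None  # null can only become an empty element
        elif isinstance(value, str):
            child.text = value
        else:
            child.text = json.dumps(value)  # 42 -> "42", True -> "true"
    return root


def xml_to_dict(root: ET.Element) -> dict:
    """Read it back without a schema: all we get is text (or None)."""
    return {child.tag: child.text for child in root}


original = json.loads('{"id": "42", "count": 42, "active": true, "note": null, "empty": ""}')
xml_text = ET.tostring(dict_to_xml("record", original), encoding="unicode")
roundtrip = xml_to_dict(ET.fromstring(xml_text))
# roundtrip == {'id': '42', 'count': '42', 'active': 'true', 'note': None, 'empty': None}
```

The string "42" and the number 42 come back identical, `true` becomes the string "true", and `null` and `""` collapse into the same empty element. Getting the original back needs out-of-band knowledge such as a schema, or a richer encoding (type attributes etc.) that both sides agree on.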

@hitko I said schema...

Not DTD.

And the Wikipedia page you mention explains everything I said very clearly.

https://en.m.wikipedia.org/wiki/...

As a further reference. And there are - as stated on Wikipedia - validating vs. non-validating parsers, so my point stands. -

The point that XSD isn't XML...

Well.

Yeah. Completely valid.

Except that no one in their right mind would use XML that way.

There's a reason XML was - and, depending on what one does, still is - very popular. The ecosystem around it is still active, and many attempts to port its functionality to other languages like JSON failed.

JSON Schema, JSONPath etc. are still unofficial standards / in draft phase, last I checked. -

@IntrusionCM can you tell me some practical use cases for those features that xml has but others don’t?

(Honest question.)

Those features feel like solutions for problems that don’t exist.

I’m aware that I might not see the benefits because I simply don’t know about them, but I’d be glad to be enlightened. -


hitko: @IntrusionCM Maybe the reason why nobody uses XML without a schema is because you need a schema just so you know whether to interpret some string as text, a number, or a boolean? Maybe it's because you need a schema to know whether <list /> is an empty array or an element with no content? Maybe it's because you need a schema to tell you that <list><item /></list> is in fact an array with one item element and not just a child element? You know, like all the things JSON does by default? -
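The `<list />` / `<list><item /></list>` problem can be sketched with a hypothetical schema-less converter, `naive_to_json`, that has to guess structure from what it happens to see. This is an illustration of the guessing problem, not the behaviour of any particular library.

```python
import xml.etree.ElementTree as ET


def naive_to_json(elem: ET.Element):
    """Hypothetical schema-less XML -> JSON-like conversion.

    Repeated child tags become a list, a single child becomes a nested
    object, and an element without children becomes its text (None if empty).
    """
    children = list(elem)
    if not children:
        return elem.text
    result = {}
    for child in children:
        value = naive_to_json(child)
        if child.tag in result:
            # Only on the second occurrence do we "discover" that it's a list.
            if not isinstance(result[child.tag], list):
                result[child.tag] = [result[child.tag]]
            result[child.tag].append(value)
        else:
            result[child.tag] = value
    return result


two = naive_to_json(ET.fromstring("<list><item>a</item><item>b</item></list>"))
one = naive_to_json(ET.fromstring("<list><item>a</item></list>"))
empty = naive_to_json(ET.fromstring("<list />"))
```

With two items you get `{'item': ['a', 'b']}`, with one item `{'item': 'a'}` (no list at all), and `<list />` is indistinguishable from an element with no content (`None`). A consumer can only resolve this if it already knows the intended shape.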

The easiest examples are formats like https://en.m.wikipedia.org/wiki/...

(Or Microsoft's XML formats)

XML (with schemas) allows you to define your own types, objects and validation.

That allows you to create a verifiable specification.

Which is why - despite being hated for reasons I can understand - SOAP, for example, was liked. You don't transmit random data. You transmit specialized entities, which should be validated. If necessary, you add your own types, e.g. to ensure that a value stays within a certain range (enumerations, specific ranges of integers etc.).

You can do that in JSON - but a standard like JSON Schema is missing.

So what mostly happens is that you leave it up to a serializer / deserializer to try and encode it the way "you want"… but all the serializer / deserializer does is try to make it happen; it has no clue whether it did it right or not.

XSD / JSON schema would fill that role.

Serialize / deserialize based on that schema and validate against it.

In a certain way, gRPC carried that thought further, which in my opinion is a reason why it became so successful.

It's not just another way to transmit data - it transmits specific data structures...

Small difference, but a huge one.

gRPC - with protocol buffers and data structures - eliminates, like XML with XSD, a whole nasty enchilada of possible type problems, subtle bugs, possible misunderstandings no one noticed etc. -
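The "add your own types to restrict a value to an enumeration or an integer range" idea can be sketched with a schema kept as data plus one generic validator. This is a hypothetical mini-format in Python, not XSD or JSON Schema syntax, and the `ORDER_SCHEMA` fields are invented for illustration. In XSD the same constraints would be `xs:enumeration` or `xs:minInclusive`/`xs:maxInclusive` facets; in JSON Schema, `enum` or `minimum`/`maximum`.

```python
from typing import Any

# Hypothetical schema format: each field names a base type plus optional constraints.
ORDER_SCHEMA = {
    "quantity": {"type": int, "min": 1, "max": 100},
    "status": {"type": str, "enum": {"open", "shipped", "cancelled"}},
}


def validate(data: dict[str, Any], schema: dict[str, dict]) -> list[str]:
    """Return a list of error messages; an empty list means the data is valid."""
    errors = []
    for field, rules in schema.items():
        if field not in data:
            errors.append(f"{field}: missing")
            continue
        value = data[field]
        # bool is a subclass of int in Python, so reject it explicitly for int fields.
        if not isinstance(value, rules["type"]) or (
            rules["type"] is int and isinstance(value, bool)
        ):
            errors.append(f"{field}: expected {rules['type'].__name__}")
            continue
        if "min" in rules and value < rules["min"]:
            errors.append(f"{field}: below {rules['min']}")
        if "max" in rules and value > rules["max"]:
            errors.append(f"{field}: above {rules['max']}")
        if "enum" in rules and value not in rules["enum"]:
            errors.append(f"{field}: not one of {sorted(rules['enum'])}")
    return errors
```

Because the schema is plain data, the same definition could in principle be shared between producer and consumer, which is the property the comment attributes to XSD and protocol buffers.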

They have symmetric tooling, and any differences would be patched if anyone needed it. What's irreconcilably different is the shape of data. In JSON the nodes are either dictionaries, arrays, or one of a couple of primitives. In XML every node is, in principle, both an array and a dictionary of primitives, but it also has an associated label that the data format can use as either a key or a type. To replicate type names and therefore type variance, JSON needs a tuple of name and data, which looks like dogshit and introduces the exact same ambiguity XML has.

I can deal with JSON, I can see why it's a better choice in a dynamically typed world, I just really hate that world. -
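A small Python sketch of that shape difference: in XML the element name can act as a type label, while JSON has to encode it as a `[name, data]` pair. The documents and function names are hypothetical.

```python
import json
import xml.etree.ElementTree as ET

# The same two shapes, where each item's *type* matters to the reader.
XML_DOC = "<shapes><circle r='1'/><square side='2'/></shapes>"
# JSON has no label slot, so each item becomes a [name, data] pair.
JSON_DOC = '[["circle", {"r": 1}], ["square", {"side": 2}]]'


def shapes_from_xml(text: str) -> list[tuple[str, dict]]:
    # The element name doubles as the type label (attribute values are strings).
    return [(el.tag, dict(el.attrib)) for el in ET.fromstring(text)]


def shapes_from_json(text: str) -> list[tuple[str, dict]]:
    # Nothing in the format marks these two-element arrays as (label, data)
    # pairs; the reader just has to know, which is the ambiguity described.
    return [(name, data) for name, data in json.loads(text)]
```

Both readers recover the labels `circle` and `square`, but the XML side gets `{'r': '1'}` (text) and the JSON side `{'r': 1}` (a number), so each format gives up something the other has.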

What pisses me off is that JSON could have had this. The JSON standard could have included support for syntax extensions because the start of a value is unambiguous. They would be identified by various value starting strings alongside the existing ", {, [, true, false, null, -, 0-9. A syntax extension would receive control after its starting string has been consumed, and it would be responsible for unambiguously detecting its own end and returning control to the JSON parser before the next (JSON) character is read. It could even be provided with a "subvalue" callback to invoke the same extension matching mechanism again.
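The proposed mechanism is concrete enough to sketch. Below is a hypothetical Python recursive-descent parser for a JSON subset (no string escapes, integers only) with extensions registered by start string; `ExtParser`, `parse`, and the `d"..."` date and `#[...]` set extensions are invented for illustration. Each extension gets control right after its start string and can call `value()` as the "subvalue" callback.

```python
from __future__ import annotations

from datetime import date
from typing import Any, Callable


class ExtParser:
    """Hypothetical sketch: JSON subset (objects, arrays, unescaped strings,
    integers, true/false/null) plus extensions keyed by a start string that
    must not overlap the built-in starts (", {, [, t, f, n, -, digits)."""

    def __init__(self, text: str, extensions: dict[str, Callable[[ExtParser], Any]]):
        self.text, self.pos = text, 0
        # Longest start string first, so "##" would win over "#".
        self.extensions = sorted(extensions.items(), key=lambda kv: -len(kv[0]))

    def skip_ws(self) -> None:
        while self.pos < len(self.text) and self.text[self.pos] in " \t\r\n":
            self.pos += 1

    def peek(self, s: str) -> bool:
        return self.text.startswith(s, self.pos)

    def expect(self, s: str) -> None:
        if not self.peek(s):
            raise ValueError(f"expected {s!r} at offset {self.pos}")
        self.pos += len(s)

    def value(self) -> Any:
        """Parse one value. Extensions call this as their "subvalue" callback."""
        self.skip_ws()
        for start, handler in self.extensions:
            if self.peek(start):
                self.pos += len(start)  # extension gets control after its start string
                return handler(self)
        for literal, result in (("true", True), ("false", False), ("null", None)):
            if self.peek(literal):
                self.pos += len(literal)
                return result
        if self.pos >= len(self.text):
            raise ValueError("unexpected end of input")
        ch = self.text[self.pos]
        if ch == '"':
            end = self.text.index('"', self.pos + 1)  # no escape handling
            s, self.pos = self.text[self.pos + 1:end], end + 1
            return s
        if ch == "-" or ch in "0123456789":
            start = self.pos
            self.pos += 1
            while self.pos < len(self.text) and self.text[self.pos] in "0123456789":
                self.pos += 1
            return int(self.text[start:self.pos])
        if ch in "[{":
            return self.container(ch)
        raise ValueError(f"unexpected {ch!r} at offset {self.pos}")

    def container(self, open_ch: str) -> Any:
        close = "]" if open_ch == "[" else "}"
        items: list[Any] = []
        self.pos += 1
        self.skip_ws()
        while not self.peek(close):
            if items:
                self.expect(",")
            item = self.value()
            if open_ch == "{":
                self.skip_ws()
                self.expect(":")
                item = (item, self.value())  # (key, value) pair
            items.append(item)
            self.skip_ws()
        self.pos += 1
        return items if open_ch == "[" else dict(items)


def parse(text: str, extensions: dict[str, Callable[[ExtParser], Any]]) -> Any:
    parser = ExtParser(text, extensions)
    result = parser.value()
    parser.skip_ws()
    if parser.pos != len(parser.text):
        raise ValueError(f"trailing data at offset {parser.pos}")
    return result


# Example extensions: both reuse value() for their inner part, so the core
# parser detects where they end.
def date_ext(p: ExtParser) -> date:
    return date.fromisoformat(p.value())


def set_ext(p: ExtParser) -> frozenset:
    return frozenset(p.value())
```

For example, `parse('{"when": d"2024-01-31", "tags": #["a", "b", "a"]}', {"d": date_ext, "#": set_ext})` yields a `date` and a `frozenset` alongside ordinary JSON values. Whitespace is skipped before dispatch, so no backtracking is needed in this sketch.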

-

@lorentz I wouldn’t say that it’s a better choice in a dynamically typed world.

Because it’s being used as an agnostic format linking all the worlds together, static and dynamic. -

I didn't think too much about whitespace, maybe one character's worth of backtracking is useful.

-

@Lensflare It's useful because it allows you to not care about types and crash in production, preferably while writing to a file, because of a typo. The ability to do this appears to be an important aspect of dynamic languages, at least based on how Python handles statically identifiable syntax errors.

-


hitko: @IntrusionCM You still haven't shown any real benefits of XML + schema vs JSON + schema, except for the part where JSON schema might be considered less favourable as it's still not completely finalised (but the changes are versioned, so that's not even a backwards-compatibility issue).

Without a schema XML has even less data structure than JSON. XSD can't be used to perform validation on unserialised data because it describes the data differently than most OOP languages handle it, while JSON schema can be.

Since you've mentioned Microsoft XML formats, they exist mainly thanks to .NET XmlSerializer, which can map classes 1:1 to XML and back; however, the resulting XML looks more like something you'd get if you converted JSON to XML, rather than something you'd write if you actually tried to represent said data in XML as best as possible. -

@Lensflare Less emotionally, in JS it's common practice to define an interface inline by instantiating it, never give it a name, and just treat it as a standard from that point onwards. JSON caters to this better than XML. Add to this the fact that XML needs a schema to be parsed into an object in any language because every child list is a dilemma between a dictionary, an array of objects and an array of objects and strings, and you can see why JSON won.

-

The only strictly security-related aspect of XML I can think of is that a fully featured XML parser needs to be able to make HTTP requests, which is a giant security risk. ImageMagick had a command injection vulnerability around this recently because they interpolated the referenced URL into a curl command instead of passing it as a single argument to libcurl.
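One common mitigation for this risk is to refuse DTDs entirely, since external entities (the mechanism that lets an XML parser fetch URLs or files) have to be declared in a DTD. Below is a minimal Python sketch using the standard library's expat bindings; `parse_without_dtd` is a hypothetical helper, and hardened libraries such as defusedxml offer more thorough protection.

```python
import xml.etree.ElementTree as ET
import xml.parsers.expat


def parse_without_dtd(data: bytes) -> ET.Element:
    """Reject any document that carries a DOCTYPE, then parse it normally."""

    def forbid_doctype(name, system_id, public_id, has_internal_subset):
        # Raising inside an expat handler aborts the parse and propagates out.
        raise ValueError(f"DTD not allowed (DOCTYPE {name})")

    checker = xml.parsers.expat.ParserCreate()
    checker.StartDoctypeDeclHandler = forbid_doctype
    checker.Parse(data, True)  # first pass: only checks for a DOCTYPE
    return ET.fromstring(data)  # second pass: build the tree
```

Parsing twice is wasteful but keeps the check independent of how `ElementTree` configures its own parser. Documents that legitimately rely on a DTD are rejected too, which is usually acceptable for data exchange.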

-

@hitko you make even less sense to me with each comment.

I mentioned Microsoft XML as in the Office XML standards... Like the OpenDocument XML standards.

JSON explicitly mandates an encoding, so a string has to be UTF-8. I talked about serialization and deserialization to specific entities... I have no idea what unserialized JSON is.

You have a separate type in JSON schema, as in a JSON document cannot embed a JSON schema. In XML, XSD is integrated.

That's the main difference.

JSON schema is still not an official standard as far as I know.

XSD / XML Schema is the W3C's recommended way to write XML.

JSON will - as it is not meant to be extensible in any way - never reach that state.

Again. XML was *MADE* as a markup *language* to describe and structure data to specific entities.

JSON - JavaScript OBJECT *notation*.

As in general object. Not specific entity.

Notation. Not language.

Two entirely different things with entirely different purposes....

I really cannot understand why it's so hard to accept that fact. -

@IntrusionCM It's hard to understand because it's just repeated applications of the genetic fallacy. Is .eslintrc.json an object? Nope, it may be represented by one in memory but it is in fact manually edited configuration or source. Is an XSD markup? Nope, it encodes structured data and not annotations to freeform text. In fact, the overwhelming majority of XML's applications have nothing to do with text or markup, because the vast majority of data is either metadata or numeric. Sure it was originally intended to be markup, but only a handful of use-cases use it like that, and those aren't the use-cases we're debating here. No one's trying to convince anyone to write web content or ebooks in JSON. The debated use-cases are specifically those which encode structured data some of which may be unformatted text, such as XSD itself. For these purposes, non-utf8 encodings and various other text-specific features actually get in the way.

-

@IntrusionCM You're talking about what JSON and XML were originally intended to be, which has very little to do with how they're used today. The vast majority of use cases don't require implicit text. Formatted text is a niche use case. The majority of use cases for both formats tend to be metadata, numeric data and unformatted text, simply because the overwhelming majority of data is one of these.

-

@lorentz

Uhm. This has gone really downhill.

I don't even know how to answer except what the frigging fuck?

We most definitely don't wanna go back to the days where everything was unstandardized...

Or what is your point, because I can't figure it out.

REST or similar APIs require *specific* data. That's what the discussion was about - with XML and a schema you can define it, with JSON you can't.

I dunno what you mean by "formatted text is niche" etc. I just cannot make sense of it at all. -

@IntrusionCM I may have misunderstood your point then, I noticed that your earlier comments include a lot of things I don't understand.

What's an entity outside XML? What's an object outside JSON? You're comparing them as if they were meaningful beyond the languages that use them as atomic concepts.

What's the difference between a language and a notation in computing?

After several rereads, these appear to be key points of your comment, but I don't understand them. -

@lorentz

I think we're going now very meta....

Like full theological meta.

Notation

The act, process, method, or an instance of representing by a system or set of marks, signs, figures, or characters.

https://en.m.wiktionary.org/wiki/...

Language

A body of words, and set of methods of combining them (called a grammar), understood by a community and used as a form of communication.

https://en.m.wiktionary.org/wiki/...

Imho we humans need words and concepts to communicate.

So the concept of an object (or entity) as a distinct piece of information is relevant. The question is how to represent it.

A notation like JSON represents it in a simple way: there is a fixed set of types to represent any data.

A language like XML goes beyond that; it allows you to use predefined types or generate your own types based on the grammar provided.

I dunno what your point is regarding outside XML / JSON.

Imho it's like asking what water is when we don't call it water.

The necessity to describe information / data will always exist; entity / object are two words that both carry a meaning: an entity is a distinct set of information, an object is an instantiation of a class or structure (which is a representation or model of an entity).

I still don't know what your point is though. -

@IntrusionCM I specifically asked in the context of CS because I don't think there is a difference between a machine-readable notation and a formal language. I asked about object and entity outside XML and JSON because you can't compare the colour of a flower to the taste of a fruit. You need a common framework to compare two things. I think the only sensible common framework to compare these two concepts is in the shape of data they can encode, which I described above, where I noted that the entity name is analogous to either the object field name or a surplus label suitable for representing a type.

-

There are some quasi-standard elements to type systems which, in the case of a data transfer format, likely exist in both the writer's and the reader's type system. Objects with named keys and values of independent types, arrays or lists, various primitives. If you know the types exactly, this usually implies a JSON representation. The XML labels carry redundant information in this case, but you can likely just specify some standard The point is, if your goal is data transfer, the questions you're dealing with don't include validating an element you don't recognize, and therefore bundled schemas are fairly pointless. Serialization formats assume that the deserializer knows what they're parsing.

-

@lorentz I think that's the part where we have a different opinion.

A really large part of REST APIs is validation and sanitation of data.

Which is why I gave the example of gRPC - the data structures necessary for proto typing come up in every migration request as a plus point. For the simple reason that you can cut out a lot of boilerplate code for validation and sanitation - after all, what you receive *must* be compliant with the data structure defined previously.

The same with XML/XSD.

I dunno how one can just put that aside as pointless - as it's one of the things that actively aid in preventing bugs and reducing the need for boilerplate code.

I'd be really interested in why you think it's pointless... After all, your scenario is that you know what to deserialize and you trust that decision in all cases.

Serialization / deserialization bugs are usually the hardest, especially because they happen at runtime - you usually can't validate them at compile time.

The amount of time needed to diagnose and track them down is usually exorbitant. -

Benefit of XML: Fits well with your Java application and you need it for SOAP anyways.

Benefit of JSON: It isn't XML. -

@IntrusionCM I think that sanitation should be a part of parsing. If you're expecting to deserialize an object that contains a set number of fields, it should check that fields by that name exist, that no unrecognized fields are present, and then pass the values to parsers of the expected types which will do the same recursively. This provides essentially the same guarantees as an XSD, just simpler, and your validation code is literally Turing complete so it's very easy to express complicated relations such as "x should be a prefix of y" or "pk should be a valid public key and payload should be a string that decodes to a valid subobject of type T signed with pk". I'm a big fan of how io-ts abstracts the concept of a serializer/deserializer because of how lightweight it is, but the concept can be replicated almost anywhere.
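io-ts itself is a TypeScript library; the following is only a rough Python sketch of the same idea (decoders as plain functions that compose), with hypothetical names `integer`, `string`, `strict_object` and `refine`. It shows the properties described: missing or unrecognized fields are rejected, each field value is handed to its own decoder recursively, and ordinary code expresses relations such as "x should be a prefix of y".

```python
from typing import Any, Callable

Decoder = Callable[[Any], Any]  # takes untrusted parsed JSON, returns a value or raises


def integer(value: Any) -> int:
    # bool is a subclass of int in Python, so exclude it explicitly.
    if not isinstance(value, int) or isinstance(value, bool):
        raise ValueError(f"expected int, got {value!r}")
    return value


def string(value: Any) -> str:
    if not isinstance(value, str):
        raise ValueError(f"expected str, got {value!r}")
    return value


def strict_object(**fields: Decoder) -> Decoder:
    """Decoder for an object with exactly these fields, each decoded recursively."""
    def decode(value: Any) -> dict:
        if not isinstance(value, dict):
            raise ValueError(f"expected object, got {value!r}")
        missing = fields.keys() - value.keys()
        unknown = value.keys() - fields.keys()
        if missing or unknown:
            raise ValueError(f"missing={sorted(missing)} unknown={sorted(unknown)}")
        return {name: decoder(value[name]) for name, decoder in fields.items()}
    return decode


def refine(decoder: Decoder, check: Callable[[Any], bool], message: str) -> Decoder:
    """Layer an arbitrary code-level constraint on top of another decoder."""
    def decode(value: Any) -> Any:
        result = decoder(value)
        if not check(result):
            raise ValueError(message)
        return result
    return decode


point = strict_object(x=integer, y=integer)
prefix_pair = refine(
    strict_object(x=string, y=string),
    lambda obj: obj["y"].startswith(obj["x"]),
    "x should be a prefix of y",
)
```

Decoders nest naturally, e.g. `strict_object(origin=point, label=string)`, so the "schema" is just the composition of functions used to parse.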

-


hitko: @IntrusionCM No, a large part of any API (especially REST APIs) is to enable components that can be managed and updated without affecting the system as a whole, even while it is running. You cannot do that if any change to the schema requires simultaneous updates of multiple API services and clients to ensure correct parsing and validation, or if you rely on external schema to perform parsing and validation. Using internal data structures to perform parsing and validation both ensures your service is getting the exact data it needs even if the data isn't aligned with the latest schema, and it reduces any impact the changes might have on other services.
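The approach described here (often called a "tolerant reader") can be sketched as a consumer that decodes only the fields it uses into its own internal type and ignores everything else. This is a hypothetical Python example; `ShippingView`, `read_shipping_view` and the payload fields are invented for illustration.

```python
import json
from dataclasses import dataclass


@dataclass(frozen=True)
class ShippingView:
    """Internal type holding only what this hypothetical service uses."""
    order_id: str
    address: str


def read_shipping_view(payload: str) -> ShippingView:
    data = json.loads(payload)
    if not isinstance(data, dict):
        raise ValueError("expected a JSON object")
    order_id, address = data.get("order_id"), data.get("address")
    # Validate only the fields we actually need; any other fields the
    # producer sends (now or in a later schema version) are ignored.
    if not isinstance(order_id, str) or not isinstance(address, str):
        raise ValueError("order_id and address must be strings")
    return ShippingView(order_id, address)
```

The trade-off versus a strict decoder that rejects unknown fields: producers can add fields without coordinating with this consumer, at the cost of silently ignoring misspelled fields the consumer doesn't read.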

Related Rants

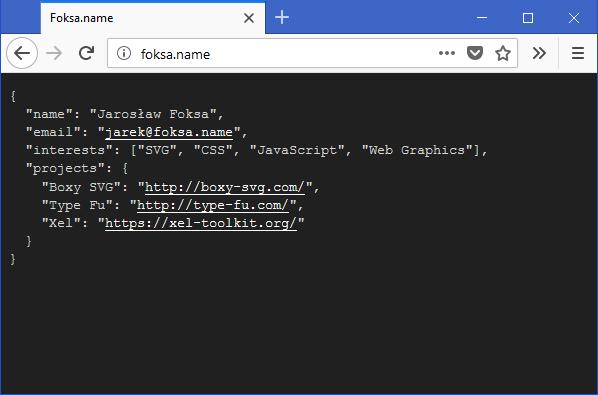

Man, this dude knows webdesign

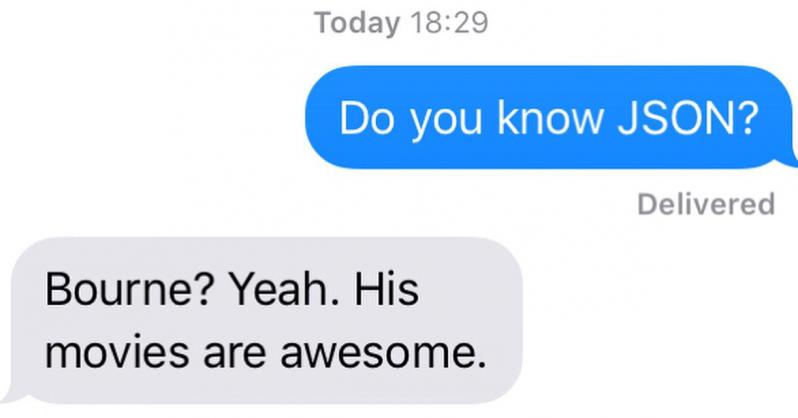

Man, this dude knows webdesign JSON Bourne

JSON Bourne

Was curious whether there are any true benefits to using XML and ended up on this page. What the actual fuck? I might be missing something here, but what's "more secure" about XML? xD

rant

json

xml