Comments

-

Worked somewhere where they were refactoring the core all the time. Also not fun. Prefer the do not touch approach above that

-

@Demolishun you have tests? We did, but we refactored so hard that even the tests needed changes. Yes, I know how wrong this sounds. The tests should have stayed exactly the same. Sigh. Traumas

-

Caused almost all the time by brain drain, lack of documentation and maintainability.

Government agencies have that down to an art form.

-

@retoor so far the only approach that's worked for us is: characterise the legacy you're in with tests, refactor just that area where it's reasonable to do so, move on and repeat.

It's been about 2 years and our coverage is now close enough to "full" for us to start making more invasive changes. There have definitely been some wins (dev experience, productivity, business trust in our team, and being less reactive and more proactive).

We have schema lock though, but we're working on minimising its effects.

These are interesting times, and we all do TDD where I work, so hopefully I'll be around for the taming of the decade-old legacy
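For anyone who hasn't done the "characterise with tests, then refactor" loop, here is a rough sketch of the idea. Everything in it is made up for illustration: `legacy_shipping_cost` stands in for whatever scary legacy function you have, and `refactored_shipping_cost` for your cleaned-up version. The point is only that you record what the code does today, right or wrong, and then hold the refactor to that.

```python
def legacy_shipping_cost(weight_kg, express):
    # Hypothetical stand-in for legacy code whose rules nobody remembers.
    cost = 5 + weight_kg * 2
    if express:
        cost = cost * 1.5
    if weight_kg > 20:
        cost = cost + 10
    return cost


def refactored_shipping_cost(weight_kg, express):
    # Hypothetical refactor of the same area; it must reproduce legacy behaviour.
    base = 5 + weight_kg * 2
    multiplier = 1.5 if express else 1.0
    surcharge = 10 if weight_kg > 20 else 0
    return base * multiplier + surcharge


def characterise(func, cases):
    # Record what the code does today and treat that as the expected behaviour.
    return {case: func(*case) for case in cases}


# Inputs chosen around the branches we can see in the legacy code.
CASES = [(0, False), (1, True), (20, False), (20, True), (21, False), (21, True)]
GOLDEN = characterise(legacy_shipping_cost, CASES)


def deviations_from_golden(func):
    # Return every case where func disagrees with the recorded legacy output.
    return [case for case, expected in GOLDEN.items() if func(*case) != expected]


if __name__ == "__main__":
    print(deviations_from_golden(refactored_shipping_cost))
```

In a real codebase the golden values would usually be captured once and committed, not recomputed from the legacy function on every run, so the tests keep working after the old code is deleted.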

hjk10156112y @retoor it really depends on the level of the tests. For example, I recently changed a data model to support versioning. This reverberates through a lot of the codebase, as some things need to be split up to benefit from the change. The current top-level APIs and integration tests, however, remain the same for now. They just always use the latest version. The next step is either to change those once the core is stable or to just slap on some extra API for the new use cases.
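A minimal sketch of what "top-level API stays the same, it just always uses the latest version" could look like. This is not the actual code from that project; `VersionedRecord` and `get_record` are hypothetical names, and the storage is just an in-memory list.

```python
from dataclasses import dataclass, field


@dataclass
class VersionedRecord:
    # Hypothetical model: every save appends a new version instead of overwriting.
    versions: list = field(default_factory=list)

    def save(self, data):
        # Store a copy so later mutation by the caller cannot alter history.
        self.versions.append(dict(data))
        return len(self.versions)  # 1-based version number

    def get(self, version=None):
        if not self.versions:
            raise LookupError("no versions saved yet")
        if version is None:
            return self.versions[-1]  # default: latest version
        if not 1 <= version <= len(self.versions):
            raise LookupError(f"unknown version {version}")
        return self.versions[version - 1]


def get_record(record):
    # Unchanged top-level API: callers never pass a version and get the latest.
    return record.get()


if __name__ == "__main__":
    record = VersionedRecord()
    record.save({"name": "draft"})
    record.save({"name": "final"})
    print(get_record(record))
    print(record.get(1))
```

Existing integration tests that only call `get_record` keep passing untouched, while new use cases can ask for a specific version through a separate call.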



it's absolutely insane to me how many orgs out there have an "oh we don't know what that does, but don't touch it" piece of software

rant

clownworld

circusworld

🤡

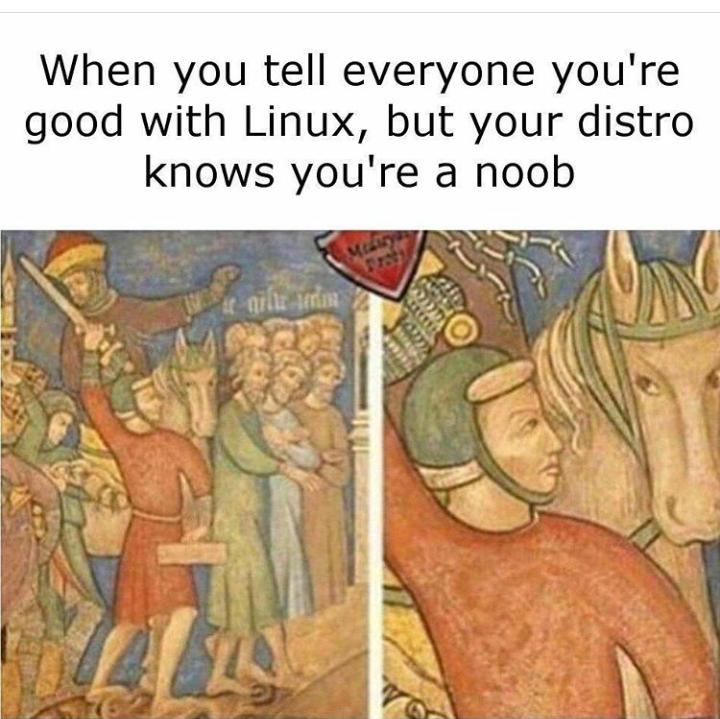

noobs

clownorg

🎪