Comments

-

exerceo (2y):

> "In 2017, we rolled out a security update to the system that generates new YouTube Unlisted links"

This wording suggests they use a different algorithm for private, unlisted, and public videos. But the URL is generated immediately at the start of the upload, before the user has even set the visibility.

-

exerceo (2y): Addition:

> "The purpose of FTP is not privacy to begin with but simplicity and compatibility, given that it is widely established."

So widely established that in fact many file explorers (Windows Explorer, Nemo for Linux) have built-in FTP browsers, and even Notepad++ has an FTP extension so it can directly save files to FTP servers!

Also, many Android file managers released in the last decade and a half, including the pre-adware ES File Explorer and RhythmSoft FileManager HD, come with built-in FTP access and FTP server hosting!

The built-in FTP features are less sophisticated than dedicated FTP clients like FileZilla, but they can still get the job done.

-

The FTP feature in browsers was very limited; it didn't support uploads. So meh, I don't miss it much.

-


Web browsers are not file browsers, not 3D renderers, not fucking text editors. Stop moaning about removed browser features. The two plausible use cases of a web browser are:

1. A document viewer for the publishing format known as the World Wide Web

OR

2. A trusted, secure sandbox for running untrusted applications written in JavaScript and WASM.

It's bad enough that these two wildly different use cases are tightly coupled, and that's the main reason why there are only 3 good browsers left. Stop moaning when they cut underutilized features that are a maintenance burden. They desperately need to.

-

Also, FTP isn't usable "for non-private data". It's completely unencrypted and unsigned. Each device along the route kindly asks the next to please forward the file. What you receive from an FTP server is effectively decided by every network operator along the path, each relaying what the next one tells it. Every single one of them can choose to lie, based on your request and the true data, and neither you nor the others would be any the wiser. This is a totally unnecessary and unjustifiable risk, because in practice you always either know the server (direct key exchange, SFTP) or trust a common authority (certificate, FTPS); otherwise you wouldn't be talking to it.

-

exerceo (2y): @tosensei "first of all, browsers were limited to downloading". I remember that text and HTML files could be opened directly, but no pictures or other external elements could be loaded.

> "FTP is dead. let it rest in peace."

Do you also believe getting rid of FAT32 support on operating systems is a good idea?

-

@exerceo If browser developers feel that it is underutilized and a burden, then yes. Linux ships with an HTTP server, as does nearly every programming language. All that would change is that shells would gain support for starting an ad-hoc HTTP server before opening stuff in a browser. Stopping the server after 30 minutes of inactivity is also trivial, no matter which of the many HTTP servers already installed on your system you're using.
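As a rough illustration of the "ad-hoc server that stops after inactivity" idea, here is a minimal sketch using only Python's standard-library `http.server`. The function name `serve_until_idle`, the `on_ready` hook, the loopback-only binding, and the 30-minute default are illustrative assumptions, not something any shell actually does today.

```python
import functools
import http.server


class IdleHTTPServer(http.server.HTTPServer):
    """Single-threaded HTTP server that records when it has sat idle too long."""

    idle_expired = False

    def handle_timeout(self):
        # Called by handle_request() when no connection arrives within self.timeout.
        self.idle_expired = True


def serve_until_idle(directory, idle_seconds=30 * 60, port=0, on_ready=None):
    """Serve `directory` on 127.0.0.1 until no request arrives for `idle_seconds`.

    port=0 lets the OS pick a free port. `on_ready` is a hypothetical hook that
    receives the bound (host, port), e.g. so a shell could point a browser at it.
    """
    handler = functools.partial(
        http.server.SimpleHTTPRequestHandler, directory=directory
    )
    with IdleHTTPServer(("127.0.0.1", port), handler) as httpd:
        httpd.timeout = idle_seconds  # each handle_request() waits at most this long
        if on_ready is not None:
            on_ready(httpd.server_address)
        while not httpd.idle_expired:
            httpd.handle_request()  # serve one request, or time out and flag idle
```

For example, `serve_until_idle("/path/to/saved-site")` would serve that directory on a random local port and exit once 30 minutes pass without a new request. The server handles one request at a time, which is enough for a single local user.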

-

exerceo (2y): @lorentz It doesn't have to be a burden. The code responsible for file:/// can simply be left alone.

-

@exerceo Without file:// and ftp://, browsers support only two protocol families: virtual pages (what Firefox calls about:) and HTTP. If the only providers of an interface are one virtual provider and one shim, refactoring is probably in order.

-

FTP is totally unnecessary in browsers; good riddance. Downloads, which are all that FTP was ever used for in browsers, go over HTTP these days, which benefits directly from "https everywhere" and Let's Encrypt being free.

On top of that, FTP is quite a shitty protocol to begin with, much less robust than HTTP. That's because FTP needs actually two connections, data and control, and even then it only works reliably if the server supports passive FTP. Without that, and with CGNAT being common in the mobile world, it's screwed. HTTP doesn't have such issues.
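To make the two-connection point concrete: in passive mode (RFC 959), the client sends `PASV` on the control connection, and the server replies with the address and port for the separate data connection, encoded as six comma-separated numbers `(h1,h2,h3,h4,p1,p2)` with port = p1 × 256 + p2. A minimal parsing sketch; the function name `parse_pasv_reply` is hypothetical (Python's `ftplib` handles this internally).

```python
from __future__ import annotations

import re

# h1..h4 are the IPv4 octets; p1, p2 are the port's high and low bytes.
_PASV_NUMBERS = re.compile(r"(\d+),(\d+),(\d+),(\d+),(\d+),(\d+)")


def parse_pasv_reply(reply: str) -> tuple[str, int]:
    """Return (host, port) for the data connection from a '227' PASV reply."""
    if not reply.startswith("227"):
        raise ValueError(f"not a PASV reply: {reply!r}")
    match = _PASV_NUMBERS.search(reply)
    if match is None:
        raise ValueError(f"no address in PASV reply: {reply!r}")
    h1, h2, h3, h4, p1, p2 = (int(n) for n in match.groups())
    return f"{h1}.{h2}.{h3}.{h4}", p1 * 256 + p2
```

For example, `parse_pasv_reply("227 Entering Passive Mode (192,168,1,10,195,80)")` returns `("192.168.1.10", 50000)`. Because the server embeds its own IP address in the reply, a server behind NAT that advertises a private address like this one is unreachable from outside unless it is configured to advertise its public address. In active mode the roles flip and the server has to connect back to the client, which a client behind CGNAT cannot accept.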

file:// is different because there are use cases for it, namely when you save a website locally and want to view it. It also doesn't go over the network, so the FTP issues don't apply.

-

@Fast-Nop External content usually breaks in file:// because it's not a secure context and absolute paths break. It only really makes sense in the document-viewer role of a web browser, and even then it's kind of clunky. I think it would be better if browsers supported a "snapshot" export mode where all JS is stripped, the currently applied styles (as opposed to the downloaded stylesheets) are inlined, and image paths are translated.

-

@lorentz Saving a website also saves the resources locally and adjusts the path names. Mostly, at least.

I don't see browsers removing file://, because the first browser to do so could no longer be the default handler for local .htm(l) files.

-

@Fast-Nop I expect the road to removing file:// to start with some OS shell replacing it, as the default way browsers open local files, with an ad-hoc HTTP server, to avoid the jank of an untrusted context. Browsers will only remove it once this catches on.

-

The shell to do this could be Windows or macOS, because they have their own browsers and can easily detect when the tab is closed and cleanly shut down the server. Or it could be KDE, because it's willing to address niche power-user problems, and it already comes with MariaDB to make PIM work, so they clearly don't give a fuck about daemon bloat.

-

@lorentz But that would require installing an HTTP server just for viewing files. That doesn't sound likely, and it wouldn't perform well either. Plus, it wouldn't really solve the issues with saving a web page locally: you'd still need to save everything locally, even external stuff, or else it isn't a local copy.

-

@Fast-Nop It would remove some differences between the website and the saved copy. For example, a React page that pulls data from an API on the same domain would fail in file://. (I think; I can't test it right now, but calling fetch() on a non-CORS endpoint sounds like the archetypal trusted operation.) As a cherry on top, React blanks the page if a component crashes, so this would happen even if the page only checks whether you're logged in to draw the little icon in the header bar.

-

@Fast-Nop Many users already have several HTTP servers installed, and they're not that heavy or complicated anyway. This server wouldn't even have to support complicated things like concurrency; a decent MIME database is enough.
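The "decent MIME database" is indeed the main piece of data such a server needs. As a small sketch, Python's standard `mimetypes` module already provides one (`http.server` relies on it for the same purpose). The helper name `content_type_for` and the octet-stream fallback are illustrative assumptions.

```python
import mimetypes


def content_type_for(path: str) -> str:
    """Pick a Content-Type header value for a file served by a tiny local server."""
    mime_type, encoding = mimetypes.guess_type(path)
    if encoding is not None:
        # e.g. "backup.tar.gz": serve compressed files as opaque bytes
        # rather than claiming the type of the content inside them.
        return "application/octet-stream"
    if mime_type is None:
        return "application/octet-stream"  # generic fallback for unknown extensions
    return mime_type
```

For instance, `content_type_for("index.html")` gives `"text/html"`, which is all a browser needs to render a saved page correctly.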

-

fjmurau (2y): I'd like to genuinely thank you for this sane post, which improved my weekend tremendously. You are a reasonable person, which is rather uncommon in 2023.

-

Any non-secure protocol should be banned from web browsers. Want to use FTP? Use an FTP client.

-

fjmurau (2y): @c3r38r170 Ban HTTP? Why? Does the content of some public information page need to be encrypted?

-

@lorentz touched on it, but the problem with using it for "simple", accessible data is not that it's unauthenticated, but that it's unsigned.

If I want to use it to access a public file, I want to be damn sure that file hasn't been MITM'd or tampered with. FTP can't do that.

-

@fjmurau Everything needs to be signed, no exceptions. Encryption (TLS) comes with authenticity guarantees for free. I'm not aware of an unencrypted protocol that signs all server communication, and it seems pointless to devise a custom protocol just so your communication can be less secure than the regular cleartext protocol wrapped in TLS.

-

@lorentz If it were unencrypted and signed, then a MITM could trivially alter the signature to match tampered content, so the signing would be worthless.

-

@AlmondSauce How? Isn't the definition of a signature that you can't forge one without knowing the private key, no matter how many genuine text-signature pairs you know?

-

@AlmondSauce Request tampering is still a risk, but it's easily solved by including the hash of the request in the signed response. I guess such a protocol would have the advantage of being cache-transparent.
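The idea of binding each response to the request it answers can be sketched as follows. Python's standard library has no public-key signatures, so this sketch swaps in an HMAC (a shared-secret message authentication code) as a stand-in; a real protocol of the kind described would use an asymmetric signature such as Ed25519, so that only the server holds the signing key. The function names are hypothetical.

```python
import hashlib
import hmac


def tag_response(key: bytes, request: bytes, body: bytes) -> bytes:
    """Authenticate `body` together with a hash of the request it answers.

    HMAC-SHA256 stands in for a real public-key signature here.
    """
    request_hash = hashlib.sha256(request).digest()  # always 32 bytes, so no ambiguity
    return hmac.new(key, request_hash + body, hashlib.sha256).digest()


def verify_response(key: bytes, request: bytes, body: bytes, tag: bytes) -> bool:
    """Check that `body` is untampered and was produced for this exact request."""
    expected = tag_response(key, request, body)
    return hmac.compare_digest(expected, tag)  # constant-time comparison
```

A MITM who alters the body, or replays a valid response to a different request, cannot produce a matching tag without the key, which is why "just alter the signature" does not work. The body still travels in the clear, so this provides integrity, not confidentiality.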

-

@fjmurau Everything needs HTTPS, even static public information: https://doesmysiteneedhttps.com/

-

@tosensei Isn't FAT the best-supported FS for UEFI boot partitions? I mean, I wouldn't do anything complicated or high-demand with it, but for UEFI it makes sense to use a file system whose read-only implementation is a couple hundred lines.
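To give a feel for how small that logic is, here is a sketch of the first step a read-only FAT driver performs: reading the BIOS Parameter Block (BPB) from the boot sector and deciding between FAT12, FAT16, and FAT32. Field offsets and the cluster-count thresholds follow Microsoft's published FAT specification; the function name and error handling are illustrative, and a real driver would go on to read the FAT and directory entries.

```python
import struct


def fat_type(boot_sector: bytes) -> str:
    """Classify a FAT volume as FAT12, FAT16, or FAT32 from its boot sector."""
    if len(boot_sector) < 512 or boot_sector[510:512] != b"\x55\xaa":
        raise ValueError("not a valid boot sector")
    # BPB fields starting at offset 11, little-endian, no padding.
    (bytes_per_sector, sectors_per_cluster, reserved_sectors, num_fats,
     root_entries, total_sectors_16, _media, fat_size_16) = struct.unpack_from(
        "<HBHBHHBH", boot_sector, 11)
    # When the 16-bit fields are zero, the 32-bit versions at offsets 32 and 36 apply.
    (total_sectors_32,) = struct.unpack_from("<I", boot_sector, 32)
    (fat_size_32,) = struct.unpack_from("<I", boot_sector, 36)
    if bytes_per_sector == 0 or sectors_per_cluster == 0:
        raise ValueError("corrupt BPB")

    fat_size = fat_size_16 or fat_size_32
    total_sectors = total_sectors_16 or total_sectors_32
    root_dir_sectors = (root_entries * 32 + bytes_per_sector - 1) // bytes_per_sector
    data_sectors = total_sectors - (
        reserved_sectors + num_fats * fat_size + root_dir_sectors)
    if data_sectors <= 0:
        raise ValueError("corrupt BPB")
    clusters = data_sectors // sectors_per_cluster

    # Per the specification, the cluster count alone decides the FAT type.
    if clusters < 4085:
        return "FAT12"
    if clusters < 65525:
        return "FAT16"
    return "FAT32"
```

A standard 1.44 MB floppy (512-byte sectors, 2880 sectors, 224 root entries, two 9-sector FATs) works out to 2847 clusters and is therefore FAT12.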

-

@fjmurau That's a very niche use case. I can't think of a website that has no need for security. And if there is one... it may need it in the near future. Even a public newsletter usually has an admin panel that needs security, right?

-

exerceo (2y): @lorentz "Web browsers are not file browsers, not 3D renderers, not fucking text editors"

Yes, they are! Having everything in one place is convenient!

Sure, a web browser is not as sophisticated as a dedicated tool, but sometimes that is enough.

-

exerceo (2y): @Fast-Nop "That's because FTP needs actually two connections, data and control". And yet it is much faster and much more reliable than MTP (Media Transfer Protocol), which uses a single connection.

-

exerceo (2y): @tosensei "just like we got rid of a lot of obsolete things. like FAT16 or FAT12. or 16-bit executables. or IPX."

FAT16 and FAT12 have a near-identical design to FAT32, and the most recent operating systems still support them for a very good reason: preventing a locked-down digital dark age.
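The "near-identical design" shows up directly in code: FAT12, FAT16, and FAT32 all keep a table with one entry per cluster, where each entry names the next cluster of a file. The main difference is the entry width. Below is a sketch of reading FAT12 entries (12 bits, packed 1.5 bytes each) and FAT16 entries (16 bits) and following a FAT12 cluster chain; the function names are hypothetical, and a real driver would also handle FAT32's 28-bit entries and reserved values more thoroughly.

```python
from __future__ import annotations


def fat12_entry(fat: bytes, cluster: int) -> int:
    """Read the 12-bit FAT12 entry for `cluster` (entries are packed 1.5 bytes each)."""
    offset = cluster + cluster // 2
    if offset + 2 > len(fat):
        raise IndexError(f"cluster {cluster} is outside the table")
    value = int.from_bytes(fat[offset:offset + 2], "little")
    # Even clusters use the low 12 bits, odd clusters the high 12 bits.
    return value >> 4 if cluster & 1 else value & 0x0FFF


def fat16_entry(fat: bytes, cluster: int) -> int:
    """Read the 16-bit FAT16 entry for `cluster` (2 bytes each, little-endian)."""
    offset = 2 * cluster
    if offset + 2 > len(fat):
        raise IndexError(f"cluster {cluster} is outside the table")
    return int.from_bytes(fat[offset:offset + 2], "little")


def fat12_chain(fat: bytes, start: int) -> list[int]:
    """Follow a FAT12 cluster chain from `start` until an end-of-chain marker."""
    chain = []
    cluster = start
    while cluster < 0xFF8:  # 0xFF8-0xFFF mark the end of a chain
        if cluster < 2 or cluster == 0xFF7:  # free/reserved entry or bad cluster
            raise ValueError(f"invalid cluster in chain: {cluster:#x}")
        if len(chain) > len(fat) * 2 // 3:  # longer than the table: must be a loop
            raise ValueError("cluster chain loops")
        chain.append(cluster)
        cluster = fat12_entry(fat, cluster)
    return chain
```

With the 6-byte FAT12 table `F0 FF FF 03 F0 FF`, cluster 2 points to cluster 3, which is marked end-of-chain, so `fat12_chain(table, 2)` returns `[2, 3]`. The FAT16 version of the same table is simply `F0 FF FF FF 03 00 FF FF`.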

"16-bit executables": anything 16-bit executables could do has been taken over by 32-bit and 64-bit software, and 16-bit software can still be run in emulators on 64-bit machines. But your family photos stored on FAT16 or ISO 9660 media cannot be replaced. This is why FAT12 and FAT16 should be supported at least until 2100.

Also, the FAT file system is so lightweight that supporting it indefinitely is no burden at all, much like plain-text (TXT) files can be supported indefinitely.

-

exerceo (2y): @tosensei (part 2)

FTP used to be the simplest way to share really huge amounts of data between two computers over the internet for free. Online services have pathetic size limits: WeTransfer 2 GB, OneDrive 5 GB total, email as little as 25 MB. But FTP wouldn't mind me sending a 1 TB file over the 'net, and I don't have to pay a dime (except for the electricity bill).

No means of file transfer was more widely supported, from Android file managers to dedicated clients to web browsers. FTP does not require a custom HTML user interface the way HTTP does, hence the name "File" Transfer Protocol instead of "Hypertext" Transfer Protocol. Why create an HTTP file server with a custom hypertext-based UI when FTP lets the client control the UI?

Besides, the inventor of FTP has been in the Internet Hall of Fame since 2023.

-

exerceo (2y): @tosensei I am used to creating backups in the form of disk images because they preserve file attributes, don't touch folder timestamps, and are the fastest option. And FAT-based file systems are supported by pretty much all multimedia devices, such as car radios with a USB port or SD card slot.

People don't want to have to pay thousands to replace stuff just because a file system was deprecated. Having to use a virtual machine every time to be able to use a FAT file system would be a gross inconvenience.

I also believe that MTP support should not be dropped, because it has been established for a decade and pretty much every smartphone released in the last decade supports it, even though MTP is not good.

-

exerceo (2y): @tosensei As far as I am aware, any computer that supports 3½-inch floppy disks is capable of using 5¼-inch floppy disks. And modern computers still support 3½-inch floppy disks; otherwise, many abandonware games would have been lost.

-

@exerceo I don't think browsers even supported FTP upload, only download. So for your transfer, you had to use some other program for the upload anyway.

Big news: you can use that same program for download as well! All with completely insecure FTP, blasting your password over the internet, getting hacked as result.

-
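The "blasting your password over the internet" point is literal: FTP's control connection carries the `USER` and `PASS` commands as plain ASCII lines (RFC 959), so anyone who can see the traffic can read them. A small sketch of what an on-path observer could pull out of a captured control-channel transcript; the function name and the sample transcript are made up for illustration.

```python
from __future__ import annotations


def credentials_from_ftp_transcript(transcript: bytes) -> tuple[str | None, str | None]:
    """Extract the USER and PASS arguments from raw FTP control-channel bytes."""
    user = password = None
    for line in transcript.split(b"\r\n"):  # FTP control lines end with CRLF
        command, _, argument = line.partition(b" ")
        if command.upper() == b"USER":
            user = argument.decode("ascii", errors="replace")
        elif command.upper() == b"PASS":
            password = argument.decode("ascii", errors="replace")
    return user, password
```

With FTPS (TLS on the control connection) or SFTP, the same commands travel inside an encrypted channel, and this kind of extraction is not possible.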

@exerceo Even my desktop back in 2010 didn't have a floppy controller on the mobo. You might find a PCIe card with a controller, or a USB floppy drive, but normal computers haven't supported floppy drives in a long time.

-

exerceo (2y): @Fast-Nop Both Windows and Linux recognize my external USB floppy drive perfectly well, and so does Android via USB OTG! Android can't even recognize optical discs!

Windows also shows a floppy disk icon for it, so it can tell it apart from a USB stick. Perhaps because of the small capacity?

I totally agree that floppy disks are very obsolete. However, some people might find stuff from their grandparents in the basement and want to read it. Handwriting on paper? Easy, just use your eyes. On floppy disks? Not so much if the format is unsupported.

Anyone who needs to read floppy disks, for whatever reason, should be able to.

-

exerceo (2y): @Fast-Nop Browsers never supported FTP upload or hosting, that's right. They were just a simple FTP client for downloading data.

"All with completely insecure FTP, blasting your password over the internet, getting hacked as result". That's what the warning is for: "Data accessed through FTP is not private if unencrypted" or similar.

FTP was useful for things like sharing huge encrypted archives containing 20 GB of home videos with relatives: you send them an FTP link and say "You don't need to install anything! You can access it right away in your browser!" Simple as that. Good times. No third-party software was required. Good luck sharing that stuff through WeTransfer (2 GB limit).

-

@exerceo Being able to read is one thing. Demanding to do so with a specific program that isn't even meant for it is something else.

Browsers were never meant as FTP programs and only supported the download because people were downloading from FTP sites, which is now so obsolete that browsers have no reason to offer that anymore.

Quite the opposite: they have good reason to remove it so that people download via HTTPS. No third-party SW required, either. Give them (big brain move) an HTTPS link!

-

exerceo (2y): @Fast-Nop "Browsers were never meant as FTP programs and only supported the download because people were downloading from FTP sites". Perhaps so.

Perhaps I am just nostalgic for the times when I could browse my Android smartphone like a website. No means of file sharing was so universally supported. Android file managers supported it and could even host their own FTP servers, set up within seconds. Windows Explorer supported FTP, and web browsers did too. The same files and folders were viewable in so many different user interfaces.

Related Rants

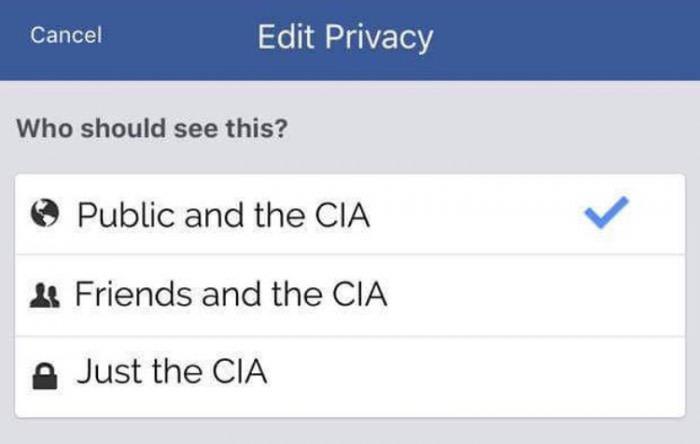

Privacy is a legend

Privacy is a legend

Web browsers removed FTP support in 2021, arguing that it is "insecure".

The purpose of FTP is not privacy to begin with but simplicity and compatibility, given that it is widely established. Any FTP user should be aware that sharing files over FTP is not private. For non-private data, that is perfectly acceptable. FTP may be used on the local network to bypass MTP (problems with MTP: https://devrant.com/rants/6198095/... ) for file transfers between a smartphone and a Windows/Linux computer.

A more reasonable approach than eliminating FTP altogether would have been to show the user a notice that data accessed through FTP is not private. FTP is not intended for private file sharing in the first place.

A comparable argument was used by YouTube in mid-2021 to memory-hole all unlisted videos of 2016 and earlier except where channel owners intervened. They implied that URLs generated before January 1st, 2017, were generated using an "unsafe" algorithm ( https://blog.youtube/news-and-event... ).

Besides the fact that, if this reason were true (hint: it almost certainly isn't), Google informed its users about a security issue four years late, unlisted videos were never intended for "protecting privacy" anyway, given that anyone with the link can access them without providing credentials. Any channel owner who does not want their videos to be seen sets them to "private" or deletes them. "Unlisted" was never intended for privacy.

> "In 2017, we rolled out a security update to the system that generates new YouTube Unlisted links"

It is unlikely that they rolled out a security update exactly on New Year's Day (2017-01-01). If it came later, some unlisted videos from early 2017 would still have the "insecure" URLs. Or, more likely than not, this story was made up to sound just plausible enough for people to believe it.

rant

security theatre

privacy