Comments

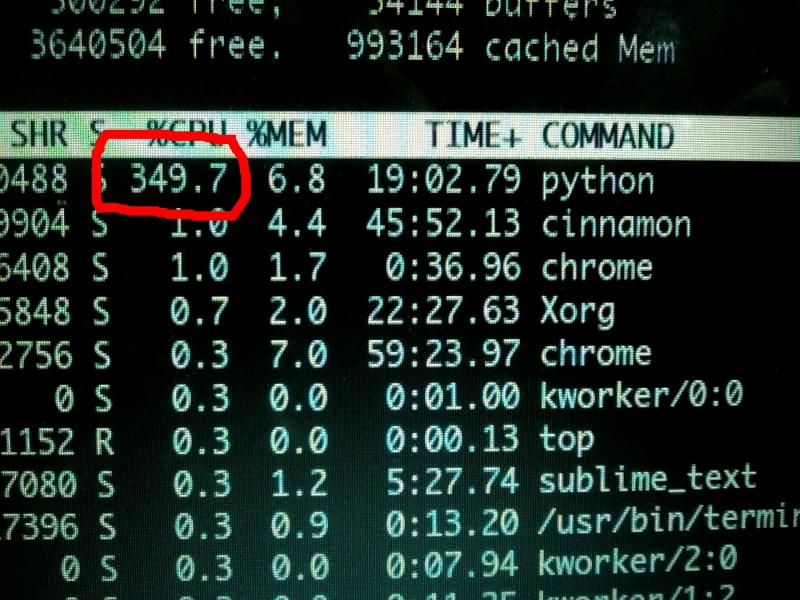

@starless The meme actually implies that the guy's laptop doesn't have a good enough CPU or GPU for training, so it has been training for days and still hasn't finished.

lucaIO: Can it really die because of that? Even if you give it a pause to recover? If so, I'm glad mine hasn't died yet.

@mohammed I mean technically you never really 'complete' training. You stop it once the improvements become negligible.
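A minimal sketch of "stop once the improvements become negligible", usually called early stopping with patience. It assumes you track a validation loss where lower is better; the names `patience` and `min_delta` and their default values are chosen for illustration, and this is plain Python rather than any framework's callback.

```python
from typing import Iterable, Optional


class EarlyStopping:
    """Signal a stop once the loss hasn't improved by more than `min_delta`
    for `patience` consecutive epochs."""

    def __init__(self, patience: int = 3, min_delta: float = 1e-3) -> None:
        self.patience = patience
        self.min_delta = min_delta
        self.best: Optional[float] = None
        self.bad_epochs = 0

    def step(self, loss: float) -> bool:
        """Record one epoch's loss; return True if training should stop."""
        if self.best is None or self.best - loss > self.min_delta:
            # A meaningful improvement: remember it and reset the counter.
            self.best = loss
            self.bad_epochs = 0
        else:
            # Improvement too small (or none): count it as a "bad" epoch.
            self.bad_epochs += 1
        return self.bad_epochs >= self.patience


def epochs_until_stop(
    losses: Iterable[float], patience: int = 3, min_delta: float = 1e-3
) -> int:
    """Return how many epochs run before early stopping triggers (or all of them)."""
    stopper = EarlyStopping(patience, min_delta)
    n = 0
    for n, loss in enumerate(losses, start=1):
        if stopper.step(loss):
            break
    return n
```

With losses `[1.0, 0.6, 0.45, 0.4499, 0.4498, 0.4497, 0.3]`, the three tiny improvements after 0.45 exhaust the patience, so training stops after epoch 6 and never sees the later 0.3. That is the trade-off: the threshold decides what counts as "negligible".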

@DuckyMcDuckFace Yes, but the CPU or GPU is so slow that it hasn't reached that threshold yet!

Rohr: @lucaIO Sure... especially laptops. They are usually not designed to run at 100% utilisation for days. I don't mean that the CPU dies from the load itself, but that at some point the heat can't be transported out of the system fast enough. So the heat can become a problem and eventually kill the hardware.

Good example:

There was a GPU model (I think it was an AMD one) whose thermal design was a bit inefficient, which caused the GPU to desolder itself from the mainboard. The funny part was that you could fix it by baking the GPU in the oven at 80°C for an hour :D

jdatap: Colleague: This has been running for 2 hours, I give up; I'm going to request a 70GB server for this.

Me: RAM doesn't help, you need a better processor or parallelism... 15 minutes later it was done.

lucaIO: @Rohr Hey, thank you for this detailed answer. So if heat is the problem, would pausing the training for half an hour every 2 hours help? I think automating this would be a cool project. SAVE THE LAPTOPS! xD
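The "pause for half an hour every 2 hours" idea could be automated roughly like this. It is a sketch under the assumption that training can be split into repeated calls of a hypothetical `train_step()` that returns `True` once training is done; the clock and sleep functions are injectable so the schedule can be tested without actually waiting.

```python
import time
from typing import Callable


def train_with_cooldowns(
    train_step: Callable[[], bool],      # hypothetical: one chunk of work, True when finished
    work_seconds: float = 2 * 60 * 60,   # run for 2 hours...
    pause_seconds: float = 30 * 60,      # ...then rest for 30 minutes
    clock: Callable[[], float] = time.monotonic,
    sleep: Callable[[float], None] = time.sleep,
) -> int:
    """Call train_step() until it reports completion, pausing on a fixed duty cycle.

    Returns the number of cooldown pauses taken.
    """
    pauses = 0
    window_start = clock()
    while not train_step():
        if clock() - window_start >= work_seconds:
            sleep(pause_seconds)  # give the laptop time to cool down
            pauses += 1
            window_start = clock()  # start a fresh work window after the pause
    return pauses
```

A fixed schedule is simple but blind: it pauses even when the machine is cool and keeps going when it is already hot. Checking actual temperatures addresses that.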

Rohr: @lucaIO Well, if you have a heat problem, that might be a way of working around it.

A better way might be to improve the cooling of the components, but I'm not sure that's possible with your laptop.

You could probably adjust the load of your calculations by checking the temperature of your components, or at least of the ones you can read...

Modern CPUs already lower their clock speed when temperatures get critical, to bring the temperature back down. But not so far that they go to sleep like you suggested :D

And at some point high temperatures hurt the performance of all components, like RAM, chipset, PSU, etc.

And... don't forget, it could also be a bug that makes your program sleep ;P
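Adjusting the load based on component temperature could look something like the sketch below. It assumes Linux, where thermal sensors are exposed under `/sys/class/thermal/thermal_zoneN/temp` in millidegrees Celsius; which zone is actually the CPU varies by machine, and the 85°C / 70°C thresholds are illustrative guesses, not hardware limits. `wait_until_cool` is a hypothetical helper you would call between training batches.

```python
import time
from pathlib import Path
from typing import Callable

# Assumption: Linux sysfs thermal zone, value in millidegrees Celsius.
# Zone 0 is not guaranteed to be the CPU on every machine.
THERMAL_ZONE = Path("/sys/class/thermal/thermal_zone0/temp")


def read_cpu_temp_c(path: Path = THERMAL_ZONE) -> float:
    """Read a sysfs thermal zone and convert millidegrees to degrees Celsius."""
    return int(path.read_text().strip()) / 1000.0


def wait_until_cool(
    read_temp: Callable[[], float],
    high_c: float = 85.0,      # illustrative: start pausing at or above this
    low_c: float = 70.0,       # illustrative: resume once at or below this
    poll_seconds: float = 30.0,
    sleep: Callable[[float], None] = time.sleep,
) -> int:
    """If the temperature is at or above high_c, block until it drops to low_c.

    Returns how many polling intervals were spent waiting.
    """
    if read_temp() < high_c:
        return 0  # cool enough, keep training
    waits = 0
    while read_temp() > low_c:
        sleep(poll_seconds)
        waits += 1
    return waits
```

Using two thresholds instead of one (hysteresis) keeps the job from flapping between running and pausing when the temperature hovers around a single cutoff. In a training loop this would be something like `wait_until_cool(read_cpu_temp_c)` before each batch.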

This meme is 100% me. No joke. I know my laptop will break, but I still use it lol

@kenogo Well, I could see that being the case when you have a small dataset. I was thinking more along the lines of training on a larger dataset for only 1-2 epochs before stopping due to negligible improvements. I don't tend to have serious problems with overfitting on such datasets, because it's much easier for the network to learn general features than to memorize 1TB+ of data points.

Related Rants

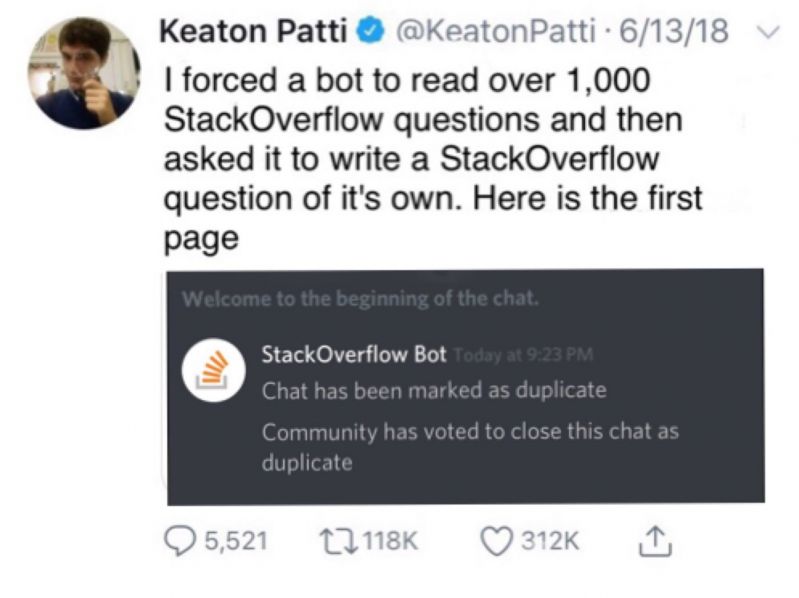

Machine Learning messed up!

Machine Learning messed up! When your CPU is motivated and gives more than his 100%

When your CPU is motivated and gives more than his 100% What is machine learning?

What is machine learning?

Training till last breath...

joke/meme

deep learning

source unknown

machine learning

training