
Comments


Apart from that, what in the unholy name of C# is that?

Looks cool and all but wha-

cho-uc: Your accuracy for the 2nd model is 84%.

If those are the real-life data you're using, then the result is pretty good in my opinion.

I don't know the nature of your data or why you chose KNN regression.

Maybe you can compare it with other models and see if you can squeeze out more accuracy.

123abc: @cho-uc thanks!

Those are real data. Yeah, I was also told that KNN regression isn't normally used with volatile data.

I'll probably do SVM regression or LDA next, idk. Do you have any model to recommend that's good with volatility?

Thanks again :)

cho-uc: @cuburt

I just try everything from polynomial regression, Lasso and Ridge to ElasticNet, and pick the best one.

If you have the time and computing power, you can even try a neural net for regression.

@NoMad is more of an expert on this.
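Below is a minimal sketch of the "try everything and pick the best one" approach. It assumes scikit-learn and a single predictor column. The data is synthetic: a noisy negative trend standing in for PctWTP vs. Slippage. The candidate list and the regularisation strengths are arbitrary starting values, not tuned ones. Models are ranked by mean 5-fold cross-validated R^2.

```python
import numpy as np
from sklearn.linear_model import ElasticNet, Lasso, LinearRegression, Ridge
from sklearn.model_selection import cross_val_score
from sklearn.neighbors import KNeighborsRegressor
from sklearn.pipeline import make_pipeline
from sklearn.preprocessing import PolynomialFeatures

# Synthetic stand-in data (hypothetical): one predictor, noisy negative trend.
rng = np.random.default_rng(0)
X = rng.uniform(0.0, 2.0, size=(200, 1))
y = -0.5 * X[:, 0] + rng.normal(0.0, 0.5, size=200)

# Candidate models; hyperparameters are illustrative, not tuned.
candidates = {
    "poly2": make_pipeline(PolynomialFeatures(degree=2), LinearRegression()),
    "lasso": Lasso(alpha=0.01),
    "ridge": Ridge(alpha=1.0),
    "elasticnet": ElasticNet(alpha=0.01, l1_ratio=0.5),
    "knn20": KNeighborsRegressor(n_neighbors=20),
}

# Mean 5-fold cross-validated R^2 for each candidate (higher is better).
scores = {
    name: cross_val_score(model, X, y, cv=5, scoring="r2").mean()
    for name, model in candidates.items()
}
best = max(scores, key=scores.get)

for name, score in sorted(scores.items(), key=lambda kv: -kv[1]):
    print(f"{name:>10}: {score:.3f}")
print("best:", best)
```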

NoMad: Disclaimer: I'm not an expert; it's hit or miss with me.

First off, why are you plotting the prediction dots (green)? What are you trying to see? The prediction line is basically what you need.

Your model is meh-accurate. I don't get why KNN, but maybe it could work. You may need more data points to get a better answer, though.

What method/function are you using to get that correlation? Correlation without a point of reference doesn't have much meaning. All in all, though, that number most likely indicates a small-to-moderate negative correlation, which your prediction line kind of supports (or so I think; correct me, anybody, if I'm wrong).
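A least-squares line gives one point of reference for a Pearson correlation. For a straight-line fit, r² is the share of the target's variance that the line explains. This assumes the -0.35 in the original post is a Pearson r. That value gives r² ≈ 0.12, so a linear fit would explain roughly 12% of the variance in slippage. The pure-Python sketch below uses a hypothetical helper name, `pearson_r`.

```python
import math


def pearson_r(xs, ys):
    """Pearson correlation coefficient of two equal-length sequences."""
    n = len(xs)
    mx, my = sum(xs) / n, sum(ys) / n
    cov = sum((x - mx) * (y - my) for x, y in zip(xs, ys))
    sx = math.sqrt(sum((x - mx) ** 2 for x in xs))
    sy = math.sqrt(sum((y - my) ** 2 for y in ys))
    return cov / (sx * sy)


# For a simple least-squares line, r**2 is the share of variance in y
# that the line explains. With the r = -0.35 reported in the post:
r = -0.35
print(f"r^2 = {r ** 2:.4f}")  # 0.1225, i.e. roughly 12% of the variance
```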

NoMad: Also, maybe play with K (currently 20 neighbors) and see if your results are any different. I'd suggest 3, 4, 5, 9 or 10 instead. I don't think a higher k will necessarily give you a better answer (your data is a tad too cluttered to show any definite pattern).
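The sketch below runs that k sweep. It assumes scikit-learn's `KNeighborsRegressor`, whose `n_neighbors` parameter is the K being discussed. The data is synthetic. Each model is fit on the training split only and scored (R^2) on the held-out test split.

```python
import numpy as np
from sklearn.model_selection import train_test_split
from sklearn.neighbors import KNeighborsRegressor

# Hypothetical noisy data standing in for (PctWTP, Slippage).
rng = np.random.default_rng(1)
X = rng.uniform(0.0, 2.0, size=(300, 1))
y = -0.5 * X[:, 0] + rng.normal(0.0, 0.5, size=300)

X_train, X_test, y_train, y_test = train_test_split(
    X, y, test_size=0.25, random_state=42
)

# Fit once per k on the training split only; score (R^2) on the test split.
results = {}
for k in (2, 3, 4, 5, 9, 10, 20, 30):
    model = KNeighborsRegressor(n_neighbors=k).fit(X_train, y_train)
    results[k] = model.score(X_test, y_test)

for k, r2 in results.items():
    print(f"k={k:>2}  test R^2={r2:.3f}")
```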

123abc: @NoMad now that I think about it, I don't really know why I plotted those green dots lol. I based the variable selection on a correlation matrix.

NoMad: @cuburt wait, why is the accuracy on the training set soooo different from the test set??? Accuracy on the training set shouldn't be computed on the whole set at once... I'm confused about the training-set accuracy here, tbh.

NoMad: Like, you train the model regardless of accuracy, and then the accuracy on the test set is your measure. While training, your model is still learning, so accuracy has no meaning for it.

NoMad: @cuburt accuracy on training has no meaning afaik ¯\_(ツ)_/¯

Unless you mini-batch the jobs; then yes, your loss/accuracy are relevant to overfitting.

My best suggestion would be to use SVM instead. Use an RBF kernel and play with its parameters until you get a proper "test" result.
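For an RBF kernel, the knobs to "play with" are mainly `C` and `gamma`, plus `epsilon` for regression. The sketch below tunes them with scikit-learn's `SVR` and `GridSearchCV` on synthetic data. The grid values are arbitrary illustrations. Features are standardised first because the RBF kernel is distance-based. The test split is scored only once, after the search.

```python
import numpy as np
from sklearn.model_selection import GridSearchCV, train_test_split
from sklearn.pipeline import make_pipeline
from sklearn.preprocessing import StandardScaler
from sklearn.svm import SVR

# Synthetic stand-in data (hypothetical).
rng = np.random.default_rng(2)
X = rng.uniform(0.0, 2.0, size=(300, 1))
y = -0.5 * X[:, 0] + rng.normal(0.0, 0.5, size=300)
X_train, X_test, y_train, y_test = train_test_split(X, y, random_state=0)

# Scale, then RBF-kernel support vector regression.
pipe = make_pipeline(StandardScaler(), SVR(kernel="rbf"))
param_grid = {
    "svr__C": [0.1, 1.0, 10.0],
    "svr__gamma": ["scale", 0.1, 1.0],
    "svr__epsilon": [0.05, 0.1, 0.5],
}

# Cross-validate on the training split only.
search = GridSearchCV(pipe, param_grid, cv=5, scoring="neg_root_mean_squared_error")
search.fit(X_train, y_train)

# score() returns negative RMSE for this scorer, so flip the sign.
holdout_rmse = -search.score(X_test, y_test)
print("best params:", search.best_params_)
print(f"test RMSE: {holdout_rmse:.3f}")
```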

NoMad: @cuburt actually, one more thing. Try k = 2 and k = 3, and compare their test results to this one. I'd be interested to know how it goes.

123abc: @NoMad so I should fit on the training set and score on the test set?

The reason I did it that way is so I can compare their accuracy; that way I'll be able to tell if it's overfitting.

NoMad: @cuburt well, are you batching the jobs? If yes, then yeah, you can compare accuracy/loss.

And yes, bingo, ding ding ding, correct, hooray! Fit on the training set and score on the test set.

It's better not to train/fit on the test set as well, or you're actually doing magic on the model (except if you're doing lifelong learning, which, tbh, I personally have trouble wrapping my head around, so I'll leave you to it).
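The sketch below shows the train/test comparison, assuming scikit-learn and synthetic data. Fit on the training split only, then score both splits. A large gap between training and test R^2 is the usual overfitting signal. With k = 1, each training point is its own nearest neighbour (assuming no duplicate PctWTP values). Training R^2 is then a perfect 1.0, however well the model generalises.

```python
import numpy as np
from sklearn.model_selection import train_test_split
from sklearn.neighbors import KNeighborsRegressor

# Synthetic stand-in data (hypothetical).
rng = np.random.default_rng(3)
X = rng.uniform(0.0, 2.0, size=(300, 1))
y = -0.5 * X[:, 0] + rng.normal(0.0, 0.5, size=300)
X_train, X_test, y_train, y_test = train_test_split(X, y, random_state=0)

gaps = {}
for k in (1, 5, 20):
    # Fit on the training split only; never on the test split.
    model = KNeighborsRegressor(n_neighbors=k).fit(X_train, y_train)
    train_r2 = model.score(X_train, y_train)
    test_r2 = model.score(X_test, y_test)
    gaps[k] = (train_r2, test_r2)
    print(f"k={k:>2}  train R^2={train_r2:.3f}  test R^2={test_r2:.3f}")
```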

NoMad: Can you get the same accuracy graph over the number of neighbors (k), on the test set again, without fitting on the test set? That may change now.

NoMad: Just to point something out: look at PctWTP around 1. The variation there is more than half the range of Slippage in the training set. Maybe this is why your model is confused; it finds neighborhoods with very high variation.
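One way to check this is to look at the neighbourhood itself. The sketch below assumes scikit-learn. `kneighbors` returns the distances and indices of the k nearest training points. With the default uniform weights, the prediction is simply the mean of those points' targets. The data and the query point 1.0 are illustrative only.

```python
import numpy as np
from sklearn.neighbors import KNeighborsRegressor

# Synthetic stand-in data (hypothetical).
rng = np.random.default_rng(4)
X = rng.uniform(0.0, 2.0, size=(300, 1))
y = -0.5 * X[:, 0] + rng.normal(0.0, 0.5, size=300)

model = KNeighborsRegressor(n_neighbors=20).fit(X, y)

# Indices of the 20 training points nearest to PctWTP = 1.0.
query = np.array([[1.0]])
_, idx = model.kneighbors(query)
neighbour_y = y[idx[0]]

# Compare the spread inside the neighbourhood to the overall target range.
spread = neighbour_y.max() - neighbour_y.min()
overall = y.max() - y.min()
print(f"prediction at 1.0: {model.predict(query)[0]:.3f}")
print(f"neighbour spread: {spread:.3f} vs overall range {overall:.3f}")
```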

NoMad: Because 0 accuracy means none of the examples were predicted right. So what does accuracy below zero mean? They were predicted right, but not? 🤔 It can't mean the reverse, unless you're not getting accuracy but the distance of something.

NoMad: Assuming you're using `sklearn.neighbors.KNeighborsRegressor`, this is the one:

https://scikit-learn.org/stable/...

It does say: "The best possible score is 1.0 and it can be negative (because the model can be arbitrarily worse). A constant model that always predicts the expected value of y, disregarding the input features, would get a R^2 score of 0.0."
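The "accuracy" returned by `score` on scikit-learn regressors is the coefficient of determination, R^2 = 1 - SS_res / SS_tot. SS_res is the sum of squared prediction errors. SS_tot is the sum of squared deviations of y from its mean. So R^2 is not a fraction of predictions that were "right". Any model whose squared error exceeds that of always predicting the mean scores below zero. The pure-Python sketch below uses a hypothetical helper name, `r2`, on three toy points.

```python
def r2(y_true, y_pred):
    """Coefficient of determination: 1 - SS_res / SS_tot."""
    mean = sum(y_true) / len(y_true)
    ss_res = sum((t - p) ** 2 for t, p in zip(y_true, y_pred))
    ss_tot = sum((t - mean) ** 2 for t in y_true)
    return 1.0 - ss_res / ss_tot


y = [1.0, 2.0, 3.0]
print(r2(y, [1.0, 2.0, 3.0]))  # 1.0   perfect predictions
print(r2(y, [2.0, 2.0, 2.0]))  # 0.0   constant model predicting the mean
print(r2(y, [3.0, 2.0, 1.0]))  # -3.0  worse than the constant model
```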

NoMad: @cuburt that looks nicer. Still, 30 neighbors is a shitton. I don't know, maybe I'm wrong; if your model responds to it, maybe that's how it should go. Can you find out how many test points it gets wrong, within a reasonable margin? That could give you a better measure of accuracy.

NoMad: Actually, another idea could be to find another feature (X in your case is only PctWTP). Maybe add another feature that could be linked to how these neighborhoods are defined, a.k.a. make the model 3D or more.

Of course, it'd take time, so it's up to you whether you want to go down that rabbit hole.
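The sketch below adds a second feature, assuming scikit-learn. The second column, `other`, is a made-up stand-in on a much larger scale than PctWTP. KNN works on distances, so both features are standardised so that neither dominates. The data is synthetic and built so that the second feature matters. The comparison therefore only illustrates the mechanics, not what real data would show.

```python
import numpy as np
from sklearn.model_selection import train_test_split
from sklearn.neighbors import KNeighborsRegressor
from sklearn.pipeline import make_pipeline
from sklearn.preprocessing import StandardScaler

rng = np.random.default_rng(5)
n = 300
pct_wtp = rng.uniform(0.0, 2.0, n)
other = rng.uniform(0.0, 100.0, n)  # hypothetical second feature, different scale
slippage = -0.5 * pct_wtp + 0.01 * other + rng.normal(0.0, 0.3, n)

X_1d = pct_wtp.reshape(-1, 1)
X_2d = np.column_stack([pct_wtp, other])


def holdout_r2(X):
    """Fit on a training split and return R^2 on the held-out split."""
    X_tr, X_te, y_tr, y_te = train_test_split(X, slippage, random_state=0)
    # Standardise so neither feature dominates the distance metric.
    model = make_pipeline(StandardScaler(), KNeighborsRegressor(n_neighbors=10))
    return model.fit(X_tr, y_tr).score(X_te, y_te)


r2_1d = holdout_r2(X_1d)
r2_2d = holdout_r2(X_2d)
print(f"PctWTP only:          {r2_1d:.3f}")
print(f"PctWTP + 2nd feature: {r2_2d:.3f}")
```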

NoMad: On second thought, ignore that accuracy measure. RMSE is your error rate in this case.
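RMSE is in the same units as Slippage, which makes it easier to read than R^2. It still needs a point of reference, though. The sketch below assumes scikit-learn and synthetic data. It compares the KNN model's test RMSE with that of `DummyRegressor(strategy="mean")`, which always predicts the training mean.

```python
import numpy as np
from sklearn.dummy import DummyRegressor
from sklearn.metrics import mean_squared_error
from sklearn.model_selection import train_test_split
from sklearn.neighbors import KNeighborsRegressor

# Synthetic stand-in data (hypothetical).
rng = np.random.default_rng(6)
X = rng.uniform(0.0, 2.0, size=(300, 1))
y = -0.5 * X[:, 0] + rng.normal(0.0, 0.5, size=300)
X_train, X_test, y_train, y_test = train_test_split(X, y, random_state=0)


def holdout_rmse(model):
    """Fit on the training split; return RMSE on the test split."""
    model.fit(X_train, y_train)
    return float(np.sqrt(mean_squared_error(y_test, model.predict(X_test))))


knn_rmse = holdout_rmse(KNeighborsRegressor(n_neighbors=20))
# Baseline: always predict the training mean (roughly what R^2 = 0 means).
base_rmse = holdout_rmse(DummyRegressor(strategy="mean"))
print(f"KNN RMSE: {knn_rmse:.3f}   mean-baseline RMSE: {base_rmse:.3f}")
```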

123abc: @NoMad is that what a multivariate model is? Because that's where I'm supposed to be heading.

NoMad: @cuburt I think so, because this shows that PctWTP is not the only factor in estimating Slippage.

NoMad: Though, iirc, MANOVA (which deals with multivariate stuff) deals more with variation. 🤔 At this point I'm even confusing myself, so let's say idk. ¯\_(ツ)_/¯

NoMad: One last request (cuz curiosity totally didn't kill the cat): can you show me how your test graph (titled "KNN regression, slippage vs PctWTP") looks using k = 25, 30, 40 or whatever you like?

123abc: @NoMad k = 30. As the previous line graph showed, accuracy also increased (although accuracy doesn't really make much sense to me now; it's not even the R squared, which I thought it was).

NoMad: @cuburt if you're actually curious, what you can do next is draw two more lines: your prediction line plus and minus the RMSE. Then count how many data points fall between these two lines. You can then say your model has a spread of ±RMSE and an error rate of (number of points outside the two lines) / (total number of samples). That could be a much better model than just the prediction line (which is practically what SVM does, much more nicely).
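The pure-Python sketch below implements that band check. The helper name `band_error_rate` is hypothetical. It computes the RMSE, then counts the share of points whose absolute error exceeds it, i.e. points outside prediction ± RMSE. The numbers are toy values, not real data.

```python
import math


def band_error_rate(y_true, y_pred):
    """Return (rmse, share of points outside prediction +/- rmse)."""
    n = len(y_true)
    rmse = math.sqrt(sum((t - p) ** 2 for t, p in zip(y_true, y_pred)) / n)
    # A point counts as an error if it lies strictly outside the band.
    outside = sum(1 for t, p in zip(y_true, y_pred) if abs(t - p) > rmse)
    return rmse, outside / n


# Toy numbers, not real data.
rmse, err = band_error_rate([1.0, 2.0, 3.0, 4.0], [1.0, 2.0, 3.0, 6.0])
print(rmse, err)  # 1.0 0.25
```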

123abc: @NoMad okay, I'll definitely keep that in mind. I'm actually planning to do SVM regression next.

Thank you so much! It makes much more sense to me now.


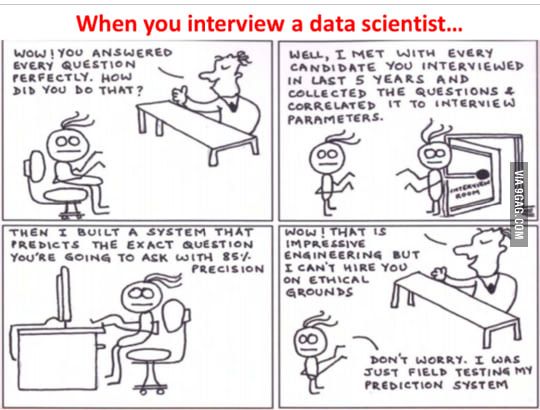


Hey guys, I'm a total noob and new to this field. This post is open to criticism and suggestions.

So I want to forecast slippage (the dependent variable) using % WT Plan (planned work, the predictor).

I get -0.35 for the correlation, and I'm using KNN regression.

How does this look to you? Insights and comments are appreciated. Thanks!

random

data science