Comments

cprn (1787, 1y): @retoor Oh, I had them set up as well… but I type faster than I notice changes on the screen.

And for everyone who *doesn't* set up different terminal themes for different environments — do it. It's too easy not to:

alias prod='tput setaf 128; ssh prod-host; tput reset'

cprn (1787, 1y): @donkulator Yeah... What about a scenario where you *want* databases to have the same name (they're on different hosts anyway).

cprn (1787, 1y): @tosensei @bazmd It doesn't apply. I was 100% certain I didn't need the test db any more. The issue is that I ran the command on a prod server by accident. And because I was patching stuff, I used a user with write access. I could, I guess, have used a user with access to things like `UPDATE` but not `TRUNCATE`, etc., but it wasn't my job to manage users and I worked with what I was given.

Yeah, there was a delayed replication set up. I just wasted time.

cprn (1787, 1y): @tosensei Oh, I fully agree I wasn't done: I still had to check a number of boxes on prod. But the initial statement was about necessity. I seriously didn't need the test db any more, and it really *was* safe to remove. The issue was rooted in removing the *wrong* db. So I guess… “check twice”, or something like that.
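
One way to make “check twice” mechanical, offered only as a sketch and not as anything that was in place during the incident: a small shell wrapper that makes you retype the machine's name before a destructive command runs. `confirm_host` is a hypothetical helper name, and using `uname -n` to identify the host is an assumption; swap in whatever identifies your environments.

```shell
# Hypothetical guard: run a command only after the operator retypes
# the name of the host the shell is actually running on.
confirm_host() {
    expected=$(uname -n)    # assumption: the node name identifies the environment
    printf 'Host is %s. About to run: %s\n' "$expected" "$*" >&2
    printf 'Retype the hostname to continue: ' >&2
    IFS= read -r typed || return 1    # EOF or read error: run nothing
    if [ "$typed" != "$expected" ]; then
        printf 'Hostname mismatch, nothing was run.\n' >&2
        return 1
    fi
    "$@"    # hostname confirmed: run the wrapped command unchanged
}
```

You'd prefix the cleanup command with `confirm_host`. Because the prompt forces you to read and type the hostname, a window switch that never registered has a chance to show up before anything is deleted.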

cprn (1787, 1y): @donkulator I don't disagree, but then there are probably a number of other variables I should've checked as well… At which point, it'd just make more sense not to meddle on production at all. But that fight I lost.

asgs (10886, 1y): How recent was your backup? Did you manage to patch the delta data?

Must have been error-prone, tedious, cumbersome, and frustrating.

Related Rants

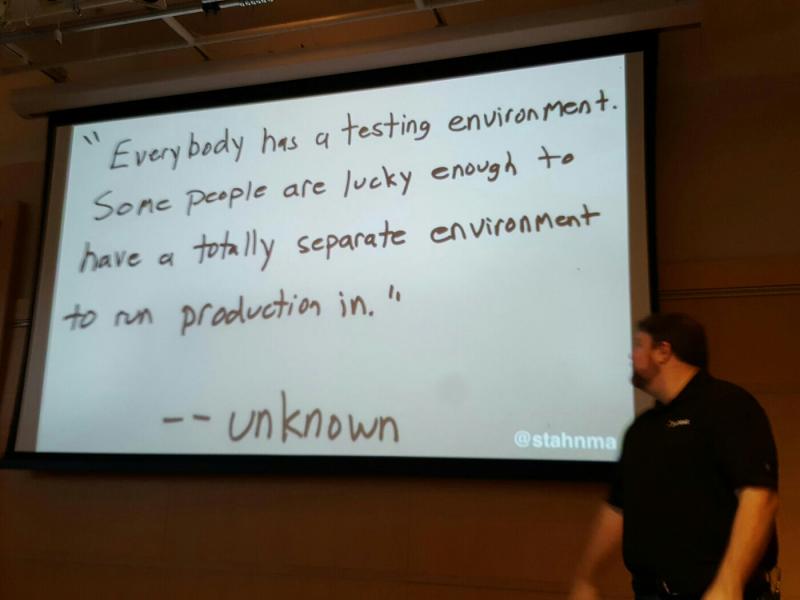

Too true, too true...

Too true, too true... And the most useless function of the year award goes to...

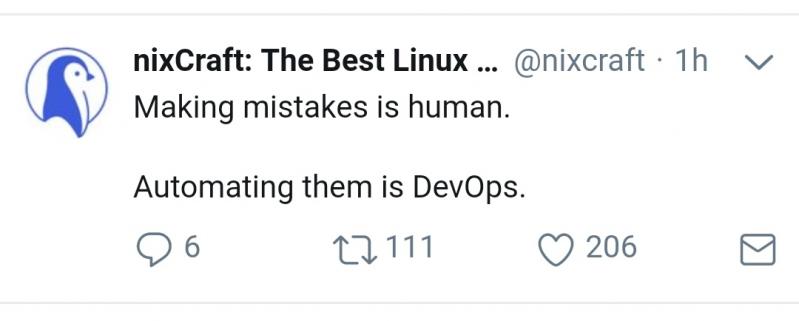

And the most useless function of the year award goes to... On my twitter feed today.

On my twitter feed today.

Dumb mistake from when I was still working:

My work laptop’s SSD went haywire, and I/O would spike every 10 minutes or so for ~50 ms. The hardware guy said he could replace the SSD right away, or I could endure it for a few weeks and get a new laptop instead. Obviously, I agreed to wait. The stutter noticeably affected screen rendering, but I didn’t notice any other issues. Little did I know that every time it happened, all input was ignored (as in: not queued). Normally it wouldn’t matter, because hitting a random ~50 ms window is hard. How-the-f×ck-ever…

A few days later — without getting into “why” — I was forced to apply a patch in production. So I opened an SSH session to prod in one terminal, spun up a dev environment in another, copied the database schema from prod to dev, and made sure to test everything. No issues, so I jumped to prod, applied the patch, restarted services, jumped back to dev, and cleaned up the now-unnecessary database. Only to discover that my “jumped back to dev” keystroke didn’t register.

Tags: rant, devops, db, manual patchwork is badwork