Search - "annoying limitation"

-

Dear Firefox Screenshot,

Please rename your "Save full page" label to "Save 5000px max".

Thank you.

-

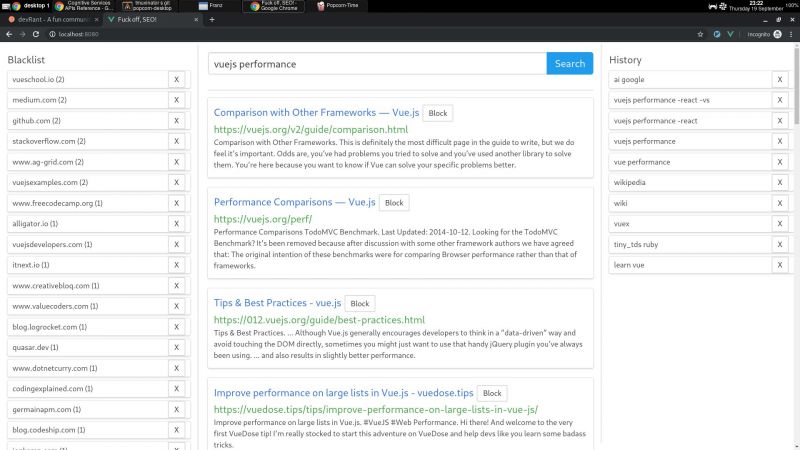

I wrote a node + vue web app that consumes the Bing API and lets you block specific hosts with a click, and I have some thoughts I need to post somewhere.

My main motivation is that the search results I've been getting from the big search engines are seriously lacking in quality. The SEO situation right now is very complex, but the bottom line is that there is a lot of white hat SEO abuse.

Commercial companies are fucking up the internet very hard. Search results have become way too profit oriented and thus no longer neutral. Personal blogs are becoming very rare. Information is losing quality and sites are losing identity. The internet is consolidating.

So, I decided to write something to help me give this situation the middle finger.

I wrote this because I consider the ability to block specific sites a basic universal right. If you were ripped off by a website or you just don't like it, then you should be able to block said site from your search results. It's not rocket science.

Google used to have this feature integrated but they removed it in 2013. They also had an extension that did this client side, but they removed it in 2018 too. We're years past the time where Google forgot their "Don't be evil" motto.

AFAIK, the only search engine on earth that lets you block sites is millionshort.com, but if you block too many sites, the performance degrades. And the company that runs it is for-profit too.

There is a third party extension that blocks sites called uBlacklist. The problem is that it only works on google. I wrote my app so as to escape google's tracking clutches, ads and their annoying products showing up in between my results.

That aside, uBlacklist does the same thing as my app, including the limitation that it isn't an actual search engine: it just filters search results after they are generated.

This is far from ideal, because filtering before the results are generated would be much preferred.
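To make the "filter after the results are generated" idea concrete, here is a minimal sketch of that kind of post-filtering. It is not the app's actual code: the result shape (`url`, `title`), the function names, and the decision to also block subdomains of a blocked host are all assumptions made for illustration.

```javascript
// Hypothetical result shape: { url: string, title: string }.

// Returns the hostname of a URL without a leading "www.",
// or null when the URL cannot be parsed.
function hostOf(url) {
  try {
    return new URL(url).hostname.replace(/^www\./, "");
  } catch {
    return null;
  }
}

// True when host equals a blocked host or is a subdomain of one.
// Unparsable URLs (host === null) are never treated as blocked.
function isBlocked(host, blockedHosts) {
  if (host === null) return false;
  for (const blocked of blockedHosts) {
    if (host === blocked || host.endsWith("." + blocked)) return true;
  }
  return false;
}

// Drops every result whose host is on the block list.
// blockedHosts is assumed to be a Set of bare hostnames.
function filterBlocked(results, blockedHosts) {
  return results.filter((r) => !isBlocked(hostOf(r.url), blockedHosts));
}
```

Because this runs on results the API has already returned, a page with many blocked hosts simply comes back shorter, which is exactly the limitation described above.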

But building a search engine, which means both indexing and ranking pages, is prohibitively expensive for a single person. Which is sad, but I can't do much about it.

I'm also thinking of implementing the ability to promote certain sites, the opposite of blocking, so these promoted sites would get more priority within the results.

I guess I would have to move the promoted sites from all the pages I fetched to the first page(s), but client side.
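One way that client-side promotion could look, as a rough sketch only: flatten the fetched pages, move results from promoted hosts to the front while keeping the original relative order, then split back into pages. The result shape (`url`), the names `promoteAcrossPages` and `hostnameOf`, and the `pageSize` parameter are hypothetical and not taken from the app.

```javascript
// Returns the hostname without a leading "www.", or "" if unparsable.
function hostnameOf(url) {
  try {
    return new URL(url).hostname.replace(/^www\./, "");
  } catch {
    return "";
  }
}

// pages: array of pages, each an array of results like { url: string }.
// promotedHosts: Set of bare hostnames to move to the front.
// pageSize: number of results per page after reordering.
function promoteAcrossPages(pages, promotedHosts, pageSize) {
  const all = pages.flat();

  // Stable partition: both groups keep the order the engine returned.
  const promoted = all.filter((r) => promotedHosts.has(hostnameOf(r.url)));
  const rest = all.filter((r) => !promotedHosts.has(hostnameOf(r.url)));
  const ordered = promoted.concat(rest);

  // Re-split into pages of pageSize results each.
  const out = [];
  for (let i = 0; i < ordered.length; i += pageSize) {
    out.push(ordered.slice(i, i + pageSize));
  }
  return out;
}
```

This only reorders what was already fetched, so a promoted site that the engine ranked beyond the fetched pages never shows up.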

But this is suboptimal compared to having actual access to the rank algorithm, where you could promote sites in a smarter way, but again, I can't build a search engine by myself.

I'm using mongo to cache the results, so with a click of a button I can retrieve the results of a previous query without hitting Bing. So far, a couple of queries don't seem to cause much of a performance or space issue.

On using Bing: Bing is basically the only reliable API option I could find that was affordable for a hobby project. Microsoft products are usually my last choice.

Bing is giving me a 7 day free trial of their search API until I register a CC. They offer a free tier, but I'm not sure if that's only for these 7 days. Otherwise, I'm gonna need to pay like $5.

Paying or not, having to use a CC to use this software I wrote sucks balls.

So far the usage of this app has resulted in me becoming more critical of sites and finding sites of better quality. I think overall it helps me to become a better programmer, all the while having better protection of my privacy.

One downside is that I'm the only one curating my lists, whereas I could benefit from the block/promote lists of other people I trust.

I will git push it somewhere at some point, but it does require some more work:

I would want to add a docker-compose script to make it easy to start, and I didn't write any tests unfortunately (I did use eslint for both apps, though).

The performance is not excellent (the app has not experienced blocks so far, but it does make the coolers spin after a bit) because the algorithms I wrote were very POC.

But it took me some time to write it, and I need to catch my breath.

There are other more open efforts that seem to be more ethical, but they are usually hard to use or just incomplete.

commoncrawl.org is a free index of the web. One problem I found is that it doesn't seem to index everything (for example, it doesn't seem to include the blog of a friend who has been writing for years and is indexed by Google).

It also requires knowing how to read WARC files, which will surely take some time investment to learn.

It also seems kinda slow to respond.

It is also generated only once a month, and I would still have little idea how a PageRank algorithm works, let alone how to code it.

-

Trying out the new version of fasm, I realize it's good, and conclude I should update my code to work with it, as there are small incompatibilities in the syntax.

So, quick flat assembler lesson: the macro system is freaking nuts, but there are limitations on the old version.

One issue, for instance, is recursive macros aren't easily possible. By "easily" I mean without resorting to black magic, of course. Utilizing the arcane power of crack, I can automatically define the same macro multiple times, up to a maximum recursion depth. But it's a flimsy patch, on top of stupid, and also has limitations. New version fixes this.

Another problem is capturing lines of code. It's not impossible, again, but a pain in the ass that requires too much drug-addled wizardry to deal with. Also fixed in new version.

Why would you want to capture lines of code? Well, because I can do this, for instance:

    macro parse line {
      match a =+ b , line \{
        add a,b;
      \}
    };

You can process lines of code like this. The above is a trivial example that makes no fucking sense, but essentially the assembler allows you to define your own syntax, and with sufficient patience, you can use this feature to develop absolutely super fucking humongous galactic unrolls, so it's a fantastic code emitter.

Anyway, the third major issue is `{}` curlies have to be escaped according to the nesting level as seen in the example; this is due to a parser limitation. [#] hashes and [`] backticks, which are used to concatenate and stringify tokens respectively, have to be escaped as well depending on the nesting level at which the token originates. This was also fixed.

There are other minor problems, but that gives you sufficient context. What happens is the new version of fasm fixes all of these problems, which were annoying me, forcing me to write much more mystical code than I'd normally agree to, and in some rare cases even limiting what I could do...

But "limiting" needs to be contextualized as well: I understand fasm macros well enough to write a virtual machine with them. Wish I was kidding. I called it the Arcane 9 Machine, A9M for short. Here, bitch was the prototype for the VM my fucking compiler uses: https://github.com/Liebranca/forge/...

So how am I """limited""", then? You wouldn't understand. As much as I hate to say it, that which should immediately be called into question, you're gonna have to trust me. There are many further extravagant affronts to humanity that I yearn to commit with absolute impunity, and I will NOT be DENIED.

Point is code can be rewritten in much simpler, shorter, cleaner form.

Logic can be much more intricate and sophisticated.

Recursion is no longer a problem.

Namespaces are now a thing.

Capturing -- and processing -- lines of code is easier than ever...

Nearly every problem I had with fasm is gone with this update: thusly, my power grows rather... exponentially.

And I SWEAR that I will NOT use it for good. I shall be the most corrupt, bloodthirsty, deranged tyrant ever known to this accursed digital landscape of broken souls and forgotten dreams.

*I* will reforge the world with black smoldering flame.

*I* will bury my enemies in ill-and-damned obsidian caskets.

And *I* will feed their armies to a gigantic, ravenous mass grave...

Yes... YES! This is the moment!

PREPARE THE RITUAL ROOM (https://youtube.com/watch/...)

Couriers! Ride towards the homeland! Bring word of our success.

And you, page, fetch me my sombersteel graver...

I shall inscribe the spell into these very walls...

in the ELEVENTH degree!

** MANIACAL EVIL LAUGHTER **

-

So, even as a long-time Apple user, overall there really wasn't too much exciting in the announcement last week.

That said, seeing the Mini get a spec bump caused the ears to prick up a bit. My 2014 is running fine, so it'd be a bit capricious to upgrade, but it does have one very annoying limitation:

It *will* *not* allow me to run 3 monitors at once.

Found this out for certain when I first got it and plugged one into each Thunderbolt port, and then another into HDMI, producing a courteous notice that I needed to remove one of them due to GPU limitations.

Anyone who knows more about hardware than me able to speculate if the new one might be able to support said extra monitor?