Search - "super smooth"

-

*sees that the high voltage generator kit got delivered today*

Cool, let's build this thing and integrate it into my old bugzapper! Mosquitos beware 😈

*starts building the kit, all is going very well*

Oh wow, isn't it Monday? But it's taking only 15 minutes of soldering and everything goes super smooth.. what divine power is giving me such good luck?

Alright, last thing, the transformer and then this circuit is done!!!

*solders in the transformer without realizing that the wires are coated, and the solder isn't protruding through*

Fuck. Time to desolder this shit and blast the wires with my lighter to flash that coating right off!

*engages solder pump and solder goes off extremely easily, because it only adhered to the pad*

*takes off transformer*

Me: "Nnngh..!!! Get off you piece of junk!!!"

Transformer: "Hmph!! I will stay in here no matter what!"

Me: "Get the fuck off already!!! 😡"

Transformer: *leads break off* "Alright, but these leads stay here!!!"

Me: "MotherFUCKER!!!"

Yep, it's Monday after all.

-

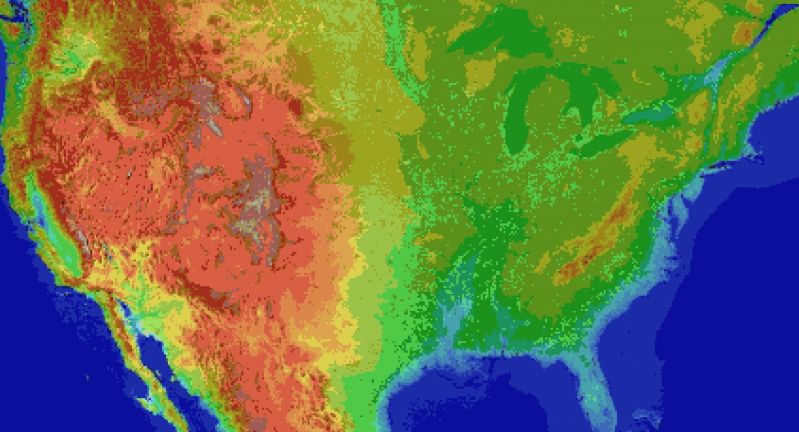

Everyone and their dog is making a game, so why can't I?

1. open world (check)

2. taking inspiration from metro and fallout (check)

3. on a map roughly the size of the u.s. (check)

So I thought what I'd do is pretend to be one of those deaf mutes. While also pretending to be a programmer. Sometimes you make believe so hard that it comes true apparently.

For the main map I thought I'd automate laying down the base map before hand tweaking it. It's been a bit of a slog. Roughly 1 pixel per square mile (okay, 1973 by 1067). The u.s. is about 3.1 million square miles, this would work out to about 2.1 million square miles instead. Eh.

Wrote the script to filter out all the ocean pixels, based on the elevation map, and output the difference. Still had to edit around the shoreline but it sped things up a lot. Just attached the elevation map, because the actual one is an ugly cluster of death magenta to represent the ocean.
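A minimal sketch of what such a filter could look like, assuming the elevation map is a grid of numbers and anything at or below a sea-level threshold counts as ocean. The names `mask_ocean`, `SEA_LEVEL`, and `OCEAN` are made up for illustration and are not from the actual script. A plain threshold like this also flags land that sits below sea level, which would be one way to end up with phantom inland 'lakes'.

```python
# Hypothetical values: the threshold and the magenta marker are assumptions.
SEA_LEVEL = 0
OCEAN = (255, 0, 255)  # magenta marker for ocean pixels


def mask_ocean(elevation, base_map, sea_level=SEA_LEVEL):
    """Return a copy of base_map with ocean pixels replaced by OCEAN.

    elevation: 2D list of numbers (one value per pixel).
    base_map:  2D list of (r, g, b) tuples with the same shape.
    """
    masked = []
    for elev_row, color_row in zip(elevation, base_map):
        masked.append([
            OCEAN if elev <= sea_level else color
            for elev, color in zip(elev_row, color_row)
        ])
    return masked


def count_ocean(masked):
    """Count how many pixels were marked as ocean."""
    return sum(color == OCEAN for row in masked for color in row)
```

The input map is left untouched, so the masked copy can be diffed against the original when hand-editing the shoreline afterwards.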

Consequence of filtering is, the shoreline is messy and not entirely representative of the u.s.

The preprocessing step also added a lot of in-land 'lakes' that don't exist in some areas, like death valley. Already expected that.

But the plus side is I now have map layers for both elevation and ecology biomes. Aligning them close enough so that the heightmap wasn't displaced, and didn't cut off the shoreline in the ecology layer (at export), was a royal pain, and was super finicky. But thankfully that's done.

Next step is to go through the ecology map, copy each key color, and write down the biome id, courtesy of the 2017 ecoregions project.

From there, I write down the primary landscape features (water, plants, trees, terrain roughness, etc), anything easy to convey.

Main thing I'm interested in is tree types, because those, as tiles, convey a lot more information about the hex terrain than anything else.

Once the biomes are marked, and the tree types are written, the next step is to assign a tile to each tree type, and each density level of mountains (flat, hills, mountains, snowcapped peaks, etc).

The reference ids, colors, and numbers on the map will simplify the process.

After that, I'll write an exporter with python, and dump to csv or another format.
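A rough sketch of how that exporter might go, assuming the ecology map is a grid of RGB key colors and the biome ids live in a lookup table. The colors and ids in `BIOME_BY_COLOR` are placeholders, not real ecoregion values, and `export_tiles` is a made-up name.

```python
import csv
import io

# Placeholder key colors and biome ids, for illustration only.
BIOME_BY_COLOR = {
    (34, 139, 34): 1,
    (210, 180, 140): 2,
}
UNKNOWN_BIOME = -1  # written for colors missing from the table


def export_tiles(ecology_map, out):
    """Write one CSV row per pixel: x, y, biome_id."""
    writer = csv.writer(out, lineterminator="\n")
    writer.writerow(["x", "y", "biome_id"])
    for y, row in enumerate(ecology_map):
        for x, color in enumerate(row):
            writer.writerow([x, y, BIOME_BY_COLOR.get(color, UNKNOWN_BIOME)])


# Tiny 2x2 example written to an in-memory buffer instead of a file.
buf = io.StringIO()
export_tiles([[(34, 139, 34), (210, 180, 140)],
              [(0, 0, 0), (34, 139, 34)]], buf)
csv_text = buf.getvalue()
```

Writing an explicit "unknown" id for unmapped colors makes stray pixels from the alignment step easy to spot in the output.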

Next steps are laying out the instances in the level editor, that'll act as the tiles in question.

There are a few naive approaches:

Spawn all the relevant instances at startup, and load the corresponding tiles.

Or setup chunks of instances, enough to cover the camera, and a buffer surrounding the camera. As the camera moves, reconfigure the instances to match the streamed in tile data.

Instances here make sense, because if there's any simulation going on (and I'd like there to be), they can detect in event code when they are in the invisible buffer around the camera but not yet visible, and be activated by the camera, or deactivate themselves after leaving the camera and buffer's area.

The alternative is to let a global controller stream the data in, as a series of tile IDs, corresponding to the various tile sprites, and code global interaction like tile picking into a single event, which seems unwieldy and not at all manageable. I can see it turning into a giant switch case already.

So instances it is.
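The camera-plus-buffer check each instance would run can be sketched roughly like this. It is engine-agnostic Python rather than real event code, and `Rect`, `instance_state`, and the three state names are assumptions for illustration.

```python
from dataclasses import dataclass


@dataclass
class Rect:
    left: int
    top: int
    width: int
    height: int

    def grown(self, margin):
        """Return a copy expanded by margin pixels on every side."""
        return Rect(self.left - margin, self.top - margin,
                    self.width + 2 * margin, self.height + 2 * margin)

    def contains(self, x, y):
        return (self.left <= x < self.left + self.width
                and self.top <= y < self.top + self.height)


def instance_state(x, y, camera, buffer_px):
    """Classify an instance at (x, y) relative to the camera.

    'visible'  : inside the camera view
    'buffered' : off screen but inside the surrounding buffer (keep simulating)
    'inactive' : outside both, safe to deactivate
    """
    if camera.contains(x, y):
        return "visible"
    if camera.grown(buffer_px).contains(x, y):
        return "buffered"
    return "inactive"
```

For example, with `camera = Rect(100, 100, 320, 240)` and a 32 pixel buffer, an instance at (90, 150) is off screen but still counts as buffered.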

Actually, if I do 16^2 pixel chunks, it only works out to 124x67 chunks in all. A few thousand, mostly inactive chunks is pretty trivial, and simplifies spawning and serializing/deserializing.
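The chunk arithmetic, as a quick sketch: with 16 pixel chunks, ceiling division on a 1973 by 1067 map comes out to 124 by 67 chunks. The helper names are made up for illustration.

```python
MAP_W, MAP_H = 1973, 1067  # map size in pixels, from the post
CHUNK = 16                 # chunk edge length in pixels


def chunks_needed(size, chunk=CHUNK):
    """Number of chunks needed to cover `size` pixels (ceiling division)."""
    return -(-size // chunk)


def chunk_of(x, y, chunk=CHUNK):
    """Chunk coordinates that contain pixel (x, y)."""
    return x // chunk, y // chunk


grid = (chunks_needed(MAP_W), chunks_needed(MAP_H))
total_chunks = grid[0] * grid[1]
```

The last column and row of chunks are only partly filled, since neither dimension is a multiple of 16.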

All of this doesn't account for

* putting lakes back in that aren't present

* lots of islands and parts of shores that would typically have bays and parts that jut out, need reworked.

* great lakes need refinement and corrections

* elevation key map too blocky. Need a higher resolution one while reducing color count

This can be solved by introducing some noise into the elevations, varying say, within one standard deviation.

* mountains will still require refinement to individual state geography. That's for later on

* shoreline is too smooth, and needs to be less straight-line and less blocky. less corners.

* rivers need added, not just large ones but smaller ones too

* available tree assets need to be matched, as best and fully as possible, to types of trees represented in biome data, so that even if I don't have an exact match, I can still place *something* that's native or looks close enough to what you would expect in a given biome.

Ponderosa pines vs white pines for example.
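For the blocky elevation item in the list above, here is one way the noise idea could be sketched. It reads "within one standard deviation" as a random offset of at most one standard deviation of the grid's own values, which is an interpretation and not something the post spells out. `jitter_elevations` is a made-up name.

```python
import random


def jitter_elevations(elevations, seed=0):
    """Add uniform noise to a 2D elevation grid.

    Each offset is drawn from [-std, +std], where std is the population
    standard deviation of the grid itself. Returns (noisy_grid, std).
    """
    flat = [v for row in elevations for v in row]
    mean = sum(flat) / len(flat)
    std = (sum((v - mean) ** 2 for v in flat) / len(flat)) ** 0.5
    rng = random.Random(seed)  # seeded so the map is reproducible
    noisy = [[v + rng.uniform(-std, std) for v in row] for row in elevations]
    return noisy, std
```

Computing the deviation over the whole map would probably be too coarse in practice; doing it per chunk or per neighborhood is the more likely choice, but that is left out to keep the sketch short.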

This also doesn't account for 1. major and minor roads, 2. artificial and natural attractions, 3. other major features people in any given state are familiar with. 4. named places, 5. infrastructure, 6. cities and buildings and towns.

Also I'm pretty sure I cut off part of florida.

Woops, sorry everglades.

Guess I'll just make it a death-zone from nuclear fallout.

Take that gators!

-

So my job at this startup is more of a general manager over all departments, checking in and making sure everything is going smoothly (basically COO). But we just did a super private beta launch today, and as our devs went to sleep, I spent most of my night bug fixing and doing some style fixes. Man, did it feel good to be back doing that (especially since most of the heavy lifting had already been done 😂).

-

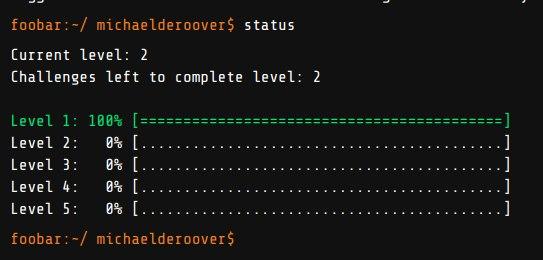

Just remembered that I still had a foobar invite link in my email inbox 😋

The challenges are odd though, first challenge was super easy (basically an idiot check), but while I was able to convert 3 cans of energy drink into a functional solution in half an hour, the verification utility is not very verbose at all. So in Python 3.7.3 in my Debian box it worked just fine, yet the testing suite in Foobar was failing the whole time. After sending an email to my friend that gave the link (several years ago now, sorry about that! 😅) asking if he knew the problem, I found out that Google is still using Python 2.7.13 for some reason. Even Debian's Python is newer, at 2.7.16. To be fair it does still default to Python 2 too. But why.. why on Earth would you use Python 2.7 in a developer oriented set of challenges from a massive company, in 2020 when Python 2 has already been dead for almost a whole year?

But hey now that it's clear that it's Python 2.7, at least the next challenges should be a bit easier. Kind of my first time developing in SnekLang regardless actually, while the language doesn't have everything I'd expect (such as integer square root, at least not in Debian or the foobar challenge's interpreter), its math expressions are a lot cleaner than bash's (either expr or bc). So far I kinda like the language. 2-headed snake though and there's so much garbage for this language online, a lot more than there is for bash. I hate that. Half the stuff flat out doesn't work because it was written by someone who requires assistance to breathe.
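On the missing integer square root: `math.isqrt` only arrived in Python 3.8, so neither 2.7 nor 3.7 has it. A small stand-in using Newton's method on integers, which should run unchanged on both, might look like this (a sketch, not what was actually submitted to the challenge):

```python
def isqrt(n):
    """Integer square root: the largest r with r * r <= n."""
    if n < 0:
        raise ValueError("isqrt() argument must be nonnegative")
    if n == 0:
        return 0
    x = n
    y = (x + 1) // 2
    # Newton iteration on integers; stops once the estimate no longer shrinks.
    while y < x:
        x = y
        y = (x + n // x) // 2
    return x
```

Sticking to `//` keeps everything in integer arithmetic, so it avoids the precision loss that `int(n ** 0.5)` runs into on very large numbers.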

Meh, here's to hoping that the next challenges will be smooth sailing :) after all most of the time spent on the first one (17.5 hours) was bottling up a solution for half an hour, tearing my hair out for a few hours on why Google's bloody verification tool wouldn't accept my functioning code (I wrote it for Python 3, assuming that that's what Google would be using), and 10 hours of sleep because no Google, I'm not scrubbing toilets for 48 hours. It's fair to warn people but no, I'm not gonna work for you as a cleaning lady! 😅

Other than the issues that the environment has, it's very fun to solve the challenges though. Fuck the theoretical questions with the whiteboard, all hiring processes should be like this!

-

So macOS Mojave is out and it's super smooth!

Finally High Sierra's bugs have been ironed out.

Also, system-wide dark mode!

-

I can't believe how powerful and FAST Next.js is. Very smooth and lightweight. Easy to work with.

Also Angular became super fast and smooth. 5 years ago in 2018 I remember working in Angular and it was not that fast. The project structure was a bit messy. But now everything has drastically improved and become simpler.

I love both now. Happy to be working in both.

-

I finally got around to setting up my own cloud with nextcloud on my own dedicated server.

Just setting up Nextcloud alone was not really the challenge ( I've set up at least 2 Nextcloud instances in the past ).

The actual challenge was to install /e/ OS on my mobile phone and get it to work with my Nextcloud instance.

It's not all performant, buttery-smooth or super-fast yet, but for a one-person / user-cloud, I think it should be just fine.

There's still room for improvement in terms of server-side performance, but it's working fine with the basics at least.

I need to figure / iron out some issues like social federation via ActivityPub not working, Nextcloud SMS not syncing up my SMS, Mail app crashing because I used a self-hosted Nextcloud instance, etc; but those are things I could work on slowly, in the course of time.

No, the server is not physically controlled by me, yet ( it's a dedicated box server though. Still, hosted and physically controlled by a provider ).

I intend on setting up another 'replica' on a RaspberryPi which I will then make primary, connecting to the internet via DynamicDNS.

I'll probably keep the server as a fallback / backup server just in case my home server loses connectivity.

Taking back control from Big Tech is something I intend on pursuing actively this year. I've had the idea in my head for too long that it has started to fester.

This is only a first step, of many, that needs to follow, in order for me to take control back from Big Tech.

Yes, there still is some room for improvement, but I think for now ‒

Mission Accomplished!🤘