Search - "#graph #algorithms"

-

Hey everyone,

We have a few pieces of news we're very excited to share with everyone today. Apologies for the long post, but there's a lot to cover!

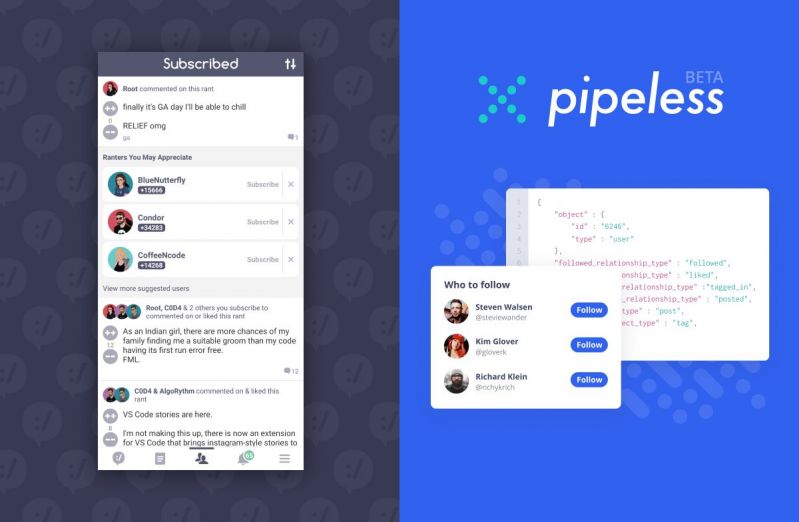

First, as some of you might have already seen, we just launched the "subscribed" tab in the devRant app on iOS and Android. This feature shows you a feed of the most recent rant posts, likes, and comments from all of the people you subscribe to. This activity feed is updated in real-time (although you have to manually refresh it right now), so you can quickly see the latest activity. Additionally, the feed also shows recommended users (based on your tastes) that you might want to subscribe to. We think both of these aspects of the feed will greatly improve the devRant content discovery experience.

This new feature leads directly into this next announcement. Tim (@trogus) and I just launched a public SaaS API service that powers the features above (and can power many more use-cases across recommendations and activity feeds, with more to come). The service is called Pipeless (https://pipeless.io) and it is currently live (beta), and we encourage everyone to check it out. All feedback is greatly appreciated. It is called Pipeless because it removes the need to create complicated pipelines to power features/algorithms, by instead utilizing the flexibility of graph databases.

Pipeless was born out of the years of experience Tim and I have had working on devRant and from the desire we've seen from the community to have more insight into our technology. One of my favorite (and earliest) devRant memories is from around when we launched, and we instantly had many questions from the community about what tech stack we were using. That interest is what encouraged us to create the "about" page in the app that gives an overview of what technologies we use for devRant.

Since launch, the biggest technology powering devRant has always been our graph database. It's been fun discussing that technology with many of you. Now, we're excited to bring this technology to everyone in the form of a very simple REST API that you can use to quickly build projects that include real-time recommendations and activity feeds. Tim and I are really looking forward to hopefully seeing members of the community make really cool and unique things with the API.

Pipeless has a free plan where you get 75,000 API calls/month and 75,000 items stored. We think this is a solid amount of calls/storage to test out and even build cool projects/features with the API. Additionally, as a thanks for continued support, for devRant++ subscribers who were subscribed before this announcement was posted, we will give some bonus calls/data storage. If you'd like that special bonus, you can just let me know in the comments (as long as your devRant email is the same as Pipeless account email) or feel free to email me (david@hexicallabs.com).

Lastly, and also related, we think Pipeless is going to help us act on one of the biggest pieces of feedback we’ve heard from the community. It is now our goal to open source the various components of devRant. Although there have been a few reasons stated in the past for why we haven’t done that, one of the biggest was always the highly proprietary and complicated nature of our backend storage systems. Pipeless now allows us to start moving data there, so that everyone has access to the same system/technology that is powering the devRant backend. The first step for this transition was building the new “subscribed” feed completely on top of Pipeless. We will be following up with more details about this open sourcing effort soon, and we’re very excited for it and we think the community will be too.

Anyway, thank you for reading this and we are really looking forward to everyone’s feedback and seeing what members of the community create with the service. If you’re looking for a very simple way to get started, we have a full sample dataset (1 click to import!) with a tutorial that Tim put together (https://docs.pipeless.io/docs/...) and a full dev portal/documentation (https://docs.pipeless.io).

Let us know if you have any questions and thanks everyone!

- David & Tim (@dfox & @trogus)

-

Okay, story time.

Back during 2016, I decided to do a little experiment to test the viability of multithreading in a JavaScript server stack, and I'm not talking about the Node.js way of queuing I/O on background threads, or about WebWorkers that box and convert your arguments to JSON and back during a simple call across two JS contexts.

I'm talking about JavaScript code running concurrently on all cores. I'm talking about replacing the god-awful single-threaded event loop of ECMAScript – the biggest bottleneck in software history – with an honest-to-god, lock-free thread-pool scheduler that executes JS code in parallel, on all cores.

I'm talking about concurrent access to shared mutable state – a big, rightfully-hated mess when done badly – in JavaScript.

This rant is about the many mistakes I made at the time, specifically the biggest (though not the first) of them: publishing some preliminary results very early on.

Every time I showed my work to a JavaScript developer, I'd get negative feedback. Like, unjustified hatred and immediate denial, or outright rejection of the entire concept. Some were even adamantly trying to discourage me from this project.

So I posted a sarcastic question to the Software Engineering Stack Exchange, which was originally worded differently to reflect my frustration, but was later edited by mods to be more serious.

You can see the responses for yourself here: https://goo.gl/poHKpK

Most of the serious answers were along the lines of "multithreading is hard". The top voted response started with this statement: "1) Multithreading is extremely hard, and unfortunately the way you've presented this idea so far implies you're severely underestimating how hard it is."

While I'll admit that my presentation was initially lacking, I later made an entire page to explain the synchronisation mechanism in place, and you can read more about it here, if you're interested:

http://nexusjs.com/architecture/

But what really shocked me was that I had never understood the mindset that all the naysayers adopted until I read that response.

Because the bottom-line of that entire response is an argument: an argument against change.

The average JavaScript developer doesn't want a multithreaded server platform for JavaScript because it means a change of the status quo.

And this is exactly why I started this project. I wanted a highly performant JavaScript platform for servers that's more suitable for real-time applications like transcoding, video streaming, and machine learning.

Nexus does not and will not hold your hand. It will not repeat Node's mistakes and give you nice ways to shoot yourself in the foot later, like `process.on('uncaughtException', ...)` for a catch-all global error handling solution.

No, an uncaught exception will be dealt with the way any self-respecting language deals with it: by not ignoring the problem and pretending it doesn't exist. If you write bad code, your program will crash, and you can't rectify a bug in your code by ignoring its presence entirely and using duct tape to scrape something together.

Back on the topic of multithreading, though. Multithreading is known to be hard, that's true. But how do you deal with a difficult problem? You simplify it and break it down, you don't just disregard it completely; because multithreading has its great advantages, too.

Like, how about we talk performance?

How about distributed algorithms that don't waste 40% of their computing power on agent communication and pointless overhead (like the serialisation/deserialisation of messages across the execution boundary for every single call)?

How about vertical scaling without forking the entire address space (and thus multiplying your application's memory consumption by the number of cores you wish to use)?

How about utilising logical CPUs to the fullest extent, and allowing them to execute JavaScript? Something that isn't even possible with the current model implemented by Node?

Some will say that the performance gains aren't worth the risk. That the possibility of race conditions and deadlocks isn't worth it.

That's the point of cooperative multithreading. It is a way to smartly work around these issues.

If you use promises, they will execute in parallel, to the best of the scheduler's abilities, and if you chain them then they will run consecutively as planned according to their dependency graph.

If your code doesn't access global variables or shared closure variables, or your promises only deal with their provided inputs without side-effects, then no contention will *ever* occur.

If you only read and never modify globals, no contention will ever occur.
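To make the shape of that concrete, here is a minimal sketch in Python rather than Nexus.js. The names `square`, `add` and `run` are made up for illustration, and none of this is the Nexus API. Independent tasks go to a pool and may run concurrently, while the dependent step waits for both of its inputs. Standard CPython threads share a global interpreter lock, so this only illustrates the dependency structure and the "pure tasks, no shared state" rule, not real all-core execution.

```python
from concurrent.futures import ThreadPoolExecutor


def square(x: int) -> int:
    # Pure task: depends only on its input and touches no shared state.
    return x * x


def add(a: int, b: int) -> int:
    # Also pure: the result is fully determined by the two arguments.
    return a + b


def run() -> int:
    with ThreadPoolExecutor(max_workers=4) as pool:
        # Two independent tasks: the pool is free to schedule them concurrently.
        left = pool.submit(square, 3)
        right = pool.submit(square, 4)
        # Dependent step: it needs both results, so it can only start after them.
        total = pool.submit(add, left.result(), right.result())
        return total.result()


print(run())  # 25
```

Because neither task reads or writes anything outside its arguments, there is nothing for the two `square` calls to contend over, whatever order the scheduler picks.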

Are you seeing the same trend I'm seeing?

Good JavaScript programming practices miraculously coincide with the best practices of thread-safety.

When someone says we shouldn't use multithreading because it's hard, do you know what I like to say to that?

"To multithread, you need a pair."

-

Graduation day:

A guy presents a modified pathfinding algorithm based on A*, in Java.

Jumping to the conclusions, he says he tested the algorithm on a 128x128 graph-based maze, but not on anything larger because the program saturates the RAM of his 4 GB PC.

One teacher (algorithms and data structures) literally jumps from the chair: "You saturate 4 GB of RAM with A* on a 128x128 graph?!"
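For a sense of scale, here is a rough sketch of plain textbook A* on a 128x128 grid, in Python. This is not the student's modified algorithm (the post doesn't describe it); the grid layout, the wall, and the names `astar`, `SIZE` and `grid` are all assumptions made for illustration. A 128x128 grid has only 16,384 cells, so the bookkeeping below can never hold more than that many entries.

```python
import heapq


def astar(grid, start, goal):
    """Length of a shortest 4-connected path on a grid, or None if unreachable.

    grid[r][c] is True for a wall. Uses the Manhattan distance heuristic,
    which never overestimates when every step costs 1.
    """
    rows, cols = len(grid), len(grid[0])

    def heuristic(cell):
        return abs(cell[0] - goal[0]) + abs(cell[1] - goal[1])

    best = {start: 0}  # cheapest known cost to reach each visited cell
    frontier = [(heuristic(start), 0, start)]
    while frontier:
        _, cost, cell = heapq.heappop(frontier)
        if cell == goal:
            return cost
        if cost > best[cell]:
            continue  # stale heap entry, a cheaper route was found later
        r, c = cell
        for nr, nc in ((r + 1, c), (r - 1, c), (r, c + 1), (r, c - 1)):
            if 0 <= nr < rows and 0 <= nc < cols and not grid[nr][nc]:
                new_cost = cost + 1
                if new_cost < best.get((nr, nc), float("inf")):
                    best[(nr, nc)] = new_cost
                    heapq.heappush(
                        frontier,
                        (new_cost + heuristic((nr, nc)), new_cost, (nr, nc)),
                    )
    return None


SIZE = 128
grid = [[False] * SIZE for _ in range(SIZE)]
for c in range(SIZE - 1):
    grid[64][c] = True  # a wall across the middle with one gap at the right edge

length = astar(grid, (0, 0), (SIZE - 1, SIZE - 1))
print(length)  # 254
```

The `best` dictionary is capped at one entry per cell, which is why the teacher's reaction makes sense: the search state for a maze this size is small, so gigabytes of usage point at something else in the program.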

Best graduation day ever lol

-

Algorithms real life implementation

On the way to your college canteen? -> A* search

Waiting in line in the canteen? -> Queue

Notice that girl standing in front? -> Linear search

Searching for her dad in the phone book? -> Binary search

Stupid! Google it! -> Trie

Search for her on Facebook! -> Depth-first search

Found her! Friend request? Accepted! Send a Hi! -> Graph

Writing her a secret love letter? -> Caesar cipher

Uploading your first date pic on fb? -> Image compression algorithms

Looking through her Whatsapp messages? -> KMP algorithm

She found out and had your first fight? Start over with some gifts! -> Backtracking

Got her list of items to buy? -> Array

Too many items! Low on cash, maybe? -> Priority queue

Making her play treasure hunt for her gifts? -> Linked list

Wait! Go back! Is that a ring? -> Stack

Girl’s family not agreeing to your proposal? -> Divide and conquer

Got married? Congrats! Going for your honeymoon? -> Travelling salesman problem

Your mom packing luggage for you? -> 0/1 Knapsack problem

She packed your favorite pickles? -> Hash table

Driving to the airport? -> Breadth-first search

-

Traveled to another state, about a 6-hour journey. After finally reaching the office, I had to wait another hour for my turn. The interview starts.

Q: How long have you been programming?

A: For nearly 2 years. I mainly code in Python.

Q: Nice! (Puts a piece of paper in front of me) Explain how Google Maps calculates the shortest distance between 2 cities using graph theory.

I go blank and stay silent for an awfully long time. Got rejected.
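For reference, the textbook answer to that kind of question is usually Dijkstra's algorithm: treat cities as nodes, roads as weighted edges, and always expand the closest unsettled city next. Below is a minimal sketch in Python. The road network `roads`, its distances, and the name `dijkstra` are invented for illustration, and this says nothing about what Google Maps actually runs in production.

```python
import heapq


def dijkstra(graph, source, target):
    """Return (distance, path) for the shortest route, or (None, []) if none.

    graph maps each node to a dict of {neighbour: non-negative edge weight}.
    """
    dist = {source: 0}
    prev = {}
    heap = [(0, source)]
    while heap:
        d, node = heapq.heappop(heap)
        if node == target:
            break  # the first time the target is popped, its distance is final
        if d > dist[node]:
            continue  # stale heap entry
        for neighbour, weight in graph.get(node, {}).items():
            candidate = d + weight
            if candidate < dist.get(neighbour, float("inf")):
                dist[neighbour] = candidate
                prev[neighbour] = node
                heapq.heappush(heap, (candidate, neighbour))
    if target not in dist:
        return None, []
    # Walk the predecessor links back from the target to rebuild the route.
    path = [target]
    while path[-1] != source:
        path.append(prev[path[-1]])
    path.reverse()
    return dist[target], path


# Hypothetical road network: letters stand in for cities, numbers for distances.
roads = {
    "A": {"B": 7, "C": 9, "F": 14},
    "B": {"A": 7, "C": 10, "D": 15},
    "C": {"A": 9, "B": 10, "D": 11, "F": 2},
    "D": {"B": 15, "C": 11, "E": 6},
    "E": {"D": 6, "F": 9},
    "F": {"A": 14, "C": 2, "E": 9},
}

distance, route = dijkstra(roads, "A", "E")
print(distance, route)  # 20 ['A', 'C', 'F', 'E']
```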

After coming outside, I ask myself... Why the fuck does a normal tech company need written algorithms on graph theory used by Google Maps?

-

I had the idea that part of the problem of NN and ML research is that we all use the same standard loss and nonlinear functions. In theory most NN architectures are universal approximators. But there's a big gap between symbolic and numeric computation.

But some of our bigger leaps in improvement weren't just from new architectures, but entire new approaches to how data is transformed, and how we calculate loss, for example KL divergence.

And it occurred to me that all we really need is training/test/validation data, and with the right approach we can let the system discover the architecture (been done before), but also the nonlinear and loss functions themselves, and see what pops out the other side as a result.

If a network can instrument its own code as it were, maybe it'd find new and useful nonlinear functions and losses. Networks wouldn't just specify a conv layer here, or a maxpool there, but derive implementations of these all on their own.

More importantly with a little pruning, we could even use successful examples for bootstrapping smaller more efficient algorithms, all within the graph itself, and use genetic algorithms to mix and match nodes at training time to discover what works or doesn't, or do training, testing, and validation in batches, to anneal a network in the correct direction.

By generating variations of successful nodes and graphs, and using substitution, we can use comparison to minimize error (for some measure of error over accuracy and precision), and select the best graph variations, without strictly having to do much point mutation within any given node, minimizing deleterious effects, sort of like how gene expression leads to unexpected but fitness-improving results for an entire organism, while point-mutations typically cause disease.

It might seem like this wouldn't work out the gate, just on the basis of intuition, but I think the benefit of working through node substitutions or entire subgraph substitution, is that we can check test/validation loss before training is even complete.

If we train a network to specify a known loss, we can even have that evaluate the networks themselves, and run variations on our network loss node to find better losses during training time, and at some point let nodes refer to these same loss calculation graphs, within themselves, switching between them dynamically, via variation and substitution.

I could even envision probabilistic lists of jump addresses, or mappings of value ranges to jump addresses, or having await() style opcodes on some nodes that upon being encountered, queue up ticks from upstream nodes whose calculations the await()ed node relies on, to do things like emergent convolution.

I've written all the classes and started on the interpreter itself, just a few things that need to be fleshed out now.

Here's my shitty little partial sketch of the opcodes and ideas.

https://pastebin.com/5yDTaApS

I think I'll teach it to do convolution, color recognition, maybe try MNIST, or teach it step by step how to do sequence masking and prediction, dunno yet.

-

Chinese remainder theorem

So the idea is that a partial or zero knowledge proof is used for not just encryption but also for a sort of distributed ledger or proof-of-membership, in addition to being used to add new members where additional layers of distributive proofs are at it, so that rollbacks can be performed on a network to remove members or revoke content.

Data is NOT automatically distributed throughout a network, rather sharing is the equivalent of replicating and syncing data to your instance.

Therefore if you don't like something on a network or think it's a liability (hate speech for the left, violent content for the right for example), the degree to which it is not shared is the degree to which it is censored.

By automatically not showing images posted by people you're subscribed to or following, infiltrators or state level actors who post things like calls to terrorism or csam to open platforms in order to justify shutting down platforms they don't control, are cut off at the knees. There may also be a case for tools built on AI that automatically determine if something like a thumbnail should be censored or give the user an NSFW warning before clicking a link that may appear innocuous but is actually malicious.

Server nodes may be virtual in that they are merely a graph of people connected in a group by each person in the group having a piece of a shared key.

Because the Chinese remainder theorem only requires a subset of all the info in the original key, it also acts as a voting mechanism to decide whether a piece of content is allowed to be synced to an entire group or remain permanently.
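The "only a subset is needed" property can be sketched with a toy Mignotte-style threshold scheme built on the Chinese remainder theorem. Naming that scheme is my assumption; the post doesn't say which construction it has in mind. Everything here (`crt`, `recover`, the moduli, the secret 1000, the threshold of 3) is illustrative and far too small to be secure. Each member holds the secret reduced modulo their own number, and any 3 of the 4 members can rebuild it, while 2 cannot.

```python
from itertools import combinations
from math import prod


def crt(residues, moduli):
    """Solve x = r_i (mod m_i) for pairwise-coprime moduli; returns x mod prod(moduli)."""
    total = prod(moduli)
    x = 0
    for r, m in zip(residues, moduli):
        partial = total // m
        # pow(partial, -1, m) is the modular inverse of partial modulo m (Python 3.8+).
        x += r * partial * pow(partial, -1, m)
    return x % total


# Toy parameters (assumed): four members, any three of whom form a quorum.
MODULI = [11, 13, 17, 19]  # pairwise coprime, increasing
THRESHOLD = 3
SECRET = 1000  # must lie between 17*19 = 323 and 11*13*17 = 2431

# Each member's piece of the key is the secret reduced modulo their own modulus.
shares = [(SECRET % m, m) for m in MODULI]


def recover(subset):
    """Recombine a group of (residue, modulus) shares with the CRT."""
    residues = [r for r, _ in subset]
    moduli = [m for _, m in subset]
    return crt(residues, moduli)


# Any quorum of three members reconstructs the secret...
print(all(recover(group) == SECRET for group in combinations(shares, THRESHOLD)))  # True
# ...but two members only learn the secret modulo the product of their moduli.
print(recover(shares[2:]))  # 31
```

Read as a vote: content "passes" only when at least `THRESHOLD` members contribute their share, because fewer shares do not pin down the key.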

Data that hasn't been verified yet may go into a cache for a given cluster of users who are mutually subscribed or following in a small-world graph, but at the same time it doesn't get shared out of that subgraph and may expire if enough users don't hit a like button or a retain button or a share or "verify" button.

The algorithm here then is no algorithm at all but merely the natural association process between people and their likes and dislikes directly affecting the outcome of what they see via that process of association to begin with.

We can even go so far as to dog food content that's already been synced to a graph into evolutions of the existing key such that the retention of new generations of key, dependent on the previous key, also act as a store of the data that's been synced to the members of the node.

Therefore members that continually post content that doesn't get verified slowly fall out of the node, such that eventually their content becomes merely temporary in the caches or index of the node members, driving index and node subgraph membership in an organic and natural process based purely on affiliation and identification.

Here I've sort of butchered the idea of the Chinese remainder theorem and shoehorned it into the idea of zero knowledge proofs, but you can see where I'm going with this if you squint at the idea mentally and look at it at just the right angle.

The big idea was to remove the influence of centralized algorithms to begin with, and implement mechanisms such that third-party organizations that exist to discredit or shut down small platforms are hindered by the design of the platform itself.

I think if you look over the ideas here you'll see that's what the general design thrust achieves or could achieve if implemented into a platform.

The addition of indexes in a node or "server" or "room" (being a set of users mutually subscribed to a particular tag or topic or each other), where the index is an index of text, audio, videos and other media including user posts that are available on the given node, with the index entries being titled but blind links (no pictures/media, or media verified as safe through an automatic tool), would also be useful.

-

Recently I discovered the Edmonds-Karp implementation of the Ford-Fulkerson method (to solve my coursework problem) and I can't get over how beautifully it works!
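For anyone who hasn't seen it, the idea is Ford-Fulkerson with one rule added: always augment along a shortest path, found with breadth-first search. A compact Python sketch follows; the small network and the names `edmonds_karp` and `capacity` are made up for illustration and are not the coursework problem.

```python
from collections import deque


def edmonds_karp(capacity, source, sink):
    """Maximum flow from source to sink; capacity[u][v] is the edge capacity."""
    # Residual graph: copy the capacities and add zero-capacity reverse edges.
    residual = {u: dict(neighbours) for u, neighbours in capacity.items()}
    for u, neighbours in capacity.items():
        for v in neighbours:
            residual.setdefault(v, {}).setdefault(u, 0)

    flow = 0
    while True:
        # Breadth-first search for a shortest augmenting path.
        parent = {source: None}
        queue = deque([source])
        while queue and sink not in parent:
            u = queue.popleft()
            for v, cap in residual[u].items():
                if cap > 0 and v not in parent:
                    parent[v] = u
                    queue.append(v)
        if sink not in parent:
            return flow  # no augmenting path left, so the flow is maximal

        # Find the bottleneck capacity along the path.
        bottleneck = float("inf")
        v = sink
        while parent[v] is not None:
            bottleneck = min(bottleneck, residual[parent[v]][v])
            v = parent[v]

        # Push the bottleneck amount: forward edges lose capacity, reverse edges gain it.
        v = sink
        while parent[v] is not None:
            u = parent[v]
            residual[u][v] -= bottleneck
            residual[v][u] += bottleneck
            v = u
        flow += bottleneck


# Hypothetical network: s is the source, t is the sink.
capacity = {
    "s": {"a": 3, "b": 2},
    "a": {"b": 1, "t": 2},
    "b": {"t": 3},
    "t": {},
}

print(edmonds_karp(capacity, "s", "t"))  # 5
```

The reverse edges are what make it feel like magic: they let a later path undo part of an earlier choice without any explicit backtracking.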

-

It seems like it never came to the mind of the developers of Apache Spark that people might want to use two of their libraries together to perform graph analysis on streamed Twitter data.

Why can't I simply use the streamed batches to create and extend a graph and perform continuous graph algorithms on it??

-

Hey guys, I've been working at a company for almost 1 year as a web developer. Even after this year I only know the basic data structures and algorithms, and my co-workers didn't seem to know graphs, trees, and other algorithms like Dijkstra and all those advanced types either. I'm searching for a job with a better salary. Do I have to learn all these to get paid well? Where can I even apply these things in my job? Is it worth it?