
Search - "basic usage"

-

Prologue

My dad has an acquaintance - let's call him Tom. Tom is a gynecologist, one of the best in Poznań, where I live. He's a great guy but absolutely can not into tech of any kind besides his iPhone and basic PC usage. For about a year now I've been doing small jobs for him: building a new PC for his office, fixing the printer, fixing the wifi, etc. A few years ago he made a big mistake by trusting a guy, let's call him Shitface, with creating software for his practice. It's supposed to be a pretty simple piece of code in which you can create and modify patient files, create prescriptions from a drug database and such things. This program is probably one of the worst pieces of code I've ever seen and Shitface should burn for that. Worse, this guy is a pretentious asshole lacking even basic IT knowledge. His code is garbage and it takes him a few months to make small changes like text wrapping. But wait, there's more. Everything is hardcoded, so every PC using this software must have user controls installed for which he doesn't have a license, plus a static IP address on the network card.

Part 1

Tom asked me to build him a new PC that would act as a server for Shitface's program. He needs it in Kalisz (around 150 km from my place). I Agred (pun intended), and after Tom brought me his old computer I bought parts and built a new one. I also copied over everything of value; all of that took me around three hours.

Part 2

Everything was ready but Shitface's program. I didn't know much about its configuration, so when I noticed that it wasn't working even on the old PC I got a bit worried. Nevertheless I started trying everything I knew about it, and after another three hours I got it somewhat working. Seeing that there were still some problems with the database connection (according to Windows Event Viewer), I wrote a quick SMS to Shitface asking what could be wrong. He replied that he wouldn't be able to help me in any way until Monday (the day after the deadline). I got pissed and very courteously asked him for the source code, because some of the libraries used in this project have licenses that require either purchasing a commercial license or making the code open source. He replied within a few minutes that he'd be able to connect remotely within the next 10 minutes. It took him the next hour to make it work, but he succeeded. It was the night before the deadline, so I wrapped everything up and went to bed thinking that it wouldn't take me more than an hour to get this new PC up and running in the office. Boy was I wrong.

Also, curious about his code, I checked the source, and he is using beautiful ponglish (mixed Polish and English) with mistakes he couldn't even be bothered to fix. For people from Poland, here's an example:

TerminarzeController.DeleteTerminarzShematyDlaLekarza

Part 3

So I drove to Kalisz and started setting everything up. Almost everything was ready, so after half an hour I was done. But I wanted to double-check that it was all good, because driving that far a second time would be a pain. So I started up Shitface's program, logged in, tried to open ANYTHING and... KABUM. UNHANDLED EXCEPTION. WTF. I checked the stack trace and for fuck's sake, something was missing. Keep in mind that at that point I didn't know he was using a third-party control for Windows Forms that needs to be installed on the client PC. After another fifteen minutes of googling I found a solution: I just had to install this third-party software and everything would work. But... it had to be exactly this version, and it was old. Very old. So old that the vendor had already removed all traces of its existence from their website and I couldn't find it anywhere. I tried installing a newer version and copying files from the old PC, but it didn't work. After a few hours of searching for a solution I called Mr Shitface and asked him for the installer for this control. He told me that he had it but would only be able to send it my way in the evening. Resigned, I asked for the new PC to be left turned on and drove home. When he sent me the necessary files I installed them remotely and everything started working correctly.

So, to sum it up. Searching for parts and building the new PC, installing the OS and all the necessary software, updating everything and configuring it to Tom's taste took me, what, around 1/3 of the time I spent on installing Mr Shitface's stupid program, which Tom is not even happy with. Gotta say it was one of the worst experiences I've had in recent months. Hope I won't have to see this shit again.

Epilogue

Fortunately everything seems to work correctly. Tom hasn't called me yet with any problems. Mission accomplished. I wanna kill very specific someone. With. A. Spoon.

-

Old boy said to put my brother's old laptop to use for him because Windows is too slow. He just wants basic usage like news and checking his favorite sites. So, you know there's only one way to take care of this... install Ubuntu. He tried it, loved it, and now I'm doing a full install for him. Ubuntu, saving old computers since... well, since forever 😁

-

After a few weeks of being insanely busy, I decided to log onto Steam and maybe relax with a few people and play some games. I enjoy playing a few sandbox games and do freelance development for those games (anything from a simple script to a full-on server setup) on the side. It just so happened that I had an 'urgent' request from one of my old staff members from a community I used to own. This staff member decided to run his own community after I sold mine off, since I no longer had the passion to deal with the community on a daily basis.

O: Owner (Former staff member/friend)

D: Other Dev

O: Hey, I need urgent help man! Got a few things developed for my server, and now the server won't stay stable and crashes randomly. I really need help, my developer can't figure it out.

Me: Uhm, sure. Just remember, if it's small I'll do it for free since you're an old friend, but if it's a bigger issue or needs a full recode or whatever, you're gonna have to pay. Another option is, I tell you what's wrong and you can have your developer fix it.

O: Sounds good, I'll give you owner access to everything so you can check it out.

Me: Sounds good

*An hour passes by*

O: Sorry it took so long, had to deal with some crap. *Insert credentials, etc*

Me: Ok, give me a few minutes to do some basic tests. What was that new feature or whatever you added?

O: *Explains long feature, and where it's located*

Me: *Begins to review the files* *Internal rage wondering what fucking developer could code such trash* *Tests a few methods, and watches CPU/RAM and an internal graph for usage*

Me: Who coded this module?

O: My developer.

Me: *Calm tone, with a mix of some anger* So, you know what, I'm just gonna do some simple math for ya. You're running 33 ticks a second for the server, with an average of about 40ish players. 33x60 = 1980 cycles a minute, now let's times that by the 40 players on average, you have 79,200 cycles per minute or nearly 4.8 million fucking cycles an hour (If you maxed the server at 64 players, it's going to run an amazing fucking 7.6 million cycles an hour, like holy fuck). You're also running a MySQLite query every cycle while transferring useless data to the server, you're clusterfucking the server and overloading it for no fucking reason and that's why you're crashing it. Another question, who the fuck wrote the security of this? I can literally send commands to the server with this insecure method and delete all of your files... If you actually want your fucking server stable and secure, I'm gonna have to recode this entire module to reduce your developer's clusterfuck of 4.8 million cycles to about 400 every hour... it's gonna be $50.

D: *Angered* You're wrong, this is the best way to do it, I did stress testing! *Insert other defensive comments* You're just a shitty developer (This one got me)

Me: *Calm* You're calling me a shitty developer? You're the person that doesn't understand a timer. I get that you're new to this world, but reading the wiki or even using the game's forums would've ripped this code to shreds and you to shreds. You're not even a developer, cause most of this is so disorganized it looks like you copied and pasted it. *Gets angered here and starts some light screaming* You're wasting CPU usage, the game can't use more than 1 physical core, and after a quick test, your stupid 'amazing' module is using about 40% of the CPU. You need to fucking realize the 40ish average players use less than this... THEY SHOULD BE MORE INTENSIVE THAN YOUR CODE, NOT THE OPPOSITE.

O: Hey don't be rude to Venom, he's an amazing coder. You're still new, you don't know as much as him. Ok, I'll pay you the money to get it recoded.

Me: Sounds good. *Angered tone* Also you, developer boy, learn to listen to feedback and maybe learn to improve your shitty code. Cause you'll never go anywhere if you don't even understand how bad this garbage is, and that you can't even use the fucking wiki for this game. The only fucking way you're gonna improve is to use some of my suggestions.

D: *Leaves call without saying anything*

TL;DR: Shitty developer ran some shitty XP system code for a game nearly 4.8 million times an hour (average) or just above 7.6 million times an hour (if maxed), plus running MySQLite when it could've been done within about like 400 an hour at max. Tried calling me a shitty developer, and got sorta yelled at while I was trying to keep calm.
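The numbers in the TL;DR can be checked with a few lines of Python. This is only a sketch of the arithmetic: it assumes, as the math in the call does, that the module runs once per player per server tick, and the function and constant names are made up for illustration.

```python
TICKS_PER_SECOND = 33  # server tick rate quoted in the call


def cycles_per_hour(players: int, ticks_per_second: int = TICKS_PER_SECOND) -> int:
    """How often a per-player, per-tick routine runs in one hour."""
    per_minute = ticks_per_second * 60 * players
    return per_minute * 60


print(f"{cycles_per_hour(40):,}")  # 4,752,000 (the "nearly 4.8 million")
print(f"{cycles_per_hour(64):,}")  # 7,603,200 (the "just above 7.6 million")
```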

Still pissed he tried calling me a shitty developer...

-

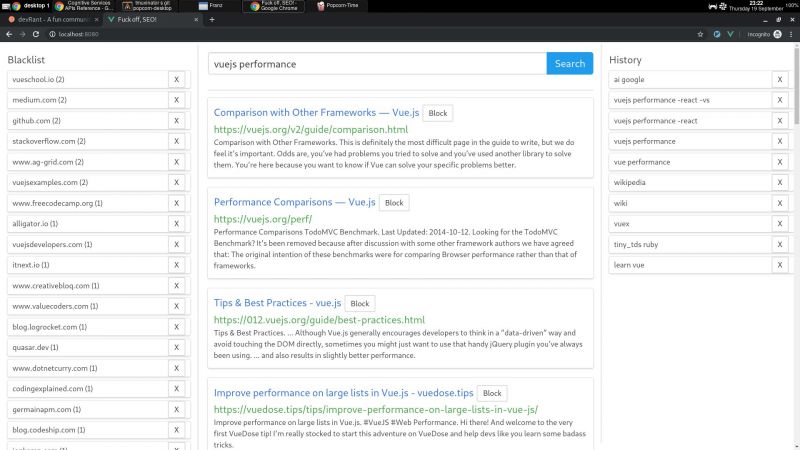

I wrote a node + vue web app that consumes bing api and lets you block specific hosts with a click, and I have some thoughts I need to post somewhere.

My main motivation for this is that the search results I've been getting from the big search engines are seriously lacking in quality. The SEO situation right now is very complex but the bottom line is that there is a lot of white hat SEO abuse.

Commercial companies are fucking up the internet very hard. Search results have become way too profit oriented and thus not neutral. Personal blogs are becoming very rare. Information is losing quality and sites are losing identity. The internet is consolidating.

So, I decided to write something to help me give this situation the middle finger.

I wrote this because I consider the ability to block specific sites a basic universal right. If you were ripped off by a website or you just don't like it, then you should be able to block said site from your search results. It's not rocket science.

Google used to have this feature integrated but they removed it in 2013. They also had an extension that did this client side, but they removed it in 2018 too. We're years past the time where Google forgot their "Don't be evil" motto.

AFAIK, the only search engine on earth that lets you block sites is millionshort.com, but if you block too many sites, the performance degrades. And the company that runs it is a for profit too.

There is a third party extension that blocks sites called uBlacklist. The problem is that it only works on google. I wrote my app so as to escape google's tracking clutches, ads and their annoying products showing up in between my results.

But that aside, uBlacklist does the same thing as my app, including the limitation that this isn't an actual search engine, it's just filtering search results after they are generated.

This is far from ideal, because filtering before the results are generated would be much preferable.
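As a rough sketch of what "filtering after the results are generated" means (in Python here, not the node code of the actual app): the result shape, a list of dicts with a `url` key, is an assumption for illustration and not the Bing API's real response format, and `host_of` and `filter_blocked` are hypothetical names.

```python
from urllib.parse import urlparse


def host_of(url: str) -> str:
    """Return the lowercased host of a URL, without a leading 'www.'."""
    host = urlparse(url).hostname or ""
    return host[4:] if host.startswith("www.") else host


def filter_blocked(results, blocked_hosts):
    """Drop every result whose host is on the user's block list."""
    blocked = {h.lower() for h in blocked_hosts}
    return [r for r in results if host_of(r["url"]) not in blocked]


results = [
    {"url": "https://www.spam.example/top-10-things"},
    {"url": "https://blog.example/post"},
]
print(filter_blocked(results, ["spam.example"]))
```

This matches exact hosts only, so a blocked `spam.example` would not cover `cdn.spam.example`; whether subdomains should count is a design decision the post doesn't specify.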

But developing a search engine, which means both indexing and ranking pages, is prohibitively expensive for a single person. Which is sad, but I can't do much about it.

I'm also thinking of implementing the ability to promote certain sites, the opposite of blocking, so these promoted sites would get more priority within the results.

I guess I would have to move the promoted sites between all pages I fetched to the first page/s, but client side.

But this is suboptimal compared to having actual access to the rank algorithm, where you could promote sites in a smarter way, but again, I can't build a search engine by myself.
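A minimal sketch of that client-side promotion, assuming all fetched pages have already been merged into one list of dicts with a `url` key (an illustrative shape, and `promote` is a hypothetical name). It relies on Python's sort being stable, so promoted results move to the front while everything keeps its original relative order.

```python
from urllib.parse import urlparse


def host_of(url: str) -> str:
    """Return the lowercased host of a URL, without a leading 'www.'."""
    host = urlparse(url).hostname or ""
    return host[4:] if host.startswith("www.") else host


def promote(results, promoted_hosts):
    """Move results from promoted hosts to the front, keeping relative order."""
    promoted = {h.lower() for h in promoted_hosts}
    # False sorts before True, so promoted results (key False) come first.
    return sorted(results, key=lambda r: host_of(r["url"]) not in promoted)


merged = [
    {"url": "https://a.example/1"},
    {"url": "https://good.example/2"},
    {"url": "https://b.example/3"},
    {"url": "https://www.good.example/4"},
]
print([r["url"] for r in promote(merged, ["good.example"])])
```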

I'm using mongo to cache the results, so with a click of a button I can retrieve the results of a previous query without hitting bing. So far a couple of queries don't seem to bring much performance or space issues.
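The caching idea could look roughly like the sketch below. To stay self-contained it uses a plain dict where the app uses mongo, and `fetch` stands in for whatever function actually calls the search API; both are assumptions for illustration, as is the `QueryCache` name.

```python
from typing import Callable, Dict, List


class QueryCache:
    """Remember results per query so repeated searches skip the API call."""

    def __init__(self, fetch: Callable[[str], List[dict]]):
        self._fetch = fetch  # hypothetical function that hits the search API
        self._store: Dict[str, List[dict]] = {}  # stand-in for a mongo collection

    def search(self, query: str) -> List[dict]:
        # Normalise case and whitespace so trivially different queries share a key.
        key = " ".join(query.lower().split())
        if key not in self._store:
            self._store[key] = self._fetch(query)
        return self._store[key]
```

Nothing here expires old entries, so results can go stale; the post doesn't say how the real app handles that.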

On using bing: bing is basically the only reliable API option I could find that was affordable for a hobby project. Microsoft products are usually my last choice.

Bing is giving me a 7 day free trial of their search API until I register a CC. They offer a free tier, but I'm not sure if that's only for these 7 days. Otherwise, I'm gonna need to pay like 5$.

Paying or not, having to use a CC to use this software I wrote sucks balls.

So far the usage of this app has resulted in me becoming more critical of sites and finding sites of better quality. I think overall it helps me to become a better programmer, all the while having better protection of my privacy.

One downside is that I'm the only one curating for myself, whereas I could benefit from the block/promote lists of other people I trust.

I will git push it somewhere at some point, but it does require some more work:

I would want to add a docker-compose script to make it easy to start, and I didn't write any tests unfortunately (I did use eslint for both apps, though).

The performance is not excellent (the app has not experienced blocks so far, but it does make the coolers spin after a bit) because the algorithms I wrote were very POC.

But it took me some time to write it, and I need to catch some breath.

There are other more open efforts that seem to be more ethical, but they are usually hard to use or just incomplete.

commoncrawl.org is a free index of the web. One problem I found is that it doesn't seem to index everything (for example, it doesn't seem to index the blog of a friend who has been writing for years and is indexed by google).

It also requires knowing how to read WARC files, which will surely take some time investment to learn.

It also seems kinda slow to respond.

It is also generated only once a month, and I would still have little idea how to implement a pagerank algorithm, let alone code it.

-

Buckle up, it's a long one.

Let me tell you why "Tree Shaking" is stupidity incarnate and why Rich Harris needs to stop talking about things he doesn't understand.

For reference, this is a direct response to the 2015 article here: https://medium.com/@Rich_Harris/...

"Tree shaking", as Rich puts it, is NOT dead code removal apparently, but instead only picking the parts that are actually used.

However, Rich has never heard of a C compiler, apparently. In C (or any systems language with basic optimizations), public (visible) members exposed to library consumers must have that code available to them, obviously. However, all of the other cruft that you don't actually use is removed - hence, dead code removal.

How does the compiler do that? Well, it does what Rich calls "tree shaking" by evaluating all of the pieces of code that are used by any codepaths used by any of the exported symbols, not just the "main module" (which doesn't exist in systems libraries).
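That "everything reachable from the exported symbols" idea is just a graph walk. The sketch below is a toy model, not how any real compiler or linker is implemented: the call graph is a hand-written dict and all the function names in it are invented.

```python
def reachable(call_graph, exported):
    """Return every symbol reachable from the exported entry points."""
    live = set()
    stack = list(exported)
    while stack:
        name = stack.pop()
        if name in live:
            continue
        live.add(name)
        stack.extend(call_graph.get(name, ()))
    return live


# symbol -> symbols it references (toy data)
call_graph = {
    "parse": ["tokenize", "build_ast"],
    "tokenize": [],
    "build_ast": ["make_node"],
    "make_node": [],
    "debug_dump": ["make_node"],
    "legacy_parse": ["tokenize"],
}

live = reachable(call_graph, exported=["parse"])
dead = set(call_graph) - live
print(sorted(dead))  # ['debug_dump', 'legacy_parse']
```

Whether you describe the result as "keep what is live" or "drop what is dead", the same traversal produces both sets.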

It's the SAME FUCKING THING, he just hasn't researched it enough to fully fucking understand that. But sure, tell me how the javascript community apparently invented something ELSE that you REALLY just repackaged and made more bloated/downright wrong (React Hooks, webpack, WebAssembly, etc.)

Speaking of Javascript, "tree shaking" is impossible to do there with any degree of confidence, unlike in statically typed/well-defined languages. This is because you can create artificial references to values at runtime using string functions, which means that, with the right input, almost anything can end up being run.

How do you figure out what can and can't be? You can't! Since there is a runtime-based codepath and decision tree, you run into properties of Turing's halting problem, which cannot be solved completely.
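A small illustration of the runtime-reference problem, written in Python as a stand-in for the JavaScript pattern of looking a property up by a string built at runtime. The class and function names are invented for the example.

```python
class Handlers:
    def on_save(self):
        return "saved"

    def on_delete(self):
        return "deleted"

    def on_export(self):
        return "exported"


def dispatch(handlers, action: str):
    # The method name only exists as a string assembled at runtime, so a
    # static tool cannot know which on_* methods are safe to remove.
    return getattr(handlers, "on_" + action)()


print(dispatch(Handlers(), "delete"))  # deleted
```

If `action` comes from user input or a config file, any of the three methods might be called, so a conservative tool has to keep all of them.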

With stricter languages such as C (which is where "dead code removal" is used quite aggressively), you can make very strong assertions at compile time about the usage of code. This is simply how C is still thousands of times faster than Javascript.

So no, Rich Harris, dead code removal is not "silly". Your entire premise about "live code inclusion" is technical jargon and buzzwordy drivel. Empty words at best.

This sort of shit is annoying and only feeds into this cycle of the web community not being Special enough and having to reinvent every single fucking facet of operating systems in your shitty bloated spyware-like browser and brand it with flashy Matrix-esque imagery and prose.

Fuck all of it.

-

So, currently I am on vacation and my dad asked me to train two of his staff members to use a computer for data entry and basic usage stuff.

Now both guys are total noobs and have never used a computer before.

So I decided to take this opportunity to conduct a simple experiment. I am training one on Windows and other on Ubuntu to check out which one performs better.

The windows guy is winning.

-

Is your code green?

I've been thinking a lot about this for the past year. There was recently an article on this on slashdot.

I like optimising things to a reasonable degree and avoid bloat. What are some signs of code that isn't green?

* Use of technology that says it's fast without real expert review and measurement. Lots of tech out there claims to be fast but actually isn't, or gets there by saturating resources while being inefficient.

* It uses caching. Many might find that counter intuitive. In technology it is surprisingly common to see people scale or cache rather than directly fixing the thing that's watt expensive which is compounded when the cache has weak coverage.

* It uses scaling. Originally scaling was a last resort. The reason is simple, it introduces excessive complexity. Today it's common to see people scale things rather than make them efficient. You end up needing ten instances when a bit of skill could bring you down to one, which could scale as well but likely won't need to.

* It uses a non-trivial framework. Frameworks are rarely fast. Most will fall in the range of ten to a thousand times slower in terms of CPU usage. Memory bloat may also force the need for more instances. Frameworks written on already slow high level languages may be especially bad.

* Lacks optimisations for obvious bottlenecks.

* It runs slowly.

* It lacks even basic resource usage measurement.

Unfortunately smells are not enough on their own but are a start. Real measurement and expert review are the only way to get an idea of whether your code is reasonably green.
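As a starting point for "basic resource usage measurement", something like the sketch below already gives wall time, CPU time and peak Python memory for a single call, using only the standard library. The `measure` helper is a made-up name, and it says nothing about actual watts, which would need hardware or OS level tooling.

```python
import time
import tracemalloc


def measure(func, *args, **kwargs):
    """Run func once and report wall time, CPU time and peak traced memory."""
    tracemalloc.start()
    wall_start = time.perf_counter()
    cpu_start = time.process_time()
    result = func(*args, **kwargs)
    cpu_s = time.process_time() - cpu_start
    wall_s = time.perf_counter() - wall_start
    _, peak_bytes = tracemalloc.get_traced_memory()
    tracemalloc.stop()
    return result, {"wall_s": wall_s, "cpu_s": cpu_s, "peak_bytes": peak_bytes}


# Example workload: sort a reversed list of 100,000 integers.
_, stats = measure(sorted, list(range(100_000, 0, -1)))
print(stats)
```

Note that `tracemalloc` itself slows the code down, so timings taken with it enabled are best compared against each other, not treated as absolute numbers.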

I find it's not uncommon to see things require tens, hundreds or even thousands of times more resources than needed, if not more.

In terms of cycles that can be the difference between needing a single core and a thousand cores.

This is common in the industry but it's not because people didn't write everything in assembly. It's usually leaning toward the extreme opposite.

Optimisations are often easy and don't require writing code in binary. In fact the resulting code is often simpler. Excess complexity and inefficient code tend to go hand in hand. Sometimes a code cleaning service is all you need to enhance your green.

I once rewrote a data parsing library, which had to parse a hundred MB and was a performance hotspot, from an interpreted language into C. I measured it and the results were good. The interpreted version had been optimised as much as possible, but the C version was still at least 50 times faster.

I recently stumbled upon someone's attempt to do the same and I was able to optimise the interpreted version in five minutes to be twice as fast as the C++ version.

I see opportunity to optimise everywhere in software. A billion KG CO2 could be saved easy if a few green code shops popped up. It's also often a net win. Faster software, lower costs, lower management burden... I'm thinking of starting a consultancy.

The problem is after witnessing the likes of Greta Thunberg then if that's what the next generation has in store then as far as I'm concerned the world can fucking burn and her generation along with it.

-

It's kinda cool how a $5 VPS (Linode Nanode) is able to run a vanilla Minecraft Spigot server for like 6-7 people and still can serve some basic stuff just fine. I get monitoring warnings about >90% CPU usage sometimes, but everything is more or less lagless.

Time to try hosting some other games: CS1.6, Doom Classic, and UT2004 up next.

-

I learned BASIC, Visual Basic and Java in high school, then spent one year of college studying C++. I really hated C++ and swore I would never become a programmer. After that one year of college, I spent two years out of the country with no computer usage at all. Then 4 years working at a grocery store.

Then my friend told me about an opening at his workplace for a Java programmer. This was 7 years since doing Java and 6 years since I had programmed anything at all. But I applied and interviewed. When asked about databases, I said “I know a little Microsoft Access.” Had no idea what relational databases were, never heard of PHP, but by some miracle they hired me anyway. Still working for them 6 years later, now an experienced Java, PHP, MySQL, front end developer.

Still have no idea why they saw fit to hire me.

-

Presenters, please. Do not name your presentations "Advanced X" if you spend 75% time on explaining every detail of basic software usage. DO NOT WASTE MY TIME LIKE THAT

-

It probably will be an unanswered question, but let's try.

Does anyone know of a large project using onion / hexagonal / DDD or similar architecture with free access to the source code...

Or an example of said architectures that goes beyond "trivial dumb example".

The new recruits need... A lot of brushing up (I'd be for electro shock treatment and other stuff, but somehow HR thinks I'm joking).

As said, most examples I found are too basic. On the other hand, if I wrote a good example now, I'd need to do it in either my free time (nope, just nope) or squeeze it in somewhere on company time (aka it will never be finished or reach a useful state).

Programming language preferred would be Java, but as I'm fluent in most languages except the forbidden ones (JavaScript and its friends) ...

Anything would be helpful.

Most welcome would be an example with a focus on Adapter / Ports, e.g. abstraction of HTTP client usage / ORM etc.
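To make that last request concrete, here is a deliberately tiny ports/adapters sketch. It is in Python rather than the preferred Java only to keep it short, it is exactly the kind of trivial example being complained about, and every name in it (`HttpPort`, `GreetingService`, the `api.example.invalid` URL) is invented for illustration.

```python
from typing import Dict, Protocol
from urllib.request import urlopen


class HttpPort(Protocol):
    """Port: the only thing the core knows about HTTP."""

    def get(self, url: str) -> str: ...


class GreetingService:
    """Core logic; depends on the port, never on a concrete HTTP client."""

    def __init__(self, http: HttpPort):
        self._http = http

    def greeting_for(self, user_id: int) -> str:
        name = self._http.get(f"https://api.example.invalid/users/{user_id}/name")
        return f"Hello, {name.strip()}!"


class UrllibAdapter:
    """Adapter: implements the port with the standard library client."""

    def get(self, url: str) -> str:
        with urlopen(url, timeout=5) as response:
            return response.read().decode("utf-8")


class FakeHttp:
    """Test adapter: canned responses, no network."""

    def __init__(self, responses: Dict[str, str]):
        self._responses = responses

    def get(self, url: str) -> str:
        return self._responses[url]


service = GreetingService(FakeHttp({"https://api.example.invalid/users/7/name": " Ada\n"}))
print(service.greeting_for(7))  # Hello, Ada!
```

Swapping `FakeHttp` for `UrllibAdapter` changes nothing in `GreetingService`, which is the whole point of the port.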

Thanks.

-

Imposter syndrome.

A question, guys. I've been a web dev since 2012. I started with PHP, then shifted to frontend; for 3 years my main stack was PHP and basic HTML/CSS. In 2017 I shifted to / did courses on vuejs, angular and react (loved angular the most), and also laravel. I have also dabbled a bit in Python, for crawling and mining. The problem is I've never worked with a team or for a full-fledged dev company, so I'm unsure how to judge my growth and whether I'm moving in the right direction. I feel like I need a much better understanding of Linux usage and server control, or should I learn nativescript etc.?

What do you suggest? Should I simply look for a mentorship program, and if yes, any clue where?

-

WARNING - a lot of text.

I am open for questions and discussions :)

I am not an education program specialist and I can't decide what's best for everyone. Managing the program is a hard process, and it goes through a lot of instances.

Computer Science.

Speaking about schools: regular schools do not prepare computer scientists. I have a lot of thoughts about whether we need or do NOT need such an amount of knowledge in some subjects, but that's a completely different story. Back to CS.

The main problem is that the IT sphere evolves exceedingly fast (compared to others) and the education system's adaptation is honestly too slow.

CS studies in schools need to be reformed almost every year to accept updates and corrections, but the education system in most countries does not support that; that's the main problem. In the basic course, which is for everyone, I'd suggest covering general computer usage: office software, OS basics, etc. But not only MS stuff... Linux is no longer that nerdy stuff from the '90s; it has evolved into a ready-to-use OS for everyone. So a basic OS tour, like wtf is a Mac, Linux (you can show Ubuntu/Mint, etc - the easy stuff) would be great... Also, show students cloud technologies. Like, you have an option to do *that* in your browser! And, yeah, classic stuff like what's USB and what's MB/GB and other basics.. not digging into it for 6 months, but just a brief overview with some useful info... Everyone has seen a PC by the time they are studying CS anyway.. and somewhere at the end we can introduce programming, what you can do with it and maybe hello world in whatever language, but no more.. 'cause it's still a class for everyone, no need to explain stars there.

For the last years, where shit's getting serious, like where you can choose whether to study CS or not - there we can teach programming. In my country it's 2 years. It's possible to cover OOP principles in a +/- modern language (Java or C++ is not bad too, maybe even Go, whatever, it's not me who will decide that. The point is that it's not from the '70s) + VCS + some real world app, like a simplified but still functional bookstore management app.

That's about schools.

Speaking about universities - the logic is the same. It needs to be modern and accept corrections and updates every year. And now it depends on what you're studying there. Are you going to have a software engineering diploma or be a business system analyst...

Generally speaking, for developers - we need more real world scenarios and, I guess, some technologies and frameworks. Ofc, theory too, but not that stuff from 1980. Come on, nowadays nobody specifies 1 functional requirement in several pages and, generally, nobody spends 2 years writing that specification. The product becomes obsolete before it has even been started.

Everything changes, whether it is how we write specification documents, or literally anything else in IT.

Once more, morale: update CS program yearly, goddammit

How to do it - it's the whole another topic.

Thank you for reading.

-

I started reading this rant ( https://devrant.com/rants/2449971/... ) by @ddit because at first I could relate to it, but the further he explained, the less relatable it got.

( I started typing this as a comment and now I'm posting this as a rant because I have a very big opinion that wouldn't fit into the character limit for a comment )

I've been thinking about the same problem myself recently but I have very different opinion from yours.

I'm a hard-core linux fan boy - GUI or no GUI ( my opinion might be biased to some extent ). Windows is just shit! It's useless for anything. It's for n00bs. And it's only recently that it even started getting close to power usage.

Windows is good at gaming only because it was the first platform to support gaming outside of video game consoles. Just like it got all of the share of 'computer' viruses ( sheesh, you have to be explicit about viruses these days ) because it was the most widely used OS. I think if Apple invested enough in macOS, it could easily outperform Windows in terms of gaming performance. They've got both the hardware and the software under their control. It's just that they prefer to focus on 'professionals' rather than gamers.

I agree that the linux GUI world is not that great ( but I think it's slowly getting better ). The non-GUI world compensates for that limitation.

I'm a terminal freak. I use the TTY ( console mode, not a VTE ) even when I have a GUI running ( only for web browsing because TUI browsers can't handle javascript well and we all know what the web is made of today - no more hacking with CSS to do your bidding )

I've been thinking of getting a Mac to do all the basic things that you'd want to do on the internet.

My list :

linux - everything ( hacking power user style )

macOS - normal use ( browsing, streaming, social media, etc )

windows - none actually, but I'll give in for gaming because most games are only supported on Windows.

Phew, I needed another 750-1500 characters to finish my reply.

-

I hate Vim and trying to install plugins or just do anything in general beyond basic usage so I’ve instead installed the vim plugin for vscode.

Actually FUCK managing dotfiles and dotfolders the size of programs themselves just to get a language server connected.

Vim stans can suck my dick

-

i was watching a video on how whatsapp can't make enough profit coz it's free, and even though that's a clear lie (the cartel money made by selling user data will obviously not show up in legal books), i had a thought. can any good consumer software ever be kept free to use?

Say i made a very awesome chat app. it has 0 bugs, it does the basic tasks of sending/receiving data and media correctly and does not require any maintenance. It also saves a lot of cloud cost by keeping user data on users' own devices and only transmitting data on triggers.

i would still require a server to keep the trigger architecture alive. and all the servers in the world are maintained by for-profit corporations which will charge a premium for their services. so free products are a fallacy, as someone is always paying for them. it will be an investor, a different business or we the consumers (either directly via subscription, or indirectly via ads or personal data).

So i guess this realisation is going to hit a lot of tiktok and insta influenza kids soon

-

Find Cornrows and Micro Braids Near You at Omega African Hair Braiding

When you're searching for "cornrows near me" or "micro braids near me," you want to find a salon that offers high-quality service, skilled stylists, and a welcoming environment. At Omega African Hair Braiding, located at 5221 Equipment Dr, Charlotte, NC 28262, we specialize in creating beautiful, long-lasting cornrows and micro braids for all hair types. Our experienced team is committed to helping you achieve the hairstyle you desire, while ensuring the health and beauty of your natural hair.

Why Choose Omega African Hair Braiding for Cornrows and Micro Braids?

Finding a braiding salon near me that offers both quality and convenience can be a challenge. At Omega African Hair Braiding, we pride ourselves on offering exceptional service in a comfortable, friendly atmosphere. Here’s why we should be your first choice for cornrows and micro braids:

Expert Braiders: Our stylists are experts in braiding, particularly when it comes to cornrows and micro braids. Whether you're looking for simple, traditional cornrows or intricate, detailed designs, we have the skill and creativity to bring your vision to life. Micro braids, in particular, require a precise touch, and our team excels in delivering flawless results.

Protective and Stylish Styles: Cornrows and micro braids are not only stylish but also protective. These hairstyles minimize damage to your natural hair by reducing the need for heat styling and offering a long-lasting, low-maintenance option. Whether you want to wear your hair for a few weeks or several months, both styles help protect your hair while allowing it to grow and thrive.

High-Quality Hair Products: We use premium products to ensure that your cornrows and micro braids not only look amazing but also stay in place for a long time. Our products are chosen to promote healthy hair and scalp care, so you can enjoy beautiful braids without worrying about damage or discomfort.

Convenient Location: Searching for "cornrows near me" or "micro braids near me" becomes much easier when you know exactly where to go! Omega African Hair Braiding is conveniently located at 5221 Equipment Dr, Charlotte, NC 28262, making it easy for clients in the Charlotte area to stop by for their braiding needs. Whether you're in need of a quick touch-up or a full braid installation, we’re just a short drive away.

Affordable and Transparent Pricing: We believe everyone deserves beautiful, quality braids at an affordable price. Our pricing is competitive and transparent, ensuring that you receive the best value for your money. Whether you're getting micro braids or cornrows, you can trust that our services are priced to fit your budget.

Our Braiding Services: Cornrows and Micro Braids

At Omega African Hair Braiding, we offer a wide variety of braiding styles, but two of our specialties are cornrows and micro braids. Here’s a closer look at these two popular styles:

Cornrows

Cornrows are a classic, timeless hairstyle that has been a staple of African hair culture for centuries. Whether you prefer simple, straight-back cornrows or intricate designs, we can create the perfect look for you. Some benefits of cornrows include:

Low-Maintenance: Once installed, cornrows are relatively easy to maintain, making them a convenient option for those with busy lifestyles.

Versatility: You can wear cornrows in various ways, from basic straight-back styles to more elaborate designs with curves and patterns.

Protective: Cornrows protect your natural hair by keeping it tucked away and reducing the need for daily styling or heat usage.

Whether you’re looking for a professional look or a more fun and creative design, we have a variety of options to choose from for your cornrows.

Micro Braids

Micro braids are ultra-fine braids that are braided close to the scalp. They create a stunning, detailed appearance and are perfect for those who want a long-lasting style with a natural look. Here’s why micro braids are such a great option:

Natural and Elegant: Micro braids mimic the appearance of natural, flowing hair. When done correctly, they blend seamlessly with your natural hair, giving you a sleek and elegant look.

Long-Lasting: Micro braids are known for their longevity, lasting anywhere from 6 to 8 weeks when properly maintained.

Protective Style: Like cornrows, micro braids help protect your natural hair by minimizing exposure to heat and environmental damage. This style is ideal for those looking to grow out their hair or protect it while still enjoying a beautiful, long-lasting style.

Micro braids require a bit more time and patience to install, but the results are worth it. The finer braids offer a smooth, delicate look, and they give you plenty of styling versatility.