Search - "ssh as root"

-

*SSH's into VPS*

*Starts doing some general maintenance (updating, checking the logs etc)*

*runs the who command for fun*

*NOTICES THAT THERE'S ANOTHER ACTIVE SESSION*

*FURIOUSLY STARTS TO TRY AND LOOK HOW THAT USER MIGHT HAVE GOTTEN IN (root)*

*Goes one terminal to the left after a few minutes to see if I can use that one as well*

*notices an active and forgotten SSH session to that VPS*

I am stupid.

-

Hacking/attack experiences...

I'm, for obvious reasons, only going to talk about the attacks I went through and the *legal* ones I did 😅 😜

Let's first get some things clear/funny facts:

I've been doing offensive security since I was 14-15. Defensive since the age of 16-17. I'm getting close to 23 now, for the record.

First system ever hacked (metasploit exploit): Windows XP.

(To be clear, at home through a pentesting environment, all legal)

Easiest system ever hacked: Windows XP yet again.

Time it took me to crack/hack into today's OS's (remote + local exploits, don't remember which ones I used by the way):

Windows: XP - five seconds (damn, those metasploit exploits are powerful)

Windows Vista: Few minutes.

Windows 7: Few minutes.

Windows 10: Few minutes.

OSX (in general): 1 hour (finding a good exploit took some time, got to root level easily afterwards. No, I do not remember how/what exactly, it was years and years ago)

Linux (Ubuntu): A month approx. Ended up using a Java applet through Firefox when that was still a thing. Literally had to click it manually xD

Linux (RHEL based systems): Still not exploited, SELinux is powerful, motherfucker.

Keep in mind that I had a great pentesting setup back then 😊. I neither have that setup nor do that anymore, since I love defensive security more nowadays and simply don't have the time.

Dealing with attacks and getting hacked.

Keep in mind that I manage around 20 servers (including vps's and dedi's) so I get the usual amount of ssh brute force attacks (thanks for keeping me safe, CSF!) which is about 40-50K every hour. Those ip's automatically get blocked after three failed attempts within 5 minutes. No root login allowed + rsa key login with freaking strong passwords/passphrases.

linu.xxx/much-security.nl - All kinds of attacks, application attacks, brute force, DDoS sometimes but that is also mostly mitigated at provider level, to name a few. So, except for my own tests and a few ddos's on both those domains, nothing really threatening. (as in, nothing seems to have fucked anything up yet)

How did I discover that two of my servers were hacked through brute forcers while no brute force protection was in place yet? I had installed a barebones Ubuntu server onto both. They only come with system-default applications. Tried installing Nginx the next day, port 80 was already in use. I always run 'pidof apache2' to make sure it isn't running and thought I'd run that for fun, even though I knew I hadn't installed it and it didn't come with the distro. It was actually running. Checked the auth logs and saw successful root logins - fuck me - reinstalled the servers and installed Fail2Ban. It bans any IP address which had three failed ssh logins within 5 minutes:

Enabled Fail2Ban -> checked iptables (iptables -L) literally two seconds later: 100+ banned ip addresses - holy fuck, no wonder I got hacked!
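The rule described above (three failed SSH logins within five minutes leads to a ban) boils down to a sliding-window counter per IP. Here is a minimal Python sketch of that idea. It is not how Fail2Ban itself is implemented, and the names `BanTracker`, `max_retry` and `find_time` are made up for illustration.

```python
from collections import defaultdict, deque


class BanTracker:
    """Toy sliding-window counter: ban an IP after too many failures."""

    def __init__(self, max_retry=3, find_time=300):
        self.max_retry = max_retry      # failures allowed inside the window
        self.find_time = find_time      # window length in seconds
        self.failures = defaultdict(deque)
        self.banned = set()

    def record_failure(self, ip, timestamp):
        """Register one failed login; return True if the IP is now banned."""
        if ip in self.banned:
            return True
        window = self.failures[ip]
        window.append(timestamp)
        # Drop failures that fell out of the time window.
        while window and timestamp - window[0] > self.find_time:
            window.popleft()
        if len(window) >= self.max_retry:
            self.banned.add(ip)
            return True
        return False


tracker = BanTracker()
# Three failures within five minutes: banned on the third attempt.
results = [tracker.record_failure("203.0.113.7", t) for t in (0, 60, 120)]
# Three failures spaced more than five minutes apart: never banned.
slow = [tracker.record_failure("198.51.100.9", t) for t in (0, 400, 800)]
print(results, slow)
```

The second case is why slow, distributed brute forcing still gets through this kind of rule: each attempt arrives after the previous one has already aged out of the window.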

There's one other kind/type of attack I get regularly, but as long as it doesn't get much worse, I'll deal with it :)

Dealing with different kinds of attacks:

Web app attacks: extensively testing everything for security vulns before releasing it into the open.

Network attacks: Nginx rate limiting/CSF rate limiting against SYN DDoS attacks for example.

System attacks: Anti brute force software (Fail2Ban or CSF), anti rootkit software, AppArmor or (which I prefer) SELinux which actually catches quite some web app attacks as well and REGULARLY UPDATING THE SERVERS/SOFTWARE.

So yah, hereby :P

-

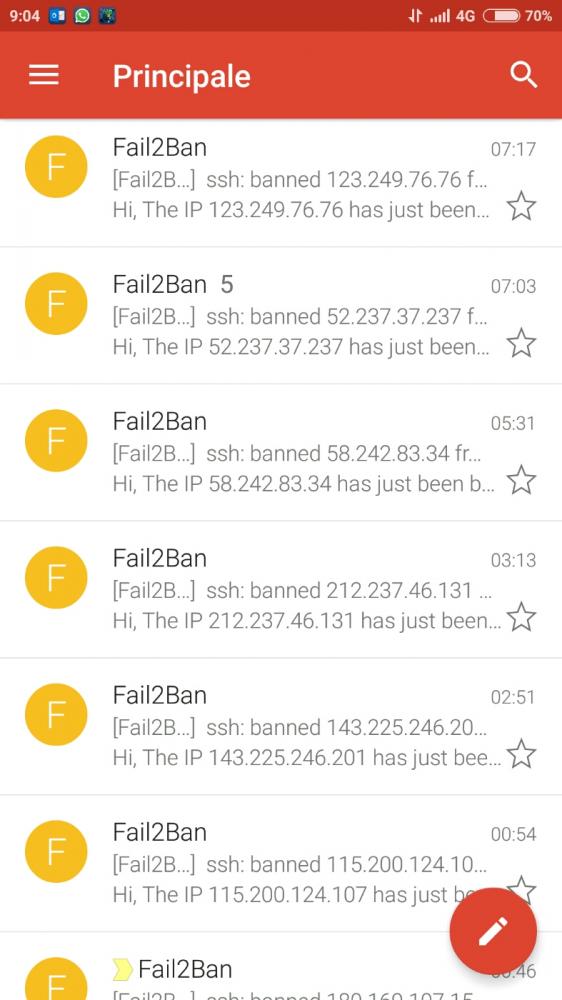

Da Fuck!?!

Yesterday I found some abnormal activity on my server: someone had been trying to brute force my ssh as root for two days! Started raging and installed fail2ban (which automatically bans an IP if it fails to log in X times and eventually sends me an email). Woke up this morning to find that a fucking Chinese guy/malware spent the whole night trying to brute force me!

Fucking cunt! Don't you have anything better to do!!

My key is a 32-character encrypted key, and with the ban he can try 3 passwords every 2 hours. Good luck brute forcing it, you bitch!
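To put a rough number on "good luck": a back-of-the-envelope sketch in Python, treating the secret as 32 characters and the rate as 3 guesses per 2 hours, both from the rant. The alphabet size of 62 (letters and digits) is an assumption, not something the rant states.

```python
ALPHABET_SIZE = 62          # assumption: a-z, A-Z, 0-9
KEY_LENGTH = 32             # from the rant
GUESSES_PER_HOUR = 3 / 2    # 3 attempts per 2-hour ban window

# Number of possible secrets and the time needed to try every one of them.
keyspace = ALPHABET_SIZE ** KEY_LENGTH
hours_for_full_search = keyspace / GUESSES_PER_HOUR
years_for_full_search = hours_for_full_search / (24 * 365)

print(f"keyspace: {keyspace:.3e} candidates")
print(f"years to try them all: {years_for_full_search:.3e}")
```

This only covers one IP obeying the ban; an attacker rotating through many addresses multiplies the rate, but not by anything close to enough.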

-

--- GitHub 24-hour outage post mortem ---

As many of you will remember, GitHub fell over earlier this month and cracked its head on the counter top on the way down. For more or less a full 24 hours the repo-wrangling behemoth had inconsistent data being presented to users, slow response times and failing requests during common user actions such as reporting issues and questioning your career choice in code reviews.

It's been revealed in a post-mortem of the incident (link at the end of the article) that DB replication was the root cause of the chaos after a failing 100G network link was being replaced during routine maintenance. I don't pretend to be a rockstar-ninja-wizard DBA, but colleagues I spoke with went a shade whiter when the term "replication" was used. It's hard to predict where a design decision will bite back and leave you untangling the web of lies and misinformation reported by the databases for weeks if not months after everything's gone a tad sideways.

When the link was yanked out of the east coast DC undergoing maintenance, GitHub's "Orchestrator" software did exactly what it was meant to do: it hit the "ohshi" button and failed over to another DC that wasn't reporting any issues. The hitch in the master plan was that when connectivity came back up at the east coast DC, Orchestrator was unable to (un)fail-over back to the east coast DC due to each cluster containing data the other didn't have.

At this point it's reasonable to assume that pants were turning funny colours. Monitoring systems across the board started squealing, firing off messages to engineers demanding they rouse from the land of nod and snap back to a reality that was a bit more "on-fire" than usual. A quick call to Orchestrator's API returned a result set that only contained database servers from the west coast; none of the east coast servers had responded.

Come 11pm UTC (about 10 minutes after the initial pant re-colouring) engineers realised they were well and truly backed into a corner, so the site was flipped into "Yellow" status and internal mechanisms for deployments were locked out. 5 minutes later an Incident Co-ordinator was dragged from their lair by the status change and almost immediately flipped the site into "Red" status, a move I can only hope was accompanied by all the lights going red and klaxons sounding.

Even more engineers were roused from their slumber to help with the recovery effort. By this point hair was turning grey in real time: the fail-over DB cluster had been processing user data for nearly 40 minutes, and every second that passed made the inevitable untangling process exponentially more difficult. Not long after this GitHub made the call to pause webhooks and GitHub Pages builds in an attempt to prevent further data loss, causing disruption to those of us using GitHub as a way of kicking off our deployment processes (myself included, I had to SSH in and run a git pull myself like some kind of savage).

Glossing over several more "And then things were still broken" sections of the post mortem: clever engineers with their heads screwed on the right way successfully executed what I can only imagine was a large, complex and risky plan to untangle the mess and restore functionality. GitHub was picked up off the kitchen floor and promptly placed in a comfy chair with a sweet tea to recover. The enormous backlog of webhooks and Pages builds was caught up with and everything was more or less back to normal.

It goes to show that even the best laid plan rarely survives first contact with the enemy, in this case a failing 100G network link somewhere inside an east coast data center.

Link to the post mortem: https://blog.github.com/2018-10-30-...

-

Last year my school installed MagicBoards (whiteboard with beamer that responds to touch) in every classroom and called itself "ready for the future of media". What they also got is A FUCKING LOW SPEC SERVER RUNNING DEBIAN 6 W/O ANY UPDATES SINCE 2010 WHICH IS DYING CONSTANTLY.

As I'm a nice person I asked the 65 y/o technician (who is also my physics teacher) whether I could help updating this piece of shit.

Teacher: "Naahh, we don't have root access to the server and also we'll get a new company maintaining our servers in two years. And even if we would have the root access, we can't give that to a student."

My head: "Two. Years. TWO YEARS?! ARE YOU FUCKING KIDDING ME YOU RETARDED PIECE OF SHIT?! YOU'RE TELLING ME YOU DON'T HAVE TO INSTALL UPDATES EVEN THOUGH YOU CREATE AN SSH USER FOR EVERY FUCKING STUDENT SO THEY CAN LOGIN USING THEIR BIRTH DATE?! DID YOU EVER HEAR ABOUT SECURITY VULNERABILITIES IN YOUR LITTLE MISERABLE LIFE OR DOES 'CVE-2016-5195' SOUND LIKE RANDOM LETTERS AND NUMBERS TO YOU?! BECAUSE - FUN FACT - THERE ARE TEN STUDENTS WHO ARE IN THE SUDO GROUP IF YOU EVEN KNOW WHAT THAT IS!"

Me (because I want to keep my good grades): "Yes, that sounds alright."

-

I taught my 9yo sister to SSH from my Arch Linux system to an Ubuntu system; she was amazed to see a terminal and Firefox launching remotely. Next I taught her to murder and eat all the memory (I love Linux, but like Batman, one should also know the weaknesses). Now she can rm -rf / --no-preserve-root and the forkbomb. She's amazed at the power of one-liners. Will be teaching her Python as she grew fond of my Raspberry Pi Zero W with blinkt and phat DAC, making rainbows and playing songs via mpg123.

I had her play with Makey Makey when it first came out but it isn't as interesting. Any suggestions that could be good for her learning phase?

-

I was engaged as a contractor to help a major bank convert its servers from physical to virtual. It was 2010, when virtual was starting to eclipse physical. The consulting firm the bank hired to oversee the project had already decided that the conversions would be performed by a piece of software made by another company with whom the consulting firm was in bed.

I was brought in as a Linux expert, and told to, "make it work." The selected software, I found out without a lot of effort or exposure, eats shit. With whipped cream. Part of the plan was to "right-size" filesystems down to new desired sizes, and we found out that was one of the many things it could not do. Also, it required root SSH access to the server being converted. Just garbage.

I was very frustrated by the imposition of this terrible software, and started to butt heads with the consulting firm's project manager assigned to our team. Finally, during project planning meetings, I put together a P2V solution made with a customized Linux Rescue CD, perl, rsync, and LVM.

The selected software took about 45 minutes to do an initial conversion to the VM, and about 25 minutes to do a subsequent sync, which was part of the plan, for the final sync before cutover.

The tool I built took about 5 minutes to do the initial conversion, and about 30-45 seconds to do the final sync, and was able to satisfy every business requirement the selected software was unable to meet, and about which the consultants just shrugged.

The project manager got wind of this, and tried to get them to release my contract. He told management what I had built, against his instructions. They did not release my contract. They hired more people and assigned them to me to help build this tool.

They traveled to me and we refined it down to a simple portable ISO that remained in use as the default method for Linux for years after I left.

Fast forward to 2015. I'm interviewing for the position I have now, and one of the guys on the tech screen call says he worked for the same bank later and used that tool I wrote, and loved it. I think it was his endorsement that pushed me over and got me an offer for $15K more than I asked for.

-

Attempting to access my colleague's NFS directory on his VM, don't know the VM's IP address, hostname or password:

- 2 minutes with nmap to narrow the possible IPs down to ~30

- Ping each and look for the one with a Dell MAC prefix as the rest of us have been upgraded to Lenovo. Find 2 of these, one for the host and one for the virtual machine.

- Try to SSH to each, the one accepting a connection is the Linux VM

- Attempt login as root with the default password, no dice. Decide it's a lost cause.

- Go to get a cup of tea, walk past his desk.

- PostIt note with his root password 😶
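The "look for the Dell MAC prefix" step above is a filter on the first three octets of each MAC address (the vendor prefix, or OUI). A small Python sketch of that filtering follows. The scan results and the `AA:BB:CC` prefix are placeholders, not real vendor assignments, and the helper names are made up for illustration.

```python
def normalize_mac(mac):
    """Lowercase a MAC address and use ':' as the separator."""
    return mac.strip().lower().replace("-", ":")


def hosts_with_vendor(arp_table, oui_prefixes):
    """Return IPs whose MAC starts with one of the given OUI prefixes."""
    prefixes = tuple(normalize_mac(p) for p in oui_prefixes)
    return sorted(
        ip for ip, mac in arp_table.items()
        if normalize_mac(mac).startswith(prefixes)
    )


# Hypothetical scan results: IP -> MAC, e.g. collected from an ARP table.
arp_table = {
    "10.0.0.11": "AA-BB-CC-12-34-56",
    "10.0.0.12": "aa:bb:cc:65:43:21",
    "10.0.0.13": "dd:ee:ff:00:11:22",
}
# Placeholder prefix standing in for the vendor being searched for.
candidates = hosts_with_vendor(arp_table, ["AA:BB:CC"])
print(candidates)
```

Normalizing both sides first matters because tools disagree on case and on `-` versus `:` as the separator.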

FYI this was all allowed by my manager, as my colleague had unpushed critical changes that we needed for the release that day.

-

TL;DR: Bringing up quantum computing is going to be the next catchall for everything and I'm already fucking sick of it.

Actual convo I had:

"You should really secure your AWS instance."

"Isn't my SSH key alone a good enough barrier?"

"There are hundreds of thousands of incidents where people either get hacked or commit it to github."

"Well, I won't"

"Just start using IP/CIDR based filtering, or I will take your instance down."

"But SSH keys are going to be useless in a couple years due to QUANTUM FUCKING COMPUTING, so why wouldn't IP spoofing get even better?"

"Listen motherfucker, I may actually kill you, because today I don't have time for this. The whole point of IP-based security is that you can't look on Shodan for machines with open SSH ports. You want to talk about quantum computing??!! Let's fucking roll motherfucker. I don't think it will be in the next thousand years that we will even come close to fault-tolerant quantum computing.

And even if it did, there have been vulnerabilities in SSH before. How often do you update your instance? I can see the uptime is 395 days, so probably not fucking often! I bet you "don't have anything important anyways" on there! No stored passwords, no stored keys, no nothing, right (she absolutely did)? If you actually think I'm going to back down on this when I sit in the same room as the dude with the root keys to our account, you can kindly take your keyboard and shove it up your ass.

Christ, I bet that the reason you like quantum computing so much is because then you'll be able to get your deepfakes of Miley Cyrus easier, you perv."
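For anyone wondering what the IP/CIDR filtering demanded in that conversation amounts to, here is a minimal Python sketch of the membership check using the standard `ipaddress` module. On AWS the rule would normally live in the instance's firewall rules rather than in application code; the allowlist below is hypothetical and uses documentation-only address ranges.

```python
import ipaddress

# Hypothetical allowlist; these are documentation ranges, not real networks.
ALLOWED_NETWORKS = [
    ipaddress.ip_network("203.0.113.0/24"),
    ipaddress.ip_network("198.51.100.42/32"),
]


def is_allowed(source_ip):
    """Return True if source_ip falls inside any allowlisted network."""
    address = ipaddress.ip_address(source_ip)
    return any(address in network for network in ALLOWED_NETWORKS)


print(is_allowed("203.0.113.50"))
print(is_allowed("192.0.2.10"))
```

A `/32` entry admits exactly one address, which is the usual shape for "only my office IP may reach port 22".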

I previously worked as a Linux/unix sysadmin. There was one app team owning like 4 servers accessible in a very specific way.

* logon to main jumpbox

* ssh to elevated-privileges jumpbox

* logon to regional jumpbox using custom-made ssh alternative [call it fkup]

* try to fkup to the app server to confirm that fkup daemon is dead

* logon to server's mgmt node [aix frame]

* ssh to server directly to confirm sshd is dead too

* access server's console

* place root password request in passwords vault, chase 2 managers via phone for approvals [to log in to the vault, find my request and approve it]

* use root pw to login to server's console, bounce sshd and fkupd

* logout from the console

* fkup into the server to get shell.

That's not the worst part... AIX boxes are stable enough to run for years w/o needing any maintenance, so all this complexity could be bearable.

However, the app team used to log a change request asking to copy a new pdf file into that server every week and drop it to app directory, chown it to app user. Why can't they do that themselves you ask? Bcuz they 'only need this pdf to get there, that's all, and we're not wasting our time to raise access requests and chase for approvals just for a pdf...'

Oh, and all these steps must be repeated each time a sysadmin tries to implement the change request, as all the movements and decisions must be logged and justified.

Each server access takes roughly half an hour. 4 servers -> 2hrs.

So yeah.. Surely getting your accesses sorted out once is so much more time consuming and less efficient than logging a change request for sysadmins every week and wasting 2 frickin hours of my time to just copy a simple pdf for you.. Not to mention that there's only a small team of sysadmins maintaining tens of thousands of servers and every minute we have we spend working. Lunch time takes 10-15 minutes or so.. Almost no time for coffee or restroom. And these guys are saying sparing a few hours to get their own accesses is 'a waste of their time'...

That was the time I discovered skrillex.

-

A vendor gave us what is turning out to be a very stable storage appliance/software, so we're happy for that. But even so, disks fail. So we need an automated way to identify, troubleshoot, isolate, and begin ticketing against disk failures. Vendor promised us a nice REST API. That was six months ago. The temporary process of SSHing (as root) to every single appliance (60-200 per site, dozens of sites) to run vendor storage audit commands remains our go-to means of automation.

-

TL;DR my first vps got hacked, the attacker flooded my server log when I successfully discovered and removed him so I couldn't use my server anymore because the log was taking up all the space on the server.

The first Linux VPS I ever had (when I was a noob and had just started with vServers and Linux in general, obviously) got hacked within 2 months of getting it.

As I didn't know much about securing a Linux server, I made all these "rookie" mistakes: having ssh on port 22, allowing root access via ssh, no key auth...

So, the server got hacked without me even noticing. Some time later, I received a mail from my hoster who said "hello, someone (probably you) is running portscans from your server" of which I had no idea... So I looked in the logs, and BAM, "successful root login" from an IP address which wasn't me.

After I found out the server got hacked, I reinstalled the whole server, changed the port and activated key auth and installed fail2ban.

Some days later, when I finally had everything configured the way I wanted, I noticed I couldn't do anything with that server anymore. Found out there was absolutely no space left on the server. Ran a scan to find files to delete and found a logfile. The ssh logfile. It took up a freaking 95 GB of space (of a total of 100 GB on the server). Turned out the guy who broke into my server got upset I discovered him and bruteforced the shit out of my server, flooding the logs with failed login attempts...

I guess I learnt how to properly secure a server from this attack 💪

-

I think I made someone angry, then sad, then depressed.

I usually shrink a VM before archiving it, to have a backup snapshot as a template. So, workflow: prepare, test, shrink, backup -> template, document.

Shrinking means... Resetting root user to /etc/skel, deleting history, deleting caches, deleting logs, zeroing out free HD space, shutdown.

Coworker wanted to prep a VM for docker (stuff he's experienced with, not me) so we can mass rollout the template for migration after I converted his steps into ansible or the template.

I gave him SSH access, explained the usual stuff and explained in detail the shrinking part (which is a script that must be explicitly called and has a confirmation dialog).

Weeeeellll. Then I had a lil meeting, then the postman came, then someone called.

I had... Around 30 private messages afterwards...

- it took him ~ 15 minutes to figure out that the APT cache was removed, so searching won't work

- setting up APT lists by copy pasta is hard as root when sudo is missing....

- seems like he only uses aliases, as root is a default skel, there were no aliases he has in his "private home"

- Well... VIM was missing, as I hate VIM (personal preferences xD)... Which made him cry.

- He somehow managed to get docker working as "it should" (read: working like he expects it, but that's not my beer).

While reading all this -sometimes very whiney- crap, I went to the fridge and got a beer.

The last part was golden.

He explicitly called the shrink script.

And guess what, after a reboot... History was gone.

And the last message said:

Why did the script delete the history? How should I write the documentation? I dunno what I did!

*sigh* I expected the worst, got the worst and a good laugh in the end.

Guess I'll be babysitting tomorrow someone who's clearly unable to think for himself and / or listen....

Yay... 4h plus phone calls. *cries internally*

-

openSUSE's sarcasm is BRILLIANT!

```
~$ ssh 192.168.122.43 -l root
Last login: Thu Feb 2 19:12:45 2023 from 192.168.122.1
Have a lot of fun...
localhost:~ #
```

-

I remember one day from a few years ago, because I had just got off the phone with a customer calling me way too early! (meaning I was still in my pyjamas)

C: "Hey NNP, why is that software not available?" (He refers to fail2ban on his server)

Me: "It's there" (shows him terminal output)

C: "But I cannot invoke it, there is no fail2ban command! You're lying"

Me: "Well, try that sudoers command I gave you (basically it just tails all the possible log files in /var/log), do you see that last part with fail2ban on it?"

C: "Yeah, but there is only a file descriptor! Nothing is showing! It doesn't do anything."

Me: "That's actually good, it means that fail2ban does not detect any anomalies so it does not need to log it"

C:" How can you be sure!?"

Me: "Shut up and trust me, i am ROOT"

(Fail2ban is a software service that checks log files, like those of your webserver or SSH, to detect floods or brute force attempts. You set it up by defining some "jails" that monitor the things you wish to watch out for. A sane SSH jail watches incoming connection attempts and, after 5 or 10 failed attempts, blocks that user's IP address at firewall level. It uses iptables. It can be used for several other services, like webservers, to detect and act upon flooding attempts. It uses the logfiles of those services to analyze them and to take the appropriate action. Once those jails are defined and the service is up, you should see as little log output as possible from fail2ban.)
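The "analyze the logfile, then act" part can be illustrated with a few lines of Python that count failed SSH password attempts per source IP. This is only a sketch of the idea, not fail2ban's code: the regular expression targets the common OpenSSH "Failed password" message, the function names are made up, and the sample log lines are invented.

```python
import re
from collections import Counter

# Matches sshd lines such as:
#   Failed password for root from 203.0.113.7 port 51234 ssh2
#   Failed password for invalid user admin from 203.0.113.7 port 51235 ssh2
FAILED_LOGIN = re.compile(
    r"Failed password for (?:invalid user )?(?P<user>\S+) "
    r"from (?P<ip>\S+) port \d+"
)


def count_failures(log_lines):
    """Count failed SSH password attempts per source IP."""
    counts = Counter()
    for line in log_lines:
        match = FAILED_LOGIN.search(line)
        if match:
            counts[match.group("ip")] += 1
    return counts


def offenders(log_lines, max_attempts=5):
    """Return IPs with at least max_attempts failures, sorted."""
    counts = count_failures(log_lines)
    return sorted(ip for ip, n in counts.items() if n >= max_attempts)


# Invented sample lines for illustration.
sample = (
    ["sshd[101]: Failed password for root from 203.0.113.7 port 50000 ssh2"] * 5
    + ["sshd[102]: Failed password for invalid user admin from 198.51.100.9 port 50001 ssh2"]
    + ["sshd[103]: Accepted publickey for deploy from 192.0.2.10 port 50002 ssh2"]
)
print(offenders(sample))
```

A quiet fail2ban log, as in the call above, simply means no address has crossed the threshold.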

-

Logged into the vm as root. Saw that there were some security updates pending. Ran apt-get upgrade. Lost all ssh access to the vm. FML

-

You know what is THE stupidest and most fucking annoying thing ever? (And partially my fault) I recently reinstalled Ubuntu on my device, meaning I lost my SSH keys. Today I wanted to make a quick change to a website hosted on digitalocean. Now as per good practice I had disabled the root account and the only way to log in is via SSH or using their web terminal. Obviously I couldn't use SSH so I had no choice but their awful web terminal. Not only is it laggy as balls but it would keep hanging up, meaning I had to close it and start again. As if that wasn't fucking frustrating enough, all I wanted to do was add my new SSH key so I could just use my terminal. But NO you can't fucking paste anything into their terminal! Like what the fuck? How can you not have this basic functionality in 2017???

-

I've been wondering about renting a new VPS to get all my websites sorted out again. I am tired of shared hosting and I am able to manage it as I've been in the past.

With so many great people here, I was trying to put together some of the best practices and resources on how to handle the setup and configuration of a new machine, and I hope this post may help someone while trying to gather the best know-how in the comments. Don't be scared by the lengthy post, please.

The following tips are mainly from @Condor, @Noob, @Linuxxx, and some others were gathered on the webz. Thanks to @Linux for recommending Vultr VPS to me. I would appreciate further feedback from the community on how to improve this and/or change anything that may seem incorrect or should be done in a better way.

1. Clean install CentOS 7 or Ubuntu (I am used to both, do you recommend more? Why?)

2. Install existing updates

3. Disable root login

4. Disable password for ssh

5. RSA key login with strong passwords/passphrases

6. Set correct locale and correct timezone (if different from default)

7. Close all ports

8. Disable and delete unneeded services

9. Install CSF

10. Install knockd (is it worth it at all? Isn't it security through obscurity?)

11. Install Fail2Ban (worth to install side by side with CSF? If not, why?)

12. Install ufw firewall (or keep with CSF/Fail2Ban? Why?)

13. Install rkhunter

14. Install anti-rootkit software (side by side with rkhunter?) (SELinux or AppArmor? Why?)

15. Enable Nginx/CSF rate limiting against SYN attacks

16. For a server to be public, is an IDS / IPS recommended? If so, which and why?

17. Log Injection Attacks in Application Layer - I should keep an eye on them. Is there any tool to help scanning?
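Items 3 and 4 of the checklist above (no root login, no password logins over ssh) can be spot-checked by reading the sshd configuration. Below is a minimal Python sketch that parses config text and reports which of the two settings are not explicitly set to `no`. It assumes a flat config and ignores `Include` and `Match` blocks; the function names and the sample text are made up for illustration.

```python
# Settings the checklist asks for; keywords in sshd_config are case-insensitive.
REQUIRED = {
    "permitrootlogin": "no",
    "passwordauthentication": "no",
}


def parse_sshd_config(text):
    """Parse 'Keyword value' lines; the first occurrence of a keyword wins."""
    settings = {}
    for raw in text.splitlines():
        line = raw.strip()
        if not line or line.startswith("#"):
            continue
        parts = line.split(None, 1)
        if len(parts) == 2:
            settings.setdefault(parts[0].lower(), parts[1].strip().lower())
    return settings


def missing_hardening(text):
    """Return required keywords that are not explicitly set as expected."""
    settings = parse_sshd_config(text)
    return sorted(k for k, v in REQUIRED.items() if settings.get(k) != v)


# Invented example config.
sample = """
# example only
PermitRootLogin no
PasswordAuthentication yes
PubkeyAuthentication yes
"""
print(missing_hardening(sample))
```

The check is deliberately strict: a setting that is commented out or absent is reported too, since the daemon would then fall back to its built-in default.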

If I want to have a server that serves multiple websites, would you add/change anything to the following?

18. Install Docker and manage separate instances with a Dockerfile powered base image with the following? Or should I keep all the servers in one main installation?

19. Install Nginx

20. Install PHP-FPM

21. Install PHP7

22. Install Memcached

23. Install MariaDB

24. Install phpMyAdmin (On specific port? Any recommendations here?)

I am sorry if this is somewhat lengthy, but I hope it may get better and be a good starting guide for a new server setup (eventually become a repo). Feel free to contribute in the comments.

-

Somebody: (whiny) we need something to log into nonprivileged technical accounts without our root ssh proxy. We want this PAM module pam_X.so

me: this stuff is old (-2013) and I can't find any source for it. How about using SSSD with libsss_sudo? It's a modern solution which would allow this with the advantage of using the existing infrastructure.

somebody: NO I WANT THIS MODULE.

me: ok i have it packaged under this name. Could you please test it by manipulating the pam config?

Somebody: WHAT WHY DO I NEED TO MANIPULATE THE PAMCONFIG?

me: because another package on our servers already manipulates the config and I don't want to create trouble by manipulating it.

Somebody: why are we discussing this. I said clearly what we need and we need it NOW.

We have a package that changes the pam config to our needs. We are starting to roll out the config via ansible, but we still use configuration packages on many servers.

For authentication as root we use cyberark for logging the ssh sessions.

The older solution additionally allowed logins into non-root accounts, but it will be shut down in the next few weeks, after over half a year of both systems being active and over half a year of notice that logins into non-privileged accounts will be no more.