Search - "code optimization"

-

Me to IT team: I need a more powerful machine to run my optimization code on.

IT: Okay take this - 64 gigs + i7HQ

Me after an hour:

-

Fuck you, devs who quote Knuth:

"Premature optimization is the root of all evil"

I agree with the spirit of the quote. I agree that long-winded arguments comparing microsecond differences in performance between looping or matching constructs in a language syntax are almost always nonsense. Slightly slower code can even be preferable if it's significantly clearer, safer and easier to maintain.

But two fucking points need to be made to you lazy quickfix hipsters trying to sell your undercooked spaghetti code as "al dente". Just fucking admit that you had no clue what you were doing.

So here we go:

1. If you write neat, correct code in one go, you don't need to spend time optimizing it. It takes time to learn the right patterns, but it will save you time during the rest of your career.

2. If you quote Knuth, at least provide the context: "We should forget about small efficiencies, say about 97% of the time [...] Yet we should not pass up our opportunities in that critical 3%"

YES THAT CRITICAL 3% IS WHERE YOU MESSED UP.

I'll forgive you for disgorging your codevomit into this silly PR.

BUT YOU'RE QUOTING KNUTH IN YOUR DEFENSE?

Premature optimization is the root of all evil... 6300 SQL queries to show a little aggregate graph on the dashboard... HE WOULD FUCKING SLAP YOUR KEYBOARD IN HALF IN YOUR FACE.

-
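To make the "6300 SQL queries for one aggregate graph" complaint in the rant above concrete, here is a minimal sketch of the one-query-per-row pattern next to a single `GROUP BY` query. The `orders` table, its columns, and the sample data are assumptions made up for illustration; the rant does not say what the real dashboard queried.

```python
import sqlite3


def build_demo_db():
    """Create a small in-memory table (hypothetical schema) to query against."""
    conn = sqlite3.connect(":memory:")
    conn.execute(
        "CREATE TABLE orders (id INTEGER PRIMARY KEY, day TEXT, amount INTEGER)"
    )
    rows = [(f"2024-01-{d:02d}", d * 10 + i) for d in range(1, 8) for i in range(5)]
    conn.executemany("INSERT INTO orders (day, amount) VALUES (?, ?)", rows)
    return conn


def totals_one_query_per_row(conn):
    """Anti-pattern: fetch the ids, then issue one extra query per row."""
    totals = {}
    ids = [row[0] for row in conn.execute("SELECT id FROM orders")]
    queries = 1  # the id query above
    for order_id in ids:
        day, amount = conn.execute(
            "SELECT day, amount FROM orders WHERE id = ?", (order_id,)
        ).fetchone()
        queries += 1
        totals[day] = totals.get(day, 0) + amount
    return totals, queries


def totals_single_aggregate(conn):
    """Let the database do the aggregation in one round trip."""
    rows = conn.execute("SELECT day, SUM(amount) FROM orders GROUP BY day").fetchall()
    return dict(rows), 1
```

Both functions return the same per-day totals. The query count of the first one grows with the number of rows, while the second one stays at a single query.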

I sometimes write code by first putting comments and then writing the code.

Example

#fetch data

#apply optimization

#send data back to server

Then I put the code in between the comments so that I can understand the flow.

Does anyone else have this habit?

-
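As a small illustration of the comment-first habit described above, here is the same three-comment outline with code filled in underneath each comment. Everything besides the three comments is an assumption for the sake of the example: the `process` function, the `send` callback, and especially the "optimization" step, which is just a placeholder that removes duplicates.

```python
def process(source, send):
    """Comment-first outline, filled in step by step (all steps are placeholders)."""
    # fetch data
    data = list(source)

    # apply optimization (placeholder: drop duplicates while keeping order)
    optimized = list(dict.fromkeys(data))

    # send data back to server (here: hand it to whatever callback was passed in)
    send(optimized)
    return optimized
```

The comments written first stay in place as section markers, so the flow can still be read top to bottom without reading the code itself.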

Hey, Root? How do you test your slow query ticket, again? I didn't bother reading the giant green "Testing notes:" box on the ticket. Yeah, could you explain it while I don't bother to listen and talk over you? Thanks.

And later:

Hey Root. I'm the DBA. Could you explain exactly what you're doing in this ticket, because I can't understand it. What are these new columns? Where is the new query? What are you doing? And why? Oh, the ticket? Yeah, I didn't bother to read it. There was too much text filled with things like implementation details, query optimization findings, overall benchmarking results, the purpose of the new columns, and I just couldn't care enough to read any of that. Yeah, I also don't know how to find the query it's running now. Yep, I have complete access to the console and DB and query log. Still can't figure it out.

And later:

Hey Root. We pulled your urgent fix ticket from the release. You know, the one that SysOps and Data and even execs have been demanding? The one you finished three months ago? Yep, the problem is still taking down production every week or so, but we just can't verify that your fix is good enough. Even though the changes are pretty minimal, you've said it's 8x faster, and provided benchmark findings, we just ... don't know how to get the query it's running out of the code, or how to check the query logs to find it. So, we just don't know if it's good enough.

Also, we goofed up when deploying and the testing database is gone, so now we can't test it since there are no records. Never mind that you provided snippets to remedy exactly this scenario in the ticket description you wrote three months ago.

And later:

Hey Root: Why did you take so long on this ticket? It has sat for so long now that someone else filed a ticket for it, with investigation findings. You know it's bringing down production, and it's kind of urgent. Maybe you should have prioritized it more, or written up better notes. You really need to communicate better. This is why we can't trust you to get things out.

*twitchy smile*

rant useless people you suck because we are incompetent what's a query log? it's all your fault this is super urgent let's defer it ticket notes too long; didn't read

-

Fuck the memes.

Fuck the framework battles.

Fuck the language battles.

Fuck the titles.

Anybody who has been in this field long enough knows that it doesn't matter if you're Linus fucking Torvalds, there is no human who has lived or ever will live that simultaneously understands, knows, and remembers how to implement, in multiple languages, the following:

- jest mocks for complex React components (partial mocks, full mocks, no mocks at all!)

- token cancellation for asynchronous Tasks in C#

- fullstack CRUD, REST, and websocket communication (throw in gRPC for bonus points)

- database query optimization, seeding, and design

- nginx routing, https redirection

- build automation with full test coverage and environment consideration

- docker container versioning, restoration, and cleanup

- internationalization on both the front AND backends

- secret storage, security audits

- package management, maintenance, and deprecation reviews

- integrating with dozens of APIs

- fucking how to center a div

and that's a _comically_ incomplete list; barely scratches the surface of the full range of what a dev can encounter in a given day of writing software

have many of us probably done one or even all of these at different times? surely.

but does that mean we are supposed to draw up some cookie-cutter solution at a moment's notice like a fucking robot and spit out an answer on a fax sheet?

recruiters, if you read this site (perhaps only the good ones do anyway so it's wasted oxygen), just know that whoever you hire, it's literally the luck of the draw how well they perform during the interview. sure, perhaps some perform better, but you can never know how good someone is until they literally start working at your org, so... have fun with that.

Oh and I almost forgot, again for you recruiters, on top of that list which you probably won't ever understand for the entirety of your lives, you can also add writing documentation, backup scripts, and orchestrating / administrating fucking JIRA or actually any somewhat technical dashboard like a CMS or website, because once again, the devs are the only truly competent ones - and i don't even mean in a technical sense, i mean in a HUMAN sense of GETTING SHIT DONE IN GENERAL.

There's literally 2 types of people in the world: those who sit around drawing flow charts and talking on the phone all day, and those WHO LITERALLY FUCKING BUILD THE WORLD

why don't i just run the whole fucking company at this point? you guys are "celebrating" that you made literally $5 from a single customer and i'm just sitting here coding 12 hours a day like all is fine and well

i'm so ANGRY it's always the same no matter where i go, non-technical people have just no clue, even when you implore them to understand how long things take, they just nod and smile and say "we'll do it the MVP way". sure, fine, you can do that like 2 or 3 times, but not for 6 fucking months until you have a stack of "MVPs" that come toppling down like the garbage they are.

How do you expect to keep the "momentum" of your customers and sales (I hope you can hear the hatred of each of these market words as I type them) if the entire system is glued together with duct tape because YOU wanted to expedite the feature by doing it the EASY way instead of the RIGHT way. god, just forget it, nobody is going to listen anyway, it's like the 5th time in a row in my life

we NEED tests!

we NEED to know our code coverage!

we NEED to design our system to handle large amounts of traffic!

we NEED detailed logging!

we NEED to start building an exception database!

BILBO BAGGINS! I'm not trying to hurt you! I'm trying to help you!

Don't really know what this rant was, I'm just raging and all over the place at the universe. I'm going to bed.

-

#2 Worst thing I've seen a co-worker do?

Back before we utilized stored procedures (and had an official/credentialed DBA), we used embedded/in-line SQL to fetch data from the database.

    var sql = @"Select
        FieldsToSelect
    From
        dbo.Whatever
    Where
        Id = @ID";

In attempts to fix database performance issues, a developer, T, started putting all the SQL on one line of code (some sql was formatted on 10+ lines to make it readable and easily copy+paste-able with SSMS)

var sql = "Select ... From...Where...etc";

His justification was that putting all the SQL on one line makes the code run faster.

T: "Fewer lines of code runs faster, everyone knows that."

Mgmt bought it.

This process took him a few months to complete.

When none of the effort proved to increase performance, T blamed the in-house developed ORM we were using (I wrote it, it was a simple wrapper around ADO.Net with extension methods for creating/setting parameters)

T: "Adding extra layers causes performance problems, everyone knows that."

Mgmt bought it again.

Removing the ORM, again took several months to complete.

By this time, we hired a real DBA and his focus was removing all the in-line SQL to use stored procedures, creating optimization plans, etc (stuff a real DBA does).

In the planning meetings (which I was not a part of), T was selected to lead because of his coding optimization skills.

DBA: "I've been reviewing the execution plans, is all the SQL code on one line? What a mess. That has to be the worst thing I ever saw."

T: "Yes, the previous developer, PaperTrail, is incompetent. If the code was written correctly the first time using stored procedures, or even formatted so people could read it, we wouldn't have all these performance problems."

DBA didn't know me (yet) and I didn't know about T's shenanigans (aka = lies) until nearly all the database perf issues were resolved and T received a recognition award for all his hard work (which also equaled a nice raise).

-
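On T's "fewer lines of code runs faster" claim in the rant above: whitespace between SQL tokens is not significant to the statement's meaning, so the multi-line and one-line forms are the same query. The sketch below shows that with SQLite standing in for the SQL Server database from the story; the table name, column, and sample row are assumptions for illustration only.

```python
import sqlite3

# Readable, multi-line form (easy to copy into a query tool).
MULTI_LINE_SQL = """
Select
    name
From
    whatever
Where
    id = :id
"""

# The same statement squeezed onto one line.
ONE_LINE_SQL = "Select name From whatever Where id = :id"


def normalize_whitespace(sql):
    """Collapse every run of whitespace (including newlines) to one space."""
    return " ".join(sql.split())


def fetch_name(conn, sql, row_id):
    """Run a parameterized query and return the first row."""
    return conn.execute(sql, {"id": row_id}).fetchone()


def demo():
    conn = sqlite3.connect(":memory:")
    conn.execute("CREATE TABLE whatever (id INTEGER PRIMARY KEY, name TEXT)")
    conn.execute("INSERT INTO whatever (id, name) VALUES (1, 'example')")
    return fetch_name(conn, MULTI_LINE_SQL, 1), fetch_name(conn, ONE_LINE_SQL, 1)
```

Both forms return the same row, and collapsing the whitespace of the multi-line string yields exactly the one-line string, which matches the story's outcome that months of reformatting changed nothing measurable.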

I swear I work with mentally deranged lunatics.

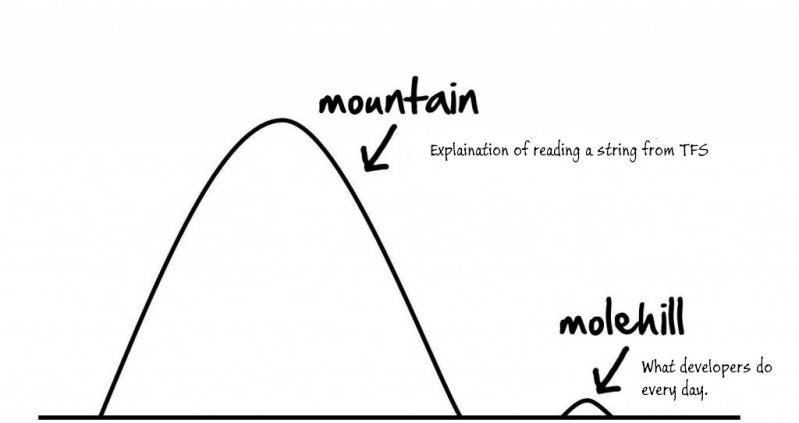

Dev is/was using TFS's web api to read some config stuff..

Ralph: "Ugh..this is driving me crazy. I've spent all day trying to read this string from TFS and it is not working"

Me: "Um, reading a string from a web API is pretty easy, what's the problem?"

Ralph: "I'm executing the call in a 'using' statement and cannot return the stream."

Me: "Why do you need to return a stream? Return the object you are looking for."

Ralph: "It's not that easy. You can return anything from TFS. All you get back is a stream. Could be XML, JSON, text file, image, anything."

Me: "What are you trying to return?"

Ralph: "XML config. If I use XDoc, the stream works fine, but when I step into each byte from the stream, the first three bytes have weird characters. I shouldn't have to skip the first three bytes to get the data. I spent maybe 5 hours yesterday digging around the .Net stream readers used in XDoc trying to figure out how it skips the first few bytes."

Me: "Wow...I would have used XDoc and been done and not worried about that other junk."

Ralph: "But I don't know the stream is XML. That's what I need to figure out."

Me: "What is there to figure out? You do know. It's your request. You are requesting an XML config."

Ralph: "No, the request can be anything. What if Sam requests an image? XDoc isn't going to work."

Me: "Is that a use-case? Sam requesting an image?"

Ralph: "Uh..I don't know...he could"

Me: "Sounds like you're spending a lot of time doing premature optimization. You know what you're accessing TFS for. If it's XML, return XML. If it's an image, return an image. Something new comes along, modify the code to handle it. Eazy peezy."

<boss walks in from a meeting>

Boss: "Whats up guys?"

Ralph: "You know the problem with TFS and not being able to stream the data I had all day yesterday? I finally figured it out. I need to keep this TFS reader simple. I'll start with the XML configs and if we need more readers later, we can add them."

Boss: "Oh yea, always start simple and add complexity only when you need it."

Frack...Frack..Frack...you played the victim yesterday, complaining to anyone who would listen (which I mostly ignored) that reading data from TFS was this monumental problem no one could solve, then you start complaining to me, I don't fall for the BS, and then you tell the boss the solution was your idea?

Lunatic or genius? Wally would be proud.

-
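A note on the "three weird bytes" in the TFS story above: the rant never says what they were, but three unexpected bytes at the start of an XML stream are consistent with a UTF-8 byte order mark (`EF BB BF`), which XML parsers generally skip on their own. Assuming that is what Ralph saw, a minimal sketch of handling it explicitly looks like this. The sample payload and the `name` tag are made up for illustration.

```python
import codecs
import xml.etree.ElementTree as ET
from typing import Optional


def strip_utf8_bom(raw: bytes) -> bytes:
    """Drop a leading UTF-8 byte order mark if one is present."""
    if raw.startswith(codecs.BOM_UTF8):
        return raw[len(codecs.BOM_UTF8):]
    return raw


def read_config_value(raw: bytes, tag: str) -> Optional[str]:
    """Decode the payload (the utf-8-sig codec skips the BOM) and read one element."""
    text = raw.decode("utf-8-sig")
    root = ET.fromstring(text)
    return root.findtext(tag)


# Hypothetical payload: a BOM followed by a tiny XML config.
SAMPLE = codecs.BOM_UTF8 + b"<config><name>demo</name></config>"
```

With this assumption, `read_config_value(SAMPLE, "name")` reads the element without any manual byte skipping, which is the same shortcut as "use XDoc and be done".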

Today I saw some code written by my junior. Basically an Excel export. The Laravel Excel package provides great ways to optimize this.

My junior instead looped over the data 6 times to modify it before giving that data to the export package. We need to export around 50K users.

When I asked him why, he said it works and it's fast, so what's the issue ???

Noob, you have only 100 users in the database and production has 10 million.

Sometimes I just want to kill him.

-
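The export rant above does not show the junior's code, so the following is only a sketch of the general alternative to six separate loops: apply every per-row change in one function and yield rows lazily, so the data is walked once and never fully copied. It is written in Python rather than PHP, and the field names are hypothetical.

```python
def transform_user(user):
    """Apply all per-row changes in one place (field names are hypothetical)."""
    return {
        "id": user["id"],
        "name": user["name"].strip().title(),
        "email": user["email"].lower(),
        "active": "yes" if user["active"] else "no",
    }


def rows_for_export(users):
    """Yield export rows one at a time: a single pass, no intermediate lists."""
    for user in users:
        yield transform_user(user)
```

Because `rows_for_export` is a generator, an exporter that consumes rows one by one only ever holds a single transformed row in memory, which matters far more at production scale than with 100 test users.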

Windows 10 source code apparently leaked!

The main.c file is short tho and unchanged since 95(it's just run in parallel) so I'mma just paste it here:

    void WinMain(int nCmdArgs){
        static int userDisgust = 5; //he/she booted up Win10 so 5 to begin with
        Time time = Time.time;
        while(!crashed){
            Time time2 = Time.time;
            if(time + 3600 < time2){
                ApplyUpdate();
                sleep(500000); //users can stretch, have a tea and overall be healthier, happier and better part of society
                if(restartNecessary){
                    Restart();
                }
                Restart(); // just in case
            }
            SendUserData();
            SendMoreUserData();
            for(int i = 1; i < 99+1; i++){
                RunUserApps();
                UninstallUnnecessaryApps(); // keeps blacklist of apps that John and I don't like
                AnnoyUser(); // added a function that slows the computer down significantly and reduces lifespan of the hardware so it's not just a short term gain
                if(shouldRepeat){
                    //break; -- this caused some issue in one special case and we don't know why so we'll just omit it and add a fancy button elsewhere
                }
                userDisgust++;
            }
            if(userDisgust >= 2147483647) { //mission finished
                Crash();
                crashed = true;
            }
        }
        String error = Windows.GetFullErrorMessage();
        error.cat(Windows.GetRegistersDump());
        error.cat(Windows.GetErrorCode());
        error.cat(Windows.GetStackTrace());
        error.erase(); // so that it doesn't occupy any space and allows the error to be resolved more quickly, release major optimization Update
        BSOD(_T("We're sorry :/"));
    }

-

After a long time just reading your posts, here's my first post:

Just for clarification: I'm studying electrical engineering in Germany. During your time at university, you have to work half a year as an intern to get some practical experience. So I'm in a position where I mainly have to say "yes" to work that is given to me. Also I'm working with a lot of PLC programmers, so I'm nearly the only one who programs non-PLC stuff at the department.

But now it's time for my rant (and also my most satisfying optimization ever). In the job interview for the internship, my task at the company was described as C# programmer. I only programmed C and Python before, but C# looked interesting and so I learned C# from the ground up in the summer before the internship. I quite liked it and I was really happy on my first day of work. Then I was greeted with this message: "I know you are hired as C# programmer, but could you please look into this VBA program, it takes 55 seconds until it finishes its task and that's too slow". So I (mildly angry because I had to do VBA and not C#) started the program and it was really horribly slow (it just created a table with certain contents from a very big imported symbol file). I then opened up the source code and immediately saw bad code. The guy who wrote it basically just clicked on the macro recording button and used the recorded mouse clicks in the source code. The code was like: Click on cell A1 -> copy cell A1 -> move to sheet XY -> click on cell A2 -> paste copied stuff and so on... I never 'programmed' in VBA before, so I used my knowledge of 'real' programming languages to do this task. After using some arrays and for-loops, which did not iterate over all the 1.000.000 unused cells after the last used one, the program took only 3 seconds to finish the new table! Everybody was quite impressed, which led to much more VBA optimization... That was clearly not my goal haha :)

-
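The VBA story above credits two changes: working on in-memory arrays instead of replaying recorded clicks, and not looping over the unused cells after the last used one. The original macro is not shown, so this is only a Python sketch of that second idea, with a plain list standing in for a worksheet column and `str.upper` as a placeholder for whatever the real table-building step did.

```python
def last_used_row(cells):
    """Return the index of the last non-empty cell, or -1 if all are empty."""
    for index in range(len(cells) - 1, -1, -1):
        if cells[index] not in (None, ""):
            return index
    return -1


def build_table(cells):
    """Build the output in memory, touching only the used part of the column."""
    end = last_used_row(cells)
    return [value.upper() for value in cells[: end + 1] if value not in (None, "")]
```

Finding the end of the data once and then slicing means the main loop runs over the handful of used rows rather than every cell in the column.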

Hmm our bundle js is already 1.35Mb maybe I should do something with that.

... Insert 2 hours of frantic webpack magic + babel-preset tweaking, tree-shake code optimization ...

- npm run build

- bundle.js => 1.37Mb

Great Success! I'm going to take a lunch...

-

“Get the code working first, then worry about how to clean and optimize it.”

For me, when I learnt about optimization and how one thing was better than something else, I tended to focus on that. I’d have a picture of that in my mind, and would try to write code as clean, with as few hacks in the middle, and as optimized as I could on the first go, which slowed me the way fuck down.

After he said that to me, I realized I was stupid and just wasting time if I worried about that from the start. Would waste time, and just cause more headaches from the start than it was worth.

——

Oh also another one, I knew never to trust the client from the start but the way he said it was funny. “Never ever trust the fucking client, don’t trust them with anything. I trust Satan more than I trust the client.” 😂

-

I've been writing a complex mutation engine that dynamically modifies compiled C++ code. Now there's a lot of assembly involved, but I got it to work. I finished off writing the last unit test before it was time to port it all to Windows. I switched into a release build, ready to bask in the glory of it all. FUCKING GCC OPTIMIZATIONS BROKE EVERYTHING. I had been doing all my dev in debug mode and now some obscure optimization GCC does in release mode is causing a segfault...somewhere. Just when I thought I was done 😅

-

Used a hashmap instead of an arraylist for 13000+ entries and fetched from the hashmap. It used to take 1500ms to execute and now takes only 500ms. First time I've optimized code and could see the difference in the real world. It's a good feeling.
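The hashmap post above is about replacing a linear scan with a keyed lookup. The original code (presumably Java) is not shown, so this is a Python sketch of the same idea, with a list of pairs playing the arraylist and a `dict` playing the hashmap. The entry data is made up.

```python
def find_in_list(entries, wanted_id):
    """Linear scan: checks entries one by one until the id matches."""
    for entry_id, value in entries:
        if entry_id == wanted_id:
            return value
    return None


def build_index(entries):
    """Build a dict once so later lookups go by key instead of scanning."""
    return {entry_id: value for entry_id, value in entries}


# Hypothetical data set of the size mentioned in the post.
entries = [(i, f"value-{i}") for i in range(13000)]
index = build_index(entries)
```

`find_in_list(entries, 12999)` has to walk the whole list, while `index[12999]` is a single hash lookup on average. The one-off cost is building the index, which pays off when many lookups follow.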

-

So I was finally done with the project, every feature was implemented the way I wanted it to be, every piece of code optimized and well written, I was completely satisfied with my code and didn't feel the urge to add a "last little feature/optimization"...

.

.

.

Then I woke up 😭😭😭

-

>Advanced code optimization class

>Professor : Your midterm exam will be written on paper....with no code.

>Me: ?????????????

-

The morons at work state the obvious like it’s a goddamn epiphany, and get cheered or publicly rebuked based entirely on their standing within company politics.

“While writing code, think of performance. Performance is everyone’s job, and it doesn’t take much effort to write much more performant code.”

> Premature optimization! BOOO! You should be ashamed! Move fast and break things OR ELSE.

“Security isn’t profit driven, but allows us to retain customers and therefore profit. Everyone should be security conscious and work with the security team *before* releases”

> BOOOO! Do your job! Don’t tell devs how to do theirs!

“Being reactive is good, but we should focus more on recovering from outages faster!”

> YAAAY! GENIUS!

ffs.

-

What kind of dumb fuck do you have to be to take a React JS dev job at a company with agile processes if you hate JS through and through, refuse to invest your time in learning the shit you are supposed to do, totally lack any understanding of how things work, give zero fucks about agile, mock it on every occasion, and ask stupid questions that are answered in the first 5 minutes of reading any intro-to-agile blog post? Is it to annoy the shit out of others?

On top of that, trying to reinvent the wheel for every friggin task with some totally unrelated tech or stack that is not used in the company you work for?

And the solution is always half-assed, and I always find a flaw in it just by looking at it, as there are tons of battle-tested solutions or patterns that are better by 100 miles regarding ease of use, security and optimization.

classic php/mysql backend issues - "ooh, the java has garbage collector" - i don't give a fuck about java at this company, give me friggin php solution - 'ooh, that issue in python/Haskell/C#/LUA/basically any other prog language is resolved totally differently and it looks better!' - well it seems that he knows everything besides php!

Yeah we will change all the fucking tech we use in this huge ass app because of your inability to learn to focus on the friggin problem in the friggin language you got the job for.

Guy works with react, asked about thoughts on react - 'i hope it cease to exists along with whole JS ecosystem as soon as possible, because JS is weird'. Great, why did you fucking apply for the job in the first place if it pushes all of your wrong buttons!

Fucking rockstar/ninja developers! (and I don't mean on actual 'rockstar' language devs).

Also constantly talks about game development while we are developing a web-related suite of apps, so why the fuck did you even apply? why?

I just hate that attitude of mocking everything and everyone along with the 'god complex', without really contributing any constructive feedback, combined with half-assedly doing something that someone before him already mastered, and on top of that pretending he is on the same level, but mainly acting as if he were at least 2 levels above, while in reality he just produces bolognese that everybody has to clean up later.

When someone gives constructive feedback with a lengthy argument why and how that solution is wrong on so many levels, he pulls the 'well, i'm still learning that' card.

If I as code monkey can learn something in 2 friggin days including good practices and most of crazy intricacies about that new thing, you as a programmer god should be able to learn it in 2 fucking hours!

Fucking arrogant pricks!

-

(long post is long)

This one is for the .net folks. After evaluating the technology top to bottom and even reimplementing several examples I commonly use for smoke testing new technology, I'm just going to call it:

Blazor is the next Silverlight.

It's just beyond the pale in terms of being architecturally flawed, and yet they're rushing it out as hard as possible to coincide with the .Net 5 rebranding silo extravaganza. We are officially entering round 3 of "sacrifice .Net on the altar of enterprise comfort." Get excited.

Since we've arrived here, I can only assume the Asp.net Ajax fiasco is far enough in the past that a new generation of devs doesn't recall its inherent catastrophic weaknesses. The architecture was this:

1. Create a component as a "WebUserControl"

2. Any time a bound DOM operation occurs from user interaction, send a payload back to the server

3. The server runs the code to process the event; it spits back more HTML

Some client-side js then dutifully updates the UI by unceremoniously stuffing the markup into an element's innerHTML property like so much sausage.

If you understand that, you've adequately understood how Blazor works. There's some optimization like signalR WebSockets for update streaming (the first and only time most blazor devs will ever use WebSockets, I even see developers claiming that they're "using SignalR, Idserver4, gRPC, etc." because the template seeds it for them. The hubris.), but that's the gist. The astute viewer will have noticed a few things here, including the disconnect between repaints, inability to blend update operations and transitions, and the potential for absolutely obliterative, connection-volatile, abusive transactional logic flying back and forth to the server. It's the bring out your dead approach to seeing how much of your IT budget is dedicated to paying for bandwidth and CPU time.

Blazor goes a step further in the server-side render scenario and sends every DOM event it binds to the server for processing. These include millisecond-scale events like scroll, which, at least according to GitHub issues, devs are quickly realizing requires debouncing, though they aren't quite sure how to accomplish that. Since this immediately becomes an issue with tickets saying things like, "scroll event crater server, Ugg need help! You said Blazorclub good. Ugg believe, Ugg wants reparations!" the team chooses a great answer to many problems for the wrong reasons:

gRPC

For those who aren't familiar, gRPC has a substantial amount of compression primarily courtesy of a rather excellent binary format developed by Google. Who needs the Quickie Mart, or indeed a sound markup delivery and view strategy when you can compress the shit out of the payload and ignore the problem. (Shhh, I hear you back there, no spoilers. What will happen when even that compression ceases to cut it, indeed). One might look at all this inductive-reasoning-as-development and ask themselves, "butwai?!" The reason is that the server-side story is just a way to buy time to flesh out the even more fundamentally broken browser-side story. To explain that, we need a little perspective.

The relationship between Microsoft and its enterprise customers is your typical mutually abusive co-dependent relationship. Microsoft goes through phases of tacit disinterest, where it virtually ignores them. And rightly so, the enterprise customers tend to be weaksauce, mono-platform, mono-language types who come to work, collect a paycheck, and go home. They want to suckle on the teat of the vendor that enables them to get a plug and play experience for delivering their internal systems.

And that's fine. But it's also dull; it's the spouse that lets themselves go, it's the girlfriend in the distracted boyfriend meme. Those aren't the people who keep your platform relevant and competitive. For Microsoft, that crowd has always been the exploratory end of the developer community: alt.net, and more recently, the dotnet core community (StackOverflow 2020's most loved platform, for the haters). Alt.net seeded every competitive advantage the dotnet ecosystem has, and dotnet core capitalized on. Like DI? You're welcome. Are you enjoying MVC? Your gratitude is understood. Cool serializers, gRPC/protobuf, 1st class APIs, metadata-driven clients, code generation, micro ORMs, etc., etc., et al. Dear enterpriseur, you are fucking welcome.

Anyways, b2blazor. So, the front end (Blazor WebAssembly) story begins with the average enterprise FOMO. When enterprises get FOMO, they start to Karen/Kevin super hard, slinging around money, privilege, premiere support tickets, etc. until Microsoft, the distracted boyfriend, eventually turns back and says, "sorry babe, wut was that?" You know, shit like managers unironically looking at cloud reps and demanding to know if "you can handle our load!" Meanwhile, any actual engineer hides under the table facepalming and trying not to die from embarrassment.

-
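The Blazor post above mentions that millisecond-scale events like scroll need debouncing before they are sent to the server. As a language-neutral illustration of what debouncing means (this is not Blazor's API, and the function name and timings are assumptions), the sketch below takes a list of event timestamps and returns the times at which a trailing-edge debounced handler would actually fire.

```python
def debounce(event_times_ms, wait_ms):
    """Return the times at which a trailing-edge debounced handler would fire.

    The handler fires wait_ms after an event only if no newer event arrived
    in the meantime; every newer event restarts the wait.
    """
    fire_times = []
    pending = None  # time of the most recent event still waiting to fire
    for t in sorted(event_times_ms):
        if pending is not None and t - pending >= wait_ms:
            # The quiet period elapsed before this event: the timer fired.
            fire_times.append(pending + wait_ms)
        pending = t
    if pending is not None:
        fire_times.append(pending + wait_ms)
    return fire_times
```

For example, a burst of scroll events at 0, 10, 20 and 30 ms followed by another burst at 500 and 510 ms, with a 100 ms wait, collapses six events into two handler calls, which is the reduction in server round trips the post is alluding to.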

I often read articles describing developer epiphanies, where they realized, that it was not Eclipse at fault for a bad coding experience, but rather their lack of knowledge and lack of IDE optimization.

No. Just NO.

Eclipse is just horrendous garbage, nothing else. Here are some examples, where you can optimize Eclipse and your workflow all you like and still Eclipse demonstrates how bad of an IDE it is:

- There is a compilation error in the codebase. Eclipse knows this, as it marks the error. Yet in the Problems tab there is absolutely nothing. Not even after clean. Sometimes it logs errors in the problems tab, sometimes it doesn't. Why? Only the lord knows.

- Apart from the fact that navigating multiple Eclipse windows is plain laughable - why is it that to this day eclipse cannot properly manage windows on multi-desktop setups, e.g. via workspace settings? Example: Use 3 monitors, maximize Eclipse windows of one Eclipse instance on all three. Minimize. Then maximize. The windows are no longer maximized, but spread somehow over the monitors. After reboot it is even more laughable. Windows will be just randomly scrambled and stacked on top of each other. But the fact alone that you cannot navigate individual windows of one instance.. is this 2003?

- When you use a window with e.g. class code on a second monitor and your primary Eclipse window is on the first monitor, then some shortcuts won't trigger. E.g. attempting to select, then run a specific configuration via ALT+R, N, select via arrows, ALT+R won't work. Eclipse cannot deal with ALT+R, as it won't be able to focus the window where the context menus are. One may think this has to do with Eclipse requiring specific perspectives for specific shortcuts, as shortcuts are associated with perspectives - but no. Because the perspective for both windows is the same, namely Java. It is just that shortcuts in Eclipse are not only perspective-bound but also context-sensitive, meaning they require specific IDE inputs to work, regardless of their perspective settings. If that is not provided, the shortcut will do absolutely nothing and Eclipse won't tell you why.

- The fact alone that shortcut-workarounds are required to terminate launches, even though there is a button mapping this very functionality. Yes this is the only aspect in this list where optimizing and adjusting the IDE solves the problem, because I can bind a shortcut for launch selection and then can reliably select and trigger CTRL+F2. Despite that, why I need to first customize shortcuts and bind one that was not specified prior, just to achieve this most basic functionality - terminating a launch - is beyond me.

Eclipse is just overengineered and horrendous garbage. One could think it is being developed by people using Windows XP and a single 1024x768 desktop, as there is NO WAY these issues don't become apparent when regularly working with the IDE.

-

[vent]

I am java dev with 5 yoe at a place which has really good engineering talent.

Was assigned a feature request.

Feature request requires me and one more older dev (in age, not in exp at company) to write the code. My piece is really super complex because of the nature of the problem and involves caching, lazy loading and a ton of other optimizations. Naturally it makes up 90% of the tasks in the feature request. On the other hand, the older dev simply has to write a select query (in fact he only needs to call it since a function is already written).

Older dev takes up all the credit, gives the demo, knows nothing but wrongly answers in meetings with higher ups and was recently awarded employee of qtr.

It looks as if I do the easy work whereas he is the one pulling in all the hard work.

Need advice to justify my work and make others realise its significance, the nuances of the area and its complexity.

Do not expect monetary benefits, just expect credit and recognition for the worth of work I am doing.

-

First real dev project was a calculator for a browser game, that calculates the optimal number/combination of buildings to build. I got bored constantly doing it manually, so I made this program as a fun and useful challenge. It involved basic math, and I did it in VB.

Second one was a stats tracking page for my team in another browser game, that let us easily share and keep track of stuff. It allowed us to minmax our actions and reduced the downtime between actions of different players. HTML, CSS, JS, PHP, MySQL.

Third one was a userscript for the same game that added QoL features and made the game easier to play. JS

Fourth was for the first game, also a QoL feature userscript, that added colors/names, number limit validation to inputs, and optimization calculators built into the interface. It also fixed and improved various UI things. Also had a cheating feature where I could see the line of sight of enemies in the fog of war (lol the dev kept the data on the page even if you couldn't see the enemies on the map), but I didn't use it, it was just fun to code it. JS

From there on, I just continued learning and doing more and more complex shit, and learning new languages.

-

> Moment you thought you did a good job, but ended up failing

> Times the bug wasn't actually your fault

> Times you took the blame for a junior or other dev

> Times someone took the blame for you

> Times you got away with something you shouldn't have.

> Most valuable data loss

> The bug you never fixed

> Most satisfying bug to fix

> Times where a "simple" task turned out to be not so simple

> Debug code left in production?

> Moments you wish you could undo

> Most satisfying optimization

> Have you ever been ranted about? -

After a lot of work I figured out how to build the graph component of my LLM. Figured out the basic architecture, how to connect it in, and how to train it. The design and how-to is 100%.

Ironically generating the embeddings is slower than I expect the training itself to take.

A few extensions of the design will also allow bootstrapped and transfer learning, and as a reach, unsupervised learning but I still need to work out the fine details on that.

Right now because of the design of the embeddings (different from standard transformers in a key aspect), they're slow. Like 10 tokens per minute on an i5 (python, no multithreading, no optimization at all, no training on gpu). I've come up with a modification that takes the token embeddings and turns them into hash keys, which should be significantly faster for a variety of reasons. Essentially I generate a tree of all weights, where the parent nodes are the mean of their immediate child nodes, split the tree on lesser-than-greater-than values, and then convert the node values to keys in a hashmap to make lookup very fast.

Weight comparison can be done either directly through tree traversal, or using normalized hamming distance between parent/child weight keys and the lookup weight.

That last bit is designed already and just needs to be implemented, but it is completely doable.

The design itself is 100% attention free incidentally.

I'm outlining the step by step, only the essentials to train a word boundary detector, noun detector, verb detector, as I already considered prior. But now I'm actually able to implement it.

The hard part was figuring out the *graph* part of the model, not the NN part (if you could even call it an NN, which it doesn't fit the definition of, but I don't know what else to call it). Determining what the design would look like, the necessary graph token types, what function they should have, *how* they use the context, how thats calculated, how loss is to be calculated, and how to train it.

I'm happy to report all that is now settled.

I'm hoping to get more work done on it on my day off, but thats seven days away, 9-10 hour shifts, working fucking BurgerKing and all I want to do is program.

And all because no one takes me seriously due to not having a degree.

Fucking aye. What is life.

If I had a laptop and insurance and taxes weren't a thing, I'd go live in my car and code in a fucking mcdonalds or a park all day and not have to give a shit about any of these other externalities like earning minimum wage to pay 25% of it in rent a month and 20% in taxes and other government bullshit.3 -

In an algorithm class, professor introduced us to some simple sorting algorithms (bubble sort, selection sort, insertion sort, shell sort). He did a quite decent job and most of the students were able to grasp the code and understand the differences in those algorithms. But then he spoiled his whole lecture with one additional slide. There he proposed an optimization: Instead of using a temporary swap variable, we just could use the first array element (or the zeroth element, respectively: the one at index 0) for doing all the swapping. We just had to document that, so that the caller would "leave the first position of the array empty", resulting in "cleaner code". And he did that in the same class where he used Big-O notation to argue about runtime complexity. But having the caller resize the array and shift all the elements by one position did not matter to him at all, because it was "not part of the actual algorithm".1
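To make the hidden cost concrete, here is a rough Python sketch comparing the two variants with bubble sort. The function names are made up for illustration and this is not the professor's actual slide code; it just assumes the "slot 0" convention as described above.

```python
def bubble_sort(items):
    """Sort in place, using a local temporary for each swap."""
    for end in range(len(items) - 1, 0, -1):
        for i in range(end):
            if items[i] > items[i + 1]:
                tmp = items[i]
                items[i] = items[i + 1]
                items[i + 1] = tmp
    return items


def bubble_sort_slot0(padded):
    """Sort padded[1:] in place, using padded[0] as the swap scratch slot."""
    for end in range(len(padded) - 1, 1, -1):
        for i in range(1, end):
            if padded[i] > padded[i + 1]:
                padded[0] = padded[i]
                padded[i] = padded[i + 1]
                padded[i + 1] = padded[0]
    return padded


def sort_via_slot0(items):
    """What the caller has to do to honour the 'leave index 0 empty' rule."""
    padded = [None] + list(items)  # O(n) copy/shift paid by the caller
    bubble_sort_slot0(padded)
    return padded[1:]              # and another O(n) copy to get the data back


print(bubble_sort([3, 1, 2]))
print(sort_via_slot0([3, 1, 2]))
```

The sorting loops are the same; the second variant just moves the one temporary into the array and pushes two extra linear passes onto every caller.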

-

Optimization issue pops out with one of our queries.

> Team leader: You need to do this and that, it's a thing you know NOTHING about but don't worry, the DBA already performed all the preliminary analysis, it's tested and it should work. Just change these 2 lines of code and we're good to go

> ffwd 2 days, ticket gets sent back, it's not working

> Team leader: YOU WERE SUPPOSED TO TEST IT YOUR CHANGE IS NOT WORKING

> IHateForALiving: try it on our production machine and you'll see the exact same error, it's been there for years

> Team leader: BUT YOU WERE SUPPOSED TO TEST IT

Just so we're clear, when I perform a change in the code, I test the changes I made. I don't know in which universe I should be held accountable for tards breaking features 10 years ago, but you can't seriously expect me to test the whole fucking software from scratch every time I add an index to the db. -

When I was an apprentice in a small company, ...

my boss told me that his company would never ship release builds, because the "evil optimization option" is responsible for breaking his code.

My first thought was that it wouldn't make any sense at all. The default option for code optimization is always set to zero.

After investigating his code, I found out that he didn't care to properly initialize his variables. The default compiler option for debug builds implicitly initialized all variables to zero. After that I confronted him with the fact that relying on implicit zero initialization does not conform to the C and C++ standards. He didn't believe what I was saying and he questioned my knowledge of C and C++. He has refused to fix his code to this day, so he keeps building his libraries and applications in debug mode.

Bonus fact: He would never build 64-bit applications, because his serialization functions become incompatible with existing file formats. -

I know by heart that "premature optimization is the root of all evil" but not when I'm coding, no! I prematurely optimize the shit out of my code until I get crippled, and then I undo everything and make a working version no matter how terrible it is.6

-

One of my professors has an interesting philosophy in regards to how software is planned. He makes us forget that we are dealing with a computer and has us write instructions as if we are teaching a human (no optimization, binary, or unnecessary numeral variables). Then we change it into code, then we optimize it. Every time. It freaks me out, but it gets us thinking. Not sure if that is genius or insanity.1

-

If you're working on close to hardware things, make sure you run static analysis, and manually inspect the output of your compiler if you feel something's off - it may be doing something totally different from what you expect, because of optimization and what not. Also, optimizations don't always trigger as expected. Also, sometimes abstractions can cost a fair amount too (C++ std::string c/dtor, for example, dtors in general), more than you'd expect, and in those cases you might want to re-examine your need for them.

Having said all that, also know how to get the compiler to work for you, hand-optimization at the assembly level isn't usually ideal. I've often been surprised by just how well compilers figure out ways to speed up / compactify code, especially when given hints, and it's way better than having a blob of assembly that's totally unmaintainable.

Learnt this from programming MCUs and stuff for hobby/college team/venture, and from messing around with the Haskell compiler and LLVM optimization passes.1 -

Got asked by a coworker for some help, looked at the code, told him it could be written better and showed him an example.

His reply : "It's not easy to read for me"

That was ok but then, now here's the kicker, he asked another coworker to come and see which was easier to read.

You did what mate?

So of course i got pissed and went out for a smoke just to return to see my version being used.

WHY THE FUCK DID YOU ARGUE ABOUT READABILITY IF YOU'RE GOING TO USE IT ANYWAY???

Fuken fuk, never again am i going to offer optimization support to people. -

OK what the actual fuck is going on within this company.

TL;DR: Spaghetti Copy/Pasted code that made me mad because it's just a mess

I just looked into a code file to search for a specific procedure regarding the creation of invoices.

I thought "Oh this is gonna be a quick look-through of like 1000 lines MAX" turns out this script is 11317 fucking lines long and most of its logic is written there multiple times (up to 6-7). And I'm not talking about a simple 10 lines or something. No! Logic of over 300 lines.. copy & pasted over .. and over .. and over?! I mean what the fuck did this guy drink when he wrote this.

Alsooo 10000 of those 11317 lines is ONE FUNCTION.. I kid you not! It's just a gigantic if / else if construct that, as I said before, contains copy-pasted code all over the place.

Sadly my TL thinks that code cleanup / optimization is "not necessary as long as it works" like wtf dude. If anyone wants to ever fix something in this mess or add a new feature they take a few hours longer just to "adjust" to this fucking shit.

This is a nightmare. The worst part: This is not the only script that has shit like this. We got over 150 "modules" (Yeah, we ATTEMPTED something OOP-ish but failed miserably) that sometimes have over 15000 lines which could be easily cut down to 1/3 and/or split into multiple files.

Let's not start about centralization of methods or encoding handling or coding standards or work code review or .. you get the point because there's a character limit for one rant and I guess I'd overshoot that by a lot if I'd start with that. Holy shit I can't wait until my internship is over and I can leave this code-hell!!2 -

for my job I need to know,

Programming, C#, Optimization, Multithreading and Async code, Working certain tools, Reading difficult written code, Understanding, Physics, Networking, Rendering, Codeloops, Memory management, Profiling tools, Being able to make Jira tickets and read Jira tickets. Understanding source control branching, merging, push and pull. bug fixing.

And I write almost 1 line of code a week on average..

I'm a programmer.2 -

This is really annoying. My single manual test takes 10 minutes to run. Can't blame the task because it's big, can't blame me, code optimization is not necessary and won't speed anything up. So here I am, writing a rant while waiting for it to finish1

-

My biggest dev sin is premature optimization. I'll try to produce the best possible code without any need for it. I will waste my time thinking of weird edge cases that could be handled with a simple if-else, but why not tweak the algo to handle them internally.

-

Up all damn night making the script work.

Wrote a non-sieve prime generator.

Thing kept outputting one or two numbers that weren't prime, related to something called carmichael numbers.
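For anyone wondering why Carmichael numbers show up here: the pastebin code isn't reproduced in this post, so the following is only a guess at the general failure mode, not the actual method. If a primality check leans on Fermat's little theorem (a^(n-1) ≡ 1 mod n), a Carmichael number like 561 = 3 * 11 * 17 passes it for every base coprime to it. The helper names below are made up for illustration.

```python
from math import gcd, isqrt


def fermat_passes(n, a):
    """True if n looks prime to the Fermat test with base a."""
    return pow(a, n - 1, n) == 1


def is_prime_trial(n):
    """Slow but reliable reference check by trial division."""
    if n < 2:
        return False
    for d in range(2, isqrt(n) + 1):
        if n % d == 0:
            return False
    return True


n = 561  # smallest Carmichael number
coprime_bases = [a for a in range(2, n) if gcd(a, n) == 1]
fooled_every_time = all(fermat_passes(n, a) for a in coprime_bases)

print(fooled_every_time)   # the Fermat test is fooled by every coprime base
print(is_prime_trial(n))   # trial division correctly reports composite
```

So any Fermat-style shortcut needs an extra check (or a stronger test) to weed these out.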

Any case got it to work, god damn was it a slog though.

Generates next and previous primes pretty reliably regardless of the size of the number

(haven't gone over 31 bit because I haven't had a chance to implement decimal for this).

Don't know if the sieve is the only reliable way to do it. This seems to do it without a hitch, and doesn't seem to use a lot of memory. Don't have to constantly return to a lookup table of small factors or their multiple either.

Technically it generates the primes out of the integers, and not the other way around.

It takes 0.01-0.02 of a second per prime up to around the 100 million mark, and then it gets into the 0.15-1 second range per generation.

At around primes of a couple billion, it's averaging about 1 second per bit to calculate 1. whether the number is prime or not, 2. what the next or last immediate prime is. Although I'm sure there's some optimization or improvement here.

Seems reliable but obviously I don't have the resources to check it beyond the first 20k primes I confirmed.

From what I can see it didn't drop any primes, and it didn't include any errant non-primes.

Code's here:

https://pastebin.com/raw/57j3mHsN

Your gotos should be nextPrime(), lastPrime(), isPrime, genPrimes(up to but not including some N), and genNPrimes(), which generates x amount of primes for you.

Speed limit definitely seems to top out at 1 second per bit for a prime once the code is in the billions, but I don't know if that's the ceiling, again, because decimal needs to be implemented.

I think the core method, in calcY (terrible name, I know), could probably be optimized in some clever way if it's given an adjacent prime and the parameters that were used. There's probably some pattern I'm not seeing, but eh.

I'm also wondering if I can't use those fancy aberrations, 'carmichael numbers' or whatever the hell they are, to calculate some sort of offset, and by doing so, figure out a given primes index.

And all my brain says is "sleep"

But family wants me to hang out, and I have to go talk a manager at home depot into an interview, because wanting to program for a living, and actually getting someone to give you the time of day are two different things.1 -

So I promised a post after work last night, discussing the new factorization technique.

As before, I use a method called decon() that takes any number, like 697 for example, and first breaks it down into the respective digits and magnitudes.

697 becomes -> 600, 90, and 7.

It then factors *those* to give a decomposition matrix that looks something like the following when printed out:

offset: 3, exp: [[Decimal('2'), Decimal('3')], [Decimal('3'), Decimal('1')], [Decimal('5'), Decimal('2')]]

offset: 2, exp: [[Decimal('2'), Decimal('1')], [Decimal('3'), Decimal('2')], [Decimal('5'), Decimal('1')]]

offset: 1, exp: [[Decimal('7'), Decimal('1')]]

Each entry is a pair of numbers representing a prime base and an exponent.
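A minimal sketch of what a decon() along these lines might look like. The real implementation isn't shown in this post, so the `factorize` helper, the use of plain trial division, and the choice to skip zero digits are all assumptions made for illustration.

```python
from decimal import Decimal


def factorize(n):
    """Trial-division factorization into [prime base, exponent] pairs."""
    pairs = []
    p = 2
    while p * p <= n:
        exp = 0
        while n % p == 0:
            n //= p
            exp += 1
        if exp:
            pairs.append([Decimal(p), Decimal(exp)])
        p += 1
    if n > 1:
        pairs.append([Decimal(n), Decimal(1)])
    return pairs


def decon(number):
    """Split a number into digit * magnitude parts and factor each part."""
    digits = str(number)
    rows = []
    for i, ch in enumerate(digits):
        offset = len(digits) - i              # 3 for hundreds, 2 for tens, ...
        value = int(ch) * 10 ** (offset - 1)  # 697 -> 600, 90, 7
        if value:                             # assumption: zero digits are skipped
            rows.append((offset, factorize(value)))
    return rows


for offset, exp in decon(697):
    print(f"offset: {offset}, exp: {exp}")
```

Run on 697, this prints the same three rows as the matrix above.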

Now the idea was that, in theory, at each magnitude of a product, we could actually search through the *range* of the product of these exponents.

So for offset three (600) here, we're looking at

2^3 * 3 ^ 1 * 5 ^ 2.

But actually we're searching

2^3 * 3 ^ 1 * 5 ^ 2.

2^3 * 3 ^ 1 * 5 ^ 1

2^3 * 3 ^ 1 * 5 ^ 0

2^3 * 3 ^ 0 * 5 ^ 2.

2^3 * 3 ^ 0 * 5 ^ 1

etc..

On the basis that whatever it generates may be the digits of another magnitude in one of our target product's factors.

And the first optimization or filter we can apply is to notice that assuming our factors pq=n,

and where p <= q, it will always be more efficient to search for the digits of p (because it's under n^0.5, the square root) than for the larger factor q.

So by implication we can filter out any product of this exponent search that is greater than the square root of n.

Writing this code was a bit of a headache because I had to deal with potentially very large lists of bases and exponents, so I couldn't just use loops within loops.

Instead I resorted to writing a three state state machine that 'counted down' across these exponents, and it just works.
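For readers who want to play with the idea, here is a rough sketch of the exponent search with the square-root filter. It is not the state machine from the post: it swaps in `itertools.product` to walk every exponent combination, assumes each exponent ranges from 0 up to its value in the decomposition, and uses plain ints instead of Decimal. The function name is hypothetical.

```python
from itertools import product
from math import isqrt


def candidate_products(factors, n):
    """Enumerate products of base**e over all exponent combinations,
    keeping only those that do not exceed the square root of n."""
    limit = isqrt(n)
    bases = [base for base, _ in factors]
    exponent_ranges = [range(max_exp + 1) for _, max_exp in factors]
    found = set()
    for exps in product(*exponent_ranges):
        value = 1
        for base, e in zip(bases, exps):
            value *= base ** e
        if value <= limit:
            found.add(value)
    return sorted(found)


# Offset 3 of 697 is 600 = 2^3 * 3^1 * 5^2; isqrt(697) is 26.
print(candidate_products([(2, 3), (3, 1), (5, 2)], 697))
# -> [1, 2, 3, 4, 5, 6, 8, 10, 12, 15, 20, 24, 25]
```

`itertools.product` copes with any number of bases, which sidesteps the loops-within-loops problem, though for very large exponent lists a lazy countdown like the one described above avoids building the full set of candidates.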

And now, in practice this doesn't immediately give us anything useful. And I had hoped this would at least give us *upperbounds* to start our search from, for any particular digit of a product's factors at a given magnitude. So the 12 digit (or pick a magnitude out of a hat) of an example product might give us an upperbound on the 2's exponent for that same digit in our lowest factor q of n.

It didn't work out that way. Sometimes there would be 'inversions', where the exponent of a factor on a magnitude of n, would be *lower* than the exponent of that factor on the same digit of q.

But when I started tearing into examples and generating test data I started to see certain patterns emerge, and immediately I found a way to not just pin down these inversions, but get *tight* bounds on the 2's exponents in the corresponding digit for our product's factor itself. It was like the complications I initially saw actually became a means to *tighten* the bounds.

For example, for one particular semiprime n=pq, this was some of the data:

n - offset: 6, exp: [[Decimal('2'), Decimal('5')], [Decimal('5'), Decimal('5')]]

q - offset: 6, exp: [[Decimal('2'), Decimal('6')], [Decimal('3'), Decimal('1')], [Decimal('5'), Decimal('5')]]

It's almost like the base 3 exponent in [n:7] gives away the presence of 3^1 in [q:6], even

though theres no subsequent presence of 3^n in [n:6] itself.

And I found this rule held each time I tested it.

Other rules, not so much, and other rules still would fail in the presence of yet other rules, almost like a giant switchboard.

I immediately realized the implications: rules had precedence, acted predictable when in isolated instances, and changed in specific instances in combination with other rules.

This was ripe for a decision tree generated through random search.

Another product n=pq, with more data:

q(4)

offset: 4, exp: [[Decimal('2'), Decimal('4')], [Decimal('5'), Decimal('3')]]

n(4)

offset: 4, exp: [[Decimal('2'), Decimal('3')], [Decimal('3'), Decimal('2')], [Decimal('5'), Decimal('3')]]

Suggesting that a nontrivial base 3 exponent (**2 rather than **1) suggests the exponent on the 2 in the relevant

digit of [n], is one less than the same base 2 digital exponent at the same digit on [q]

And so it was clear from the get go that this approach held promise.

From there I discovered a bunch more rules and made some observations.

The bulk of the patterns, regardless of how large the product grows, should be present in the smaller bases (some bound of primes, say the first dozen), because the bulk of exponents for the factorization of any magnitude of a number overwhelmingly lean toward the lower prime bases.

It was as if the entire vulnerability was hiding in plain sight for four+ years, and we'd been approaching factorization all wrong from the beginning, by trying to factor a number, and all its digits at all its magnitudes, all at once, when like addition or multiplication, factorization could be done piecemeal if we knew the patterns to look for.7 -

I'm currently doing a project in Java using JavaFX for the GUI, and after like 6 months I found out we can bind text fields to variables (yeah, dumb me). I've got more than 20 forms, so of course binding is more useful than calling that getText method. I think there are many things like this which would help me optimize my code but which I don't know about, so can anyone tell me more stuff like this?

I'm using MongoDb so I'm currently finding easiest way to make Bson document from textfield values. Any suggestions?

(Sorry, for my bad English)1 -

Why open-source matters: I can remove annoyances like starting in front-facing mode from a smartphone camera software, and hide the button for the "effects" drawer that I never use since I can add Sepia or black/white in post processing should I ever need it.

Both of these annoyances cause missing moments. If the source code of the camera software is open, and if the operating system is rooted, these utter annoyances can be removed.

There are open-source third party applications like "Open Camera", but they lack quick launch support and might have, presumably due to lack of optimization, a two-second shutter lag. Big no. -

How do you implement TDD in reality?

Say you have a system that is TDD ready, not too sure what that means exactly but you can go write and run any unit tests.

And for example, you need to generate a report that uses 2 database tables so:

1. Read/Query

2. Processor logic

3. Output to file

So 1 and 3 are fairly straightforward, they don't change much, just mock the inputs.

But what about #2. There's going to be a lot of functions doing calculations, grouping/merging the data. And from my experience the code gets refactored a lot. Changing requirements, optimization (first round is somewhat just make it work) so entire functions and classes maybe deleted. Even the input data may change. So with TDD wouldn't you end up writing a lot of throwaway code?

A lot of times I don't know exactly what I want or need other than I need a class that can do something like this... but then I might end up throwing the whole thing out and writing a new one once I get a clearer idea of what I or the user wants or needs.

Last week I was building a new REST API, the parameters and usage changed like 3 times. And even now the code is in feasibility/POC testing just to figure out what needs to be used. Do I need more, less parameters, what should they be. I've moved and rewritten a lot of code because "oh this way won't work, need to try this way instead"

All I start with is my boss telling me I need an API that lets users to ... (Very general requirements).10 -

Tried Cursor. Its "Agent" thing has given me a whole new appreciation for my existing codebase. For how every line of code and every micro-decision I make is deliberate. Though it did it in the worst way possible: by "optimizing" my code that I didn't request to be optimized.

I feel like I delegated a surgeon's work to a lawnmower roomba. Yes, both of them do cut stuff, but are they interchangeable?

Cursor's autocomplete is stellar though. Because of how consistent my code is, it almost never fails. It baffles me that the codebase that is consistent enough for the AI autocomplete to never fail somehow causes the same AI, but acting as an agent instead, to completely shit the bed.

My code is consistent because I copy and paste a lot. But, because of how expressive it is (thanks to my zero-framework approach), I only ever need to copy and paste ten lines or so max to do what I need. When I say that I "copy and paste code", what comes to mind? I bet it's acres of boilerplate. Not here.

I'm now applying Orwellian newspeak rules to my naming. For a distinct entity, I create the shortest possible name and try to carry it everywhere I go. Yes, short names will run out, but naming entities differently to avoid conflicts is the job for future me. Premature optimization is the root of all evil, and thinking about variable names in advance is premature optimization too.

For example, if the user entity in the database has lastName field, its variable in imperative code will be the same, and the form input name will be lastName too. Why use "userLastName" and "lastNameInput" or "lastNameField", or even "userLastNameField"? YAGNI.

I'm inching closer and closer to a universal gray goo architecture that can absorb anything. Wait till I replace what I copy and paste with brand new keywords and create a new language. I've already started doing that with the way I write util functions.2 -

!rant

REEEEEEEEEEEEEEEEEEEEEEEEEEEEEEEEEEEEEEEEEEEEEEEEEEEEEEEEEEEE

fuck you Samsung and your "APPLICATION OPTIMIZATION" I need this fucking 2FA code right now1 -

I'm wondering if there is a way to use a Machine Learning algorithm to optimize games like Screeps.com, which is a game you play by writing JS code. Letting the algorithm write human readable code might be too challenging, but optimizing some aspect of the game should be possible, like finding the best scale-up route using reinforcement learning.3

-

figured maybe you can specify dependencies specifically to be used in main.rs (as a standalone executable) or lib.rs (as a library)

since for some reason there's dev-dependencies which specifies they will only be used in tests or whatever

well rust actually doesn't compile code that wasn't ever called / would be run (and nags you about code you have but didn't use anywhere). this means binaries are smaller and all that. i've known about this but seemingly the AI insists nobody needs to specify dependency differences between main.rs and lib.rs because of this quirk of rust compiling

ok well then why the hell is there a dev dependencies and a normal dependencies then?

well no good reason.

- "intentionality" -- how about the clarity of intentionality between being an executable or a library?! no? guess not

- build optimization, because traversing usability graphs can be taxing especially in big projects. ok. again still applies to executable vs library problem

- "community and ecosystem practices". really? we've always done it this way? shove it 🙄😩. you try to innovate and then willfully inherit the problems you solved of other languages... because that's how we've always done it. lame

double standards. so annoying -

Google just integrated Gemini AI into Chrome DevTools, and it’s a groundbreaking upgrade! 🚀

AI-driven debugging, smarter code optimization, and built-in security insights—this is changing the game for developers.

I covered all the hidden gems in my latest newsletter. Check it out here ⤵

🔗 https://techscoop.hashnode.dev/gemi...4 -

Code Optimization 😉

Before doing: After doing the changes, it ll be much easier to maintain😇

While doing : how it was working before 🤔 why I need these lines 🙄

After doing : Never gonna optimize existing file instead will start following the standard hereafter😏

While working thereafter.. 😛 : Instead of following these standards which consumes time, I can make the logic quicker and will optimize later...

Again the cycle repeats 😂 -

https://appleinsider.com/articles/...

Tl;Dr This guy thinks apple is poised to switch the Macs to a custom arm based chip over x86! He's now on my idiot list.

I paraphrase:

"They've made a custom GPU", great! That's as helpful as "The iPad is a computer now", and guess what, Arm Mali GPUs exist! Just because they made their own GPU doesn't make it suitable for desktop graphics (or ML)!

"They released compilation tools right when they released their new platform, so developers could compile for it right away", who would be an idiot not to...

"Because Android apps run on so many platforms, it's not optimized for any. But apple can optimize their apps for a specific user's device", what!? What did I miss? What do you optimize? Sure, you can optimize this, you can optimize that... But the reason why iOS software is "optimized", and runs better/smoother (only on the newest devices of course) is because it's a closed loop, proprietary system (quality control), and because they happen to have done a better job writing some of their code (yes Android desperately needs optimization in numerous places...).

I could go on... "WinTel's market share has slowly plateaued", "tHeY iNtRoDuCeD a FiElD pRoGrAmMaBlE aRrAy"

For apple to switch Macs to arm would be a horrible idea, face it: arm is slower than x86, and was never meant to be faster, it was meant to be for mobile usage, a good power to Wh ratio favoring the Wh side.

Stupid idiot.19 -

Folks should give Clojure a look. It may be Lisp on steroids. Need to wrap your brain around macros to use it properly. It's interpreted so it must be slow, riiight?

Not so, er, fast. Ran across a discussion re C++ vs Clojure running data acquisition at 100 MBPS or better. Bottom line, original Clojure code was sped up 76.6x and blew the doors off the C++ code.

Be warned, a number of optimization steps were required. The end result blew me away. Had a link I wanted to insert but it's not on my phone and I may have re-installed Linux wiping it out. Have looked for the post for hours, no joy.

https://clojureverse.org/t/...6 -

I've recently had an exam, a C++ exam that was about sorting, pointers, etc... The usual. The exam was about Huffman's optimization algorithm along with some pointer problems. He asked for a function to find something in a stack; what I did was write down a class that has a constructor, destructor, SetSize(), Add(), Remove(), Lenght(), etc.. He didn't give me any points for that, why? Because I didn't write down everything like in his book... I had classmates that literally had phones open with his book, he just watched how they copied code and gave them 10/10 points. But nothing to the guy that wrote down 20 pages of code. YES!! On paper, an IT university that asks you to program on fucking paper. Good thing that at the very least I passed.

TL;DR

Teacher has book, I refuse to remember code from it/copy from it, I get lower grades than people that literally copied word for word.

Life is really fair. -

Relatively often the OpenLDAP server (slapd) behaves a bit strange.

While it is a little bit slow (I didn't do a benchmark, but Active Directory seemed to be a bit faster, though it has other quirks and is Windows-only), with a small number of users it's fine. slapd is the reference implementation of the LDAP protocol and I didn't expect it to be much better.

Some years ago slapd migrated to a different configuration style - instead of a configuration file and a required restart after every change made, it now uses an additional database for "live" configuration which also allows the deployment of multiple servers with the same configuration (I guess this is nice for larger setups). Many documentations online do not reflect the new configuration and so using the new configuration style requires some knowledge of LDAP itself.

It is possible to revert to the old file based method but the possibility might be removed by any future version - and restarts may take a little bit longer. So I guess, don't do that?

To access the configuration over the network (only using the command line on the server to edit the configuration is sometimes a bit... annoying) an additional internal user has to be created in the configuration database (while working on the local machine as root you are authenticated over a unix domain socket). I mean, I had to create an administration user during the installation of the service but apparently this is only for the main database...

The password in the configuration can be hashed as usual - but strangely it only accepts hashes of some passwords (a hashed version of "123456" is accepted but not hashes of different passwords, I mean what the...?) so I have to use a single plaintext password... (secure password hashing works for normal user and normal admin accounts).

But even worse are the default logging options: By default (at least on Debian) the log level is set to DEBUG. Additionally, if slapd detects optimization opportunities it writes them to the logs - at least once per connection, if not per query. Together with an application that made a lot of connections and queries (this was not intended and got fixed later) THIS RESULTED IN 32 GB LOG FILES IN ≤ 24 HOURS! - enough to fill up the disk and to crash other services (lessons learned: add more monitoring, monitoring, and monitoring and /var/log should be an extra partition). I mean logging optimization hints is certainly nice - it runs faster now (again, I did not do any benchmarks) - but the verbosity was way too high.

The worst parts are the error messages: When entering a query string with a syntax error, slapd returns the error code 80 without any additional text - the documentation reveals the SO MUCH BETTER meaning: "other error", THIS IS SO HELPFUL... In the end I was able to find the reason why the input was rejected but in my experience most error messages are a little bit more precise.2 -

It often feels like the logic and the equivalent final application code have nothing to do with each other.

Logic: Find the only element in this list that matches criterion, or the first element in this other list, or none. If the first list has multiple matches, fail.

Application: Produce information about the criterion checks for all elements in both lists for info logging. Find any elements in first list that match. Save the number of matches for an optimization that relies on a lot of assumptions about the search criterion that are only ever expressed in doc text. If one, return, if multiple, fail. Otherwise find first match in second list, produce debug hint on why the preceding elements in that list didn't match by aggregating the criterion check info. If multiple matched in second list, check highly specific interdependency, and if absent, produce warning about ambiguity. Return first match if any.

The first can be beautifully expressed as a 5 line iterator transform. The second takes 3 mutable arguments (cache, logger, criterion because it also may cache and log), must compute everything eagerly and has constraints that are neither strictly necessary for a correct implementation nor expressible in the type system.

-

Can anyone tell me what Bun does and what the hype is about? Like, if I have a Vue project, does Bun build it for me and make it faster? Or is it just for backend code optimization?

-

So, I had a friendly debate with my senior dev today while working on a feature.

What do you say is the best approach?

1. Optimize at the time of building the feature.

2. Do the feature work first, then optimize everything at once (let's say on a regular cycle).

-

It seems Java on Linux runs slower than on Windows... The same code takes about 2x longer to run. And, well, this code is for a batch job...

Does anyone have experience with JVM tuning/optimization, or can anyone confirm/disprove that this is the case?

-

My Advent of Code solution is calculating and calculating, which means I clearly did it wrong. But I already spent 4 hours trying to code it in Clojure (OMG, why can't tail call optimization just work there?!?!)

-

SoSoLoveTech: Comprehensive Tech Solutions for Modern Needs

In the dynamic world of technology, finding reliable and innovative tools to streamline tasks is paramount. SoSoLoveTech emerges as a prominent name in the tech landscape, offering a suite of solutions that cater to diverse needs. This platform positions itself as a one-stop destination for practical and user-friendly tools designed to enhance productivity, simplify complex processes, and empower users across the globe.

A Vision of Excellence

At its core, SoSoLoveTech embodies a commitment to providing tech solutions that bridge the gap between complex technological demands and user-friendly implementations. Whether you are a developer, a digital marketer, or a general user, SoSoLoveTech offers resources that can elevate your efficiency and enable seamless execution of tasks.

Features That Define SoSoLoveTech

1. User-Centric Design

The platform is built with the user in mind. Its tools and features are structured to be intuitive, accessible, and effective. Whether you're a tech-savvy professional or someone with minimal technical knowledge, SoSoLoveTech ensures that you can navigate and utilize its offerings without hassle.

2. Diverse Range of Tools

SoSoLoveTech offers a wide array of tools, each designed to solve specific problems. Some of the notable tools include:

Dummy Image Placeholder Generator: Simplifies design workflows by allowing users to generate placeholder images for web and app development projects.

Hex to RGB Color Converter: A precise tool for designers and developers to switch between color formats effortlessly.

YouTube Thumbnail Downloader: Enables users to download high-quality thumbnails from YouTube videos with ease.

QR Code Decoder: A convenient tool for scanning and decoding QR codes to retrieve data quickly.

Bank Details to IFSC Code Converter: Assists in locating IFSC codes based on bank details, simplifying financial transactions.

3. Speed and Reliability

In today’s fast-paced world, speed matters. The tools on SoSoLoveTech are optimized for swift performance, ensuring that users can complete their tasks in record time. Moreover, the platform prioritizes reliability, ensuring uninterrupted access to its resources.

4. SEO-Friendly Resources

For digital marketers and content creators, SoSoLoveTech provides tools that enhance search engine optimization (SEO) efforts. By enabling quick access to critical utilities, the platform becomes a valuable companion for those looking to improve their online visibility.

Exploring Key Tools on SoSoLoveTech

Dummy Image Placeholder Generator

Web developers often require placeholder images during the design phase. The Dummy Image Placeholder Generator on SoSoLoveTech allows users to generate images of specific dimensions, colors, and formats. This tool saves time and ensures consistency in design mockups, making it an indispensable resource for UI/UX designers and developers.

Hex to RGB Color Converter

Designers frequently switch between color models to meet project requirements. This converter simplifies the process of transforming hexadecimal color codes into RGB format. Its precision and ease of use make it a favorite among graphic designers and frontend developers.

YouTube Thumbnail Downloader

A tool for content creators, the YouTube Thumbnail Downloader provides an easy way to save thumbnails in high resolution. Whether for reference or reuse, this tool ensures that users have quick access to video thumbnails without compromising quality.

QR Code Decoder

QR codes are omnipresent in the digital world, from marketing campaigns to payment systems. The QR Code Decoder on SoSoLoveTech allows users to scan and decode these codes effortlessly, revealing the embedded information within seconds.

Bank Details to IFSC Code Converter

For individuals and businesses managing multiple transactions, finding accurate IFSC codes is often a challenge. SoSoLoveTech addresses this with its Bank Details to IFSC Code Converter, ensuring quick access to accurate banking information, thus simplifying transactions.

Why Choose SoSoLoveTech?

SoSoLoveTech distinguishes itself through its commitment to innovation, accessibility, and reliability. Here's why it's a preferred platform for many users:

Free and Accessible Tools: Most tools on the platform are available free of cost, making them accessible to a global audience.

Continuous Updates: The team behind SoSoLoveTech ensures that the tools are regularly updated to meet evolving technological standards.

Comprehensive Documentation: Each tool is accompanied by detailed guides and FAQs, ensuring that users can make the most of its features.

Mobile-Friendly Interface: The platform’s design is responsive, ensuring seamless access across devices, including smartphones and tablets.

Future Prospects

As the digital landscape evolves, SoSoLoveTech is poised to expand its offerings.