Search - "index problem"

-

Made a list of all my friends in Java.

then I run:

list.get(0);

I get: IndexOutOfBoundsException.

What is the problem? Send help.

-

A few weeks ago a client called me. His application contains a lot of data, including email addresses (local part and domain stored separately in an SQL database). The application can filter data based on the domain part of the addresses. He asked me why sub.example.com is not included when he asks the application for example.com. I said: No problem, I can add this feature to the application, but the process will take longer.

Client: No problem, please add this ASAP.

So, the next day I changed some of the SQL queries to lookup using the LIKE operator.

After a week the client called again: The process is really slow, how can this be?

Me: Well, you asked me to filter the subdomains as well. Before, the application could easily find all the domains (SQL index), but now it has to compare every domain to check whether it ends with the domain you are looking for.

Client: Okay, but why is it a lot slower than before?

Me: Do you have a dictionary in your office?

<Client searches for a dictionary, comes back with one>

Me: give me the definition of the word "time"

<Client gives definition of time>

Me: Give me the definition of all words ending with "time"

Client: But, ...

Never heard from him again on this issue :-P
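The dictionary analogy can be made concrete with a small sketch. This is only an illustration, not the client's application: a sorted Python list stands in for the SQL index, and the function names and sample domains are made up. It shows why an exact match can binary-search while an "ends with" match has to touch every entry, and one common workaround (indexing the reversed domain so the suffix becomes a prefix), which the story does not say was used.

```python
from bisect import bisect_left

# Hypothetical sample data; a sorted list plays the role of the SQL index.
DOMAINS = sorted([
    "example.com",
    "example.org",
    "mail.example.com",
    "notexample.com",
    "sub.example.com",
    "test.net",
])


def exact_lookup(sorted_domains, domain):
    """Binary search: like looking up one word in a dictionary."""
    i = bisect_left(sorted_domains, domain)
    return i < len(sorted_domains) and sorted_domains[i] == domain


def suffix_scan(all_domains, domain):
    """'Ends with' match: the sort order does not help, so every entry is checked."""
    return [d for d in all_domains if d == domain or d.endswith("." + domain)]


def build_reversed_index(all_domains):
    """Index the reversed strings so a domain suffix becomes a prefix."""
    return sorted(d[::-1] for d in all_domains)


def suffix_lookup(reversed_index, domain):
    """Binary search to the first candidate, then read the adjacent matches."""
    key = domain[::-1]
    i = bisect_left(reversed_index, key)
    matches = []
    while i < len(reversed_index) and reversed_index[i].startswith(key):
        candidate = reversed_index[i]
        # Accept the domain itself or a true subdomain ("." right after the key).
        if candidate == key or candidate[len(key)] == ".":
            matches.append(candidate[::-1])
        i += 1
    return matches


reversed_index = build_reversed_index(DOMAINS)
print(exact_lookup(DOMAINS, "example.com"))
print(sorted(suffix_scan(DOMAINS, "example.com")))
print(sorted(suffix_lookup(reversed_index, "example.com")))
```

Both suffix functions return the same domains; the difference is that the reversed index only reads the entries next to the match instead of the whole list.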

Got a call while on vacation: the main server is down.

*holy shit* I thought.

As I opened my MacBook, the phone kept ringing and slack was going crazy.

“What should I do? Where is the problem?”, the voices in my head said..

I opened the terminal and tried to get a ssh connection.

Sweat was dripping from my forehead.

“Connected” the terminal said.

“Fuck yeah, the server is up, only the app is not responding”, I thought and opened the log files.

Suddenly, “STOP”, I shouted at the log files, which were being appended to way too fast.

Then I saw it.

TimeOutExceptions..

I added an index to the modification date column, “kill -9”ed the process, started it again and went back to vacation mode 🙂

And of course I was the office hero for a while 💪

For the smart asses: I’m aware that it’s a bad idea to -9 the app process in prod, but it was so overloaded that I was not able to kill it any other way. And we needed that server up again.
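For anyone wondering why one index made the timeouts go away, here is a rough sketch using Python's built-in sqlite3 module. The rant does not say which database or schema was involved, so the `documents` table, the `modified_at` column and the index name are all assumptions for illustration. It asks SQLite for its query plan before and after the index is created.

```python
import sqlite3

# Hypothetical schema: the rant only mentions "the modification date column".
conn = sqlite3.connect(":memory:")
conn.execute(
    "CREATE TABLE documents (id INTEGER PRIMARY KEY, body TEXT, modified_at TEXT)"
)
conn.executemany(
    "INSERT INTO documents (body, modified_at) VALUES (?, ?)",
    [(f"doc {i}", f"2020-01-{(i % 28) + 1:02d}") for i in range(1000)],
)

QUERY = "SELECT id, body FROM documents WHERE modified_at >= ?"


def query_plan(connection, sql, params):
    """Return SQLite's plan description (last column of EXPLAIN QUERY PLAN)."""
    rows = connection.execute("EXPLAIN QUERY PLAN " + sql, params).fetchall()
    return " | ".join(row[-1] for row in rows)


# Without an index the only option is to read the whole table.
plan_before = query_plan(conn, QUERY, ("2020-01-20",))

conn.execute("CREATE INDEX idx_documents_modified_at ON documents (modified_at)")

# With the index the planner can jump straight to the matching range.
plan_after = query_plan(conn, QUERY, ("2020-01-20",))

print(plan_before)
print(plan_after)
```

The exact plan wording differs between SQLite versions, but the first plan is a full table scan and the second one names the new index.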

THE WORST PRANK ATTEMPT

If I remember correctly, it was 2012, April Fools' Day.

My co-worker and I (we were the founders) decided to fool our members (we ran an unofficial support forum for a script). So we made a plan. We registered another account in March and wrote a few useful messages with it, helping people with that fake account (named Root).

On April Fools' Day, we moved the site to a hidden folder (but didn't back it up) and added an index file saying "Hi, I am Root. You know who I am. I hacked this site and deleted all the DBs. Cya later" (in Turkish, of course).

Then we sat in our chairs and began watching the messages come in from Facebook, Skype, WhatsApp, etc.

We acted like we were in trouble and couldn't solve the problem.

At the same time, one of our crew decided to help us :D

So he contacted our server's management crew. They didn't know about the prank either :)

Server management looked into the situation without trying to contact me or my co-founder, and we got an email from them like this:

"Hello tilkibey and impack, we just realized your site is hacked, so we deleted all your FTP files and DBs for safety. Please contact us ASAP."

We were shocked. We contacted them, explained the truth, and asked them to recover our site (because we thought they had backed up the site before deleting everything). But they hadn't backed it up :(

So we restored our last backup, which was nearly 10 days old :(

-

doNotMessWithITTeamInAFuckingProject();

Last night my team and I had a discussion with the project team. We are currently building a Learning Management System for an insurance client. The project has been a mess since the first meeting I joined. It is a consortium project involving multiple companies, and one of the companies had a bad experience with another one. A few years back the two of them broke up badly because of miscommunication (I heard this from a teammate).

Skip, skip... Then, day by day, like every other stereotypical IT project where the client and the business analyst are gathering requirements, the specs are unclear and keep changing. Even as I type this rant I'm sure they will change again.

Then something happened last night: my team leader forced our business analyst to reindex the use case numbers. IMHO this did not need to be done, and I know how tough conditions in the field are for all team members.

So many problems have occurred, and they are the boring ones every stereotypical IT project has: lack of dev resources, lack of project management, and so on. It sucks that these things are happening again.

Finally, my fellow business analyst typed quite a long message in our group saying that he may quit because he is too tired, and that he feels the leader only knows how to push, push, push. The leader never goes to the client site to see what we've done and what we've struggled with so far.

I just don't know why. I know this guy used to be an IT geek too, but now that he is leading a team he acts like he has never done an IT project before. He just pushes people without knowing the context, and to me it sounds like rage pushing!

Damn it, I may quit too. You know we IT guys are never afraid to quit a messed-up situation like this. Even though we are at the bottom level of a project, we hold the main key to development.

I hope he (my leader) reads this rant, realizes what happened, and fixes this broken situation. I don't know what else to say. I'm ready to quit anytime if more chaos happens in the near future.

doNotMessWithITTeamInAFuckingProject();

-

Do things in JS they said, it'll be easier they said...

(After a few WTF's i found the problem, arr.map passes more parameters to parseInt than just the strings. It also passes an array index that gets interpreted as radix) 18

18 -

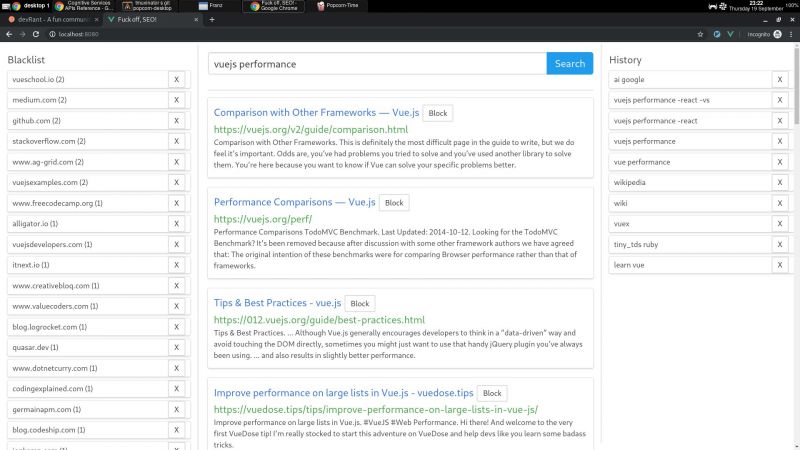
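The mechanics can be imitated outside JS. The sketch below is a rough Python stand-in, not real JavaScript semantics: `js_like_parse_int` and `js_like_map` are made-up helpers that only mimic the two relevant behaviours (map hands the callback the element and its index, and a radix of 0 falls back to base 10 while a radix of 1 is invalid). Real `parseInt` also parses partial prefixes, which this ignores.

```python
import math


def js_like_parse_int(text, radix=0):
    """Rough imitation of parseInt(text, radix) for plain digit strings."""
    if radix == 0:
        radix = 10          # radix 0 is treated as "not given"
    if not 2 <= radix <= 36:
        return math.nan     # e.g. radix 1 is invalid
    try:
        return int(text, radix)
    except ValueError:
        return math.nan     # digits that do not exist in that base


def js_like_map(callback, items):
    """Imitates arr.map: the callback receives (element, index).

    The real map also passes the array as a third argument, which
    parseInt ignores, so it is left out here.
    """
    return [callback(item, index) for index, item in enumerate(items)]


strings = ["10", "10", "10"]

# Passing the parser directly: the index silently becomes the radix.
surprising = js_like_map(js_like_parse_int, strings)

# Wrapping it so only the string is forwarded and the radix is explicit.
intended = js_like_map(lambda text, _index: js_like_parse_int(text, 10), strings)

print(surprising)
print(intended)
```

In actual JS the equivalent fix is to wrap the call so the index never reaches parseInt, for example `arr.map(s => parseInt(s, 10))`.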

I wrote a node + vue web app that consumes bing api and lets you block specific hosts with a click, and I have some thoughts I need to post somewhere.

My main motivation is that the search results I've been getting from the big search engines are badly lacking in quality. The SEO situation right now is very complex, but the bottom line is that there is a lot of white hat SEO abuse.

Commercial companies are fucking up the internet very hard. Search results have become way too profit oriented and thus no longer neutral. Personal blogs are becoming very rare. Information is losing quality and sites are losing identity. The internet is consolidating.

So, I decided to write something to help me give this situation the middle finger.

I wrote this because I consider the ability to block specific sites a basic universal right. If you were ripped off by a website or you just don't like it, then you should be able to block said site from your search results. It's not rocket science.

Google used to have this feature integrated but they removed it in 2013. They also had an extension that did this client side, but they removed it in 2018 too. We're years past the time where Google forgot their "Don't be evil" motto.

AFAIK, the only search engine on earth that lets you block sites is millionshort.com, but if you block too many sites, the performance degrades. And the company that runs it is a for profit too.

There is a third party extension that blocks sites called uBlacklist. The problem is that it only works on google. I wrote my app so as to escape google's tracking clutches, ads and their annoying products showing up in between my results.

That aside, uBlacklist does the same thing as my app, including the limitation that this isn't an actual search engine: it just filters search results after they are generated.

This is far from ideal, because filtering before the results are generated would be much preferable.

But developing a search engine, both indexing and ranking pages, is prohibitively expensive for a single person. Which is sad, but I can't do much about it.

I'm also thinking of implementing the ability to promote certain sites, the opposite of blocking, so these promoted sites would get more priority within the results.

I guess I would have to move the promoted sites between all pages I fetched to the first page/s, but client side.

But this is suboptimal compared to having actual access to the rank algorithm, where you could promote sites in a smarter way, but again, I can't build a search engine by myself.

I'm using mongo to cache the results, so with a click of a button I can retrieve the results of a previous query without hitting bing. So far, a couple of queries don't seem to cause much of a performance or space issue.
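For readers who want a feel for the post-filtering approach, here is a minimal sketch in Python. It is not the actual node + vue app: the result shape (`{"url": ...}`), the function names, and the in-memory dict used in place of mongo are all assumptions made for illustration.

```python
from urllib.parse import urlparse


def host_of(url):
    """Lower-cased hostname of a result URL ('' if it cannot be parsed)."""
    return (urlparse(url).hostname or "").lower()


def filter_and_promote(results, blocked_hosts, promoted_hosts):
    """Drop blocked hosts, then move promoted hosts to the front.

    sorted() is stable, so results keep the engine's order within each group.
    """
    kept = [r for r in results if host_of(r["url"]) not in blocked_hosts]
    return sorted(kept, key=lambda r: host_of(r["url"]) not in promoted_hosts)


_query_cache = {}  # stand-in for the mongo cache described above


def cached_search(query, fetch):
    """Call fetch(query) only the first time a query is seen."""
    if query not in _query_cache:
        _query_cache[query] = fetch(query)
    return _query_cache[query]


def fake_fetch(query):
    """Hypothetical stand-in for the remote search API call."""
    return [
        {"url": "https://spam.example/a"},
        {"url": "https://blog.example/post"},
        {"url": "https://docs.example/x"},
    ]


raw = cached_search("index problem", fake_fetch)
shown = filter_and_promote(raw, {"spam.example"}, {"docs.example"})
print([r["url"] for r in shown])
```

Because both blocking and promoting happen after the results arrive, this has the same limitation discussed above: it can only reorder what the upstream engine already returned.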

On using bing: bing is basically the only reliable API option I could find that was worth the cost for a hobby. Microsoft products are usually my last choice.

Bing is giving me a 7 day free trial of their search API until I register a CC. They offer a free tier, but I'm not sure if that's only for these 7 days. Otherwise, I'm gonna need to pay like 5$.

Paying or not, having to use a CC to use this software I wrote sucks balls.

So far the usage of this app has resulted in me becoming more critical of sites and finding sites of better quality. I think overall it helps me to become a better programmer, all the while having better protection of my privacy.

One downside is that I'm the only one curating for myself, whereas I could benefit from the block/promote lists of other people I trust.

I will git push it somewhere at some point, but it does require some more work:

I would want to add a docker-compose script to make it easy to start, and I didn't write any tests unfortunately (I did use eslint for both apps, though).

The performance is not excellent (the app has not experienced blocks so far, but it does make the coolers spin after a bit) because the algorithms I wrote were very POC.

But it took me some time to write it, and I need to catch some breath.

There are other more open efforts that seem to be more ethical, but they are usually hard to use or just incomplete.

commoncrawl.org is a free index of the web. One problem I found is that it doesn't seem to index everything (for example, it doesn't seem to index the blog of a friend who has been writing for years and is indexed by google).

It also requires knowledge of reading WARC files, which will surely take some time to learn.

It also seems kinda slow to respond.

It is also generated only once a month, and I would still have little idea how to design a pagerank algorithm, let alone code it.

-

Want to make someone's life a misery? Here's how.

Don't base your tech stack on any prior knowledge or what's relevant to the problem.

Instead design it around all the latest trends and badges you want to put on your resume because they're frequent key words on job postings.

Once your data goes in, you'll never get it out again. At best you'll be teased with little crumbs of data but never the whole.

I know, here's a genius idea, instead of putting data into a normal data base then using a cache, lets put it all into the cache and by the way it's a volatile cache.

Here's an idea. For something as simple as a single log, let's make it use a queue that goes into a queue that goes into another queue that goes into another queue, all of which are black boxes. No rhyme or reason, queues are all the rage.

Have you tried: Lets use a new fangled tangle, trust me it's safe, INSERT BIG NAME HERE uses it.

Finally it all gets flushed down into this subterranean cunt of a sewerage system and good luck getting it all out again. It's like hell except it's all shitty instead of all fiery.

All I want is to export one table, a simple log table with a few GB to CSV or heck whatever generic format it supports, that's it.

So I run the export table to file command and off it goes only less than a minute later for timeout commands to start piling up until it aborts. WTF. So then I set the most obvious timeout setting in the client, no change, then another timeout setting on the client, no change, then i try to put it in the client configuration file, no change, then I set the timeout on the export query, no change, then finally I bump the timeouts in the server config, no change, then I find someone has downloaded it from both tucows and apt, but they're using the tucows version so its real config is in /dev/database.xml (don't even ask). I increase that from seconds to a minute, it's still timing out after a minute.

In the end I have to make my own and this involves working out how to parse non-standard binary formatted data structures. It's the umpteenth time I have had to do this.

These aren't some no-name solutions, and it really terrifies me. All this is doing is taking some access logs, storing them in one place, then indexing them by timestamp. These things are all meant to be blazing fast, but grep is often faster. How the hell is such a trivial thing turned into one nightmare after another? Things that should take a few minutes take days of screwing around. I don't have access logs any more because I can't access them anymore.

The terror of this isn't that it's so awful, it's that all the little kiddies doing all this jazz for the first time and using all these shit wipe buzzword driven approaches have no fucking clue it's not meant to be this difficult. I'm replacing entire tens of thousands to million line enterprise systems with a few hundred lines of code that's faster, more reliable and better in virtually every measurable way time and time again.

This is constant. It's not one offender, it's not one project, it's not one company, it's not one developer, it's the industry standard. It's all over open source software and all over dev shops. Everything is exponentially becoming more bloated and difficult than it needs to be. I'm seeing people pull up a hundred cloud instances for things that'll be happy at home with a few minutes to a week's optimisation efforts. Queries that are N*N and only take a few minutes to turn to LOG(N) but instead people renting out a fucking off huge ass SQL cluster instead that not only costs gobs of money but takes a ton of time maintaining and configuring which isn't going to be done right either.

I think most people are bullshitting when they say they have impostor syndrome but when the trend in technology is to make every fucking little trivial thing a thousand times more complex than it has to be I can see how they'd feel that way. There's so bloody much you need to do that you don't need to do these days that you either can't get anything done right or the smallest thing takes an age.

I have no idea why some people put up with some of these appliances. If you bought a dish washer that made washing dishes even harder than it was before you'd return it to the store.

Every time I see the terms enterprise, fast, big data, scalable, cloud or anything of the like I bang my head on the table. One of these days I'm going to lose my fucking tits.

-

I really felt like a badass one time when I managed to recover all projects on our dev server after a full meltdown of the HDD.

We had no recent backups, because our backup server was down for a few months, and our (at the time small) company was in a tight spot on finances, and couldn't get a replacement.

The problem was that the HDD on the backup server had failed. We were also storing all projects on the dev server, along with our local git repos (no GitHub for us at the time), but then the dev server HDD broke too. I tried every piece of data recovery software I could find, until one actually managed to read the raw data from the HDD and store it as a virtual drive. I then used that to try to build another partition index, and it actually worked!

Lost about 10% of the data, but that was enough, as i managed to recover all the git repos and databases...

I don't even remember the tools that got the job done in the end, but that was one hell of a week, and at the end I felt like a true IT God!

True story!

PS: 2 weeks later we had a new backup server, another offsite backup solution and a GitHub account for the company. My salary was delayed to pay for it (the CEO and I both agreed to give up our pay for one month to get them), but it was worth it!

-

I'm getting annoyed by google's new layout. Hovering over the sidebar makes a scrollbar appear, but the scrollbar of the main part of the site disappears. This results in most content moving from its original place. "Let's make a Stylish script to fix this problem," I thought. Guess what: now some things stay where they should be, but the things that were in the right place at first have moved. It also makes the header shorter. I'm getting more and more amazed at how shitty some frontend devs at google are.

To fix one bug, instead of solving the bug they tried to counter the result of the bug.

I do like the z-index of the side menu though (it's 2005, the year youtube was created).

-

My hatred for stack overflow has been increasing incredibly every day.

- Was stuck on a basic problem for the last 16 hours. Finally solved it at 2 am, was very happy.

- It's quite a nice situation with fragments in Android, so I thought of writing an example Q/A on stack overflow.

- It's FUCKING 7 am. It took me an hour to document my code (mind you, I always document in the best way possible, and created a nice readme.md file), and when it came to uploading, it said "your question seems to have mismatch index". Well, fuck it, but okay, I will edit it.

- Edited the whole code, made sure it looked fine in the preview pane, pressed upload.

- Same fucking answer... For the past 3 hours I have been trying to add the question and answer in various possible ways, but that shit doesn't want to accept it. In the end I uploaded the md to my github profile and added a fucking link there...

Please, someone upvote that damn link. I want some rep there so I can ask other authors questions in the comment sections (and, if possible, add my code back into my answer) ;____;

https://stackoverflow.com/questions...

-

The more I work with performance, the less I like generated queries (incl. ORM-driven generators).

Like this other team came to me complaining that some query takes >3minutes to execute (an OLTP qry) and the HTTP timeout is 60 seconds, so.... there's a problem.

Sure, a simple explain analyze suggests that some UIDPK index is queried repeatedly for ~1M times (the qry plan was generated for 300k expected invocations), each Index Scan lasts for 0.15ms. So there you go.. Ofc I'd really like to see more decimal zeroes, rather than just 0.15, but still..
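The "so there you go" is just multiplication. A quick back-of-the-envelope check in Python, using only the figures quoted above (about 1M index scans at 0.15 ms each, a plan costed for 300k scans, and a 60 second HTTP timeout); the variable names are mine:

```python
# Figures quoted in the rant.
actual_scans = 1_000_000      # "~1M times"
planned_scans = 300_000       # "300k expected invocations"
ms_per_scan = 0.15            # "each Index Scan lasts for 0.15ms"
http_timeout_seconds = 60

# Time spent in that one repeated index scan alone.
actual_seconds = actual_scans * ms_per_scan / 1000
planned_seconds = planned_scans * ms_per_scan / 1000

print(f"planned: {planned_seconds:.0f} s, actual: {actual_seconds:.0f} s")
print("over the HTTP timeout:", actual_seconds > http_timeout_seconds)
```

That works out to roughly two and a half minutes for the repeated index scan by itself, which accounts for most of the reported 3+ minutes and is well past the timeout, whereas the planner's 300k estimate would have stayed under it.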

Rewriting the query with a CTE cut down the execution time to pathetic 0.04sec (40ms) w/o any loops in the plan.

I suggested that change to the team and got a big fat NO in response: they cannot make any query changes, since they don't have any control over their queries.

....

*sigh*

....

*sigh*

but down to 0.04sec from 3+ minutes....

*sigh*

alright, let's try to VACUUM ANALYZE, although I doubt this will be of any help. IDK what I'll do if that doesn't change the execution plan :/ Prolly suggest finding a DBA (which they won't, as the client has no € for a DBA).

All this because developers, the very people who should have COMPLETE control over the product's code, have no control over the SQL.

This sucks!

-

This story just left me speechless in any way and i want to share it. tl;dr at the end.

I'm studying computer science in Germany, and in the first of the small classes I noticed... no, I was disturbed by a guy who would just say that the things we were learning at the moment were so easy that the teacher shouldn't even bother explaining them to the class. I don't understand why you would spoil a class that hard... I'm here to learn and listen to the teacher, not to you, little asshole. (We were doing basic stuff like the binary system etc., but still, let us learn.)

So he became unpopular pretty fast.

Fast forward, a few weeks of studying later there was a coding competition where you had to solve different algorithmic problems in a team as fast as possible.

I came there, without a team because my friends aren't interested but I enjoy such tournaments. This guy and me were the only ones without a team and we had to work together.

After him being a total dick for hours i had to watch him code a simple for-loop, that iterates through a sorted array. Nothing special, at this point anyone could do that task in our class so it shouldn't be a problem for him.

He made a simple for-loop and it worked fine, but we figured we had to iterate through the array the other way around.

'Alright', I think. 'Just let the index decr..' 'Pssshhh', he interrupted me and said he knows exactly how to do this.

I was quite impressed when he started to type in 'public int backsort..' in a new line. He tried to resort the array backwards with a quicksort that he then struggled to implement. (Of course we had to implement a quick runtime and we needed that quicksort badly)

I was kind of annoyed but impressed at the same time. I mumbled 'Java has an internal sorting algorithm already' just to amuse myself.

He then used that implementation.

After a few minutes of my pleasure and multiple tests without hitting the requested runtime, I tried to explain to him why we didn't need to sort that array backwards, and he just couldn't believe it.

I hope that he stays more humble after that..

Also, we came in last place, but that's OK :)

tl;dr: Guy spoils the whole class, brags about his untouchable knowledge (when we do things like the binary system). In a competition he has to iterate through a sorted array backwards and tries to implement a sorting algorithm to sort it backwards first. I tell him we could use an already implemented Java method. Then I tell him we could simply iterate by decreasing the index. Mind blown.
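To spell out the point of the story: walking an already sorted array from the last index down to the first gives the same order as re-sorting it in descending order, without paying for a sort. A minimal sketch (in Python rather than the Java used at the competition; the function names and sample values are made up):

```python
def iterate_backwards(sorted_values):
    """Visit a sorted list from the last index down to 0: one pass, no sorting."""
    visited = []
    for i in range(len(sorted_values) - 1, -1, -1):
        visited.append(sorted_values[i])
    return visited


def resort_descending(sorted_values):
    """What the teammate attempted: sort again in descending order, then iterate."""
    return sorted(sorted_values, reverse=True)


values = [1, 3, 4, 8, 15, 23]
print(iterate_backwards(values))
print(resort_descending(values))
```

Both calls produce the same sequence; the first is a single linear pass, while the second does a full sort first.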

TIFU by showing login data during presentation

I was presenting my school project when my teacher asked if I could show him the source code. I said ofc, just let me log in to the FTP server. I completely forgot that it was also shown on the big screen, and a random funny student logged in and tried to replace the index file with a joke file. Of course, he didn't want to do any damage, so he made a backup. But this backup caused the problem, because he connected to the FTP server through Windows Explorer (wtf?), and when he made a copy of the original file, it was named "Copy of xy", but in a localized version, which contains special characters. Because of these characters, some FTP clients couldn't even connect, and others just couldn't interact with the file. No download, no rename, no delete, nothing. After trying out like 8-9 FTP clients, I remembered that I could rename it in PHP. Well, it got deleted instead of renamed, but at least it wasn't there anymore. I then spent another half hour or so searching for a backup version on my computer until I found it.

TL;DR: showed FTP credentials on the big screen during a presentation, a random student accessed and renamed a file, special characters in the name fucked up the server, luckily I found a backup.

-

<iframe src="index5.jsp">

Hello Mr. Tester Guy, At last you finally saw this. I don't know how to say this but I'm sick and tired of your bs!

You wanna know what’s wrong with everything?

I could tell you what’s wrong with this country – or at least I could give you my opinion about it. I could tell you what’s wrong with “the church” (as though all churches are guilty of what some churches do). But I can't fucking tell what your problem is!

Let’s get pragmatic for a second.

I have worked tirelessly for only God knows how long, trying to get this platform running on every browser in this world, even obsolete ones (IE7, 6, 5, 4, 3... to the shithole).

You are heartless!

After all this pain you still rant about index pages not rendering equally fast across all browsers.

You are a demon from hell!

I could go on, but with your degree in Q.A. (like measuring the margin between two images using a tape-rule or looking for typos in a dummy text) you should understand my point fucking cunt.

I realize I just ranted a little, but I’d like to think that this rant is more of an attempt to end the useless practice of ranting about your moronic findings on this platform.

The devil awaits you in hell, bitch!

</iframe>

-

"=$B1*INDEX(A:A,ROW())"

See this absolute bullshit right here?

This fucking cunt of a problem designed by some dippity-do finger-painting fucking jackass at google doesn't work why?

Because for some *god damn reason* they decided it would be a good idea to set it up in a way that when you use absolute cell references in a formula, you can't use functions in the formula too. No, the other side has to be a literal or cell reference, apparently.

Motherfuckers.

-

I started working my new job as a programmer(c#, java, etc.) in a very good programming company.

My first task was to optimise their DB. The DB has indexes and around 3 million rows. The DB is slowwww as fuck.

So I made a Windows service that reorganises the indexes in the DB (depending on blank pages and index fragmentation) each week at a set time.

But as soon as new rows start coming in, the fragmentation of the indexes just skyrockets.

I tried changing the indexes so that there are actually only the indexes we need.

Can anyone help me fix the fragmentation problem so the select queries will be much faster?

Sorry that I don't know the solution, I'm new to this task.

Thank you!

-

Here's some research into a new LLM architecture I recently built and have had actual success with.

The idea is simple, you do the standard thing of generating random vectors for your dictionary of tokens, we'll call these numbers your 'weights'. Then, for whatever sentence you want to use as input, you generate a context embedding by looking up those tokens, and putting them into a list.

Next, you do the same for the output you want to map to; let's call it the decoder embedding.

You then loop and generate a 'noise embedding' for each vector or individual token in the context embedding, then subtract that token's noise value from that token's embedding value or specific weight.

You find the weight index in the weight dictionary (one entry per word or token in your token dictionary) that's closest to this embedding. You use a version of cuckoo hashing where similar values are stored near each other, and the canonical weight values are actually the key of each key:value pair in your token dictionary. When doing this you align all random numbered keys in the dictionary (a uniform sample from 0 to 1), and look at the Hamming distance between the context embedding + noise embedding (called the encoder embedding) and the canonical keys, with each digit from left to right being penalized by some factor f (because digits further left represent larger magnitudes), and then penalize or reward based on the numeric closeness of any given individual digit of the encoder embedding at the same index of any given weight i.

You then substitute the canonical weight in place of this encoder embedding, look up that weight's index (in my earliest version), and then use that index to look up the word|token in the token dictionary and compare it to the word at the current index of the training output to match against.
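To make the steps so far easier to follow, here is a very rough sketch of how I read them, in plain Python. It is not the code from the pastebin: every function name is made up, each token gets a single random number as its weight, and a plain nearest-value search stands in for the cuckoo-hash and digit-weighted Hamming lookup described above.

```python
import random


def build_weights(tokens, seed=0):
    """One random weight per token, sampled uniformly from [0, 1)."""
    rng = random.Random(seed)
    return {token: rng.random() for token in tokens}


def context_embedding(weights, sentence):
    """Look up each input token's weight and put the values into a list."""
    return [weights[token] for token in sentence]


def encoder_embedding(context, noise):
    """Subtract each token's noise value from that token's weight."""
    return [value - n for value, n in zip(context, noise)]


def nearest_token(weights, value):
    """Assumption: plain absolute distance instead of the hashed lookup."""
    return min(weights, key=lambda token: abs(weights[token] - value))


def decode(weights, embedding):
    """Replace each encoder value by the token with the closest canonical weight."""
    return [nearest_token(weights, value) for value in embedding]


tokens = ["the", "cat", "sat", "on", "mat"]
weights = build_weights(tokens)
sentence = ["the", "cat", "sat"]

context = context_embedding(weights, sentence)
zero_noise = [0.0] * len(context)

# With no noise, every value maps straight back to its own token.
print(decode(weights, encoder_embedding(context, zero_noise)))
```

With non-zero noise the decoded tokens can differ from the input, and comparing them against the training output is the part the noise is meant to be fitted to.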

Of course by switching to the hash version the lookup is significantly faster, but I digress.

That introduces a problem.

If each input token matches one output token how do we get variable length outputs, how do we do n-to-m mappings of input and output?

One of the things I explored was using pseudo-markovian processes, where there's one node, A, with two links to itself, B and C.

B is a transition matrix, and A holds its own state. At any given timestep, A may use either the default transition matrix (training data encoder embeddings) with B, or it may generate new ones, using C and a context window of A's prior states.

C can be used to modify A, or it can be used as a noise embedding to modify B.

A can take on the state of both A and C or A and B. In fact we do both, and measure which is closest to the correct output during training.

What this *doesn't* do is give us variable length encodings or decodings.

So I thought a while and said, if we're using noise embeddings, why can't we use multiple?

And if we're doing multiple, what if we used a middle layer, let's call it the 'key', and took its mean over *many* training examples, and used it to map from the variance of an input (query) to the variance and mean of a training or inference output (value).

But how does that tell us when to stop or continue generating tokens for the output?

Posted on pastebin if you want to read the whole thing (DR wouldn't post for some reason).

In any case I wasn't sure if I was dreaming or if I was off in left field, so I went and built the damn thing, the autoencoder part, wasn't even sure I could, but I did, and it just works. I'm still scratching my head.

https://pastebin.com/xAHRhmfH

-

Sometimes in our personal projects we write crazy commit messages. I'll post mine because it's a weekend and I hope someone has a well deserved start. Feel free to post yours: regex out your username, time and hash, and paste chronologically. ISSA THREAD MY DUDES AND DUDETTES

--

Initialization of NDM in Kotlin

Small changes, wiping drive

Small changes, wiping drive

Lottie, Backdrop contrast and logging in implementation

Added Lotties, added Link variable to Database Manifest

Fixed menu engine, added Smart adapter, indexing, Extra menus on home and Calendar

b4 work

Added branch and few changes

really before work

Merge remote-tracking branch 'origin/master'

really before work 4 sho

Refined Search response

Added Swipe to menus and nested tabs

Added custom tab library

tabs and shh

MORE TIME WASTED ON just 3 files

api and rx

New models new handlers, new static leaky objects xd, a few icons

minor changes

minor changesqwqaweqweweqwe

db db dbbb

Added Reading display and delete function

tryin to add web socket...fail

tryin to add web socket...success

New robust content handler, linked to a web socket. :) happy data-ring lol

A lot of changes, no time to explain

minor fixes ehehhe

Added args and content builder to content id

Converted some fragments into NDMListFragments

dsa

MAjor BiG ChANgEs added Listable interface added refresh and online cache added many stuff

MAjor mAjOr BiG ChANgEs added multiClick block added in-fragment Menu (and handling) added in-fragment list irem click handling

Unformatted some code, added midi handler, new menus, added manifest

Update and Insert (upsert) extension to Listable ArrayList

Test for hymnbook offline changing

Changed menuId from int to key string :) added refresh ...global... :(

Added Scale Gesture Listener

Changed Font and size of titlebar, text selection arg. NEW NEW Readings layout.

minor fix on duplicate readings

added isUserDatabase attribute to hymn database file added markwon to stanza views

Home changes :)

Modular hymn Editing

Home changes :) part 2

Home changes :) part 3

Unified Stanza view

Perfected stanza sharing

Added Summernote!!

minor changes

Another change but from source tree :)))

Added Span Saving

Added Working Quick Access

Added a caption system, well text captions only

Added Stanza view modes...quite stable though

From work changes

JUST a [ush

Touch horizontal needs fix

Return api heruko

Added bible index

Added new settings file

Added settings and new icons

Minor changes to settings

Restored ping

Toggles and Pickers in settings

Added Section Title

Added Publishing Access Panel

Added Some new color changes on restart. When am I going to be tired of adding files :)

Before the confession

Theme Adaptation to views

Before Realm DB

Theme Activity :)

Changes to theme Activity

Changes to theme Activity part 2 mini

Some laptop changes, so you wont know what changed :)

Images...

Rush ourd

Added palette from images

Added lastModified filter

Problem with cache response

works work

Some Improvements, changed calendar recycle view

Tonic Sol-fa Screen Added

Merge Pull

Yes colors

Before leasing out to testers

Working but unformated table

Added Seperators but we have a glithchchchc

Tonic sol-fa nice, dots left, and some extras :)))

Just a nice commit on a good friday.

Just a quickie

I dont know what im committing...

-

Math question time!

Okay so I had this idea and I'm looking for anyone who has a better grasp of math than me.

What if instead of searching for prime factors we searched for a number above p?

One with a certain special property. BEAR WITH ME. I know I make these posts a lot and I'm a bit of a shitposter, but I'm being genuine here.

Take this cherry picked number, 697 for example.

Its factors are 17 and 41. It's trivial, but just for demonstration.

If we represented its factors as a bit string, where each bit represents the index that factor occurs at in a list of primes, it looks like this:

1000001000000

When converted back to an integer that number becomes 4160, which we will call f.

And if we do 4160/(2**n) for n = 1, 2, 3, ... until the result has a fractional component, then n in this case will be 7.

And 7 is the index (counting from 1) of our lowest factor 17 (let's call it A, and our highest factor we'll call B) in our primes list.

So the problem is changed from finding a factorization of p, to finding an algorithm that allows you to convert p into f. Once you have f it's a matter of converting it to binary, looking up the indexes of all bits set to 1, and finding the values of those indexes in the list of primes.
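A minimal Python sketch of the encoding described above, just to pin down the bookkeeping. It only covers the easy direction (factors to f, and f back to factors); it says nothing about how to get from p to f without already knowing the factors. The function names are made up for illustration, and the bitmask as described cannot record repeated factors (12 = 2*2*3 would look the same as 6).

```python
def primes_up_to(limit):
    """Sieve of Eratosthenes: all primes <= limit (limit >= 2), ascending."""
    sieve = [True] * (limit + 1)
    sieve[0:2] = [False, False]
    for i in range(2, int(limit ** 0.5) + 1):
        if sieve[i]:
            sieve[i * i :: i] = [False] * len(range(i * i, limit + 1, i))
    return [i for i, is_prime in enumerate(sieve) if is_prime]


def encode(factors, primes):
    """Set bit i (0-based) for every factor that is primes[i]."""
    f = 0
    for q in factors:
        f |= 1 << primes.index(q)
    return f


def decode(f, primes):
    """Read the set bits of f back into the primes they stand for."""
    return [primes[i] for i in range(f.bit_length()) if (f >> i) & 1]


def lowest_factor_position(f):
    """Smallest n for which f / 2**n stops being a whole number."""
    n = 1
    while f % (2 ** n) == 0:
        n += 1
    return n


primes = primes_up_to(50)
f = encode([17, 41], primes)
print(f, bin(f)[2:])              # 4160 1000001000000
print(lowest_factor_position(f))  # 7, and primes[7 - 1] is 17
print(decode(f, primes))          # [17, 41]
```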

I'm working on doing that and if anyone has any insights I'm all ears.

-

Tldr:

Can't fucking figure out why I'm the only one who can't solve a DP problem in code, when my friends and I use the same idea and no one knows why only mine doesn't work...

We are given a task to solve a problem using DP. My friends write their code with the same idea as a solution. Copying the code is not allowed. I follow the same idea but my code won't work. Others look into it, in case they find errors. They can't find any.

The problem (for reference):

Given two fixed lists of ints a = (a_1,a_2,...,a_n) and b = (b_1,b_2,...,b_n), with a_i, b_i >= 0 and a.length == b.length.

We want to maximize the sum of the a_i's chosen. Every a_i is connected with the b_i at the same index. b_i tells us how many indexes of a we have to skip if we choose the corresponding a_i, so (list index of b_i) + b_i + 1 is the position of the next a_i available.

The idea:

Create a new list c with same length as a (or b).

Begin at the end of c and save a_n at the same position in c. Iterate backwards through c and at each position add the current a_i to the max of all previously saved values of c that the b_i-restriction still allows, or use a_i + 0 if the b_i-restriction goes beyond the list.

Return the max value of c.
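For reference, here is one way the idea above could look in Python. This is a sketch of the described recurrence, not anyone's actual submission; `max_sum` and `best` are names invented here. Keeping a running suffix maximum avoids rescanning c at every step. Two places where an implementation of this idea commonly slips are the `+ 1` in the skip and taking only `c[next_index]` instead of the max over everything from `next_index` onward.

```python
def max_sum(a, b):
    """Max sum of chosen a[i], where choosing index i skips the next b[i] indexes."""
    n = len(a)
    c = [0] * n
    # best[i] holds max(c[i:]); best[n] = 0 stands for "nothing left to pick".
    best = [0] * (n + 1)
    for i in range(n - 1, -1, -1):
        next_index = i + b[i] + 1  # first index still available after choosing i
        c[i] = a[i] + (best[next_index] if next_index < n else 0)
        best[i] = max(best[i + 1], c[i])
    return best[0]  # equals max(c) because every a[i] >= 0


print(max_sum([3, 2, 5, 1], [1, 0, 2, 0]))  # 8: take index 0, skip 1, take index 2
```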

How does that not work for me but for the others?? Funny enough, a few given samples work with my code. I'm questioning my coding ability...

-

Duuuuuuuuuuuuuuuuuuuuuuuuuuuuuuuuuuuuuuuuuuuuuuuuuuck off you bloody infamous basterds flattening their fat asses at Microsoft.

I wasted half of my dev day to configure my wcf rest-api to return an enumeration property as string instead of enum index as integer.

There is actually no out-of-the-box attribute option to trigger the unholy built-in json serializer to shit out the currently set enum value as a pile of characters clenched together into a string.

I could vomit of pure happiness.

And yes.

I know about that StringEnumConverter that can be used in the JsonConverter attribute.

Problem is, that this shit isn't triggered, no matter what I do, since the package from Newtonsoft isn't used by my wcf service as a standard serializer.

And there is no simple and stable way to replace the standard json serializer.

Christ, almighty!

:/ -

And now I've run into a whole other issue which is really fucking strange.

Has it ever occurred that an object in Java loses all its values after being put into an array of the same type?

My problem:

[code...]

Mat[] matArray = new Mat[totalFramesOfVideo];

videoCapture.open();

Mat currentFrame = new Mat();

int frameCounter = 0;

while(videoCapture.read()) {

currentFrame = (last read frame as a Mat)

matArray[frameCounter] = currentFrame;

frameCounter++;

}

then, after filling the array and accessing elements, they lose all their object values.
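A guess at what is going on above, not a confirmed diagnosis: if the capture call refills one caller-supplied buffer on every read and empties it when the final read fails, then every array slot holds a reference to that same buffer and all of them end up empty. The sketch below models that in Python with two made-up stand-in classes (`Frame` and `FakeCapture` are hypothetical, not OpenCV types). In OpenCV's Java bindings the analogous fix would be to store `currentFrame.clone()` instead of `currentFrame`.

```python
class Frame:
    """Hypothetical stand-in for a mutable image buffer such as a Mat."""

    def __init__(self, rows=0, cols=0):
        self.rows, self.cols = rows, cols

    def copy(self):
        return Frame(self.rows, self.cols)


class FakeCapture:
    """Hypothetical reader: refills the caller's buffer, empties it at end of video."""

    def __init__(self, frame_count):
        self.remaining = frame_count

    def read(self, frame):
        if self.remaining == 0:
            frame.rows, frame.cols = 0, 0  # assumed behaviour on a failed read
            return False
        self.remaining -= 1
        frame.rows, frame.cols = 1080, 1920
        return True


def collect(frame_count, copy_each_frame):
    capture = FakeCapture(frame_count)
    current = Frame()
    frames = []
    while capture.read(current):
        # Without the copy, every list entry is the very same object.
        frames.append(current.copy() if copy_each_frame else current)
    return [(f.rows, f.cols) for f in frames]


aliased = collect(3, copy_each_frame=False)
copied = collect(3, copy_each_frame=True)
print(aliased)  # [(0, 0), (0, 0), (0, 0)]
print(copied)   # [(1080, 1920), (1080, 1920), (1080, 1920)]
```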

E.g. before, currentFrame's dimensions were 1080*1920, but matArray[index]'s dimensions are suddenly 0*0.

-

Made a custom pop-up for the web app I'm building, then I encountered a problem: when I looked at the pop-up in Safari it doesn't show up properly -_- deym cross-platform compatibility. The background is not grey and I think Safari ignored the z-index in my CSS :(

-

I don't even really know where to start, so I figure I'll just throw this out there and see where it goes.

My daughter is disabled. She's in sports and dance, but it's taken my wife and me years to find out about the organizations she's now in, and that's mostly through word of mouth. Other families have told us because they've had the years of experience that we didn't. And now we're passing the information on to other less experienced families. And that's a problem that everyone we've talked to agrees upon: there's really no good way of discovering what organizations are out there, and what they can help with.

There exist some sites out there like https://challengedathletes.org/reso... which are really just lists of sites, but really nothing more to indicate that this group has wheelchair basketball, that group has adaptive ballet, that kind of thing. So I'm thinking, what if I built a site that provided an index. Searchable, faceted, like Algolia or AWS Cloudsearch. That part I can do. But how would I go about gathering the information? Could I somehow scrape it? If so, how do I organize it? Do I crowdsource by petitioning /r/disability, the Facebook support groups my family belongs to, and other places across the interwebs?

I can design the data model. I can build the webapp. I can make it fast and pretty and easy to use. But how do I get the data?

-

Me and a friend are making a discord bot, and added a warning command to warn users.

All was going well, and when we tested deleting a warning it seemed fine. I then tried to delete all the warnings one by one, and we came across a problem: it won't delete the last warning in the array. The 1st, 2nd, 3rd etc.? That's fine. It's just the last index that isn't working.

Our code is like this:

list = data.warns//a list of JSON format warnings

console.log(list)//shows the value is in the list

console.log(list[index])//shows the value

delete list[index]

console.log(list)//shows its gone

data.warns = list;

console.log(data.warns)//shows the value is still no longer present

data.save().catch(blah blah)//no error is caught, and nothing crashes, it proceeds to send a message to the channel after this

But then the value at index is still there in the database, as if it was never saved, but only if the index is the last item in the array.

We have been stuck on this for over an hour and I now remember why I hate programming.

-

Okay, summary of previous episodes:

1. Worked out a simple syntax to convert markdown into hashes/dictionaries, which is useful for say writing the data in a readable format and then generating a structured representation from it, like say JSON.

2. Added a preprocessor so I could declare and insert variables in the text, and soon enough realized that this was kinda useful for writing code, not just data. I went a little crazy on it and wound up assembling a simple app from this, just a bunch of stuff I wanted to share with friends, all packed into a single output html file so they could just run it from the browser with no setup.

3. I figured I might as well go all the way and turn this into a full-blown RPG for shits and giggles. First step was testing if I could do some simple sprites with SVG to see how far I could realistically get in the graphics department.

Now, the big problem with the last point is that using Inkscape to convert spritesheets into SVG was a bit of trouble, mostly because I am not very good at Inkscape. But I'm just doing very basic pixel art, so my thought process was maybe I can do this myself -- have a small tool handle the spritesheet to SVG conversion. And well... I did just that ;>

# pixel-to-svg:

- Input path-to-image, size.

- grep non-transparent pixels.

- Group pixels into 'islands' when they are horizontally or vertically adjacent.

- For each island, convert each pixel into *four* points because blocks:

· * (px*2+0, py*2+0), (px*2+1, py*2+0), (px*2+1, py*2+1), (px*2+0, py*2+1).

· * Each of the four generated coordinates gets saved to a hash unique to that island, where {coord: index}.

- Now walk that quad-ified output, and for each point, determine whether they are a corner. This is very wordy, but actually quite simple:

· * If a point immediately above (px, py-1) or below (px, py+1) this point doesn't exist in the coord hash, then you know it's either top or bottom side. You can determine whether they are right (px+1, py) or left (px-1, py) the same way.

· * A point is an outer corner if (top || bottom) && (left || right).

· * A point is an inner corner if ! ((top || bottom) && (left || right)) AND there is at least _one_ empty diagonal (TR, TL, BR, BL) adjacent to it, eg: (px+1, py+1) is not in the coord hash.

· * We take note of which direction (top, left, bottom, right) every outer or inner corner has, and every other point is discarded. Yes.
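The first half of the steps above (islands, the four points per pixel, outer corners) can be sketched roughly like this in Python. Everything here is an illustration under assumptions: the function names are invented, the input is taken to be a set of already-filtered opaque pixel coordinates, the `index` stored per coordinate is assumed to be plain insertion order, and inner corners are left out.

```python
from collections import deque


def group_islands(pixels):
    """Split a set of (px, py) pixels into horizontally/vertically connected islands."""
    remaining = set(pixels)
    islands = []
    while remaining:
        start = remaining.pop()
        island, queue = {start}, deque([start])
        while queue:
            x, y = queue.popleft()
            for neighbour in ((x + 1, y), (x - 1, y), (x, y + 1), (x, y - 1)):
                if neighbour in remaining:
                    remaining.remove(neighbour)
                    island.add(neighbour)
                    queue.append(neighbour)
        islands.append(island)
    return islands


def quadify(island):
    """Expand each pixel into four points, stored as {coord: index}."""
    coords = {}
    for px, py in sorted(island):
        for point in ((px * 2, py * 2), (px * 2 + 1, py * 2),
                      (px * 2 + 1, py * 2 + 1), (px * 2, py * 2 + 1)):
            coords.setdefault(point, len(coords))
    return coords


def outer_corners(coords):
    """Points that sit on a top/bottom side and on a left/right side at once."""
    corners = []
    for x, y in coords:
        top_or_bottom = (x, y - 1) not in coords or (x, y + 1) not in coords
        left_or_right = (x - 1, y) not in coords or (x + 1, y) not in coords
        if top_or_bottom and left_or_right:
            corners.append((x, y))
    return sorted(corners)


def to_pixel_space(v):
    """Integer form of v*0.5 + (v & 1)*0.5: maps 2k to k and 2k+1 to k+1."""
    return v // 2 + (v & 1)


pixels = {(0, 0), (1, 0), (3, 3)}
islands = sorted(group_islands(pixels), key=len)
print([sorted(i) for i in islands])        # [[(3, 3)], [(0, 0), (1, 0)]]
print(outer_corners(quadify(islands[1])))  # [(0, 0), (0, 1), (3, 0), (3, 1)]
```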

Finally, we connect the corners of each island to make a series of SVG paths:

- Get starting point, remember starting position. Keep the point in the coord hash, we want to check against it.

- Convert (px, py) back to non-quadrupled coords. Remember how I made four points from each pixel?

· * {px = px*0.5 + (px & 1)*0.5} will transform the coords from quadruple back to actual pixel space.

· * We do this for all coordinates we emit to the SVG path.

- We're on the first point of a shape, so emit "M${px} ${py}" or "m${dx} ${dy}", depending on whether absolute or relative positioning would take up fewer characters.

· * Delta (dx, dy) is just (point - last_position).

- We walk from the starting point towards the next:

· * Each corner has only two possible directions because corners.

· * We always begin with clockwise direction, and invert it if it would make us go backwards.

· * Iter in given direction until you find next corner.

· * Get new point, delete it from the coord hash, then get delta (new_point - last_position).

· * Emit "v${dy}" OR "h${dx}", depending on which direction we moved in.

· * Repeat until we arrive back at the start, at which point you just emit 'Z' to close the shape.

· * If there are still points in the coord hash, then just get the first one and keep going in the __inverse__ direction, else stop.
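As a rough illustration of the emitting part only (not the corner walk itself), here is a Python sketch that takes corners that are already ordered and already in pixel space and turns them into path commands. `emit_shape` is a made-up name. Deltas are taken as new point minus previous point, which is what SVG's relative commands expect, and `last_position` is assumed to be the start point of the previous shape, since that is where the current point sits after a `Z`.

```python
def emit_shape(corners, last_position=None):
    """Turn an ordered list of axis-aligned corner points into SVG path commands."""
    x, y = corners[0]
    move = f"M{x} {y}"
    if last_position is not None:
        relative = f"m{x - last_position[0]} {y - last_position[1]}"
        if len(relative) < len(move):  # pick whichever form is shorter
            move = relative
    parts = [move]
    for nx, ny in corners[1:]:
        dx, dy = nx - x, ny - y
        if dx and dy:
            raise ValueError("corners must be joined by horizontal or vertical moves")
        parts.append(f"h{dx}" if dx else f"v{dy}")
        x, y = nx, ny
    parts.append("Z")  # Z draws the last edge back to the start
    return "".join(parts)


print(emit_shape([(0, 0), (2, 0), (2, 1), (0, 1)]))
# M0 0h2v1h-2Z
print(emit_shape([(10, 10), (11, 10), (11, 11), (10, 11)], last_position=(9, 9)))
# m1 1h1v1h-1Z
```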

I'm simplifying here and there for the sake of """brevity""", but hopefully you get the picture: this fills out the `d` (for 'definition') of a <path/>. Been testing this a bit, likely I've missed certain edge cases but it seems to be working alright for the spritesheets I had, so me is happiee.

Elephant: this only works with bitmaps -- my entire idea was just adding cute little icons and calling it a day, but now... well, now I'm actually invested. I can _probably_ support full color, I'm just not sure what would be a somewhat efficient way to go about it... but it *is* possible.

Anyway, here's first output for retoori maybe uuuh mystery svg tag what could it be?? <svg viewBox="0 0 8 8" height="16" width="16"><path d="M0 2h1v-1h2v1h2v-1h2v1h1v3h-1v1h-1v1h-1v1h-2v-1h-1v-1h-1v-1h-1Z" fill="#B01030" stroke="#101010" stroke-width="0.2" paint-order="stroke"/></svg>

-

So I’ve been recording the same project into dr who bullshit meets the invasion of the body snatchers

If I were sleeping and motivated to finish something some bastardized people would steal it would be so simple to keep progressing through it and it would be contributed allowing people to write their implementations to parse and index Gis formats and integrate a lot of valuable data regarding travel and survivable conditions

Except for all the above mentioned bs which cuts my goddamn motivation down to next to nothing

And no one else seems to see a problem with this across the goddamn board because the pods already sucker their innards out