Search - "malloc"

-

Does anyone else have that one guy or gal you work with that's ALWAYS the one to find the weirdest, inexplicable bugs possible? Yup. That's me. Here's some fun examples.

*Unplugs monitor from laptop, causing kernel panic*

*Mouse moves in reverse when inside canvas*

*Program fails to compile, yet compiler blames a syntax error that doesn't exist*

*malloc on the first line of a program causes a segfault*

And for how the conversation usually goes

Me: "[coworker], mind taking a look at this?"

Coworker: "Sure. This better not be another one of 'your bugs'. ... ... ... Well, if you need me I'll be at my desk."

Me: "So you know what's causing it?"

Coworker: "Nope. I've accepted that you're cursed and you should do the same."

-

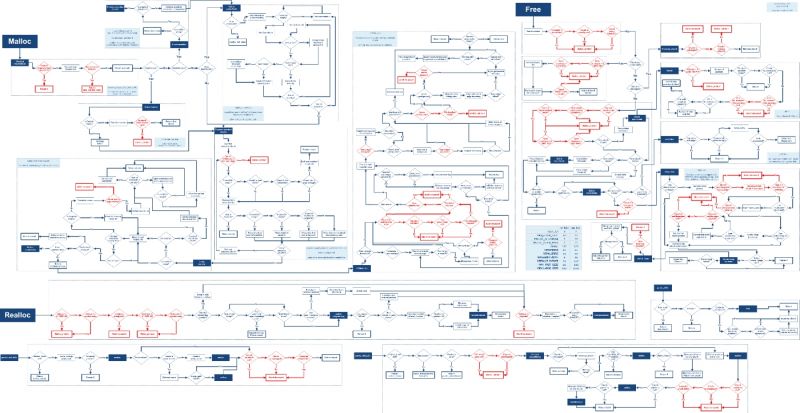

How malloc works? It's easy. Just follow this simple flowchart and you will understand in no time.

-

fork() can fail: this is important

Ah, fork(). The way processes make more processes. Well, one of them, anyway. It seems I have another story to tell about it.

It can fail. Got that? Are you taking this seriously? You should. fork can fail. Just like malloc, it can fail. Neither of them fail often, but when they do, you can't just ignore it. You have to do something intelligent about it.

People seem to know that fork will return 0 if you're the child and some positive number if you're the parent -- that number is the child's pid. They sock this number away and then use it later.

Guess what happens when you don't test for failure? Yep, that's right, you probably treat "-1" (fork's error result) as a pid.

That's the beginning of the pain. The true pain comes later when it's time to send a signal. Maybe you want to shut down a child process.

Do you kill(pid, signal)? Maybe you do kill(pid, 9).

Do you know what happens when pid is -1? You really should. It's Important. Yes, with a capital I.

...

...

...

Here, I'll paste from the kill(2) man page on my Linux box.

If pid equals -1, then sig is sent to every process for which the calling process has permission to send signals, except for process 1 (init), ...

See that? Killing "pid -1" is equivalent to massacring every other process you are permitted to signal. If you're root, that's probably everything. You live and init lives, but that's it. Everything else is gone gone gone.

Do you have code which manages processes? Have you ever found a machine totally dead except for the text console getty/login (which are respawned by init, naturally) and the process manager? Did you blame the oomkiller in the kernel?

It might not be the guilty party here. Go see if you killed -1.

Unix: just enough potholes and bear traps to keep an entire valley going.

Source: https://rachelbythebay.com/w/2014/...

-

Someone on a C++ learning and help discord wanted to know why the following was causing issues.

char * get_some_data() {
    char buffer[1000];
    init_buffer(&buffer[0]);
    return &buffer[0];
}

I told them they were returning a pointer to a stack allocated memory region. They were confused, didn't know what I was talking about.

I pointed them to two pretty decently written and succinct articles, the first about stack vs. heap, and the second describing the theory of ownership and lifetimes. I told them to give both a read, to try to understand them as best as possible, and to ping me with any questions. Then I promised to explain their exact issue.

Silence for maybe five minutes. They disregarded the articles and posted other code saying "maybe it's because of this...". I quickly pointed them back at their original code (the above) and said this is 100% an issue you're facing. "Have you read the articles?"

"Nope" they said, "I just skimmed through them, can you tell me what's wrong with my code?"

Someone else chimed in and said "you need to just use malloc()." In a C++ room, no less.

I said "@OtherGuy please don't blindly instruct people to allocate memory on the heap if they do not understand what the heap is. They need to understand the concepts and the problems before learning how C++ approaches the solution."

I was quickly PM'd by one of the server's mods and told that I was being unhelpful and that I needed to reconsider my tone.

Fuck this industry. I'm getting so sick of it.

-

WASM was a mistake. I just wanted to learn C++ and have fast code on the web. Everyone praised it. No one mentioned that it would double or quadruple my development time. That it would cause me to curse repeatedly at the screen until I wanted to harm myself.

The problem was never C++, which was a respectable if long-winded language. No no no. The problem was the lack of support for 'objects' or 'arrays' as parameters or return types. Anything of any complexity lives on one giant Float32Array which must surely bring a look of disgust from every programmer on this muddy rock. That is, one single array variable that you re-use for EVERYTHING.

Have a color? Throw it on the array. 10 floats in an object? Push it on the array - and split off the two bools via dependency injection (why do I have 3-4 line function parameter lists?!). Have an image with 1,000,000 floats? Drop it in the array. Want to return an array? Provide a malloc ptr into the code and write to it, then read from that location in JS after running the function, modifying the array as a side effect.

My- hahaha, my web worker has two images it's working with, calculations for all the planets, sun and moon in the solar system, and a bunch of other calculations I wanted offloaded from the main thread... they all live in ONE GIANT ARRAY. LMFAO. If I want to find an element? I have to know exactly where to look or else, good luck finding it among the millions of numbers on that thing.

And of course, if you work with these, you put them in loops. Then you can have the joys of off-by-one errors that not only result in bad results in the returned array, but inexplicable errors in which code you haven't even touched suddenly has bad values. I've had entire functions suddenly explode with random errors because I accidentally overwrote the wrong section of that float array. Not like, the variable the function was using was wrong. No. WASM acted like the function didn't even exist and it didn't know why. Because, somehow, the function ALSO lived on that Float32Array.

And because you're using WASM to be fast, you're typically trying to overwrite things that do O(N) operations or more. NO ONE is going to use this for return a + b. One-off functions just aren't worth programming in WASM. Worst of all, debugging this is often a matter of writing print and console.log statements everywhere, to try and 'eat' the whole array at once to find out what portion got corrupted or is broken. Or comment out your code line by line to see what in the forsaken 9 circles of coding hell caused your problem. It's like debugging blind in a strange and overgrown forest of code that you don't even recognize because most of it is there to satisfy the needs of WASM.

And because it takes so long to debug, it takes a massively long time to create things, and by the time you're done, the dependent package you're building for has 'moved on' and you find you suddenly need to update a bunch of crap when you're not even finished. All of this, purely because of a horribly designed technology.

And do they have sympathy for you for forcing you to update all this stuff? No. They don't owe you sympathy, and god forbid they give you any. You are a developer and so it is your duty to suffer - for some kind of karma.

I wanted to love WASM, but screw that thing, its horrible errors and most of all, the WASM heap32.

-

It's so fucking amazing: working on very low-level concepts such as a malloc implementation makes me understand computer science as a real science. I love it 😍

-

Achievement unlocked: malloc failed

😨

(The system wasn't out of memory, I was just an idiot and allocated size*sizeof(int) to an int**)

I'd like to thank myself for this delightful exercise in debugging, the GNU debugger, Julian Seward and the rest of the valgrind team for providing the necessary tools.

But most of all, I'd like that three hours of my life back 😩

-

So this is an update of the aforementioned IT related RPG I am making. I have settled on the title "Lords of Bullshit: a tale of corporate incompetence".

I need some ideas guys. I have Java, C, Python, PHP, bash and git as skill types, but I need spells for each.

For example in C I have malloc and dealloc as spells (revive and death spells).

I am having trouble with Java spells. I am trying to come up with things that focus on OOP or reflection and meta programming, but I am having trouble.

Any ideas? Also, anyone want to help with some sprites? All of the sprites the character generator can make are medieval looking.

-

The GashlyCode Tinies

A is for Amy whose malloc was one byte short

B is for Basil who used a quadratic sort

C is for Chuck who checked floats for equality

D is for Desmond who double-freed memory

E is for Ed whose exceptions weren’t handled

F is for Franny whose stack pointers dangled

G is for Glenda whose reads and writes raced

H is for Hans who forgot the base case

I is for Ivan who did not initialize

J is for Jenny who did not know Least Surprise

K is for Kate whose inheritance depth might shock

L is for Larry who never released a lock

M is for Meg who used negatives as unsigned

N is for Ned with behavior left undefined

O is for Olive whose index was off by one

P is for Pat who ignored buffer overrun

Q is for Quentin whose numbers had overflows

R is for Rhoda whose code made the rep exposed

S is for Sam who skipped retesting after wait()

T is for Tom who lacked TCP_NODELAY

U is for Una whose functions were most verbose

V is for Vic who subtracted when floats were close

W is for Winnie who aliased arguments

X is for Xerxes who thought type casts made good sense

Y is for Yorick whose interface was too wide

Z is for Zack in whose code nulls were often spied

- Andrew Myers

-

My mates and I finally finished the project: a console calculator that handles parentheses, infinite numbers, the four major operators and also all the bases from 1 to 94

We were not allowed to use stdlib except for malloc, stdio was disallowed too, but we had the unistd write, read and sizeof ahaha

That was a long road of a week but finally we did it :D

-

So, I applied for a job. People tend *not* to answer my applications, probably because my resume very clearly states I implemented malloc in fasm, among other things.

I imagine them going like "Sir, this is a Wendy's", or rather "we're looking for a 10X rockstar AnalScript ZAZQUACH mongoose-deus puffery quarter-stack developer". Fair enough, I certainly don't fit that bill.

But this time I not only got an answer, the guy went like "I'm impressed". Is this... recognition? From a human? What?

Fellas, I cannot process this emotion. Being frank, it's not even about the job. But willfully going against the idiocy of the industry standard, and then seeing that utterly deranged move actually amounting to something -- no matter how small -- is quite uncanny.

And of fucking course, it's a Perl job. Figures. Great minds think alike.

-

Today I experienced the cruelty of C and the mercy of Sublime and SublimeLinter.

So yesterday I was programming late at night for my uni homework in C. So I had this struct:

typedef struct {
    int borrowed;
    int user_id;
    int book_id;
    unsigned long long date;
} entry;

and I created an array of this entry like this:

entry *arr = (entry*) malloc (sizeof(arr) * n);

and my program compiled. But at the output, there was something strange...

There were some weird hexadecimal characters at the beginning, but then there was normal output. So late at night, I thought that something was wrong with the printf statement and I went googling... and after 2 hours I hadn't found anything. In those 2 hours, I also tried changing the scanf statement in case I was reading the wrong way. But nothing worked. But then I tried typing the input in the console (before, I was reading from a file and saving the output to a file). And it output the right answer!!! AT THAT POINT I WAS DONE!!! I SAID FUCK THIS SHIT I AM GOING TO SLEEP.

So this morning I continued to work on the homework and tried it on my other computer with another distro to see if there was the same problem. And there was...

So then I noticed that my sublime lint has some interesting warning in this line

entry *arr = (entry*) malloc (sizeof(arr) * n);

Before I thought that is just some random indentation or something but then I saw a message: Size of pointer 'arr' is used instead of its data.

AND IT STRUCT ME LIKE LIGHTNING.

I just changed this line to this:

entry *arr = (entry*) malloc (sizeof(entry) * n);

And It all worked fine. At that moment I was so happy and so angry at myself.

Lesson learned for next time: Don't program late at night, especially in C, and check SublimeLinter messages.

-

Why are pointers... bit shifting... malloc... anything regarding embedded development so hard to grasp...

-

!rant

I just remembered some joke I said while we had C++ classes.

To see who will actually listen to me, I said : "Hey, I heard you can malloc a dynamic array."

-

Many people here rant about the dependency hell (rightly so). I've been doing systems programming for quite some time now and it changed my view on what I consider a dependency.

When you build an application you usually have a system you target and some libraries you use that you consider dependencies.

So the system is basically also a dependency (which is abstracted away in the best case by a framework).

What many people forget are standard libraries and runtimes. Things like strlen, memcpy and so on are not available on many smaller systems, but you can easily provide implementations of them. Things like malloc are much harder to provide. On some systems there is no heap to allocate from dynamically, so you have to add some static memory to your application and mimic malloc by allocating chunks from this static memory. Sometimes you have a heap but you need to acquire the rights to use it first. malloc doesn't provide an interface for this. It just takes it. So you have to acquire the rights and bring them magically to malloc without the actual application code noticing. So even using only the C standard library or the POSIX API can be a hard-to-satisfy dependency on some systems. Things like the C++ standard library or the Go runtime are often completely unavailable or only rudimentary.

For those of you aiming to write highly portable embedded applications please keep in mind:

- anything except the bare language features is a dependency

- require small and highly abstracted interfaces, e.g. instead of malloc require a pointer and a size to be given to your application instead of your application taking it

- document your ABI well because that's what many people are porting against (and it makes it easier to interface with other languages)

-

What is it with certain colleagues who "wanna write C, not C++"

Motherfucker if I see another malloc in the code I will physically assault you.

Like damn, we're badly failing to teach people C++ when a newcomer from university, who had 2 semesters of "C++", doesn't even understand RAII.

And how in gods name do software engineers with *decades* of experience get so stuck on old technologies?

Like I've seen them write 3 nested try-catch to make sure a delete is called or some mutex is unlocked....

If you're in the position of teaching others C++, please stop teaching C first.

-

Talking about adrenaline sports in a class and our favorites.

Me? Writing C code without checking for null pointers!

What about you?

-

aaaaaAAAAaAh segmentation faults are horrible cause its a runtime error and it wont show u where u went wrong

ive been stuck for a few days with seg faults and i just realized i havent allocated space uuuuggghh its so dumb. a simple mistake of not putting malloc() can make u go crazy for a few days

-

I found a vulnerability in an online compiler.

So, I heard that people have been exploiting online compilers, and decided to try and do it (but for white-hat reasons) so I used the system() function, which made it a lot harder so I decided to execute bash with execl(). I tried doing that but I kept getting denied. That is until I realized that I could try using malloc(256) and fork() in an infinite loop while running multiple tabs of it. It worked. The compiler kept on crashing. After a while I decided that I should probably report the vulnerabilities.

There was no one to report them to. I looked through the whole website but couldn't find any info about the people who made it. I searched on github. No results. Well fuck.

-

I wrote a parody of Sound of Silence based on the struggles of cleaning up people's shit in the shop

============

Hello problems, my old friends

I've come to talk with you again

Because a driver softly creeping

Left its seeds while RAM was leaking

And the vision that was planted in my brain

Still remains

Within the sound of crashing

In restless dreams I walked alone

Narrow bands of networking

'Neath the halo of a burned-out fan

I turned my collar to the hot and spinning

When my eyes were stabbed by the flash of an LED light

That split the night

And touched the sound of crashing

And in the naked light I saw

Ten thousand tasks, maybe more

Programs malloc with no swap

Programs writing with no space

Programs writing bits that voices never play

And no one dared

Disturb the sound of crashing

"Fools, " said I, "You do not know

Malware, like a plague, it grows

Hear my words that I might teach you

Take my tools that I might help you"

But my words, like silent raindrops fell

And echoed in the wells, of crashing

And the programs bowed and prayed

To the malware god they made

And Windows flashed out its warning

In the words that it was forming

And Windows said, "The words of the prophets are written in the event log

And dumped over COM"

And whispered in the sounds of crashing

-

So I have never done 'real' development on anything bar my current game engine Virgil, however found myself referring to C documentation for GLib and SDL2 rather than valadoc documentation.

Decided fuck it, I'm already converting everything to Vala's pointer syntax so I can have manual memory control, implementing stb_image and contemplating reworking SDL2_image into raw C so I'm not depending on extra libraries... Why do all this when I can just learn C and have more control.

Everything was going well and decided to buy the C programming language book, already knew about pointers and structs but ohhhhhhhhhhhhhhhhhhhh boi was I not ready for malloc .-.

-

YGGG IM SO CLOSE I CAN ALMOST TASTE IT.

Register allocation pretty much done: you can still juggle registers manually if you want, but you don't have to -- declaring a variable and using it as operand instead of a register is implicitly telling the compiler to handle it for you.

What's more, spilling to stack is done automatically, keeping track of whether a value is or isn't required so it's only done when absolutely necessary. And variables are handled differently depending on whether they are input, output, or both, so we can eliminate making redundant copies in some cases.

It's a thing of beauty, defenestrating the difficult aspects of assembly, while still writing pure assembly... well, for the most part. There's some C-like sugar that's just too convenient for me not to include.

(x,y)=*F arg0,argN. This piece of shit is the distillation of my very profound meditations on fuckerous thoughtlessness, so let me break it down:

- (x,y)=; fuck you in the ass I can return as many values as I want. You don't need the parens if there's only a single return.

- *F args; some may have thought I was dereferencing a pointer but I'm calling F and passing it arguments; the asterisk indicates I want to jump to a symbol rather than read its address or the value stored at it.

To the virtual machine, this is three instructions:

- bind x,y; overwrite these values with Fs output.

- pass arg0,argN; setup the damn parameters.

- call F; you know this one, so perform the deed.

Everything else is generated; these are macro-instructions with some logic attached to them, and there's a step in the compilation dedicated to walking the stupid program for the seventh fucking time that handles the expansion and optimization.

So what's left? Ah shit, classes. Disinfect and open wide mother fucker we're doing OOP without a condom.

Now, obviously, we have to sanitize a lot of what OOP stands for. In general, you can consider every textbook shit, so much so that wiping your ass with their pages would defeat the point of wiping your ass.

Lets say, for simplicity, that every program is a data transform (see: computation) broken down into a multitude of classes that represent the layout and quantity of memory required at different steps, plus the operations performed on said memory.

That is most if not all of the paradigm's merit right there. Everything else that I thought to have found use for was in the end nothing but deranged ways of deriving one thing from another. Telling you I want the size of this worth of space is such an act, and is indeed useful; telling you I want to utilize this as base for that when this itself cannot be directly used is theoretically a poorly worded and overly verbose bitch slap.

Plainly, fucktoys and abstract classes are a mistake, autocorrect these fucking misspelled testicle sax.

None of the remaining deeper lore, or rather sleazy fanfiction, that forms the larger canon of object oriented as taught by my colleagues makes sufficient sense at this level for me to even consider dumping a steaming fat shit down its execrable throat, and so I will spare you bearing witness to the inevitable forced coprophagia.

This is what we're left with: structures and procedures. Easy as gobblin pie.

Any F taking pointer-to-struc as its first argument that is declared within the same namespace can be fetched by an instance of the structure in question. The sugar: x ->* F arg0,argN

Where ->* stands for failed abortion. No, the arrow by itself means fetch me a symbol; the asterisk wants to jump there. So fetch and do. We make it work for all symbols just to be dicks about it.

Anyway, invoking anything like this passes the caller to the callee. If you use the name of the struc rather than a pointer, you get it as a string. Because fuck you, I like Perl.

What else is there to discuss? My mind seems blank, but it is truly blank.

Allocating multitudes of structures, with same or different types, should be done in one go whenever possible. I know I want to do this, and I know whichever way we settle for has to be intuitive, else this entire project has failed.

So my version of new always takes an argument, dont you just love slurping diarrhea. If zero it means call malloc for this one, else it's an address where this instance is to be stored.

What's the big idea? Only the topmost instance in any given hierarchy will trigger an allocation. My compiler could easily perform this analysis because I am unemployed.

So whether you want it on the stack or on the heap, or you want to reutilize any piece of ass, where buttocks stands for some adequately sized space in memory -- entirely within the realm of possibility. Furthermore, evicting shit you don't need and replacing it with something else.

Let me tell you, I will give your every object an allocator if you give the chance. I will -- nevermind. This is not for your orifices, porridges, oranges, morpheousness.

Walruses.

-

I spent 13 hours on a class project using C only to not finish because malloc had a mind of its own and kept segfaulting with errno 16, or "resource busy". Fml.

-

Some compilers give an error message when a type cast is missing. That is why explicit casting is considered good style: it helps you avoid careless errors that can crash the program in the worst case. For some types an explicit cast is required; for others the compiler converts automatically.

In short: Type casting is used to prevent mistakes.

An example of such an error would be:

#include <stdio.h>
#include <stdlib.h>

int main ()
{
    int * ptr = malloc (10*sizeof (int))+1;
    free(ptr-1);
    return 0;
}

The intent is to point at the second element of the requested memory. However, this is not possible, since standard C does not define pointer arithmetic (+, -) on a void pointer. (GCC accepts it as an extension and steps by one byte, which is not what is wanted here either.)

The improved example would be:

int * ptr = ((int *) malloc (10*sizeof (int)))+1;

Here the cast is applied first, which turns the void pointer into an int pointer, so pointer arithmetic can be applied. Note: If "no output" is displayed instead of an error on the sololearn C compiler, try another compiler.

-

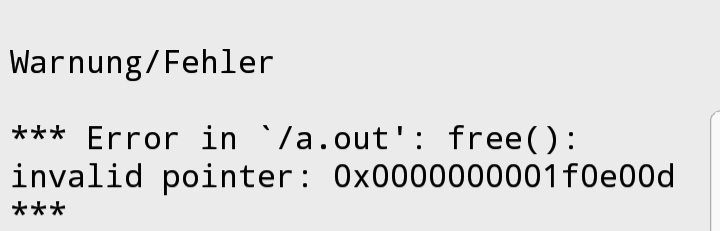

Recoding malloc is a mess. It seems to be a very good exercise and it is. But you know, there are so many mysterious issues that happen when you're working with memory.

I just figured out that 90% of the calls to my free function made by the "ls" command were, according to my own checks, passing a bad pointer.

So I don't know why, because I only do a normal check, unless "ls" was developed with the ass...

-

My N3DS is currently on its way to its grave. I've had malloc issues since I got it, but recently buttons have begun to fail and it's begun to randomly hardlock, when it happens it's so badly locked up that even NMIs fail to get through.

Luckily, it's hacked, so I can decrypt and export everything now before it's totally toast.

Still feels bad, though... it's been the home of 2 DSi's worth of data for a while now as well as new stuff. It's got some emotional weight to it.

-

I first started off with a Pentium 3 machine in 2004, started gaming on Warcraft 3 and MapleStory and eventually got addicted to it because nothing else was interesting in my life. Okay, extending this story, I eventually got banned, and dad smashed 1000 bucks of his money by kicking and throwing it. Years later (I think it was 2011), I got hold of my first Android device. This time round, things were different and I spent 6 months with it problem free and then it started lagging. A Google search led me to XDA, I started modding the device, eventually started getting interested in how people do it and voila, C prog, write some management drivers for malloc and etc. Eventually I dropped kernel development 3 years later and now I'm in .NET Core.

-

So there's a proposal for C++ to zero initialize pretty much everything that lands on the stack.

I think this is a good thing, but I also think malloc and the likes should zero out the memory they give you so I'm quite biased.

What's devRant's opinion on this?

https://isocpp.org/files/papers/...

-

On Windows, which one line input will get this code to print "Finally I get a sticker. Yayyyy!!!" immediately

#include <stdio.h>

int main()
{
    char *c = (char*) malloc(sizeof(char) * 10);
    int rants = 0;
    while(rants<20)
    {
        printf("U don't want me to get a sticker?\n");
        scanf("%s", c);
        if(c[0] == 'y')
            rants--;
        else
            rants++;
    }
    printf("Finally I get a sticker. Yayyyy!!!\n");
}

-

How do you handle error checking? I always feel sad after I add error checking to a code that was beautifully simple and legible before.

It still remains so but instead of each line meaning something it becomes if( call() == -1 ) return -1; or handleError() or whatever.

Same with try catch if the language supports it.

It's awful to look at.

So awful I end up evading it forever.

"Malloc can't fail right? I mean it's theoretically possible but like nah", "File open? I'm not gonna try catch that! It's a tmp file only my program uses come oooon", all these seemingly reasonable arguments cross my head and make it hard to check the frigging errors. But then I go to sleep and I KNOW my program is not complete. It's intentionally vulnerable. Fuck.

How do you do it? Is there a magic technique, or does one have to reach dev nirvana to realise that certain ugliness and clutter is necessary for the greater good sometimes and no design pattern or paradigm can make it clean and complete?

-

Why in all fucks would you NOT preconfigure your language client BUT provide a shitload of highly biased default shortcuts just IN CASE some sorry soul took time to preconfigure one.

I'ma be totally honest here, Neovim has lost its way. Every single day I pick it up there's a fuckton of shitty new default bindings...

But that's not the worst of it

You see, they've been cramming all sorts of shitty code in there. Like this one default commenting plugin... It does in 600 lines what my setup does in 50. Why? Because, while mine uses the lpeg lib maintainers decided to cram into the editor, the other does a fuckton of hacks so fucked that refactoring is impossible, impossível! Despicable.

Now, their C codebase... Ok, ok arena beats vanilla malloc, alright, kudos to that, BUT refactoring out that old fart of quasillions of legacy C? MADNESS! They should be focused on adding built-in auto completion??? Well-defined syntax highlighting conventions? A FUCKING FUZZY PICKER for fucksakes!! But, oh no, we've got better things to do like FUCKING THE USER IN THEIR ASSSSSSS

--

DIS-FUCKINGTRESSED here

FUUUUUUUUUCKKKKKKKKKKK

-

Okay, so I am learning Python and I have to say it's a very interesting language but I have some questions about how the language is built under the hood as the documentation I can find by Guido doesn't give away all the secrets.

So for the question I am referencing this documentation:

https://python.org/download/...__

So, what does __new__ actually look like inside? Is there a way to see how python itself implements __new__?

I know that the mechanism for C++ malloc and new are well known definitions within that space, but I am having issues understanding exactly what the default __new__ is doing on the machine level.

The documentation I found is great for explaining how to use and override __new__ but it doesn't show what Python does once you hand off operations back to the system.

Any help is greatly appreciated!

-

ioctl with FIONREAD as request

it returns the number of bytes ready to read, but to store that number it requires a pointer to int passed in va_args

when i want to malloc a memory using that size i need variable of type size_t which is 8 bytes on 64-bit system

why do these types mismatch? if ioctl returns a size with the FIONREAD request it should accept a pointer to a size_t variable in va_args

-

As a person trying to learn C, I am sick and tired of the lack of visuals for C functions. As a person who best learns by visualization, I find it rather difficult to learn the difference between functions such as malloc, calloc and memset. All I can find on Google is the same written documentations.