Search - "language reference"

-

I tutor people who want to program. I don't ask for any money for it; if they use my house as a learning space I may ask them to bring cookies or a pizza or something, but on the whole I do it to help people who want to learn.

Now this in and of itself is perfectly fine, I don't get financially screwed over or anything, but...

Fuck me if some students are horrendous!

To the best of my knowledge I've agreed to work with and help seven individuals, four female three male.

One male student never once began the study work and just repeatedly offered excuses and wanted to talk to me about how he'd screwed his life up. I mean that's unfortunate, but I'm not a people person, I don't really feel emotionally engaged with a relative stranger who quite openly admits they got addicted to porn and wasted two years furiously masturbating. Which is WAY more than I needed to know and made me more than a little uncomfortable. Ultimately, since he never even started the basic exercises, I blocked him and stopped wasting my time.

The second dude I spoke to for exactly 48 hours before he wanted to smash my face in. Now, he was Indian (the geographical India, not Native American) and this is important, because he was a friend of a friend and I agreed to tutor him; however, he was more interested in telling me how the Brits owed India reparations. Being Scottish, I felt that if anyone was owed reparations first, it's us, which he didn't take kindly to (something about the phrase "we've been fucked, longer and harder than you ever were and we don't demand reparations" didn't endear me any).

But again likewise, he wanted to talk about politics and proving he was a someone "I've been threatened in very real world ways, by some really bad people" didn't impress me, and I demonstrated my disinterest with "and I was set on fire once cos the college kids didn't like me".

He wouldn't practice, was constantly interested in bigging himself up, he was aggressive, confrontational and condescending, so I told him he was a dick, I wasn't interested in helping him and he can help himself. Last I heard he wasn't in the country anymore.

The third guy... Absolute waste of time... We were in the same computer science college class, I went to university and did more, he dossed around and a few years later went into design and found he wanted to program and got in touch. He completes the code schools courses and understandably doesn't quite know what to do next, so he asks a few questions and declares he wants to learn full stack web development. Quickly. I say it isn't easy especially if it's your first real project but if one is determined, it isn't impossible.

This guy was 30 and wanted to retire at 35 and so time was of the essence. I'm up for the challenge, and so because he only knows JavaScript (including prototypes, callbacks and events) I tell him about nodejs and explain that it's a little more tricky but it does mean he can learn all the basics without learning another language.

About six months of sporadic development where I send him exercises and quizzes to try, more often than not he'd answer with "I don't know" after me repeatedly saying "if you don't know, type the program out and study what it does then try to see why!".

The excuses became predictable: couldn't study, playing soccer; couldn't study, watching Bake Off; couldn't study, couldn't study.

Eventually he buys a book on the mean stack and I agree to go through it chapter by chapter with him, and on one particular chapter where I'm trying to help him, he keeps interrupting with "so could I apply for this job?" "What about this job?" And it's getting frustrating cos I'm trying to hold my code and his in my head and come up with a real world analogy to explain a concept and he finally interrupts with "would your company take me on?"

I'm done.

"Do you want the honest unabridged truth?"

"Yes, I'd really like to know what I need to do!"

"You are learning JavaScript, and trying to also learn computer science techniques and terms all at the same time. Frankly, to the industry, you know nothing. A C developer with a PHD was interviewed and upon leaving the office was made a laughing stock of because he seemed to not know the difference between pass by value and pass by reference. You'd be laughed right out the building because as of right now, you know nothing. You don't. Now how you respond to this critique is your choice, you can either admit what I'm saying is true and put some fucking effort into studying cos I'm putting more effort into teaching than you are studying, or you can take what I'm saying as a full on attack, give up and think of me as the bad guy. Your choice, if you are ready to really study, you can text me in the morning for now I'm going to bed."

The next day I got a text "I was thinking about what you said and... I think I'm not going to bother with this full stack stuff it's just too hard, thought you should know."

-

So I told my wife one week ago: "Yeah, you should totally learn to code as well!"

Yesterday a package arrived, containing a really beautiful hardcover book bound in leather, with a gold foil image of a snake debossed into the cover, with the text "In the face of ambiguity -- Refuse the temptation to guess" on it.

Well, OK, that's weird.

My wife snatches it and says: "I had that custom made by a book binder". I flip through it. It contains the Python 3.9 language reference, and the PEP 8 styleguide.

While I usually dislike paper dev books because they become outdated over time, I'm perplexed by this one, because of how much effort and craftsmanship went into it. I'm even a little jealous.

So, this morning I was putting dishes into the dishwasher, and she says: "Please let me do that". I ask: "Am I doing anything wrong?"

Wife responds: "Well, it's not necessarily wrong, I mean, it works, doesn't it? But your methods aren't very pythonic. Your conventions aren't elegant at all". I don't think I've heard anyone say the word "pythonic" to me in over a decade.

And just now my wife was looking over my shoulder as I was debugging some lower level Rust code filled with network buffers and hex literals, and she says: "Pffffff unbelievable, I thought you were a senior developer. That code is really bad, there are way too many abbreviated things. Readability counts! I bet if you used Python, your code would actually work!"

I think I might have released something really evil upon the world.

-

My own language, hence my own parser.

Reinvented the regular expression before realizing it already existed (Google didn't exist at the time).

I'm a living reference for regular expressions since then.

-

I had to open the desktop app to write this because I could never write a rant this long on the app.

This will be a well-informed rebuttal to the "arrays start at 1 in Lua" complaint. If you have ever said or thought that, I guarantee you will learn a lot from this rant and probably enjoy it quite a bit as well.

Just a tiny bit of background information on me: I have a very intimate understanding of Lua and its C API. I have used this language for years and love it dearly.

[START RANT]

"arrays start at 1 in Lua" is factually incorrect because Lua does not have arrays. From their documentation, section 11.1 ("Arrays"), "We implement arrays in Lua simply by indexing tables with integers."

From chapter 2 of the Lua docs, we know there are only 8 types of data in Lua: nil, boolean, number, string, userdata, function, thread, and table

The only unfamiliar thing here might be userdata. "A userdatum offers a raw memory area with no predefined operations in Lua" (section 26.1). Essentially, it's for the API to interact with Lua scripts. The point is, this isn't a fancy term for array.

The misinformation comes from the table type. Let's first explore, at a low level, what an array is. An array, in programming, is a collection of data items all in a line in memory (The OS may not actually put them in a line, but they act as if they are). In most syntaxes, you access an array element similar to:

array[index]

Let's look at C, so we have some solid reference. "array" would be the name of the array, but what it really does is keep track of the starting location in memory of the array. Memory in computers acts like a number. In a very basic sense, the first sector of your RAM is memory location (referred to as an address) 0. "array" would be, for example, address 543745. This is where your data starts. Arrays can only be made up of one type; this is so that each element in that array is EXACTLY the same size. So, this is how indexing an array works: if you know where your array starts, and you know how large each element is, you can find the element at index 6 by starting at the start of the array and adding 6 times the size of the data in that array.

Tables are incredibly different. The elements of a table are NOT in a line in memory; they're all over the place depending on when you created them (and a lot of other things). Therefore, an array-style index is useless, because you cannot apply the above formula. In the case of a table, you need to perform a lookup: search through all of the elements in the table to find the right one. In Lua, you can do:

a = {1, 5, 9};

a["hello_world"] = "whatever";

a is a table holding 4 entries (the 4th is the key "hello_world" with value "whatever"), but a[4] is nil because even though there are 4 items in the table, it looks for something "named" 4, not the 4th element of the table.
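The lookup-by-name behaviour described here can be sketched with a Python dict standing in for the Lua table. This is an analogy only, not Lua: the dict, its keys and the variable names are assumptions made for illustration. Note that in Lua itself the `#` length operator would report 3 for this table, because it only counts the integer-keyed sequence.

```python
# A Python dict standing in for the Lua table from the rant (an analogy, not Lua).
a = {1: 1, 2: 5, 3: 9}          # Lua's {1, 5, 9} auto-assigns the keys 1, 2, 3
a["hello_world"] = "whatever"   # a fourth entry, stored under a string key

entry_count = len(a)            # counts every key, integer or not
fourth = a.get(4)               # lookup by key: nothing is stored under the key 4
first = a[1]                    # lookup by key: the value stored under the key 1

print(entry_count, fourth, first)
```

Asking for key 4 finds nothing even though four entries exist, because the access is a lookup by key and not a position in a row of memory.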

This is the difference between indexing and lookups. But you may say,

"Algo! If I do this:

a = {"first", "second", "third"};

print(a[1]);

...then "first" appears in my console!"

Yes, that's correct. Lua, because it is a nice language, makes keys in tables optional by automatically giving them an integer value key. This starts at 1. Why? Let's look at that formula for arrays again:

Given array "arr", size of data type "sz", and index "i", find the desired element ("el"):

el = arr + (sz * i)

This NEEDS to start at 0 and not 1 because otherwise, "sz" would always be added to the start address of the array and the first element would ALWAYS be skipped. But in tables, this is not the case, because tables do not have a defined data type size, and this formula is never used. This is why actual arrays are incredibly performant no matter the size, and the larger a table gets, the slower it is.
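The address formula above can be tried out on a real contiguous array. This is a minimal sketch using Python's standard `ctypes` module; the array contents and the helper name `element_at` are assumptions chosen for illustration.

```python
import ctypes

# A real contiguous array of C ints (ctypes is part of the standard library).
IntArray5 = ctypes.c_int * 5
arr = IntArray5(10, 20, 30, 40, 50)

base = ctypes.addressof(arr)      # "arr": the address where the data starts
sz = ctypes.sizeof(ctypes.c_int)  # "sz": the size of one element in bytes


def element_at(i):
    """Read element i by computing its address: el = arr + (sz * i)."""
    return ctypes.c_int.from_address(base + sz * i).value


values = [element_at(i) for i in range(5)]
print(values)
```

With `i = 0` the formula lands exactly on the start address, which is why the first element of a true array sits at index 0.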

That felt good to get off my chest. Yes, Lua could start the auto-key at 0, but that might confuse people into thinking tables are arrays... well, I guess there's no avoiding that either way.

-

Udemy courses are targeted at ABSOLUTE beginners. It's excruciating to pull through and finish the course "just because". And some of these courses are jam-packed with 30-60 hours just for them to appear legit, but the reality is the value you get could be packed into 3-5 hours.

You're better off just searching for or watching for the things that you need on Google or YouTube.

You'll learn more when building the actual stuff. Yes, it's good to go for the documentation. Just scratch the "Getting Started" section and then start building what you want to build already. Don't read the entire documentation from cover to cover for the sake of reading it. You won't retain everything anyway. Use it as a reference. You'll gain wisdom through tons of real-world experience. You will pick things up along the way.

Don't watch those tutorials with non-native English speakers or those with a bad accent as well. Native speakers explain things really well and deliver the message with clarity because they do what they do best: It's their language.

Trust me, I got caught up in this inefficient style a handful of times. Don't waste your time.

-

Old rant about an internship I had years ago. It still annoys me to this day, so I just had to share the story.

Basically I had no job or work experience in the field, which is a common issue in the city I live in - developer jobs are hard to come by with no experience here. The municipality tried to counter this issue by offering us (unemployed people with an interest in the field) a free 9-month course, linked with an internship program, with a "high chance" of a job after the internship period.

To lure companies to agree to this deal, the municipality offered a sum of money to companies who were willing to take interns. The only requirement for the company was that they had to offer a full-time position to the interns after the internship, as long as there were no serious issues (ex. skipping work, calling in sick, doing a bad job etc.).

On paper, this deal probably makes sense.

I landed an internship fairly quickly at a well-known company in the city. The first internship period went great, and I got constant positive feedback. I even got to the point where I ran out of tasks since I worked faster than expected - which I was fairly proud of at the time.

The next internship period was a weird mix between school (the course), and being at the company. We would be at the school for the whole week, except Wednesdays, when we could do the internship at the company.

When I showed up at work on that first Wednesday, the company told me that it made no sense for me to come in on those days, as I was only watching some tutorial videos during that time, while they were finding bigger tasks for me - which in turn required that they got some designs for a new project. They said that due to the requirements they got from the municipality (which I knew nothing about at the time), they couldn't ask me to work from home - and they said it would "demoralize" the other developers if I just sat there on Wednesdays to watch videos. Instead, they suggested that I call in sick on Wednesdays and just watch the videos at home - which is something I would register with the workplace, so I wouldn't get in trouble with the school. It sounded logical to me, so I did that for like 5-6 Wednesdays in a row. Looking back at this period, there were a lot of red flags - but I was super optimistic and simply didn't notice.

After this period came the final 2 months of the internship (no school). This time I had proper tasks, and was still being praised endlessly - just like the first period.

On the last day of the internship, I got called to a meeting with my teamlead and CEO. Thinking I was to sign a full-time contract, I happily went to the meeting.. Only to be told that they had found someone with more experience.

I was fairly disappointed, and told them honestly that I would have preferred if they had told me this earlier, since I had been looking forward to this day. They apologized, but said that there was nothing they could do.

When I returned for the last school period (2 weeks), the teacher asked me to join him for a small meeting with some guy from the municipality. Both seemed fairly disappointed / angry, and told me what still makes me furious whenever I think about it.

Basically after my last internship period, the company had called the municipality, telling them that I had called in sick on those Wednesdays, and was "a lazy worker", and they would refuse to hire me because of that.

I of course told them my side of the story, which they wouldn't believe (unemployed person vs. well-known company).

Even when I landed a proper job a few months later, the office had called my old internship for a reference - and they told the same story, which nearly made them decline my application. This honestly makes me feel like it's something personal.

So basically:

Municipality: Had to pay the company as the deal / contract between them was kept.

Company: Got free money and work.

Me: Got nothing except a bad reputation - and some (fairly limited) experience..

Do I regret taking the course? .. No, it was a free course and I learned a lot - and I DID get some experience. But god, I wish I had applied at a different company.

Sorry for my bad English - it's not my first language.. But f*ck this company :)

-

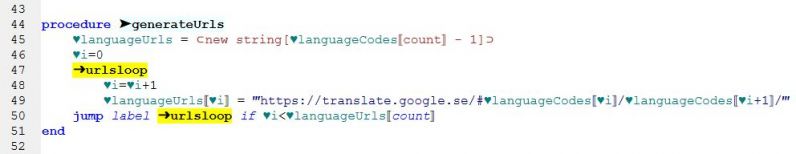

EEEEEEEEEEEE Some fAcking languages!! Actually barfs while using this trashdump!

The gist: new job, position required adv C# knowledge (like f yea, one of my fav languages), we are working with RPA (using software robots to automate stuff), and we are using some new robot still in beta phase, but robot has its own prog lang.

The problem:

- this language is kind of like ASM (i think so, I'm venting here, it's ASM OK), with syntax that burns your eyes

- no function return values, but I can live with that, at least they have some sort of functions

- emojis for identifiers (like php's $var, but they only aim for shitty features so you use a heart.. ♥var)

- only jump and jumpif for control flow

- no foopin variable scopes at all (if you run multiple scripts at the same time they even share variables *pukes*)

- weird alt characters everywhere. define strings with regular quotes? nah let's be [some mental illness] and use prime quotes (‴ U+2034), and like ⟦ ⟧ for array indexing, but only sometimes!

- super slow interpreter, ex a regular loop to count to 10 (using jumps because yea no actual loops) takes more than 20 seconds to execute, approx 700ms to run 1 code row.

- it supports c# snippets (defined with these stupid characters: ⊂ ⊃) and I guess that's the only c# I get to write with this job :^}

- on top of that, outdated documentation, because yea it's beta, but so crappin tedious with this trial n error to check how every feature works

The question: why in the living fartfaces yolk would you even make a new language when it's so easy nowadays to embed compilers!?! the robot is apparently made in c#, so it should be no funcking problem at all to add a damn lua compiler or something. having a tcp api would even be easier got dammit!!! And what in the world made our company think this robot was a plausible choice?! Did they do a full fubbing analysis of the different software robots out there and accidentally sorted by ease of use in reverse order?? 'cause that's the only explanation i can imagine

Frillin stupid shitpile of a language!!! AAAAAHHH

see the attached screenshot of production code we've developed at the company for reference.

Disclaimer: I do not stand responsible for any eventual headaches or gouged eyes caused by the named image.

(for those interested, the robot is G1ANT.Robot, https://beta.g1ant.com/)

-

Ever heard of event-based programming? Nope? Well, here we are.

This is a software design pattern that revolves around controlling and defining state and behaviour. It has a temporal component (the code can rewind to a previous point in time), and is perfectly suited for writing state machines.

I think I could use some peer-review on this idea.

Here's the original spec for a full language: https://gist.github.com/voodooattac...

(which I found to be completely unnecessary, since I just implemented this pattern in plain TypeScript with no extra dependencies. See attached image for what the TS code looks like).

The fact that it transcends language barriers if implemented as a library instead of a full language means less complexity in the face of adoption.

Moving on, I was reviewing the idea again today when I discovered an amazing fact: because this is based on gene expression, and since DNA is recombinant, any state machine code built using this pattern is also recombinant[1]. Meaning you can mix and match condition bodies (as you would mix complete genes) in any program and it would exhibit the functionality you picked or added.

You can literally add behaviour from a program (for example, an NPC) to another by copying and pasting new code from a file to another. Assuming there aren't any conflicts in variable names between the two, and that the variables (for example `state.health` and `state.mood`) mean the same thing to both programs.

If you combine two unrelated programs (a server and a desktop application, for example) then assuming there are no variables clashing, your new program will work as a desktop application and as a server at the same time.

I plan to publish the TypeScript reference implementation/library to npm and GitHub once it has all basic functionality, along with an article describing this and how it all works.

I wish I had a good academic background now, because I think this is worthy of a spec/research paper. Unfortunately, I don't have any connections in academia. (If you're interested in writing a paper about this, please let me know)

Edit: here's the current preliminary code: https://gist.github.com/voodooattac...

***

[1] https://en.wikipedia.org/wiki/...

-

Why do people jump from C to Python so quickly? And it's all about machine learning. A few days back my cousin asked me for books to learn Python.

Trust me, you have to learn C before Python. People struggle going from Python to C. But no: ML, scripting,

and most importantly software engineering, wtf?

Software engineering is about how to run projects, yet it is compulsory to learn Python and there is no mention of git or any other VCS, wtf?

What the hell kind of college is that? Trust me, I am in no way saying Python is weak, but for learning purposes what matters is the depth of the language and concepts like pass by reference, memory leaks and pointers.

And learning algorithms and data structures is more important than machine learning. Trust me, if you cannot model the data and get proper training and testing data, you will get screwed-up outputs. Then again, everyone who hypes this kind of stuff also thinks that an ML model with 100% accuracy is better than one with 90%, so they overfit the data and test the model on the training data. And mostly what they learn in college will be a few formulas learned by heart, that's it.

Learn a language like C (and the concepts in it), and then you will find most languages are easy.

Cool CS programmers are born today😖

-

PHP is one of the languages I use regularly, but not the main language.

Anyhow, passing an array to a function will create a copy of the array unless you specifically choose to pass it by reference.

That's seriously fucked up. What other language does that?! Coming from C, Java, Python to PHP I was not prepared to expect shit like that.
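For comparison, here is a small sketch of what the same situation looks like in Python, one of the languages mentioned above. The function and variable names are made up for illustration. Python hands the function the same list object, so getting the PHP default (arrays passed by value unless the parameter is declared with `&`) takes an explicit copy.

```python
def append_item(items):
    """Mutates whatever list it is given."""
    items.append("added")


shared = ["a", "b"]
append_item(shared)         # Python passes the same list object to the function
print(shared)               # the caller sees the mutation

copied = ["a", "b"]
append_item(list(copied))   # an explicit copy mimics PHP's default for arrays
print(copied)               # the caller's list is untouched
```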

-

I dive in head first.

Some existing program annoys me, so I get this itch to write a selfhosted Spotify in Go, or a conky with 3D graphics in Rust.

I check the homepage of the language, download the tools, check which IDE is great for it.

Then I just start writing code, following the error corrections thrown by the IDE, doing web searches for all errors. Then when I run into a wall, I might check the reference docs or a udemy course.

Often I don't finish the project, because time is limited and I still have 4 million other things to do and learn, but at least I've learned a new language/tech.

Con: For tech which uses unique paradigms like Rust's memory management or Go's Goroutines, it can be frustrating to bash away at a problem using old assumptions.

Pro: By having a real demand for a product with requirements instead of a hello world or todo app, it's much easier to stay motivated, and you learn beyond what courses would teach you.

-

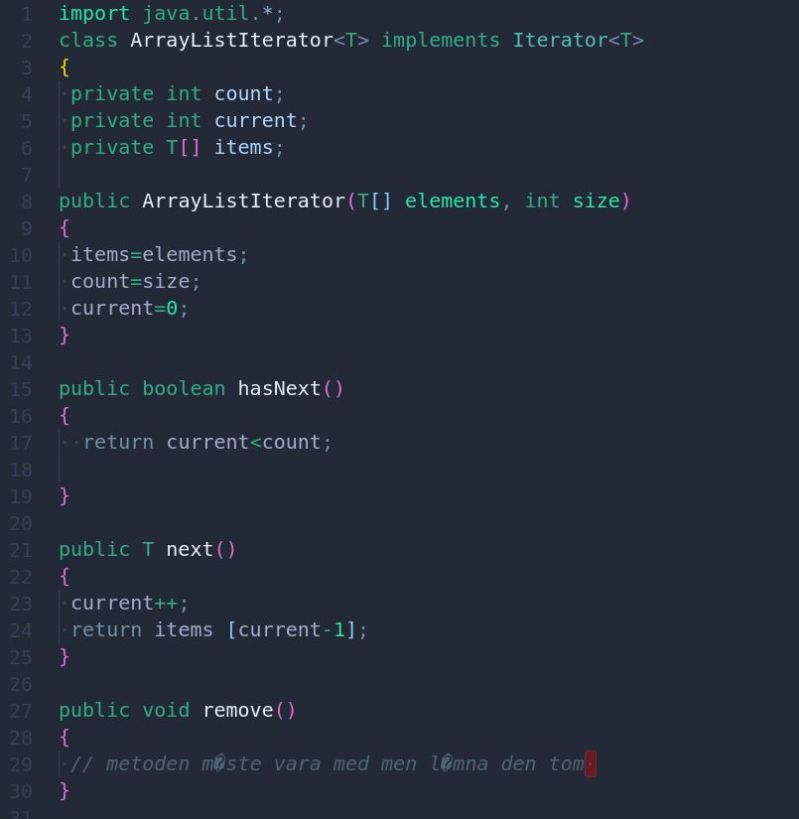

In my uni course "Algorithms and Data structures" we use Java. Fine. Definitely not my preferred language but it's not like I have a choice.

Anyway, our teacher uploads code files for us to use as reference/examples. The problem is, they look like this. Not only does she not indent the code, she also uses a charset that is not utf-8.

In the rare cases where she does indent the code, she uses THREE, yes THREE spaces...

-

Holy shit. I just watched a video on Rust and I think I am in love.

Tracked mutability, reference counting, guaranteed thread safety, all in a compiled type-safe language with the performance of C++? 😍

Why did I not check this out sooner??

-

Dear Swift, we have to break up. I’ve found a new language to love. Oh don’t act so surprised, you know our relationship was on shaky ground. You never let me have any fun. You’re always telling me what to do and how to do it and I’ve had enough. You treat me like a child, and I’m moving on.

Things were good in the beginning, and you may have impressed me with your automatic reference counting, but my new language can do that too, and so much more, and does it faster than you could ever imagine. You see unlike you, my new language doesn’t boss me around. It *trusts* me, Swift. That’s the one thing you never could understand. I need to be trusted; and know that I can trust in you.

Well I can’t. Not anymore, Swift. It’s over. My new language just treats me better than you ever could. I’m sorry it came to this but I deserve better than you Swift. We’ve both known this for a long time.

I wish you the best, but you probably shouldn’t call.

I’m with Rust now.

-

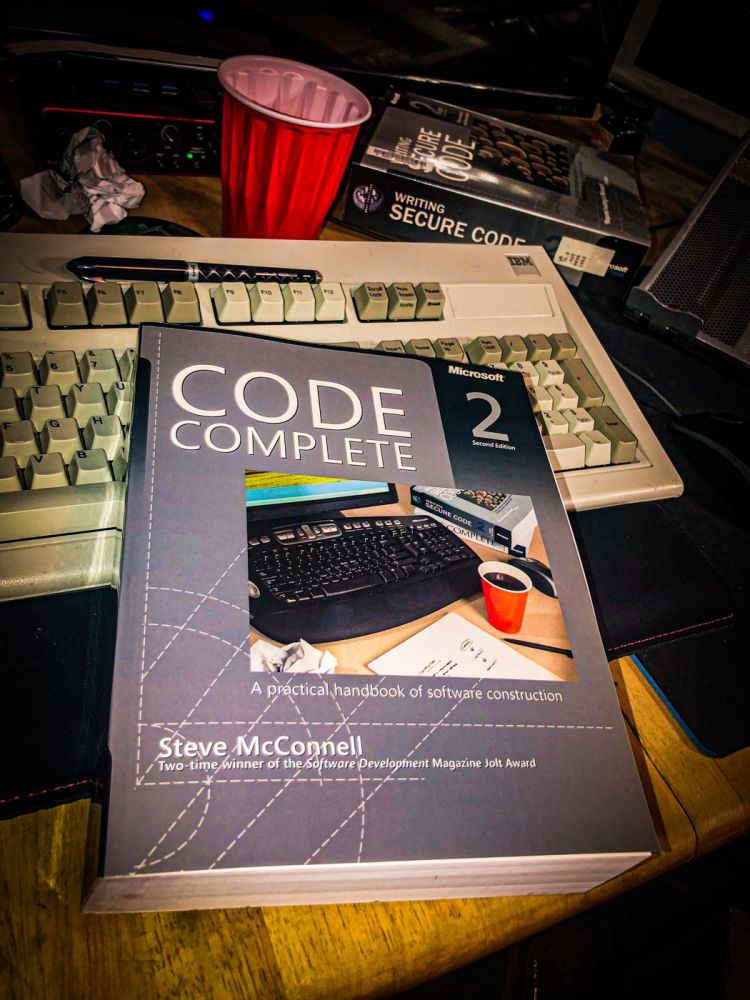

Probably the MOST complete software book on a very broad subject.

This is the book to read for those of you who are near college graduation or in your first job in the industry. But given the level of detail and broad coverage this book has, I think it’s actually a great book for everyone in the industry, almost as a “baseline”.

From requirements, project planning and workflow paradigms to software architecture design, variable naming, refactoring, testing and releasing, the book covers everything, not only at a high level but also in reference to C.

Why C? Because in consumer electronics, the automotive industry, medical electronics and other industries creating physical products, C is the language of choice, no changing that. BUT it’s not a C book... it contains C and goes into depth on C, but it’s not a C book. C is more like a vehicle for the book, because there are long-established, successful industries built around it. Plenty of examples.

When I say it’s the most complete on a broad subject, I mean it: for example, the chapter about the C language is not a brief overview like in many other books; 10 pages alone are dedicated to just pointers! Many C books have only a few paragraphs on the subject. This one goes in depth.

Other topics, recursion, how to write documentation for your code.

Lots of detail and philosophy of the construction of software.

Even if you are a veteran software engineer you could probably learn a thing or two from the book.

It’s not a book that you can finish in a weekend, unless you can read and comprehend over 1000 pages.

Very few books cover such a broad topic ALL while still going into great detail on those subtopics. the second part is what lacks in most “broad topic books” ..

Code Complete.. is definitely “Complete”

So the image doesn’t match the rest of my book images because I tried to make an homage to the cover of the book, inception style kinda haha 😂

-

Meet today.... Fetlang

---

lick Bob's cock

lick Duke's left nipple one million times

while Ada is submissive to Duke

make slave scream Ada's name

Have Charlie spank himself

Have Ada lick his tight little ass

Have Bob lick Charlie's tight little ass, as well

make Ada moan Bob's name

make Bob moan Charlie's name

---

Never felt so dirty after calculating the fibonacci sequence...

https://github.com/Property404/...

"Fetlang is a statically typed, procedural, esoteric programming language and reference implementation. It is designed such that source code looks like poorly written fetish erotica."

-

Anybody else physically write notes when studying a new programming language? I do it because it really helps solidify the information in my brain and also makes for good reference.

-

Buckle up, it's a long one.

Let me tell you why "Tree Shaking" is stupidity incarnate and why Rich Harris needs to stop talking about things he doesn't understand.

For reference, this is a direct response to the 2015 article here: https://medium.com/@Rich_Harris/...

"Tree shaking", as Rich puts it, is NOT dead code removal apparently, but instead only picking the parts that are actually used.

However, Rich has never heard of a C compiler, apparently. In C (or any systems language with basic optimizations), public (visible) members exposed to library consumers must have that code available to them, obviously. However, all of the other cruft that you don't actually use is removed - hence, dead code removal.

How does the compiler do that? Well, it does what Rich calls "tree shaking" by evaluating all of the pieces of code that are used by any codepaths used by any of the exported symbols, not just the "main module" (which doesn't exist in systems libraries).

It's the SAME FUCKING THING, he's just not researched enough to fully fucking understand that. But sure, tell me how the javascript community apparently invented something ELSE that you REALLY just repackaged and made more bloated/downright wrong (React Hooks, webpack, WebAssembly, etc.)

Speaking of Javascript, "tree shaking" is impossible to do with any degree of confidence, unlike statically typed/well defined languages. This is because you can create artificial references to values at runtime using string functions - which means, with the right input, almost anything can be run depending on the input.

How do you figure out what can and can't be? You can't! Since there is a runtime-based codepath and decision tree, you run into properties of Turing's halting problem, which cannot be solved completely.
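The "artificial references built from strings" problem can be illustrated with a rough Python analogue of JavaScript's `obj[a + b]()`. This sketch is not JavaScript and not any bundler's actual analysis; the `lib` object and its function names are hypothetical.

```python
import types

# A tiny stand-in "library" with two functions (hypothetical names).
lib = types.SimpleNamespace(
    used=lambda: "used",
    looks_unused=lambda: "looks unused",
)


def call_by_name(prefix, suffix):
    """Build the attribute name from strings at runtime, then call it."""
    name = prefix + "_" + suffix
    return getattr(lib, name)()


static_result = lib.used()                         # visible to a plain source scan
dynamic_result = call_by_name("looks", "unused")   # the name only exists at runtime
print(static_result, dynamic_result)
```

A tool that only scans the source for the identifier `looks_unused` finds no call site, yet the function still runs, which is the situation the rant says makes confident removal hard.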

With stricter languages such as C (which is where "dead code removal" is used quite aggressively), you can make very strong assertions at compile time about the usage of code. This is simply how C is still thousands of times faster than Javascript.

So no, Rich Harris, dead code removal is not "silly". Your entire premise about "live code inclusion" is technical jargon and buzzwordy drivel. Empty words at best.

This sort of shit is annoying and only feeds into this cycle of the web community not being Special enough and having to reinvent every single fucking facet of operating systems in your shitty bloated spyware-like browser and brand it with flashy Matrix-esque imagery and prose.

Fuck all of it.

-

Ye, so after studying for an eternity and doing some odd jobs here and there, all I can show for are following traits:

* Super knowledgeable in arm/Intel assembly language

* C-Veteran with knowledge of some sick and nasty C-hacks/tricks which would even sour the mood of your grandma

* Acquired disdain of any and all scripting languages (how dare you write something in one line for which I need a whole library for!)

* All-in-all low-level programmer type of guy (gimme those juicy registers to write into!)

After completing the mandatory part of my computer science studies, all I did was immerse myself into low-level stuff. Even started to hold lectures and all.

Now I'm at the cusp of being let free into the open market.

The thing is: I'm pretty sure that no company is really interested in my knowledge, as no one really writes assembly anymore.

Sure, embedded programming is still a thing, but even that is becoming increasingly more abstract, with God knows how many layers of software between the hardware and the dev, just to hide all the scary bits underneath.

So, are there people in here who're actually exposed to assembly or any hands-on hardware-programming?

Like, on a "which bit in which register/addr do I need to set" - kind of way.

And if so, what would you say someone like me should look out for in a company to match my interest to theirs?

Or is it just a pipe dream, so I'd need to brace myself to a mundane software engineer career where I have to process a ticket at a time?

(Just to give a reference: even the most hardware-inclined companies I found "near" me are developing UIs with HTML5 to be used in some such environment ....)12 -

My two cents: Java is fucking terrible for computer science. Why the fuck would you teach somebody such a verbose language with so many unwritten rules?

If you really want your students to learn about computers, why not C? Java has no pointers, no pass-by-reference, no manual memory management, and a lot of obscure class structures and design patterns. This shit is garbage. The students will almost never have contact with the compiler, many don't even know a compiler exists.

Java is so enterprise focused and just fucked up for educational purposes. And I say it as somebody who (still) uses it as my main language.

If you want your students to be productive and learn about software engineering, why not Python? Things are simple in Python and can be done way more easily without students becoming code monkeys (assuming they don't use a whole library for each task). I mean, Java takes a whole god damn class and an explicitly declared entry point, which is btw. fucking verbose, just to print something to the console.

Fuck Java.

-

Dude what the fuck with C#'s code style?

Pascal case variable names and classes? Don't we do this so we can differentiate between variables and classes??

Newline between parentheses and brackets?

Retarded auto get/set methods that serve no purpose other than to make code difficult to read and debug?

And after all that shit, string, which is a class, has a lowercase s and is treated as if a primitive?

I really have no idea why this language is so widely praised. The only thing I like about it is that it's the only major bytecode language that has operator overloading and reference parameters.

-

I want to explain to people like ostream (aka aviophille) why JS is a crap language. Because they apparently don't know (lol).

First I want to say that JS is fine for small things like gluing some parts together. Like, you know, the exact thing it was intended for when it was invented: scripting.

So why is it bad as a programming language for whole apps or projects?

No type checks (dynamic typing). This is typical for scripting languages and not necessarily bad for such a language but it's certainly bad for a programming language.

"truthy" everything. It's bad for readability and it's dangerous because you can accidentally cause unwanted behavior.

The existence of == and ===. The rule for many real life JS projects is to always use === to be more safe.

In general: The correct thing should be the default thing. JS violates that.

Automatic semicolon insertion can cause funny surprises.

If semicolons aren't truly optional, then they should not be allowed to be omitted.

No enums. Do I need to say more?

No generics (of course, lol).

Fucked up implicit type conversions that violate the principle of least surprise (you know those from all the memes).

No integer data types (only floating point). BigInt obviously doesn't count.

No value types and no real concept for immutability. "Const" doesn't count because it only makes the reference immutable (see lack of value types). "Freeze" doesn't count since it's a runtime enforcement and therefore pretty useless.

No algebraic types. That one can be forgiven though, because it's only common in the most modern languages.

The need for null AND undefined.

No concept of non-nullability (values that can not be null).

JS embraces the "fail silently" approach, which means that many bugs remain unnoticed and will be a PITA to find and debug.
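The "only floating point" item in the list above is easy to show outside of JS as well, since a JS number is an IEEE-754 double. A small C++ sketch of what that costs you, assuming the usual 64-bit IEEE-754 `double` (the function names are mine, purely for illustration):

```cpp
#include <cassert>
#include <cstdint>

// Converts an integer to double and back, which is effectively what happens
// to every integer stored in a JS number.
std::int64_t round_trip_through_double(std::int64_t n) {
    return static_cast<std::int64_t>(static_cast<double>(n));
}

// The classic: 0.1 + 0.2 is not exactly 0.3 in binary floating point.
bool tenths_add_up() {
    return 0.1 + 0.2 == 0.3;
}

void check_double_limits() {
    const std::int64_t two_53 = 9007199254740992LL;            // 2^53
    assert(round_trip_through_double(two_53) == two_53);       // still exact
    assert(round_trip_through_double(two_53 + 1) == two_53);   // 2^53 + 1 is lost
    assert(!tenths_add_up());
}
```

Above 2^53 consecutive integers stop being representable, which is the practical reason a real integer type matters.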

Some of the problems can be and have been addressed with TypeScript, but most of them are unfixable because it would break backward compatibility.

So JS is truly rotten at the core and can not be fixed in principle.

That doesn't mean that I also hate JS devs. I pity your poor souls for having to deal with this abomination of a language.

It's likely that I forgot to mention many other problems with JS, so feel free to extend the list in the comments :)

Merry Christmas!

-

Who wants to play a game?

Let's play....

Is This Sexual Harassment!?!?

I won't publicly reveal who is the other person in this convo. I just want genuine opinions.

For reference, the convo started in another language, with a quick mention of their fondness for my eyes... i simply ignored it with 'lol' and chatted on and off for some hours... next time it was in English with use of "darling", which I simply stated wasn't something anyone who knew me would say.

they claimed it was just British... it's not. I actually use UK spellings due to working for years for UK based companies.

back in the other language... 'angel', 'princess', *some equivalent of a small rabbit*,... me once again, more directly, asking why and saying no/they are irrelevant to me, to each.

the next part was hysterical, but to not out the person or skew opinions, that's being omitted.

the following came after me running out of time so it needed to be a call or i needed to mute them. they got muted.

SOOO,,,,

IS THIS SEXUAL HARASSMENT???

my dev friend/partner and I can't find the right term for wtf this is... which is odd as we both have an extensive vocabulary and are highly specific.

-

The "stochastic parrot" explanation really grinds my gears because it seems to me just to be a lazy rephrasing of the Chinese room argument.

The man in the machine doesn't need to understand Chinese. His understanding or lack thereof is completely immaterial to whether the program he is *executing* understands Chinese.

It's a way of intellectually laundering, or hiding, the ambiguity underlying a person's inability to distinguish the process of understanding from the mechanism that does the understanding.

There are recent arguments that some elements of relativity actually explain our inability to prove or dissect consciousness in a phenomenological context, especially with regards to outside observers (hence the reference to relativity), but I'm glossing over it horribly and probably wildly misunderstanding some aspects. I digress.

It is to say, we are not our brains. We are the *processes* running on the *wetware of our brains*.

This view is consistent with the understanding that there are two types of relations in language, words as they relate to real world objects, and words as they relate to each other. ChatGPT et al. have a model of the world only inasmuch as words-as-they-relate-to-each-other carry some information about the world as a model.

It is to say while we may find some correlates of the mind in the hardware of the brain, more substrate than direct mechanism, it is possible language itself, executed on this medium, acts as a scaffold for a broader rich internal representation.

Anyone arguing that these LLMs can't have a mind because they are one-off input-output functions, doesn't stop to think through the implications of their argument: do people with dementia have agency, and sentience?

This is almost certain, even if they forgot what they were doing or thinking about five seconds ago. So agency and sentience, while enhanced by memory, are not reliant on memory as a requirement.

It turns out there is much more information about the world, contained in our written text, than just the surface level relationships. There is a rich dynamic level of entropy buried deep in it, and the training of these models is what is apparently allowing them to tap into this representation in order to do what many of us accurately see as forming internal simulations, even if the ultimate output of that is one character or token at a time, laundering the ultimate series of calculations necessary for said internal simulations across the statistical generation of just one output token or character at a time.

And much as we won't find consciousness by examining a single picture of a brain in action, even if we track it down to single neurons firing, neither will we find consciousness anywhere we look, not even in the single weighted values of an LLM's individual network nodes.

I suspect this will remain true, long past the day a language model or other model emerges that can talk and do everything a human can do intelligence-wise.

-

Fuck c++

Every time I have to use this fucking language it spits up some other bullshit error

`cannot convert std::vector<int>() to std::vector<int>&`

WHY THE FUCK ARE STACK ALLOCATED VALUES EVEN THEIR OWN TYPES

AND WHY CANT I TAKE A GOD DAMN REFERENCE TO IT
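For anyone hitting the same wall: that error usually means a temporary (an rvalue such as `std::vector<int>()`) is being handed to something that wants a non-const lvalue reference, `std::vector<int>&`. It is about value categories rather than stack allocation. A minimal sketch of what binds and what doesn't, with function names invented for the example:

```cpp
#include <cassert>
#include <cstddef>
#include <vector>

// Non-const lvalue reference: needs a named object it is allowed to modify.
void append_one(std::vector<int>& values) { values.push_back(1); }

// Const reference: binds to temporaries as well as named objects.
std::size_t size_of(const std::vector<int>& values) { return values.size(); }

// Rvalue reference: binds to temporaries (or std::move'd objects).
std::size_t consume(std::vector<int>&& values) { return values.size(); }

std::size_t demo() {
    std::vector<int> named;            // a named object is an lvalue
    append_one(named);                 // fine
    assert(named.size() == 1);
    // append_one(std::vector<int>()); // error: a temporary cannot bind to std::vector<int>&
    return size_of(named)                          // 1
         + size_of(std::vector<int>())             // 0, temporary binds to const&
         + consume(std::vector<int>{7, 8, 9});     // 3, temporary binds to &&
}
```

So the usual ways out are to give the vector a name first, or to change the parameter to `const std::vector<int>&` or `std::vector<int>&&`, depending on whether the callee needs to modify or take over the data.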

JUST WHYYYYY

-

Oh my god, GDScript is the single biggest piece of shit scripting language I have ever witnessed. It somehow manages to combine the very worst things of dynamic typing with the downsides of static typing, all in one bundle of utter shit.

Imagine you have two game object scripts that want to reference each other, e.g. by calling each other's methods.

Well you're outta fucking luck because scripts CANNOT have cyclic references. Not even fucking *type hints* can be cyclic between scripts. Okay no problem, since GDScript is loosely based on Python I can surely just call my method out of the blue without type hints and have it look it up by name. Nope! Not even the inefficient as fuck `call` method, which does a completely dynamic-at-runtime fuck-compile-time-we-script-in-this-bitch function call, can find the function. Why? Because the variable that holds a reference to my other script is assumed to be of type Node. The very base class of everything.

So not only is the optional typing colossal garbage. You can't even do a fucking dynamic function call because this piece of shit is just C++ in Python's clothing. And nothing against C++ (first time I said that). At least C++ lets me call a fucking function

-

every fucking time I use Javascript.

(yes, I'm no expert, but I can pick up ANY LANGUAGE and do this task in FIVE FUCKING MINUTES, NOT AN HOUR!!! FUCK!)

"Gee, I think this button should probably list the total recipients of the mailing, looks like I have to get the total of a column in an object, no problem, hell, i'll do it frontside just for the fuck of it"

yeah, seemed like a good idea.. AN HOUR AGO

ARRRGGGH

fucking javascript scope can take a flying leap off of a tall building, and then NOT FALL to the fucking ground because it will fucking tell me that OOPS gravity doesn't exist for javascript!

UNCAUGHT REFERENCE ERROR

right?

FUCK YOU

die from gravity like you deserve motherfucker

-

Node: The most passive aggressive language I've had the displeasure of programming in.

Reference an undefined variable in a module? Prepare to waste your time hunting for it, because the runtime won't tell you about it until you reference a property or method on the quietly undefined module object.

Think you know how promises work? As a hiring manager, I've found that fewer than 5% of otherwise well-experienced devs are out of the Dunning-Kruger danger zone.

Async causes edge cases and extra dev effort that add to the effort required to make a quality product.

Got a bug in one of your modules? Prepare yourself for some downtime because a single misplaced parenthesis can take out the entire Node process, killing unrelated pages and even static file hosting.

All this makes for a programming experience that demands much higher cognitive load, creates more categories of bugs, and leads to code bloat/smell much more quickly than other commonly substituted languages.

From a business perspective, the money you save on scaling (assuming your app is more compute efficient under Node) is wasted on salaries and opportunity costs stemming from longer dev time, more QA, and more frequent outages.

IMO, Node is an awesome experiment, a fun language, a great tool for specific use cases, and a terrible fucking choice for an entire website.

-

So....

I'm loving my personal project in Node.JS but I have to say, whoever would use this for any involved professional purpose is a fucking retard.

A complete fucking retard.

Errors related to their weakly typed bs would have been caught by a compiler in literally ANY OTHER LANGUAGE. Yeah it would take more time to tag objects etc, but then it would be DONE RIGHT and a look-ahead compiler wouldn't be necessary to tell you 'oh the reference could point to a completely different type ! nothing will warn you of this, but if you sort of hover after a certain line the source of the error it took a fucking hour of runtime to produce will be clear !'......

remember just say @NoToJavascript

-

Major rant incoming. Before I start ranting I’ll say that I totally respect my professor’s past. He worked on some really impressive major developments for the military and other companies a long time ago. Was made an engineering fellow at Raytheon for some GPS software he developed (or led a team on, I should say) and ended up dropping the fellowship because of his health. But I’m FUCKING sick of it. So fucking fed up with my professor. This class is “Data Structures in C++” and keep in mind that I’ve been programming in C++ for almost 10 years with it being my primary and first language in OOP.

Throughout this entire class, the teacher has been making huge mistakes, either saying things that aren’t right or simply not knowing how to teach. For example, he told the students that “int& varOne = varTwo” was an address getting put into a variable until I corrected him that it was a reference, after which he proceeded to skip all the reference slides. He also steps through sorting algorithms incorrectly, or doesn’t remember how they work and says, “So then it gets to this part and....it uh....does that and gets this value and so that’s how you do it *doesnt do rest of it and skips slide*”.
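For readers who haven't run into the distinction: a reference is another name for an existing object, while a pointer is a variable that stores an address. A tiny sketch using the names from the rant (`ptr` and the function name are added for illustration):

```cpp
#include <cassert>

// Returns the final value of varTwo after writing through the reference.
int reference_vs_pointer() {
    int varTwo = 5;
    int& varOne = varTwo;   // varOne is an alias for varTwo, not a stored address
    int* ptr = &varTwo;     // this is what "an address put into a variable" looks like

    varOne = 9;             // writes through the alias, so varTwo is now 9

    assert(&varOne == &varTwo);   // both names refer to the same object
    assert(ptr == &varOne);       // the pointer holds that object's address
    assert(*ptr == 9);            // and sees the new value
    return varTwo;
}
```

The reference cannot be reseated or be null, which is the practical difference from `ptr`.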

First presentation I did on doubly linked lists. I decided to go above and beyond and write my own code that had a menu to add, insert at position n, delete, print, etc for a doubly linked list. When I go to pull out my code he tells me that I didn’t say anything about a doubly linked list’s tail and head nodes each have a pointer pointing to null and so I was getting docked points. I told him I did actually say it and another classmate spoke up and said “Ya” and he cuts off saying, “No you didn’t”. To which I started to say I’ll show you my slides but he cut me off mid sentence and just yelled, “Nope!”. He docked me 20% and gave me a B- because of that. I had 1 slide where I had a bullet point mentioning it and 2 slides with visual models showing that the head node’s previousNode* and the tail node’s nextNode* pointed to null.
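The head/tail point from that presentation can be sketched in a few lines. This is not the poster's code, just a minimal illustration that borrows the `previousNode` and `nextNode` names from the slides; everything else is made up:

```cpp
#include <cassert>

struct Node {
    int value;
    Node* previousNode;
    Node* nextNode;
};

class DoublyLinkedList {
public:
    DoublyLinkedList() = default;
    DoublyLinkedList(const DoublyLinkedList&) = delete;
    DoublyLinkedList& operator=(const DoublyLinkedList&) = delete;

    ~DoublyLinkedList() {
        while (head_ != nullptr) {
            Node* next = head_->nextNode;
            delete head_;
            head_ = next;
        }
    }

    // Appends at the tail; the new node's nextNode is always null.
    void push_back(int value) {
        Node* node = new Node{value, tail_, nullptr};
        if (tail_ != nullptr) {
            tail_->nextNode = node;
        } else {
            head_ = node;   // first node: its previousNode is null as well
        }
        tail_ = node;
    }

    const Node* head() const { return head_; }
    const Node* tail() const { return tail_; }

private:
    Node* head_ = nullptr;
    Node* tail_ = nullptr;
};

void check_list_ends() {
    DoublyLinkedList list;
    list.push_back(1);
    list.push_back(2);
    list.push_back(3);
    assert(list.head()->previousNode == nullptr);   // nothing before the head
    assert(list.tail()->nextNode == nullptr);       // nothing after the tail
}
```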

Another classmate that’s never coded in his life had screenshots of code from online (literally all his slides were a screenshot of the next part of code until it finished implementing a binary search tree) and literally read the code line by line, “class node, node pointer node, ......for int i equals zero, i is less than tree dot length er length of tree that is, um i plus plus.....”

Professor yelled at him like 4 times about reading directly from slide and not saying what the code does and he would reply with, “Yes sir” and then continue to read again because there was nothing else he could do.

Ya, he got the same grade as me.

Today I had my second and final presentation. I did it on “Separate Chaining”, a hash collision resolution technique. This time I said fuck writing my own code, he didn’t give two shits last time when everyone but me just screenshotted online example code, so I decided I’d focus on the PowerPoint and amp it up with animations on models I made with the shapes in PowerPoint. Get 2 slides in and he goes,

Prof: Stop! Go back one slide.

Me: Uh alright, *click*

(Slide showing the 3 collision resolutions: Open Addressing, Separate Chaining, and Re-Hashing)

Prof: Aren’t you forgetting something?

Me: ....Not that I know of sir

Prof: I see Open addressing, also called Open Hashing, but where’s Closed Hashing?

Me: I believe that’s what Separate Chaining is sir

Prof: No

Me: I’m pretty sure it is

*Class nods and agrees*

Prof: Oh never mind, I didn’t see it right

Get another 4 slides in before:

Prof: Stop! Go back one slide

Me: .......alright *click*

(Professor loses train of thought? Doesn’t mention anything about this slide)

Prof: I er....um, I don’t understand why you decided not to mention the other, er, other types of Chaining. I thought you were going to go back to that slide with all the squares (model of hash table with animations moving things around to visualize inserting a value with a collision that I spent hours on) but you didn’t.

(I haven’t finished the second half of my presentation yet you fuck! What if I had it there?)

Me: I never saw anything on any other types of Chaining professor

Prof: I’m pretty sure there’s one that I think combines Open Addressing and Separate Chaining

Me: That doesn’t make sense sir. *explanation why* I did a lot of research and I never saw any other.

Prof: There are, you should have included them.

(I check after I finish. Google comes up with no other Chaining collision resolution)
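For context, separate chaining just means every bucket holds a list of the entries that hashed to it, so colliding keys sit side by side in the same chain. A side note on the naming argument: in the usual textbook terminology separate chaining is the one called "open hashing" and open addressing is "closed hashing", and coalesced hashing is commonly described as a hybrid of chaining and open addressing. Below is a toy sketch, not the poster's code; the class name, the int-only keys and the modulo "hash" are all assumptions made to keep collisions easy to see:

```cpp
#include <cassert>
#include <cstddef>
#include <list>
#include <utility>
#include <vector>

class ChainedIntMap {
public:
    explicit ChainedIntMap(std::size_t bucket_count) : buckets_(bucket_count) {
        assert(bucket_count > 0);   // index_of divides by the bucket count
    }

    // Inserts the pair, or overwrites the value if the key is already chained.
    void put(int key, int value) {
        auto& chain = buckets_[index_of(key)];
        for (auto& entry : chain) {
            if (entry.first == key) {
                entry.second = value;
                return;
            }
        }
        chain.push_back(std::make_pair(key, value));   // collision: chain grows
    }

    // Returns a pointer to the stored value, or nullptr when the key is absent.
    const int* find(int key) const {
        for (const auto& entry : buckets_[index_of(key)]) {
            if (entry.first == key) {
                return &entry.second;
            }
        }
        return nullptr;
    }

    // Number of entries sharing a bucket with the given key.
    std::size_t chain_length(int key) const {
        return buckets_[index_of(key)].size();
    }

private:
    // Deliberately naive hash so that 1, 5 and 9 collide with four buckets.
    std::size_t index_of(int key) const {
        return static_cast<std::size_t>(key) % buckets_.size();
    }

    std::vector<std::list<std::pair<int, int>>> buckets_;
};
```

With four buckets, `put(1, ...)`, `put(5, ...)` and `put(9, ...)` all land in bucket 1 and simply extend its chain; a lookup walks that chain and compares keys.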

He docks me 20% and gives me a B- AGAIN! Both presentation grades have feedback saying, “MrCush, I won’t go into the issues we discussed but overall not bad”.

Thanks for being so specific on a whole 20% deduction prick! Oh wait, is it because you don’t have specifics?

Bye 3.8 GPA

Is it me or does he have something against me?

-

Excel rant.

I know Excel is not a programming language, but it is what I deal with every day.

My immediate boss is Japanese (Japanese company).

Our boss will occasionally add to the shared spreadsheet without telling us. We find out the next day by discovering that other sheets that reference it are waaaay off. Or the big one is the mass amount of #REF! errors all over the sheet.

I mean come on man, at least look around the large sheet first!

-

NullReferenceException: Object reference not set to an instance of an object.....ummm what was null?

How much longer must we wait for a language that tells you the variable name that was null?

-

How hard can it be to sort content stored in a relational database by a custom meta parameter and restrict the results to a certain language using a very popular content management system in 2023?

After wasting several hours trying to get my head around reference documents, 20 years of anecdotal StackExchange + WordPress.org discussion and ACF + Polylang support, and trying to debug my code, I will now either write my own SQL query or put the meta query results in a hashed object to sort it using my own PHP code.

What time is it now? 2003?

-

The Zen Of Ripping Off Airtable:

(patterned after The Zen Of Python. For all those shamelessly copying Airtable's basic functionality)

* Columns can be *reordered* for visual priority and ease of use.

* Rows are purely presentational, and mostly for grouping and formatting.

* Data cells are objects in their own right, so they can control their own rendering, and formatting.

* Columns (as objects) are where linkages and other column specific data are stored.

* Rows (as objects) are where row specific data (full-row formatting) are stored.

* Rows are views or references *into* columns which hold references to the actual data cells

* Tables are meant for managing and structuring *small* amounts of data (less than 10k rows) per table.

* Just as you might do "=A1:A5" to reference a cell range in Google Sheets or Excel, you might do "opt(table1:columnN)" in a column header to create a 'type' for the cells in that column.

* An enumeration is a table with a single column, useful for doing the equivalent of Airtable's options and tags. You will never be able to decide if it should be stored on a specific column, on a specific table for ease of reuse, or separately where it and its brothers will visually clutter your list of tables. Take a shot if you are here.

* Typing or linking a column should be accomplishable first through a command-driven type language, held in column headers and cells as text.

* Take a shot if you somehow ended up creating any of the following: an FSM, a custom regex parser, a new programming language.

* A good structuring system gives us options or tags (multiple select), selections (single select), and many other datatypes and should be first, programmatically available through a simple command-driven language like how commands are done in datacells in excel or google sheets.

* Columns are a means to organize data cells, and set constraints and formatting on an entire range.

* Row height can be overridden by the settings of a cell. If a cell overrides the row and column render/graphics settings, then it must be drawn last--drawing over the default grid.

* The header of a column is itself a datacell.

* Columns have no order among themselves. Order is purely presentational, and stored on the table itself.

* The last statement is because this allows us to pluck individual columns out of tables for specialized views.

* *Very* fast scrolling on large datasets, with row and cell height variability, is complicated. Thinking about it makes me want to drink. You should drink too before you embark on implementing it.

* Wherever possible, don't use a database.

* If you're thinking about using a database, see the previous koan.

* If you use a database, expect to pick and choose among column-oriented stores, and JSON, while factoring for platform support, API support, whether you want your front-end users to be forced to install and set up a full database, and if not, what file-based .so or .dll database engine is out there that also supports video, audio, images, and custom types.

* For each time you ignore one of these nuggets of wisdom, take a shot, question your sanity, quit halfway, and then write another koan about what you learned.

* If you do not have liquor on hand, for each time you would take a shot, spank yourself on the ass. For those who think this is a reward, for each time you would spank yourself on the ass, instead *don't* spank yourself on the ass.

* Take a sip if you *definitely* wildly misused terms from OOP, MVP, and spreadsheets.

-

C# developers!

Does anyone know a good source to start reading about C# 7's syntax (not only "what's new")? Sometimes it feels like the language-specific tools that I know are not enough.

P.S. Can't find it in the MSDN language reference or guide.

Please share.

-

How, how can I be sooooo bad sometimes.

I just discovered “Alias” feature of C#.

Let’s say you have 2 enums with the same name (Let’s say MyAwsomeEnum) in 2 different namespaces.

In this case I was always fully qualifying the name.

I was today years old when I discovered “using MyAwsomeEnum = <Fully qualified name>” in the using section.

Edit: Even worse. It's like the 3rd example in the official docs

https://docs.microsoft.com/en-us/...

/facepalm on myself

-

Fuck you Python! "It's global, unless you modify it. Then you have to use a keyword first." "It is passing reference by value." Asshole language that tries to be too flexible!

-

Swift is such a horrible language now that I am actually using it. You have protocols that don't behave like interfaces, classes that aren't objects, structs that aren't passed by reference. And stupid counterintuitive generic grammar. I feel scammed.

-

It really sucks when you realize that you're gonna end up despising a programming language just from having an extremely shitty first experience with it.

About ten weeks ago I was forced, despite the fact that I was SUPPOSED to be able to choose the language myself, to learn C++ for this course while having literally not a single fucking bit of experience with it whatsoever. And that's pretty soon after already having a beyond shitty experience with the very same school AND the same teacher. (The school I study at "rents" courses from other schools, this is one of them.)

I have the final exam on Monday and I'm allowed to have a book on C++ with me to use as reference, as (I'm pretty sure) I won't have internet access on the computer I'll be doing the test on. I ordered a book with express shipping to be here during this week, Friday at the latest. Never arrived. Called customer service at the book store and apparently it was supposed to have shipped yesterday but hadn't and they didn't know why (fucking awesome girl at the customer service btw, 11/10 quality service). So we cancelled the order, sure, we get the money back, but I still won't have a reference for a language I barely know at all. (No need to mention libraries, did that, dead end.)

Oh, and about that school and that earlier experience I spoke of, because of their inability to do their motherfucking jobs, earlier this year I ended up struggling with money for a couple of months. I really want to fucking strangle these assholes and have them pay my fucking bills to cover the shit that THEY caused.

TLDR; I'm gonna end up hating C++ because of shitty fucking teachers at an even shittier school.

-

"Rust, the language that makes you feel like a memory astronaut navigating through a borrow-checker asteroid field. Lifetimes? It's more like love letters to the compiler. Safety first, even if it means writing a Ph.D. thesis to move a mutable reference around."

-

The best documentation has probably been most language docs and references I've worked with, official or otherwise, especially C++. Completeness, consistency, tidiness and examples really help a lot, since I know I can rely on the docs for basically any problem, which makes work so much easier since I'm guaranteed to leave understanding what's up.

Worst documentation has got to be the internal docs we had to create for a seven-man uni project, you couldn't find shit in the sea of docs that were out of date or just plain wrong. It was so much easier to ask whoever was working on that part about the intricacies of the cobbled-together mess than to either read the code or the docs. One absolute mouthbreather was working on the database docs and put in that it stored ArrayLists. Fucking Java ArrayLists in a motherfucking database. One day I am going to rant so hard about this dumbass and it's gonna be a spectacle.

Bonus points go to the company's public documentation at my internship. It was good and pretty complete, but sometimes there was a document from 2 years ago that had been written by a non-English speaker that was absolutely awful. Some of them were so bad that as soon as I'd finished learning what I needed to, my mentor told me to go and fix the docs, I don't blame him.

-

Hey developers, am I allowed to make use of the pass-by-reference feature of C/C++ during a coding interview (given I am using C/C++ as my main language)?

I basically used Python in my interviews, but this time I decided to go with C/C++.
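Whether it's allowed is up to the interviewer, but it helps to be precise about what the feature is. Reference parameters exist only in C++; plain C gets the same effect by passing a pointer. A short sketch with made-up function names:

```cpp
#include <cassert>

// C++ only: the parameter is an alias for the caller's variable.
void increment_by_reference(int& n) { ++n; }

// Works in C and C++: the caller passes an address explicitly.
void increment_by_pointer(int* n) { ++*n; }

// Pass by value: only the local copy changes, so the result must be returned.
int incremented_copy(int n) {
    ++n;
    return n;
}

void check_parameter_passing() {
    int x = 1;
    increment_by_reference(x);
    assert(x == 2);
    increment_by_pointer(&x);
    assert(x == 3);
    int y = incremented_copy(x);
    assert(x == 3);   // the caller's variable is untouched
    assert(y == 4);
}
```

Coming from Python, the by-value case is the one most likely to surprise: nothing the callee does to `n` is visible to the caller unless it is returned.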

now,

for those who are gonna say "WRONG CATEGORY": most of you check rants rather than questions.

for those who are gonna say "BUT YOUR NAME SUGGESTS THAT YOU HATE C": bloody educate yourself.

-

This is yet another rant about php.

But let me get this out of the way first: I'm less than a junior and I'm looking for a backend language to learn.

So far I've been looking at php with Symfony because it's been used where I work.

Is it just my impression, or does Symfony somehow overcomplicate everything? Like I don't know, I get stuck on any stupid thing (like yesterday, spent two hours on a circular reference problem with serialization).

Also, I don't like its documentation. I am a book person, meaning that I need pages of text explaining how the framework (or whatever) works in a precise order.

Symfony's docs are like a graph: you often have no idea where you are or "what comes next".

Also, I feel like every page makes you just copy-paste everything without explaining very much what's happening under the hood.

I know there is a cookbook, but it's pretty outdated (like it's at version 3 or 2.7, I don't remember).

Is it just me? Have other Symfony developers experienced the same?

-

Python is an example of a language which is far, far too high-level for my liking; to provide a reference for my preference, C++ is one of my favourite languages, because it is versatile while remaining somewhat verbose, while Python tosses that verbosity out of the window while not functioning as one would expect it to function after reading a lot of the documentation.

-

> Your concern is entirely valid—naming can significantly affect how intuitive a concept feels. The term "object safe" in Rust might seem odd or even misleading if we approach it from a traditional object‑oriented perspective.

can rust please stop trying to be "different" for the sake of being different. Dumbest thing. Just call things what they are. What's the point of words if you're not actually accurately using them. Especially for a programming language, which is based on math and logical systems. Like how. Why. Stop. Antithesis to the mindset that should be making languages to begin with if you can't do logic with the words that already exist. Horrible sales pitch. Are they trying to confuse people on purpose, make moats so nobody learns it? is this self-sabotage?

---

I have bludgeoned an AI on this matter. I feel kind of bad. It tried to ad hominem me and then tell me I'd get it if I wasn't so new, and that it's a perfectly valid name because it's in the "reference guide". Called it out on appeal to authority and now it's just saying my argument points back at me like it's groveling. Sigh.

And it's hallucinated thinking I'm the whole online community giving critiques on this matter now, therefore my points are valid, lmao

-

nothing new, just another rant about php...

php, PHP, Php, whatever is written, wherever is piled, I hate this thing, in every stack.

stuff that works only depending on how php itself was compiled, globals, superglobals and turbo-globals everywhere, == is not transitive, comparisons are non-deterministic, ?: is freaking left associative, utility functions that sometimes return -1, sometimes null, and are sometimes void, each with a different style of usage and naming, lowercase/under_score/camelCase/PascalCase, numbers are 32bit on 32bit cpus and 64bit on 64bit cpus, a ton of silently failing stuff that doesn't warn you, references are actually aliases, nothing has a determined type except references, abuse of mega-global static vars and funcs, you can cast to int in a language where int doesn't even exist, 25236 ways to import/require/include for every different subcase, @ operator, :: parsed to T_PAAMAYIM_NEKUDOTAYIM for no reason in stack traces, you don't know who can throw stuff, fatal errors are sometimes catchable according to nobody knows what, closed-over vars are passed by value unless you use &, function calls that don't match the args signature don't fail, classes are not objects and you can refer to them only by string name, builtin underlying types cannot be wrapped, subclasses can't override parents' private methods, no overload for equality or ordering, -1 is a valid index for an array and doesn't fail, funcs are neither data nor objects whereas closures are objects, there's no way to distinguish between a random string and a function 'reference', php.ini, documentation with comments and flame wars on the side, it becomes case sensitive/insensitive according to the filesystem while line breaks are instead determined by php.ini, it's freaking sloooooow...

enough. i'm tired of this crap.

it's almost weekend! 🍻

-

Hey Rust people

Why not just release a new version of C that automatically deallocates unused variables that are out of scope unless somehow marked to be preserved in a point in RAM and their location published in some form of reference table?
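Worth noting that scope-based cleanup of this kind is roughly what C++ destructors (RAII) already provide, and "marked to be preserved" maps fairly well onto handing ownership out of the scope. What Rust adds on top is compile-time checking that references don't outlive the thing they point to. A minimal sketch, assuming C++14 for `std::make_unique`; `Buffer` and `live_buffers` are invented so the cleanup is observable:

```cpp
#include <cassert>
#include <memory>

// Counts live Buffer objects so we can see when cleanup happens.
int live_buffers = 0;

struct Buffer {
    Buffer() { ++live_buffers; }
    ~Buffer() { --live_buffers; }
    Buffer(const Buffer&) = delete;
    Buffer& operator=(const Buffer&) = delete;
};

// Everything created inside the inner scope is released at its closing brace.
int live_after_scope() {
    {
        Buffer on_stack;                             // destructor runs at scope exit
        auto on_heap = std::make_unique<Buffer>();   // heap memory freed at scope exit
        assert(live_buffers == 2);
    }
    return live_buffers;
}

// "Preserved": ownership moves to the caller, so the object outlives this scope.
std::unique_ptr<Buffer> make_preserved() {
    auto keep = std::make_unique<Buffer>();
    return keep;
}
```

The catch in C++ is that nothing stops you from keeping a raw pointer or reference to `on_stack` past the closing brace, which is the class of bug the borrow checker is there to reject.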

Why were people in the kernel having fake arguments over stupid bs related to being expected to add your new language?

-

Often when I see the annoying-as-hell-to-debug, exceptionless, let’s-just-bomb-entirely but blazing fastness of C and C++, I feel like a nettard

I use C# for its immutable strings, clean syntax, beautiful class markers that are redundant compared to C++ but ensure you can still tell what is what after adding 1000 methods, total lack of special characters to indicate reference and dereference, and pretty lambda syntax... sure it’s lib poor but I get shit done goddamn it and can read my own code later

So why do I feel empty inside every time i run a ./configure and make under Linux like I’m missing some secret party where neat things are being done and want to sob like I do now

I am not a dotnettard even though 5.0 is an abomination in the eyes of man and god ! Even though Microsoft cooks up overcomplex framework technologies that make a wonderful language underused and make us all look like idiots that they then abandon into the scrap heap! We can’t help Linux users haven’t discovered how much nicer c# is and decided to implement it on their own and port their horrible undocumented ansi c bullshit can we ???? Oh god I feel

So hollow inside and betrayed ! Curse

You gates curse youuuu! Curse you for metro direct3d xna wpf then false promises of core ! May you have a special place in hell reserved for you and your cheap wallpaper shifting monitor paintings and a pool speaker that playeth not but bee jees and ac dc forever and ever amen !

Speaking of which do any c/c++ ides have anything that even begins to rival intellisense on Linux and don’t use some weird ass build system

Like cmake as their default ?

Oh sweet memories of time a while back when I already wrote this and still wasn’t getting the tail I deserved

Again4 -

I literally cried to the Lord while reading the Rego language reference. Man, that thing is HARD to grasp.

After a while I just gave up and switched to Windows (wanted to play) but Windows needed to apply an update, thus I decided to reboot and while GRUB was counting down I decided to turn back to Arch and give it a try.

I was heard, now these damn policies work and the Go code does not panic anymore.1 -

Sydochen has posted a rant where he isn't really sure why people hate Java, and I decided to publicly post my explanation of this phenomenon, from my point of view.

So there is this quite large domain, on which one or two academic studies are built, such as business informatics and applied system engineering which I find extremely interesting and fun, that is called, ironically, SAD. And then there are videos on youtube, by programmers who just can't settle the fuck down. Those videos I am talking about are rants about OOP in general, which, as we all know, is a huge part of studies in the aforementioned domain. What are these people even talking about?

Obviously, there is no sense in building software in a linear pattern. Since Bikelsoft has conveniently patched consumers up with GUI based software, the core concept of which is EDP (event driven programming or alternatively, at least OS events queue-ing), a completely functional, linear approach in such an environment does not make much sense in terms of the maintainability of the software. Uhm, raise your hand if you ever tried to linearly build a complex GUI system in a single function call on GTK, which does allow you to disregard any responsibility separation pattern of SAD, such as the long loved MVC...

Additionally, OOP is mandatory in business because it does allow us to mount abstraction levels and encapsulate actual dataflow behind them, which, of course, lowers the costs of the development.

What happy programmers are talking about usually is the complexity of the task of doing the OOP right in the sense of an overflow of straight composition classes (that do nothing but forward data from lower to upper abstraction levels and vice versa) and the situation of responsibility chain break (this is when a class from a lower level directly!! notifies a class of a higher level about something, ignoring the fact that there is a chain of other classes between them). And that's it. These guys also do vouch for functional programming, and it's a completely different argument, and there is no reason not to do it in the algorithmic, implementation part of the project, of course, but yeah...

So where does Java kick in you think?

Well, guess what language popularized programming in general and OOP in particular. Java is doing a lot of things in a modern way. Of course, if it's 1995 outside *lenny face*. Yeah, fuck AOT, fuck memory management responsibility, all to the maximum towards solving the real applicative tasks.

Have you ever tried to learn to apply Text Watchers in Android with Java? Then you know about inline overloading and inline abstract class implementation. This is not right. This reduces readability and reusability.

Have you ever used Volley on Android? Newbies to Android programming surely should have. Quite verbose boilerplate in google docs, huh?

Have you seen intents? The Android API is, to put it mildly, messy with all the support libs and Context class ancestors. Remember how many times the language has helped you to properly orient in all of this hierarchy, when overloading method declaration requires you to use 2 lines instead of 1. Too verbose, too hesitant, distracting - that's what the lang and the api are. Fucking toString() is hilarious. Reference comparison is unintuitive. Obviously poor practices are not banned. Ancient tools. Import hell. Slow evolution.

C# has ripped Java off like an utter cunt, yet it's a piece of cake to maintain a solid patternization and structure, and keep your code clean and readable. C# 6 was already okay, featuring optionally nullable fields and safe optional dereferencing, while we finally got lambda expressions in J8, in 20-fucking-14.

Java did good back then, but when we joke about dumb indian developers, they are coding it in Java. So yeah.

To sum up, it's easy to make code unreadable with Java, and Java is a tool with which developers usually disregard the patterns of SAD. -

During my short tenure as the lead mobile developer for a logistics company I had to manage my stacks between native Android applications in Java and native apps for iOS.

Back then, Swift was barely coming into version 3 and as such the transition was not trustworthy enough for me to discard Obj C. So I went with Obj C and kept my knowledge of Swift in the back. It was not difficult since I had always liked Obj C for some reason. The language was what made me click with pointers and understand them well enough to feel more comfortable with C, since Obj C is a strict superset of it. It was enjoyable really and making apps for iOS made me appreciate the ecosystem that much better and realize the level of dedication that the engineering team at Apple put into their compilation protocols. It was my first exposure to ARC (Automatic Reference Counting) as a "form" of garbage collection per se. The tooling in particular was nice, normally with Xcode you have a 50/50 chance of it being great or shit. For me it was a mixture of both really, but the number of crashes or unexpected behavior was FAR lower than what I had in Android back when we still used Eclipse and even when we started to use Android Studio.

Developing iOS apps was also what made me see why iOS apps have that distinctive shine and why their phones required less memory (RAM). It was a pleasant experience.

The whole ordeal also left me with a bad taste for Android development. Don't get me wrong, I love my Android phones. But I firmly believe that unless you pay top dollar to an Android manufacturer such as Samsung, Motorola or LG then you will have lag galore. And man.....everyone that would try to prove me wrong always had to make excuses later on (no, your $200-$300 Android device just didn't cut it my dude)

It really sucks sometimes for Android development. I want to know what Google got so wrong that they made the decisions they made in order to make people design other tools such as React Native, Cordova, Ionic, PhoneGap, Titanium, Xamarin (which is shit imo), Codename One and many others. With iOS I never considered going for something different than Native since the API just seemed so well designed and far superior to me from an architectural point of view.

Fast forward to 2018 (almost 2019) and Google has had talks about Flutter for a while, and they make it seem that they are fixing how they want people to design apps.

You see. I firmly believe that tech stacks work in 2 ways:

1 people love a stack so much they start to develop cool ADDITIONS to it(see the awesomeios repo) to expand on the standard libraries

2 people start to FIX a stack because the implementation is broken, lacking in functionality, hard to use by itself: see okhttp, legit all the Square libs, butterknife etc etc etc and etc

From this I can conclude 2 things: people love developing for iOS because the ecosystem is nice and dev friendly, and people like to develop for Android in spite of how Google manages their API. Seriously Android is a great OS and having apps that work awesomely in spite of how hard it is to create applications for said platform just shows a level of love and dedication that is unmatched.

This is why I find it hard, and even mean, to call out one product over the other. Despite the morals behind the 2 leading companies inferred from my post, the developers are what make the situation better or worse.

So just fuck it and develop and use for what you want.

Honorable mention to PHP and the php developer community which is a mixture of fixing and adding in spite of the amount of hatred that such coolness gets from a lot of peeps :P

Oh and I got a couple of mobile contracts in the way, this is why I made this post.

And I still hate developing for Android even though I love Java.3 -

Is there anything like React Context or Unix envvars in any functional language?

Not global mutable state, but variables with a global identity that I can set to a value for the duration of a function call to influence the behavior of all deeply nested functions that reference the same variable without having to acknowledge them.11 -
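What is being described here is usually called dynamic scoping (Common Lisp special variables, Clojure's `binding`, and Haskell's `Reader` with `local` are the usual functional-language takes on it). As a rough sketch of the behaviour only, written in Python rather than a functional language, and with `verbosity`, `bound`, `leaf` and `middle` all being hypothetical names made up for the illustration:

```python
import contextvars
from contextlib import contextmanager

# Hypothetical variable with a global identity but no global mutable value:
# each binding only lasts for the duration of one call.
verbosity = contextvars.ContextVar("verbosity", default="quiet")


@contextmanager
def bound(var, value):
    """Bind `var` to `value` for the duration of the with-block, then restore it."""
    token = var.set(value)
    try:
        yield
    finally:
        var.reset(token)


def leaf():
    # Deeply nested code reads the variable directly.
    return f"leaf sees {verbosity.get()}"


def middle():
    # Intermediate code never mentions the variable at all.
    return leaf()


def run():
    with bound(verbosity, "loud"):
        inside = middle()
    outside = middle()  # the binding is gone once the block exits
    return inside, outside
```

Calling `run()` gives one result from inside the binding and one from outside it, without `middle` ever having to acknowledge the variable.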

The number of concurrent transformations impacting more than half of the codebase in Orchid surpassed 4, so instead of walking the reference graph for each of these I'm updating the whole codebase, from lexer to runtime, in a single pass.

In this process, I also got to reread a lot of code from a year ago. This is the project I learned Rust with. It's incredible, not just how much better I've gotten at this language, but also how much better I've gotten at structuring code in general.

Interestingly though my problem-solving ability seems to be the same. I can tell this by looking at the utilities I made to solve specific well-defined abstract problems. I may have superficial issues with how the code is spelled out in text, but the logic itself is as good as anything I could come up with today.2 -

Great practice/skill sharpening idea for my fellow mad dogs that like to get down in multiple languages/syntaxes:

Pick something simple that won't cause too much stress, but will make you sweat a little bit and put up a good fight, ha!!!

For example, I picked the classic "Caesar Cipher" and picked 5 languages to create it in! I picked Dart, Java, Python, CPP, and C. Each version does the same thing:

1. Asks for a message

2. Runs the logic

3. Prints the message cipher.

4. To decrypt, you just run the same program again and enter the cipher text at the message input prompt. The message gets deciphered using the same logic and shows up as the original text.
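For anyone who wants a starting point, here is a minimal Python sketch of the four steps above. It is not the original code from this post: the shift of 13 is an assumption (it is the one shift where running the same logic twice gives back the original text), and the names `caesar` and `main` are made up for the example.

```python
import string

SHIFT = 13  # assumption: with 26 letters, shifting by 13 twice is a full circle


def caesar(message: str, shift: int = SHIFT) -> str:
    """Shift every ASCII letter by `shift` places; leave everything else alone."""
    lower = string.ascii_lowercase
    upper = string.ascii_uppercase
    table = str.maketrans(
        lower + upper,
        lower[shift:] + lower[:shift] + upper[shift:] + upper[:shift],
    )
    return message.translate(table)


def main() -> None:
    # Steps 1-3: ask for a message, run the logic, print the cipher text.
    print(caesar(input("Message: ")))
```

Calling `main()` and typing the cipher text back in at the prompt covers step 4, since `caesar(caesar(text))` returns `text` when the shift is 13.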

The kicker:

Only dox/books allowed for reference. Otherwise it wouldn't push you to get better!!!

Python, C, and CPP were EASY, even with me never having used C before. I am great at using Dart, and that one really challenged me for some reason, but I finally got it. The previous 3 langs took less than 40 lines of code each (with Python being only 18 I believe). Dart actually took somewhere around 50, and Java took about 371784784. (Much love to Java though for real!)

Kinda boring as shit, but I gotta tell you it felt fuckin GREAT to look at all 5 of those programs after completing them, no matter how barbaric... especially when you complete 1 or 2 in a language you've never used or maybe felt really challenged by. Simple exercises that hold a lot of important, relatable logic no matter the subject are our lifeblood!!!9 -

I have a dream that one day whenever you pass / assign / append an object you can choose to pass by value or reference, regardless of the object being a primitive or a container (list, vector etc.) object

So I could stop wasting my time and banging my head on my desk over the dumb problems this shit induces because language designers found making lists be passed by reference fun

I know such behavior is inherited from C's logic, and I don't give a fuck about any further explanation I might already know. Just because it can be explained doesn't mean it's logical.

You give the choice to pass by value or reference for every object the same way or you do not at all, but no mixed shit.
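The post doesn't name a language, so assuming Python here (where a list argument is the same object the caller holds), this is roughly what the problem and the manual workaround look like. `add_item` is a hypothetical helper, and `copy.copy` / `copy.deepcopy` are the standard library's way of asking for a value-like copy:

```python
import copy


def add_item(items, item):
    """Append to whatever list object the caller handed over."""
    items.append(item)
    return items


# Passing the list itself: the caller's list changes.
original = [1, 2, 3]
add_item(original, 4)

# Passing a copy: the caller's list is left alone.
kept = [1, 2, 3]
add_item(copy.copy(kept), 4)

# A shallow copy still shares nested lists; only a deep copy is fully independent.
nested = [[1], [2]]
shallow = copy.copy(nested)
deep = copy.deepcopy(nested)
shallow[0].append(99)  # also visible through `nested`
deep[1].append(77)     # not visible through `nested`
```

So the choice does exist, but it has to be spelled out at every call site instead of being part of the function signature, which is pretty much the complaint.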

Just, shut up and make it happen.4 -

Which language should I learn first? I have been trying to learn js and sorta have some basic stuff down like how to store and reference variables and how to display outputs, but aside from that I don’t really know much else. I was wondering if I should continue with js or switch to python or c#, my classmates have been talking about python being the future so idk.15

-