Search - "memory error"

-

Being paid to rewrite someone else's bad code is no joke.

I'll give the dev this, the use of gen 1,2,3 Pokemon for variable names and class names is beyond fantastic in terms of memory and childhood nostalgia. It would be even more fantastic if he spelt the names correctly, or used it to make a Pokemon game and NOT A FUCKING ACCOUNTANCY PROGRAM.

There's no correspondence in name according to type, or even number. Dev has just gone batshit, left zero comments, and now somehow Ryhorn is shitting out error codes because of errors existing in Charmeleon's asshole.

The things I do for money...

-

The stupid stories of how I was able to break my school's network just to get better internet, as well as more ridiculous fun. XD

1st year:

It was my freshman year in college. The internet sucked really, really, really badly! Too many people were clearly using it. I had to find another way to remedy this. Upon some further research through Google I found out that one can in fact turn their computer into a router. Now what's interesting about this network is that it only works with computers that have downloaded the necessary software the network provides for you. Some weird software that actually looks through your computer and makes sure it's ok to be added to the network. Unfortunately, routers can't download and install that software, thus no internet… but a PC that can be changed into a router itself is a different story. I found that I could download the software, let it check the PC, and then turn on my router feature. Voilà, personal fast internet connected directly into the wall. No more sharing a single shitty router!

2nd year:

This was about the year when bitcoin mining was becoming a thing, and everyone was in on it. My shitty computer couldn't possibly pull off mining for bitcoins. I needed something faster. How I found out that I could use my school's servers was merely an accident.

I had been installing the software on every possible PC I owned, but alas all my PCs were just not fast enough. I decided to try it on the RDS server. It worked; the command window was pumping out coins! What I came to find out was that the RDS server had 36 cores. This thing was a beast! And it made sense that it could actually pull off mining for bitcoins. A couple nights later I signed in remotely to the RDS server. I created a macro that would continuously move my mouse around in the Remote Desktop screen to keep my session alive at all times, and then I started my bitcoin mining operation. The following morning I woke up and my session was gone. How sad, I thought. I quickly tried to remote back in to see what I had collected. "Error, could not connect". Weird… this usually never happens, maybe I did the remoting wrong. I went to my school's website to do some research on my remoting problem. It was down. In fact, everything was down… I came to find out that I had accidentally shut down the school's network because of my mining operation. I wasn't found out, but I haven't done any mining since then.

3rd year:

As an engineering student I found out that all engineering students get access to the school's VPN. Cool, it is technically used to get around some wonky issues with remoting into the RDS servers. What I came to find out, after messing around with it frequently, is that I can actually use the VPN against the screwed up security on the network. Remember how I told you that a program has to be downloaded before one can be accepted into the network? Well, I was able to bypass all of that, simply by using the school's VPN against itself… How dense does one have to be to not have patched that one?

4th year:

It was another programming day, and I needed access to my phone's memory. Using some specially made apps I could easily connect to my phone from my computer and continue my work. But what I found out was that I could, in fact, access my phone through the network from anywhere. What resulted was the discovery that the network spans the entirety of the school. If I left my phone down in the engineering building and then went north to the biology building, I could still continue to access it. This seems like a very fatal flaw. My idea is to hook up a webcam to a robot, remotely control it from the RDS servers, and have this little robot go to my classes for me.

What crazy shit have you done at your University?

-

Every single one of them, and every one that will come after them.

Google, it started out as 2 people in their garage, wanting to make a search engine that was better than the others. Nothing else, nothing evil. Just make the world a little bit better. And look what it's become now. A megacorporation with little to no regards for their user base. Because who cares about users anyway?

Microsoft, it started out with Bill Gates - young high school computer nerd - who wanted to make an operating system for the world to use. Something that's better than the competition. And boy did he do so. Well "better than the competition" aside, he did make it for the world to use. And the world adopted it. And look what it's become now. A megacorporation with little to no regards for their user base. Because who cares about users anyway?

See where I'm going here?

Apple, it started out with Steve Jobs and Steve Wozniak in their garage, just like Google did, wanting to make hardware that was better than the others. Nothing else, nothing evil. Just to make the world a little bit better. And look what it's become now. Planned obsolescence has been baked into it, just like it is in every other piece of technology. Quality control and thinking through the design has become a thing of the past. User choice, yeah who cares about that.

Samsung, it started out back in 1938 actually, and I don't really remember the details of it.. ColdFusion has a video on it if memory serves me right. Do watch it if you're interested. Anyway, just like all the others they started out as a company which wanted to make the world a little bit better. And damn right did they do so.. initially. Look what they've become now. Forcing their stupid TouchWiz UI upon their customers (or products?), a Bixby button that can't even be reprogrammed.. and the latest thing.. Knox, advertised as a security feature, but as everyone who likes rooting their devices and mucking with them knows, it is an anti-feature that only serves for lockdown. Why shouldn't you be able to turn in a phone for RMA when a hardware error occurs, when all you've personally modified is the software? Why should changing the software blow that eFuse, so that you can be sure that you can't replace it without specialized equipment and a very steady hand?

I could go on and on forever about more of the tech giants out there, but I feel like this suffices for now. Otherwise I won't have anything else left for future rants! But one thing I know for sure. Every tech company started, starts, and will start out with a desire to make the world a better place, and once they gain a significant customer base, they will without exception turn into the same kind of Evil Megacorp., just like the ones before them. Some may say that capitalism itself is to blame for this, the greed for more when you already have a lot. Who knows? I'd rather say that the very human nature itself is to blame for it. We're by design greedy beings, and I hate it. I hate being human for that. I don't want humans to be evil towards one another, and be greedy for ever more. But I guess that that's just the way it is, and some things do actually never change...

-

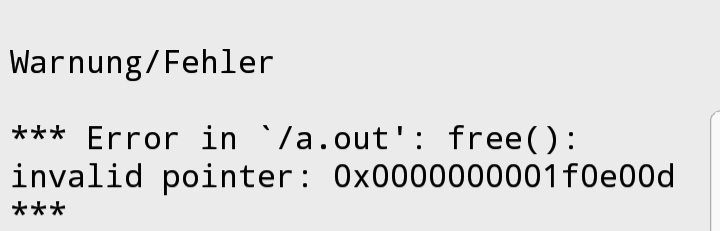

When valgrind (C memory allocation error detection tool) aborts due to a memory allocation error...

-

Okay, story time.

Back during 2016, I decided to do a little experiment to test the viability of multithreading in a JavaScript server stack, and I'm not talking about the Node.js way of queuing I/O on background threads, or about WebWorkers that box and convert your arguments to JSON and back during a simple call across two JS contexts.

I'm talking about JavaScript code running concurrently on all cores. I'm talking about replacing the god-awful single-threaded event loop of ECMAScript – the biggest bottleneck in software history – with an honest-to-god, lock-free thread-pool scheduler that executes JS code in parallel, on all cores.

I'm talking about concurrent access to shared mutable state – a big, rightfully-hated mess when done badly – in JavaScript.

This rant is about the many mistakes I made at the time, specifically the biggest – but not the first – of which: publishing some preliminary results very early on.

Every time I showed my work to a JavaScript developer, I'd get negative feedback. Like, unjustified hatred and immediate denial, or outright rejection of the entire concept. Some were even adamantly trying to discourage me from this project.

So I posted a sarcastic question to the Software Engineering Stack Exchange, which was originally worded differently to reflect my frustration, but was later edited by mods to be more serious.

You can see the responses for yourself here: https://goo.gl/poHKpK

Most of the serious answers were along the lines of "multithreading is hard". The top voted response started with this statement: "1) Multithreading is extremely hard, and unfortunately the way you've presented this idea so far implies you're severely underestimating how hard it is."

While I'll admit that my presentation was initially lacking, I later made an entire page to explain the synchronisation mechanism in place, and you can read more about it here, if you're interested:

http://nexusjs.com/architecture/

But what really shocked me was that I had never understood the mindset that all the naysayers adopted until I read that response.

Because the bottom-line of that entire response is an argument: an argument against change.

The average JavaScript developer doesn't want a multithreaded server platform for JavaScript because it means a change of the status quo.

And this is exactly why I started this project. I wanted a highly performant JavaScript platform for servers that's more suitable for real-time applications like transcoding, video streaming, and machine learning.

Nexus does not and will not hold your hand. It will not repeat Node's mistakes and give you nice ways to shoot yourself in the foot later, like `process.on('uncaughtException', ...)` for a catch-all global error handling solution.

No, an uncaught exception will be dealt with like any other self-respecting language: by not ignoring the problem and pretending it doesn't exist. If you write bad code, your program will crash, and you can't rectify a bug in your code by ignoring its presence entirely and using duct tape to scrape something together.

Back on the topic of multithreading, though. Multithreading is known to be hard, that's true. But how do you deal with a difficult solution? You simplify it and break it down, not just disregard it completely; because multithreading has its great advantages, too.

Like, how about we talk performance?

How about distributed algorithms that don't waste 40% of their computing power on agent communication and pointless overhead (like the serialisation/deserialisation of messages across the execution boundary for every single call)?

How about vertical scaling without forking the entire address space (and thus multiplying your application's memory consumption by the number of cores you wish to use)?

How about utilising logical CPUs to the fullest extent, and allowing them to execute JavaScript? Something that isn't even possible with the current model implemented by Node?

Some will say that the performance gains aren't worth the risk. That the possibility of race conditions and deadlocks isn't worth it.

That's the point of cooperative multithreading. It is a way to smartly work around these issues.

If you use promises, they will execute in parallel, to the best of the scheduler's abilities, and if you chain them then they will run consecutively as planned according to their dependency graph.

If your code doesn't access global variables or shared closure variables, or your promises only deal with their provided inputs without side-effects, then no contention will *ever* occur.

If you only read and never modify globals, no contention will ever occur.

Are you seeing the same trend I'm seeing?

Good JavaScript programming practices miraculously coincide with the best practices of thread-safety.
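A rough sketch of that claim, for anyone who wants it in concrete form. It is written in Python with a standard-library thread pool, not in Nexus (the rant doesn't show the Nexus API, so none of this is Nexus code), and `square`, `total`, and `run` are made-up names. The independent tasks only touch their own arguments, so the pool is free to run them on any worker, while the dependent step is submitted only once its inputs exist.

```python
from concurrent.futures import ThreadPoolExecutor


def square(n):
    # Pure task: reads only its argument and writes nothing shared.
    return n * n


def total(values):
    # Dependent task: needs every result from the stage before it.
    return sum(values)


def run(inputs):
    with ThreadPoolExecutor(max_workers=4) as pool:
        # Independent tasks: the pool may run these on any worker, in any order.
        # map() still hands the results back in input order.
        squares = list(pool.map(square, inputs))
        # Chained task: submitted only after its inputs are available.
        return pool.submit(total, squares).result()


if __name__ == "__main__":
    print(run([1, 2, 3, 4]))  # 30
```

Nothing in there needs a lock, because nothing shared is ever mutated. The moment `square` starts appending to a global list instead of returning a value, that guarantee is gone.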

When someone says we shouldn't use multithreading because it's hard, do you know what I like to say to that?

"To multithread, you need a pair."

-

What an absolute fucking disaster of a day. Strap in, folks; it's time for a bumpy ride!

I got a whole hour of work done today. The first hour of my morning because I went to work a bit early. Then people started complaining about Jenkins jobs failing on that one Jenkins server our team has been wanting to decom for two years but management won't let us force people to move to new servers. It's a single server with over four thousand projects, some of which run massive data processing jobs that last DAYS. The server was originally set up by people who have since quit, of course, and left it behind for my team to adopt with zero documentation.

Anyway, the 500GB disk is 100% full. The memory (all 64GB of it) is fully consumed by stuck jobs. We can't track down large old files to delete because du chokes on the workspace folder with thousands of subfolders and no RAM to spare. We decide to basically take a hacksaw to it, deleting the workspace for every job not currently in progress. This of course fucked up some really poorly-designed pipelines that relied on workspaces persisting between jobs, so we had to deal with complaints about that as well.

So we get the Jenkins server up and running again just in time for AWS to have a major incident affecting EC2 instance provisioning in our primary region. People keep bugging me to fix it, I keep telling them that it's Amazon's problem to solve, they wait a few minutes and ask me to fix it again. Emails flying back and forth until that was done.

Lunch time already. But the fun isn't over yet!

I get back to my desk to find out that new hires or people who got new Mac laptops recently can't even install our toolchain, because management has started handing out M1 Macs without telling us and all our tools are compiled solely for x86_64. That took some troubleshooting to even figure out what the problem was because the only error people got from homebrew was that the formula was empty when it clearly wasn't.

After figuring out that problem (but not fully solving it yet), one team starts complaining to us about a GitHub problem because we manage the GitHub org. Except it's not a GitHub problem and I already knew this because they are a Problem Team that uses some technical authoring software with Git integration but they only have even the barest understanding of what Git actually does. Turns out it's a Git problem. An update for Git was pushed out recently that patches a big bad vulnerability and the way it was patched causes problems because they're using Git wrong (multiple users accessing the same local repo on a Samba share). It's a huge vulnerability so my entire conversation with them went sort of like:

"Please don't."

"We have to."

"Fine, here's a workaround, this will allow arbitrary code execution by anyone with physical or virtual access to this computer that you have sitting in an unlocked office somewhere."

"How do I run a Git command I don't use Git."

So that dealt with, I start taking a look at our toolchain, trying to figure out if I can easily just cross-compile it to arm64 for the M1 macbooks or if it will be a more involved fix. And I find all kinds of horrendous shit left behind by the people who wrote the tools that, naturally, they left for us to adopt when they quit over a year ago. I'm talking entire functions in a tool used by hundreds of people that were put in as a joke, poorly documented functions I am still trying to puzzle out, and exactly zero comments in the code and abbreviated function names like "gars", "snh", and "jgajawwawstai".

While I'm looking into that, the person from our team who is responsible for incident communication finally gets the AWS EC2 provisioning issue reported to IT Operations, who sent out an alert to affected users that should have gone out hours earlier.

Meanwhile, according to the health dashboard in AWS, the issue had already been resolved three hours before the communication went out and the ticket remains open at this moment, as far as I know.

-

I could bitch about XSLT again, as that was certainly painful, but that’s less about learning a skill and more about understanding someone else’s mental diarrhea, so let me pick something else.

My most painful learning experience was probably pointers, but not pointers in the usual sense of `char *ptr` in C and how they're totally confusing at first. I mean, it was that too, but in addition it was how I had absolutely none of the background needed to understand them, not having any learning material (nor guidance), nor even a typical compiler to tell me what I was doing wrong, and on top of all of that, only being able to run code on a device that would crash/halt/freak out whenever I made a mistake. It was an absolute nightmare.

Here’s the story:

Someone gave me the game RACE for my TI-83 calculator, but it turned out to be an unlocked version, which means I could edit it and see the code. I discovered this later on by accident while trying to play it during class, and when I looked at it, all I saw was incomprehensible garbage. I closed it, and the game no longer worked. Looking back I must have changed something, but then I thought it was just magic. It took me a long time to get curious enough to look at it again.

But in the meantime, I ended up playing with these "programs" a little, and made some really simple ones, and later some somewhat complex ones. So the next time I opened RACE I kind of understood what it was doing.

Moving on, I spent a year learning TI-Basic, and eventually reached the limit of what it could do. Along the way, I learned that all of the really amazing games/utilities that were incredibly fast, had greyscale graphics, lowercase text, no runtime indicator, etc. were written in “Assembly,” so naturally I wanted to use that, too.

I had no idea what it was, but it was the obvious next step for me, so I started teaching myself. It was z80 Assembly, and there were practically no documents, resources, nothing helpful online.

I found the specs, and a few terrible docs and other sources, but with only one year of programming experience, I didn’t really understand what they were telling me. This was before stackoverflow, etc., too, so what little help I found was mostly from forum posts, IRC (mostly got ignored or made fun of), and reading other people’s source when I could find it. And usually that was less than clear.

And here's where we dive into the specifics. Starting with so little experience, and in TI-Basic of all things, meant I had zero understanding of pointers, memory and addresses, the stack, heap, data structures, interrupts, clocks, etc. I had mastered everything TI-Basic offered, which astoundingly included arrays and matrices (six of each), but it hid everything else except basic logic and flow control. (No, there weren't even functions; it has labels and goto.) It has 27 numeric variables (A-Z and theta, can store either float or complex numbers), 8 Lists (numeric arrays), 6 matrices (2d numeric arrays), 10 strings, and a few other things like "equations" and literal bitmap pictures.

Soo… I went from knowing only that to learning pointers. And pointer math. And data structures. And pointers to pointers, and the stack, and function calls, and all that goodness. And remember, I was learning and writing all of this in plain Assembly, in notepad (or on paper at school), not in C or C++ with a teacher, a textbook, SO, and an intelligent compiler with its incredibly helpful type checking and warnings. Just raw trial and error. I learned what I could from whatever cryptic sources I could find (and understand) online, and applied it.

But actually using what I learned? If a pointer was wrong, it resulted in unexpected behavior, memory corruption, freezes, etc. I didn’t have a debugger, an emulator, etc. I had notepad, the barebones compiler, and my calculator.

Also, iterating meant changing my code, recompiling, factory resetting my calculator (removing the battery for 30+ sec) because bugs usually froze it or corrupted something, then transferring the new program over, and finally running it. It was soo slowwwww. But I made steady progress.

Painful learning experience? Check.

Pointer hell? Absolutely.

-

Had nothing to do today, so I thought I'd test the migration of SVN to Git in Gitlab.

Boss sent me a mail today, that when I migrate we need to preserve the history, so I actually have to put some effort in it. *sigh*

Shout-out to the Gitlab documentation at this point.

That's probably the best doc I've ever read...

Well so I tried to use svn2git. And well...

Who the fuck thought that this piece of shit software is in any way usable?

Holy crap!

If it fails, it just does so without any info why. Even in verbose mode.

And the RAM usage? What the actual fuck?

This whole thing is a complete memory leak!

32 gigs of RAM full in minutes and the whole system starts to stall!

And then when I thought it finally runs through.

Bam another git checkout error...

Googling for that error, I then found something. A version of svn2git made in .NET Core.

Didn't expect much but I tried it anyways.

And would you look at that!

It ran so smoothly and needed so little RAM that I had some doubt it had worked correctly.

But it did!

I think I'm gonna pay a coffee or two to some guy over in China now!

-

Ok friends let's try to compile Flownet2 with Torch. It's made by NVIDIA themselves so there won't be any problem at all with dependencies right?????? /s

Let's use Deep Learning AMI with a K80 on AWS, totally updated and ready to go super great always works with everything else.

> CUDA error

> CuDNN version mismatch

> CUDA versions overwrite

> Library paths not updated ever

> Torch 0.4.1 doesn't work so have to go back to Torch 0.4

> Flownet doesn't compile, get bunch of CUDA errors piece of shit code

> online forums have lots of questions and 0 answers

> Decide to skip straight to vid2vid

> More cuda errors

> Can't compile the fucking 2d kernel

> Through some act of God reinstalling cuda and CuDNN, manage to finally compile Flownet2

> Try running

> "Kernel image" error

> excusemewhatthefuck.jpg

> Try without a label map because fuck it the instructions and flags they gave are basically guaranteed not to work, it's fucking Nvidia amirite

> Enormous fucking CUDA error and Torch error, makes no sense, online no one agrees and 0 answers again

> Try again but this time on a clean machine

> Still no go

> Last resort, use the docker image they themselves provided of flownet

> Same fucking error

> While in the process of debugging, realize my training image set is also bound to have bad results because "directly concatenating" images together as they claim in the paper actually has horrible results, and the network doesn't accept 6 channel input no matter what, so the only way to get around this is to make 2 images (3 * 2 = 6 quick maths)

> Fix my training data, fuck Nvidia dude who gave me wrong info

> Try again

> Same fucking errors

> Doesn't give any helpful information, just spits out a bunch of fucking memory addresses and long function names from the CUDA core

> Try reinstalling and then making a basic torch network, works perfectly fine

> FINALLY.png

> Setup vid2vid and flownet again

> SAME FUCKING ERROR

> Try to build the entire network in tensorflow

> CUDA error

> CuDNN version mismatch

> Doesn't work with TF

> HAVE TO FUCKING DOWNGRADE DRIVERS TOO

> TF doesn't support latest cuda because no one in the ML community can be bothered to support anything other than their own machine

> After setting up everything again, realize have no space left on 75gb machine

> Try torch again, hoping that the entire change will fix things

At this point I'll leave a space so you can try to guess what happened next before seeing the result.

Ready?

3

2

1

> SAME FUCKING ERROR

In conclusion, NVIDIA is a fucking piece of shit that can't make their own libraries compatible with themselves, and can't be fucked to write instructions that actually work.

If anyone has vid2vid working or has gotten around the kernel image error for AWS K80s please throw me a lifeline, in exchange you can have my soul or what little is left of it

-

I dive in head first.

Some existing program annoys me, so I get this itch to write a selfhosted Spotify in Go, or a conky with 3D graphics in Rust.

I check the homepage of the language, download the tools, check which IDE is great for it.

Then I just start writing code, following the error corrections thrown by the IDE, doing web searches for all errors. Then when I run into a wall, I might check the reference docs or a udemy course.

Often I don't finish the project, because time is limited and I still have 4 million other things to do and learn, but at least I've learned a new language/tech.

Con: For tech which uses unique paradigms like Rust's memory management or Go's Goroutines, it can be frustrating to bash away at a problem using old assumptions.

Pro: By having a real demand for a product with requirements instead of a hello world or todo app, it's much easier to stay motivated, and you learn beyond what courses would teach you.

-

Friend: why do I get this error help

[I check the logs]

Me: uh, it's an OOM, did you allocate enough memory for GC?

Friend: wait hold on

[changes a method]

[works]

Friend: I shouldn't use this experimental method

Me: Cool man blog it

-

I propose that the study of Rust and therefore the application of said programming language and all of the technology that comprises it should be made because the language is actually really fucking good. Reading and studying how it manages to manipulate and otherwise use memory without a garbage collector is something to be admired, illuminating in its own accord.

BUT going for it because it is a "beTter C++" should not constitute a basis for its study.

Let me expand through anecdotal evidence, which is really not to be taken seriously, but is at the same time what I am using for my reasoning behind this. Please feel free to correct me if I am wrong, for I am a software engineer yes, I do have academic training through a B.S in Computer Science yes, BUT my professional life has been solely dedicated to web development, which admittedly I do not go on about technical details of with you all because: (1) I am not allowed to and (2) it is better for me to bitch and shit over other petty development related details.

Anecdotal and otherwise non statistically supported evidence: I have seen many motherfuckers doing shit in both C and C++ that ADMIT not covering their mistakes through the use of a debugger. Mostly because (A) using a debugger and proper IDE is for pendejos and debugging is for putos GDB is too hard and the VS IDE is waaaaaa "I onlLy NeeD Vim" and (B) "If an error would have registered then it would not have compiled no?", thus giving me the idea that the most common occurrences of issues through the use of the C father/son languages come from user error, non formal training in the language and a nice cusp of "fuck it it runs" while leaving all sorts of issues that come from manipulating the realm of the Gods "memory".

EVERY manual, book, coming all the way back to the K&R book talks about memory and the way in which developers of these 2 languages are able to manipulate and work on it. EVERY new ISO standard of these languages deals, through community effort or standard documentation, with new features concerning MODERN practice (meaning, no, the shit you learned 20 years ago won't fucking cut it).

THUS if your ass is not constantly checking what the scalpel of electrical/circuitry/computational representation of algorithms CONDONES in what you are doing then YOU are the fucking problem.

Rust is thus no different from the original ideas of the developers behind Go when stating that their developers are not efficient enough to deal with X language, Rust protects you, because it knows that you are a fucking moron, so the compiler, advanced, and well made as it is, will give you warnings of your own idiotic tendencies, which would not have been required have you not been.....well....a fucking idiot.

Rust is a good language, but I feel one that came out from the necessity of people writing system level software as a bunch of fucking morons.

This speaks a lot more of our academic endeavors and current documentation than anything else. But to me DEALING with the idea of adapting Rust as a better C++ should come from a different point of view.

Do I agree with Linus's point of view of C++? fuck no, I do not, he is a kernel engineer, a damn good one at that regardless of what Dr. Tanenbaum believes(ed) but not everyone writes kernels, and sometimes that everyone requires OOP and additions to the language that they use. Else I would be a fucking moron for dabbling in the dictionary of languages that I use professionally.

BUT in terms of C++ being unsafe and unsecured and a horrible alternative to Rust I personally do not believe so. I see it as a powerful white canvas, in which you are able to paint software to the best of your ability WHICH then requires thorough scrutiny from the entire team. NOT a quick replacement for something that protects you from your own stupidity BY impeding the use of what are otherwise unknown "safe" features.

To be clear: I am not diminishing Rust as the powerhouse of a language that it is, myself I am quite invested in the language. But I do not see the reason/need for articles claiming it as the C++ killer.

I am currently heavily invested in C++ since I am trying a lot of different things for a lot of projects, and have been able to discern multiple pain points and unsafe features. Mainly the reason for this is documentation (your mother knows C++) and tooling, ide support, debugging operations, plethora of resources come from it and I have been able to push out to my secret project a lot of good dealings. WHICH I will eventually replicate with Rust to see the main differences.

Online articles stating that one will delimit or otherwise kill the other are, well....wrong to me. And not the proper approach.

Anyways, I like big tits and small waists.

-

Rant:

I am at work, and someone says to me that the system we are working on is multithreaded. I tell them no, it's not multithreaded, and in this context things cannot happen concurrently: it's a single-core ARM7TDMI. Arguments ensue about the difference between multithreading, multitasking and multiprocessing. I proceed to explain that this is a multitasking, interrupt-driven system with no context switching or memory segmentation, so there is one heap for all tasks, because that's how we have it configured, and there is only one core. So there is no way the error he just described could possibly happen. Then he tells me I'm wrong, but refuses to even look at the processor manual and rejects the Wikipedia entry for multithreading. So I plan on calling off so I can just have the next two weeks off while he tries to figure out why two things are happening at once on this system. He deserves all the frustration that is to follow.

-

In college we had programming labs where we had to use the school's Unix server to compile and run.

My professor was very bad at explaining what actually needed to be done in the labs to the point where even the TAs didn't know what to do.

We were supposed to write an application in C to find out by "trial and error" how large we could make an array (or something like that, it's been too long). This not being explained well and no one knowing that much about C, I wrote a loop that just kept growing an array until it couldn't anymore. I watched it consume 72GB of memory from the servers before quitting the loop and realizing with the TA what the professor really meant.

I now feel bad for the IT staff monitoring the system wondering where 72GB just went... -

MTP is utter garbage and belongs to the technological hall of shame.

MTP (media transfer protocol, or, more accurately, MOST TERRIBLE PROTOCOL) sometimes spontaneously stops responding, causing Windows Explorer to show its green placebo progress bar inside the file path bar which never reaches the end, and sometimes to whiningly show "(not responding)" with that white layer of mist fading in. Sometimes it lists files' dates as 1970-01-01 (which is the Unix epoch), and sometimes it shows former names of folders prior to being renamed, even after refreshing. I refer to them as "ghost folders". As is well known, large directories load extremely slowly in MTP. A directory listing with one thousand files could take well over a minute to load. On mass storage and FTP? Three seconds at most. Sometimes, new files are not even listed until rebooting the smartphone!

Arguably, MTP "has" no bugs. It IS a bug. There is so much more wrong with it that it does not even fit into one post. Therefore it has to be expanded into the comments.

When moving files within an MTP device, MTP does not directly move the selected files, but creates a copy and then deletes the source file, causing both needless wear on the mobile device's flash memory and the loss of files' original date and time attribute. Sometimes, the simple act of renaming a file causes Windows Explorer to stop responding until unplugging the MTP device. It actually once unfroze after more than half an hour where I did something else in the meantime, but come on, who likes to wait that long? Thankfully, this has not happened to me on Linux file managers such as Nemo yet.

When moving files out using MTP, Windows Explorer does not move and delete each selected file individually, but only deletes the whole selection after finishing the transfer. This means that if the process crashes, no space has been freed on the MTP device (usually a smartphone), and one will have to carefully sort out a mess of duplicates. Linux file managers thankfully delete the source files individually.
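To make the difference concrete, here is a minimal Python sketch of the "delete each source file as soon as its copy is done" approach. It works on ordinary file system paths, not on a real MTP device, and the function name `move_out_individually` is made up for this example. If the process dies halfway, everything already transferred has been freed on the source and nothing is duplicated.

```python
import shutil
from pathlib import Path

def move_out_individually(sources, target_dir):
    """Copy each file, check it, then delete that one source right away."""
    target_dir = Path(target_dir)
    target_dir.mkdir(parents=True, exist_ok=True)
    moved = []
    for src in map(Path, sources):
        dst = target_dir / src.name
        shutil.copy2(src, dst)  # copy2 also tries to preserve timestamps
        if dst.stat().st_size != src.stat().st_size:
            raise IOError(f"size mismatch for {src.name}, source kept")
        src.unlink()            # free the space now, not after the whole batch
        moved.append(dst)
    return moved
```

The size check is a cheap sanity test only; a checksum comparison would be stricter but slower.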

Also, for each file transferred from an MTP device onto a mass storage device, Windows has the strange behaviour of briefly creating a file on the target device with the size of the entire selection. It does not actually write that amount of data for each file, since it couldn't do so in this short time, but the current file is listed with that size in Windows Explorer. You can test this by refreshing the target directory shortly after starting a file transfer of multiple selected files originating from an MTP device. For example, when copying or moving out 01.MP4 to 10.MP4, while 01.MP4 is being written, it is listed with the file size of all 01.MP4 to 10.MP4 combined, on the target device, and the file actually exists with that size on the file system for a brief moment. The same happens with each file of the selection. This means that the target device needs almost twice the free space as the selection of files on the source MTP device to be able to accept the incoming files, since the last file, 10.MP4 in this example, temporarily has the total size of 01.MP4 to 10.MP4. This strange behaviour has been on Windows since at least Windows 7, presumably since Microsoft implemented MTP, and has still not been changed. Perhaps the goal is to reserve space on the target device? However, it reserves far too much space.

When transferring from MTP to a UDF file system, sometimes it fails to transfer ZIP files, and only copies the first few bytes. 208 or 74 bytes in my testing.

When transferring several thousand files, Windows Explorer also sometimes decides to quit and restart in the midst of the transfer. Also, I sometimes move files out by loading a part of the directory listing in Windows Explorer and then hitting "Esc" because it would take too long to load the entire directory listing. It actually once assigned the wrong file names, which I noticed since file naming conflicts would occur where the source and target files with the same names would have different sizes and time stamps. Both files were intact, but the target file had the name of a different file. You'd think they would figure something like this out after two decades, but no. On Linux, the MTP directory listing is only shown after it is loaded in entirety. However, if the directory has too many files, it fails with a "libmtp: couldn't get object handles" error without listing anything.

Sometimes, a folder appears empty until refreshing one more time. Sometimes, copying a folder out causes a blank folder to be copied to the target. This is why on MTP, only a selection of files and never folders should be moved out, due to the risk of the folder being deleted without everything having been transferred completely.

(continued below) -

Linker crashed while building LLVM from source AT FUCKING 97% ARE YOU FUCKING KIDDING ME?

(Antergos, GCC 7)

The error was that it exhausted the memory. How the fuck does a system with 16GB RAM and a swapfile run out of memory while building something? Dayum. -

When the poet in me fuses with the geek in me:

Will you be the css to my html?

When I encountered you,

My system threw a fatal error

My RAM was overloaded,

And my CPU went haywire

Will you be the css to my html?

I would show you my source code,

And let you merge your branch into mine

I will help you fix your memory leaks

And I will try filling all your nullpointers

Will you be the css to my html?

Your frontend would perfectly plug into my backend

I can compile all your heavy code,

Just in time

Baby just promise me,

You'll provide the JSON

To my API calls

Will you be the css to my html?

This is my first draft... Constructive criticism is welcome! -

Whenever I come across an error I can't solve, my passion and enjoyment for programming steadily goes downhill as I furiously search Stack Overflow and debug. And just when I'm about to give up, to say "this is the opposite of enjoyable, I'm quitting" I figure out the stupid mistake I made, and the moment of sheer bliss that comes with solving a stubborn issue boosts my passion for coding up even higher than it was before.

And at times like this, I wonder if that majority of time spent staring frustratedly at an error message is actually made worthwhile by the sudden hit of adrenaline that comes from solving the problem.

I imagine myself like a drug addict in that regard. Like a drug addict, I spend most of my time feeling like shit, but that short feeling of happiness makes me put up with the shittiness. Is it really worth it? I subject myself to so much angst, angst that I only keep pushing through because I'm certain I'll figure it out eventually, I'll solve the problem and everything will be okay.

Maybe that means programming isn't truly for me. I'm sure many people actually enjoy the process of overcoming obstacles, but honestly, I don't. The only reason I keep trying to scale that obstacle is because of my memory of the past obstacle, and the feeling I felt as I climbed down the other side, having finally reached the top. -

damn; fuck; up yours.

this fucking shit xamarin. I wish the ass who programmed the xamarin vs2017 integration to go fuck off.

srsly, I just want to fucking code this fucking fucker VS2017 keep shitting all around me

first I was gonna install it. didn't install because no memory left. fair enough, my fault there.

cleaned 35 gbs.

finish installing VS, with xamarin. FIRST GOD DAMN TIME I create fucking project, 2 fucking errors and 3 warnings. I DIDN'T EVEN TYPE A COMMA.

ok, tried fucking it. it seems to be conflict between version of Android and xamarin forms. fucker you it shouldn't be like this. anyway.

tried downloading the updated Android version.

it failed at 80%! what error you ask? missing fucking space ok, fuck that thing is huge, ok, my fault again. uninstalled all programs I was not using, all projects I'm not currently working on. more fucking 30GB free. tried again. ANDROID IS TOO FUCKING HUGE CAN'T INSTALL IN 30GB!!!

Ok. instead of updating android, gonna downgrade xamarin, can't downgrade. ok gonna remove and install an early version.

uninstalled. CAN'T FIND XAMARIN DLLS.

I was like, fuck this project, gonna start a new one. ok, all seems fine, for some weird reason. Except no. I try adding a new page, oops, APPARENTLY VS2017 CAN'T LOAD A GODDAMN .XAML

Ok, I can create a .cs page. done, except now I get a fucking timeout error. fuck.

I search the internet for a workaround, see a guy saying I could manually add a .xaml + .cs by creating these files and then adding them to the proj file.

did it. I go again, everything seems fine. but now I can't freaking reference the damn page.

I'm fucking losing my mind here.

In the meantime I have to turn in this project at the end of the week AND I CAN'T FUCKING OPEN THE GOD DAMN FREAKING PROJECT PROPERLY!

FUCK. MY. LIFE.

FUCK XAMARIN AS WELL

FUCK VISUAL STUDIO

FUCK MICROSOFT

FUCK THAT DAMN SSD

FUCK THAT BOSS WHO THINK THAT A 128GB SSD IS ENOUGH

FUCK IT ALL... -

I found this on a wiki with Haskell Humor... it's interesting...

How to Shoot Yourself in the Foot With Haskell: Putting the unsafe in unsafePerformIO!

You shoot the gun, but the bullet gets trapped in the IO monad.

Couldn't match expected type 'Deer' against inferred type 'Foot'.

While compiling your program the compiler produces a type error long enough to overflow a kernel buffer, overwrite the trigger control register and shoot you in the foot.

After trying to decipher the type errors from the compiler, your head explodes.

After you've finally found a way to circumvent the type system and shoot yourself in the foot, Oleg appears out of nothing and shoots you in the foot for coming up with it before him.

You shoot the gun but nothing happens (Haskell is pure, after all).

Your foot is fine, until you try to walk on it, at which point it becomes mangled.

You have a shootFoot function which you've proven correct. QuickCheck validates it for arbitrary you-like values. It will be evaluated only when you end up at the hospital. You hope this doesn't come to pass, as it actually returns a bullet-ridden copy of yourself and you don't want to be garbage-collected.

foreign import ccall "shootparts.h shootfoot" shoot_foot :: Gun -> Programmer -> IO ()

shootSelfInFoot = unsafePerformIO . shoot . foot $ self -- Shoot self in foot 0 or more times depending on evaluation order

No instance for (Target Foot)

arising from use of `shoot' at SelfInflictedInjury.hs:1:0

Possible fix: add an instance declaration for (Target Foot)

In the expression: shoot foot

You go to shoot yourself in the foot but the bullet is in the ST monad and the gun is in the IO monad, so you can't.

You ask Haskell to shoot you in the foot but by the rules of lazy evaluation you don't need the result yet so it doesn't happen.

You decide to shoot yourself in the foot but get distracted devising a ballistics algebra and wondering if you can do the calculations in the type system.

You want to shoot yourself in the foot but realize there is no Gun datatype so use Arrows instead.

You shoot in the direction of your foot, but since you are inside the STM monad you can just retry until you figure out what to do.

You shoot yourself in the foot, but you are perfectly fine as long as you just don't evaluate the foot.

You shoot yourself in the foot, but nothing happens unless you start walking.

Don't forget about memory consumption! If you don't look, the bullet causes heap overflow. If you look, the bullet causes stack overflow.

You *appear* to have deliberately shot yourself in the foot, and yet your program actually runs perfectly OK due to lazy evaluation. (So long as you remember to not look at your foot...)

You aim the gun at your foot, pull the trigger and remove the clip. When you look at your undamaged foot, the hammer clicks on an empty barrel. -

So I'm making a file uploader for a buddy of mine and I got an error that I had never seen before. Suddenly I had C++ code and some other weird shite in my terminal. Turns out that I got a memory leak and the first thing that sprung to mind was "Fuck yes, I get to do some NCIS ass debugging".

Now the app worked fine for smaller files, like 5MB - 10MB files, but when I tried with some Linux ISO's it would produce the memory leak.

Well, I opened the app with --inspect, set some breakpoints, and found it. For this app I needed to do some things if the user uploads an already existing file. To do that I decided to take the SHA string of the file and store it in a database. To do this I used fs.readFile aaaaaaaaaand this is where it went wrong. fs.readFile doesn't read the file as a stream; it loads the whole file into memory at once.
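The fix is the same idea in any language: feed the hash in chunks instead of loading the whole ISO first. A minimal sketch in Python rather than Node, assuming SHA-256 (the rant only says "SHA") and with a made-up function name. In Node the equivalent would be something along the lines of piping `fs.createReadStream` into `crypto.createHash`.

```python
import hashlib

def sha256_of_file(path, chunk_size=1024 * 1024):
    """Hash a file in fixed-size chunks so memory use stays flat."""
    digest = hashlib.sha256()
    with open(path, "rb") as f:
        # iter() with a sentinel keeps calling f.read() until it returns b""
        for chunk in iter(lambda: f.read(chunk_size), b""):
            digest.update(chunk)
    return digest.hexdigest()
```

With a 1 MiB chunk size, a multi-gigabyte file needs about as much memory as a 5MB one.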

Well when I found that, boy did I feel stupid :v

-

>Be client

>Have an issue with incredibly slow webpage load time

>Blame memcache issues

So... I look into the problem. Yes, the page either loads up fast, or times out. So, into the logs I go. Webserver is fine (except the timeout), PHP though... Error log is fine (just notices), but slow log shows the issue is the database (of course... it's always the database... ugh)

So, checking the database, there is one ugly query that seems to be an issue. 5 joins and a huge where condition.

So I run EXPLAIN on the query and... Proceed to bang my head against the wall.

OF COURSE IT'S SLOW YOU FU******, NONE OF YOUR TABLES HAVE ANY INDEXES.

What do they expect when the database has to always go down the whole table and do everything in memory, until it runs out and has to dump it all on disk and work with it there?
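For anyone who hasn't seen what that looks like, here is a small sketch using Python's built-in SQLite as a stand-in. The client's actual database and schema aren't shown in this rant, so the `orders` table and the index name are invented. It prints the query plan before and after adding an index on the filtered column.

```python
import sqlite3

conn = sqlite3.connect(":memory:")
conn.execute("CREATE TABLE orders (id INTEGER, customer_id INTEGER, total REAL)")
conn.executemany(
    "INSERT INTO orders VALUES (?, ?, ?)",
    [(i, i % 100, i * 1.5) for i in range(1000)],
)

def plan(sql):
    """Return the human-readable 'detail' column of SQLite's query plan."""
    rows = conn.execute("EXPLAIN QUERY PLAN " + sql).fetchall()
    return " | ".join(row[3] for row in rows)

query = "SELECT * FROM orders WHERE customer_id = 42"

before = plan(query)  # no index yet: a full table SCAN
conn.execute("CREATE INDEX idx_orders_customer ON orders (customer_id)")
after = plan(query)   # with the index: a SEARCH using idx_orders_customer

print("before:", before)
print("after: ", after)
```

The exact wording of the plan differs between SQLite versions and between database engines, but the scan-versus-index-lookup difference is the thing to look for in any EXPLAIN output.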

Ugh... Some clients... -

Learning Rust.

Holy brainfucking brain melt, those references, scoping and borrowing and cloning and whatnot, because there is no garbage collector, but also no direct memory management.

It's cool, but also hard for a noob coming from the JVM/Android. The compiler error messages are helpful, but I immediately found some cryptic ones that don't help me at all. -

WINDOWS 10 low on memory error! How do I fucking get rid of this? It keeps restarting my computer 😫😫😫! PHOTOSHOP USER

-

FUCKING HELL!

I just shut down my computer after deciding to leave the unfinished feature that I started a couple hours ago for tomorrow.

Not 5 fucking minutes later I had found a solution in my head but now don't want to spend the time to turn my computer on to fix it. Ugh -

garbage collectors' lifestyle matters!

Ever eyeballed the abyss of your memory leaks? Shit, garbage collectors deserve a raise.

Unsung heroes, janitorialing thru that VM like a dung beetle, silently fucking up your perf so you can do that delicious spaghetti. Indiana-jonesing the fuck out of that memory trash can and euthanizing all that disgusting heap of pointers hanging, dangling, like... well, like garbage.

At the very least they're deterministic, unlike that Markov chain we all had the displeasure of fucking up. Amen? Amen! 🙌🏻

You gotta wonder, though, what goes through their noggin. Do they reminisce about the potential of that half-ass-written class? Do they weep for the elegance of a forgotten function bottlenecking their job? Nah, probably just counting down the nanoseconds till their next full GC cycle. Aaah, like cold beer in Saturday barbecue.

So next time your program miraculously avoids a memory error, take a moment, put your hands up in the air and say a prayer to your garbage collector.

Silently covering for your fuckups -

Avoid ACPICA if at all possible. It's one garbage tier cluster fuck of bad design, horrible documentation and downright misleading and wrong code

It's meant to consist of an ASL compiler, disassembler, debugger, dumper, various user space utilities and a kernel resident OSPM implementation *if* you can figure out what belongs to what. Even just compiling this pile of trash is a mystery in itself. Think you need the source files in source/common? EEEEH, wrong. Well, at least partially since most of them seem to be for the user space stuff..? Other ones *are* needed on the other hand. At least the disassembler and/or debugger and/or dumper components seem to reference them. Not that I could figure out how to compile those anyways. The real path to your goal seems to be to ignore a seemingly arbitrary subset of source and header files until your linker stops complaining

There's also a bunch of configuration defines, some of which *you* define, some defined *for* you, based on again others. Of course most of them do stupid shit. Enabling the debugger automatically enables debug logging. Enabling the disassembler force enables debug allocation tracking... What?

The code itself isn't of much help either. Looking in "os_specific/service_layers" you find what looks to be reference implementations of ACPICA functions for certain OSes like Windows and Unix. Of course I had a look because AcpiOsReadMemory is supposed to read physical memory and I don't know how I would even implement that. But hey, osunixxf.c (xf for interface... of course) should tell me. I'll let you see for yourself in the attached image. Apparently it does fuck all and just returns AE_OK. No error, no logging, no nothing. Just ok. As you can imagine, AcpiOsWriteMemory doesn't do much more either.

...okay so maybe physical memory accesses aren't actually used and these functions are some sort of relic from past times? Nope! They are absolutely necessary for doing low level device interaction. WTF. So finally I went to the linux source and checked how *they* implemented them, and just as I thought, these functions are anything but no-ops...

...So for what fucking reason do these stupid interface implementations even exist but to purposefully mislead you?? They aren't used for fucking anything! As far as I know Windows doesn't even *use* ACPICA and Linux has its own fork with working implementations... They just sit there, just to tell you how to NOT do it

So that's some of my thoughts about ACPICA. Note that I haven't even used it as a library yet, I just got it to compile and link and it already fucked with me this much.

There's also so much more I didn't mention, like that you *have* to modify the acpica source in order to get your own platform header working (else #error) even though the docs explicitly instruct you not to, but you get the point

Don't use ACPICA if you don't have to. Save your sanity for something that's worth it

-

Okay, so the recruiters are getting smarter. I just clicked a "how well do you know WordPress" quiz (I know it's from a recruiter, I already entered a PHP quiz and might win a drone)

So the question is how to solve this issue:

Fatal error: Allowed memory size of 33554432 bytes exhausted (tried to allocate 2348617 bytes) in /home4/xxx/public_html/wp-includes/plugin.php on line xxx

A set memory limit to 256

B set memory limit to Max

C set memory limit to 256 in htaccess

D restart server

These all seem like bad answers to me.

I vote E don't use the plug-in, or the answer that trumps the rest, F don't use WordPress

-

I used to be a sysadmin and to some extent I still am. But I absolutely fucking hated the software I had to work with, despite server software having a focus on stability and rigid testing instead of new features *cough* bugs.

After ranting about the "do I really have to do everything myself?!" for long enough, I went ahead and did it. Problem is, the list of stuff to do is years upon years long. Off the top of my head, there's this Android application called DAVx5. It's a CalDAV / CardDAV client. Both of those are extensions to WebDAV which in turn is an extension of HTTP. Should be simple enough. Should be! I paid for that godforsaken piece of software, but don't you dare to delete a calendar entry. Don't you dare to update it in one place and expect it to push that change to another device. And despite "server errors" (the client is fucked, face it you piece of trash app!), just keep on trying, trying and trying some more. Error handling be damned! Notifications be damned! One week that piece of shit lasted for, on 2 Android phones. The Radicale server, that's still running. Both phones however are now out of sync and both of them are complaining about "400 I fucked up my request".

Now that is just a simple example. CalDAV and CardDAV are not complicated protocols. In fact you'd be surprised how easy most protocols are. SMTP email? That's 4 commands and spammers still fuck it up. HTTP GET? That's just 1 command. You may have to do it a few times over to request all the JavaScript shit, but still. None of this is hard. Why do people still keep fucking it up? Is reading a fucking RFC when you're implementing a goddamn protocol so damn hard? Correctness be damned, just like the memory? If you're one of those people, kill yourself.

So yeah. I started writing my own implementations out of pure spite. Because I hated the industry so fucking much. And surprisingly, my software does tend to be lightweight and usually reasonably stable. I wonder why! Maybe it's because I care. Maybe people should care more often about their trade, rather than those filthy 6 figures. There's a reason why you're being paid that much. Writing a steaming pile of dogshit shouldn't be one of them. -

*leaning back in the story chair*

One night, a long time ago, I was playing computer games with my closest friends through the night. We would meet for a whole weekend extended through some holiday to excessively celebrate our collaborative and competitive gaming skills. In other words we would definitely kick our asses all the time. Laughing at each other for every kill we made and game we won. Crying for every kill received and game lost. A great fun that was.

Sleep level through the first 48 hours was around 0 hours. After some fresh air I thought it would be a very good idea to sit down, taking the time to eventually change all my accounts passwords including the password safe master password. Of course I also had to generate a new key file. You can't be too serious about security these days.

One additional 48 hours, including 13 hours of sleep, some good rounds Call of Duty, Counter Strike and Crashday plus an insane Star Wars Marathon in between later...

I woke up. A tiring but fun weekend was over again. After I got the usual cereal for breakfast I sat down to work on one of my theory magic decks. I opened the browser, navigated to the web page and opened my password manager. I typed in the password as usual.

Error: incorrect password.

I retry about 20 times. Each time getting more and more terrified.

WTF? Did I change my password or what?...

Fuck.

Ffuck fuck fuck FUCKK.

I've reset and now forgotten my master password. I completely lost memory of that moment. I'm screwed.

---

Disclaimer: sure it's in my brain, but it's still data right?

I remembered the situation but until today I can't remember which password I set.

Fun fact. I also could not remember the contents of episode 6 by the time we started the movie although I'd seen the movie about 10 - 15 times up to that point. Just brain afk. -

¡rant|rant

Nice to do some refactoring of the whole data access layer of our core logistics software, let me tell a story.

The project is around 80k lines of code, with a lot of integrations with an ERP system and an sql database.

The ERP system is old, with a shitty API too: only static methods through a wrapper to a C++ library

imagine an order table.

To access an order, you would first need to open the database by calling Api.Open(...file paths) (yes, it's a fucking flat file type database)

Now the database is open, now you would open the orders table with method Api.Table(int tableId) and in return you would get an integer value, the pointer.

Now for the actual order. first you need to search for it by setting the search parameter to the column ID of the order number while checking all calls for some BS error code

Api.SetInt(int pointer, int column, int queryValue)

Then call the find method.

Api.Find(int pointer)

Then to top this shitcake of an api off: if it doesn't find your shit it will use the "close enough" method of search.

And now to read a single string 😑

First you will look in the outdated and incorrect documentation given to you from the devil himself and look for the column ID to find the length of the column.

Then you create a string variable with ALL FUCKING SPACES.

Now you call the Api.GetStr(int pointer, int column, ref string emptyString, int length)

Now you have passed your poor string to the api's demon orgy by reference.

Then some more BS error code checking.

Now you have read a string value 😀

Now keep in mind to repeat these steps for all 300+ columns in the order table.

News from the creators: SQL server? yes, sql is good so everything will be better?

Now imagine the poor developers that got tasked to convert this shitcake to use a MS SQL server, that they did.

Now I can honestly say that I found the best SQL server benchmark tool. This sucker screams out just above ~105K SQL statements per second at peak and ~15K per second for 1.5 seconds to read an order. 1.5 seconds to read less than 4 fucking kilobytes!

Right at that moment I realized that our software would grind to a fucking halt before even thinking about starting it. And that me, myself and I would be tasked to fix it.
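To show why the per-column approach hurts so much, here is a rough Python sketch with SQLite standing in for the SQL server. The table, the column names and the helper functions are all invented for illustration (the real ERP API isn't shown beyond the calls above), and it doesn't try to reproduce the 15K statements from the real system. It just counts statements for reading one 300-column order column by column versus reading the whole row at once.

```python
import sqlite3

COLUMNS = [f"c{i}" for i in range(300)]  # stand-in for the 300+ order columns

conn = sqlite3.connect(":memory:")
conn.execute(
    "CREATE TABLE orders (id INTEGER PRIMARY KEY, "
    + ", ".join(f"{c} TEXT" for c in COLUMNS) + ")"
)
conn.execute(
    f"INSERT INTO orders (id, {', '.join(COLUMNS)}) "
    f"VALUES (?, {', '.join('?' for _ in COLUMNS)})",
    [1] + [f"value {i}" for i in range(len(COLUMNS))],
)

statements = 0

def run(sql, params=()):
    """Execute one statement, count it, and return the first row."""
    global statements
    statements += 1
    return conn.execute(sql, params).fetchone()

def read_order_column_by_column(order_id):
    # One statement per column, like the handle-based API forces you to do
    return {c: run(f"SELECT {c} FROM orders WHERE id = ?", (order_id,))[0]
            for c in COLUMNS}

def read_order_in_one_go(order_id):
    # One statement for the whole row
    row = run(f"SELECT {', '.join(COLUMNS)} FROM orders WHERE id = ?", (order_id,))
    return dict(zip(COLUMNS, row))

statements = 0
slow = read_order_column_by_column(1)
slow_count = statements

statements = 0
fast = read_order_in_one_go(1)
fast_count = statements

print(slow_count, fast_count, slow == fast)  # → 300 1 True
```

Same data either way, but every extra statement is another round trip to the server, which is where the 1.5 seconds goes.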

4 months later and two weeks until functional beta, here I am. We created our own api with the SQL server 😀

And the outcome of all this...

Fixes bugs older than a year, Forces rewriting part of code base. Forces removal of dirty fixes. allows proper unit and integration testing and even database testing with snapshot feature.

The whole ERP system could be replaced with ~10 lines of code (provided same relational structure) on the application while adding it to our own API library.

Best part is probably the performance improvements 😀. Up to 4500 times faster and 60 times less memory usage also with only managed memory. -

Windows rant incoming!

For fuck's sake! I think Windows has asked me 117 times if I want to update now. The answer is still fucking no!

And I don't care how much of a security improvement it might be, when your shitty update causes a Memory Management error.

So fuck off, stop minimising my game while I play and go fix your shitty update first!

Fuck you Microsoft, fuck your QA team and while I'm at it, I want to say fuck you to all versions of Windows Server as well! -

We had 1 Android app to be developed for charity org for data collection for ground water level increase competition among villages.

Initial scope was very small & feasible. Around 10 forms with 3-4 fields in each to be developed in 2 months (1 for dev, 1 for testing). There was a prod version which had similar forms with no validations etc.

We had received prod source, which was total junk. No KT was given.

In existing source, spelling mistakes were there in the era of spell/grammar checking tools.

There were rural names for classes and variables, in a regional language written in English letters. That regional language is somewhat known to some developers, but even they don't know what those rural names mean. This cost us dearly in visualizing data flow between entities. Even Google Translate wasn't reliable for this language due to low Internet penetration in that language region.

OOP wasn't followed, so at 10 places exact same code exists. If error or bug needed to be fixed it had to be fixed at all those 10 places.

No foreign key relationships were there in the database, while actually there were logical relations among different entities.

No created, updated timestamps in records at app side to have audit trail.

Small part of that existing source was quite good with Fragments, MVP etc. while other part was ancient Activities with business logic.

We have to support Android 4.0 to 9.0 of many screen sizes & resolutions without any target devices issued to us by the client.

Then Corona lockdown happened & during that suddenly client side professionals became over efficient.

Client started adding requirements like very complex validation which has inter-entity dependencies. Then they started filing bugs from prod version on us.

Let's come to the developers' expertise,

2 developers with 8+ years of experience who don't know how to resolve git merge conflicts that they themselves created by not following git best practices, like only appending new implementation to existing classes for easy auto-merge etc.

They think handling click events is called development.

They don't want to think about OOP, well structured code. They don't want to re-use code mostly & when they copy paste, they think it's called re-use.

They wanted to follow old school Java development in memory scarce Android app life cycle in end user phone. They don't understand memory leaks, even though it's pin pointed by memory leak detection tools (Leak canary etc.).

Now 3.5 months are over, that competition was called off for this year due to Corona & development is still ongoing.

We are nowhere close to completion even for initial internal QA round.

On top of this, nothing is billable so it's like financial suicide.

Remember whatever said here is only 10% of what is faced.

- An Engineering lead in a half billion dollar company. -

iOS is rotting my soul.

I've been a user of iPhone for 6 years now. For the first couple years, I wasnt really mindful of software I use, or I guess I didnt really care. As long as it did the bare minimum, I.e. bank app, call, text, browse, watch youtube vids, I didnt really care. However, in the last couple years, ive become very interested in tech and have worked on small developer projects, spent a lot of time coding in my free time, found really inspiring software and apps on my regular computer that just blow my mind on how advanced they are, and how I, some dumb guy with internet access, can just download it on my PC and use it.

This led me into a kind of software honeymoon phase, where I created a shiny new Github account and started exploring what other cool tools are just out there, available to me for free. My software honeymoon was spent on the beaches and resorts of the open-source software ecosystem. Exploring the gem-bearing caves and beautiful forests of anything from free open-source OCR programs(I needed it to convert my dads manuscript from scanned PDF .jpeg's to actual UTF8 text) to open-source RGB lighting/keymapping software to escape the memory-and-CPU-hungry(and most likely advertising-ID-interested) proprietary software that comes with the brand of mouse/keyboard/controller/etc.

It was like I was a kid exploring Disneyland for the first time or something. But then... then... I got off my computer. Picked up my phone to check notifications. Ew, tinder is blowing up notification center with marketing shit. I go to settings. Notification settings. Tinder's at the bottom so I just want to use a search bar instead of scrolling. There's no search bar. Minor inconvenience. Dark mode isnt dark enough for me. I guess thats just too damn bad, because for the next two hours, I'll have to figure it out by messing with accessibility settings. Time for bed, and I'm just getting plum tired of having to turn on my alarms every night for work the next morning. So I used the 'Automations' app to do it for me. For the next two weeks, at the time specified, 'There was an error running your automation' until I just delete the automation. Browsing through the FaceID settings, I see 'Attention Aware Features'. Cool, maybe now my phone won't automatically dim the screen when im in the middle of reading notifications on my lock screen. Haha, nope still does it. After turning on my alarms, I go to sleep. I wake up an hour late for work because those handy 'Attention Aware Features' silenced my alarm immediately because I fell asleep watching a youtube video.

I could go on and on. It's actually making me feel depressed typing this on my phone, fighting with Apple's primitive autocorrect and annoying implementation of Swype to type.

-

I inherited a Next.js project from an unknown guy and am fangirling over the codebase

But the deeper I familiarise myself with it, the more the cracks begin to appear:

1) The dude is incapable of grasping the basics of the DRY concept. He actually set up a ton of stuff I might have done poorly if I'd started working straight out of the docs, so I feel like I owe him a shower of praise. I guess being new to Next.js makes it look more impressive than it actually is. He was paid off, yet getting the credit seems unearned to me. I'm just afraid reaching out to him might come back to bite me in the ass

***

I had the above in my drafts, contemplating sending him a token to show some appreciation for unknowingly showing me the ropes. I was going to find him on LinkedIn using his commit names. But after doing everything I've done, undergoing the anxiety and severe pressure I faced at the hands of the project owners, I'm not sharing a farthing with anybody

Yes, I may not have known about Zustand and its persist middleware. Yes, he did all the UI. Yes, he created the base components and fancy wrappers around form and button HTML elements. For those, I'm grateful

But given the amount of refactoring I had to do just for an opportunity to implement my own target features, I'd say I can lay as much claim to the project as he does.

Side note #1: I have some newfound respect for front end devs. We used to discriminate against them for doing just CSS but that was only relevant in the jQuery days. Now, they have to use cryptic CSS preprocessors and frameworks (Sass, Less, Tailwind), they have to learn the esoteric syntax of some JS framework and write controllers/components as the case may be. They have to (the worst part) bind this data to an API, which would never make sense to me coming from a PHP, SSR-natural world

Back to rewarding the guy: some of the challenges I came back from were:

1) Next server outages: I still don't know the workaround for this. The app terminates, with the browser giving an error about using up memory. I have to wait for about 10 minutes before I can access the app again

2) Spring WebFlux authentication not hydrating: I was unexpectedly asked to work on the back end too, where I got tortured with this horrifying condition. The most poorly documented framework for the Web has no up-to-date guide on how to implement JWT security measures. I opened a question on Stack Overflow. A day later, both my question and the helpful answer got downvoted

3) Zustand not retrieving any data from localStorage once the page reloads, until I miraculously stumbled on a hack: there's a config callback for reading state after rehydration or thereabouts (in the persist middleware this is presumably onRehydrateStorage). So I interact with the state there. That's the only way content clearly sitting in localStorage can get transmuted into a dynamic format accessible by the code

4) Mongo database suddenly disconnecting: for no apparent reason, this bailed. It was still accessible on Compass. It took a while before I even realised it was responsible for front end requests not going through. Eventually I created a new database and requests surprisingly began connecting again. Thankfully, my Laravel background taught me about seeders so I had them on standby from the onset. It wasn't difficult to just port to a fresh database after confirming the first one was inaccessible to the app

After this painful odyssey, the time constraints, and the threats of moving forward with someone else, I deserve every dime they deem me worthy of and more

-

TL;DR: TIL for heavy queries use PDO and not some framework's DB class

ffs I was trying to save 300k+ lines at once with Laravel for weeks. Mind you, from a text file. 1 GB RAM on the VPS, so while trying to prepare the text to save: Fatal Error: Allowed Memory Size of bla bla Bytes Exhausted

ok so let's put it in a loop: Fatal error: Maximum execution time of 30 seconds exceeded (set_time_limit(0); lol)

optimising and varying the code got me into a situation where the content got saved in the DB but inconsistently (duplicates), and the table often had more than 1.5M rows. That was what told me it's not a performance issue, my code is the issue. (duh)

I was starting to think it would be easier to export a prepared query to a SQL file and load the file into the DB, as that's the fastest possible option... I even started to think about switching to Python. Then it hit me: Laravel has a shitload of routes to the DB, so I switched to PDO
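The post doesn't show its code, so here is only a minimal sketch of the batching idea it describes, written in Python with the standard-library sqlite3 module standing in for PHP's PDO. Everything specific is an assumption for illustration: the table and column names, the tab-separated file layout, and the helper names chunked, parse_lines and bulk_insert are all hypothetical. Rows are streamed from the input and written with one parameterised statement and one transaction per batch, so memory use is bounded by the batch size rather than by the file size. The default batch size mirrors the ~6000 rows the post mentions; the benchmark figures in the post are for the author's own PHP run, not for this sketch.

```python
import sqlite3
from itertools import islice
from typing import Iterable, Iterator, List, Sequence, Tuple

Row = Tuple[str, ...]


def chunked(rows: Iterable, size: int) -> Iterator[List]:
    """Yield successive lists of at most `size` items without loading everything."""
    it = iter(rows)
    while True:
        batch = list(islice(it, size))
        if not batch:
            return
        yield batch


def parse_lines(lines: Iterable[str], columns: int, sep: str = "\t") -> Iterator[Row]:
    """Lazily split lines into tuples, skipping lines with the wrong field count.

    The tab-separated layout is an assumption; the post does not describe its file.
    """
    for line in lines:
        fields = tuple(line.rstrip("\n").split(sep))
        if len(fields) == columns:
            yield fields


def bulk_insert(
    conn: sqlite3.Connection,
    table: str,
    columns: Sequence[str],
    rows: Iterable[Row],
    batch_size: int = 6000,
) -> int:
    """Insert rows in batches, one transaction per batch. Returns the row count.

    `table` and `columns` are interpolated into the SQL, so they must come from
    trusted code, never from user input. Values go through placeholders.
    """
    placeholders = ", ".join("?" for _ in columns)
    sql = f"INSERT INTO {table} ({', '.join(columns)}) VALUES ({placeholders})"
    total = 0
    for batch in chunked(rows, batch_size):
        with conn:  # commits on success, rolls back if the batch raises
            conn.executemany(sql, batch)
        total += len(batch)
    return total


if __name__ == "__main__":
    # Hypothetical three-column table and generated input, just to exercise the code.
    demo = sqlite3.connect(":memory:")
    demo.execute("CREATE TABLE records (a TEXT, b TEXT, c TEXT)")
    fake_file = (f"{i}\tname{i}\tvalue{i}\n" for i in range(20000))
    count = bulk_insert(demo, "records", ["a", "b", "c"], parse_lines(fake_file, 3))
    print(f"inserted {count} rows")
```

With a real file, passing the open file object to parse_lines keeps the read lazy as well, since Python iterates a file line by line.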

benchmark on a 1 vCPU, 1 GB RAM VPS with SSD:

379k lines with 11 columns in less than 10 sec, with a loop saving every ~6000 rows (if I tried choking it by saving the whole thing at once, it went up to 16-17 sec)

-

!rant

Digging through my old emails, I found this joke that was sent to me a long time ago. I think it was originally posted in a 1997 issue of Computerworld. Maybe you have already suffered the effects of the "opcodes" listed here. Hope that !tl;dr

ARG Agree to Run Garbage

BDM Branch and Destroy Memory

CMN Convert to Mayan Numerals

DDS Damage Disk and Stop

EMR Emit Microwave Radiation

ETO Emulate Toaster Oven

FSE Fake Serious Error

GSI Garble Subsequent Instructions

GQS Go Quarter Speed

HEM Hide Evidence of Malfunction

IDD Inhale Dust and Die

IKI Ignore Keyboard Input

IMU Irradiate and Mutate User

JPF Jam Paper Feed

JUM Jeer at User's Mistake

KFP Kindle Fire in Printer

LNM Launch Nuclear Missiles

MAW Make Aggravating Whine

NNI Neglect Next Instruction

OBU Overheat and Burn if Unattended

PNG Pass Noxious Gas

QWF Quit Working Forever

QVC Question Valid Command

RWD Read Wrong Device

SCE Simulate Correct Execution

SDJ Send Data to Japan

TTC Tangle Tape and Crash

UBC Use Bad Chip

VDP Violate Design Parameters

VMB Verify and Make Bad

WAF Warn After Fact

XID eXchange Instruction with Data

YII Yield to Irresistible Impulse

ZAM Zero All Memory

-

I just built a TypeScript microservice and got a heap out of memory fatal error. What the actual fuck? Micro my ass

-

I feel like writing or telling people about the time I jumped from Windows 7 Ultimate to Windows 10. (I'm not against 10, but I'm never updating after what happened to me)

It all starts when none of my games will play due to a possible issue with my graphics card. I look up "3D source game bug" and not many results pop up. I go on Microsoft's Q&A areas and ask this question, but to my surprise nothing they say makes sense. "Clean the pins of your graphics card, make sure you verify the games on Steam". I verified the games and they checked out as perfectly fine. I don't have access to my graphics card because this is a laptop, sadly not a tower.

Two months pass and my computer is already showing signs of stress, like it didn't want to live in a sense. It was three times slower than when I was on Windows 7 and it was unallocating areas of my main hard drive where I could make virtual hard drives.

Instantly I start looking up Linux distros and find Linux Mint. 17.3 was the current version at the time. I downloaded it, burned it onto a DVD-ROM and rebooted my computer. I loaded into the disc and to my surprise it seemed almost like Windows 7 apart from the Linux part. I grab my external hard drive and partition it to hold the Linux distro and leave it plugged in in case Windows 10 does actually fail.

On December 19, a few months after Windows 10 had released, I start my laptop to try and continue my studies in video game development. But to my surprise, Windows 10 had finally crashed permanently. The screen flickered blue and black, and an error box said LogonUI.exe failed to start. I look at it for a solid minute as my computer had just committed suicide in a sense.

I reboot thinking it would fix the error but it didn't. I couldn't log in anymore.

I force shutdown the laptop and turn it back on putting it into safe mode.

To my surprise LogonUI.exe works and I sign in. I look at my desktop, the space wallpaper I always admired, the sound files, the screenshots I had saved.

I go into File Explorer and grab everything off the default hard drive Windows was installed on. Nothing but 400 GB got left behind, and that was mainly garbage prototypes I had made and Windows itself. I formatted my external hard drive and placed everything on it. Escaping Windows 10 with around 100 GB of useful data, I looked at the final shutdown button I would look at.

I click it and try to boot into normal Windows 10. But it doesn't work. It flickers and the error pops up once more.

I force it to shut down, insert the Linux Mint disc I made earlier and format the default hard drive through Linux. I was done. 10 gave me a lot of shit. Java wouldn't work, my games had a functional UI but showed nothing except a black abyss, and it wouldn't even let me try to update my graphics driver: apparently my AMD Radeon 5450 was already up to date for the AMD Radeon 5000 series.

I installed Linux Mint and, thinking the games would actually play, I open Steam and launch Half-Life 2 to check if Linux would be nicer to me than Windows 10 had been.

To my surprise the game ran. The scene from Highway 17 popped up on screen and the UI was fully functional. But it was playing at 10-15fps rather than the usual 60-70fps. I look at my drivers and see my graphics card isn't in use. I do some research and it turns out I have a hybrid-graphics laptop.

It had Intel HD Graphics and an AMD Radeon 5450, and it was using the Intel and not the AMD. Months of testing and attempts at getting the games to work at high frame rates pass and the damn thing still runs at a terrible low fps. Finally I give up. I ask my mom for a Windows 7 disc and she says we can't afford it. A few months pass and I finally get a Windows 7 installation disc with money I've saved up. Proudly I put it into my optical disc drive and install it to my main hard drive, deleting Linux completely. I announced to all my friends that my computer was back in working order and I installed everything I needed: Steam, Skype, Blender, and Unity, as well as all my games. I test Half-Life 2 and it's running exceptionally smoothly, I test Minecraft at max settings and it's working beautifully. The computer was functioning properly once again and my life as a developer started as I modeled things in Blender, learned beginner's C# and learned a lot of Batch. Today the computer still runs at a great speed, and I warn others about what happened to me after I installed Windows 10 on my machine if they are thinking of switching from 7 or 8 on an older machine.

Truly the damage to my data cannot be undone. But the maintenance, the work, the tests are all a reminder of how Windows 10 ruined me, and every night before the one-year anniversary of Windows 10's release I took the battery out of my laptop and unplugged it from AC power, just so Windows 10 wouldn't show its DLLs, batch scripts, VBS scripts, or anything else on my computer. But now, after all this has happened and I have recovered, I only have a story to tell

-

So I recently finished a rewrite of a website that processes donations for nonprofits. Once it was complete, I would migrate all the data from the old system to the new system. This involved iterating through every transaction in the database and making a cURL request to the new system's API. A rough calculation yielded 16 hours of migration time.

The first hour or two of the migration (where it was creating users) was fine, no issues. But once it got to the transaction part, the API server would start using more and more RAM. Eventually (after about 30 minutes), it would start hitting OOMs and such. For a while, I just assumed the issue was a lack of RAM so I upgraded the server to 16 GB of RAM.

Running the script again, it would approach the 7 GiB mark and be maxing out all 8 CPUs. At this point, I assumed there was a memory leak somewhere and the garbage collector was doing its best to free up anything it could find. I scanned my code time and time again, but there was no place I was storing any strong references to anything!

At this point, I just sort of gave up. Every 30 minutes, I would restart the server to fix the RAM and CPU issue. And all was fine. But then there was this one time where I tried to kill it, but I got the error: "fork failed: resource temporarily unavailable". Up until this point, I believed this was simply a lack of memory... but none of my swap was in use! And I had 4 GiB of cached stuff!