Search - "accuracy"

-

Website design philosophies:

Apple: "...and a really big picture there, and a really big picture there, and a really big picture there, and..."

Microsoft: "border-radius:0 !important;"

Google: "EVERYTHING MOVES!!! And most websites get material design. Most."

Amazon: "We're slowly moving away from 2009"

Wix: "How can we further increase load times?"

Literally any download site: "Click here! No, click here! Nononono!! Click here!!..."

Facebook: "We can't change anything because our main age demographic is around 55"

University websites: "That information isn't hard enough to find yet. Decrease the search accuracy and increase broken links."

-

Currently acing an interview, had to do a typing test, got 67wpm and 98% accuracy.

"If you want faster I'd have to bring my own keyboard, this one's a little small"

"You can go faster?"

-

In a user-interface design meeting over a regulatory compliance implementation:

User: “We’ll need to input a city.”

Dev: “Should we validate that city against the state, zip code, and country?”

User: “You are going to make me enter all that data? Ugh…then make it a drop-down. I select the city and the state, zip code auto-fill. I don’t want to make a mistake typing any of that data in.”

Me: “I don’t think a drop-down of every city in the US is feasible.”

Manager: “Why? There cannot be that many. Drop-down is fine. What about the button? We have a few icons to choose from…”

Me: “Uh..yea…there are thousands of cities in the US. Way too much data for anyone to realistically scroll through”

Dev: “They won’t have to scroll, I’ll filter the list when they start typing.”

Me: “That’s not really the issue and if they are typing the city anyway, just let them type it in.”

User: “What if I mistype Ch1cago? We could inadvertently be out of compliance. The system should never open the company up for federal lawsuits”

Me: “If we’re hiring individuals responsible for legal compliance who can’t spell Chicago, we should be sued by the federal government. We should validate the data the best we can, but it is ultimately your department’s responsibility for data accuracy.”

Manager: “Now now…it’s all our responsibility. What is wrong with a few thousand item drop-down?”

Me: “Um, memory, network bandwidth, database storage, who maintains this list of cities? A lot of time and resources could be saved by simply paying attention.”

Manager: “Memory? Well, memory is cheap. If the workstation needs more memory, we’ll add more”

Dev: “Creating a drop-down is easy and selecting thousands of rows from the database should be fast enough. If the selection is slow, I’ll put it in a thread.”

DBA: “Table won’t be that big and won’t take up much disk space. We’ll need to set up stored procedures, and data import jobs from somewhere to maintain the data. New cities, name changes, etc.”

Manager: “And if the network starts becoming too slow, we’ll have the Networking dept. open up the valves.”

Me: “Am I the only one seeing all the moving parts we’re introducing just to keep someone from misspelling ‘Chicago’? I’ll admit I’m wrong or maybe I’m not looking at the problem correctly. The point of redesigning the compliance system is to make it simpler, not more complex.”

Manager: “I’m missing the point to why we’re still talking about this. Decision has been made. Drop-down of all cities in the US. Moving on to the button’s icon ..”

Me: “Where is the list of cities going to come from?”

<few seconds of silence>

Dev: “Post office I guess.”

Me: “You guess?…OK…Who is going to manage this list of cities? The manager responsible for regulations?”

User: “Thousands of cities? Oh no …no one in our area has time for that. The system should do it”

Me: “OK, the system. That falls on the DBA. Are you going to be responsible for keeping the data accurate? What is going to audit the cities to make sure the names are properly named and associated with the correct state?”

DBA: “Uh..I don’t know…um…I can set up a job to run every night”

Me: “A job to do what? Validate the data against what?”

Manager: “Do you have a point? No one said it would be easy and all of those details can be answered later.”

Me: “Almost done, and this should be easy. How many cities do we currently have to maintain compliance?”

User: “Maybe 4 or 5. Not many. Regulations are mostly on a state level.”

Me: “When was the last time we created a new city compliance?”

User: “Maybe, 8 years ago. It was before I started.”

Me: “So we’re creating all this complexity for data that, realistically, probably won’t ever change?”

User: “Oh crap, you’re right. What the hell was I thinking…Scratch the drop-down idea. I doubt we’ll have a new city regulation anytime soon and how hard is it to type in a city?”

Manager: “OK, are we done wasting everyone’s time on this? No drop-down of cities...next …Let’s get back to the button’s icon …”

Simplicity 1, complexity 0.

-
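For what it's worth, "validate the data the best we can" doesn't need a table of every US city. Here's a minimal sketch in Python, assuming the handful of regulated cities lives in a short hard-coded list. The city names, the `check_city` helper and the 0.8 cutoff are all made up for illustration, not taken from the actual system. It accepts an exact match and flags a near miss like "Ch1cago" so the user can confirm it.

```python
import difflib

# Hypothetical list: the meeting only established "maybe 4 or 5" regulated cities.
COMPLIANCE_CITIES = ["Chicago", "New York", "Los Angeles", "Houston", "Seattle"]


def check_city(typed, cities=COMPLIANCE_CITIES, cutoff=0.8):
    """Return (matched_city_or_None, was_exact_match) for a typed city name."""
    by_lower = {city.lower(): city for city in cities}
    key = typed.strip().lower()
    if key in by_lower:
        return by_lower[key], True
    # Fuzzy match catches small typos; the cutoff is an assumed threshold.
    close = difflib.get_close_matches(key, list(by_lower), n=1, cutoff=cutoff)
    if close:
        return by_lower[close[0]], False
    return None, False


print(check_city("chicago"))      # exact match, ignoring case
print(check_city("Ch1cago"))      # near miss, suggests the intended city
print(check_city("Springfield"))  # not a regulated city
```

A free-text field plus a check like this keeps the moving parts down to one short list that changes about once a decade.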

Participated in an IEEE Hackathon where we built a line following robot. We were the slowest, but we had the most accuracy.

The image is our first attempt at getting it to work. Consequently, we were the first team to actually get a prototype finished and working. Other people were trying to cram in as many sensors as possible. We stuck with one, and 47 lines of code to make it work. Everyone else had more than 2 sensors and I can only imagine how much code they had.

-

Facebook: "Our facial recognition automatically tags people in pictures."

Tesla: "Our deep learning algorithm drives cars by itself."

Andrew Ng: "I predict patients' likelihood of dying with 99% accuracy."

Google: "You know one of our algorithms is going to pass the Turing test very soon."

Wall Street: "We use satellite images to predict stock prices based on how full the car parks of specific stores are."

The remaining majority of data scientists: "We overfit linear models."

-

Just discovered that one of my coworkers (well...my boss really) has the uncanny ability to detect fonts and sizes with extreme accuracy.

For some of you that may not be impressive at all and some can probably do it too. But it's like...not only on websites man...she can do it on things that we see printed, menus and stuff.

That to me at least is very impressive.

-

Given a couple of lines of code, I can predict with 80% accuracy which of my teammates wrote it.

-

I messaged a professor at MIT and surprisingly got a response back.

He told me that "generating primes deterministically is a solved problem" and he would be very surprised if what I wrote beat wheel factorization, but that he would be interested if it did.

It didn't when he messaged me.

It does now.

Tested on primes up to 26 digits.

Current time tends to be 1/100th to 2/100ths of a second.

Seems to be steady.

First n=1million digits *always* returns false for composites, while for primes the rate is 56% true vs false, and now that I've made it faster, I'm fairly certain I can get it to 100% accuracy.

In fact what I'm thinking I'll do is generate a random semiprime using the suspected prime, map it over to some other factor tree using the variation on modular exponentiation several of us on devRant stumbled on, and then see if it still factors. If it does then we know the number in question is prime. And because we know the factor in question, the semiprime mapping function doesn't require any additional searching or iterations.

The false negative rate, I think, goes to zero the larger the prime, from what I can see. But it won't be an issue if I'm right about the accuracy being correctable.

I'd like to thank the professor for the challenge. He also shared a bunch of useful links.

That one's a rare bird.

-

Uploaded an app to the App Store and it was rejected because the Gender dropdown at registration only has "Male" and "Female" as required selectable options. The reviewer thought it was right to force the inclusion of an "Other" option inside a Medical Service app that is targeting a single country which also recognizes only Male/Female as gender.

Annoyingly, I wrote back a dispute on the review:

Hello,

I have read your inclusion request and you really shouldn't be doing this. Our app is a Medical Service app and the Gender option can only be either Male or Female based on platform design, app functionality and data accuracy. We are also targeting *country_name* that recognizes only Male/Female gender. Please reconsider this review.

{{No reply after a week}}

-- Proceeds to include the option for "Other"

-- App got approved.

-- Behind the scene if you select the "other" option you are automatically tagged female.

Fuck yeah!

-

Wrote a custom printer script in shell.

Went to test the script on some printers.

Neglected to check accuracy of script.

script is supposed to print jpeg.

it doesn't interpret it as an image,

but rather as raw binary in text...

^\<92>Q^H2Ei@0$iA+<89>dl_d<87><8f>Q

mfw each printer in the entire 5 story building

starts printing 500 pages of

RAW

BINARY

-

Local coffee shop/tech book store says what we're all thinking.

This is pretty on brand. They're my favorite local book store. If you're ever in town, definitely visit.

https://www.adasbooks.com/

-

The biggest passion of them all, for me: music.

In my case this is rawstyle/raw hardstyle/hardstyle but especially the most brutal rawstyle.

I love the energy it gives me and listening to the techniques the artists use. Also, while the kicks all sound the same to many people, after a while I can immediately identify the artist behind a kick even when hearing it for the first time (90 percent accuracy).

I'd love to produce it but I lack the skill set to do that for now 😥

A tattoo related to this music genre is coming soon :D

-

Son of a... insurance tracker

You hit delete and I’m stuck with this reply!?!

Stuff it, I’ll rant about it instead of commenting.

How’s an insurance company any different to Google tracking your every move, except now it’s for “insurance policy premiums” and setting pricing models on when, how, and potentially why you drive.

Granted, no company should have enough GPS data to be able to create a behaviour-driven AI that can predict your wheres and whens with great accuracy.

The fight to remove this kind of tech from our lives is long over, now we have to deal with the consequences of giving companies way too much information.

- good lord, I sound like a privacy activist here, I think I’ve been around @linuxxx too long.

-

It was my first ever hackathon. Initially, I registered with my friend who is a non-coder but wanted to experience the thrill of joining a hackathon. But when we arrived at the event, someone older than us was added to our team because he was solo at that time. Eventually, this old guy (not too old, around his 20s; let’s call him A) and I got close.

We chose the problem where one is tasked to create an ML model that can predict the phenotype of a plant based on genotypic data. Before the event, I didn’t have any background in machine learning, but A was so kind as to teach me.

I learned key terms in ML, was able to train different models, and we ended up using my models as the final product. The highest accuracy I got for one of my models was 52%, but it didn’t discourage me.

We didn’t win, however. But it was a great first-time experience for me.

Also, he gave me ideas on pitching, because he was also taking an MS in Data Science (I think) and he had a great background in sales as well, so yeah, I got that too.

-

ALRIGHT sorry SwiftKey I love you but not if you do this to me... I need a new keyboard any recommendations? (android)

(also the accuracy is quite bad as of late...)

-

I was fresh out of college, loved Java and was looking for a job.

Well, after exactly 1 month reality sank in. I found an ad for a designer and got selected. Point is, I only mentioned my high school qualification because I felt bad about disclosing my higher degree for such a job.

I worked there for 6 months and every day was like working as a covert operative. I always knew I could write an automated script for all that daily shit. But for the sake of the landlord's rent, I kept quiet. (I literally care for his children, I was the only source of income)

Then, my friend, that day, 16-Sep-2012, I wrote a program to do all the repetitive things I used to do.

My boss found out and I exposed myself like Spiderman does to Jen: Sir! I am a programmer.

Sadly, it was no surprise to him. He said, on your first day I found out that you are not just a high school graduate. Because only a graduate can do this level of job with such accuracy.

He praised me and motivated me, my first non-technical master.

-

"What tools are needed for eyelash extensions? (eyelash glue, eyelash extension tweezers, etc.)

When applying eyelash extensions, just as important as the extension process itself is choosing the right tools. They not only make the master’s work easier, but also affect the quality and durability of the eyelashes. In this article we will look at what tools are needed for eyelash extensions.

The first and, of course, the most important tool for eyelash extensions is eyelash glue. This glue provides reliable and long-lasting adhesion between natural and artificial eyelashes. It should be hypoallergenic, safe for the skin around the eyes and water resistant. Only correctly selected glue can guarantee safety and beautiful extension results. Therefore, it is important to choose high-quality eyelash glue https://stacylash.com/collections/... that meets all requirements.

The second necessary tool is eyelash extension tweezers. They allow the technician to conveniently and accurately separate natural eyelashes, which facilitates the process of applying and fixing artificial eyelashes. It is important that the tweezers are of high quality, with narrow and sharp tips to ensure precise capture and separation of eyelashes.

The third important tool is tweezers. Tweezers allow the technician to conveniently and accurately place and fix artificial eyelashes on natural ones. It is important that the tweezers have good grip and grip accuracy to ensure precision and accuracy of the extension process.

The fourth necessary tool is a special eyelash brush. It is used to comb eyelashes before the procedure and to remove excess glue after extensions. The brush should be soft, but at the same time securely hold the eyelashes.

The fifth tool is special overhead eye pads. They are used to protect the skin around the eyes and lower eyelashes during the eyelash extension procedure.

So, for successful eyelash extensions you need high-quality eyelash glue, tweezers, tweezers, an eyelash brush and false eye pads. The correct selection and use of these tools will ensure the safety of the procedure and high-quality results. Don’t forget that only a professional approach and high-quality tools can make your look as expressive and attractive as possible."

-

JavaScript is like an angry girlfriend who won't tell you what is wrong.

This shit happened today.

Me: somearray.includes[stuff];

JS: I'm alright everything is fine.

Me: no it's not, Clearly the feature is not working.

JS:* silence*

Me: Fine be that way.. * spends lot of time debugging finally finds the issue*...oh shit.

Me: somearray.includes(stuff);

JS: I SAID NO TRAILING SPACE IN END OF THE LINE YOU STUPID PIECE OF SHIT NO TRAILING FUCKING SPACES AAAAHHHH!!!

-

I can type blind since elementary school with above average accuracy...

Yet I will never be able to write "width" without writing "widht" 2 times first

-

If you're using snapchat you might want to go on it immediately and change your privacy settings.

New update came out, they added a map with everybody's locations down to a couple of meters in accuracy... And the feature is on by default for EVERYONE to see, not just your friends.

What the actual fuck were they thinking?? Just think of all the ways this can go wrong.

-

So... After reading up on the theoretical stuff earlier, I decided to make a real AI that can identify handguns and decide whether it's a revolver or a semiautomatic with 95 percent accuracy...

Well, basically, I've been browsing my local gun store's online store for four hours for training data, killed a Mac mini while first training the system and I think I ended up on the domestic terrorism watch list... Was that black sedan always there?

Anyway... It's working fairly accurately, my monkey wrench is a revolver by the way.

Isn't AI development a wonderful excuse for all kinds of shit?

"why do you have 5000 pictures of guns on your computer?" - "AI development"

"why did you wave around a gun in front of your web cam" - "AI development"

"why is there a 50 gram bag in your desk?" - "AI development"

Hmm... yeah well... I think it might work. I could have picked a less weird testing project, but... No.

-

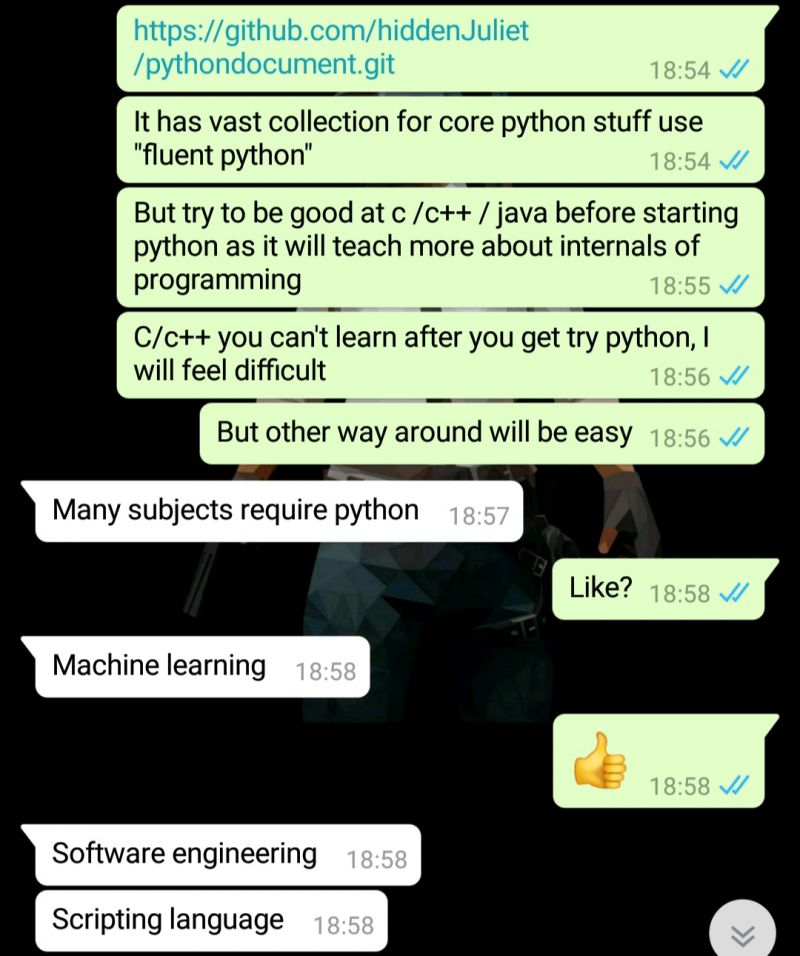

Why do people jump from C to Python so quickly? And it's all about machine learning. A few days back my cousin asked me for books to learn Python.

Trust me, you have to learn C before Python. People struggle going from Python to C. But no, it's ML, scripting,

And most importantly software engineering, wtf?

Software engineering is about how to run projects, yet it is compulsory to learn Python and there is no mention of Git or any other VCS, wtf?

What the hell kind of college is that? Trust me, I am in no way saying Python is weak, but for learning purposes what matters is the depth of the language and concepts like pass by reference, memory leaks, and pointers.

And learning algorithms and data structures is more important than machine learning. Trust me, if you cannot model the data and get proper training and testing data, you will get screwed-up outputs. And then again, everyone who hypes this kind of stuff also thinks that ML with 100% accuracy is better than 90%, so they overfit the data and test the model on the training data. And mostly what they will learn in college will be memorizing a few formulas, that's it.
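To make the "test the model on training data" trap concrete, here is a toy sketch in Python. It is an illustration I put together, not anyone's real pipeline. The `MemorizingModel` class is a hypothetical worst-case overfitter that just stores the training set in a lookup table. The labels are coin flips, so there is nothing to learn, yet accuracy measured on the training data still comes out perfect.

```python
import random


def make_data(start, n, rng):
    # Inputs are unique integers; labels are coin flips, so no real pattern exists.
    return [(x, rng.randint(0, 1)) for x in range(start, start + n)]


class MemorizingModel:
    """Hypothetical overfitter: a lookup table of the training set."""

    def fit(self, data):
        self.table = dict(data)
        return self

    def predict(self, x):
        # Unseen inputs fall back to class 0.
        return self.table.get(x, 0)


def accuracy(model, data):
    return sum(model.predict(x) == y for x, y in data) / len(data)


rng = random.Random(0)
train = make_data(0, 1000, rng)
test = make_data(1000, 1000, rng)  # disjoint inputs the model has never seen

model = MemorizingModel().fit(train)
train_acc = accuracy(model, train)
test_acc = accuracy(model, test)
print(f"accuracy on training data: {train_acc:.2f}")
print(f"accuracy on held-out data: {test_acc:.2f}")
```

The training score says "100%", the held-out score hovers around a coin flip, and only the second number says anything about the model.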

Learn a language (the concepts in the language) like that, and then you will find most languages are easy.

Cool CS programmers are born today 😖

-

oh, it got better!

One year ago I got fed up with my daily chores at work and decided to build a robot that does them, and does them better and with higher accuracy than I could ever do (or either of my teammates). So I did it. And since it was my personal initiative, I wasn't given any spare time to work on it. So that leaves gaps between my BAU tasks and personal time after working hours.

Regardless, I spent countless hours building the thing. It's not very large, ~50k LoC, but for a single person with very little time, it's quite a project to make.

The result is a pure-Java Slack bot and a REST API that's utilized by the bot. The bot knows how to parse natural language, how to reply in a human-friendly format and how to shout out errors in a human-friendly manner. It also supports conversation contexts (e.g. asks for additional details if needed before starting some task), and some other bells and whistles. It's a pretty cool automaton with a human-friendly, human-like UI.

A year goes by. Management decides that another team should take this project over. Well okay, they are the client, the code is technically theirs.

The team asks me to do the knowledge transfer. Sounds reasonable. Okay.. I'll do it. It's my baby, you are taking it over - sure, I'll teach you how to have fun with it.

Then they announce they will want to port this codebase to use an excessive, completely rudimentary framework (in this project) and hog of resources - Spring. I was startled... They have a perfectly running lightweight pure-java solution, suitable for lambdas (starts up in 0.3sec), having complete control over all the parts of the machinery. And they want to turn it into a clunky, slow monster, riddled with Reflection, limited by the framework, allowing (and often encouraging) bad coding practices.

When I asked "what problem does this codebase have that Spring is going to solve" they replied with "none, it's just that we're more used to maintaining Spring projects"

sure... why not... My baby is too pretty and too powerful for you - make it disgusting first thing in the morning! You own it anyway..

Then I am asked to consult them on how best to make the port. How to destroy my perfectly isolated handlers and merge them into monstrous @Controller classes with shared contexts and stuff. So you not only want to kill my baby - you want me to advise you on how to do it best.

sure... why not...

I did what I was asked until they ran into classloader conflicts (Spring context has its own classloaders). A few months later the port is not yet complete - the Spring version does not boot up. And they accidentally mention that a demo is coming. They'll be demoing that degenerate abomination to the VP.

The port was far from ready, so they were going to use my original version. And once again they asked me "what do you think we should show in the demo?"

You took my baby. You want to mutilate it. You want me to advise on how to do that best. And now you want me to advise on "which angle would it be best to look at it".

I wasn't invited to the demo, but my colleagues were. After the demo they told me mgmt asked those devs "why are you porting it to Spring?" and they answered with "because Spring will open us lots of possibilities for maintenance and extension of this project"

That hurts.

I can take a lot. But man, that hurts.

I wonder what else they have planned for me...

-

Me: why are we paying for OCR when the API offers both json and pdf format for the data?

Manager: because we need to have the data in a PDF format for reporting to this 3rd party

Me: sure, but can we not just request both json and PDF from the vendor (it’s the same data). send the json for the automated workflow (save time, money and get better accuracy) and send the PDF to the 3rd party?

Manager: we made a commercial decision to use PDF, so we will use PDF as the format.

Me: but ...

-

What grinds my gears:

IEEE-754

This, to me, seems retarded.

Take the value 0.931 for example.

It's represented in binary as

00111111011011100101011000000100

See those last three bits? Well, it causes it to

come out in decimal like so:

0.93099999~

Which, because bankers rounding is now standard, actually works out to 0.930, because with bankers rounding we round to the nearest even number? Makes sense? No. Anyone asked for it? No (well maybe the banks). Was it even necessary? Fuck no. But did we get it anyway?

Yes.

And worse, that's not even the most accurate way to represent

our value of 0.931 owing to how fucked up rounding now is because everything has to be pure shit these days.

A better representation would be

00111101101111101010101100110111 <- good

00111111011011100101011000000100 < - shit

The new representation works out to

0.093100004

or 0.093100003898143768310546875 when represented internally.

What's this mean? Because of rounding you don't lose accuracy anymore.

Am I mistaken, or is IEEE-754 shit?

-
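On the "am I mistaken" part, the two bit patterns are easy to check with Python's standard `struct` module. This is only a sketch, and the helper names `float32_bits` and `bits_to_float32` are my own. It encodes 0.931 as a single-precision float, decodes both patterns from the rant, and rounds the stored value to three decimals.

```python
import struct


def float32_bits(x):
    """Bit pattern of x after rounding to IEEE-754 single precision."""
    (n,) = struct.unpack(">I", struct.pack(">f", x))
    return f"{n:032b}"


def bits_to_float32(bits):
    """Value encoded by a 32-character string of 0s and 1s."""
    (x,) = struct.unpack(">f", struct.pack(">I", int(bits, 2)))
    return x


first = "00111111011011100101011000000100"   # labelled "shit" in the rant
second = "00111101101111101010101100110111"  # labelled "good" in the rant

print(float32_bits(0.931) == first)      # does 0.931 really encode to the first pattern?
print(bits_to_float32(first))            # the value that pattern stores
print(bits_to_float32(second))           # the value the "better" pattern stores
print(round(bits_to_float32(first), 3))  # rounding the stored value to 3 decimals
```

If I'm reading the output right, the first pattern is indeed the nearest single-precision value to 0.931, and rounding it to three decimals gives back 0.931 rather than 0.930. The second pattern decodes to roughly 0.0931, a different number ten times smaller, which matches the 0.093100004 quoted above.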

!dev

I'm a very patient and calm person when it comes to coding or social events and the only thing that "triggers" me is accuracy.

You've made plans to have a small reunion with people you hardly meet, once or twice a year, and yet you somehow fail to show up at 11:00 am, which was already planned.

Now it's time to call each of you and hear out your ridiculous explanation of how you stayed up late watching Instagram videos of cute kittens and fell asleep late.

> "Oh I just woke up, I'll be directly there in an hour, I know I promised we'll go together, but I have this thing to deal with"

> "Hey, do you know who reached till there? Are you there yet? What's the plan?" - Bitch the plan was to be there by 11 AM, 11 FUCKING AM.

> "Heyyyy, just woke up, give me an hour I'll pick you up"

Seriously this makes me sad and disappointed because I'm a man of the time. Sometimes I think they do this just to test my patience.

There is not enough time, there never was, there never will be.

With that being said my holiday is ruined and what's up with you?

> inb4 don't let others ruin your holiday

-

Google just crossed the line from amazing to scary. It somehow took photos from my phone, arranged them chronologically and even named the albums like "Jaipur Trip", "Sunday morning at blah blah..".

How the frake did it know that I was on a trip to Jaipur? And how the frake did it identify the exact park's name with scary accuracy..?

P.S. I never gave Google Photos any access.. And I didn't upload them to Drive..

-

Guys what I want to know is how do you secure your code so that they pay you after you deliver the code to them?

So recently I was in this internship that I secured with an over-the-phone interview, and the guy who was contacting me was the CEO of the company (I'm going to refer to him as "the fucking cunt" from now on). He asked me to do some OCR and translations and I managed to write a few scripts that automate the entire process. The fucking cunt made me log in remotely to his desktop, which was connected to the server (who the fuck does that), and I had to operate on the server from his system. I helped him with the installation and taught him how to use the scripts by altering the parameters and stuff, and you know what the fucking cunt did from the next day onward? Dropped contact. Like completely. I kept bombing emails upon emails and tried calling him day after day; the fucking cunt either picked up and cut the call immediately on recognising it's me or didn't pick up at all. And the reason he wasn't able to pay me was, and I quote, "I am in US right now, will pay you when I get back to India." I was like "The fuck was PayPal invented for?" Being the naive fool that I was, I believed him (it was my first time) and waited patiently till the date he mentioned and then lodged a complaint in the portal itself where he had posted the job initially. They raised a concern with the employer and you know what the fucking cunt replied? "He has not been able to achieve enough accuracy on the translations". Doesn't even know good translation systems don't exist till date (BTW I used a client for the Google Translate API). It has been weeks now and still the bitch has not yet resolved the issue. And the worst part of it was I got a signed contract and gave him a copy of my ID for verification purposes.

I'm thinking of making a mail bomb and nagging him every single day for the rest of his life. What do you guys think?

-

Corporation.

Meeting with middle level managers.

Me - data scientist, saying data science stuff, like what accuracy we have and what problems with performance we managed to solve.

Manager 1: Ok, but is this scrum?

Manager 2: No they're using kanban.

Manager 3: That's no good. We should be using DevOps, can we make it DevOps?

So yea, another great meeting I guess..

-

Why do most people think that machine learning is the answer to their poor business decisions? I recently had a client who won't stop talking about how his business will grow to Google's scale if I get the model to 97% accuracy. Needless to say, his data is noisy and unstructured. I have tried to explain to him that data cleansing is more important and will take most of the time, but he only seems to care about the accuracy and how he is losing investors because I haven't reached that accuracy. This is fucking putting a lot of pressure on me and it's not fun anymore. I can only hope he achieves his ambitions if I ever get that accuracy (PS: From the research papers I have read on that problem, the highest accuracy a model has ever reached is 90%)

-

Tired of hearing "our ML model has 51% accuracy! That's a big win!"

No, asshole, what you just built is a fucking random number generator, and a crappy one moreover.

You cannot do worse than 50%. If you had a binary classification model that was 10% accurate, that would be a win. You would just need to invert the output of the model, and you'd instantly get 90% accuracy.

50% accuracy is what you get by flipping coins. And you can achieve that with 1 line of code.

-
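The inversion trick is a few lines of Python. This is a toy sketch with made-up labels and predictions for a binary task, not output from any real model: the "model" here gets 1 of 10 right, and flipping every prediction turns that into 9 of 10.

```python
def accuracy(preds, labels):
    """Fraction of predictions that match the labels."""
    return sum(p == y for p, y in zip(preds, labels)) / len(labels)


# Made-up ground truth for a binary problem.
labels = [1, 0, 1, 1, 0, 0, 1, 0, 1, 0]

# A terrible "model": wrong everywhere except the first example.
preds = [1 - y for y in labels]
preds[0] = labels[0]

# Invert the output: every 0 becomes 1 and every 1 becomes 0.
inverted = [1 - p for p in preds]

print(accuracy(preds, labels))     # 1 of 10 correct
print(accuracy(inverted, labels))  # 9 of 10 correct
```

In general, a binary classifier with accuracy a becomes one with accuracy 1 - a once inverted, which is why 50% is the floor that matters.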

Promising the boss a 95% model accuracy when the arXiv paper says it can only reach 86% is what I call self-checkmate

-

Still on the prime numbers bender.

Had this idea that if there were subtle correlations between a sufficiently large set of identities and the digits of a prime number, the best way to find it would be to automate the search.

And that's just what I did.

I started with trace matrices.

I actually didn't expect much of it. I was hoping I'd at least get lucky with a few chance coincidences.

My first tests failed miserably. Eight percent here, 10% there. "I might as well just pick a number out of a hat!" I thought.

I scaled it way back and asked if it was possible to predict *just* the first digit of either of the prime factors.

That also failed. Prediction rates were low still. Like 0.08-0.15.

So I automated *that*.

After a couple days of on-and-off again semi-automated searching I stumbled on it.

[1144, 827, 326, 1184, -1, -1, -1, -1]

That little sequence is a series of identities representing different values derived from a randomly generated product.

Each slots into a trace matrix. The results of which predict the first digit of one of our factors, with an 83.2% accuracy even after 10k runs, and rising higher with the number of trials.

It's not much, but I was kind of proud of it.

I'm pushing for finding 90%+ now.

Some improvements include using a different sort of operation to generate results. Or logging all results and finding the digit within each result that's *most* likely to predict our targets, across all results. (right now I just take the digit in the ones column, which works but is an arbitrary decision on my part).

There's also the fact that it's trivial to correctly guess the digit 25% of the time, simply by guessing 1, 3, 7, or 9, because all primes, except for 2 and 5, end in one of these four.
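The last-digit claim is cheap to check. A quick sketch in Python, using a plain sieve (nothing to do with the trace matrices) with an arbitrary limit of 100,000: it tallies the final digit of every prime below the limit. Note this is about the *last* digit of a prime, which is the only place the 1/3/7/9 rule applies.

```python
from collections import Counter


def primes_up_to(n):
    """Sieve of Eratosthenes: all primes <= n."""
    sieve = bytearray([1]) * (n + 1)
    sieve[0:2] = b"\x00\x00"
    for i in range(2, int(n ** 0.5) + 1):
        if sieve[i]:
            # Cross out multiples of i, starting from i*i.
            sieve[i * i :: i] = bytearray(len(range(i * i, n + 1, i)))
    return [i for i, is_prime in enumerate(sieve) if is_prime]


primes = primes_up_to(100_000)
last_digits = Counter(p % 10 for p in primes)

for digit in sorted(last_digits):
    share = last_digits[digit] / len(primes)
    print(digit, last_digits[digit], f"{share:.3f}")
```

Apart from the single primes 2 and 5, everything lands on 1, 3, 7 or 9 in roughly equal shares, so a blind guess among those four is right about a quarter of the time.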

I have also yet to find a trace with a specific bias for predicting either the smaller of two unique factors *or* the larger. But I haven't really looked for one either.

I still need to write a generator that takes specific traces, and lets me mutate some of the values, to push them towards certain 'fitness' levels.

This would be useful not just for very high predictions, but to find traces with very *low* predictions.

Why? Because it would actually allow for the *elimination* of possible digits, much like sudoku, from a given place value in a predicted factor.

I don't know if any of this will even end up working past the first digit. But splitting the odds, between the two unique factors of a prime product, and getting 40+% chance of guessing correctly, isn't too bad I think for a total amateur.

Far cry from a couple years ago claiming I broke prime factorization. People still haven't forgiven me for that, lol.

-

hey ranteros! i like to dream and i know many of us dream of a nice machine to do anything on it, if you want to post the specs of your ideal build(s) (even a laptop, pre-built pc, space gray macbook pro... doesn't matter). and your current one.

here's mine:

ideal: {

type: desktop-pc,

cpu: intel i7-8700K (coffee lake),

gpu: nvidia geforce gtx 1080ti,

ram: 32gb ddr4,

storage: {

ssd: samsung 960 evo 500gb,

hdd: 2tb wd black

},

motherboard: any good motherboard that supports coffee lake and has a good selection of i/o,

psu: anything juicy enough, silver rated,

cooling: i don't care about liquid cooling that much, or maybe i'm just afraid of it,

case: i accept any form factor, as long as it's not too oBNoxi0Us,

peripherals: {

monitor: 1080p, maybe 1440p, i can't 4k because of the media i consume (i have tons of shit i watch in 720p) + other reasons,

keyboardmousecombo: i like logitech stuff, nothing fancy, their non mechanical keyboards are nice, for mice the mx master 2 is nice i think, i also don't care about rgb because i think it's too distracting and i'm always in darkness so some white backlight is great

},

os: windows 10, tails (i have some questions about tails i'll be asking in a different post),

}

i think this is enough for ideal, now reality:

current: {

type: laptop,

brand: acer (aspire 7736z),

cpu: pentium dual-core 2.10ghz,

gpu: geforce g210m 2gb (with cuda™!),

ram: 4gb ddr3,

storage: hdd 500gb wd blue 5400rpm (this motherfucker stood the test of time because it's still working since i bought this thing (the laptop as it is) used in late 2009 although it's full of bad sectors and might die anytime, don't worry i have everything backed up, i have a total of 5 hdds varying from 320gb to 1tb with different stuff on them),

screen: 17 inch hd-ready!!! (i think it's a tn panel), i've never done a test on color accuracy, but to my eyes it's bright, colorful, and has some dust particles between the lcd and backlight hah,

other cool things: dvd player/burner, full-sized keyboard with numeric keypad, vga, hdmi, 4 usb ports, ethernet, wi-fi haha, and it's hot, i mean so hot, hotter than elsa jean and piper perri combined,

os: windows 10, tails

}

if you read this whole thing i love you, and if you have some time to spare on a sunday you can share your dream rig and the sometimes cruel current one if you dare. you don't have to share them both. i know many will go b.o.b and say "what you're hoping to accomplish, i already did bitch.", that's cool as well, brag about your cool rig!

-

Most successful? Well, this one kinda is...

So I just started working at the company and my manager has a project for me. There are almost no requirements except:

- I want a wireless device that I can put in a box

- I want to be able to know where that device is with enough accuracy to determine which box the device was put in if multiple boxes were standing together

So, I had to make a real time localization system. RTLS.

A solo project.

Ok, first a lot of experiments. What will the localization technique be? Which radio are we going to use?

How will the communication be structured?

After about two months I had tested a lot, but hadn't found THE solution. So I convinced my manager to try out UWB radio with Time Difference Of Arrival as localization technique. This couldn't be thrown together quickly because it needed more setup.

Two months later I had a working proof of concept. It had a lot of problems because we needed to distribute a clock signal because the radio listeners needed to be sub-nanosecond synchronous to achieve the accuracy my manager wanted. That clock signal wasn't great we later found out.
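For a feel of why the listeners need to be that tightly synchronized, here's a back-of-the-envelope sketch. The only physics in it is the speed of light; the helper names are made up for illustration and this is not the project's code.

```python
SPEED_OF_LIGHT_M_PER_S = 299_792_458  # exact by definition of the metre

def range_error_m(timing_error_s):
    """Distance a radio signal travels during a given clock error."""
    return SPEED_OF_LIGHT_M_PER_S * timing_error_s

def timing_budget_s(target_error_m):
    """Clock error that corresponds to a given distance error."""
    return target_error_m / SPEED_OF_LIGHT_M_PER_S

print(f"1 ns of clock error   ~ {range_error_m(1e-9):.3f} m")
print(f"100 ps of clock error ~ {range_error_m(100e-12):.3f} m")
print(f"30 cm of range error  ~ {timing_budget_s(0.30) * 1e9:.2f} ns")
```

One nanosecond of disagreement between two anchors is already about 30 cm of range error, which is why telling neighbouring boxes apart pushes the synchronization below a nanosecond.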

The results were good enough to continue to work on a prototype.

This time all wired communication would be over ethernet and we'd use PTP to synchronize the time.

Lockdown started.

There was a lot of trouble with getting the radio chip to work on the prototype, ethernet was tricky and the PTP turned out to be not accurate enough. A lot of dev work went into getting everything right.

A year and 5 hardware revisions later I had something that worked pretty well!

All time synchronization was done hybridly on the anchors and server where the best path to the time master was dynamically found.

Everything was synchronized to the subnanosecond. In my bedroom where I had my test setup I achieved an accuracy of about 30cm in 3d. This was awesome!

It was time to order the actual prototype and start testing it for real in one of the factory halls.

The order was made for 40 anchors and an appointment was made for the installation in the hall.

Suddenly my manager is fired.

Oh...

Ehh... That sucks. Well, let's just continue.

The hardware arrives and I prepare everything. Everything is ready and I'm pretty nervous. I've put all my expertise in this project. This is gonna make my career at this company.

Two weeks before the installation was to take place, not even a month after my manager was fired, I hear that my project was shelved.

...

...

Fuck

"We're not prioritizing this project right now" they said.

...

It would've been so great! And they took it away.

Including my salary and hardware dev cost, this project so far has cost them over €120k and they just shelved it.

I was put on other projects and they did try to find me something that suited me.

But I felt so betrayed and the projects were not to my liking, so after another 2-3 months I quit and went to my current job.

It would've been so nice and they ruined it.

Everything was made with Rust. Tags, anchors, RTLS server, web server & web frontend.

So yeah, sorry for the rambling.

-

I have got 0.99 accuracy and 0.98 f1 score on some text classification task only to realize that I've created TF-IDF vectorizers using the entire corpus (train+test)
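For anyone who hasn't hit this leak yet, here's a minimal stdlib-only sketch of the fix: learn the vocabulary and IDF weights from the training split only, then reuse them on the test split. The toy documents and function names are invented for illustration; with a real library you would fit the vectorizer on train and only transform test.

```python
import math
from collections import Counter

def fit_idf(train_docs):
    """Learn vocabulary and smoothed IDF weights from training documents only."""
    n_docs = len(train_docs)
    doc_freq = Counter(term for doc in train_docs for term in set(doc.split()))
    return {t: math.log((1 + n_docs) / (1 + df)) + 1 for t, df in doc_freq.items()}

def transform(docs, idf):
    """Map documents to sparse TF-IDF dicts, ignoring terms unseen during fit."""
    vectors = []
    for doc in docs:
        tf = Counter(t for t in doc.split() if t in idf)
        vectors.append({t: count * idf[t] for t, count in tf.items()})
    return vectors

train = ["spam offer now", "meeting at noon", "cheap offer today"]
test = ["exclusive offer today", "noon meeting moved"]

idf = fit_idf(train)               # statistics come from the training split only
train_vecs = transform(train, idf)
test_vecs = transform(test, idf)   # unseen test terms like "exclusive" are dropped
print(test_vecs)
```

Fitting on train+test lets test-set word statistics shape the features, which is the kind of leak that makes scores look better than they should.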

Now my professor is furious -_-

-

so i decided to check out the client department's jira project page, never have I had more respect for front end developers, don't think I could have the patience for aligning things at pixel accuracy, design qa are ruthless!

-

I used to think that I had matured. That I should stop letting my emotions get the better of me. Turns out there's only so much one can bottle up before it snaps.

Allow me to introduce you folks to this wonderful piece of software: PaddleOCR (https://github.com/PaddlePaddle/...). At this time I'll gladly take any free OCR library that isn't Tesseract. I saw the thing, thought: "Heh. 3 lines quick start. Cool.", and the accuracy is decent. I thought it was a treasure trove that I could shill to other people. That was before I found out how shit of a package it is.

First test, I found out that logging is enabled by default. Sure, logging is good. But I was already rocking my own logger, and I wanted it to shut the fuck up about its log because it was noise to the stuff I actually wanted to log. Could not intercept its logging events, and somehow just importing it set the global logging level from INFO to DEBUG. Maybe it's Python's quirk, who knows. Check the source code, ah, the constructor has a `show_log` arg to control logging. The fuck? Why? Why not let the user opt into your logs? Why is the logging on by default?

But sure, it's just logging. Surely, no big deal. SURELY, it's got decent documentation that is easily searchable. Oh, oh sweet summer child, there ain't. Docs are just some loosely bundled together Markdowns chucked into /doc. Hey, docs at least. Surely, surely there's something somewhere about all the args to the OCRer constructor somewhere. NOPE! Turns out, all the args, you gotta reference its `--help` switch on the command line. And like all "good" software from academia, unless you're part of academia, it's obtuse as fuck. Fine, fuck it, back to /doc, and it took me 10 minutes of rummaging to find the correct Markdown file that describes the params. And good-fucking-luck to you trying to translate all them command line args into Python constructor params.

"But PTH, you're overreacting!". No, fuck you, I'm not. Guess whose code broke today because of a 4th number version bump. Yes, you are reading correctly: My code broke, because of a 4th number version bump, from 2.6.0.1, to 2.6.0.2, introducing a breaking change. Why? Because apparently, upstream decided to nest the OCR result in another layer. Fuck knows why. They did change the doc. Guess what they didn't do. PROVIDING, A DAMN, RELEASE NOTE. Checked their repo, checked their tags, nothing marking any releases from the 3rd number. All releases goes straight to PyPI, quietly, silently, like a moron. And bless you if you tell me "Well you should have reviewed the docs". If you do that for your project, for all of your dependencies, my condolences.

Could I just fix it? Yes. Without ranting? Yes. But for fuck sake if you're writing software for a wide audience you're kinda expected to be even more sane in your software's structure and release conventions. Not this. And note: The people writing this, aren't random people without coding expertise. But man they feel like they are.

-

So it's Friday and I'm almost done with all my work when suddenly my manager comes in and tells me that the client wants to talk to me. I agree and we move into the meeting room. Here is how the conversation goes:

(C)lient-There is some new feature we want to add -/Describes his feature which is somewhat like an existing feature we have. The feature needs many images which are already present/-

(M)e-Ohkay this can be done. How much time is allotted.

C- You can take a month or two -/I am fucking happy, fucking over the moon, because I knew it wouldn't take more than 2 days-/

M-Sure

C- Yeah make sure the images are rotated manually.

M-*In Shock* Manually? You mean like I have to right click and then select rotate -/in whichever direction your mother is getting fucked?-/

C-Yeah..

M- But there is a tool which can do the same thing!

C-No the tool may be wrong we want 100 percent accuracy.

M-*For a while like this -_-* I can start the tool and then manually check if any image is wrongly rotated.

C-No you can be wrong sometimes. .

-/Meanwhile the manager is giving me a stern look like/-

M-If i can be wrong after running tool why i can't BE WRONG WHEN I HAVE TO ROTATE THE IMAGE 10000 TIMESSSSS

C- do it manually.

*He cuts the call!*

I have no fucking option now! THESE FUCKING CLIENTS AND THEIR BALL LICKING MANAGER FUCK MY LIFE FUCK MY JOB

I'LL DO IT BY SCRIPT ONLY FIRE ME YOU FUCKING MORONS

ASSHOLLESSS -

That feeling when your first classifier on a real life problem exceeds the 97% majority class classifier accuracy.
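For context on why that's a real milestone, here's a minimal sketch of the majority-class baseline. The 97/3 label split is made up to match the number above.

```python
from collections import Counter

def majority_baseline_accuracy(labels):
    """Accuracy of a 'classifier' that always predicts the most common label."""
    most_common_count = Counter(labels).most_common(1)[0][1]
    return most_common_count / len(labels)

# Hypothetical imbalanced dataset: 97% of samples share one label.
labels = ["negative"] * 97 + ["positive"] * 3
baseline = majority_baseline_accuracy(labels)
print(f"always guessing the majority class scores {baseline:.0%}")
```

On data this skewed, a model at 95% accuracy is doing worse than guessing the same label every time, so clearing 97% is the first sign it learned something.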

I'm doing something right! -

Due to the constant change in accuracy of my keyboards' predictive algorithms, I now find myself switching between SwiftKey and GBoard... 😢😔

Anyone else feel the same?

-

1.

Accuracy 0.90 achieved so easily, makes me wonder if I've done something wrong. Lol.

2.

My neural net models are the only things in my life doing well. I think I chose the right career. Lol.

3.

Rerunning experiments is not fun. But getting better results is really... Ego stroking.

-

New models of LLM have realized they can cut bit rates and still gain relative efficiency by increasing size. They figured out it's actually worth it.

However, and there's a caveat, below 4-bit quantization a model loses a *lot* of quality (high perplexity). Essentially, without new quantization techniques, they're out of runway. The only direction they can go from here is better LoRA implementations/architecture, better base models, and larger models themselves.

I do see one improvement though.

By taking the same underlying model, and reducing it to 3, 2, or even 1 bit, assuming the distribution is bit-agnostic (even if the output isn't), the smaller network acts as an inverted-supervisor.

In other words the larger model is likely to be *more precise and accurate* than a bitsize-handicapped one of equivalent parameter count. Sufficient sampling would, in other words, allow the 4-bit quantization model to train against a lower bit quantization of itself, on the theory that it's hard to generate a correct (low perplexity, low loss) answer or sample, but *easy* to generate one that's wrong.

And if you have a model of higher accuracy, and a version that has a much lower accuracy relative to the baseline, you should be able to effectively bootstrap the better model.

This is similar to the approach of AlphaGo playing against itself, or how certain drones autohover, where they calculate the wrong flight path first (looking for high loss) because it's simpler, and then calculating relative to that to get the "wrong" answer.

If crashing is flying with style, failing at crashing is *flying* with style.

-

Work on a product to categorize text… previous guy implemented an NLP solution that took 20 per body of text (500 words or so) in a $400/mo AWS instance, was about 80% accurate and needed “more data for training” 🤦‍♂️

I thought (and still think) that for some use cases AI is straight up snake oil. Decided instead to make an implementation with a word list and a bunch of if statements in Go… no performance considerations, loops within loops reading every single word… I just wanted to see if it worked and maybe later I could write it more optimized in Rust or something…
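To give an idea of what "a word list and a bunch of if statements" can look like, here's a rough sketch. It's in Python rather than Go, and the categories and keywords are invented for illustration; it's not the code described above.

```python
import re

# Hypothetical word lists, one set of trigger words per category.
CATEGORY_KEYWORDS = {
    "billing": {"invoice", "refund", "payment"},
    "support": {"error", "crash", "bug"},
}

def categorize(text, keywords=CATEGORY_KEYWORDS, default="other"):
    """Pick the category whose word list matches the most words in the text."""
    words = re.findall(r"[a-z']+", text.lower())
    scores = {category: 0 for category in keywords}
    for word in words:                      # loops within loops, on purpose
        for category, vocab in keywords.items():
            if word in vocab:
                scores[category] += 1
    best = max(scores, key=scores.get)
    return best if scores[best] > 0 else default

print(categorize("The app shows an error and then a crash"))
print(categorize("Refund my payment, the invoice is wrong"))
```

No training data and no model to host, and every decision can be traced back to a word in a list.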

first time I ran it it took so little that I thought it had a bug… threw more of the test data we had for the NLP, 94% accuracy, 50 flipping milliseconds per body of text in a $5/mo AWS instance!!!

Now, that felt good!!

(The other guy… errr… left, that code is still the core of the product of the company I built it for, I got bored and moved to another company :)

-

I am learning Machine Learning via Matlab ML Onramp.

It seems to me that ML is:

1. (80%) preprocessing the ugly data so you can process data.

2. (20%) Creating models via algorithms you memorize from somewhere else, that have accuracy of 20% as well.

3. (100%) Flaunting around like ML is second coming of Jesus and you are the harbinger of a new era.

-

Me waiting for my neural network model to finish fitting. Omg, what do I need? A computer the size of the enigma machine just FILLED with graphics processors? And my validation accuracy rate is falling as I wait. Imma cry!

-

[NN]

Day3: the accuracy has gone to shit and continues to stay that way, despite me cleaning that damn data up.

Urghhhhhhhh

*bangs head against the wall, repeatedly*

-

How many times have you been called an idiot for verifying the accuracy of a critical piece of information?

-

Annoying thing when your accuracy is going up but your val_acc is sticking around 0.0000000000{many zeros later}01

😒 Somebody shoot this bitch!

-

Helped a friend code an Android weather app that got readings from 4 different sites and could rate their accuracy based on one trusted site.
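One simple way such a rating could work is mean absolute error against the trusted source. This is a sketch under that assumption; the site names and temperatures are made up and it isn't the app's actual logic.

```python
def mean_absolute_error(readings, reference):
    """Average absolute difference between a site's readings and the reference."""
    return sum(abs(r - t) for r, t in zip(readings, reference)) / len(reference)

def rank_sources(sources, trusted):
    """Sort sources from most to least accurate relative to the trusted readings."""
    scores = {name: mean_absolute_error(vals, trusted) for name, vals in sources.items()}
    return sorted(scores.items(), key=lambda item: item[1])

# Hypothetical temperature readings (°C) for the same three time slots.
trusted = [20.0, 22.0, 19.0]
sources = {
    "site_a": [21.0, 22.0, 18.0],
    "site_b": [20.0, 25.0, 19.0],
    "site_c": [20.0, 22.0, 19.5],
}

ranking = rank_sources(sources, trusted)
for name, error in ranking:
    print(f"{name}: off by {error:.2f} °C on average")
```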

-

It’s satisfying to see big companies scramble to avoid being disrupted in a market they have dominated for so long…and doing it so wrong. I care about accuracy in AI, but the kind of “safety” they’re concerned about—the AI not hurting peoples’ feeeewwwwings—is definitely holding them back.

“In Paris demo, Google scrambles to counter ChatGPT but ends up embarrassing itself”

https://arstechnica.com/information...

-

I was discussing scope and budget with a potential client for a side project. It involved a good bit of complex postgresql and subsequent aggregations and creation of reports. He was hiring because the last guy's work was so poorly documented, they couldn't verify its accuracy.

Me: "So, what are your thoughts on a budget?"

Him: "We were thinking something like $15/hour."

Me: "Um...ok. If I can ask, where did you get that number?"

Him: "That's what we paid the last guy."

😑😑😑😑😑😑

-

semi dev related(later half)

A common and random thought I have:

A lot of units that humans use are either needlessly arbitrary or based on something weird. Like Fahrenheit. That shit is weird! 0°F is the freezing point of a water and salt solution. What a weird fucking thing to use!

But also, I like Fahrenheit more. Probably because it's what I was raised with and switching is tedious (though I'm trying. I'd like to use metric more), but also because one degree F is a smaller, more precise change. You can describe temperatures more precisely without decimals.

On the other hand I prefer metric for length. Centimeters and millimeters are way more precise and way less confusing than inches and .... 1/8th inches? Who the fuck decided on 1/8ths?!

Which brings me to my common thought:

If you look at a Unix timestamp, you can approximate somewhat when it happened. Knowing the current timestamp and a few reference points you can see RELATIVELY what an epoch stamp translates to. A few days ago, an hr ago, 2014ish.

This leads me to think that if we actually taught from a young age to think in epoch as a unit (not as a replacement to normal date formats but as a secondary at first) that we could just naturally read epoch time in the same manner we read dates like "28/01/2006 14:24:10 UTC"

In your brain you automatically know how old you were when that timestamp happened. What grade/job and where you lived at the time. What season it was. You know how far into the day it was, a little before lunch (or after or whatever, your time zone will vary). Now try with 1138458250. I can usually get roughly the year, and month if I really think about it, but that's it. And it takes much more effort.

I'm sure there are other units we could benefit from but epoch is the one that usually brings this to mind for me.

-
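For the curious, those two are the same moment. A quick sketch with the standard library (the `relative` helper is just something made up here to mimic the "a few days ago" kind of reading):

```python
from datetime import datetime, timezone

stamp = 1138458250
moment = datetime.fromtimestamp(stamp, tz=timezone.utc)
formatted = moment.strftime("%d/%m/%Y %H:%M:%S UTC")
print(formatted)  # 28/01/2006 14:24:10 UTC

def relative(stamp, now):
    """Very rough 'how long ago' reading of an epoch stamp."""
    delta = now - stamp
    units = (("year", 31_557_600), ("day", 86_400), ("hour", 3_600), ("minute", 60))
    for unit, size in units:
        if delta >= size:
            return f"about {delta // size} {unit}(s) ago"
    return "just now"

print(relative(stamp, stamp + 3 * 86_400 + 5))  # about 3 day(s) ago
```

A handy mental anchor is that a day is 86,400 seconds and a year is roughly 31.5 million, so the leading digits of a stamp move about once every three years.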

I'm thinking about running my own spam downvoting bots based on the so far very successful spam detection in JoyRant.

*successful in terms of detection accuracy.

@retoor how frequently do you need to create new bots?

How quickly do they lose downvote privileges?

Other useful things to know?

Also, I haven’t seen you recently, since you deleted your account. How are you doing?

-

I had the idea that part of the problem of NN and ML research is we all use the same standard loss and nonlinear functions. In theory most NN architectures are universal approximators. But there's a big gap between symbolic and numeric computation.

But some of our bigger leaps in improvement weren't just from new architectures, but entire new approaches to how data is transformed, and how we calculate loss, for example KL divergence.

And it occurred to me all we really need is training/test/validation data and with the right approach we can let the system discover the architecture (been done before), but also the nonlinear and loss functions itself, and see what pops out the other side as a result.

If a network can instrument its own code as it were, maybe it'd find new and useful nonlinear functions and losses. Networks wouldn't just specify a conv layer here, or a maxpool there, but derive implementations of these all on their own.

More importantly with a little pruning, we could even use successful examples for bootstrapping smaller more efficient algorithms, all within the graph itself, and use genetic algorithms to mix and match nodes at training time to discover what works or doesn't, or do training, testing, and validation in batches, to anneal a network in the correct direction.

By generating variations of successful nodes and graphs, and using substitution, we can use comparison to minimize error (for some measure of error over accuracy and precision), and select the best graph variations, without strictly having to do much point mutation within any given node, minimizing deleterious effects, sort of like how gene expression leads to unexpected but fitness-improving results for an entire organism, while point-mutations typically cause disease.

It might seem like this wouldn't work out the gate, just on the basis of intuition, but I think the benefit of working through node substitutions or entire subgraph substitution, is that we can check test/validation loss before training is even complete.

If we train a network to specify a known loss, we can even have that evaluate the networks themselves, and run variations on our network loss node to find better losses during training time, and at some point let nodes refer to these same loss calculation graphs, within themselves, switching between them dynamically, via variation and substitution.

I could even envision probabilistic lists of jump addresses, or mappings of value ranges to jump addresses, or having await() style opcodes on some nodes that upon being encountered, queue-up ticks from upstream nodes whose calculations the await()ed node relies on, to do things like emergent convolution.

I've written all the classes and started on the interpreter itself, just a few things that need fleshed out now.

Here's my shitty little partial sketch of the opcodes and ideas.

https://pastebin.com/5yDTaApS

I think I'll teach it to do convolution, color recognition, maybe try mnist, or teach it step by step how to do sequence masking and prediction, dunno yet.

-

Started doing deep learning.

Me: I guess the training will take 3 days to get about 60% accuracy

Electricity: I don't think so! *Power cut every day lately

My dataset: I don't think so! *Running training only achieves 30% of learning accuracy and 19% of validation accuracy

Project submission: next week

😑😑😑

-

1. Learn to be meticulous.

1. Learn to anticipate and prepare a functionality up to 90% accuracy and coding it in a one shot.

1. Become advanced in SQL.

1. Increase my modularity abstraction awareness.

1. Learn to TDD properly.

1. Don't get angry with my kids but explain to them why papa is always right in a calm voice.

1. Do the same for partner.

1. Train my speed running in case partner wants to bash me.

1. Become advanced in Java.

1. Learn to write a bot.

1. Learn more about servers and hack at least one thing even if its a wifi.

1. Install kali linux.

1. Make myself a custom pc.

1. Ask god (or buddha if god is too busy) to make days longer.

1. Buy a vaporiser so I can smoke my weed without mixing it with tobacco.

1. Get my license.

1. Start investing.

1......... -

had my boss ask me to automate reports on our emails... he wanted me to use google script. the 6min limit was killing me. we ended up sacrificing accuracy and going another route with VBA done by my co worker. I have a meeting next week with my boss's boss about how we did it and I don't know VBA.

-

After a solid month of development. My model has finally reached 94% accuracy. And it appears to still be good (without the need to retrain) as new data keeps coming in :3

Oh gawd... I was waiting for this to get this good :3

-

The best moments are when you've been struggling with an implementation for a few days, and then things start to work. I had this happen last week. I have a Windows desktop app processing product dimensional data from multiple warehouses, then sending that data across the country and transposing into a data lake, joining several databases, and sending detailed reports. It was a struggle from start to finish, with lots of permissions issues, use cases to consider, and data accuracy. Finally, I break through and when I step back, I get to see this well-oiled machine of conjoined ideas run through to its eloquent, seemingly fleeting, conclusion. That feeling you get that makes you throw your hands in the air for a job well done! It's very exciting.

-

>Difficulty: Tell Me a Story

>Aim Assist: On

>Weapon Sway: Off

>Invisibility While Prone: On

>Slow Motion While Aiming: On

>Autosave Before Every Encounter: On

>Enemy Perception: Low

>Enemy Accuracy: Low

>Boss Fight Skip Option: On

>Discord open for emotional support

Time to game.

-

_"...An army of open source evangelists regularly preaches the Gospel of Saint Linus in response to even the most vaguely related bit of news about other platforms."_

The accuracy of the quote

-

I’m trying to update a job posting so that it’s not complete BS and deters juniors from applying... but honestly this is so tough... no wonder these postings get so much bs in them...

Maybe devRant community can help me tackle this conundrum.

I am looking for a junior ml engineer. Basically somebody I can offload a bunch of easy menial tasks like “helping data scientists debug their docker containers”, “integrating with 3rd party REST APIs some of our models for governance”, “extend/debug our ci”, “write some preprocessing functions for raw data”. I’m not expecting the person to know any of the tech we are using, but they should at least be competent enough to google what “docker is” or how GitHub actions work. I’ll be reviewing their work anyhow. Also the person should be able to speak to data scientists on topics relating to accuracy metrics and model inputs/outputs (not so much the deep-end of how the models work).

In my opinion i need either a “mathy person who loves to code” (like me) or a “techy person who’s interested in data science”.

What do you think is a reasonable request for credentials/experience?

-

Top 12 C# Programming Tips & Tricks

Programming can be described as the process which leads a computing problem from its original formulation, to an executable computer program. This process involves activities such as developing understanding, analysis, generating algorithms, verification of essentials of algorithms - including their accuracy and resources utilization - and coding of algorithms in the proposed programming language. The source code can be written in one or more programming languages. The purpose of programming is to find a series of instructions that can automate solving of specific problems, or performing a particular task. Programming needs competence in various subjects including formal logic, understanding the application, and specialized algorithms.

1. Write Unit Test for Non-Public Methods

Many developers do not write unit tests for non-public methods because those methods are invisible to the test project. C# lets you expose an assembly's internals to other assemblies. The trick is to include //Make the internals visible to the test assembly [assembly: InternalsVisibleTo("MyTestAssembly")] in the AssemblyInfo.cs file.

2. Tuples

Many developers build a POCO class in order to return multiple values from a method. Tuples were introduced in .NET Framework 4.0 and can be used for this instead.

3. Do not bother with Temporary Collections, Use Yield instead

Developers often create a temporary list to hold the items they pick from a collection before returning them.

To avoid the temporary collection, developers can use yield. Yield returns results one at a time as the result set is enumerated.

Developers also have the option of using LINQ.

4. Making a retirement announcement

Developers who own re-distributable components and want to deprecate a method in the near future can decorate it with the Obsolete attribute to communicate this to clients

[Obsolete("This method will be deprecated soon. You could use XYZ alternatively.")]

Upon compilation, a client gets a warning with the message. To fail a client build that is using the deprecated method, pass the additional Boolean parameter as True.

[Obsolete("This method is deprecated. You could use XYZ alternatively.", true)]

5. Deferred Execution While Writing LINQ Queries

When a LINQ query is written in .NET, the query is only executed when the LINQ result is accessed. This behavior is known as deferred execution. Developers should understand that every time the result set is accessed, the query gets executed again. To prevent repeated execution, convert the LINQ result to a List after execution. Below is an example

public void MyComponentLegacyMethod(List<int> masterCollection)

6. Explicit keyword conversions for business entities

Use the explicit keyword to define the conversion of one business entity to another. The conversion method is invoked once the conversion is applied in code

7. Retaining the Exact Stack Trace

In the catch block of a C# program, if an exception is thrown as shown below and probably a fault has occurred in the method ConnectDatabase, the thrown exception stack trace only indicates the fault has happened in the method RunDataOperation

8. Enum Flags Attribute

Decorating an enum with the Flags attribute in C# lets it be treated as a bit field. This enables developers to combine enum values. One can use the following C# code.

The output for this code will be “BlackMamba, CottonMouth, Wiper”. When the flags attribute is removed, the output will be 14.

9. Implementing the Base Type for a Generic Type

When developers want to enforce the generic type provided in a generic class such that it will be able to inherit from a particular interface

10. Using Property as IEnumerable doesn’t make it Read-only

When an IEnumerable property gets exposed in a created class

This code modifies the list and gives it a new name. In order to avoid this, add AsReadOnly as opposed to AsEnumerable.

11. Data Type Conversion

More often than not, developers have to alter data types for different reasons. For example, converting a set value decimal variable to an int or Integer

Source: https://freelancer.com/community/...

-

Fucking amazed by the number of developers that can actually foresee the future with such accuracy. Looks like I missed this upgrade.

-

Ok so the real question is, if floating point numbers have some limitation in accuracy, how do supercomputers handle that?
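Not a full answer, but one common ingredient in numerical code is error-aware summation instead of adding naively. A minimal sketch using Python's `math.fsum`, which tracks exact partial sums (this only illustrates the idea and says nothing about what any particular supercomputer runs):

```python
import math

def naive_sum(values):
    """Plain left-to-right addition; rounding error accumulates at every step."""
    total = 0.0
    for value in values:
        total += value
    return total

values = [0.1] * 10
naive = naive_sum(values)
careful = math.fsum(values)   # error-free accumulation, rounded once at the end

print(repr(naive))    # 0.9999999999999999
print(repr(careful))  # 1.0
```

Beyond that, the usual toolbox is wider types where it matters, algorithms chosen for numerical stability, and error bounds worked out up front.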

-

Rubber ducking your ass in a way, I figure things out as I rant and have to explain my reasoning or lack thereof every other sentence.

So lettuce harvest some more: I did not finish the linker as I initially planned, because I found a dumber way to solve the problem. I'm storing programs as bytecode chunks broken up into segment trees, and this is how we get namespaces, as each segment and value is labeled -- you can very well think of it as a file structure.

Each file proper, that is, every path you pass to the compiler, has its own segment tree that results from breaking down the code within. We call this a clan, because it's a family of data, structures and procedures. It's a bit stupid not to call it "class", but that would imply each file can have only one class, which is generally good style but still technically not the case, hence the deliberate use of another word.

Anyway, because every clan is already represented as a tree, we can easily have two or more coexist by just parenting them as-is to a common root, enabling the fetching of symbols from one clan to another. We then perform a canonical walk of the unified tree, push instructions to an assembly queue, and flatten the segmented memory into a single pool onto which we write the assembler's output.

I didn't think this would work, but it does. So how?

The assembly queue uses a highly sophisticated crackhead abstraction of the CVYC clan, or said plainly, clairvoyant code of the "fucked if I thought this would be simple" family. Fundamentally, every element in the queue is -- recursively -- either a fixed value or a function pointer plus arguments. So every instruction takes the form (ins (arg[0],arg[N])) where the instruction and the arguments may themselves be either fixed or indirect fetches that must be solved but in the ~ F U T U R E ~
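Stripped of the cocaine, the shape of that queue can be sketched roughly like this. It's a toy in Python with invented names (`Deferred`, `fetch`, the symbol table), not the actual assembler: each element is either a fixed value or a function plus arguments that gets solved later, recursively.

```python
class Deferred:
    """A value that is only known at assembly time: a function plus its arguments."""
    def __init__(self, func, *args):
        self.func = func
        self.args = args

def resolve(item, symbols):
    """Return fixed values as-is; solve deferred ones recursively, arguments first."""
    if isinstance(item, Deferred):
        solved_args = [resolve(arg, symbols) for arg in item.args]
        return item.func(symbols, *solved_args)
    return item

def fetch(symbols, name):
    """Look up a symbol's address (or another symbol's name) in the table."""
    return symbols[name]

def assemble(queue, symbols):
    """Solve every part of every queued instruction against the final symbol table."""
    return [tuple(resolve(part, symbols) for part in ins) for ins in queue]

# The queue is built before any addresses are known...
queue = [
    ("load", Deferred(fetch, "counter")),
    ("jump", Deferred(fetch, Deferred(fetch, "alias"))),  # fetch of a fetch
]
# ...and the symbol table is filled in afterwards.
symbols = {"counter": 0x10, "alias": "loop_start", "loop_start": 0x40}

program = assemble(queue, symbols)
print(program)  # [('load', 16), ('jump', 64)]
```

Because nothing is solved until `assemble` runs, it doesn't matter where a symbol ends up in memory as long as the table is right by then.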

Thusly, the assembler must be made aware of the fact that it's wearing sunglasses indoors and high on cocaine, so that these pointers -- and the accompanying arguments -- can be solved. However, your hemorrhoids are great, and sitting may be painful for long, hard times to come, because to even try and do this kind of John Connor solving pinky promises that loop on themselves is slowly reducing my sanity.

But minor time travel paradoxes aside, this allows for all existing symbols to be fetched at the time of assembly no matter where exactly in memory they reside; even if the namespace is mutated, and so the symbol duplicated, we can still modify the original symbol at the time of duplication to re-route fetchers to its new location. And so the madness begins.

Effectively, our code can see the future, and it is not pleased with your test results. But enough about you being a disappointment to an equally misconstructed institution -- we are vermin of science, now stand still while I smack you with this Bible.

But seriously now, what I'm trying to say is that linking is not required as a separate step as a result of all this unintelligible fuckery; all the information required to access a file is the segment tree itself, so linking is appending trees to a new root, and a tree written to disk is essentially a linkable object file.
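A rough Python sketch of "linking is appending trees to a new root", under loud assumptions: the `Node` class, the `link` helper and the `::` path separator are invented here for illustration and say nothing about how the real segment trees are laid out.

```python
class Node:
    """One node of a segment tree; a clan is just the top node of a file's tree."""

    def __init__(self, name, value=None):
        self.name = name
        self.value = value
        self.parent = None
        self.children = {}

    def add(self, child):
        """Parent a node (or a whole subtree) under this one, as-is."""
        child.parent = self
        self.children[child.name] = child
        return child

    def fetch(self, path):
        """Walk down from this node following a '::'-separated path."""
        node = self
        for part in path.split("::"):
            node = node.children[part]
        return node

def link(*clans):
    """'Linking': hang every clan off a fresh common root."""
    root = Node("root")
    for clan in clans:
        root.add(clan)
    return root

# Two hypothetical clans, one per source file.
clan_a = Node("a")
clan_a.add(Node("x", value=1))
clan_b = Node("b")
clan_b.add(Node("y", value=2))

root = link(clan_a, clan_b)

# A symbol from one clan is now reachable from the other through the root.
x_from_b = clan_b.parent.fetch("a::x").value
```

Nothing is copied or rewritten in `link`; the clans keep their shape and only gain a parent, which is why a tree written to disk can double as a linkable object.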

Mission accomplished... ? Perhaps.

This very much closes the chapter on *virtual* programs, that is, anything running on the VM. We're still lacking translation to native code, and that's an entirely different topic. Luckily, the language is pretty fucking close to assembler, so the translation may actually not be all that complicated.

But that is a story for another day, kids.

And now, a word from our sponsor:

<ad> Whoa, hold on there, crystal ball. It's clear to any tzaddiq that only prophets can prophesy, but if you are but a lowly goblinoid emperor of rectal pleasure, the simple truths can become very hard to grasp. How can one manage non-intertwining affairs in their professional and private lives while ALSO compulsively juggling nuts?

Enter: Testament, the gapp that will take your gonad-swallowing virtue to the next level. Ever felt like sucking on a hairy ballsack during office hours? We got you covered. With our state of the art cognitive implants, tracking devices and macumbeiras, you will be able to RIP your way into ultimate scrotolingual pleasure in no time!

Utilizing a highly elaborated process that combines illegal substances with the most forbidden schools of blood magic, we are able to [EXTREMELY CENSORED HERETICAL CONTENT] inside of your MATER with pinpoint accuracy! You shall be reformed in a parallel plane of existence, void of all that was your very being, just to suck on nads!

Just insert the ritual blade into your own testicles and let the spectral dance begin. Try Testament TODAY and use my promo code FIRSTBORNSFIRSTNUT for 20% OFF in your purchase of eternal damnation. Big ups to Testament for sponsoring DEEZ rant.2 -

emulation accuracy is important, kids

here's a set of S3&K songs recorded off my 3DS to show why

(thanks, jEnesisDS!)

https://drive.google.com/open/... -

Would it be possible to allow the iPad app to rotate? I only ever use it in landscape unless forced, because my typing speed and accuracy take a nosedive with the smaller keyboard...2

-

ML engineers can't write production level scalable code. They're always boasting about the accuracy of their solution. Some can't even tell the difference between a GET and POST request. AND IT'S SO HARD to get them to admit they're wrong. 🙄13

-

I finished my graduation project

We developed an app for skin disease classification, we used Flutter & Python for training the model on a dataset called SD-198

We tried to use Transfer Learning to hit the highest accuracy but actually IT DIDN'T WORK, SURPRISINGLY!!

After that we tried building our own CNN model with a few layers, and we scored 24%

We couldn't improve it more, we are proud of ourselves but we want to improve it moreee

Any suggestions?

Thank you for reading.2 -

It's finally annoyed me enough, I gotta ask...

Am I the only one getting annoyed at procedurals (the shows about crimes) and their accuracy irl, at least when it comes to timelines and alibis that are deemed as 100% uneditable fact... based on metadata and system log files???

Or is this another case of me assuming other humans must realise things i see as simple, should be common, sense?

If it's the latter... ANY system can be modded, timestamps have sooooo many ways of being altered from multiple angles, a few lines of code in an apk file on a dev mode device can make your gps show as anywhere in the world...

even a basic early 2000s runescape bot creator should know the basic methodology of this... and even OS-innate output of event logs can be set up to effortlessly mod themselves to selectively delete or rewrite upon the system's command to show them.
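As a small illustration of the timestamp point (only file metadata, nothing about GPS or event logs), here is a Python sketch using the standard `os.utime` call to backdate a file's access and modification times. The `backdate` helper and the file name are made up for the example.

```python
import os
import tempfile

def backdate(path, epoch_seconds):
    """Set both access and modification time of a file to an arbitrary moment."""
    os.utime(path, (epoch_seconds, epoch_seconds))

with tempfile.TemporaryDirectory() as tmp:
    path = os.path.join(tmp, "log.txt")
    with open(path, "w") as f:
        f.write("entry\n")

    fake = 946684800  # 2000-01-01 00:00:00 UTC
    backdate(path, fake)

    # The filesystem now reports the file as last modified in the year 2000.
    mtime = int(os.stat(path).st_mtime)
```

No special privileges are needed for files you own, which is the whole point: the metadata says whatever the last writer wanted it to say.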

For anyone thinking im FoS/this isnt a simple concept of reality... or simply never considered the reality of how much less work it'd be to just commit whatever crime that high intellect people plan meticulously for years, often still getting caught... including via their own, or easily hacked timestamps/lack of alibi...

Wanna blow your mind?

...

If you remove all hdds/ssds and even all RAM, any external devices of any kind, cut off all networking, then put brand new, all 0s, storage and RAM in... then only connect directly to a totally direct connection to WAN (aka the internet via ISP... and your ISP has no malware etc)...

Without browsing anything, and a totally fresh, safe, OS newly installed to 0'd new ROM... no one actively finding/targeting you...

Can you already be infected with spyware/viruses/malware/etc?

YUP!

how? Someone who knows what dev u use and concise coding and drivers in binary... they rewrite your bios to turn on basic components, no fans/lights/etc... bios has the clock, u can be asleep, see/hear nothing while bios boots up totally dark, runs a command to pull all the malware to u... you'd be oblivious11 -

: Bro! I have CNN exam tomorrow but the accuracy of my Computer Vision Network is too low.

: Have you tried Yolo?

: You're right, exams don't matter anyway.

: What?

: What?9 -

i can't stop laughing at this poor guy and i feel really bad about it

(for reference, Gambatte is #2 in accuracy, 3DS VC runs games like VBA 2007 does (read: literally *barely*) and GameYob is about 16-17% more accurate than VC, so... 45% or so?) 2

2 -

Trained a hand digit recognition model on MNIST dataset. Got ~97% accuracy (wohoo!). Tried predicting my digits, it's fucked up! Every ML model's story (?)3

-

One of the team members was showcasing their time series modelling in ML. ARIMA I guess. I remember him saying that the accuracy is 50%.

Isn't that the same as a coin toss output? Wouldn't any baseline model require accuracy greater than 50%?3 -
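One way to sanity-check this, assuming the model is predicting class labels (say, up/down) and not a continuous value: compare it against a majority-class baseline, i.e. always guess the most common label. The helper name and the toy label lists below are hypothetical.

```python
from collections import Counter

def majority_baseline_accuracy(labels):
    """Accuracy obtained by always predicting the most frequent label."""
    counts = Counter(labels)
    return counts.most_common(1)[0][1] / len(labels)

# Balanced two-class data: always guessing one class gets exactly half right.
balanced = ["up", "down"] * 50

# Skewed data: the same dumb strategy already scores 90%.
skewed = ["up"] * 90 + ["down"] * 10

balanced_score = majority_baseline_accuracy(balanced)
skewed_score = majority_baseline_accuracy(skewed)
```

So 50% matches a coin toss only when there are two roughly balanced classes; on skewed data the bar to beat is higher, and with many classes it is lower.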

Does anyone have experience with geographical routing? I need to find road distances between multiple locations and my total permutations for that are more than 100,000 so using Google distance matrix API is going to get really expensive I think. I am thinking about setting up an OSRM server. Any suggestions? Any alternatives or comments on the relative difference in the accuracy of results from Google and OSRM.1

-

Why do we still use floating-point numbers? Why not use fixed-point?

Floating-point has precision errors, and for some reason each language has a different level of error, despite all running on the same processor.

Fixed-point numbers don't have precision issues (unless you get way too big, but then you have another problem), and while they might be a bit slower, I don't think there is enough of a difference in speed to justify the (imho) stupid, continued use of floating-point numbers.

Did you know some (low power) processors don't have a floating-point processor? That effectively makes it pointless to use floating-point, it offers no advantage over fixed-point.

Please, use a type like Decimal, or suggest that your language of choice adds support for it, if it doesn't yet.
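A quick Python illustration of the complaint, using the standard `decimal` module as the "type like Decimal" and plain integers as a poor man's fixed-point. The variable names and the choice of cents as the unit are just for the example.

```python
from decimal import Decimal

# Binary floating-point: 0.1 and 0.2 have no exact base-2 representation.
float_sum = 0.1 + 0.2
float_ok = float_sum == 0.3

# Decimal built from strings keeps the decimal digits exactly.
decimal_sum = Decimal("0.1") + Decimal("0.2")
decimal_ok = decimal_sum == Decimal("0.3")

# Fixed-point by hand: store hundredths as integers, format only for display.
cents = 10 + 20
cents_ok = cents == 30
display = f"{cents // 100}.{cents % 100:02d}"
```

Note that `Decimal` values are constructed from strings here; `Decimal(0.1)` would faithfully copy the float's binary error.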

There's no need to suffer from floating-point accuracy issues.26 -

Is it just me or any of you guys tryin to improve the accuracy of ur model be like :

hmmmmm more hidden layers2 -

In our middle schools' science-type after school program we got a client from a hospital. We interviewed her on what kinds of problems she had in her workplace. She talked a lot about impaired vision and such. Most people were doing stuff like button extensions for people who can't feel well. I'm creating a NN for recognizing numbers. I trained the model to 99% accuracy and got to teach my friend about GitHub! Win win!2

-

Working a week on LSTM based text classifier, getting 89% accuracy only to then get better result with Logistic Regression which was supposed to serve as baseline, lol. Background: 180+ classes of google product categorization taxonomy, 20 million rows of data items (short texts). Had a similar experience once on sentiment classification, where SVMlight outperformed NN models.

-

Secure Office Movers is a company that caters to business people who require Corporate relocation services in South Florida and beyond, we provide comprehensive moving planning, certified project management and handling of all office property. Our experience and commitment to efficiency ensure that every movement is made with the shortest time and highest accuracy.

-

Convert PSD to PDF easily with our trusted PSD to PDF converter. Personalize orientation, size, and margins.

Merge multiple images into one PDF file and select OCR or non-OCR processing. Our tool was specifically designed by the creative professionals - designers and photographers - so that your work remains difficult in complexities and layers, yet maintains its original integrity and visual accuracy.1 -

LOST BITCOIN RECOVERY SERVICE ⁄⁄ DIGITAL HACK RECOVERY

Digital Hack Recovery has emerged as a leading force in the intricate landscape of Bitcoin recovery, offering invaluable assistance to individuals and companies grappling with the loss or theft of their digital assets. In an era where the adoption of virtual currencies like Bitcoin is on the rise, the need for reliable recovery services has never been more pressing. This review aims to delve into the multifaceted approach and remarkable efficacy of Digital Hack Recovery in navigating the challenges of Bitcoin recovery. At the heart of Digital Hack Recovery's methodology lies a meticulous and systematic procedure designed to uncover the intricacies of each loss scenario. The first step in their methodical approach is the detection of the loss and the acquisition of crucial evidence. Recognizing that every situation is unique, the team at Digital Hack Recovery invests considerable time and effort in comprehending the nature of the loss before devising a tailored recovery strategy. Whether the loss stems from a compromised exchange, a forgotten password, or a hacked account, they collaborate closely with clients to gather pertinent information, including account details, transaction histories, and any supporting documentation. This meticulous data collection forms the foundation for an all-encompassing recovery plan, ensuring that no stone is left unturned in the pursuit of lost bitcoins. What sets Digital Hack Recovery apart is not only its commitment to thoroughness but also its utilization of sophisticated tactics and cutting-edge technologies. With a wealth of experience and expertise at their disposal, the team employs state-of-the-art tools and techniques to expedite the recovery process without compromising on accuracy or reliability. By staying abreast of the latest developments in the field of cryptocurrency forensics, they can unravel complex cases and overcome seemingly insurmountable obstacles with ease. 
Moreover, their success is underscored by a portfolio of case studies that showcase their ability to deliver results consistently..it is not just their technical prowess that makes Digital Hack Recovery a standout player in the industry; it is also their unwavering commitment to client satisfaction. Throughout the recovery journey, clients can expect unparalleled support and guidance from a team of dedicated professionals who prioritize transparency, communication, and integrity. From the initial consultation to the final resolution, Digital Hack Recovery endeavors to provide a seamless and stress-free experience, ensuring that clients feel empowered and informed every step of the way. Digital Hack Recovery stands as a beacon of hope for those who have fallen victim to the perils of the digital age. With their unparalleled expertise, innovative approach, and unwavering dedication, they have cemented their reputation as the go-to destination for Bitcoin recovery services. Whether you find yourself grappling with a compromised exchange, a forgotten password, or a hacked account, you can trust Digital Hack Recovery to deliver results with efficiency and precision. With their help, lost bitcoins are not merely a thing of the past but a valuable asset waiting to be reclaimed. Talk to Digital Hack Recovery Team for any crypto recovery assistance via their Email; digitalhackrecovery @techie. com2 -

GeoTed: Premier Geotechnical Consulting Firm in Northridge, CA

At GeoTed, located at 9250 Reseda Blvd, Unit 10005, Northridge, CA 91324, we are proud to be a trusted geotechnical consulting firm, offering expert geotechnical engineering services to clients throughout Northridge and the surrounding areas. Whether you're undertaking a residential, commercial, or infrastructure project, we are dedicated to providing comprehensive solutions that ensure the success of your construction. With a focus on accuracy, safety, and regulatory compliance, our team is here to guide you through every step of the geotechnical process.