
Search - "#python #r"


A story about how a busy programmer became responsible for training interns.

So I was put in charge of a team of interns and had to teach them Linux, coding (Bash, Python and JS) and networking in general.

None of the interns had any technical experience, skills, knowledge or talent.

Furthermore, the task came as a surprise, and I had neither a training plan nor the time.

Case 0:

An intern is asked to connect to a VM, see which interfaces there are and bring up the one that's down (eth1). He shuts down eth0 instead, is immediately disconnected from the machine, and can no longer connect remotely.

Case 1:

An intern researches Bash scripting via a weird Android app and, after an hour or so, creates and runs this function: test(){test|test&}

He fork-bombed the VM all other interns used.

Case 2:

All interns used the same VM despite the fact that I created one for each.

They each saved the same SSH address in PuTTY, just under different names.

Case 3:

After I explicitly explain and demonstrate how to connect to their own VMs, they all connect to the same machine and attempt to create file systems, map them, etc. One intern keeps running "shutdown -r" to test the delay flag, which he never even includes.

Case 4:

All of the interns still somehow connect to the same VM, despite my manually configuring their PuTTY "favorites". Apparently they copy-paste a DNS name that one of them emailed to the entire team. He has also learned about the wall command and keeps scaring his teammates with fake warnings. A female intern actually asked me "how does the screen knows what I look like?!". This was after she got a wall message telling her to eat less because she'd gained weight.

Case 5:

The most motivated intern ran "rm -rf" from his /etc directory.

P.S. All the other interns got disconnected because they were still using his VM.

Case 6:

While I was giving them a presentation about cryptography and explaining how SSH (which they'd been using for the past two weeks) works, an intern asked, "So is this like Gmail?"

I gave him the benefit of the doubt and asked if he meant the authorization process. He replied with a stupid smile "No! I mean that it can send things!".

FML. I have a huge project to finish and have to babysit these art majors who decided to earn "ezy cash many" in high tech.

Adventures will be continued.

I'm a self-taught 19-year-old programmer. I've been coding since I was 10, dropped out of high school and got my first job at 15.

In the early days I was extremely passionate: learning SICP and algorithms, doing Haskell, C/C++, Rust and Assembly, writing toy compilers/interpreters, tweaking Gentoo/Arch. I even got a lambda tattoo on my arm after learning lambda calculus and Church numerals.

My first job was at a company that raised $100,000 on Kickstarter. The CEO was a dumb millionaire hippie who was bored with his money, so he wanted to run a company even though he had no idea what he was doing. He used to talk about how he built our product, even though he had zero technical knowledge whatsoever. He was on the news a few times, which was pretty cringeworthy. The company had only 1 programmer (other than me), who was pretty decent.

We shipped the project, but we soon burned through the Kickstarter money and sales dried up. Instead of trying to acquire customers (or abandoning the project), the boss kept looking for investors, which kept us afloat for an extra year.

Eventually the money dried up, and instead of closing up shop, the boss cut our paychecks without telling us. He also converted us from full-time employees to "contractors" (also without telling us) so he wouldn't have to pay taxes for us. My paycheck dropped by 40%, but I still stayed.

One day I was trying to burn an image to a USB drive, and I ran "dd of=/dev/sda" instead of sdb, wiping out our development server. They asked me to stay at the company, but I turned in my resignation letter the next day (my highest-ever post on Reddit was in /r/TIFU).

Next, I found a job at a "finance" company: $50k/year as an 18-year-old. The CEO was a good-looking smooth talker who made a few million bucks talking old people into giving him their retirement money.

He claimed he had changed his ways and was now trying to help average folks save money. I've been here 8 months so far, and I don't see that happening. He forces me to do sketchy shit that clearly doesn't have the clients' best interests in mind.

I am the only developer, and I quickly became a back-end and front-end ninja.

I switched the company infrastructure from a shitty drag-and-drop website builder, WordPress and shitty Excel macros to a beautiful custom-written Python back end.

Little did I know, this company doesn't need a real programmer. I don't have clear requirements, I get unrealistic deadlines, and boss is too busy to even communicate what he wants from me.

Eventually I sold my soul. I switched parts of it to WordPress, because I was not given enough time to write custom code properly.

For the latest project, I switched from custom React/Material/Sass to drag-and-drop Typeform surveys.

I used to be an extremist FLOSS Richard Stallman fanboy, but eventually I traded my morals, dreams and ideals for a paycheck. Hey, $50k is not bad, so maybe I shouldn't be complaining? :(

I was addicted to pot for 2 years. Recently I got arrested, and it's honestly one of the best things that ever happened to me. Before I got arrested, I did some freelancing for a mugshot website. In unrelated news, my mugshot disappeared.

I've been sober for 2 months now, and my brain is finally coming back.

I know the average developer hits a wall at around $80k, and then you have to either move into management or start your own business.

After getting sober, I realized that money isn't going to make me happy, and I don't want to manage people. I'm an old-school neck-beard hacker. My true passion is mathematics and physics. I don't want to glue bullshit libraries together.

I want to write real code, trace kernel bugs, optimize compilers. Alas, I was born in the wrong generation.

I've started studying real analysis, brushing up differential equations, and now trying to tackle machine learning and Neural Networks, and understanding the juicy math behind gradient descent.

I don't know what my plan is for the future, but I'll figure it out as long as I have my brain. Maybe I'll keep making shitty forms and collecting a paycheck while studying mathematics. Maybe I'll figure out something else.

But I can't just let my brain rot while chasing money and impressing dumb bosses. If I wait until I get rich to do things I love, my brain will be too far gone at that point. I can't just sell myself out. I'm coming back to my roots.

I still feel like after experiencing industry and pot, I'm a shittier developer than I was at age 15. But my passion is slowly coming back.

Any suggestions from wise ol' neckbeards on how to proceed?

My professor (2 years ago): Why r u wasting ur time on Python? Learn Java or .NET, u will get a good job.

Now she's asking me for Python tutorials because she needs it for her PhD. 😂😂😂

Me: You shouldn't open that log file in Excel, it's almost 700 MB.

Client: It's okay, my computer has 4 GB of RAM.

Me: *watching the client's computer crash*

Client: the file is broken!

Me: No, you just need a more memory-efficient tool, like R, SAS, Python, C#, or, like, anything else!
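What "memory-efficient" means in practice, as a minimal Python sketch: assume the log has one record per line and you want to count lines that mention a few levels ("ERROR", "WARN", "INFO" are assumed tokens, not from the post, and `count_levels` is a hypothetical name). Passing an open file object streams it line by line, so memory stays roughly flat however large the file is.

```python
from collections import Counter
from typing import Iterable


def count_levels(lines: Iterable[str],
                 levels: Iterable[str] = ("ERROR", "WARN", "INFO")) -> Counter:
    """Count how many lines mention each level, consuming one line at a time."""
    wanted = tuple(levels)
    counts: Counter = Counter()
    for line in lines:  # never holds more than the current line in memory
        for level in wanted:
            if level in line:
                counts[level] += 1
    return counts


# Usage with a real file ("app.log" is a hypothetical path):
#   with open("app.log", encoding="utf-8", errors="replace") as log:
#       print(count_levels(log))
```

A plain list of strings works too, which makes the function easy to test without touching disk.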

I'll join in: our CEO and CTO insist that we can build an AI that recognizes emotions and feelings with Python, R and some MySQL... with a team of unpaid interns, mind you...

I'm sorry, but I'd love some of whatever the fuck they're smoking.

Two years ago I moved to Dublin with my wife (we met on tour while we were both working in music), because UK visa laws didn’t allow me to support the visa of a Russian national on a freelance artist’s salary.

After we came to Dublin, I was playing a lot to pay the rent (there's a major rental crisis here). I play(ed) double bass, which is a physically intensive instrument, and overworking caused a long-term injury to my forearm that prevents me from playing.

Luckily my wife was able to start working in Community Operations for the big tech companies here (not an amazing job and I want her to be able to stop).

Anyway, I was a bit stuck with what step to take next as my entire career had been driven by the passion to master an art that I was very committed to. It gave me joy and meaning.

I was working as hard as I could, with a clear vision but no clear path available to get there. Then, by chance, the opportunity came up to study for a Higher Diploma in Data Science/Analysis (I have some experience handling music licensing for tech startups and an MA with components in music analysis, which I spun into a narrative). It seemed like a ‘smart’ thing to do: pick up a ‘respectable’ qualification, since I can’t play any more.

The programme had a strong programming element, and I really enjoyed that part. The heavy statistics/algebra element was difficult, but as my Python programming improved, I was able to write and use my own code to streamline the work, and I started to pull ahead of the class. I put more and more time into programming and studied far beyond the requirements of the programme (and scored some of the highest academic grades I’ve ever achieved). I picked up a confident level of Bash, SQL and Cypher (Neo4j), proficiency with libraries like pandas and scikit-learn, and R tools like ggplot. I’m almost at the end of the course now, and I’m currently lecturing evening classes at the university as a paid professional, teaching graph database theory and the implementation of Neo4j using Python. I’m co-writing a thesis on Machine Learning in The Creative Process (with faculty members) to be published by the institute. My confidence in programming grew and grew, and with that platform to lift me, I pulled away from the class further and further.

I felt lost for a while, but I’ve found my new passion. I feel the drive to master the craft, the desire to create, to refine and to explore.

I’m going to write a thesis with a strong focus on programmatic implementation and then try to take a programming-related position and build from there. I’m excited to become a professional in this field. It might take time and not be easy, but I’ve already mastered one craft in life to the highest levels of expertise (and tutored it for almost 10 years). I’m 30 now and no expert (yet), but I’m well beyond beginner. I know how to learn and self-study effectively.

The future is exciting, and I’ve discovered my new art! (I’m also performing live these days with ‘TidalCycles’, Haskell pattern syntax for music performance!)

Hey all! I’m new on devRant!

If programming languages had honest slogans, what would they be?

-C : Because fuck you.

-C++ : Fuck this. (Dan Allen)

-Visual Basic : 10 times as big but only 5 times as stupid.

-Lisp : You’re all idiots.

-JavaScript : You guys know I’m holding up the internet, right?

-Scala : That was a waste of 4 weeks.

-Go : Tell me about it, Scala.

-Python : All we are saying, is give un-typed a chance.

-R : Whoa, I was supposed to be a statistics package!

-Java : Like a Roomba, you guess it’s OK but none of your friends use it.

-PHP : Do Not Resuscitate.

-Perl : PHP, take me with you.

-Swift : Nobody knows.

-HTML : No.

-CSS : I said no.

-XML : Stop.

Source: @Quora: https://quora.com/If-programming-la...

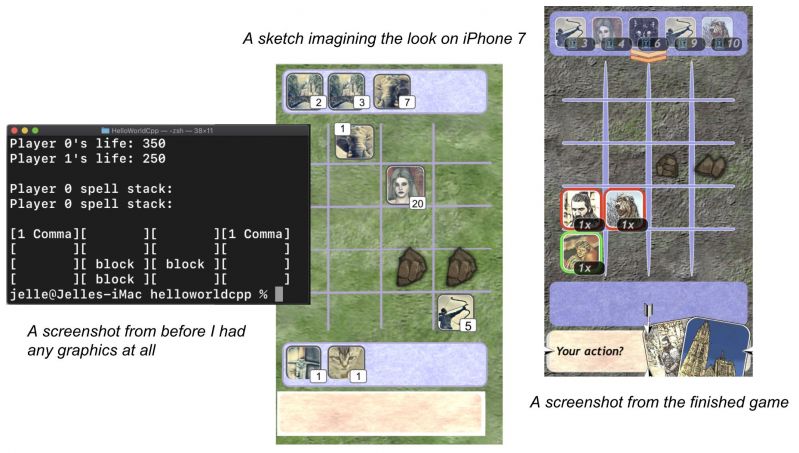

I just released a tiny game for iPhone!

It's basically an attempt to mix 'Heroes of Might & Magic' and MTG (Magic: The Gathering).

In the screenshot my terminal says 'helloworld.cpp'. That's right, this is my first C++ program, and I don't care how crappy you think this game is, I'm super proud of myself!

I've always worked in data science where managers assume I know how to code because there's text on my screen and I can query and wrangle data, but I actually didn't know what a class was until like 3 years into my job.

Making this game was my attempt to really evolve myself away from just statistics/data transforms into actual programming. It took me forever, but I'm really happy I did it.

It was brutal at first using C++ instead of the R/Python that data science people usually use, but now I'm starting to wonder why it isn't more popular. Everything is so insanely fast. You really get a better idea of what your computer is actually doing, instead of just standing on engineers' shoulders. It's great.

After the game was 90% finished (LOL), I started using Swift and SpriteKit to get the visuals on screen and working on iPhone. That was less fun. I didn't understand how to use Xcode at all or how to keep writing tests, so I stopped doing TDD because I was '90% done anyway' and 'surely I'll figure out how to do basic debugging'. I'll know better next time...

POSTMORTEM

"4096 bit ~ 96 hours is what he said.

IDK why, but when he took the challenge, he posted that it'd take 36 hours"

As @cbsa wrote, and nitwhiz wrote "but the statement was that op's i3 did it in 11 hours. So there must be a result already, which can be verified?"

I added time because I was in the middle of a port involving ArbFloat so I could get arbitrary precision. I had a crude Desmos graph projecting from what I'd already factored, to get an idea of how long larger bit lengths would take.

@p100sch speculated that I had walked back the time estimate and overstated the rig's capabilities. In reality, I spent a lot of time trying to get it 'just so'.

Worse, because I had to resort to "Decimal" in Python (and am currently experimenting with the same in Julia), both of which are immutable types, the GC was taking > 25% of the CPU time.

Performance-wise, these are the numbers I cited in the original thread, as of this writing:

The largest product factored was 1855526741 * 2163967087 (two roughly 32-bit factors); it took 1116.111 s in Python.

The Julia build used a slightly different method and managed to factor a product of two roughly 27-bit factors, 103147223 * 88789957, in 20.9 s,

but this wasn't typical.

What surprised me was the variability. One bit length could take 100 s, or even a couple of thousand seconds, while a product 1-2 bits longer could return a result in under a minute, sometimes in seconds.

This started cropping up, ironically, right after I posted the thread. What's a man to do?

So I started trying a bunch of things, some of which worked. Shameless as I am, I accepted the challenge. Things weren't perfect, but it was going well enough. At that point I hadn't slept in about 30 hours, so when I thought I had it, I let it run and went to bed. 5 AM comes, I check the program. Still calculating, and way overshot. Fuuuuuuccc...

So here we are now, and it's safe to say the world's not gonna burn if I explain it, seeing as it doesn't work, or at least only works some of the time.

Other people, much smarter than me, mentioned it may be a means of finding more secure pairs. Maybe so; I'm not familiar enough to know.

For everyone who followed and commented, those who contributed, even the doubters who kept a sanity check on this and without whom this would have been an even bigger embarrassment, and the people with their pins and tactical dots: thanks.

So here it is.

A few assumptions first.

Assuming p = the product,

a = some prime,

b = another prime,

and r = a/b (where a is smaller than b)

w = 1/sqrt(p)

(I also experimented with w = 1/sqrt(p)*2, but I kept overshooting by a very small margin.)

x = a/p

y = b/p

1. For every two numbers, there is a ratio (r) that you can search for among the decimals, starting at 1.0 and counting down. You can use this to find the original factors, e.g. p*r=n, p/n=m (assuming the product has only two factors), instead of having to use a sieve.

2. You don't need the first number you find to be the precise value of a factor (we're doing floating-point math): a large subset of decimal values for a or b will naturally 'fall' into the value of a (or b) plus some fractional part, which is lost. Some of you will object, "But if that's wrong, your result will be wrong!", but hear me out.

3. You round the first factor 'found', and from there you take the result and do p/a to get b. If 'a' is actually a factor of p, then mod(b, 1) == 0, and then, naturally, a*b SHOULD equal p.

If not, you throw out both numbers, rinse and repeat.

Now, I knew this could be faster. I realized that the finer the representation, the less important the fractional digits further right in the number were; it was just a matter of how much precision I could AFFORD to lose and still get an accurate result for r*p=a.

Fast forward through a lot of experimentation: I was hitting a lot of worst-case time complexities, where the most significant digits had a bunch of zeroes in front of them, so starting at 1.0 was a no-go in many situations. I started looking and realized that I didn't NEED the ratio of a/b; I just needed the ratio of a to p.

Intuitively it made sense, but starting at 1.0 was blowing up the calculation time, and this made it so much worse.

I realized that if I could start at r=1/sqrt(p) instead, then, because of certain properties, r would ALWAYS be (1) close to the fractional value n/p of one of the factors, and (2) it looked like it was guaranteed that r=1/sqrt(p) would ALWAYS be less than at least one of the primes, putting a bound on the worst case.
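The bound in the paragraph above can be written out with the post's own variables, assuming p = ab has exactly two prime factors with a ≤ b. The step size for the search is not given in the post; k steps of size 1/p below is an assumption used only for illustration.

$$
a \le \sqrt{p} \le b
\quad\Longrightarrow\quad
x = \frac{a}{p} \;\le\; w_0 = \frac{1}{\sqrt{p}} \;\le\; y = \frac{b}{p}
$$

$$
w_k = \frac{1}{\sqrt{p}} + \frac{k}{p}
\quad\Longrightarrow\quad
w_k \, p = \sqrt{p} + k
$$

So stepping w upward from 1/√p must eventually reach y = b/p, and with a step of 1/p each step moves the candidate round(w·p) up by exactly one integer.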

The final result in executable pseudo code (python lol) looks something like the above variables plus

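```python
from decimal import Decimal, getcontext

getcontext().prec = 60  # working precision (assumed); must exceed the digit count of p

p = Decimal(101 * 103)  # small illustrative product; substitute the real one
w = 1 / p.sqrt()        # start at w = 1/sqrt(p), so w*p starts near sqrt(p)
i = 1 / p               # step size (not defined in the post): moves w*p up by 1 per pass

while w >= 0:
    c = round(w * p)                  # nearest-integer candidate factor
    if c > 0 and (p / c) % 1 == 0:    # p/c has no fractional part
        x = c
        y = p / c
        if x * y == p:
            print("factors found!")
            print(x)
            print(y)
            break
    w = w + i                         # step w upward toward y = b/p
```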
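Assuming the step is i = 1/p (the post doesn't define i), each pass of the loop above moves the candidate round(w*p) up by one. The same search can therefore be written with exact integer arithmetic, which removes both the rounding questions and the immutable-Decimal allocation overhead mentioned earlier. This is a sketch under that assumption, not the poster's code, and `factor_from_sqrt_up` is a hypothetical name. Written this way it is plain trial division upward from sqrt(p), so its worst case grows with b - sqrt(p).

```python
import math
from typing import Optional, Tuple


def factor_from_sqrt_up(p: int) -> Optional[Tuple[int, int]]:
    """Integer-only analogue of the w-loop: test candidates upward from isqrt(p)."""
    if p < 4:
        return None
    c = math.isqrt(p)        # floor(sqrt(p)); the larger factor b is >= this
    while c < p:
        if p % c == 0:       # exact divisibility test, no rounding involved
            a, b = sorted((c, p // c))
            return a, b
        c += 1
    return None              # only the trivial factorization 1 * p exists
```

For example, `factor_from_sqrt_up(101 * 103)` returns `(101, 103)` on its very first candidate, because isqrt(10403) is 101.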

Still working on it, but if anyone sees obvious problems, I'd LOVE to hear about them.

I wrote the PageRank algorithm in Python for a data mining course, but my teacher told me to write it in R because, according to him, Python can't be used as a data mining tool.
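For reference, PageRank needs nothing beyond plain Python. This is a minimal power-iteration sketch, assuming the graph is a dict mapping each page to the pages it links to and the commonly used damping factor of 0.85. Pages with no outgoing links spread their rank evenly over all pages. The function name and parameters are illustrative, not from the post.

```python
from typing import Dict, List


def pagerank(links: Dict[str, List[str]], damping: float = 0.85,
             tol: float = 1e-10, max_iter: int = 200) -> Dict[str, float]:
    """Power iteration: repeatedly redistribute rank along links until it stabilizes."""
    nodes = set(links) | {t for targets in links.values() for t in targets}
    if not nodes:
        return {}
    n = len(nodes)
    rank = {v: 1.0 / n for v in nodes}
    for _ in range(max_iter):
        # Rank held by pages without outgoing links is shared by every page.
        dangling = sum(rank[v] for v in nodes if not links.get(v))
        new = {v: (1.0 - damping + damping * dangling) / n for v in nodes}
        for src, targets in links.items():
            if targets:
                share = damping * rank[src] / len(targets)
                for t in targets:
                    new[t] += share
        converged = sum(abs(new[v] - rank[v]) for v in nodes) < tol
        rank = new
        if converged:
            break
    return rank
```

Each iteration keeps the total rank at 1, so the result can be read directly as a probability distribution over pages.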


If programming languages had honest slogans, what would they be?

C: If you want a horse, make sure you feed it, clean it and secure it yourself. No warranties.

C++: If you want a horse, you need to buy a circus along with it.

Java: Before you buy a horse - buy a piece of land, build a house on that land, build a barn beside the house & if you are not bankrupt yet, buy the horse and then put the horse in the barn.

C#: You don’t want a horse, but Microsoft wants you to have a horse. Now it’s up to you if you want Microsoft or not.

Swift: Don’t buy an overpriced Unicorn if all you wanted was a horse.

JavaScript: If you want to buy a horse & confidently ride it, make sure you read a book named "You don't know horse".

PHP: After enough optimization, your horse can compete with the top horses in the world; but deep down, you'll always know it's an ass.

Hack: Let's face it, even if you take the ass from the ass lovers and give them back a horse in exchange, not many will ride it.

Ruby: If you want a horse, make sure you ride it on top of railroads, even if the horse can't run fast on rails.

Python: Don't ride your horse and eat your sandwich on the same line, until you indent it on the next line.

Bash: Your horse may shit everywhere, but at least it gets the job done.

R: You are the horse. R will ride you.

Got this from Quora.

https://quora.com/If-programming-la...

I'm aghast that there is no complete list of coder handles, so here's my attempt (sourced from others):

* AngularJS - Angularians

* Cocoa - Cocoa Heads

* Dart - Dartisans

* EmberJS - Emberinos

* Lisp - Lispers

* Node - Nodesters

* Go - Gophers

* Python - Pythonistas

* Perl - Perl Monks

* Ruby - Rubyists

* Rust - Rustaceans - https://teespring.com/rustacean/...

* Scala - Scalactites

Sources:

* https://gist.github.com/coolaj86/...

* https://reddit.com/r/...

If programming languages were countries, which country would each language represent?

Disclaimer: it's just a joke.

Java: USA -- optimistic, powerful, likes to gloss over inconveniences.

C++: UK -- strong and exacting, but not so good at actually finishing things and tends to get overtaken by Java.

Python: The Netherlands. "Hey no problem, let'sh do it guysh!"

Ruby: France. Powerful, stylish and convinced of its own correctness, but somewhat ignored by everyone else.

Assembly language: India. Massive, deep, vitally important but full of problems.

COBOL: Russia. Once very powerful and written with managers in mind; but has ended up losing out.

SQL and PL/SQL: Germany. A solid, reliable workhorse of a language.

JavaScript: Italy. Massively influential and loved by everyone, but breaks down easily.

Scala: Hungary. Technically pure and correct, but suffers from an unworkable obsession with grammar that will limit its future success.

C: Norway. Tough and dynamic, but not very exciting.

PHP: Brazil. A lot of beauty springs from it and it flaunts itself a lot, but it's secretly very conservative.

LISP: Iceland. Incredibly clever and well-organised, but icy and remote.

Perl: China. Able to do apparently almost anything, but rather inscrutable.

Swift: Japan. One minute it's nowhere, the next it's everywhere and your mobile phone relies on it.

C#: Switzerland. Beautiful and well thought-out, but expect to pay a lot if you want to get seriously involved.

R: Liechtenstein. Probably really amazing, especially if you're into big numbers, but no-one knows what it actually does.

Awk: North Korea. Stubbornly resists change, and its users appear to be unnaturally fond of it for reasons we can only speculate on.

Java : He is the topper who is usually mocked, but still everyone seeks his help on the day before exams. He tops the class no matter where he is.

C : He was once the coolest guy in the class with all the new gadgets but now uses outdated gadgets.

C++ : The guy who comes 2nd and is also loved by everyone. He is very competitive.

R : She has everything readymade from food to tiffins.

JavaScript: Tries to be in the cool gang but she is someone to whom nobody talks.

CSS: The most beautiful girl in the class and a make-up expert. Also the only friend of JavaScript.

Python: She will soon top the class.

C# : Is she still part of the class!!?

I'm not dead yet (dunno about next week), for those who knew me when I was around here. I really wanted to come to a place where I know I could get some comments on this: what's up with the whole IT/tech world right now?

Python and its CoC shenanigans

Linus leaving

Mozilla telemetry spying on you https://reddit.com/r/linux/...

And so on and on. The ride isn't over yet, right? (It never is; it only gets more fun from here on, baby.)

TLDR Question:

When do you consider yourself an expert at a language?

More details: there is a really cool Data Science internship opening up near me that I want so badly it physically hurts, but it asks for expert-level knowledge of Python, Java or R.

I’ve only been studying R and Java for like 3 months, and Python for about 8 months, so I’m obviously no expert. When exactly does someone reach that threshold, though? When did you realize you were an expert at a language?

There's this guy who always posts in his social media stories about how good he is at programming and how he has mastered “Python”, “R” and “JavaScript”. He only started programming early this year (February, to be precise) and thinks he's a badass programmer. Smh

How do I tell him that free Codecademy certificates don't mean you know programming, and that cramming syntax isn't programming either? 🤦🏽‍♂️

Finding out that your professor at the esteemed Ivy League school you attend has no real-world experience in CS outside of the CS graduate program at said university...

#teachyourself

TL;DR - Girlfriend wanted to learn coding, I might have scared her off.

Today, my girlfriend said she wants to learn coding.

Me: why?

She: well, all these data science lectures are recommending Python and R.

Me: Ok. But, are you interested in coding?

She: No, but I think I have to learn.

Me: Hmm.. coding requires a clear thought process, and we should tell the computer exactly what needs to be done.

She: I think I can do that.

Me: Okay... then tell the computer to think and give a random number between 1 and 10.

She: I will use that randint function. (She has basic knowledge in C)

Me: Nope. You write your own logic to make the computer think.

She: What do you mean?

Me: If I were you... since it's just a single-digit number, I would capture the current time and return the last digit of its milliseconds.

She: Oh yeah, that's cool. Understood! I will try...

" " "

We both work in the same office, so we met up for lunch.

" " "

I didn't ask about it, but she started,

She: Hmm, I thought about it, but I wasn't able to think of any solution. Maybe it's not my cup of tea.

I felt bad for scaring her off... :(

Anyway, what are some other simple methods for generating random numbers, like OTPs? I'm interested in simple logic you've thought of yourselves, not the genius algorithms we have in predefined libraries.
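Two simple approaches, sketched in Python. The first is the millisecond idea from the conversation. Note that the last digit alone gives 0 to 9, so it is shifted by one to land in 1 to 10. The second is a linear congruential generator (LCG), a classic "simple logic" pseudo-random generator. Its constants are the widely published Numerical Recipes values, an assumption rather than anything from the post, and the function and class names are hypothetical.

```python
import time


def digit_from_clock() -> int:
    """Last digit of the current time in milliseconds (0-9), shifted to 1-10.
    Easy to predict, so toy use only."""
    millis = time.time_ns() // 1_000_000
    return millis % 10 + 1


class TinyLCG:
    """Linear congruential generator: state = (A * state + C) mod 2**32.
    A and C are the Numerical Recipes constants (an assumption, not from the post)."""

    A = 1664525
    C = 1013904223
    M = 2**32

    def __init__(self, seed: int) -> None:
        self.state = seed % self.M

    def next_int(self, low: int, high: int) -> int:
        """Return an integer in [low, high]. Uses the upper bits of the state,
        because the low bits of a power-of-two-modulus LCG cycle with short periods."""
        self.state = (self.A * self.state + self.C) % self.M
        return low + (self.state >> 16) % (high - low + 1)


# Usage: seed the LCG from the clock, then draw repeatedly.
#   rng = TinyLCG(time.time_ns())
#   print([rng.next_int(1, 10) for _ in range(5)])
```

Both are fine for games and experiments but not for real OTPs. For anything security-sensitive, Python's `secrets` module (e.g. `secrets.randbelow(10) + 1`) is the appropriate tool.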

One day, while browsing the internet, I found a website that was hiring web developers. I was curious, so I decided to look at the requirements.

Job : To manage this website

Skills Required

6+ years of experience with:

HTML

CSS

JavaScript

Node.js

Vue.js

TypeScript

Java

PHP

Python

Ruby

Ruby on Rails

ASP.NET

Perl

C

C++

Advanced C++

C#

Assembly

Rust

R

Django

Bash

SQL

Built at least 17 standalone desktop apps with pure C++ and no dependencies

Built at least 7 websites alone.

3+ years of hacking experience

Built 5 standalone mobile apps with Java, Dart and Flutter

7800+ reputation on Stack Overflow

Answered at least 560 questions on Stack Overflow

Have at least 300 repositories on GitHub, GitLab, Bitbucket.

Written 1000+ lines of code in each repository

Salary: $600 per month.

If someone learned all of these one by one, starting at age 0, they would be 138 now (23 skills × 6 years)!

Languages like Python and R are somewhat high-level languages, with an easy syntax and very readable code. This is essentially meant to make them easier for non-programmers to use. As a software developer with 4+ years of professional programming, who started with Assembly and QuickBASIC and then moved on to C++, Java and C#, I found Python super convenient, and at times way too convenient.

At first it felt like I was cheating, and would not consider myself actually writing code, more like pseudo-coding.

After a year or so, I got used to it and it became my default, but it still does not feel right .. is anyone else feeling the same?

I do believe that coding the hard way is not always the right way, but I am just wired that way.

Today on "How the Fuck is Python a Real Language?": Lambda functions and other dumb Python syntax.

Lambda functions are generally passed as callbacks, e.g. "myFunc(a, b, lambda c, d: c + d)". Note that the comma between c and d is somehow on a completely different level than the comma between a and b, even though they're both within the same brackets, because instead of using something like, say, universally agreed-upon grouping symbols to visually group the lambda function arguments together, Python groups them using a reserved keyword on one end, and two little dots on the other end. Like yeah, that's easy to notice among 10 other variable and argument names. But Python couldn't really do any better, because "myFunc(a, b, (c, d): c + d)" would be even less readable and prone to typos given how fucked up Python's use of brackets already is.
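To make the comma point concrete, here is a tiny sketch; `my_func` is a hypothetical stand-in for the rant's myFunc. The lambda's parameter list ends at the colon, and optional parentheses around the whole lambda make the grouping explicit without changing behavior.

```python
def my_func(a, b, callback):
    """Hypothetical stand-in for myFunc: calls callback(a, b)."""
    return callback(a, b)


# "c, d" is the lambda's parameter list (it ends at the colon);
# the commas before "lambda" separate my_func's own arguments.
result = my_func(1, 2, lambda c, d: c + d)

# Wrapping the whole lambda in parentheses is optional; behavior is identical.
same = my_func(1, 2, (lambda c, d: c + d))
```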

And while I'm on the topic of dumb Python syntax, let's look at the switch, um, match statements. For a long time, the people behind Python argued that a bunch of elif statements with the same fucking conditions (e.g. x == 1, x == 2, x == 3, ...) are more readable than a standard switch statement, but then in Python 3.10 (released only 1 year ago), they finally came to their senses and added the match and case keywords to implement pattern matching. Except they managed to fuck up yet again: instead of a normal "default:" statement, the default case is written "case _:". Because somehow, everywhere else in the code _ behaves as a normal variable name, but in a match statement it instead means "ignore the value in this place". For example, "match myVar:" and "case [first, *rest]:" will behave exactly like "[first, *rest] = myVar" as long as myVar is a list with one or more elements, but "case [_, *rest]:" won't assign the first element of the list to anything, even though "[_, *rest] = myVar" will assign it to _. Because fuck consistency, that's why.

And why the fuck is there no fallthrough? Wouldn't it make perfect sense to write

```python
case ('rgb', r, g, b):
case ('argb', _, r, g, b):
case ('rgba', r, g, b, _):
case ('bgr', b, g, r):
case ('abgr', _, b, g, r):
case ('bgra', b, g, r, _):
```

and then, you know, handle r, g, and b values in the same fucking block of code? Pretty sure that would be more readable than having to write "handleRGB(r, g, b)" 6 fucking times depending on the input format. Oh, and never mind that Python already has a "break" keyword.

Speaking of the "break" keyword, if you try to use it outside of a loop, you get the error "'break' outside loop". However, there's also the "continue" keyword, and if you try to use it outside of a loop, you get the error "'continue' not properly in loop". Why the fuck are there two completely different error messages for that? Does it mean there exists some weird improper syntax for using "continue" inside a loop? Or is it just more inconsistent Python bullshit, like how until Python 3.8 you couldn't use "continue" inside a "finally:" block (but you could always use "break", even though it does essentially the same thing, just branching to a different point)?

Me: Hey can you make another cup of coffee like this one for my friend?

Rust: Sure, but you know it's expensive, right? Why don't you just let your friend borrow your coffee?

Me: Alright, but I have two friends.

Rust: No problem, you can share it with as many friends as you’d like, but only one of you is allowed to drink it.

C++: Hey wait! I’ll gladly make a cup of your coffee for your friends! I’ll even let them share it! Heck, they can even share yours!

Rust: Hey C++, you know copying coffee is expensive.

C++: Of course I do, but he didn’t define move construction or assignment, so he implicitly wants a copy!

Me: [To my friends:] Hey, let’s just go over to the Python coffee shop.

Rust: [To C++:] Hmph. The baristas at that place will even let you declare that a muffin is a cup of coffee.

C++: Yeah, but wait till they try to drink it. I hear it can be quite exceptional....

———

Slightly modified from this comment on a Reddit post that I found humorous — only I probably made it much less funny: https://reddit.com/r/...

So I finally got a job as a Data Scientist at the place where I was an intern.

PS: I'm from a non-computer-science background, and I made it through.

HOW TO PROGRAM(in four easy steps) :

1. Google the F*cking Problem.

2. Open a Stackoverflow link.

3. Copy and paste the code.

4. If it didn't work, goto step 2.

If it did work, goto step 1

(you didn't think you were done.. did you?)

FAQ

Q: What if there is no code?

A: Then it is impossible.

The everything-is-data-science craze.

Honestly it's not even sustainable with every kid and their grandmother wanting to be data scientists because it's a 'passion' and a 'dream job' and all of that click bait stuff.

It's just become ridiculous at this point and I doubt we'll even have the long awaited 'breakthroughs' people have been talking about for so long.

Also, I have a strong feeling everyone thinks it's their 'passion' because it tops the lists of highest-paid jobs out there, and everyone thinks that with 3 months of training they're a fully fledged data scientist because some Python or R package implements every algorithm they could ever think of using.

Add to that the fact that most advertised data science jobs are actually data engineering, where you maintain a data store and that's it.

Agree or disagree, that's my piece, and if you can convince me otherwise I'll be surprised, because I've been subscribed to this idea for so long that it cost me some really good opportunities: I thought it was just what I was meant to be doing, which turned out to be false once I thought about it. There are a million other jobs that are more impactful and worth pursuing.

My first rant here, I just found out about this place. I don't have much of a programming background, but it has always triggered my interest. Currently I am learning many tools; my aim is to become a data scientist. I have done SAS, R and Python for it (not proficient yet, though), I'm also working on Google Cloud computing and database resources, and I'm going to start Machine Learning (Andrew Ng's Coursera).

Can anybody advise me? Am I doing it right or not?

Switching to R at the last moment from Python for a production build - I won't lie, that was one of the worst choices I ever made.

I believe it is really useful because all of the elements of discipline and perseverance that are required to be effective in the workforce will be tested in one way or another by a higher learning institution. Getting my degree made me a little more tolerant of other people and of the idea of working with others, and it also exposed me to a lot of topics I was otherwise uninterested in and ended up loving. For example, before going to uni I was a firm believer that I could, and was going to, learn everything about web dev by maaaaaself without the need for a school. I wasn't wrong. And most of you wouldn't be wrong. Buuuuuut what I didn't know was how interesting compiler design was, how interesting systems-level development was, etc. etc. School exposed me to many topics that would otherwise have taken me a long time to get to, and not just in CS, but in many other fields.

I honestly believe that deciding to NOT go to school and perpetuating the idea that school is not needed in the field of software development ultimately harms our field by making it look like a trade.

Pffft, you don't need to pay Johnny his $50-an-hour rate! They don't need school to learn that shit! Anyone can do it, give him $9.50 and call it a day! <------- that is shit I have heard before.

I also find it funny that people tend to believe self-learning will put you above and beyond a graduate, as if self-learning and a degree were mutually exclusive. I mean, congrats on learning about if statements, man! I had to spend time outside of class teaching myself discrete math and relearning everything about calculus and literally every math topic under the sun (my CS degree was very math oriented) while simultaneously applying those concepts in Mathematica, R, Python, Java and C++, as well as making sure our shit lil OS emulation (in C, why thank you) worked! Oh, and what's that? We have that for next week?

Mind you, I did this while I was already being employed as a web and mobile developer.

Which, btw, make sure you don't go to a shit school. ;) It does help when it comes to learning the goood shit.

Ugh! Salesforce! Fuuuuuck youuuuuu!

I have worked with C++, Java (a little bit), JavaScript, Python and R for so many years without ever complaining! But this shit makes me feel so incompetent. Maybe I am actually incompetent, and having few constraints plus good debuggers helped me hide that until now. 😭

Idk. I'm going to sleep.

- Played with and learned Scratch

- Learned some Python, made some weird little programs

- Learned C, using two good books: K&R C and Zed Shaw's "Learn C the Hard Way" (back when it was still in development and was free to read on the internet)

- Made LOTS of programs in C

- Came back to Python when I wanted to learn network programming

- Learned some Racket/Lisp, Bash scripting along the way

- Now I use all of the above, minus Scratch

Best: learned to code, started writing smart-home scripts for home automation and developed biological and quantitative data analysis scripts in Python and R.

Worst: didn't get paid to develop them and don't have enough experience for it to be more than a hobby.

Unofficial slogans for programming languages:

Javascript - JustShitting out frameworks every week.

Python - Shit programmers become slightly less shit and call themselves "data scientists" here.

C# - We know we are better than you, and even though we don't need to say it, we will say it anyway.

Pascal - The only recognized version of Pascal is from one single vendor.

Haskell - Stay in school if you want to use this professionally.

Swift - (honestly don't know what to say here, Lensflare can fill in on this one.) Maybe this: The first rule of Swift club is we don't talk about Apple club.

Java/Kotlin - We are in everything, including your mom's vibrator.

C - The rest of the programming world doesn't exist. Especially in embedded. Happily using K & R compilers for 3 decades.

C++ - We will pretend to care about the rest of the programming world, but like C, we will do whatever the fuck we want. Or: being held back by the ABI for at least a decade.

Rust - I feel bad for you for using other programming languages.

These are probably highly inaccurate; I mostly just wanted to talk about Java being in your mom's sex toy.

So theoretically, all it takes is 12 lines of Python for arbitrary code execution on a Windows system.

'Theoretically', because it loads Kernel Drivers, which any half decent antivirus can detect and block.

http://feedproxy.google.com/~r/...

https://github.com/zerosum0x0/...

Never call a variable 'r' while debugging in python console.

I was trying to fix my code, but for some reason the program didn't follow the code flow. I hate it when that happens because you can't pinpoint the source of the problem. I restarted the kernel, nothing; then I rebooted the IDE, nothing. The code behaved weirdly; the only thing I was doing was assigning a value to a temp variable called 'r' and then displaying it. The console kept telling me "--Return--", and I didn't understand... Why, my old friend, are you telling me you're returning? Then I changed the variable name to good old 'tmp' and it all worked. I finally realized that 'r' is a pdb command (short for 'return')... I was angry at the console for obeying my own order... I'm sorry, console.
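Plain pdb is built on the standard `cmd.Cmd` class, which runs a command `foo` by calling a method named `do_foo`. A quick way to check whether a variable name will collide with a debugger command is therefore to look that method up on `pdb.Pdb`. This is only a sketch. The helper name is made up, it ignores aliases defined in a `.pdbrc`, and IDE consoles that wrap pdb could behave differently.

```python
import pdb

def shadows_pdb_command(name: str) -> bool:
    """Hypothetical helper: True if typing `name` alone at the (Pdb) prompt
    would run a debugger command instead of showing the variable."""
    # cmd.Cmd dispatches the command `name` to a method called do_<name>
    return callable(getattr(pdb.Pdb, "do_" + name, None))

# r, n, c and l are short command names; tmp and result are not
for candidate in ["r", "n", "c", "l", "tmp", "result"]:
    print(f"{candidate!r}: {shadows_pdb_command(candidate)}")
```

At the prompt itself, `p r` prints the variable and `!r = 5` forces the line to run as a Python statement. Both are standard pdb features.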

I love pipes in R. Really wish more mainstream languages would adopt that *looking at you python, nodejs-tc39, rust, cpp*

Just something about doing

data %>% group_by(age) %>% summarize(count = n()) %>% print

As opposed to

print(summarize(group_by(data, age), count = n()))
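Python has no built-in pipe operator, but a rough equivalent of the left-to-right style can be sketched with `functools.reduce`. The `pipe`, `group_by_age` and `summarize` functions below are hypothetical stand-ins for the dplyr verbs, not a real library API. pandas does offer a `DataFrame.pipe` method in a similar spirit.

```python
from collections import Counter
from functools import reduce

def pipe(value, *funcs):
    """Hypothetical helper: pass `value` through each function left to right, like R's %>%."""
    return reduce(lambda acc, func: func(acc), funcs, value)

# Toy rows standing in for the R data frame
people = [
    {"name": "ana", "age": 30},
    {"name": "bo", "age": 25},
    {"name": "cy", "age": 30},
]

def group_by_age(rows):
    # Count rows per age, roughly group_by(age) %>% summarize(count = n())
    return Counter(row["age"] for row in rows)

def summarize(counts):
    # Plain dict ordered by age
    return dict(sorted(counts.items()))

# Reads left to right, like the R pipeline...
result = pipe(people, group_by_age, summarize)
print(result)  # {25: 1, 30: 2}

# ...and gives the same answer as the inside-out nested call
assert result == summarize(group_by_age(people))
```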

How the Common Lisp community will eventually die:

Clojure is the only major Lisp dialect with some sort of heavy presence in today's modern development world. Yes, I am aware of other (if not all) environments in which Lisp or a dialect of it is being used for multiple things: CADLisp, Guile Scheme, Racket, etc. etc., whatever. I know.

Not only is Clojure present on the JVM (I also give 0 fucks about whether you like it or not), but it also has a compilation target for JavaScript via ClojureScript. This means that I can effectively target backend server operations, damn near everything inside the JVM, and also the browser.

Yet, there is no real point in using Lisp or Clojure other than for pure academic endeavours, and even there it is not a purely functional programming language; you would be better served learning something else if you want true functional purity. Also, examples for one of the major areas in software development, namely web, are really lacking, like, lacking bad, as in so bad that examples are few and far between and there is no interest in making them accessible to complete beginners or anything of the like.

But my biggest fucking gripe with Lisp as a whole, specifically Common Lisp, is how monstrously outdated the documentation you can find available for it is.

Take aesthetics, for example; these play a large role. A developer (web mostly) used to the attention to detail of the Rails community, the Laravel community, Django, etc. etc. would find documentation that came straight from the 90s. There is no passion for design, no attention to detail; it makes everything look hacky and abandoned. Everything in Lisp looks so severely abandoned that the most abundant pool of resources isn't even for a general-purpose language but for one confined to scripting a text editor: Emacs Lisp, which I reckon is about the most used Lisp dialect on the planet, even more so than Clojure or Common Lisp.

I just want the language to be made popular again, y'know? To have a killer app or framework for it, much like there is Rails for Ruby, Phoenix for Elixir, etc. etc. But unless I get some serious hacking done to bring it to the level of maturity of those frameworks (which I won't, nor do I believe I can), it will always remain a niche language with funny syntax.

To be honest, I am phasing out my use of Clojure in favor of Pharo. I just hate seeing how much effort the Lisp community puts into keeping shit as obscure and as far away from the reach of new developers as possible. I also DESPISE reading other Lisp developers' code. Far too fucking dense and clever for anyone other than the original developer to read and add to. The idea that Lisp allows for write-only code is far too real, man.

Lisp has been DED for a while, and the zombies that remain will soon disappear because the community was too busy playing circle jerks for anything real to be done with it. Even as the original language of AI, it has been severely outshone by the likes of Python, R and Scala; shit, even JavaScript has more presence in AI than Lisp does nowadays.

I learned C with a K&R copy a friend gave me years ago. Now at university, we in CompSci get taught Python in the first year and Java in the next, while the engineers start with C and (I'm guessing) move on to assembly later on.

This friend comes to me all worried because he has to submit a working Reversi game for the console, written in C, the next day. Turns out the game was divided between two labs and he failed to submit the first one.

The guy is smart but once a week or so, when we met to smoke a joint and relax with some other friends, he was always talking about how he would prefer something like law but that would be bad business back in Egypt.

Back to the game: I get completely into it. The first hour goes to checking all the instructions he was given, then reviewing the code he wrote and copied from the Internet. We decide to start from scratch since he doesn't really get what the code he copied does. It took us 10 hours, only stopping to eat, but we got all the specifications of both labs working perfectly.

A week after that he comes to me: "my TA said your code is the ugliest shit he's ever seen but he gave me a perfect score because it passed all the tests". I'm getting better (the courses I'm taking help me a lot) but what really made me happy is that he solved the next lab by himself (Reversi wasn't the first time I helped him, only the first time he was absolutely lost). Now he actually gets excited about coding and even felt confident for his programming final.

No more talking about being a lawyer after those 10 hours, totally worth it.

Learning image processing, deep learning, machine learning, data modeling, data mining, etc., and actually working on them, is so much easier than installing the requirements, packages and tools they depend on!

Why is Python supposedly something big data people use? That sounds like a job for R and stats tools, and I don't really see that adoption myself, though I note Python is used in a lot of Linux apps and utilities.

It just seems strange to me that an interpreted language would be used that way, or am I an idiot?

Hello,

I just quit my job at a big market research company. It was disturbing how many processes there depended on Excel and obscure Visual Basic scripts.

They load data from a database, do typical database tasks in Excel and upload it back into the database.

PhDs run complex statistical computations through an Excel interface that passes the request to R.

Instead of spending an hour on a Python script, they do stupid tasks in Excel by hand. Day after day, month after month.

WHY? My colleagues were not dumb, but instead of learning SQL and some Python they built insane Excel tables.

Maybe it's time pressure. But this Excel insanity costs much more time in the end.
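As a rough illustration of what "learning SQL and some Python" buys, here is a sketch that does a typical group-and-summarize step inside the database instead of exporting to Excel and pasting the result back. It uses the standard-library `sqlite3` module only so it runs anywhere. The `responses` and `region_summary` tables and their columns are made up, and a real setup would connect to whatever database the company actually uses.

```python
import sqlite3

# In-memory database with a hypothetical `responses` table
conn = sqlite3.connect(":memory:")
conn.execute("CREATE TABLE responses (region TEXT, score REAL)")
conn.executemany(
    "INSERT INTO responses VALUES (?, ?)",
    [("north", 4.0), ("north", 2.0), ("south", 5.0)],
)

# The aggregation runs inside the database: no export/re-import round trip
conn.execute(
    """
    CREATE TABLE region_summary AS
    SELECT region, COUNT(*) AS n, AVG(score) AS avg_score
    FROM responses
    GROUP BY region
    """
)

rows = conn.execute(
    "SELECT region, n, avg_score FROM region_summary ORDER BY region"
).fetchall()
print(rows)  # [('north', 2, 3.0), ('south', 1, 5.0)]
conn.close()
```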

If you want to encounter 400 lb angry virgin programmers, go on r/Python and suggest they add a static keyword to their classes.

They swarm out of the woodwork and take turns trolling you until a mod bans you for responding in kind.

It's amazing, the dumbest missing language feature, and they're like

'me no want the extra keystroke me like code that can lose peopel, me fo fucks no never, not gonna happen, you asshat, haha, now go bye now, *click*'

A valid argument is that Python classes are lacking in decoration.

This, I suppose, is OK overall; I mean, they work. Except the issue I was having the other day resulted from a variable not being DOUBLE DECLARED IN BOTH THE CLASS SCOPE AND INSIDE THE CONSTRUCTOR LIKE IT WAS A JS OBJECT, AND BEING INTERPRETED AS A STATIC FIELD!

ADDITIONALLY IF THEY LIKE CONCISE WHY THE FUCK DO ALL THEIR CLASS METHODS REQUIRE YOU TO INCLUDE ===>SELF<== !!!!

BUT NOOOO TRY TO COMPARE SOMETHING SENSIBLE LIKE

MYINSTANCE.HI SHOULD NOT BE STATIC

MYCLASS.HI SHOULD BE STATIC AND THEY GET ALL PISSED

ONE ACTUALLY ACTED REJECTED FOR THE SAKE OF HIS LANGUAGE SAYING 'YOU WANT WHAT PYTHON HAS BUT YOU DON'T WANT PYTHON !'

...

...

...

I DIDN'T KNOW THEY MADE VIRGINS THAT BIG!
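For anyone hitting the same kind of bug: a name assigned in the class body is a class attribute, roughly a static field. Names assigned on `self` inside `__init__` are per-instance. The sketch below uses a made-up `Greeter` class to show the two behaviours that usually cause the confusion. First, assigning through an instance shadows the class attribute rather than changing it. Second, a mutable class attribute is shared by every instance. `@staticmethod` is Python's explicit way to declare a method without `self`.

```python
class Greeter:  # hypothetical example class
    # Class attribute: one value reached through the class, the closest
    # thing Python has to a "static field"
    hi = "hello from the class"

    # Mutable class attribute: a single list shared by ALL instances
    shared_log = []

    def __init__(self, name):
        # Instance attributes live on `self`, one copy per object
        self.name = name
        self.own_log = []

    @staticmethod
    def shout(text):
        # Static method: no `self`, callable as Greeter.shout(...)
        return text.upper()


a = Greeter("a")
b = Greeter("b")

# Reading through an instance falls back to the class attribute...
print(a.hi)  # hello from the class

# ...but assigning through an instance creates a new instance attribute
a.hi = "hello from a"
print(a.hi, "|", b.hi, "|", Greeter.hi)  # hello from a | hello from the class | hello from the class

# Mutating the shared list through one instance is visible from every instance
a.shared_log.append("oops")
print(b.shared_log)  # ['oops']

# Per-instance lists stay separate
a.own_log.append("fine")
print(b.own_log)  # []
```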

Working with surds recently, and found some cool new identities that I don't think were known before now.

if n = x*y, and z = n.sqrt(), assuming n is known but x and y are not..

q = (surd(n, (1/(1/((n+z)-1))))*(n**2))

r = (surd(n, (surd(n, x)-surd(n, y)))*surd(n, n))

s = abs(surd(abs((surd(n, q)-q)), n)/(surd(n, q)-q))

t = (abs(surd(abs((surd(n, q)-q)), n)/(surd(n, q)-q)) - abs(surd(n, abs(surd(n, q)+r)))+1)

(surd(n, (1/(1/((n+z)-1))))*(n**2)) ~=

(surd(n, (surd(n, x)-surd(n, y)))*surd(n, n))

for every n I checked.

likewise.

s/t == r.sqrt() / q.sqrt()

and

(surd(n, q) - surd(s, q)) ==

(surd(n, t) - surd(s, t))

Even without knowing x, y, r, or t.

Not sure if it's useful, but it's cool.

surd() is just..

def surd(j, k): return (j + k.sqrt()) * (j - k.sqrt())

and d() is just the Python decimal module, for ease of use.
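Since surd() is a difference of squares, (j + √k)(j − √k) = j² − k, every surd(...) call above reduces to a polynomial in its arguments, up to rounding. Below is a quick check. It assumes `d` in the post means `decimal.Decimal` and that `k` is non-negative, because `Decimal.sqrt()` of a negative number raises `InvalidOperation` under the default context. The name `surd_closed_form` is made up for this sketch.

```python
from decimal import Decimal, getcontext

getcontext().prec = 50  # plenty of digits so rounding noise stays tiny

def surd(j, k):
    # (j + sqrt(k)) * (j - sqrt(k)), as in the post; k must be >= 0
    return (j + k.sqrt()) * (j - k.sqrt())

def surd_closed_form(j, k):
    # Hypothetical helper: difference of squares, (a + b)(a - b) = a^2 - b^2 with b = sqrt(k)
    return j * j - k

for j, k in [(Decimal(7), Decimal(12)), (Decimal(391), Decimal(20)), (Decimal("2.5"), Decimal(3))]:
    diff = surd(j, k) - surd_closed_form(j, k)
    # Print the inputs, the exact closed form, and whether the two agree to ~40 places
    print(j, k, surd_closed_form(j, k), abs(diff) < Decimal("1e-40"))
```

Expanding q and r with this closed form, both begin with n⁴ − n³. That may go some way toward explaining why they agree so closely for large n.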

The people who post in r/php make me want to quit using php entirely and just switch to python or c#.

!rant

type(rant) = shameless_self_promotion

I made an open source python personal assistant named W.I.L.L!

I made a reddit post about it here: https://reddit.com/r/Python/...

and you can use it for free at http://willbeddow.com

I've been working on it for a few years and it has a few hundred users.

Code: https://github.com/ironman5366/...

Just received a refurbished 4-year-old laptop.... then deep cleaned it.... now I'm having a dilemma picking a distro......

I'll mainly use it to run data analysis (R, Python, Java, C++, MySQL, MongoDB and some cloud servers...)

What makes a good distro to me: a comfortable appearance, freedom to customize, community support and constant security updates.

Any suggestions, people??

Does anyone use Pascal, and what for?

Is there a better editor than Borland's Delphi?

Is Pascal worth using/learning?

Can anyone advise whether R or Python would be more useful?

I am wondering... I learnt Java, later on PHP, during my internship Clojure, and during some remote projects JavaScript, and now because of my AI/Data Science ambition I have been dwelling on Python and R... Am I going crazy or just confused? What paradigm should I focus on and become proficient in?!🤕🤕

- Learn more to do more. Pick up my skills

- Create my own project. This is the year!

- Finally learn Python. R for life.

How many languages does one have to learn...? Learnt C, C++ and Java because of college courses. Learnt HTML, CSS, and vanilla JS because I wanted to learn frontend. Now learning R for big data analytics. Today, I came to know that I need to learn more Java or start learning Python for Hadoop...!!

😧😵

pip kept screwing me up with permission issues in /usr/local etc. I changed permissions for the respective Python folders, still got pip permission errors, and then did a chgrp -R user /usr.

Sudo gone

Have to reinstall :/
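The usual way out of pip permission errors, without touching ownership of anything under /usr, is to install into a virtual environment you own. Here is a minimal sketch with the standard-library `venv` module. The function names `in_virtualenv` and `create_env` are illustrative, not part of any library. On the command line the same thing is `python3 -m venv <dir>`.

```python
import sys
import venv
from pathlib import Path

def in_virtualenv() -> bool:
    # Inside a venv, sys.prefix points at the environment while
    # sys.base_prefix still points at the system interpreter
    return sys.prefix != sys.base_prefix

def create_env(path, with_pip=True) -> Path:
    """Hypothetical helper: create a virtual environment at `path` and return its config file.

    Packages installed with that environment's own pip go under `path`,
    so no sudo and no permission changes in /usr are needed.
    """
    venv.create(path, with_pip=with_pip)
    return Path(path) / "pyvenv.cfg"
```

For example, `create_env(Path.home() / ".venvs" / "myproject")` followed by `~/.venvs/myproject/bin/pip install ...` on Linux or macOS.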

Cross post from /r/cscareerquestions

Hey guys how are you all doing!?

I got into university this September (Computer Engineering & Informatics).

Although I've been programming in Java since I was 14 (github.com/zarkopafilis), discussions with a friend who is a .NET guy and has been working full-time in C# for 2 years now got me thinking.

Alright, Java's good. I've learned to love and hate the language. I also like Spring Boot and this whole ecosystem of stuff, including Scala and the other Java-based languages. Currently I'm in the process of completing a personal project of mine.

Alright, here's the big question: Assuming I am going to graduate (and start working) in 5-7-8 years (Masters, PhD - who knows), which language would you suggest I stick with and start learning? - for backend programming of course.

Don't tell me JavaScript. Although I don't like it I've digested the fact that I'll have to learn some of it for sure.

Currently that's what I'm thinking: Invest some more time learning how the JVM works (and probably keep improving my code quality). Also learn some more stuff regarding Spring Boot (and/or Web Services in general). Then advance onto Scala till couple of years pass. In that time I shall keep improving my SQL skills.

On the other hand, I may start learning C# along with .NET Core.

Sidenote: Personally I prefer statically typed languages, that's why I dislike stuff like js and python although I occasionally find myself fiddling with small projects like some laser tracker written with python + opencv.

Sorry if this reads like a big disorganized dump of thoughts. Thanks in advance! :)

Can someone (OS X user) recommend me a good IDE? At the moment I use CodeRunner, but it crashes at least 3 times every hour.

I need it for front-end dev, Python, C, C++, PHP, R, Swift..... so it should handle a lot of languages 😅

(If it's important, I have a MacBook Air 2018, full specs)

If you have a fixed goal, then you get motivation automatically.......... So be focused and choose a goal....

These days Python/R rocks, you should think about it......

Listening to podcasts on programming and development, and one on data science piqued my interest. Any data scientists out there willing to share what you're actually doing with data science?

You know what's not legendary? Python code reviews with Robin Scherbatsky as your reviewer!

I mean, come on, "Write cleaner code, Barney!" - It's already clean, Robin! It's Python! Indentation ENFORCED, baby! But noooo, "Barney, docstrings matter".

Docstrings? I document my awesomeness by shipping perfect, bug-free code all the time! And don't get me started on unit tests - the Bro Code doesn't need tests, it just works! Oh, and JIRA tickets? I don't follow tickets, they follow me!

This job was supposed to be "legen-W-A-I-T-F-O-R-I-T-Django", but now it's just meh!

Time to refactor... my career. Peace out!

How would I use \n in Python as a string?

Use example:

If you are making a string calculator and use \n to separate the numbers/text from each other so you can put them individually into a list, for example:

24\n10\nhi

This should get a list like this:

[ '24', '10', 'hi']

What is the solution?

We can simply use one of the following solutions:

r"\n"

OR

"\\n"

Code example:
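This is a minimal sketch of the string-calculator case. It assumes the input arrives as typed text, so each "\n" is a literal backslash followed by "n" and not a real line break. The function name is made up for illustration.

```python
def split_on_literal_newline(text: str) -> list[str]:
    # Hypothetical helper; r"\n" and "\\n" are the same two characters: a backslash, then "n"
    return text.split(r"\n")

# What a user types into input(): backslash-n, not a real line break
typed = r"24\n10\nhi"
print(split_on_literal_newline(typed))  # ['24', '10', 'hi']

# If the text holds real newline characters instead, splitlines() is enough
real = "24\n10\nhi"
print(real.splitlines())  # ['24', '10', 'hi']
```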
