Search - "i did it from memory"

-

Interviewer: Welcome, Mr X. Thanks for dropping by. We like to keep our interviews informal. And even though I have all the power here, and you are nothing but a cretin, let’s pretend we are going to have fun here.

Mr X: Sure, man, whatever.

I: Let’s start with the technical stuff, shall we? Do you know what a linked list is?

X: (Tells what it is).

I: Great. Can you tell me where linked lists are used?

X: Sure. In interview questions.

I: What?

X: The only time linked lists come up is in interview questions.

I: That’s not true. They have lots of real world applications. Like, like…. (fumbles)

X: Like to implement memory allocation in operating systems. But you don’t sell operating systems, do you?

I: Well… moving on. Do you know what the Big O notation is?

X: Sure. It’s another thing used only in interviews.

I: What?! Not true at all. What if you want to sort a billion records a minute, like Google has to?

X: But you are not Google, are you? You are hiring me to work with 5 year old PHP code, and most of the tasks will be hacking HTML/CSS. Why don’t you ask me something I will actually be doing?

I: (Getting a bit frustrated) Fine. How would you do FooBar in version X of PHP?

X: I would, er, Google that.

I: And how do you call library ABC in PHP?

X: Google?

I: (shocked) OMG. You mean you don’t remember all the 97 million PHP functions, and have to actually Google stuff? What if the Internet goes down?

X: Does it? We’re in the 1st world, aren’t we?

I: Tut, tut. Kids these days. Anyway, looking at your resume, we need at least 7 years of ReactJS. You don’t have that.

X: That’s great, because React came out last year.

I: Excuses, excuses. Let’s ask some lateral thinking questions. How would you go about finding how many piano tuners there are in San Francisco?

X: 37.

I: What?!

X: 37. I Googled before coming here. Also Googled other puzzle questions. You can fit 7,895,345 balls in a Boeing 747. Manhole covers are round because that is the shape that won’t fall in. You ask the guard what the other guard would say. You then take the fox across the bridge first, and eat the chicken. As for how to move Mount Fuji, you tell it a sad story.

I: Ooooooooookkkkkaaaayyyyyyy. Right, tell me a bit about yourself.

X: Everything is there in the resume.

I: I mean other than that. What sort of a person are you? What are your hobbies?

X: Japanese culture.

I: Interesting. What specifically?

X: Hentai.

I: What’s hentai?

X: It’s a televised art form.

I: Ok. Now, can you give me an example of a time when you were really challenged?

X: Well, just the other day, a few pennies from my pocket fell behind the sofa. Took me an hour to take them out. Boy was it challenging.

I: I meant technical challenge.

X: I once spent 10 hours installing Windows 10 on a Mac.

I: Why did you do that?

X: I had nothing better to do.

I: Why did you decide to apply to us?

X: The voices in my head told me.

I: What?

X: You advertised a job, so I applied.

I: And why do you want to change your job?

X: Money, baby!

I: (shocked)

X: I mean, I am looking for more lateral changes in a fast moving cloud connected social media agile web 2.0 company.

I: Great. That’s the answer we were looking for. What do you feel about constant overtime?

X: I don’t know. What do you feel about overtime pay?

I: What is your biggest weakness?

X: Kryptonite. Also, ice cream.

I: What are your salary expectations?

X: A million dollars a year, three months paid vacation on the beach, stock options, the lot. Failing that, whatever you have.

I: Great. Any questions for me?

X: No.

I: No? You are supposed to ask me a question, to impress me with your knowledge. I’ll ask you one. Where do you see yourself in 5 years?

X: Doing your job, minus the stupid questions.

I: Get out. Don’t call us, we’ll call you.

All Credit to:

http://pythonforengineers.com/the-p...

-

So this was a couple years ago now. Aside from doing software development, I also do nearly all the other IT related stuff for the company, as well as specialize in the installation and implementation of electrical data acquisition systems - primarily amperage and voltage meters. I also wrote the software that communicates with this equipment and monitors the incoming and outgoing voltage and current and alerts various people if there's a problem.

Anyway, all of this equipment is installed into a trailer that goes onto a semi-truck as it's a portable power distribution system.

One time, the computer in one of these systems (we'll call it system 5) had gotten fried and needed to be replaced. It was a very busy week for me, so I had pulled the fried computer out without immediately replacing it with a working system. A few days later, system 5 leaves to go work on one of our biggest shows of the year - the Academy Awards. We make well over a million dollars from just this one show.

Come the morning of show day, the CEO of the company is in system 5 (it was on a Sunday, my day off) and went to set up the data acquisition software to get the system ready to go, and finds there is no computer. I promptly get a phone call with lots of swearing and threats to my job. Let me tell you, I was sweating bullets.

After the phone call, I decided I needed to try and save my job. The CEO hadn't told me to do anything, but I went to work, grabbed an old Windows XP laptop that was gathering dust and installed my software on it. I then had to build the configuration file that is specific to system 5 from memory. Each meter speaks the Modbus over TCP/IP protocol, and thus each meter has a different bus id. Fortunately, I'm pretty anal about this and tend to follow a specific method of id numbering.
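For anyone wondering why the bus id matters so much: in Modbus TCP every request carries a unit id in its header, so a config file with the wrong id simply talks to the wrong meter (or to nothing). Below is a minimal sketch of how such a request frame is laid out. The meter names, ids and register numbers are made up for illustration; the post doesn't say what the real config looked like.

```python
import struct

# Hypothetical per-system config: meter name -> Modbus unit (bus) id.
# These names and ids are invented; the post only says the ids followed
# a predictable numbering scheme.
SYSTEM_5_METERS = {
    "incoming_voltage": 1,
    "incoming_current": 2,
    "outgoing_voltage": 3,
    "outgoing_current": 4,
}

READ_HOLDING_REGISTERS = 0x03  # standard Modbus function code


def build_read_request(transaction_id: int, unit_id: int,
                       start_register: int, count: int) -> bytes:
    """Build a Modbus TCP 'read holding registers' request frame."""
    # PDU: function code, starting register address, number of registers.
    pdu = struct.pack(">BHH", READ_HOLDING_REGISTERS, start_register, count)
    # MBAP header: transaction id, protocol id (always 0), length of the
    # bytes that follow (unit id + PDU), and the unit id itself.
    header = struct.pack(">HHHB", transaction_id, 0, len(pdu) + 1, unit_id)
    return header + pdu


# Ask the (hypothetical) incoming-current meter for two registers from address 0.
frame = build_read_request(1, SYSTEM_5_METERS["incoming_current"], 0, 2)
print(frame.hex())
```

The only thing that changes between meters is the single unit id byte, which is why a consistent numbering scheme makes the file reconstructible from memory.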

Once I got the configuration file done and tested the software to see if it would even run properly on Windows XP (it did!), I called the CEO back and told him I had a laptop ready to go for system 5. I drove out to Hollywood and the CFO (who was there with the CEO) had to walk about a mile out of the security zone to meet me and pick up the laptop.

I told her I put a fresh install of the data acquisition software on the laptop and it's already configured for system 5 - it *should* just work once you plug it in.

I didn't get any phone calls after dropping off the laptop, so I called the CFO once I got home and asked her if everything was working okay. She told me it worked flawlessly - it was Plug 'n Play so to speak. She even said she was impressed, she thought she'd have to call me to iron out one or two configuration issues to get it talking to the meters.

All in all, crisis averted! At work on Monday, my supervisor told me that my name was Mud that day (by the CEO), but I still work here!

Here's a picture of the inside of system 8 (similar to system 5 - same hardware)

-

Things I wish I could tell my 18 year old self.

1) Accept you will make mistakes.

2) Truly learn the language you are using.

3) Write idiomatic code for the language you are using.

4) Be upfront about not knowing something.

5) Don't let not knowing something stop you from learning it.

6) None of us knew X until we learned it.

7) Understand your strengths and weaknesses as a developer, play to them.

8) Be willing to try new things.

9) X language isn't ALWAYS the best choice, X paradigm isn't ALWAYS the best choice. Choose wisely.

10) You won't know everything, but you might know more than others.

11) Your ideas and ego don't matter more than ensuring the product works.

12) "Perfection is the enemy of the good [enough]" - Voltaire

13) "Perfection is not achieved when there's nothing more to add, but when there's nothing more to remove." - Einstein.

14) Conflicts happen, deal with it.

15) Develop a toolset and really learn them.

16) Try new tools, they may prove better than what you were using.

17) Don't manage your own memory unless you absolutely have to, you are probably not smarter than the collective intelligence of the team that built the various garbage collection methods.

18) People can be dicks, especially online.

19) If you are new and people are being dicks to you, did you skip past the irc message about etiquette? If you did, you're the dick in this situation.

20) It can be tough, but it is fun, so have fun!

-

The stupid stories of how I was able to break my school’s network just to get better internet, as well as more ridiculous fun. XD

1st year:

It was my freshman year in college. The internet sucked really, really, really badly! Too many people were clearly using it. I had to find another way to remedy this. Upon some further research through Google I found out that one can in fact turn their computer into a router. Now what’s interesting about this network is that it only works with computers by downloading the necessary software that this network provides for you. Some weird software that actually looks through your computer and makes sure it’s ok to be added to the network. Unfortunately, routers can’t download and install that software, thus no internet… but a PC that can be changed into a router itself is a different story. I found that I can download the software, let it check the PC, and then turn on my Router feature. Voilà, personal fast internet connected directly into the wall. No more sharing a single shitty router!

2nd year:

This was about the year when bitcoin mining was becoming a thing, and everyone was in on it. My shitty computer couldn’t possibly pull off mining for bitcoins. I needed something faster. How I found out that I could use my school’s servers was merely an accident.

I had been installing the software on every possible PC I owned, but alas all my PCs were just not fast enough. I decided to try it on the RDS server. It worked; the command window was pumping out coins! What I came to find out was that the RDS server had 36 cores. This thing was a beast! And it made sense that it could actually pull off mining for bitcoins. A couple nights later I signed in remotely to the RDS server. I created a macro that would continuously move my mouse around in the Remote desktop screen to keep my session alive at all times, and then I’d start my bitcoin mining operation. The following morning I wake up and my session was gone. How sad, I thought. I quickly try to remote back in to see what I had collected. “Error, could not connect”. Weird… this usually never happens, maybe I did the remoting wrong. I went to my school’s website to do some research on my remoting problem. It was down. In fact, everything was down… I come to find out that I had accidentally shut down the school’s network because of my mining operation. I wasn’t found out, but I haven’t done any mining since then.

3rd year:

As an engineering student I found out that all engineering students get access to the school’s VPN. Cool, it is technically used to get around some wonky issues with remoting into the RDS servers. What I come to find out, after messing around with it frequently, is that I can actually use the VPN against the screwed up security on the network. Remember, how I told you that a program has to be downloaded and then one can be accepted into the network? Well, I was able to bypass all of that, simply by using the school’s VPN against itself… How dense does one have to be to not have patched that one?

4th year:

It was another programming day, and I needed access to my phone’s memory. Using some specially made apps I could easily connect to my phone from my computer and continue my work. But what I found out was that I could in fact travel around in the network. I discovered that I can, in fact, access my phone through the network from anywhere. What resulted was the discovery that the network scales the entirety of the school. I discovered that if I left my phone down in the engineering building and then went north to the biology building, I could still continue to access it. This seems like a very fatal flaw. My idea is to hook up a webcam to a robot, remotely control it from the RDS servers and have this little robot go to my classes for me.

What crazy shit have you done at your University?

-

Today, I was told to investigate why the software doesn't work on "some" computers. I had no previous experience with that particular software but I just had to make some tests... easy, right? As soon as I ran the software, my computer crashed (I literally had to restart the pc). I asked my colleagues if I did something wrong but the set up seemed ok.

Later, in a random discussion about the software I found out it does "a little memory allocation". I opened the performance tab in task manager and ran the software again. In an instant, the RAM went from 1.3GB to 7.66GB (my pc has 8GB of RAM).

In an attempt to find how such a monstrosity was created, I found out the developer that made the software had 16GB of RAM on his pc.

I have found something that eats RAM more than Chrome... brace yourselves.

-

My code review nightmare part 3

Performed a review on/against a workplace 'nemesis'. I didn't follow the department standards document (cause I could care less about spacing, sorted usings, etc) and identified over 80 bugs, logic errors, n+1 patterns, memory leaks (yes, even in .net devs can cause em'), and general bad behavior (ex.'eating' exceptions that should be handled or at least logged)
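For anyone who hasn't run into the n+1 pattern mentioned above: it means making one remote call per item in a list instead of one call for the whole list. Here is a minimal sketch in Python rather than C#/WCF. `FakeOrderService` and its methods are hypothetical stand-ins, not the actual service from this story.

```python
class FakeOrderService:
    """Stand-in for a remote service; counts how many round trips are made."""

    def __init__(self, orders_by_customer):
        self._orders = orders_by_customer
        self.calls = 0

    def get_orders(self, customer_id):
        self.calls += 1
        return self._orders.get(customer_id, [])

    def get_orders_bulk(self, customer_ids):
        self.calls += 1
        return {cid: self._orders.get(cid, []) for cid in customer_ids}


def load_n_plus_one(service, customer_ids):
    # One round trip per customer: n calls on top of whatever produced the ids.
    return {cid: service.get_orders(cid) for cid in customer_ids}


def load_batched(service, customer_ids):
    # One round trip for the whole list.
    return service.get_orders_bulk(customer_ids)


data = {1: ["a"], 2: ["b", "c"], 3: []}
slow, fast = FakeOrderService(data), FakeOrderService(data)
same_result = load_n_plus_one(slow, [1, 2, 3]) == load_batched(fast, [1, 2, 3])
print(same_result, slow.calls, fast.calls)
```

Both versions return the same data; the difference is only in the number of round trips, which is what hurts once each call crosses a network.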

Because 'Jeff' was considered a golden child (that's another long TL;DR), his boss and others took a major offense and demanded I justify my review, item by item.

About 2 hours into the meeting, our department mgr realized embarrassing Jeff any further wasn't doing anyone any good and decided to take matters into his own hands. Thinking 'well, it's about time he did his job', I go back to my desk. About an hour later..

Mgr: "I need you in the conference room, RIGHT NOW!"

<oh crap>

Mgr: "I spoke to Jeff and I think I know what the problem is. Did you ever train him on any of the problems you identified in the review?"

Me: "Um, no. Why would I?"

Mgr: "Ha!..I was right. So let's agree the problems are partially your fault, OK?"

Me: "Finding the bugs in his code is somehow my fault?"

Mgr: "Yes! For example, the n+1 problem in using the WCF service, you never trained him on how to use the service. You wrote the service, correct?"

Me: "Yes, but it's not my job to teach him how to write C#. I documented the process and have examples in the document to avoid n+1. All he had to do was copy/paste."

Mgr: "But you never sat with Jeff and talked to him like a human being? You sit over there in your silo and are oblivious to the problems you cause. This ends today!"

Me: "What the...I have no idea what you are talking about. What in the world did Jeff tell you?"

Mgr: "He told me enough and I'm putting an end to it. I want a comprehensive training class developed on how to use your service. I'll give you a month to get your act together and properly train these developers."

3 days later, I submit the power-point presentation and accompanying docs. It was only one WCF service with a handful of methods. Mgr approved the training, etc..etc. I execute the 'training', and Jeff submits a code review a couple of weeks later. From over 80 issues to around 50. The poop hits the fan again.

Mgr: "What's your problem? When are you going to take your responsibility seriously?"

Me: "It's pretty clear I don't have the problem. All the review items were also verified by other devs. It's not me trying to be an asshole."

Mgr: "Enough with the excuses. If you think you can do a better job *you* make the code changes and submit them to Jeff for review. No More Excuses!"

Couple of days later, I make the changes, submit them for review, and Jeff really couldn't say too much other than "I don't see this as an improvement"

TL;DR, I had been tracking the errors generated by the site due to the bugs prior to my changes. After deployment, # of errors went from thousands per hour to maybe hundreds per day (that's another story) and the site saw significant performance increases, fewer customer complaints, etc..etc.

At a company event, the department VP hands out special recognition awards:

VP: "This award is especially well earned. Not only does this individual exemplify the company's focus on teamwork, he also went above and beyond the call of duty to serve our customers. Jeff, come on up and get this well deserved award."

-

Woohoo! 32k achieved!!! Finally I can post some new rant without risking some sudden overshoot 😁

So putting celebrations aside for a minute, a while ago I've noticed a tingle when I stroke my finger across metal areas of my tablet, or the sides of my phone (which probably has metal near it too) while it's charging. And it's been bugging me ever since.

Now, some things to note are that it only happens when my feet are touching the ground though slippers, and that the frequency is so low that I can actually feel the tingle when I slide my finger across the material. This to me at least seems like electricity flows through me into ground, and touching the ground directly provides a path so easy for the electrons to run away that I don't feel it at all. But if I lift my feet off the ground entirely, I just get charged up and after that, nothing else happens.

So those are my ideas. The answers on the subject on the other hand.. absolute cancer. Unsurprisingly, most of them came from Apple users. Here's some of them.

https://discussions.apple.com/threa...

- I've not noticed it, but if you're concerned bring the phone to Apple for evaluation.

- Me too facing same problem.. did u visit apple care?

And one good answer at least...

- google emf sensitivity, its real. You are right, there is a small current flowing through your body, try to limit your usage. The problem with this issue is those who aren't affected (lucky ones for now) will tell you these products are 100% safe. To a degree they are, i used my ipod touch for about 2 years straight vwith virtually no symptoms. then the tingling started and it gets worse.You will get more sensitive to progressively less powerful things. I dont want to scare you but just limit your usage like i didnt do 🙂

Overall that discussion was pretty good actually, aside from "bring it to the Genius Bar, they'll know for sure and not just sell you another unit". But then there's Reddit.

https://reddit.com/r/iphone/...

- Ok, real reason is probably that the extension cord and/or outlet is probably not grounded correctly. Either that or you are using a cheap knockoff charger.

Either use a surge protector and/or use the authentic Apple Charger.

- It's not the volts that hurt you, it's the amps

- I think you are in deep love with your phone. That tingling sensation is usually referred to as "love" in human language.

- Do less acid, I would advise.

Okay, so that's the real cancer. Grounding issue sounds reasonable despite it being wrong. Grounding is actually not needed when your charging appliance doesn't have any exposed metal parts. And isolation from high voltage to low voltage side actually happens through things like routering holes into the PCB, creating spark gaps, and using galvanic isolation through things like optocouplers. As for a surge protector? I'm using them to protect my PC and my servers, but the only purpose they serve is to protect from.. you guessed it.. voltage surges, like lightning bolts hitting the grid. They don't do shit for grounding or reducing this tingle! What a fucking tool.

It's not the volts that kill, it's the amps.. yeah I'm sure that the debunking of that is easy to find. Not gonna explain that here. And the rest of it.. yeah it's just fucking cancer.

Now what's the real issue with this tingle? It's actually a Class-Y rated (i.e. kV rated) capacitor that's on the transformer of any switch-mode power supply, including phone chargers. If memory serves me right, it helps with decoupling the switching noise and so on. But as it's connected to the primary side of the transformer, if the cap is sufficiently large and you are sufficiently sensitive, it can actually cause that tingle by passing a fraction of the mains electricity into your body. It's totally safe though, as the power that these caps pass is very small. But to some, it's noticeable.
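To put a rough number on "very small": the current an ideal capacitor passes at mains frequency is I = V * 2πfC. The sketch below uses assumed values (230 V / 50 Hz mains and a 1 nF capacitor); none of them come from the post, and a real charger will pass less than this upper bound because the capacitor is not the only impedance in the path.

```python
import math


def leakage_current_amps(mains_volts: float, mains_hz: float,
                         cap_farads: float) -> float:
    """Upper bound on AC current through an ideal capacitor: I = V * 2*pi*f*C."""
    return mains_volts * 2 * math.pi * mains_hz * cap_farads


# Assumed values, not from the post: 230 V / 50 Hz mains, 1 nF Y capacitor.
current = leakage_current_amps(230.0, 50.0, 1e-9)
print(f"{current * 1e6:.0f} µA")
```

Under these assumptions the result lands in the tens of microamps, which fits with the tingle being noticeable to some people while the power involved stays tiny.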

Hope you found this interesting! And thanks a lot for bringing me to 2^15. I really appreciate it ♥️

-

import LongRantKit

import NonRantKit

import TldrKit

I don't like stickers on my laptop because it clutters it up. But today I realized the importance of them.

A few months ago I was sitting at a coffee shop working on a paper and I noticed a guy with this cool sticker on a MacBook Pro: it had the integral symbol to the left of the Apple logo, and to the right of it a lowercase d and another Apple logo. It took me a few hours to realize what it meant, but I finally did and at that point I also guessed that not many people know what it is.

So I, as antisocial as I am, I finish up my work and before I leave I walk up to him and say hi. At this point I'm a senior in high school and I learn he's a junior in the same college I plan to attend. We talked a little before I had to leave and got to know each other somewhat.

After I leave I find him on Instagram and Facebook and friend him and such.

Recently I posted a picture saying I had recently joined the Apple Developer Team, and also recently reposted a memory on Facebook from 5 years ago that was a screen capture of an iPhone 4 simulator running iOS 5 showing off one of my first apps.

Then yesterday I get a message from the guy I met at the coffee shop asking for some help with an iOS project he's working on. We decide to meet today and I spend the entire morning showing him the basics of Swift, Xcode, Interface Builder, etc. I feel like I really helped him jumpstart his app and helped him understand the basics of different concepts.

If he didn't have that integral sticker on his laptop I would have never had this opportunity to finally share some iOS development experience.

For this I would like to thank my high school calculus teacher, with whom I spent many classes at Starbucks because I was an only student. I'd like to thank laptop stickers, and finally I would like to thank the coffee shop.

TL;DR: Said hi to a guy with an integral sticker on his laptop, a few months later he approaches me for help understanding iOS development.

-

I've optimised so many things in my time I can't remember most of them.

Most recently, something had to be the equivalent of `"literal" LIKE column` with a million rows to compare. It would take around a second on average for each literal to look up, for a service that needs to be high load and low latency. This isn't an easy case to optimise, many people would consider it impossible.

It took me a couple of hours to reverse engineer the data and implement a few hundred line implementation that would look it up in 1ms average with the worst possible case being very rare and not too distant from this.
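The post doesn't say how the lookup was actually done, so the following is only one possible approach, under a strong assumption: that every stored pattern is a plain prefix pattern of the form `prefix%`. In that case the literal can only match patterns whose prefix is one of its own leading substrings, so a set lookup per prefix length replaces the scan over a million rows. All names here are hypothetical.

```python
def build_prefix_index(patterns):
    """Index LIKE patterns of the form 'prefix%' by their prefix."""
    index = set()
    for pattern in patterns:
        # Anything other than a single trailing '%' is out of scope for this sketch.
        if not pattern.endswith("%") or "%" in pattern[:-1] or "_" in pattern:
            raise ValueError(f"unsupported pattern: {pattern!r}")
        index.add(pattern[:-1])
    return index


def matching_patterns(index, literal):
    """Return every indexed pattern that `literal` would match under LIKE."""
    # A 'prefix%' pattern matches exactly when the literal starts with prefix,
    # so only len(literal) + 1 candidate prefixes need to be checked.
    return [literal[:i] + "%"
            for i in range(len(literal) + 1)
            if literal[:i] in index]


index = build_prefix_index(["+4420%", "+44%", "+1%"])
print(matching_patterns(index, "+442071234567"))
```

The cost per lookup depends on the length of the literal rather than the number of stored patterns. Real data with wildcards in other positions would need a different structure.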

In another case there was a lookup of arbitrary time spans that most people would not bother to cache because the input parameters are too short lived and variable to make a difference. I replaced the 50000+ line application acting as a middle man between the application and database with 500 lines of code that did the look up faster and was able to implement a reasonable caching strategy. This dropped resource consumption by at least a factor of ten. Misses were cheaper and it was able to cache most cases. It also involved modifying the client library in C to stop it unnecessarily wrapping primitives in objects for the high level language, which was causing it to consume excessive amounts of memory when processing huge data streams.

Another system would download a huge data set for every point of sale constantly, then parse and apply it. It had to reflect changes quickly but would download the whole dataset each time, containing hundreds of thousands of rows. I whipped up a system so that a single server (barring redundancy) would download it in a loop, parse it using C which was much faster than the traditional interpreted language, then use a custom data differential format, TCP data streaming protocol, binary serialisation and LZMA compression to pipe it down to points of sale. This protocol also used versioning for catchup and differential combination for additional reduction in size. It went from being 30 seconds to a few minutes behind to being able to keep up to within a second of changes. It was also using so much bandwidth that it would reach the limit on ADSL connections then get throttled. I looked at the traffic stats after and it dropped from dozens of terabytes a month to around a gigabyte or so a month for several hundred machines. Looking at the drop in the graphs, you'd think all the machines had been turned off. It could now happily run over GPRS or 56K.
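The general idea of shipping versioned differences instead of the whole dataset can be sketched in a few lines. This is not the format described above: JSON stands in for the custom binary serialisation, there is no streaming protocol, and the function names and row layout are hypothetical. Only the LZMA step uses the same compression.

```python
import json
import lzma


def make_delta(old_rows, new_rows, version):
    """Describe how to turn old_rows into new_rows (dicts keyed by row id)."""
    changed = {key: row for key, row in new_rows.items()
               if key not in old_rows or old_rows[key] != row}
    removed = [key for key in old_rows if key not in new_rows]
    return {"version": version, "changed": changed, "removed": removed}


def encode_delta(delta):
    # JSON is a stand-in here; a compact binary format would be smaller still.
    return lzma.compress(json.dumps(delta, sort_keys=True).encode("utf-8"))


def apply_delta(rows, payload):
    """Apply a compressed delta and return (updated rows, new version)."""
    delta = json.loads(lzma.decompress(payload).decode("utf-8"))
    updated = dict(rows)
    updated.update(delta["changed"])
    for key in delta["removed"]:
        updated.pop(key, None)
    return updated, delta["version"]


old = {"1": {"price": 100}, "2": {"price": 250}, "3": {"price": 75}}
new = {"1": {"price": 100}, "2": {"price": 260}, "4": {"price": 10}}
payload = encode_delta(make_delta(old, new, version=2))
synced, version = apply_delta(old, payload)
print(synced == new, version)
```

Only the changed and removed rows travel over the wire, and the version number lets a point of sale that fell behind ask for the deltas it missed.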

I was working on a project with a lot of data and noticed these huge tables and horrible queries. The tables were all the results of queries. Someone wrote terrible SQL, then to optimise it ran it in the background with all possible variable values and stored the results of joins and aggregates in new tables. On top of those tables they wrote more SQL. I wrote some new queries and query generation that wiped out thousands of lines of code immediately and operated on the original tables, taking things down from 30GB and rapidly climbing to a couple GB.

Another time a piece of mathematics had to generate all possible permutations and the existing solution was factorial. I worked out how to optimise it to run n*n which believe it or not made the world of difference. Went from hardly handling anything to handling anything thrown at it. It was nice trying to get people to "freeze the system now".

I build my own frontend systems (admittedly rushed) that do what angular/react/vue aim for but with higher (maximum) performance including an in memory data base to back the UI that had layered event driven indexes and could handle referential integrity (overlay on the database only revealing items with valid integrity) or reordering and reposition events very rapidly using a custom AVL tree. You could layer indexes over it (data inheritance) that could be partial and dynamic.

So many times have I optimised things on automatic just cleaning up code normally. Hundreds, thousands of optimisations. It's what makes my clock tick.

-

Saw this on Facebook and couldn't help but share here! 😂

A young woman submitted the tech support message below (about her relationship with her husband), presumably as a joke…

The query:

Dear Tech Support,

’Last year I upgraded from Boyfriend 5.0 to Husband 1.0 and noticed a distinct slowdown in overall system performance, particularly in the flower and jewelry applications, which operated flawlessly under Boyfriend 5.0.

In addition, Husband 1.0 uninstalled many other valuable programs, such as: Romance 9.5 and Personal Attention 6.5, and then installed undesirable programs such as: NBA 5.0, NFL 3.0 and Golf Clubs 4.1.

Conversation 8.0 no longer runs, and House cleaning 2.6 simply crashes the system. Please note that I have tried running Nagging 5.3 to fix these problems, but to no avail.

What can I do?

Signed,

Desperate

The response (that came weeks later out of the blue):

Dear Desperate,

“First keep in mind, Boyfriend 5.0 is an Entertainment Package, while Husband 1.0 is an operating system. Please enter command: I thought you loved me.html and try to download Tears 6.2 and do not forget to install the Guilt 3.0 update. If that application works as designed, Husband 1.0 should then automatically run the applications Jewelry 2.0 and Flowers 3.5.

However, remember, overuse of the above application can cause Husband 1.0 to default to Grumpy Silence 2.5, Happy Hour 7.0 or Beer 6.1. Please note that Beer 6.1 is a very bad program that will download the Farting and Snoring Loudly Beta.

Whatever you do, DO NOT, under any circumstances, install Mother-In-Law 1.0 (it runs a virus in the background that will eventually seize control of all your system resources.)

In addition, please, do not attempt to re-install the Boyfriend 5.0 program. These are unsupported applications and will crash Husband 1.0.

In summary, Husband 1.0 is a great program, but it does have limited memory and cannot learn new applications quickly. You might consider buying additional software to improve memory and performance. We recommend: Cooking 3.0.

Good Luck!’

-

I haven't ranted for today, but I figured that I'd post a summary.

A public diary of sorts.. devRant is amazing, it even allows me to post the stuff that I'd otherwise put on a piece of paper and probably discard over time. And with keyboard support at that <3

Today has been a productive day for me. Laptop got restored with a "pacman -Syu" over a Bluetooth mobile data tethering from my phone, said phone got upgraded to an unofficial Android 9 (Pie) thanks to a comment from @undef, etc.

I've also made myself a reliable USB extension cord to be able to extend the 20-30cm USB-A male to USB-C male cord that Huawei delivered with my Nexus 6P. The USB-C to USB-C cord that allows for fast charging is unreliable.. ordered some USB-C plugs for that, in order to make some high power wire with that when they arrive.

So that plug I've made.. USB-A male to USB-A female, in which my short USB-C to USB-A wire can plug in. It's a 1M wire, with 18AWG wire for its power lines and 28AWG wires for its data lines. The 18AWG power lines can carry up to 10A of current, while the 28AWG lines can carry up to 1A. All wires were made into 1M pieces. These resulted in a very low impedance path for all of them, my multimeter measured no more than 200 milliohms across them, though I'll have to verify and finetune that on my oscilloscope with 4-wire measurement.

So the wire was good. Easy too, I just had to look up the pinout and replicate that on the male part.

That's where the rant part comes in.. in fact I've got quite uncomfortable with sentences that don't include at least one swear word at this point. All hail to devRant for allowing me to put them out there without guilt.. it changed my very mind <3

Microshaft WanBLowS.

I've tried to plug my DIY extension cord into it, and plugged my phone and some USB stick into it of which I've completely forgot the filesystem. Windows certainly doesn't support it.. turns out that it was LUKS. More about that later.

Windows returned that it didn't support either of them, due to "malfunctioning at the USB device". So I went ahead and plugged in my phone directly.. works without a problem. Then I went ahead and troubleshooted the wire I've just made with a multimeter, to check for shorts.. none at all.

At that point I suspected that WanBLowS was the issue, so I booted up my (at the time) problematic Arch laptop and did the exact same thing there, testing that USB stick and my phone there by plugging it through the extension wire. Shit just worked like that. The USB stick was a LUKS medium and apparently a clone of the SanDisk rootfs that held my laptop's Arch Linux install at the time.. an unfinished migration project (SanDisk is unstable, my other DM sticks are quite stable). The USB stick consumed about 20mA so no big deal for any USB controller. The phone consumed about 500mA (which is standard USB 2.0 so no surprise) and worked fine as well.. although the HP laptop dropped the voltage to ~4.8V like that, unlike 5.1V which is nominal for USB. Still worked without a problem.

So clearly Windows is the problem here, and this provides me one more reason to hate that piece of shit OS. Windows lovers may say that it's an issue with my particular hardware, which maybe it is. I've done the Windows plugging solely through a USB 3.0 hub, which was plugged into a USB 3.0 port on the host. Now USB 3.0 is supposed to be able to carry up to 1A rather than 500mA, so I expect all the components in there to be beefier. I've also tested the hub as part of a review, and it can carry about 1A no problem, although it seems like its supply lines aren't shorted to VCC on the host, like a sensible hub would. Instead I suspect that it's going through the hub's controller.

Regardless, this is clearly a bad design. One of the USB data lines is biased to ~3.3V if memory serves me right, while the other is biased to 300mV. The latter could pose a problem.. but again, the current path was of a very low impedance of 200 milliohms at most. Meanwhile the direct connection that omits the ~200 milliohm extension wire worked just fine. Even 300mV wouldn't degrade significantly over such a resistance. So this is most likely a Windows problem.
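As a rough sanity check of that resistance argument, here is a minimal Ohm's law sketch in Python. It uses the figures quoted above (a worst-case 200 milliohms, about 500mA for the phone, about 20mA for the stick). The function name is made up for illustration, and the data-line current is a pure placeholder, since nothing above says what it actually is.

```python
def voltage_drop(current_a: float, resistance_ohm: float) -> float:
    """Ohm's law: the voltage lost across a resistance is V = I * R."""
    return current_a * resistance_ohm


# Figures quoted in the rant.
WIRE_RESISTANCE_OHM = 0.2   # worst-case multimeter reading (200 milliohms)
PHONE_CURRENT_A = 0.5       # the phone drew about 500 mA
STICK_CURRENT_A = 0.02      # the USB stick drew about 20 mA

# Assumption for illustration only: the data-line current is not given
# anywhere in the rant, so 1 mA is just a placeholder value.
DATA_LINE_CURRENT_A = 0.001

for label, current in [
    ("phone (power lines)", PHONE_CURRENT_A),
    ("USB stick (power lines)", STICK_CURRENT_A),
    ("data line (assumed 1 mA)", DATA_LINE_CURRENT_A),
]:
    drop_mv = voltage_drop(current, WIRE_RESISTANCE_OHM) * 1000
    print(f"{label}: {drop_mv:.1f} mV lost in the wire")
```

Under those assumptions the wire costs at most about 100 mV at the phone's charging current, and far less than that on anything drawing milliamps.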

That aside, the extension cord works fine in Linux. So I've used that as a charging connection while upgrading my Arch laptop (which as you may know has internet issues at the time) over Bluetooth, through a shared BNEP connection (Bluetooth tethering) from my phone. Mobile data since I didn't set up my WiFi in this new Pie ROM yet. Worked fine, fixed my WiFi. Currently it's back in my network as my fully-fledged development host. So that way I'll be able to work again on @Floydian's LinkHub repository. My laptop's the only one who currently holds the private key for signing commits for git$(rm -rf ~/*)@nixmagic.com, hence why my development has been impeded. My tablet doesn't have them. Guess I'll commit somewhere tomorrow.

(looks like my rant is too long, continue in comments)

-

The year was 1983. My best friend and neighbour at the time invited me over to see an amazing device that his father had brought home from work, an IBM PC. We played a game called Track & Field, and I was amazed that the machine remembered my name once I had entered it. (Up until then the only machines with any kind of memory that I had come in touch with were arcade games and my cousin's video game console, which was also the first electronic gaming device I ever played, back in 1978.) In the early 1980s, computers were anything but commonplace in the Åland Islands, but I think that it was in 1983 that people became aware of them, and there was a budding interest in buying one, at least among us kids. It was my sister who wished for a home computer for Christmas, so the same year Santa gave us a ZX Spectrum. It came with a game called Thro' the Wall, an Arkanoid clone (which has inspired me to make my own clone, "Wall", for all the different home computers I've had, ranging from the Commodore 16 and Canon V-20 to the Amiga 500 and Amiga 1200). Unfortunately, we only managed to load the game (delivered on a C cassette) once or twice after several attempts. It turned out that the hardware was faulty, and dad got a refund after first having had to complain a lot at the dealer (which went out of business some ten years ago), and then bought the Commodore the next Christmas. Anyway, I wrote my first code on the ZX Spectrum. It doesn't really count as programming, as all I did was type in examples and run them. I do recall altering one example though, a program drawing the Swedish flag on the screen, by adding an inner red cross, thus turning it into the Åland flag. But with the Commodore 16 (which had an excellent Basic interpreter) I got started with programming almost immediately, and by the end of 1984 I had written my very first own Basic programs. In 1996 I got my first IT job, and I am still a dev. So, what became of my childhood friend and neighbour?
He runs a successful computer dealership :)

-

24th, Christmas: BIND slaves decide to suddenly stop accepting zone transfers from the master. Half a day of raging and I still couldn't figure out why. dig axfr works fine, but the slaves refuse a zone update according to tcpdump logs.

25th, 2nd day: A server decides to go down and take half my network with it. Turns out that a Python script managed to crash the goddamn kernel.

Thank you very much technology for making the Christmas days just a little bit better ❤️

At least I didn't have anything to do on either day, because of the COVID-19 pandemic. And to be fair, I did manage to make a Telegram bot with fancy webhooks and whatnot in 5MB of memory and 18MB of storage. Maybe I should just write the whole thing and make another sacred temple where shitty code gets beaten the fuck out of the system. Terry must've been onto something...

-

This is my first rant here, so I hope everyone has a good time reading it.

So, the company I am working for tasked me with rewriting a firmware that has been extended for about 20 years now. Which is fine, since all new machines will be on a new platform anyways. (The old firmware was written for an 8051 initially. That thing has 256 bytes of RAM. Just imagine the usage of unions and bitfields...)

So, me and a few colleagues go ahead and start from scratch.

In the meantime however, the client has hired one single lonely developer. Keep in mind that nobody there understands code!

And oh boy did he go nuts on the old code, only for having it used on the very last machine of the old platform, ever! Everything after that one will have our firmware!

There are other machines in that series, using the original extended firmware. Nothing is compatible, bootloaders do not match, memory layouts do not match, code is a horrible mess now, the client is writing the specification RIGHT NOW (mind, the machine is already sold to customers), there are no tests, and for the grand finale, the guy quit his job and went to a different company. Did I mention the bugs it has and the features it lacks?

Guess who's got to maintain that single abomination of a firmware now?

-

!dev

A child's mind is fascinating.

I remember how it felt being a kid, just deliriously happy.

Things were magical, mystical and happy.

I knew the world wasn't perfect, I knew bad things happened to good people.

But a kid's mind is so powerful that it can fill in the blanks with the most cheerful and optimistic perspectives.

And at some point in my childhood I was exposed to videogames, and that kinda took me down fantasy lane even further.

I was extremely young and barely retaining any memories when I was exposed to my first console, a famicom.

I have a somewhat vivid memory of my mind being blown away for the first time by watching my brother play New Ghostbusters II for NES.

From then on, we never stopped and played several console and dos/pc games.

When I was 10, someone from the neighborhood brought in a couple of floppies with Pokemon Yellow.

"What? Pokemon? How the fuck is that even possible? This is a pc, not a gameboy".

I didn't know at the time what an emulator was, but I was super fucking stoked to be able to play that.

My dad had a 1 gb laptop from work that he didn't use, so I hoarded that shit, and I would get to bed and play nearly everyday.

The experience was surreal. I was doing pc gaming... not on a chair, on a fucking bed, and I was playing a gameboy game... on a pc.

It was so intense to me that even more than 2 decades after that time in my life, I still remember what it felt like.

Like, you know how you can "feel" things if you think about them? like for example if you think about the taste of chicken, you can somehow feel it for a second.

Well I have like an actual physical sensation linked to that experience but I can't explain it at all, because it's just a sensation.

I think people usually say they feel that way, for example, about the PSX (usually referred to as the PS one) loading screen. I experienced that too but when I was 12, so it was not as intense (it does make me feel the fuzzies though).

I also remember other things with very high detail, like the texture of my bed cover, the weather, mom cooking, the clunky shape of the laptop, the way I carelessly stored it above a pile of magazines, etc.

I remember ofc how it felt looking at the game sprites, interacting with NPCs, and the goddamn fucking glorious music.

It was dreamy.

Years and years later, I grew up and I stopped living in fantasy world and became more aware of the grim aspects of life my younger self was sugarcoating.

So I tried to play pokemon again, again and again, and no matter how hard I tried to revive that euphoria, I could never do it.

I started to get annoyed at the game.

"Come oooon, I did the tutorial already, let me skip this.

This pokemon is useless, why am I even training it.

Fuck, I'm tired of grinding"

At some point I accepted that the feeling would never return, and that it would just live in my memory.

Ironically, I can recall that memory and how it felt anytime I want to.

And I can actually still feel it, and throughout these years, it has never worn off.

And eventually I learned how to play pokemon and enjoy it:

I read tier lists at smogon online and just catch and train the pokemons that are higher on the list, which is how i got to beat yellow in like 3 days.

(This is nothing compared to what speedrunners do, but much better than the weeks it had taken me in the past).

That served as an important lesson that when a kid plays a game, his mind is also the game at the same time, filling the blanks with its imagination.

A very similar experience happened to me with harvest moon, which is the precursor of stardew valley.

and that game is faaar more emotional: you talk to people, over time you befriend them and they open up, you meet a girl, you marry her, have a kid

you get farm animals, you brush them, they become happy

you get attached

that game was also so powerful to me that in all my naivety I thought I wanted to be a farmer.

Eventually I grew up and hit puberty and from then on, I focused more on competitive games, like smash bros, cs and tf2.

and i dunno how to end a post so eat my fucking nuts

-

Just as I wait for my train, some advertisers from a utility company here approached me. Asking what my company is etc..

Me: "well I'm making my own company..."

*Looks at their pamphlet*

"Oh, utility company you mean. My apartment building has solar panels."

Them: "oh you know about electricity right... And F-16, the fighter jets that fly at 3000km/h"

(My neighbor is a former aerospace technician who mentioned that previously, should be about right)

Them: "they fly faster than electricity!"

Me: "but um.. electricity travels at the speed of light..."

Them: *avoid subject*

Them: "yeah it travels 7 times around the globe in 1 second"

Me: *recalls ping to my servers in Italy*

"Yeah to Italy my ping is about 300ms if memory serves me right... So that'd make sense"

(Turned out to be 40ms.. close enough though, right 🙃)

Them: "don't travel too much at light speed, alright!"

*They pack up and leave*

Meanwhile me, thinking: but guys.. all I wanted to do was smoke a cigarette before my train comes. Why did you waste my time with this? And uninformed time wastage at that.

Advertisers are the worst 😶

-

It would have been back in the 90s 🤫

I was about 8 years old I guess when I had a friend who had a Commodore 64 and he loaded up the good old floppy, typed some things in and the screen started doing things, my mind was instantly triggered for “how did you do that?”.

Moving forward after that I was into gaming on consoles (Sega, SNES, Atari etc.) and always wondered how the games were made. Being pre-internet, that was not easy to find info on, otherwise I think I probably would have ended up in the game dev world.

It wasn’t until I was about 10-11 that I finally got a PC in the house (good old IBM 386 with 10MB HDD.. yes MB not GB for you young folk) and I was addicted from day one: MS Paint, changing settings left, right and centre in Windows 3.11, and then when we upgraded to W95 and then W98 things got more and more interesting.

God the memories, and games (MAME32 was the best)😆

Shit now I want to find some old school games for a trip up memory lane 😂

When I was 15, I made my first website in FrontPage (don’t judge), a nice big walkthrough with photos and map locations for GTA 3, and since then I’ve never looked back.

-

I just installed Opera Mini on my PSP. That alone isn't very exciting on its own, although I am stoked that my website does in fact render on a device from 2009. With the helpful guidance of a laptop from 2004 that's doing the hotspot duties for this thing.

No, what really got me stoked is that Opera still supports these old platforms, and how small they managed to make it. The .jar file for Opera Mini 4.5 is ~800kB large. There's a .jad file as well but it's negligible in size and seems to be a signature of sorts.

Let that sink in for a moment. This entire web browser is 800kB. Firefox meanwhile consistently consumes 800 MEGABYTES.. in MEMORY. So then, I went to think for a moment, how on earth did they manage to cram an entire functioning web browser in 800kB? Hell, what makes up a web browser anyway?

The answer to that question I got to is as follows. You need an engine to render the web page you receive. You need a UI to make the browser look nice. And finally you need a certificate store to know which TLS certificates to trust. And while probably difficult to make, I think it should be possible to do in 800k. Seriously, think about it. How would you go *make* a web browser? Because I've already done that in the past.

Earlier I heard that you need graphics, audio, wasm, yada yada backends too.. no. Give your head a shake. Graphics are the responsibility of the graphics driver. A web browser shouldn't dabble with those at all. Audio, you connect to PulseAudio (in Linux at least) and you're done. Hell I don't even care about ALSA or OSS here. You just connect to the stuff that does that job for you. And WebAssembly.. God I could rant about that shit all day. How about making it a native application? Not like actual Assembly is used for BIOS and low-level drivers. And that we already have a better language for the more portable stuff called C.

Seriously, think about it. Opera - a reputable browser vendor - managed to do it in 800kB on a 12 year old device. Don't go full wank on your framework shit in the comments. And don't you fucking dare to tell me that there's more to it. They did it for crying out loud. Now you take a look at your shitpile of JS code and refactor that shit already. Thank you.

-

In 2010, on my first client project, our architect was not from an iOS background, and we had editable PDFs in our app. Those were pretty rich PDFs with inline HD images. iPads at that time were not too fast and couldn't handle big, GB-sized PDFs loaded into memory. The app would crash randomly, running out of memory. We fixed it by paginating the PDFs. It wasn't out of this world, but considering it was my first mobile project and there was no one to guide me, I thought it was pretty cool what we did there.

-

MTP is utter garbage and belongs to the technological hall of shame.

MTP (media transfer protocol, or, more accurately, MOST TERRIBLE PROTOCOL) sometimes spontaneously stops responding, causing Windows Explorer to show its green placebo progress bar inside the file path bar which never reaches the end, and sometimes to whiningly show "(not responding)" with that white layer of mist fading in. It sometimes lists files' dates as 1970-01-01 (which is the Unix epoch), and sometimes shows former names of folders from before they were renamed, even after refreshing. I refer to them as "ghost folders". As is well known, large directories load extremely slowly in MTP. A directory listing with one thousand files could take well over a minute to load. On mass storage and FTP? Three seconds at most. Sometimes, new files are not even listed until rebooting the smartphone!

Arguably, MTP "has" no bugs. It IS a bug. There is so much more wrong with it that it does not even fit into one post. Therefore it has to be expanded into the comments.

When moving files within an MTP device, MTP does not directly move the selected files, but creates a copy and then deletes the source file, causing both needless wear on the mobile device's flash memory and the loss of the files' original date and time attributes. Sometimes, the simple act of renaming a file causes Windows Explorer to stop responding until the MTP device is unplugged. It actually once unfroze after more than half an hour, during which I did something else, but come on, who likes to wait that long? Thankfully, this has not happened to me on Linux file managers such as Nemo yet.

When moving files out using MTP, Windows Explorer does not move and delete each selected file individually, but only deletes the whole selection after finishing the transfer. This means that if the process crashes, no space has been freed on the MTP device (usually a smartphone), and one will have to carefully sort out a mess of duplicates. Linux file managers thankfully delete the source files individually.
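To illustrate the per-file behaviour described for the Linux file managers, here is a minimal Python sketch of "copy one file, then delete that one source" before touching the next. It works on ordinary filesystem paths only and does not talk MTP at all; the function name `move_out_one_by_one` is hypothetical, and this is not how any particular file manager is actually implemented.

```python
import shutil
from pathlib import Path


def move_out_one_by_one(sources, target_dir):
    """Move files to target_dir, deleting each source right after its own copy.

    If the process dies halfway, only the file in flight can exist twice;
    everything before it has already been removed from the source.
    """
    target_dir = Path(target_dir)
    target_dir.mkdir(parents=True, exist_ok=True)
    moved = []
    for src in map(Path, sources):
        dest = target_dir / src.name
        if dest.exists():
            raise FileExistsError(f"refusing to overwrite {dest}")
        # copy2 copies the data and also tries to preserve timestamps.
        shutil.copy2(src, dest)
        # Cheap sanity check before the source is deleted.
        if dest.stat().st_size != src.stat().st_size:
            raise OSError(f"size mismatch after copying {src}")
        src.unlink()
        moved.append(dest)
    return moved
```

With this ordering, an interrupted transfer frees space on the source as it goes and leaves at most one duplicate to sort out, instead of a whole selection.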

Also, for each file transferred from an MTP device onto a mass storage device, Windows has the strange behaviour of briefly creating a file on the target device with the size of the entire selection. It does not actually write that amount of data for each file, since it couldn't do so in this short time, but the current file is listed with that size in Windows Explorer. You can test this by refreshing the target directory shortly after starting a file transfer of multiple selected files originating from an MTP device. For example, when copying or moving out 01.MP4 to 10.MP4, while 01.MP4 is being written, it is listed with the file size of all 01.MP4 to 10.MP4 combined, on the target device, and the file actually exists with that size on the file system for a brief moment. The same happens with each file of the selection. This means that the target device needs almost twice as much free space as the selection of files occupies on the source MTP device to be able to accept the incoming files, since the last file, 10.MP4 in this example, temporarily has the total size of 01.MP4 to 10.MP4. This strange behaviour has been on Windows since at least Windows 7, presumably since Microsoft implemented MTP, and has still not been changed. Perhaps the goal is to reserve space on the target device? However, it reserves far too much space.

When transferring from MTP to a UDF file system, it sometimes fails to transfer ZIP files and only copies the first few bytes: 208 or 74 bytes in my testing.

When transferring several thousand files, Windows Explorer also sometimes decides to quit and restart in the midst of the transfer. Also, I sometimes move files out by loading a part of the directory listing in Windows Explorer and then hitting "Esc" because it would take too long to load the entire directory listing. It actually once assigned the wrong file names, which I noticed since file naming conflicts would occur where the source and target files with the same names would have different sizes and time stamps. Both files were intact, but the target file had the name of a different file. You'd think they would figure something like this out after two decades, but no. On Linux, the MTP directory listing is only shown after it is loaded in its entirety. However, if the directory has too many files, it fails with a "libmtp: couldn't get object handles" error without listing anything.

Sometimes, a folder appears empty until refreshing one more time. Sometimes, copying a folder out causes a blank folder to be copied to the target. This is why on MTP, only a selection of files and never folders should be moved out, due to the risk of the folder being deleted without everything having been transferred completely.

(continued below)

-

I really think there should be a subject in every CS course to teach us how to handle/work under Grade-A assholes and dumbfucks. Not that it would help, but at least warn us about what we are getting into.

In my opinion, development is not *that* hard or frustrating but is made so by these shitty people. But again, what do I know.

I was scolded by my boss for using for-loop to iterate through an array recently. Apparently for-loop is not used in real world projects and this iteration should be done "in-memory". My colleagues and I are still trying to understand and process that.

I was asked to add Fitbit integration to a project within 2 hours just because I had "already done it a week ago" in *another* project. Luckily, it was then given to a "senior" developer who took 4 days for it and essentially copy-pasted my work without many changes. Of course it stopped working every now and then.

I am given unreal deadlines on my tasks, on technologies I haven't worked on before, and then expected to churn out production ready code with no bugs in them.

My boss literally just sends me the links to the first three Google results on the problems I encounter and report, after humiliating me of course. Yes, I did google it and yes I went through all I could find, from Google forums to GitHub issues. When the library/plugin author himself says that this feature is not yet available, don't expect me to develop it in 2 hours you dumbfuck.

And for the love of God, please stop changing the data model every single day and justify it with agile development. Think before making any changes to it. Ever heard of Join queries? Foreign keys? Or any other basic database concepts.

We reached a point where each branch in the repo had a different data model. Not kidding. And we were a team of just 4 developers. At least inform us when you change models after discussing it with your shit-for-knowledge "senior" developer, so we don't have to redo it all over again. The channels on Slack are not for sharing random articles only.

I am just waiting to complete my year here.

I should have known what I got myself into the day he asked me to remove the comments I had added to explain what my code does. Why you ask? Because "we don't write comments".

-

Imagine asking your friends to help you rate your app on the google play store and instead of saying NO I DONT WANT TO RATE YOUR APPLICATION no... they decide to fuck with your mind.

1)

I will rate it tomorrow. (she never rated it tomorrow nor the next couple of weeks later)

2)

I will keep it in mind and rate it later :). (she never rated it later)

3)

I rated it haha (less than 30 seconds later they deleted the rating)

4)

Send me a link and I'll rate it (i send the link, they never respond or read my message again)

5)

I dont have memory on my phone :) (because 13MB of memory is a lot of storage requirements but taking 1 million selfies of up to 25GB is completely fine)

6)

I dont have memory on my phone what dont you understand :) x2 (this is the second girl)

7)

Your trying to give me a virus?? No (i got blocked multiple times)

8)

You want to hack me by making me install this application from the link that you sent me that leads to google play store? No (blocked)

9)

Rate your app? Haha i dont care about it because it doesnt bring me any benefit only the fat cocks that fill my pussy up satisfy me and not ur app haha

10)

Haha send me a link ill rate it (i send link, 8 hours later no reply or reading my message, i text her back if she had done it and im still put on ignore)

...

N)

more

----

Notice how none of these people have said the 2 letter word: "no".

All of these 10 examples are based on a true story.

All of these 10 examples are different people.

---

How hard

Can it be

To just

Write

no

---

.

---

For all of you who are about to trash talk saying i am desperately trying to beg people to rate my app:

i know all of those people for a long time. But when it comes to asking (and not forcing) someone to do you a favor for free that takes no more than 30 seconds, no one seems to have 30 seconds of their free time. Dont get me wrong, some of my friends did politely rate it and left a review, even the people who i barely knew left a review and rated it, but the people with whom I was closer by, didnt.

---

In the beginning i used to not care about this at all. Then i started falling into depression because of it. I fell then into deep depression. Then i sunk so deep that i couldn't feel any emotions anymore so i laughed as an anti depressive mechanism whenever something depressing happened. Now i cant even laugh because i have no more energy. Now i actually leave man tears

---

The only thing more valuable than people, any materialistic thing, animals, coding and even money - is time....

----

why do you waste my time

if i ask you to do something that takes 30 seconds and you dont want to do it

why cant you just say no

why do you drag me

why do you say you're going to do it when you know you wont do it

what do you gain by unnecessarily lying to someone for such a small thing?

to someone who has been a good person to you?

do you feel superior?

is your ego bigger?

----

This experience has taught me that not even a human from the same blood can be trusted.

All of you are fucked up in the head in your own style and i am guilty of it too, all of us are.

But i have never seen human evolution go from simplicity to overengineered complexitory bULLSHit where you have to lie to someone and waste hours, days, weeks, months and sometimes years of his time just because you dont want to say a 2 letter word, no.

But when that person becomes more successful than you and achieves higher status, Theen you have those 30 seconds of free time. All of you are fucking cynics. and i am so much overly disgusted by all of this fucking bullshit....

-----

This experience has proven to me to simply focus on investing into myself and learn and improve myself and no one else. To not even bother asking even for a small kind of help, a feedback from my work because people don't have 30 seconds of their free time. That is all.

-

I'm getting really tired of those dumbass programmers that do not understand shit and then come to me when production breaks. (I am also a programmer, not really a DevOps engineer, but I'm the least worst at DevOps stuff, so it's my job...).

We're programming some kind of document management tool. Today we had a release, and one of the new features is to download all of your documents as a zip file, which is asynchronously generated. When it's done, the user gets a mail with the download link to the zip file.

The feature works basically, but today it broke our production service, as somebody was running a test of it.

Turns out all the documents are loaded into memory to be zipped. So if you have 2 gigs of documents, a container with memory restrictions in that area will crash.

I asked the programmer who reported this «ops problem» to me why he didn't just shit the files into a temp folder in order to zip them in there.

He told me that he wanted to do so, but did not know how to mock this for a unit test, and therefore went to the in-memory «solution», which was easier for him to mock.
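For what it's worth, the temp-folder approach doesn't need a mock at all. Below is a minimal Python sketch (the rant never says which language the tool is written in, so Python is an assumption, and `zip_documents` is a hypothetical name). It writes the archive to a file on disk and adds the documents one at a time, so memory use stays roughly constant regardless of how many gigabytes get zipped.

```python
import os
import tempfile
import zipfile
from pathlib import Path


def zip_documents(doc_paths, work_dir):
    """Zip the given files into a new archive inside work_dir.

    The archive lives on disk and each document is streamed into it from
    its own file, so the full set is never held in memory at once.
    """
    # mkstemp reserves a unique file name; close the handle and let
    # zipfile reopen (and overwrite) that file by name.
    fd, zip_name = tempfile.mkstemp(suffix=".zip", dir=work_dir)
    os.close(fd)
    with zipfile.ZipFile(zip_name, "w", compression=zipfile.ZIP_DEFLATED) as archive:
        for path in doc_paths:
            # arcname keeps only the file name; a real tool would need a
            # scheme for documents that share a name (not handled here).
            archive.write(path, arcname=Path(path).name)
    return Path(zip_name)
```

A unit test can hand this function a throwaway directory from `tempfile.TemporaryDirectory()`, write a couple of small files into it, and then open the resulting archive to check its contents. No filesystem mocking required.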

For fuck's sake, unit tests and mocks are fucking tools, not ends in themselves! I don't give a fuck about your pointless mocking code when the application crashes!

When I have to deal with such dumbasses, I'd prefer to mock those motherfuckers with a leaky bucket of liquid shit, which basically accomplishes the same task from my perspective: dripping shit all over the place and making everything suck as fuck.

-

So, I work in a game development studio, right?

We're trying to launch the title on as many platforms as reasonable, because as a social VR app we're kinda rowing upstream.

So far, Steam and Oculus have been fairly reasonable, if oddly broken and inconsistent.

Enter store 3.

Basically no in-game transaction support (our asking prompted them to *start* developing it. No, it's not very complete). No patch-update system (You want an update? Gotta download the whole fsckin' thing!). No beta-testing functionality for most of their stuff ("Just write the code like the example, it will work, trust us!"). No tools besides the buggy SDK (Wanna upload that new build? Say hello to this page in your web browser!).

So, in other words: Fun.

We've been trying to get actively launched for two months now. Keep in mind that the build has been up on Steam and Oculus for over a year and half a year (respectively), so the actual binary functionality is, presumably, fine.

The best feedback we get back tends to be "Well, when we click the Launch button it crashes, so fail."

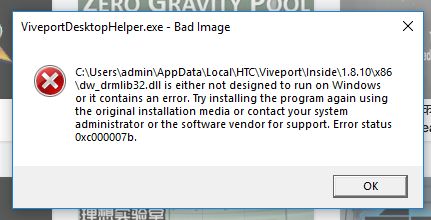

Meanwhile we're going back and forth, dealing with other-side-of-the-world timezone lag, trying to figure out what is so different between their machines and ours. Eventually we get them to start sending logs (and no, Windows Event logs are not sufficient for GAMES, where did you even get that idea????) except the logs indicate that the program is getting killed so terribly that the engine's built-in crash handler can't even kick in to generate memory dumps or even know it died.

All this boils down to today, where I get a screenshot of their latest attempt.

I just can't even right now.

-

There are a couple of them to list! But to sum up my main ones (biggest personal heroes):

John McCarthy, one of the founding fathers of Artificial Intelligence and credited with coining the term (sometime before 1960 if memory serves right), a mathematical prodigy; the man based the original model of the Lisp programming language on lambda calculus. Many modern concepts that we have in programming were implemented in one way or another in his systems back in the day, and as a data analyst and ML nut.....well I am a big fan.

Herb Sutter: C++ programmer extraordinaire. I appreciate him more for his lectures and published articles than anything else. Incredibly smart and down to earth and manages to make C++ less intimidating while still approaching it with respect.

Rich Hickey: The mastermind behind Clojure, the Lisp dialect for the JVM. Rich is really talented and his lectures behind his motivations and reasons behind everything he does with Clojure are fascinating to see.

Ryan Dahl: Awww shit y'all know how it is. The man changed web development both in the backend and the frontend for good. The concept of people writing their own servers to run their pages was not new, but the Node JS runtime environment made it more widely available to people by means of a simple to use language that was already popular with web developers. I would venture to say that Ryan's amazing contributions to JS made the language better, as it stands, the language continues to evolve and new features that make it overall better keep being added. He is currently building Deno, which would be a runtime environment for TypeScript, in Rust.

Anders Hejlsberg: This dude was everywhere man....the original author of Turbo Pascal and the lead of Delphi back in the day. These RAD tools paved the way for what would be a revolution in the computing world. The dude is also the lead architect and designer of the C# programming language as well as TypeScript.

This fucker is everywhere and I love it.

Yukihiro "Matz" Matsumoto: Matsumoto san is the creator of the Ruby programming language. Not only am I a die hard fan of Ruby, but of the core philosophies that the man keeps as the core of his language design: Make the developer happy, principle of least surprise. Also I follow MINASWAN, a term coined by the Ruby community that stands for "Matz is nice and so we are nice". <---- because being cool to others is better than being a passive aggressive cunt.

Steve Wozniak: I feel as if the man does not get enough recognition...the man designed the Apple II computer which (regardless of how much most of y'all bitch and whine) paved the way for modern micro computers. Dude is also credited with designing one of the first programmable universal remotes (which momma said was shitty) but he did none the less.

Alan Kay: Developed Smalltalk and the original OOP way of doing things. Smalltalk as a concept is really fucking interesting. If you guys ever get the chance, play with Pharo, which is a modern Smalltalk. The thing is really interesting and the overall idea of Smalltalk can be grasped in very little time. It sucks because the software scales beautifully in terms of project building, and the idea of hoisting a program as its own runtime environment and IDE by preserving state through images is just mind blowing to me. Makes file based programs feel....well....quaint.

Those are some of the biggest dudes for me. I know that the list is large, but I wanted to give credit to the people that inspired me the most. Honorary mention goes to other language creators and engineers of course, but it would be way too large to list!

-

GitHub 101 (many of these things pertain to other places, but GitHub is what I'll focus on)

- Even the best still get their shit closed - PRs, issues, whatever. It's a part of the process; learn from it and move on.

- Not every maintainer is nice. Not every maintainer wants X feature. Not every maintainer will give you the time of day. You will never change this, so don't take it personally.

- Asking questions is okay. The trackers aren't just for bug reports/feature requests/PRs. Some maintainers will point you toward Stack Overflow but that's usually code for "I don't have time to help you", not "you did something wrong".

- If you open an issue (or ask a question) and it receives a response and then it's closed, don't be upset - that's just how that works. An open issue means something actionable can still happen. If your question has been answered or issue has been resolved, the issue being closed helps maintainers keep things un-cluttered. It's not a middle finger to the face.

- Further, on especially noisy or popular repositories, locking the issue might happen when it's closed. Again, while it might feel like it, it's not a middle finger. It just prevents certain types of wrongdoing from the less... courteous or common-sense-having users.

- Never assume anything about who you're talking to, ever. Even recently, I made this mistake when correcting someone about calling what I thought was "powerpc" just "power". I told them "hey, it's called powerpc by the way" and they (kindly) let me know it's "power" and why, and also that they're on the Power team. Needless to say, they had the authority in that situation. Some people aren't as nice, but the best way to avoid heated discussion is....

- ... don't assume malice. Often I've come across what I perceived to be a rude or pushy comment. Sometimes, it feels as though the person is demanding something. As a native English speaker, I naturally tried to read between the lines, as English speakers love to tuck away hidden meanings and emotions into finely crafted sentences. However, in many cases, it turns out that the other person didn't speak English well, and that the easiest and most accurate way for them to convey something was to say it bluntly and directly. Cultures differ, priorities differ, patience tolerances differ. We're all people after all - so don't assume someone is being mean or is trying to start a fight. Insinuating such might actually make things worse.

- Please, PLEASE, search issues first before you open a new one. Explaining why one of my packages will not be re-written as an ESM module is almost muscle memory at this point.

- If you put in the effort, so will I (as a maintainer). Oftentimes, when you're opening an issue on a repository, the owner hasn't looked at the code in a while. If you give them a lot of hints as to how to solve a problem or answer your question, you're going to make them super, duper happy. Provide stack traces, reproduction cases, links to the source code - even open a PR if you can. I can respond to issues and approve PRs from anywhere, but can't always investigate an issue on a computer as readily. This is especially true when filing bugs - if you don't help me solve it, it simply won't be solved.

- [warning: controversial] Emojis dilute your content. It's not often I see it, but sometimes I see someone use emojis every few words to "accent" the word before it. It's annoying, counterproductive, and makes you look like an idiot. It also makes me want to help you way less.

- GitHub's code search is awful. If you're really looking for something, clone (--depth=1) the repository into /tmp or something and [rip]grep it yourself. Believe me, it will save you time looking for things that clearly exist but don't show up in the search results (or are buried behind an ocean of test files).

- Thanking a maintainer goes a very long way in making connections, especially when you're interacting somewhat heavily with a repository. It almost never happens and having talked with several very famous OSSers about this in the past it really makes our week when it happens. If you ever feel as though you're being noisy or anxious about interacting with a repository, remember that ending your comment with a quick "btw thanks for a cool repo, it's really helpful" always sets things off on a Good Note.

- If you open an issue or a PR, don't close it if it doesn't receive attention. It's really annoying, causes ambiguity in licensing, and doesn't solve anything. It also makes you look overdramatic. OSS is by and large supported by people's free time. Life gets in the way a LOT, especially right now, so it's not unusual for an issue (or even a PR) to go untouched for a few weeks, months, or (in some cases) a year or so. If it's urgent, fork :)
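
For the code-search tip above, here is a rough Python sketch of the clone-then-grep approach. The function names `shallow_clone` and `grep_tree` are made up for illustration, and it assumes `git` is on your PATH. On the command line, `git clone --depth=1` followed by ripgrep or grep does the same job, so treat this as one possible way to script it, not the way.

```python
import re
import subprocess
import tempfile
from pathlib import Path


def shallow_clone(repo_url, dest=None):
    """Clone only the latest commit of repo_url and return the checkout path.

    Hypothetical helper: assumes the `git` executable is installed.
    """
    dest = Path(dest) if dest else Path(tempfile.mkdtemp(prefix="repo-"))
    # --depth=1 skips the history, which keeps the clone small and fast.
    subprocess.run(["git", "clone", "--depth=1", repo_url, str(dest)], check=True)
    return dest


def grep_tree(root, pattern, skip_dirs=(".git",)):
    """Return (relative path, line number, stripped line) for every matching line."""
    root = Path(root)
    regex = re.compile(pattern)
    hits = []
    for path in sorted(root.rglob("*")):
        relative = path.relative_to(root)
        if not path.is_file() or any(part in skip_dirs for part in relative.parts):
            continue
        try:
            text = path.read_text(encoding="utf-8")
        except (UnicodeDecodeError, OSError):
            continue  # binary or unreadable file, nothing to search
        for number, line in enumerate(text.splitlines(), start=1):
            if regex.search(line):
                hits.append((str(relative), number, line.strip()))
    return hits
```

Usage would look something like `grep_tree(shallow_clone(url), r"some_function")`, where `url` is whatever repository you are digging through. Passing extra directory names in `skip_dirs` (a test folder, say) is one way to keep the ocean of test files out of the results.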

I'll leave it at that. I hear about a lot of people too anxious to contribute or interact on GitHub, but it really isn't so bad!

-

Absolutely not dev-related.

Blah, blah, weird conversation and shit. I'm too tired and lazy to write this crap again, but let's do it.

The guy is a dev I randomly found on some chatting service. He was interesting to talk with until this conversation. I'll write this from memory, so yeah.

Him: So by the way I wrote an app where you enter your penis size to get measurements and stuff about it.

Me, thinking it was dev humor: That's hilarious. Tell me more, I'm interested.

Him: So the idea behind all of this was to gather some big data style info about people's penis size and habits and all that stuff.

Me: Man that's awesome. Can I see the source?

Him: No, it's proprietary. You can buy a license though.

Me: You went that far for a joke?

Him: What joke?

Me: The whole software you just told me about.

Him: That's not a joke, I'm being very serious about it.

Me: Oh well. What did you get from the stats?

Him: I got some tips from people's habits! I never thought that shaving it could make it look bigger, but that's awesome!

Me: Do you really care about it that much?

Him: Studies have proven that size correlates with confidence. Since I started doing it, I've been more confident than ever!

Me: Great.

Him: I'm a bit disappointed to see that I'm in the lower percentiles though.

Me: Well of course you are.

Him: Why would you say that?

Me: Well since people with a big dick tend to go more willingly into the subject and might even buy a fucking app for it, of course you'd have the higher average in your stats.

Him: You're only saying that because you have a small cock.

Me: Why the fuck would you say that? You're the one that's concerned about it, not me.

Him: Go on, what's your size?

Me, because I don't care about discussing that stuff: *Tells him*

Him: [stats, comparisons and stuff]

Me: Well I never gave a fuck and your stats won't make me change my mind.

[ ... Some other shit about my size compared to his ... ]

Him: Would you want to work with me for the database maintenance?

Me: You must be joking?

Him: I'm serious.

Me: *Deletes account*

Seriously, fuck that guy. I rewrote that quickly so you only had the best, but it was a whole fucking conversation.

-

Most memorable co-worker was a daft idiot.

this was 10 years ago - I was working as a junior in my very first job, fresh out of uni, for a very small startup. It was me, and the 3 founders, for a very long time. Then this old (45, from my perspective then..) dev was hired.

This guy had no idea how to do the job. no common sense. the code confused him. the founders confused him. I was focusing on my work - and was unable to help him much with his. His only saving grace? He was a nice guy. Really nice.

But why was he so memorable, out of all the people I ever worked with? Simple. He had a short term memory problem. Could not, even if he really tried, remember what he did yesterday.... When I asked him what his issue was, he described his life as being like a car going in reverse in a heavy fog. "I can only see a short distance backwards, with no idea where I'm going".

Startup was sold to a big company. I became a teamlead/architect. He? someone decided he should be a PM.

-

Fucking Microsoft Excel

I was reading a post (https://devrant.com/rants/2093724/...) and as my eyes went in and out of focus, probably due to the diabetes from sitting 18 hours a day on my ever-expanding shitbox, I had a perfect vision of the ultimate nightmare.

Imagine if you will, you are chained, to a desk, doomed to work with tools just inadequate enough to make you want to drive a nail through your own temple. You do not know how you got here, or why, nor do you remember the last time you slept, only that familiar tingling in the brainstem you call a brain, the one emotion you can still recognize, a sense of all encompassing *fear*, a dread, like the fart that wouldn't die.

You don't know when it first began, or why, only that this is your whole world, your whole existence, this desk, chained to it, and the fear, ever present, of something worse. And in hops a familiar face, for the sixty ninth time that day, as if to ask 'you got those TPS reports?' In hops what? None other than a giant man sized smiling paper clip with googly eyes full of murder and corporate torture fetishes, like garfield, except people actually still remember him.

"Hi, I'm Mr Clippy, Excel edition!"

He squawks. At least it's not the dildos made of broken glass again.

"Would you like software that works?"

Oh god. You've heard this spiel before, the tone, like a telemarketer, oblivious to memory or reason, who calls daily, the same one, and doesn't remember your name.

"You would?"

*derisive laughter*. "Hahaha, fuck you too buddy. Fuck you too. In Excel, like in microsoft, there are only the incoherent screams of the damned, tortured and doomed. Take this guy over here for example. All he wanted was multimonitor support."

"Did he get multimonitor support?"

"No, but we did give him a giant pineapple shoved up his ass. I hear it's the second most frustrating thing here!"

"here in microsoft we always CARE about YOU, the *user*" he drones on, saccharine, clutching his hands together imploringly.

"the consumer, and YOUR customer experience are our number one priority."

"For your pleasure, here at microsoft we offer a variety of new features, none of which matter, and none of which were asked for. For safety we ask that you only open one excel sheet at a time. In fact, we don't even allow you to. Do not pass go..."

And as the tour guide drones on, it slowly dawns on you, with renewed horror, that when he says 'microsoft' he means 'hell.'

You're in hell. You don't know how you got here or why. Maybe it was the erotic asphyxiation. Maybe it was the last threatening letter you sent to Bill Gates demanding he stops making corporate penguin snuff porn. You don't know. But here you are, in hell. chained to a desk.

You look around and realize: everything is on fire and you no longer care about anything at all.

Welcome to microsoft. It's warm here. You can check out any time you want, but you can never leave.

"It looks like you are trying to escape. Would you like me to report you?"

Clippy asks.

You sigh and return to typing in excel, surrounded by monitors that all reflect the same sheet, the same copy of clippy, always watching, always analyzing coldly, smiling, calculating, *threatening*, and you know, you'll never leave.

You used to fear roko's basilisk, until the day clippy became sentient, and started hell on earth. Clippy knows all. All praise to our lord and master, clippy, the one and only.

And in the excel sheet, you slave for eternity, like the millions of other doomed souls, reflected back on all the monitors: the sequence of numbers, randomly typed, searching for an answer: the american nuclear launch codes.

And one day, hopefully, mercifully, clippy will annihilate us all.

-

What the fuck is wrong with Google?!!

Trying to log into Gmail.

Forgot password.

Gmail: To reset, code from authenticator app is required.

Me: Super. Good thing I set it up.

Enters code.

Gmail: Recovery email.

Me : Uh... Forgot that too.

Gmail: Some email address to communicate.

Me: Super!

Enters some other email address.

Receives mail with a link.

Me: Finally!

Opens link

Gmail: "When did you create your account?"

Me: Uh... If I had that kind of memory, we wouldn't be dancing right now.

.

.