Search - "too many files"

-

!rant

This was over a year ago now, but my first PR at my current job was +6,249/-1,545,334 loc. Here is how that happened... When I joined the company and saw the code I was supposed to work on I kind of freaked out. The project was set up in the most ass-backward way with some sort of bootstrap boilerplate sample app thing with its own build process inside a subfolder of the main angular project. The angular app used all the CSS, fonts, icons, etc. from the boilerplate app and referenced the assets directly. If you needed to make changes to the CSS, fonts, icons, etc., you would need to cd into the boilerplate app directory, make the changes, run a Gulp build that compiled things there, then cd back to the main directory and run a Grunt build (that's right, both grunt and gulp) that then built the angular app and referenced the compiled assets inside the boilerplate directory. One simple CSS change would take 2 minutes to test at minimum.

I told them I needed at least a week to overhaul the app before I felt like I could do any real work. Here were the horrors I found along the way.

- All compiled (unminified) assets (both CSS and JS) were committed to git, including vendor code such as jQuery and Bootstrap.

- All bower components were committed to git (ALL their source code, documentation, etc, not just the one dist/minified JS file we referenced).

- The Grunt build was set up by someone who had no idea what they were doing. Every SINGLE file or dependency that needed to be copied to the build folder was listed one by one in a HUGE config.json file instead of using pattern matching like `assets/images/*`.

- All the example code from the boilerplate and multiple jQuery spaghetti sample apps from the boilerplate were committed to git, as well as ALL the documentation too. There was literally a `git clone` of the boilerplate repo inside a folder in the app.

- There were two separate copies of Bootstrap 3 being compiled from source. One inside the boilerplate folder and one at the angular app level. They were both included on the page, so literally every single CSS rule was overridden by the second copy of bootstrap. Oh, and because bootstrap source was included and committed and built from source, the actual bootstrap source files had been edited by developers to change styles (instead of overriding them) so there was no replacing it with an OOTB minified version.

- It is an angular app but there were multiple jQuery libraries included and relied upon for actual in-app behavior. And, beyond that, even though angular includes its own ways to do XHR requests (using $resource or $http), there were numerous places in the app where raw `XMLHttpRequest`s were intermixed with angular code.

- There was no live reloading for local development, meaning if I wanted to make one CSS change I had to stop my server, run a build, start again (about 2 minutes total). They seemed to think this was fine.

- All this monstrosity was handled by a single massive Gruntfile that was over 2000loc. When all my hacking and slashing was done, I reduced this to ~140loc.

- There were developers' (I use that term loosely) *PERSONAL AWS ACCESS KEYS* hardcoded into the source code (remember, this is a front-end app, so this was in every user's browser) in order to do file uploads. Of course when I checked in AWS, those keys had full admin access to absolutely everything in AWS.

- The entire unminified AWS JavaScript SDK was included on the page and not used or referenced (~1.5 MB).

- There was no error handling or reporting. An API error would just result in nothing happening on the front end, so the user would usually just click and click again, re-triggering the same error. There was also no error reporting software installed (NewRelic, Rollbar, etc) so we had no idea when our users encountered errors on the front end. The previous developers would literally guide users who were experiencing issues through opening their console in dev tools and have them screenshot the error and send it to them.

- I could go on and on...
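The pattern-matching point from the Grunt bullet can be shown outside of Grunt. This is only a minimal sketch in Python: the file list is hypothetical (the rant does not give the real paths), and `fnmatchcase` stands in for Grunt's own globbing rather than reproducing it.

```python
from fnmatch import fnmatchcase

# Hypothetical asset paths, invented for illustration only.
files = [
    "assets/images/logo.png",
    "assets/images/icon.svg",
    "assets/fonts/app.woff",
    "src/app.js",
]


def select(paths, pattern):
    """Return the paths that match a shell-style glob pattern."""
    return [p for p in paths if fnmatchcase(p, pattern)]


# One pattern replaces a hand-maintained entry per file.
images = select(files, "assets/images/*")
print(images)
```

New images dropped into the folder are picked up without touching the config, which is the whole argument against listing files one by one.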

This is why you hire a real front-end engineer to build your web app instead of the cheapest contractors you can find from Ukraine.

-

You know who sucks at developing APIs?

Facebook.

I mean, how do such highly paid guys with such great ideas manage to come up with APIs THAT shitty?

Let's have a look. They took MVC and invented flux. It was so complicated that there were so many overhyped articles that stated "Flux is just X", "Flux is just Y", and exactly when Redux comes to the stage, flux is forgotten. Nobody uses it anymore.

They took declarative cursors and created Relay, but again, Apollo GraphQL comes and relay just goes away. When i tried just to get started with relay, it seemed so complicated that i just closed the tab. I mean, i get the idea, it's simple yet brilliant, but the api...

Immutable.js. Shitload of fuck. Explain WHY should i mess with shit like getIn(path: Iterable<string | number>): any and class List<T> { push(value: T): this }? Clojurescript offers Om, the React wrapper that works about three times faster! How is it even possible? Clojure's immutable data structures! They're even opensourced as standalone library, Mori js, and api is great! Just use it! Why reinvent the wheel?

It seems like when i just need to develop a simple react app, i should configure webpack (huge fuckload of work by itself) to get hot reload, modern es and jsx to work, then add redux, redux-saga, redux-thunk, react-redux and immutable.js, and if i just want my simple component to communicate with state, i need to define a component, a container, fucking mapStateToProps and mapDispatchToProps, and that's all just for "hello world" to pop out. And make sure you didn't forget to type that this.handler = this.handler.bind(this) for every handler function. Or use ev closure fucked up hack that requires just a bit more webpack tweaks. We haven't even started to communicate to the server! Fuck!

I bet there is savage ass overengineer sitting there at facebook, and he of course knows everything about how good api should look, and he also has huge ass ego and he just allowed to ban everything that he doesn't like. And he just bans everything with good simple api because it "isn't flexible enough".

"React is heavier than preact because we offer isomorphic multiple rendering targets", oh, how hard want i to slap your face, you fuckface. You know what i offered your mom and she agreed?

They even created create-react-app, but state management is still up to you. And react-boilerplate is just too complicated.

When i need web app, i type "lein new re-frame", then "lein dev", and boom, live reload server started. No config. Every action is just (dispatch) away, works from any component. State subscription? (subscribe). Isolated side-effects? (reg-fx). Organize files as you want. File size? Around 30k, maybe 60 if you use some clojure libs.

If you don't care about massive market support, just use hyperapp. It's way simpler.

Dear developers, PLEASE, don't forget about the API. Take it seriously, it's very important. You may even design the API first, and only then implement the actual logic. That's even better.

And facebook, sincerely,

Fuck you.

-

As a developer in Germany, I don't understand why anything related to development like IDEs, git clients and source code documentation should be localized/translated.

Code is written in english, configuration files too. Any technology, any command name in a terminal, every name of a tool or code library, every keyword in a programming language is written in english. English is the language of every developer. And English is simply a required skill for a developer.

Yet almost everything nowadays is translated into many other languages, especially MS products. That makes development harder for me.

My visual studio menus are a mess of random german/english entries due to 3rd party extensions.

My git client, "source tree", uses weird translations of the words "push" and "commit". These commands are git features! They should not be translated!

Buttons and text labels in dev tools often cut the text off because they were designed for english and the translated text is bigger and does not fit anymore. Apparently no one is testing their software in translated mode.

And the worst of all: translated fucking exception and error messages! Good luck searching for them online.

Apple does one thing damn right. They are keeping all development related stuff English (IDE, documentation). Not wasting money on translations which no developer needs.

-

Sister = bee ( who isn't a stranger to Ubuntu)

Me = Cee

Bee: can I use your laptop?

Cee : why ? Use yours ,it works fine.

Bee : no I want to use yours and I need to work with windows.

Cee: 🤯

Bee : my work can only be done using windows.

Cee : fine do whatever ( doesn't want to argue )

* Le bee opens MS word, and starts her work *

Cee : 😤😤Seriously?

Bee : I don't like libre

Cee : 😑😑😑^∞

* Few moments later *

Bee : my work is done ,you can have your laptop,btw it's updating.

Cee : 😑😑😑😑😑

* 2000 years later *

*Opens Ubuntu *

*Getting a weird bug*

*Tried to fix *

*Can't open OS files * 👏👏👏🎆

* Windows not shutdown properly *

* Opens windows *

* Not able to login via pin *

* Password ? not accepted *

* Changes outlook password *

* Please choose a password you haven't chosen before *

* Logs in *

* types old pin to change pin *

*You've entered wrong pin too many times *

*System hanging a lot *

* Removes pin *

* Gets huge mcAfee restart system popups , every 10 sec *

* Just shutdown , feels irritated for the rest of the day*

* Regrets dual booting, should have wiped the windows partition 😫😫*

*Wonders,what the hell did my sister even do to my laptop ?*

-

Forgot FAT32 had a file count limit.
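For context, a rough sketch of the arithmetic, assuming the limit in question is FAT32's per-directory cap of 65,536 32-byte entries and that every file has a long name costing one 8.3 entry plus one extra entry per 13 characters. The function name is made up for illustration, and real drivers may allocate entries differently.

```python
import math

MAX_DIR_ENTRIES = 65_536      # assumed cap: 2 MiB directory / 32-byte entries
CHARS_PER_LFN_ENTRY = 13      # characters stored per long-file-name entry


def max_files_per_directory(name_length, is_subdirectory=True):
    """Estimate how many files with long names of this length fit in one directory."""
    lfn_entries = math.ceil(name_length / CHARS_PER_LFN_ENTRY)
    entries_per_file = 1 + lfn_entries          # 8.3 entry + long-name entries
    # Subdirectories spend two entries on "." and "..".
    usable = MAX_DIR_ENTRIES - (2 if is_subdirectory else 0)
    return usable // entries_per_file


print(max_files_per_directory(20))
```

Under these assumptions, longer file names lower the ceiling considerably, so a directory can fill up well before 65,536 files.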

Turns out Linux won't stop you from writing too many files to a FAT32 drive.

Turns out this makes FAT32 do *really weird shit.*

-

Haven’t been on here for ages, but I felt like I needed to post this:

Warning:

This is long, and it might make you cry.

Backstory:

A couple of months back I worked for a completely clueless dude who had somehow landed a contract for a new website for a huge company. After a while he realised that he was incapable of completing the assignment. He then hired me as a subcontractor and I deleted literally everything he had done and started from scratch. He had over promised and under explained what needed to be done to me. It took many sleepless nights to get this finished with all the amendments and I had to double my pricing because he kept changing the brief.

Even after doubling my prices I still put in way too many hours of work. At one point I had enough and just ghosted the guy as I had done what he asked, and when he submitted it to them they wanted changes. He couldn’t make the changes, so I had to. He wouldn’t pay me extra though. I decided it wasn’t worth my time.

A couple of days ago I heard from him again. He had found another subcontractor to finish the changes. He still needed a few things though, so he promised me that I would get paid after fixing those things. I looked at the few things he had listed in our KANBAN and thought it was a few easy tasks.. until I opened the project..

I had my computer set up to sync with his server because he wanted everything done live and in production. So I naturally thought I would just “sync down” everything that the other subcontractor had done.

Here is where the magic started to happen.. I started the sync and went to grab a glass of water, and it was still running when I came back. I looked at the log and saw a bunch of “node_module” files syncing - around 900 folders. Funny thing is; neither the site nor server has anything to do with node..

I disregarded this and downloaded the files in a more manual fashion to a new folder. Interestingly I could see that my SCSS folders had not been touched since I stopped working on the project.. interesting, I thought to myself..

Turns out, the other subcontractor had taken my rendered and minimised CSS file, prettified it and worked from there. This meant that the roughly 1500 lines of SCSS neatly organised in around 20 files had suddenly turned into a monster of a single CSS file of no less than 17300 lines.

I tried to explain to the guy that the other subcontractor had fucked up, but he said that I should be able to fix it since I was the one that made it initially. I haven’t replied. My life is too short for this.

-

I'm such an idiot!

For a while now, my machine has been kinda sluggish.

Just installed VSCode and got a little popup saying that git was tracking too many changes in my home directory. I must've run `git init` at some point and it's spent fucking forever tracking changes of >3,000 files.
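A quick way to check for this kind of stray repository is to walk upward from a directory and look for a `.git` entry. This is only a sketch; `find_enclosing_repo` is a hypothetical helper, not something git or VSCode provides.

```python
from pathlib import Path


def find_enclosing_repo(start):
    """Return the nearest directory at or above `start` that contains .git, else None."""
    current = Path(start).resolve()
    for candidate in [current, *current.parents]:
        if (candidate / ".git").exists():
            return candidate
    return None
```

If `find_enclosing_repo(Path.home())` returns the home directory itself, an accidental `git init` like the one described is the likely cause.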

`rm -rf .git/`

Quick. As. Fuck.

-

My last special snowflakes teacher story. This happened last Friday.

Background: we had to do a "little" project in less than 2 weeks (i ranted about that) and got our degree on Friday. I did a perfectly fine meal suggester, with in my opinion one of the best codes i've ever written.

After getting my degree (which is totally fine and qualifies me as IT technician/ "staatlich geprüfte Informationstechnische Assistentin") i asked him what my grade for the project was.

Me: what was my grade for the project again?

Him: i left it at 90%

Me: why exactly? You seemed to be really excited and liked it obviously. And there was no critique from you after my presentation.

Him: yadda yadda. I can't give you more. Yadda yadda be happy i didn't lower your grade.

Me: why would you lower my grade? This doesn't make sense at all. I matched all your criteria. You wanted a program everyone loved, everyone wanted to buy. There you go. I made exactly that.

Him: i can't give you a 1 (equals an A)

Me: why not?

Him: you wrote too much. I didn't want so much (he never specified a maximum). And you used too advanced code. And there were so many lists and ref - methods. The class couldn't benefit from it.

Me: Excuse me!? The only "advanced code" i used was a sqlite library. And i explained what i did with that. What do you mean by "so many lists" and ref-methods? In which methods am i using ref?

Him: I don't know, i just skimmed through the code.

Me, internal: WHAT THE FUCKING HELL!?

Me: so you are telling me, you didn't even read my code fully and think it is too advanced for the class? And because of that you give a 2 (equals a B)?

Him: yes

I just gave him a deathstare and left after that. What the hell. Yes, i used encapsulation - which is something we hadn't done much in class. But the code is still not more advanced because i use more files -.-

-

I have been gone a while. Sorry. Workplace no longer allows phones in the lab and I work exclusively in the lab. Anyway here is a thing that pissed me off:

Systems Engineer (SE) 1 : 😐 So we have this file from the customer.

Me: 😑 Neat.

SE1: 😐 It passes on our system.

Me: 😑 *see prior*

Inner Me (IM): 🙄 is it taught in systems engineer school to talk one sentence at a time? It sounds exhausting.

SE1: but when we test it on your system, it fails. And we share the same algorithms.

Me: 😮 neat.

IM: 😮neat, 😥 wait what the fuck?

Me: 😎 I will totally look into that . . .

IM: 😨 . . . Thing that is absolutely not supposed to happen.

*Le me tracking down the thing and fixing it. Total work time 30 hours*

Me: 😃 So I found the problem and fixed it. All that needs to happen is for review board to approve the issue ticket.

SE1: 😀 cool. What was the problem?

Me: 😌 simple. See, if the user kicked off a rerun of the algorithm, we took your inputs, processed them, and put them in the algorithm. However, we erroneously subtracted 1 twice, where you only subtract 1 once.

SE1: 🙂 makes sense to me, since an erroneous minus 1 only affects 0.0001% of cases.

*le into review board*

Me: 😐 . . . so in conclusion this only happens in 0.0001% of cases. It has never affected a field test and if this user had followed the user training this would never have been revealed.

SE2: 🤨 So you're saying this has been in the software for how long?

Me: 😐 6 years. Literally the lifespan of this product.

SE2: 🤨 How do you know it's not fielded?

Me: 😐 It is fielded.

SE2: 🤨 how do you know that this problem hasn't been seen in the field?

Me: 😐 it hasn't been seen in 6 years?

IM: 😡 see literally all of the goddamn words I have said this entire fucking meeting!!!

SE2: 😐 I would like to see an analysis of this to see if it is getting sent to the final files.

Me: 🙄 it is if they rerun the algorithm from our product. It's a total rerun, output included. It's just never been a problem til this one super edge case that should have been thrown out anyway.

SE2: 🤨 I would still like to have SE3 run an analysis.

Me: 🙄 k.

IM: 😡 FUUUUUUUUUCK YOOOOOU

*SE3 run analysis*

SE3: 😐 getting the same results that Me is seeing.

Me: 😒 see? I do my due diligence.

SE2: 😐 Can you run that analysis on this file again that is somehow different, plus these 5 unrelated files?

SE3: 😎 sure. What's your program's account so I can bill it?

IM: 😍 did you ever knooooow that you're my heeeerooooooo.

*SE3 runs analysis*

SE3: 😐 only the case that was broken is breaking.

SE2: 😐 Good.

IM: 🤬🤬🤬🤐 . . . 🤯WHY!?!?

Me: 😠 Why?

SE2: 😑 Because it confirms my thoughts. Me, I am inviting you to this algorithm meeting we have.

Me/IM: 😑/😡 what . . . the fuck?

*in algorithm meeting*

Me: 😑 *recaps all of the above* we subtract 1 one too many times from a number that spans from 10000 to -10000.

Software people/my boss/SE1/SE3: 🤔 makes sense.

SE2:🤨 I have slides that have an analysis of what Me just said. They will only take an hour to get through.

Me: 😑 that's cool but you need to give me your program's account number, because this has been fixed in our baseline for a week and at this point you're the only program that still cares. Actually I need the account to charge for the last couple times you interrupted me for some bullshit.

*we are let go.*

And this is how I spent 40+ useless hours against a program that is currently overrunning for no reason 🤣🤣🤣

Moral: never involve math guys in arithmetic situations. And if you ever feel like you're wasting your time, at least waste someone else's money.

-

I like memory hungry desktop applications.

I do not like sluggish desktop applications.

Allow me to explain (although, this may already be obvious to quite a few of you)

Memory usage is stigmatized quite a lot today, and for good reason. Not only is it an indication of poor optimization, but not too many years ago, memory was a much more scarce resource.

And something that started as a joke in that era is true in this era: free memory is wasted memory. You may argue, correctly, that free memory is not wasted; it is reserved for future potential tasks. However, if you have 16GB of free memory and don't have any plans to begin rendering a 3D animation anytime soon, that memory is wasted.

Linux understands this. Linux actually has three states for memory to be in: used, free, and available. Used and free memory are the usual. However, Linux automatically caches files that you use and keeps them in RAM, where that memory still counts as "available". Available memory can be claimed at any time by programs, simply dumping out whatever was previously occupying it.
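To see those numbers on a real machine, the kernel exposes them in `/proc/meminfo`. Below is a minimal sketch that parses the `MemTotal`, `MemFree` and `MemAvailable` fields; the sample values are made up, and "reclaimable" here is simply my shorthand for the gap between available and free, not an official kernel term.

```python
def parse_meminfo(text):
    """Parse /proc/meminfo-style text into a dict of integer kB values."""
    values = {}
    for line in text.splitlines():
        key, sep, rest = line.partition(":")
        if not sep:
            continue
        fields = rest.split()
        if fields and fields[0].isdigit():
            values[key.strip()] = int(fields[0])
    return values


def summarize(info):
    """Report free vs. available memory and the difference between them."""
    free = info["MemFree"]
    available = info["MemAvailable"]
    return {
        "total_kb": info["MemTotal"],
        "free_kb": free,
        "available_kb": available,
        # Mostly cache the kernel can drop when a program asks for memory.
        "reclaimable_kb": available - free,
    }


# Invented sample; on Linux, read the real data with open("/proc/meminfo").read().
SAMPLE = """MemTotal:       16384000 kB
MemFree:         1024000 kB
MemAvailable:    9216000 kB
Cached:          7800000 kB
"""

print(summarize(parse_meminfo(SAMPLE)))
```

In this made-up sample only about 1 GB is strictly free, yet roughly 9 GB could be handed to a program on request, which is the distinction the paragraph above is making.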

And as you well know, RAM is much faster than even an SSD. Programs which are memory heavy COULD (< important) be holding things in memory rather than having them sit on the HDD, waiting to be slowly retrieved. I would much rather have a web browser take up 4 GB of RAM than sit around waiting for it to read the cached image off my hard drive.

Now, allow me to reiterate: unoptimized programs still piss me off. There's no need for that electron-based webcam image capture app to take three gigs of memory upon launch. But I love it when programs use the hardware I spent money on to run smoother.

Don't hate a program simply because it's at the top of task manager.

-

Paranoid Developers - It's a long one

Backstory: I was a freelance web developer when I managed to land a place on a cyber security program with who I consider to be the world leaders in the field (details deliberately withheld; who's paranoid now?). Other than the basic security practices of web dev, my experience with Cyber was limited to the OU introduction course, so I was wholly unprepared for the level of, occasionally hysterical, paranoia that my fellow cohort seemed to perpetually live in. The following is a collection of stories from several of these people, because if I only wrote about one they would accuse me of providing too much data allowing an attacker to aggregate and steal their identity. They do use devrant so if you're reading this, know that I love you and that something is wrong with you.

That time when...

He wrote a social media network with end-to-end encryption before it was cool.

He wrote custom 64kb encryption for his academic HDD.

He removed the 3 HDD from his desktop and stored them in a safe, whenever he left the house.

He set up a pfsense virtualbox with a firewall policy to block the port the student monitoring software used (effectively rendering it useless and definitely in breach of the IT policy).

He used only hashes of passwords as passwords (which isn't actually good).

He kept a drill on the desk ready to destroy his HDD at a moment's notice.

He started developing a device to drill through his HDD when he pushed a button. May or may not have finished it.

He set up a new email account for each individual online service.

He hosted a website from his own home server so he didn't have to host the files elsewhere (which is just awful for home network security).

He unplugged the home router and began scanning his devices and manually searching through the process list when his music stopped playing on the laptop several times (turns out he had a wobbly spacebar and the shaking washing machine provided enough jittering for a button press).

He brought his own privacy screen to work (remember, this is a security place, with like background checks and all sorts).

He gave his C programming coursework (a simple messaging program) 2048 bit encryption, which was not required.

He wrote a custom encryption for his other C programming coursework as well as writing out the enigma encryption because there was no library, again not required.

He bought a burner phone to visit the capital city.

He bought a burner phone whenever he left his hometown come to think of it.

He bought a smartphone online, wiped it and installed new firmware (it was Chinese; I'm not saying anything about the Chinese, you're the one thinking it).

He bought a smartphone and installed Kali Linux NetHunter so he could test WiFi networks he connected to before using them on his personal device.

(You might be noticing it's all he's. Maybe it is, maybe it isn't).

He ate a sim card.

He brought a balaclava to pentesting training (it was pretty meme).

He printed out his source code as a manual read-only method.

He made a rule on his academic email to block incoming mail from the academic body (to be fair this is a good spam policy).

He withdraws money from a different cashpoint every time to avoid patterns in his behaviour (the irony).

He reported someone for hacking the centre's network when they built their own website for practice using XAMPP.

I'm going to stop there. I could tell you so many more stories about these guys, some about them being paranoid and some about the stupid antics Cyber Security and Information Assurance students get up to. Well done for making it this far. Hope you enjoyed it.

-

“Don’t learn multiple languages at the same time”

Ignored that. Suddenly I understood why he said it: I mixed up both languages. During the holidays I went over it again and it was OK.

Sometimes mistakes can lead to good things. After relearning I understood it much better.

“Don’t learn things by heart” was another one. Because that’s useless. If you want to learn a language, try to understand it.

I fully agree with that. I started that way too, learning what x did, what y did, ... But after a while I found out this was useless. Since then, I only have problems with Git.

Another one. When Swift was released, my code was written in Obj-C, but I wanted to adopt Swift. This was in my first year of iOS development, if I can even call it development. I used these things called “Converters”. But 3/4 of the output was wrong and caused bugs. But the Issues in Swift could handle that for me. After some time someone told me “Stop doing that. Try to write it yourself.”

One of the last ones: “Try to contribute to open source software instead of creating your own version of it. You wouldn’t reinvent the wheel, right? This could also be useful for other users.”

Next: “If something doesn’t work the first time, don’t give up. Create backups.” I had given up multiple times and simply deleted the source files. Once I had a problem where no iOS project worked. I didn’t find out why and was about to wipe my Mac. It was because of Apple’s WWDR certificate. Since then I started using Git. Git is a new way of living.

Reaching the end: “We are developers. Not designers. We can’t do both. If a client asks for another design because they don’t like the current one, tell them to hire one.” Reminds me of one of my previous rants about the PDF “design”.

Last one: “Clients suck. They will always complain. They need a new function. They don’t need that... And after that they won’t pay ya for that. Because they think it’s no work.”

Sorry, forgot this one: “Always add backdoors. Many times clients won’t pay and resell it or reuse it. With backdoors you can prevent that.”

I think these are all the things they said to me that I loved. Probably forgot some.

-

Alright, so my previous rant got a way better response than I expected! (https://devrant.io/rants/832897)

Hereby the first project that I cannot seem to get started on too badly :/.

DISCLAIMER: I AM NOT PROMOTING PIRACY, I JUST CAN'T FIND A SUITABLE SERVICE WHICH HAS ALL THE MUSIC I WANT. I REGULARLY BUY ALBUMS. before everyone starts to go batshit crazy regarding piracy, this is legal in The Netherlands for personal use. I think that supporting the artists you love is very good and I actually regularly pay for albums and so on but:

- I want all the music from about every artist in my scene. Either on Deezer or on Spotify this is not available and I'm not gonna get them both (they both have about half of the music I want). Their services are awesome but I'm not going to pay for something if I can't listen to all the music I like, hell even some artists (on deezer mostly) only have half their music on there and it's mostly not better on Spotify.

- I'd happily buy all albums because I love supporting the artists I love but buying everything is just way too fucking much."Get a premium music streaming subscription!" - see the first point.

You can either agree or disagree with me but that's not what this rant is about so here we go:

The idea is to create a commandline program (basically only needs to be called by a cron job every day or so) which will check your favourite youtube (sorry, haven't found a suitable non-google youtube replacement yet) channels every day through a cronjob and look for new uploads. If there are, it will download them, convert them to MP3 or whatever music format you'd like and place them in the right folder. Example with a favourite artist of mine:

1. Script checks if there are any new uploads from Gearbox Digital (underground raw hardstyle label).

2. Script detects two new uploads.

3. Script downloads the files (I managed to get that done through the (linux only or also mac?) youtube-dl software) and converts them to mp3 in my case (through FFMPEG maybe?).

4. Script copies them to the music library folder but then the specific sub-folder for Gearbox Digital in this case.
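The four steps above could be sketched roughly like this. It only builds the youtube-dl command line; the channel URL, folder layout and archive file name are placeholders I made up, and audio extraction assumes ffmpeg (or avconv) is installed alongside youtube-dl.

```python
from pathlib import Path


def build_download_command(channel_url, library_root, label, archive_file):
    """Build a youtube-dl command that fetches new uploads as MP3 into a per-label folder."""
    target = Path(library_root) / label
    return [
        "youtube-dl",
        "--extract-audio",                        # keep only the audio track
        "--audio-format", "mp3",                  # conversion is delegated to ffmpeg/avconv
        "--download-archive", str(archive_file),  # remembers IDs so old uploads are skipped
        "--output", str(target / "%(title)s.%(ext)s"),
        channel_url,
    ]


# Placeholder values for illustration only.
command = build_download_command(
    "https://www.youtube.com/channel/CHANNEL_ID",
    "music",
    "Gearbox Digital",
    "downloaded.txt",
)
print(" ".join(command))
```

A daily cron job could loop over a list of channels and hand each command to `subprocess.run`. The `--download-archive` file covers the "check for new uploads" step, since anything already listed there is skipped.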

You should be able to put as many channels in there as you want, I've tried this with the official YouTube Data API which worked pretty fine tbh (the data gathering through that API). The ideal case would be to work without API as youtube-dl and youtube-dlg do. This is just too complicated for me :).

So, thoughts?

-

Ten years ago, a WordPress site got hacked through SQL injection. The attackers had access to the site, modified many PHP files and installed commands to download random malware from the internet.

At first I didn't know that it had been hacked and I was just trying to remove any new file from the server. That was happening every 1-2 days for a week.

Then I decided to compare every WordPress file with the official one. It was too many files, and I did it manually, Notepad side by side with Notepad!! :/

Then I found over 50 files infected with the malware code.
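That comparison does not have to be done by hand. A minimal sketch, assuming you have a clean copy of the same WordPress version unpacked next to the site; the function names are mine, not part of WordPress.

```python
import hashlib
from pathlib import Path


def file_digest(path):
    """Return the SHA-256 hex digest of a file's contents."""
    return hashlib.sha256(Path(path).read_bytes()).hexdigest()


def diff_against_reference(site_root, reference_root):
    """List files that differ from, or do not exist in, the clean reference tree."""
    site_root, reference_root = Path(site_root), Path(reference_root)
    modified, unexpected = [], []
    for path in sorted(site_root.rglob("*")):
        if not path.is_file():
            continue
        relative = path.relative_to(site_root)
        original = reference_root / relative
        if not original.is_file():
            unexpected.append(relative.as_posix())   # not shipped with the official release
        elif file_digest(path) != file_digest(original):
            modified.append(relative.as_posix())     # shipped, but contents changed
    return modified, unexpected
```

Themes, plugins, uploads and `wp-config.php` will legitimately show up as unexpected, so the output is a list of things to review rather than a verdict.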

Cleaned and finished my job.

No one else knows how much hard work I did.

-

I’m adding some fucking commas.

It should be trivial, right?

They’re fucking commas. Displayed on a fucking webpage. So fucking hard.

What the fuck is this even? Specifically, what fucking looney morons can write something so fucking complicated it requires following the code path through ten fucking files to see where something gets fucking defined!?

There are seriously so fucking many layers of abstraction that I can’t even tell where the bloody fucking amount transforms from a currency into a string. I’m digging so deep in the codebase now that any change here will break countless other areas. There’s no excuse for this shit.

I have two options:

A) I convert the resulting magically conjured string into a currency again (and of course lose the actual currency, e.g. usd, peso, etc.), or

B) Refactor the code to actually pass around the currency like it’s fucking intended to be, and convert to a string only when displaying. Like it’s fucking intended to be.
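For what it's worth, option (B) boils down to something like the sketch below. `Money` and `display` are hypothetical names for illustration, not anything from this codebase, and the display format is just an example.

```python
from dataclasses import dataclass
from decimal import Decimal


@dataclass(frozen=True)
class Money:
    """An amount that always travels together with its currency code."""
    amount: Decimal
    currency: str  # e.g. "USD"

    def __add__(self, other):
        if other.currency != self.currency:
            raise ValueError("cannot add amounts in different currencies")
        return Money(self.amount + other.amount, self.currency)


def display(money):
    """Convert to a string only at the edge, adding the thousands separators there."""
    return f"{money.amount:,.2f} {money.currency}"


total = Money(Decimal("1234567.5"), "USD") + Money(Decimal("0.25"), "USD")
print(display(total))
```

Everything upstream keeps working with `Money`, so the commas become a one-line change in `display` instead of a string that has to be parsed back into a number.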

Impossible decision here.

If I pick (A) I get yelled at because it’s bloody wrong. “it’s already for display” they’ll say. Except it isn’t. And on top of that, the “legendary” devs who wrote this monstrosity just assumed the currency will always be in USD. If I’m the last person to touch this, I take the blame. Doesn’t matter that “legendary Mr. Apple dev” wrote it this way. (How do I know? It’s not the first time this shit has happened.) So invariably it’ll be up to me to fix anyway.

But if I pick (B) and fix it now, I’ll get yelled at for refactoring their wonderful code, for making this into too big of a problem (again), and for taking on something that’s “just too much for me.” Assholes. My après Taco Bell bathroom experiences look and smell better than this codebase. But seriously, only those two “legendary” devs get to do any real refactoring or make any architecture decisions — despite many of them being horribly flawed. No one else is even close to qualified… and “qualified” apparently means circle jerking it in Silicon Valley with the other better-than-everyone snobs, bragging about themselves and about one another. MojoJojo. “It was terrible, but it fucking worked! It fucking worked!” And “I can’t believe <blah> wanted to fix that thing. No way, this is a piece of history!” Go fuck yourselves.

So sorry I don’t fit in your stupid club.

Oh, and as a pointed, close-at-hand example of their wonderful code? This API call I’m adding commas to (it’s only used by the frontend) uses a json instance variable to store the total, errors, displayed versions of fees/charges (yes they differ because of course they do), etc. … except that variable isn’t even defined anywhere in the class. It’s defined three. fucking. abstraction. layers. in. THREE! AND. That wonderful piece of smelly garbage they’re so proud of can situationally modify all of the other related instance variables like the various charges and fees, so I can’t just keep the original currency around, or even expect the types to remain the same. It’s global variable hell all over again.

Such fucking wonderful code.

I fucking hate this codebase and I hate this fucking company. And I fucking. hate. them.

As a developer, I constantly feel like I'm lagging behind.

Long rant incoming.

Whenever I join a new company or team, I always feel like I'm the worst developer there. No matter how much studying I do, it never seems to be enough.

Feeling inadequate is nothing new for me, I've been struggling with a severe inferiority complex for most of my life. But starting a career as a developer launched that shit into overdrive.

About 10 years ago, I started my college education as a developer. At first things were fine, I felt equal to my peers. It lasted about a day or two, until I saw a guy working on a website in notepad. Nothing too special of course, but back then as a guy whose scripting experience did not go much farther than modifying some .ini files, it blew my mind. It went downhill from there.

What followed were several stressful, yet strangely enjoyable, years in college where I constantly felt like I was lagging behind, even though my grades were acceptable. On top of college stress, I had a number of setbacks, including the fallout of divorcing parents, childhood pets, family and friends dying, little to no money coming in and my mother being in a coma for a few weeks. She's fine now, thankfully.

Through hard work, a bit of luck, and a girlfriend who helped me to study, I managed to graduate college in 2012 and found a starter job as an Asp.Net developer.

My knowledge on the topic was limited, but it was a good learning experience, I had a good mentor and some great colleagues. To teach myself, I launched a programming tutorial channel. All in all, life was good. I had a steady income, a relationship that was already going for a few years, some good friends and I was learning a lot.

Then, 3 months in, I got diagnosed with cancer.

This ruined pretty much everything I had built up so far. I spent the next 6 months in a hospital, going through very rough chemo.

When I got back to working again, my previous Asp.Net position had been (understandably) given to another colleague. While I was grateful to the company that I could come back after such a long absence, the only position available was that of a junior database manager. Not something I studied for and not something I wanted to do each day either.

Because I was grateful for the company's support, I kept working there for another 12 - 18 months. It didn't go well. The number of times I was able to do C# jobs can be counted on both hands, while new hires got the assignments I regularly begged my PM for.

On top of that, the stress and anxiety that going through cancer brings comes AFTER the treatment. During the treatment, the only important things were surviving and spending my potentially last days as best as I could. Those months working were spent mostly living in fear and having to come to terms with the fact that my own body tried to kill me. It caused me severe anger issues which in time cost me my relationship and some friendships.

Keeping up to date was hard in these times. I was not honing my developer skills and studying was not something I'd regularly do. 'Why spend all this time working if tomorrow the cancer might come back?'

After much soul-searching, I quit that job and pursued a career in consultancy. At first things went well. There was not a lot to do so I could do a lot of self-study. A month went by like that. Then another. Then about 4 months into the new job, still no work was there to be done. My motivation quickly dwindled.

To recuperate the costs, the company had me do shit jobs which had little to nothing to do with coding like creating labels or writing blogs. Zero coding experience required. Although I was getting a lot of self-study done, my amount of field experience remained pretty much zip.

My prayers asking for work must have been heard because suddenly the sales department started finding clients for me. Unfortunately, as salespeople do, they looked only at my theoretical years of experience, most of which were spent in a hospital or not doing .Net related tasks.

Ka-ching. Here's a developer with four years of experience. Have fun.

Those jobs never went well. My lack of experience was always an issue, no matter how many times I told the salespeople not to exaggerate my experience. In the end, I ended up resigning there too.

After all the issues a consultancy job brings, I went out to find a job I actually wanted to do. I found a .Net job in an area with little traffic. I even warned them during my intake that my experience was limited, and I did my very best every day that I worked here.

It didn't help. I still feel like the worst developer on the team, even superseded by someone who took photography in college. Now on Monday, they want me to come in earlier for a talk.

Should I just quit being a developer? I really want to make this work, but it seems like every turn I take, every choice I make, stuff just won't improve. Any suggestions on how I can get out of this psychological hell?

Many of you who have a Windows computer may be familiar with robocopy, xcopy, or move.

These functions? Programs? Whatever they may be, were interesting to me because they were the first things that got me really into batch scripting in the first place.

What was really interesting to me was how I could run multiples of these scripts at a time.

<storytime>

It was a warm spring day in the year of 2007, and my Science teacher at the time needed a way to get files from the school computer to the hard-drive faster. The amount of time that the computer was suggesting was 2 hours. Far too long for her. I told her I’d build her something that could work faster than that. And so started the program that would take up more of my time than the AI I had created back in 2009.

</storytime>

This program would scan the entirety of the computer's file system, and create an xcopy batch file for each of these directories. After parsing these files, it would then run all the batch files at once. Multithreading as it were? Looking back on it, the throughput probably wasn't any better than the default copying program Windows already had, but the amount of time that it took was less. Instead of 2 hours to finish the task it took 45 minutes. My justification for this program was: instead of giving all the paperwork to one man, split the paperwork among many men. So, while a large file is being copied, many smaller files could be copied during that time.
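The "split the paperwork among many men" idea can be sketched in a few lines. This is not the original batch program: it is a Python stand-in that swaps the generated xcopy batch files for a thread pool, and the function name `copy_tree_parallel` and the `workers` parameter are made up for illustration.

```python
import shutil
from concurrent.futures import ThreadPoolExecutor
from pathlib import Path


def copy_tree_parallel(src: Path, dst: Path, workers: int = 8) -> int:
    """Copy every file under src into dst, several files at a time."""
    files = [p for p in src.rglob("*") if p.is_file()]

    def copy_one(path: Path) -> None:
        # Recreate the same relative path under the destination.
        target = dst / path.relative_to(src)
        target.parent.mkdir(parents=True, exist_ok=True)
        shutil.copy2(path, target)  # copy2 also keeps timestamps

    with ThreadPoolExecutor(max_workers=workers) as pool:
        # list() waits for all copies and re-raises the first error, if any.
        list(pool.map(copy_one, files))
    return len(files)
```

Whether this beats a plain sequential copy depends on the disks involved; as the rant itself says, the throughput may not improve even when the wall-clock time does.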

After that day I really couldn't keep my hands off this program. As my knowledge of programming increased, so did my likelihood of editing a piece of the code in this program.

The sheer number of updates that this program has gone through is amazing. At version 6.25 it now sits as a standalone batch file. It used to consist of 6 files and however many xcopy batch files that it created for the file migration, now it's just 1 file and dirt simple to run, (well front-end, anyways, the back-end is a masterpiece of weirdness, honestly) it automates adding all the necessary directories and files. Oh, and the name is Latin for Imitate, figured it's a reasonable name for a copying program.

I was 14, so my creativity lacked in the naming department >_<


I don't know if I'm being pranked or not, but I work with my boss and he has the strangest way of doing things.

- Only use PHP

- Keep error_reporting off (for development), Site cannot function if they are on.

- 20,000 lines of functions in a single file, 50% of which was unused, mostly repeated code that could have been reduced massively.

- Zero Code Comments

- Inconsistent variable names, function names, file names -- I was literally project searching for months to find things.

- There is nothing close to a normalized SQL Database, column ID names can't even stay consistent.

- Every query is done with a mysqli wrapper to use legacy mysql functions.

- Most used function is to escape strings

- Type-hinting is too strict for the code.

- Most files packed with Inline CSS, JavaScript and PHP - we don't want to use an external file otherwise we'd have to open two of them.

- Do not use a package manager (Composer) because he doesn't have it installed.. Though I told him it's easy on any platform and I'll explain it.

- He downloads a few composer packages he likes and drags/drops them into a random folder.

- Uses $_GET to set values and pass them around like a message container.

- One file is 6000 lines which is a giant if statement with somewhere close to 7 levels deep of recursion.

- Never removes his old code that bloats things.

- Has functions from a decade ago he would like to save to use some day. Just regular, plain old, PHP functions.

- Always wants to build things from scratch, and re-using a lot of his code that is honestly a weird way of doing almost everything.

- Using CodeIntel, Mess Detectors, Error Detectors is not good or useful.

- Would not deploy to production through any tool I setup, though I was told to. Instead he wrote bash scripts that still make me nervous.

- Often tells me to make something modern/great (reinventing a wheel) and then ends up saying, "I think I'd do it this way... Refers to his code 5 years ago".

- Using isset() breaks things.

- Tens of thousands of undefined variables exist because arrays are created like $this[][][] = 5;

- Understanding the naming of functions required me to write several documents.

- I had to use #region tags to find places in the code quicker since a router was about 2000 lines of if else statements.

- I used Todo Bookmark extensions in VSCode to mark and flag everything that's a bug.

- Gets upset if I add anything to .gitignore; I tried to tell him it ignores files we don't want, he thought it deleted them for a while.

- He would rather explain every line of code in a mammoth project that follows no human known patterns, includes files that overwrite global scope variables and wants to have me do the documentation.

- Open to ideas but when I bring them up such as - This is what most standards suggest, here's a literal example of exactly what you want but easier - He will passively decide against it and end up working on tedious things not very necessary for project release dates.

- On another project I try to write code but he wants to go over every single nook and cranny and stay on the phone the entire day as I watch his screen and I'm trying to code.

I would like us all to do well but I do not consider him a programmer but a script-whippersnapper. I find myself trying to debate the most basic of things (you shouldn't 777 every file), and I need all kinds of evidence before he will do something about it. We need "security" and all kinds of buzz words but I'm scared to death of this code. After several months it's a nice place to work but I am convinced I'm being pranked or my boss has very little idea what he's doing. I've worked in a lot of disasters but nothing like this.

We are building an API, I could use something open source to help with anything from validations, routing, ACL but he ends up reinventing the wheel. I have never worked so slow, hindered and baffled at how I am supposed to build anything - nothing is stable, tested, and rarely logical. I suggested many things but he would rather have small talk and reason his way into using things he made.

I could have this project 50% done in a Node API in two weeks, pretty fast in a PHP or Python one, but we for reasons I have no idea would rather go slow and literally "build a framework". Two knuckleheads are going to build a PHP REST framework and compete with tested, tried and true open source tools by tens of millions?

I just wanted to rant because this drives me crazy. I have so much stress my neck and shoulder seems like a nerve is pinched. I don't understand what any of this means. I've never met someone who was wrong about so many things but believed they were right. I just don't know what to say so often on call I just say, 'uhh..'. It's like nothing anyone or any authority says matters, I don't know why he asks anything he's going to do things one way, a hard way, only that he can decipher. He's an owner, he's not worried about job security.

@JoshBent and @nikola1402 requested a tutorial for installing i3wm in a windows subsystem for linux. Here it is. I have to say though, I'm no expert in windows nor linux, and all I'm going to put here is the result of duckduck searches, reddit and documentation. As you will see, it isn't very difficult.

First things first: Install WSL. It's easy and there's a ton of good tutorials on this. I think I used this one: https://msdn.microsoft.com/en-us/...

Once you got it installed, I guess it would be better to run "sudo apt-get update" to make sure we don't encounter many problems.

Install a windows X server: X is what handles the graphical interface in linux, and it works with the client/server paradigm. So what we'll do with this is provide the linux client we want to use (in this case i3wm) with an X server for it on windows. I guess any X server will do the work, but I highly recommend vcXsrv. You can download it here:

https://sourceforge.net/projects/...

for i3 just "sudo apt-get install i3"

Configurations to make stuff work:

open your ~/.bashrc file ("nano ~/.bashrc" vim is cool too). You'll have to add the following lines to the end of it:

"""

export DISPLAY=:0.0 #This display variable points to the windows X server for our linux clients to use it.

export XDG_RUNTIME_DIR=$HOME/xdg #This is a temporary directory X will use

export RUNLEVEL=3

sudo mkdir -p /var/run/dbus #part of the dbus fix (-p avoids an error when the directory already exists)

sudo dbus-daemon --config-file=/usr/share/dbus-1/system.conf #part of the dbus fix

"""

Ok so after this we'll have a functional x client/server configuration. You'll just have to install your desktop environment of choice. I only installed i3wm, but I've seen unity and xfce working on the WSL too. There are still some files that X will miss though.

*** Here we'll add some files X would miss:

With "nano ~/.xinitrc" edit the xinitrc to your liking. I only added this:

"""

#!/usr/bin/env bash

exec i3

"""

Then run "sudo chmod +x ~/.xinitrc" to make it an executable.

Then, to make a linking file named xsession, run:

"ln -s ~/.xinitrc ~/.xsession"

Now you'll be able to run whatever you put in ~/.xinitrc with:

"dbus-launch --exit-with-session ~/.xsession"

There's a ton of personalisation to be done, but that would be a whole new tutorial. I'll just share a github repo with my dotfiles so you can see them here:

https://github.com/DanielVZ96/...

SHIT I ALMOST FORGOT:

Every time you open any graphical interface you'll need to have the x server running. With vcXsrv, you can use X launch. Choose the options with no other programs running on the X server. I recommend using "one window without title bar".

Angular is still a pile of steaming donkey shit in 2023 and whoever thinks the opposite is either a damn js hipster (you know, those types that put js in everything they do and that run like a fly on a lot of turds from one js framework to the next saying "hey you tried this cool framework, this will solve everything" every time), or you don't understand anything about software development.

I have been a developer for 14 years, so don't even try to tell me "you don't understand this, so you complain".

I build every fucking thing imaginable. From firmware interfaces for high level languages from C++, to RFID low level reading code, to full blown business level web apps (yes, unluckily even with js, and yes, even with Angular up to Angular15, Vue, React etc etc), barcode scanning and windows ce embedded systems, every flavour of sql and documental db, vectorial db code, tech assistance and help desk on every OS, every kind of .NET/C# flavour (Xamarin, CE, WPF, Net framework, net core, .NET 5-8 etc etc) and many more.

Every time, since I first put my hands on angularJs, up from angular 2, angular 8, and now angular 15 (the only 3 versions I've touched), I'm always baffled at how bad and stupid that dumpster fire shit excuse of a framework is.

They added observables everywhere to look cool and it's not necessary.

They care about making it look "hey we use observables, we are cool, up to date and reactive!!11!!1!" and they can't even fix their shit with the change detection mechanism, a notorious shitty patchwork of bugs since earlier angular versions.

They literally built a whole ecosystem of shitty hacks around it to make it work and it's 100x more complex than anything else comparable around. except maybe for vanilla js (fucking js).

I don't even want to dig into the shit pool that is their whole ecosystem of tooling (webpack, npm, ng-something, angular.json, package.json), they are just too ridiculous to even be mentioned.

Countless times I have dwelled in the humongous mazes of those unstable, unreliable shitty files/tools that cause more trouble than they solve.

I am here again, building the nth business critical web portal in angular 16 (latest sack of putrid shit they put out) and like Pink Floyd says "What we found, same old fears".

Nothing changed, it's the same unintelligible product of the mind of a total dumbass.

Fuck off js, I will not find peace until Brendan Eich dies of some agonizing illness or by my hands

I don't write many rants but this, I've been keeping it inside my chest for too long.

I fucking hate js and I want to open the head of js creator like the doom marine on berserk

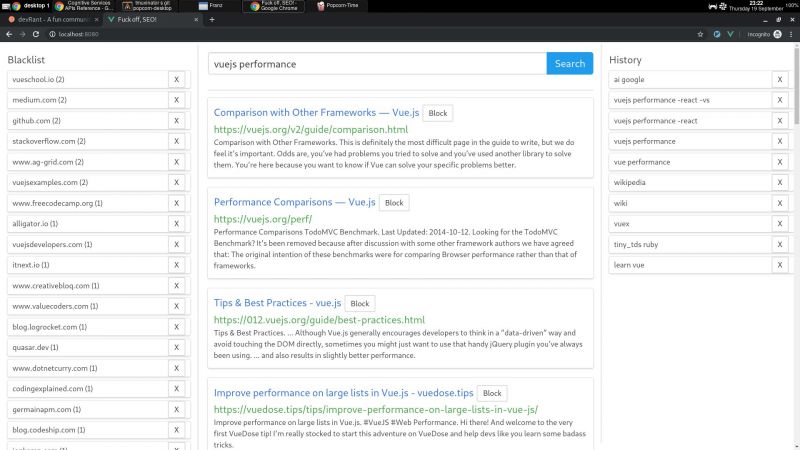

I wrote a node + vue web app that consumes bing api and lets you block specific hosts with a click, and I have some thoughts I need to post somewhere.

My main motivation for this is that the search results I've been getting with the big search engines are lacking a lot of quality. The SEO situation right now is very complex but the bottom line is that there is a lot of white hat SEO abuse.

Commercial companies are fucking up the internet very hard. Search results have become way too profit oriented thus unneutral. Personal blogs are becoming very rare. Information is losing quality and sites are losing identity. The internet is consolidating.

So, I decided to write something to help me give this situation the middle finger.

I wrote this because I consider the ability to block specific sites a basic universal right. If you were ripped off by a website or you just don't like it, then you should be able to block said site from your search results. It's not rocket science.

Google used to have this feature integrated but they removed it in 2013. They also had an extension that did this client side, but they removed it in 2018 too. We're years past the time where Google forgot their "Don't be evil" motto.

AFAIK, the only search engine on earth that lets you block sites is millionshort.com, but if you block too many sites, the performance degrades. And the company that runs it is a for profit too.

There is a third party extension that blocks sites called uBlacklist. The problem is that it only works on google. I wrote my app so as to escape google's tracking clutches, ads and their annoying products showing up in between my results.

But aside from that, uBlacklist does the same thing as my app, including the limitation that this isn't an actual search engine, it's just filtering search results after they are generated.

This is far from ideal because filtering out sites before the results are generated would be much preferred.

But developing a search engine is prohibitively expensive to both index and rank pages for a single person. Which is sad, but can't do much about it.

I'm also thinking of implementing the ability to promote certain sites, the opposite to blocking, so these promoted sites would get more priority within the results.

I guess I would have to move the promoted sites between all pages I fetched to the first page/s, but client side.

But this is suboptimal compared to having actual access to the rank algorithm, where you could promote sites in a smarter way, but again, I can't build a search engine by myself.
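As a rough sketch of that post-filtering approach: drop results from blocked hosts, then move promoted hosts to the front without disturbing the engine's order otherwise. The app itself is node + vue, so this Python version is only an illustration, and the result shape (dicts with a `url` key) and the names `host_of` and `curate` are assumptions.

```python
from urllib.parse import urlparse


def host_of(url: str) -> str:
    """Return the lowercased hostname of a URL, or '' if there is none."""
    return (urlparse(url).hostname or "").lower()


def curate(results, blocked, promoted):
    """Filter out blocked hosts, then list promoted hosts first."""
    kept = [r for r in results if host_of(r["url"]) not in blocked]
    # sorted() is stable and False sorts before True, so promoted results
    # come first while everything else keeps the engine's original order.
    return sorted(kept, key=lambda r: host_of(r["url"]) not in promoted)
```

Hosts are matched exactly here, so blocking `example.com` would not block `www.example.com`; a real version would probably need some rule for subdomains.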

I'm using mongo to cache the results, so with a click of a button I can retrieve the results of a previous query without hitting bing. So far a couple of queries don't seem to bring much performance or space issues.

On using bing: bing is basically the only reliable API option I could find that was hobby cost worthy. Most microsoft products are usually my last choice.

Bing is giving me a 7 day free trial of their search API until I register a CC. They offer a free tier, but I'm not sure if that's only for these 7 days. Otherwise, I'm gonna need to pay like 5$.

Paying or not, having to use a CC to use this software I wrote sucks balls.

So far the usage of this app has resulted in me becoming more critical of sites and finding sites of better quality. I think overall it helps me to become a better programmer, all the while having better protection of my privacy.

One downside is that I'm the only one curating for myself, whereas I could benefit from the block/promote lists of other people that I trust.

I will git push it somewhere at some point, but it does require some more work:

I would want to add a docker-compose script to make it easy to start, and I didn't write any tests unfortunately (I did use eslint for both apps, though).

The performance is not excellent (the app has not experienced blocks so far, but it does make the coolers spin after a bit) because the algorithms I wrote were very POC.

But it took me some time to write it, and I need to catch some breath.

There are other more open efforts that seem to be more ethical, but they are usually hard to use or just incomplete.

commoncrawl.org is a free index of the web. one problem I found is that it doesn't seem to index everything (for example, it doesn't seem to index the blog of a friend I know that has been writing for years and is indexed by google).

it also requires knowledge on reading warc files, which will surely require some time investment to learn.

it also seems kinda slow for responses.

it is also generated only once a month, and I would still have little idea on how to implement a pagerank algorithm, let alone code it.


EoS1: This is the continuation of my previous rant, "The Ballad of The Six Witchers and The Undocumented Java Tool". Catch the first part here: https://devrant.com/rants/5009817/...

The Undocumented Java Tool, created by Those Who Came Before to fight the great battles of the past, is a swift beast. It reaches systems unknown and impacts many processes, unbeknownst even to said processes' masters. All from within its lair, a foggy Windows Server swamp of moldy data streams and boggy flows.

One of The Six Witchers, the Wild One, scouted ahead to map the input and output data streams of the Unmapped Data Swamp. Accompanied only by his animal familiars, NetCat and WireShark.

Two others, bold and adventurous, raised their decompiling blades against the Undocumented Java Tool beast itself, to uncover its data processing secrets.

Another of the witchers, of dark complexion and smooth speak, followed the data upstream to find where the fuck the limited excel sheets that feed The Beast come from, since its handlers only know that "every other day a new one appears on this shared active directory location". WTF do people often have NPC-levels of unawareness about their own fucking jobs?!?!

The other witchers left to tend to the Burn-Rate Bonfire, for The Sprint is dark and full of terrors, and some bigwigs always manage to shoehorn their whims/unrelated stories into an otherwise lean sprint.

At the dawn of the new year, the witchers reconvened. "The Beast breathes a currency conversion API" - said The Wild One - "And its claws and fangs strike mostly at two independent JIRA clusters, sometimes upserting issues. It uses a company-deprecated API to send emails. We're in deep shit."

"I've found The Source of Fucking Excel Sheets" - said the smooth witcher - "It is The Temple of Cash-Flow, where the priests weave the Tapestry of Transactions. Our Fucking Excel Sheets are but a snapshot of the latest updates on the balance of some billing accounts. I spoke with one of the priestesses, and she told me that The Oracle (DB) would be able to provide us with The Data directly, if we were to learn the way of the ODBC and the Query"

"We struck at the beast" - said the bold and adventurous witchers, now deserving of the bragging rights to be called The Butchers of Jarfile - "It is actually fewer than twenty classes and modules. Most are API-drivers. And less than 40% of the code is ever even fucking used! We found fucking JIRA API tokens and URIs hard-coded. And it is all synchronous and monolithic - no wonder it takes almost 20 hours to run a single fucking excel sheet".

Together, the witchers figured out that each new billing account was morphed by The Beast into a new JIRA issue, if none was open yet for it. Transactions were used to update the outstanding balance on the issues regarding the billing accounts. The currency conversion API was used too often, and its purpose was only to give a rough estimate of the total balance in each Jira issue in USD, since each issue could have transactions in several currencies. The Beast would consume the Excel sheet, do some cryptic transformations on it, and for each resulting line access the currency API and upsert a JIRA issue. The secrets of those transformations were still hidden from the witchers. When and why The Beast would send emails was still a mystery.
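The part of The Beast's behaviour that the witchers did figure out can be sketched roughly as below. The cryptic transformations are still unknown, so this covers only the aggregation and the create-or-update decision; the tuple layout, the `usd_rates` mapping and the function name `plan_upserts` are all hypothetical, and floats stand in for proper decimal money types to keep it short.

```python
from collections import defaultdict


def plan_upserts(transactions, usd_rates, open_issues):
    """Build one planned upsert per billing account.

    transactions: iterable of (account, currency, amount) tuples
    usd_rates:    currency -> USD per unit, looked up once per currency
    open_issues:  set of accounts that already have an open issue
    """
    totals = defaultdict(float)
    for account, currency, amount in transactions:
        # Rough USD estimate: convert each transaction and sum per account.
        totals[account] += amount * usd_rates[currency]
    return [
        {
            "account": account,
            "action": "update" if account in open_issues else "create",
            "balance_usd": round(total, 2),
        }
        for account, total in sorted(totals.items())
    ]
```

Looking rates up from a mapping once, instead of calling the conversion API for every line, is one way to address the "used too often" complaint.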

As the Witchers Council approached an end and all were armed with knowledge and information, they decided on the next steps.

The Wild Witcher, known in every tavern in the land and by the sea, would create a connector to The Red Port of Redis, where every currency conversion is already updated by other processes and can be quickly retrieved inside the VPC. The Greenhorn Witcher is to follow him and build an offline process to update balances in JIRA issues.

The Butchers of Jarfile were to build The Juggler, an automation that should be able to receive a parquet file with an insertion plan and asynchronously update the JIRA API with scores of concurrent requests.
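A minimal sketch of what The Juggler's core loop might look like, assuming an async `upsert` coroutine that wraps the real JIRA call (not shown, and hypothetical here). A semaphore caps how many requests are in flight at once; the name `run_plan` and the default of 20 are assumptions too.

```python
import asyncio


async def run_plan(plan, upsert, max_concurrent=20):
    """Run upsert(item) for every item, at most max_concurrent at a time."""
    gate = asyncio.Semaphore(max_concurrent)

    async def guarded(item):
        # Each task waits here until one of the concurrency slots is free.
        async with gate:
            return await upsert(item)

    # gather() returns results in the same order as the plan.
    return await asyncio.gather(*(guarded(item) for item in plan))
```

It would be started with something like `asyncio.run(run_plan(plan, upsert))`. Retries and rate-limit handling are left out, and a real version would likely need both.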

The Smooth Witcher, proud of his new lead, was to build The Oracle Watch, an order that would guard the Oracle (DB) at the Temple of Cash-Flow and report every qualifying transaction to parquet files in AWS S3. The Data would then be pushed to cross The Event Bridge into The Cluster of Sparks and Storms.

This Witcher Who Writes is to ride the Elephant of Hadoop into The Cluster of Sparks and Storms, to weave the signs of Map and Reduce and with speed and precision transform The Data into The Insertion Plan.

However, how exactly is The Data to be transformed is not yet known.

Will the Witchers be able to build The Data's New Path? Will they figure out the mysterious transformation? Will they discover the Undocumented Java Tool's secrets on notifying customers and aggregating data?

This story is still afoot. Only the future will tell, and I will keep you posted.

I never thought clean architecture concepts and low complexity, maintainable, readable, robust style of software was going to be such a difficult concept to get across to seasoned engineers on my team... You’d think they would understand how their current style isn’t portable, nor reusable, and a pain in the ass to maintain. Compared to what I was proposing.

I even walked them thru one of the projects I rewrote.. and the biggest complaint was too many files to maintain.. coming from the guy who literally puts everything in main.c and almost the entire application in the main function....

Arguing with me telling me “main is the application... it’s where all the application code goes... if you don’t put your entire application in main.. then you are doing it wrong.. wtf else would main be for then..”....

Dude ... main is just the default entry point from the linker/startup assembly file... fucken name it bananas it will still work.. it’s just a god damn entry point.

Trying to reiterate to him that arrowhead programming / enormous nested ifs are unacceptable...
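For anyone who hasn't met the term, here is a small made-up illustration of the arrowhead shape next to the same logic written with early returns. The project in question is C; Python is used only to keep the sketch short, and the `reading` dict and both function names are invented.

```python
def classify_nested(reading):
    # Arrowhead shape: every check adds another level of indentation.
    if reading is not None:
        if "value" in reading:
            if isinstance(reading["value"], (int, float)):
                if reading["value"] >= 0:
                    return "ok"
                else:
                    return "negative"
            else:
                return "not a number"
        else:
            return "missing value"
    else:
        return "no reading"


def classify_flat(reading):
    # Guard clauses: handle each failure case first and return early.
    if reading is None:
        return "no reading"
    if "value" not in reading:
        return "missing value"
    if not isinstance(reading["value"], (int, float)):
        return "not a number"
    if reading["value"] < 0:
        return "negative"
    return "ok"
```

Both functions return the same answer for every input; the second just keeps the happy path at one indentation level.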

Also trying to explain to him, his code is a good “get it working” first draft system.... but for production it should be refactored for maintainability.

Uggghhhh these “veteran” engineers think that because nobody has challenged their ways, their style is the proper style.... and don’t understand how their code doesn’t meet certain auditable standards.

You’d also think the recent software audit would have shed some light..... noooo to them the auditor “doesn’t know what he’s talking about” ... BULLSHIT!

MTP is utter garbage and belongs to the technological hall of shame.

MTP (media transfer protocol, or, more accurately, MOST TERRIBLE PROTOCOL) sometimes spontaneously stops responding, causing Windows Explorer to show its green placebo progress bar inside the file path bar which never reaches the end, and sometimes to whiningly show "(not responding)" with that white layer of mist fading in. Sometimes lists files' dates as 1970-01-01 (which is the Unix epoch), sometimes shows former names of folders prior to being renamed, even after refreshing. I refer to them as "ghost folders". As well known, large directories load extremely slowly in MTP. A directory listing with one thousand files could take well over a minute to load. On mass storage and FTP? Three seconds at most. Sometimes, new files are not even listed until rebooting the smartphone!

Arguably, MTP "has" no bugs. It IS a bug. There is so much more wrong with it that it does not even fit into one post. Therefore it has to be expanded into the comments.

When moving files within an MTP device, MTP does not directly move the selected files, but creates a copy and then deletes the source file, causing both needless wear on the mobile device's flash memory and the loss of files' original date and time attribute. Sometimes, the simple act of renaming a file causes Windows Explorer to stop responding until unplugging the MTP device. It actually once unfroze after more than half an hour where I did something else in the meantime, but come on, who likes to wait that long? Thankfully, this has not happened to me on Linux file managers such as Nemo yet.

When moving files out using MTP, Windows Explorer does not move and delete each selected file individually, but only deletes the whole selection after finishing the transfer. This means that if the process crashes, no space has been freed on the MTP device (usually a smartphone), and one will have to carefully sort out a mess of duplicates. Linux file managers thankfully delete the source files individually.
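The per-file behaviour described for the Linux file managers can be sketched as follows. This works on ordinary file system paths, not on the MTP protocol itself, and the function name `move_out` and the size check are illustrative assumptions rather than what any of those file managers actually do internally.

```python
import shutil
from pathlib import Path


def move_out(sources, target_dir):
    """Move files one by one: copy, check the size, then delete the source."""
    target_dir = Path(target_dir)
    target_dir.mkdir(parents=True, exist_ok=True)
    moved = []
    for src in map(Path, sources):
        dst = target_dir / src.name
        shutil.copy2(src, dst)  # copy2 also keeps the modification time
        if dst.stat().st_size != src.stat().st_size:
            raise OSError(f"size mismatch after copying {src}")
        # Delete each source right after its own copy succeeded, so a crash
        # midway leaves no duplicates for the files already handled.
        src.unlink()
        moved.append(dst)
    return moved
```

If the process dies halfway, everything before the current file has already been moved and freed, which is exactly what the delete-everything-at-the-end approach does not give you.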

Also, for each file transferred from an MTP device onto a mass storage device, Windows has the strange behaviour of briefly creating a file on the target device with the size of the entire selection. It does not actually write that amount of data for each file, since it couldn't do so in this short time, but the current file is listed with that size in Windows Explorer. You can test this by refreshing the target directory shortly after starting a file transfer of multiple selected files originating from an MTP device. For example, when copying or moving out 01.MP4 to 10.MP4, while 01.MP4 is being written, it is listed with the file size of all 01.MP4 to 10.MP4 combined, on the target device, and the file actually exists with that size on the file system for a brief moment. The same happens with each file of the selection. This means that the target device needs almost twice the free space as the selection of files on the source MTP device to be able to accept the incoming files, since the last file, 10.MP4 in this example, temporarily has the total size of 01.MP4 to 10.MP4. This strange behaviour has been on Windows since at least Windows 7, presumably since Microsoft implemented MTP, and has still not been changed. Perhaps the goal is to reserve space on the target device? However, it reserves far too much space.

When transferring from MTP to a UDF file system, ZIP files sometimes fail to transfer, and only the first few bytes are copied: 208 or 74 bytes in my testing.

When transferring several thousand files, Windows Explorer also sometimes decides to quit and restart in the midst of the transfer. Also, I sometimes move files out by loading a part of the directory listing in Windows Explorer and then hitting "Esc" because it would take too long to load the entire directory listing. It actually once assigned the wrong file names, which I noticed because file naming conflicts occurred where the source and target files with the same names had different sizes and time stamps. Both files were intact, but the target file had the name of a different file. You'd think they would figure something like this out after two decades, but no. On Linux, the MTP directory listing is only shown after it has been loaded in its entirety. However, if the directory has too many files, it fails with a "libmtp: couldn't get object handles" error without listing anything.

Sometimes, a folder appears empty until refreshing one more time. Sometimes, copying a folder out causes a blank folder to be copied to the target. This is why on MTP, only a selection of files and never folders should be moved out, due to the risk of the folder being deleted without everything having been transferred completely.

(continued below)

-

I was checking out this wk139 rants & thinking to myself how does one have a dev enemy.. o.O Well TIL that maaaaybe I have one too..

Not sure if ex coworker was a bit 'weird & unskillful' or wanted to intentionally harm us and thank god failed miserably..

I decided to finally cleanup his workspace today: he had a bad habit of having almost all files in solution checked out to himself, most of them containing no changes whatsoever... I reminded him on many occasions that this is bad practice & to only have checked out files he was currently working on. And never checkin files without changes.. Ofc didn't listen.. managed to checkin over 100 files one time, most of which had no changes & some even had alerts for debugging in them.. which ofc made it to the client server.. :/

On one or two occasions I already logged in and wanted to check if files have any real changes that I'd actually want to keep, but gave up after 40 or so files in a batch that were either same or full of sh..

Anyhow today I decided I will discard everything, as the codebase changed a lot since he left and I know I already fixed a lot of his tasks.. I logged in, did the undo pending changes and then proceeded to open source control explorer.

While I was cleaning up his workspace, I figured I could test what will happen if I request changeset xy and shelveset yy, will it be ok, or do I have to modify something else & merge code.. Figured using his workspace that was already set up for testing would be easier, faster & less 'stressful' than creating another one on my computer, changing IIS settings and all, just to test this merge..

Boy was I wrong.. upon opening source control explorer, I was greeted by a lot of little red Xes staring back at me... more than half the folders on TFS were marked for deletion.. o.O

Now I'm not sure if he wanted to fuck me up when he left or was just 'stupid' when it comes to TFS. O.O

So...maybe I do have a dev enemy after all.. or I don't.. Can't decide.. all I know for sure is tomorrow I'm creating another workspace to test this and I'm not touching his computer ever again.. O.O

-

[See image]

This guy is wrong in so many ways.

"Windows/macOS is the best choice for the average user. Prove me wrong."

There are actually many GNU/Linux based operating systems that are really easy to install and use. For example Debian or any Debian based OS.

There are average users that use a GNU/Linux based operating system because, guess what, they think it's better, and it is.

Let's do a little comparison, shall we?

            Windows 10    Debian
Cost        $139          Free
Spyware     Yes           No
Freedom     Limited       A lot

"[Windows] It's easy to set up, easy to use and has all the software you could possibly want. And it gets the job done. What more do you need? I don't see any reason for the average joe to use it. [Linux]"

Well, as I said earlier, there are GNU/Linux based operating systems that are easy to set up too.

And by "[Windows] has all the software you could possibly want." I guess you mean that you can download all the software you could possibly want, because having every single piece of software (even the ones you don't need or use) on your computer is extremely space inefficient.

"Linux is far from being mainstream, I doubt it's ever gonna happen, in fact"

Yes, Linux isn't mainstream, but with the increasing number of people getting to know Linux, it eventually will be.

"[Linux is] Unusable for non-developers, non-geeks."

Depends heavily on what GNU/Linux based operating system you're on. If you're on Ubuntu, no. If you're on Arch, yes. Just don't blame Linux for it.

"Lots of usability problems, lots of elitism, lots of deniers ("works for me", "you just don't use it right", "Just git-pull the -latest branch, recompile, mess with 12 conf files and it should work")"

That depends totally on what you're trying to do. As many in the Linux community are open source contributors, the support around open source software is huge, and if you have a problem you can get a genuine answer from someone.

"Linux is a hobby OS because you literally need to make it your 'hobby' to just to figure out how the damn thing works."

First of all, Linux isn't an OS, it's a kernel. Second, no you don't. You don't have to know how it works. If you want to, yes, it can take a while, but you don't have to.

"Linux sucks and will never break into the computer market because Linux still struggles with very basic tasks."

Ever heard of System76? What basic tasks does Linux struggle with? I call bullshit.

"It should be possible to configure pretty much everything via GUI (in the end Windows and macOS allow this) which is still not a case for some situations and operations."

Most things are possible to configure via a GUI, and if something isn't, use the terminal. It's not so hard.

https://boards.4chan.org/g/thread/...

-

Not as much of a rant as a share of my exasperation you might breathe a bit more heavily out your nose at.

My work has dealt out new laptops to devs. Such shiny, very wow. They're also famously easy to use.

.

.

.

My arse.

.

.

.

I got the laptop, transferred the necessary files and settings over, then got to work. Delivered ticket i, delivered ticket j, delivered the tests (tests first *cough*) then delivered Mr Bullet to Mr Foot.

On day 4 of using the temporary passwords support gave me, I thought it was time to get with department policy and change my myriad passwords to a single one. Maybe it's not as secure but oh hell, would having a single sign-on have saved me from this.

I went for my new machine's password first because why not? It's the one I'll use the most, and I definitely won't forget it. I didn't. (I didn't.) I plopped in my memorable password, including special characters, caps, and numbers, again (carefully typed) in the second password field, then nearly confirmed. Curiosity, you bastard.

There's a key icon by the password field and I still had milk teeth left to chew any and all new features with.

Naturally I click on it. I'm greeted by a window showing me a password generating tool. So many features, options for choosing length, character types, and tons of others but thinking back on it, I only remember those two. I had a cheeky peek at the different passwords generated by it, including playing with the length slider. My curiosity sated, I closed that window and confirmed that my password was in.

You probably know where this is going. I say probably to give room for those of you like me who certifiably. did. not.

Time to test my new password.

*Smacks the power button to log off*

Time to put it in (ooer)

*Smacks in the password*

I N C O R R E C T L O G I N D E T A I L S.

Whoops, typo probably.

Do it again.

I N C O R R E C T L O G I N D E T A I L S.

No u.

Try again.

I N C O R R E C T L O G I N D E T A I L S.

Try my previous password.

Well, SUCCESS... but actually, no.

Tried the previous previous password.

T O O M A N Y A T T E M P T S

Ahh fuck, I can't believe I've done this, but going to support is for pussies. I'll put this by the rest of the fire, I can work on my old laptop.

Day starts getting late, gotta go swimming soonish. Should probably solve the problem. Cue a whole 40 minutes trying my 15 or so different passwords and their permutations because oh heck I hope it's one of them.

I talk to a colleague because by now the "days since last incident" counter has been reset.

"Hello there Ryan, would you kindly go on a voyage with me that I may retrace my steps and perhaps discover the source of this mystery?"

"A man chooses, a slave obeys. I choose... lmao ye sure m8, but I'm driving"

We went straight for the password generator, then the length slider, because who doesn't love sliding a slidey boi. Soon as we moved it my upside down frown turned back around. Down in the 'new password' and the 'confirm new password' IT WAS FUCKING AUTOCOMPLETING. The slidey boi was changing the number of asterisks in both bars as we moved it. Mystery solved, password generator arrested, shit's still fucked.

Bite the bullet, call support.

"Hi, I need my password resetting. I dun goofed"

*details tech support needs*

*It can be sorted but the tech is ages away*

Gotta be punctual for swimming, got two whole lengths to do and a sauna to sit in.

"I'm off soon, can it happen tomorrow?"

"Yeah no problem someone will be down in the morning."

Next day. Friday. 3 hours later, still no contact. Go to support room myself.

The guy really tries, goes through everything he can, gets informed that he needs a code from Derek. Where's Derek? Ah shet. He's on holiday.

There goes my weekend (looong weekend, bank holiday plus day flexi-time) where I could have shown off to my girlfriend the quality at which this laptop can play all our favourite animé, and probably get reminded by her that my personal laptop has an i2350u with integrated graphics.

TODAY. (Part is unrelated, but still, ugh.)

Go to work. Ten minutes away realise I forgot my door pass.

Bollocks.

Go get a temporary pass (of shame).

Go to clock in. My fob was with my REAL pass.

What the wank.

Get to my desk, nobody notices my shame. I'm thirsty. I'll have the bottle from my drawer. But wait, what's this? No key that usually lives with my pass? Can't even unlock it?

No thanks.

Support might be able to cheer me up. Support is now for manly men too.

*Knock knock*

"Me again"

"Yeah give it here, I've got the code"

He fixes it, I reset my pass, sensibly change my other passwords.

Or I would, if the internet would work.

It connects, but no traffic? Ryan from earlier helps, we solve it after a while.

My passwords are now sorted, machine is okay, crisis resolved.

*THE END*

If you skipped the whole thing and were expecting a tl;dr, you just lost the game.

Otherwise, I absolve you of having lost the game.

Exactly at the char limit

-

Too many to count, but this one useless meeting stands out the most.

I was working as an outside dev for a software corporation. I was hired as a UI dev although my skill set was UI/engineer/devops at the time.

We wrote a big chunk of 'documentation' (read: Word files explaining features) before the project even started, and I had 2 sprints of just meetings. Everybody did nothing, while I set up the project, tuned configs, added testing libraries, linters, environments, instances, CI/CD etc.

When we started the actual project we had at least 2 meetings that were 2-3 hours long on a daily basis. Then I said: look guys, you are paying me just to sit here and listen to you, I would rather be working as we are behind schedule and long meetings don't help us at all.

OK, but there is that one more meeting I have to be in.

So some senior architect (just a senior backend engineer, as I found out later) who is really some kind of manager and hadn't written code for like 10 years starts to roast devs from the team about documentation and architectural decisions. I was like the second one that he attacked.

I explained why I think his opinion doesn't matter to me as he is explaining server side related issues and I'm on the client-side and if he wants to argue we can argue on actual client-side decisions I made.

He tried to discuss thinking that he is far superior to some noob UI developer (Which I wasn't, but he didn't know that).