Search - "http error"

-

So I got the job. Here's a story, never let anyone stop you from accomplishing your dreams!

It all started in 2010. Windows just crashed unrecoverably for the 3rd time in two years. Back then I wasn't good with computers yet so we got our tech guy to look at it and he said: "either pay for a windows license again (we had nearly spent 1K on licenses already) or try another operating system which is free: Ubuntu. If you don't like it anyways, we can always switch back to Windows!"

Oh well, fair enough, not much to lose, right! So we went with Ubuntu. Within about 2 hours I could find everything. From the software installer to OpenOffice, browsers, email things and so on. Also I already got the basics of the Linux terminal (bash in this case) like ls, cd, mkdir and a few more.

My parents found it very easy to work with as well so we decided to stick with it.

I already started to experiment with some html/css code because the thought of being able to write my own websites was awesome! Within about a week or so I figured out a simple html site.

Then I started to experiment more and more.

After about a year of trial and error (repeat about 1000+ times) I finally got my first Apache server setup on a VirtualBox running Ubuntu server. Damn, it felt awesome to see my own shit working!

From that moment on I continued to try everything I could with Linux because I found the principle that I basically could do everything I wanted (possible with software solutions) without any limitations (like with Windows/Mac) very fucking awesome. I owned the fucking system.

Then, after some years, I got my first shared hosting plan! It was awesome to see my own (with subdomain) website online, functioning very well!

I started to learn stuff like FTP, SSH and so on.

Went on with trial and error for a while and then the thought occurred to me: what if I had a little server ONLINE which I could use myself to experiment around?

First rented VPS was there! Couldn't get enough of it and kept experimenting with server thingies, linux in general aaand so on.

Started learning about rsa key based login, firewalls (iptables), brute force prevention (fail2ban), vhosts (apache2 still), SSL (damn this was an interesting one, how the fuck do you do this yourself?!), PHP and many other things.

Then, after a while, the thought came to mind: what if I'd have a dedicated server!?!?!?!

I ordered my first fucking dedicated server. Damn, this was awesome! Already knew some stuff about defending myself from brute force bots and so on so it went pretty well.

Finally made the jump to NginX and CentOS!

Made multiple VPS's for shitloads of purposes and just to learn. Started working with reverse proxies (nginx), proxy servers, SSL for everything (because fuck basic http WITHOUT SSL), vhosts and so on.

Started with simple, one screen linux setup with ubuntu 10.04.

Running a five monitor setup now with many distro's, running about 20 servers with proxies/nginx/apache2/multiple db engines, as much security as I can integrate and this fucking passion just got me my first Linux job!

It's not just an operating system for me, it's a way of life. And with that I don't just mean the operating system, but also the idea behind it :).

-

--- HTTP/3 is coming! And it won't use TCP! ---

A recent announcement reveals that HTTP - the protocol used by browsers to communicate with web servers - will get a major change in version 3!

Before, the HTTP protocols (versions 1.0, 1.1 and 2) were all layered on top of TCP (Transmission Control Protocol).

TCP provides reliable, ordered, and error-checked delivery of data over an IP network.

It can handle hardware failures, timeouts, etc. and makes sure the data is received in the order it was transmitted in.

Also you can easily detect if any corruption during transmission has occurred.

All these features are necessary for a protocol such as HTTP, but TCP wasn't originally designed for HTTP!

It's a "one-size-fits-all" solution, suitable for *any* application that needs this kind of reliability.

TCP needs extra round trips between the client and the server before any HTTP data flows (the handshake), and even more if you're using SSL/TLS. This results in high latency whenever a new connection is opened.

So if we had a protocol which is basically designed for HTTP, it could help a lot at fixing all these problems.

This is the idea behind "QUIC", an experimental network protocol, originally created by Google, using UDP.

Now we all know how unreliable UDP is: You don't know if the data you sent was received nor does the receiver know if there is anything missing. Also, data is unordered, so if anything takes longer to send, it will most likely mix up with the other pieces of data. The only good part of UDP is its simplicity.

So why use this crappy thing for such an important protocol as HTTP?

Well, QUIC fixes all these problems UDP has, and provides the reliability of TCP but without introducing lots of round trips and a high latency! (How cool is that?)
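To make the "reliability on top of UDP" idea a bit more concrete, here is a minimal sketch of the general technique: number every packet, put them back in order on the receiving side, and report the gaps so they can be sent again. This is not QUIC's actual wire format or algorithm; the function name `reassemble` and the packet layout are made up for illustration.

```python
from typing import Dict, List, Tuple


def reassemble(packets: List[Tuple[int, bytes]], expected: int) -> Tuple[bytes, List[int]]:
    """Rebuild an ordered byte stream from (sequence_number, payload) pairs.

    Returns the data that can be delivered in order and the sequence
    numbers that are still missing (candidates for retransmission).
    """
    received: Dict[int, bytes] = {}
    for seq, payload in packets:
        # Duplicates are harmless: the first copy wins.
        received.setdefault(seq, payload)

    missing = [seq for seq in range(expected) if seq not in received]

    # Deliver only the contiguous prefix; anything after a gap has to wait.
    stream = bytearray()
    for seq in range(expected):
        if seq not in received:
            break
        stream += received[seq]
    return bytes(stream), missing


# Packets arrive out of order, one is duplicated, and number 2 got lost.
arrived = [(1, b"lo "), (0, b"hel"), (3, b"ld"), (1, b"lo ")]
data, missing = reassemble(arrived, expected=4)
print(data, missing)
```

Once the missing packet is retransmitted and added to the list, the same call returns the complete stream and an empty gap list.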

The Internet Engineering Task Force (IETF) has been working (or is still working) on a standardized version of QUIC, although it's very different from Google's original proposal.

The IETF also wants to create a version of HTTP that uses QUIC, previously referred to as HTTP-over-QUIC. HTTP-over-QUIC isn't, however, HTTP/2 over QUIC.

It's a new, updated version of HTTP built for QUIC.

Now, the chairman of both the HTTP working group and the QUIC working group for IETF, Mark Nottingham, wanted to rename HTTP-over-QUIC to HTTP/3, and it seems like his proposal got accepted!

So version 3 of HTTP will have QUIC as an essential, integral feature, and we can expect that it no longer uses TCP as its network protocol.

We will see how it turns out in the end, but I'm sure we will have to wait a couple more years for HTTP/3, when it has been thoroughly tested and integrated.

Thank you for reading!

-

Me: We should change the http response code to anything but 200 OK in the error response case of our API.

Other dev: No, it's fine.

Me: Why?

Other dev: The client successfully receives an error message.

Me: ┻━┻ ︵ヽ(`Д´)ノ︵ ┻━┻

-

Fun fact.

The HTTP status code 451 means "Unavailable For Legal Reasons": the content can't be served because of legal demands.

The name comes from Ray Bradbury's book Fahrenheit 451, which is about a dystopian society where books and literature are censored by the government.

-

!rant

This was over a year ago now, but my first PR at my current job was +6,249/-1,545,334 loc. Here is how that happened... When I joined the company and saw the code I was supposed to work on I kind of freaked out. The project was set up in the most ass-backward way with some sort of bootstrap boilerplate sample app thing with its own build process inside a subfolder of the main angular project. The angular app used all the CSS, fonts, icons, etc. from the boilerplate app and referenced the assets directly. If you needed to make changes to the CSS, fonts, icons, etc., you would need to cd into the boilerplate app directory, make the changes, run a Gulp build that compiled things there, then cd back to the main directory and run a Grunt build (that's right, both grunt and gulp) that then built the angular app and referenced the compiled assets inside the boilerplate directory. One simple CSS change would take 2 minutes to test at minimum.

I told them I needed at least a week to overhaul the app before I felt like I could do any real work. Here were the horrors I found along the way.

- All compiled (unminified) assets (both CSS and JS) were committed to git, including vendor code such as jQuery and Bootstrap.

- All bower components were committed to git (ALL their source code, documentation, etc, not just the one dist/minified JS file we referenced).

- The Grunt build was set up by someone who had no idea what they were doing. Every SINGLE file or dependency that needed to be copied to the build folder was listed one by one in a HUGE config.json file instead of using pattern matching like `assets/images/*`.

- All the example code from the boilerplate and multiple jQuery spaghetti sample apps from the boilerplate were committed to git, as well as ALL the documentation too. There was literally a `git clone` of the boilerplate repo inside a folder in the app.

- There were two separate copies of Bootstrap 3 being compiled from source. One inside the boilerplate folder and one at the angular app level. They were both included on the page, so literally every single CSS rule was overridden by the second copy of bootstrap. Oh, and because bootstrap source was included and committed and built from source, the actual bootstrap source files had been edited by developers to change styles (instead of overriding them) so there was no replacing it with an OOTB minified version.

- It is an angular app but there were multiple jQuery libraries included and relied upon and used for actual in-app functionality behavior. And, beyond that, even though angular includes many native ways to do XHR requests (using $resource or $http), there were numerous places in the app where there were `XMLHttpRequest`s intermixed with angular code.

- There was no live reloading for local development, meaning if I wanted to make one CSS change I had to stop my server, run a build, start again (about 2 minutes total). They seemed to think this was fine.

- All this monstrosity was handled by a single massive Gruntfile that was over 2000loc. When all my hacking and slashing was done, I reduced this to ~140loc.

- There were developers' (I use that term loosely) *PERSONAL AWS ACCESS KEYS* hardcoded into the source code (remember, this is a front-end web app, so this was in every user's browser) in order to do file uploads. Of course when I checked in AWS, those keys had full admin access to absolutely everything in AWS.

- The entire unminified AWS Javascript SDK was included on the page and not used or referenced (~1.5mb)

- There was no error handling or reporting. An API error would just result in nothing happening on the front end, so the user would usually just click and click again, re-triggering the same error. There was also no error reporting software installed (NewRelic, Rollbar, etc) so we had no idea when our users encountered errors on the front end. The previous developers would literally guide users who were experiencing issues through opening their console in dev tools and have them screenshot the error and send it to them.

- I could go on and on...

This is why you hire a real front-end engineer to build your web app instead of the cheapest contractors you can find from Ukraine.

-

It's that time of the morning again where I get nothing done and moan about the past ... that's right, it's practiseSafeHex's most incompetent co-worker!!!

Today I'd like to tell you the story of "I". The interesting thing about "I" is that he was actually a colleague of yesterday's nominee "G" (and was present at the "java interface" video call, and agreed with G!): https://devrant.com/rants/1152317/...

"I" was the spearhead of a project to end all projects in that company. It was supposed to be a cross-platform thing but ended up only working for iOS. It was actually quite similar to this: https://jasonette.com/ (so similar I'm convinced G / I were part of this but I can't find their GitHub IDs in it).

To briefly explain the above + what they built ... this is the worst piece of shit you can imagine ... and that's a pretty strong statement looking back at the rest of this series so far!

"I" thought this would solve all of our problems of having to build similar-ish apps for multiple customers by letting us re-use more code / UI across apps. His main solution was every developer's favourite part of writing code. I mean how often do you sit back and say:

"God damn I wish more of this development revolved around passing strings back and forth. Screw autocomplete, enums and typed classes / variables, I want more code / variables inside strings in this library!"

Yes that's right, the main part of this bullshittery was putting your entire app, into JSON, into a string and downloading it over http ... what could possibly go wrong!

Some of my issues were:

- Everything was a string, meaning we had no autocomplete. Every type and property had to be remembered and spelled perfectly.

- Everything was a string so we had no way to cmd + click / ctrl + click something to see its definition.

- Everything was a string so any business logic methods had to be remembered, along with all possible overloaded versions; no hints at param types, no nothing.

- There was no specific tooling for any of this, it was literally open up Xcode, create a json file and start writing strings.

- We couldn't use any of the native UI builders ... cause strings!

- We couldn't use any of the native UI layout constructs and we had to use these god awful custom layout managers, with a weird CSS feel to them.

What angered me a lot was their insistence that "You can download a new app over http and it will update instantly" ... except you can't, because you can't download new business logic, only UI. So it's a new app, but it must do 100% exactly the same thing as before.

His other achievements include:

- Deciding he didn't like Apple's viewController and navigationBar classes and building his own, which was great when iOS 7 was released (changed the UI to allow drawing under the status bar) and we had no access to any of Apple's new code or methods, meaning everything had to be re-built from scratch.

- On my first week, my manager noticed he fucked up the login error handling on the app I was taking over. He noticed this as I was about to leave for the evening. I stayed so we could call him (he was in an earlier timezone). Rather than deal with his fuck-up, he convinced the manager it would be a "great learning experience" for me to do it ... and stay in late ... while he goes home early.

- He once argued with me in front of the CEO, that his frankenstein cross-platform stuff was the right choice and that my way of using Apple's storyboards (and well thought out code) wasn't appropriate. So I challenged him to prove it, we got 2 clients who needed similar apps, we each did it our own way. He went 8 man weeks over, I came in 2 days under and his got slated in the app store for poor performance / issues. #result.

But rather than let it die he practically sucked off the CEO to let him improve the cross platform tooling instead.

... in that office you couldn't swing a cat without hitting a retard.

Having had to spend a lot more time working with him and more closely than most of the other nominees, at a minimum "I" is on the top of my list for needing a good punch in the face. Not for being an idiot (which he is), not for ruining so much (which he did), but for just being such an arrogant bastard about it all, despite constant failure.

Will "I" make it to most incompetent? There's some pretty stiff competition so far

Tune in later for more practiseSafeHex's most incompetent co-worker!!!

-

RE: Why I punched Dave

In my defense against the accusation of punching back-end developer Dave in the face, look at the following response:

HTTP/1.1

status: 200

message: OK

body: {

"success": "false",

"message": "error"

}

-

Spent most of the day debugging issues with a new release. Logging tool was saying we were getting HTTP 400’s and 500’s from the backend. Couldn’t figure it out.

Eventually found the backend sometimes sends down successful responses but with statusCode 500 for no reason whatsoever. Got so annoyed ... but said the 400’s must be us so can’t blame them for everything.

Turns out backend also sometimes does the opposite. Sends down errors with HTTP 200’s. A junior app Dev was apparently so annoyed that backend wouldn’t fix it, that he wrote code to parse the response, if it contained an error, re-wrote the statusCode to 400 and then passed the response up to the next layer. He never documented it before he left.

Saving the best part for last. Backend says their code is fine, it must be one of the other layers (load balancers, proxies etc) managed by one of the other teams in the company ... we didn’t contact any of these teams, no no no, that would require effort. No we’ve just blamed them privately and that’s that.

#successfulRelease

-

IBM

I have replied to them with scripts, curl commands, and Swagger docs (PROVIDED TO SUPPORT THEIR API), everything that could possibly indicate there's a bug. Regardless, they refuse to escalate me to level 1 support because "We cant reproduce the issue in a dev environment"

Well of course you can't reproduce it in a dev environment, otherwise you'd have caught this in your unit tests. We have a genuine issue on our hands and you couldn't give less of a shit about it, or even understand less than half of it. I literally gave them a script to use and they replied back with this:

"I cannot replicate the error, but for a resource ID that doesnt exist it throws an HTTP 500 error"

YOUR APP... throws a 500... for a resource NOT FOUND?????????!!!!!!!!!! That is the exact OPPOSITE of spec, in fact some might call it a MISUSE OF RESTFUL APIs... maybe even HTTP PROTOCOL ITSELF.

I'm done with IBM, I'm done with their support, I'm done with their product, and I'm DONE playing TELEPHONE with FIRST TIER SUPPORT while we pay $250,000/year for SHITTY, UNRELENTING RAPE OF MY INTELLECT.

-

Yesterday the web site started logging an exception “A task was canceled” when making an HTTP call using the .NET HttpClient class (site calling a REST service).

Emails back n’ forth ..blaming the database…blaming the network..then a senior web developer blamed the logging (the system I’m responsible for).

Under the hood, the logger sends the exception data to another REST service (which sends emails, generates reports etc.). I had to quickly redirect the discussion: if we’re seeing the exception email, the logging didn’t cause the exception, it’s just reporting it. Felt a little sad having to explain it to other IT professionals, but everyone seemed to agree and focused on the server resources.

Last night I get a call about the exceptions occurring again in much larger numbers (from 100 to over 5,000 within a few minutes). I log in, add myself to the large skype group chat going on just to catch the same senior web developer say …

“Here is the APM data that shows logging is causing the http tasks to get canceled.”

FRACK!

Me: “No, that data just shows the logging http traffic of the exception. The exception is occurring before any logging is executed. The task is either being canceled due to a network time out or IIS is running out of threads. The web site is failing to execute the http call to the REST service.”

Several other devs, DBAs, and network admins agree.

The errors only lasted a couple of minutes (exactly 2 minutes, which seemed odd), so everyone agrees to dig into the data further in the morning.

This morning I log in to my computer to discover the error(s) occurred again at 6:20AM, plus an email from the senior web developer saying we (my mgr, her mgr, network admins, DBAs, etc) need to discuss changes to the logging system to prevent this problem from negatively affecting the customer experience...blah blah blah.

FRACKing female dog!

Good news is we never had the meeting. When the senior web dev manager came in, he cancelled the meeting.

Turned out to be a hiccup in a domain controller causing the servers to lose their connection to each other for 2 minutes (1-minute timeout, 1 minute to fully re-sync). The exact two-minute burst of errors explained (and proven via wireshark).

People and their petty office politics piss me off.

-

Alright, this is a new one to me, and wow am I blown away.

Working on upgrading an API that I did not build. Getting things running well enough and then an endpoint (which runs well enough in the tests) returns a `418 I'm a teapot`

Yeah, you read that right "I'm a (motherfucking) teapot"

The description is that...

"refuses to make coffee because it is a teapot"

It was an April Fools' joke in the beginning.

I couldn't return that error if I tried!

This shit is bizarre.

For your reading pleasure:

https://developer.mozilla.org/en-US...

-

Senior colleagues insisting on ALWAYS returning HTTP status 200 and sticking any error codes in the contained JSON response instead of using 4×× or 5×× statuses.

Bad input? Failed connections? Missing authorization? Doesn't matter, you get an OK. Wanna know if the request actually succeeded? Fuck you, parse potential kilobytes of JSON to get to the error code!
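For contrast, here is a minimal sketch of what I'd expect instead: the status code carries the outcome and the body only carries details. The error names in `ERROR_STATUS` and both function names are hypothetical, not taken from our actual API, and there is no web framework involved.

```python
import json
from http import HTTPStatus
from typing import Optional, Tuple

# Hypothetical application error names mapped to HTTP statuses.
ERROR_STATUS = {
    "bad_input": HTTPStatus.BAD_REQUEST,
    "missing_authorization": HTTPStatus.UNAUTHORIZED,
    "not_found": HTTPStatus.NOT_FOUND,
    "upstream_failed": HTTPStatus.BAD_GATEWAY,
}


def build_response(payload: Optional[dict], error: Optional[str] = None) -> Tuple[int, str]:
    """Return (status_code, json_body) with the status reflecting the outcome."""
    if error is None:
        return int(HTTPStatus.OK), json.dumps(payload)
    # Unknown errors fall back to 500 rather than pretending all is well.
    status = ERROR_STATUS.get(error, HTTPStatus.INTERNAL_SERVER_ERROR)
    return int(status), json.dumps({"error": error})


def succeeded(status_code: int) -> bool:
    """Client-side check: no body parsing needed to know the outcome."""
    return 200 <= status_code < 300


print(build_response({"id": 1}))
print(build_response(None, error="missing_authorization"))
```

With this shape the client can decide on `succeeded(status_code)` alone and only parse the JSON when it actually wants the details.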

Am I the asshole or is that defeating the purpose of a status code?!

-

Okay, story time.

Back during 2016, I decided to do a little experiment to test the viability of multithreading in a JavaScript server stack, and I'm not talking about the Node.js way of queuing I/O on background threads, or about WebWorkers that box and convert your arguments to JSON and back during a simple call across two JS contexts.

I'm talking about JavaScript code running concurrently on all cores. I'm talking about replacing the god-awful single-threaded event loop of ECMAScript – the biggest bottleneck in software history – with an honest-to-god, lock-free thread-pool scheduler that executes JS code in parallel, on all cores.

I'm talking about concurrent access to shared mutable state – a big, rightfully-hated mess when done badly – in JavaScript.

This rant is about the many mistakes I made at the time, specifically the biggest – but not the first – of which: publishing some preliminary results very early on.

Every time I showed my work to a JavaScript developer, I'd get negative feedback. Like, unjustified hatred and immediate denial, or outright rejection of the entire concept. Some were even adamantly trying to discourage me from this project.

So I posted a sarcastic question to the Software Engineering Stack Exchange, which was originally worded differently to reflect my frustration, but was later edited by mods to be more serious.

You can see the responses for yourself here: https://goo.gl/poHKpK

Most of the serious answers were along the lines of "multithreading is hard". The top voted response started with this statement: "1) Multithreading is extremely hard, and unfortunately the way you've presented this idea so far implies you're severely underestimating how hard it is."

While I'll admit that my presentation was initially lacking, I later made an entire page to explain the synchronisation mechanism in place, and you can read more about it here, if you're interested:

http://nexusjs.com/architecture/

But what really shocked me was that I had never understood the mindset that all the naysayers adopted until I read that response.

Because the bottom-line of that entire response is an argument: an argument against change.

The average JavaScript developer doesn't want a multithreaded server platform for JavaScript because it means a change of the status quo.

And this is exactly why I started this project. I wanted a highly performant JavaScript platform for servers that's more suitable for real-time applications like transcoding, video streaming, and machine learning.

Nexus does not and will not hold your hand. It will not repeat Node's mistakes and give you nice ways to shoot yourself in the foot later, like `process.on('uncaughtException', ...)` for a catch-all global error handling solution.

No, an uncaught exception will be dealt with like any other self-respecting language: by not ignoring the problem and pretending it doesn't exist. If you write bad code, your program will crash, and you can't rectify a bug in your code by ignoring its presence entirely and using duct tape to scrape something together.

Back on the topic of multithreading, though. Multithreading is known to be hard, that's true. But how do you deal with a difficult solution? You simplify it and break it down, not just disregard it completely; because multithreading has its great advantages, too.

Like, how about we talk performance?

How about distributed algorithms that don't waste 40% of their computing power on agent communication and pointless overhead (like the serialisation/deserialisation of messages across the execution boundary for every single call)?

How about vertical scaling without forking the entire address space (and thus multiplying your application's memory consumption by the number of cores you wish to use)?

How about utilising logical CPUs to the fullest extent, and allowing them to execute JavaScript? Something that isn't even possible with the current model implemented by Node?

Some will say that the performance gains aren't worth the risk. That the possibility of race conditions and deadlocks isn't worth it.

That's the point of cooperative multithreading. It is a way to smartly work around these issues.

If you use promises, they will execute in parallel, to the best of the scheduler's abilities, and if you chain them then they will run consecutively as planned according to their dependency graph.

If your code doesn't access global variables or shared closure variables, or your promises only deal with their provided inputs without side-effects, then no contention will *ever* occur.

If you only read and never modify globals, no contention will ever occur.
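To illustrate the point without Nexus itself, here is a rough Python analogy (not the Nexus API, and not JavaScript): independent tasks that only touch their own inputs can be handed to a thread pool in any order, and a dependent step simply waits for their results. The names `checksum`, `checksum_all` and `chained` are made up for this sketch. Note that CPython's global interpreter lock means this shows the absence of shared state rather than any real speed-up.

```python
from concurrent.futures import ThreadPoolExecutor
from typing import List


def checksum(chunk: bytes) -> int:
    """Pure function: depends only on its argument, touches no shared state."""
    return sum(chunk) % 65521


def checksum_all(chunks: List[bytes]) -> List[int]:
    # Independent tasks can be scheduled on any worker in any order;
    # map() still returns the results in input order.
    with ThreadPoolExecutor(max_workers=4) as pool:
        return list(pool.map(checksum, chunks))


def chained(chunks: List[bytes]) -> int:
    # A dependent step runs only after every checksum it needs is available,
    # like a promise chained onto the ones before it.
    return sum(checksum_all(chunks))


print(checksum_all([b"ab", b"c"]), chained([b"ab", b"c"]))
```

Because `checksum` never reads or writes anything outside its argument, the pooled result always matches what a plain sequential loop would produce.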

Are you seeing the same trend I'm seeing?

Good JavaScript programming practices miraculously coincide with the best practices of thread-safety.

When someone says we shouldn't use multithreading because it's hard, do you know what I like to say to that?

"To multithread, you need a pair."

-

Today we presented our project in Embedded Systems. We made our so called "Blinkdiagnosegerät" (blink diagnosis device) which is used to get error codes from older vehicles which use the check engine light to output the error. (for reference: http://up.picr.de/7461761jwd.jpg ) This was common for vehicles without OBD.

We made our own PCB, made a small database for 2 vehicles and used a Suzuki Samurai instrument cluster for the presentation (hooked up to an Arduino UNO and a relay for emulating some Error Codes)

Got a 1.0 (A) for the project. Feel proud of the first project done in C++ and of making our own PCB. So no rant, just a good day after all the stress in the last weeks doing all assignments and presentations.

Next week we hopefully finish our inverted pendulum in Simulink and then the exams are close. :D

-

Well... In over 15 years of programming I've had a lot of PHP / HTML projects where I asked myself: What psychopath could have written this?

(PHP haters: Just go trolling somewhere else...)

I've now "inherited" a project which had been running for ~15 years. The code base looked solid to me... (Article system for ERP, huge company / branches system, lot of other modules for internal use... All in all: Not small.)

The original goal was to port to PHP 7 and to give it a fresh layout. Seemed doable...

The first days passed by - porting to an asset system, cleaning up the base system (login / logout / session & cookies... you know the drill).

And that was where it all went haywire.

I really have no clue how someone could have been so ignorant to not even think twice before setting cookies or doing other "header related" stuff without at least checking the result codes...

Basically the authentication / permission system was fully fucked up. It relied on redirecting the user via header modification to the login page with an error set in a GET variable...

Uh boy. That ain't funny.

Ported to session flash messages, checked if headers were sent, hard exit otherwise - redirect.

But then I got to the first layers of the whole "OOP class" related shit...

It's basically "whack a mole".

Whoever wrote this was dumb and ignorant enough to build up a daisy chain of commands for fixing corner cases of corner cases of the regular command... If you don't understand what I mean, take the following example:

Permissions are based on group (accumulation of single permissions) and single permissions - to get all permissions from a user, you need to fetch both and build a unique array.

Well... The "names" for permissions are not unique. I'd never expected someone to be so stupid. Yes. You could have two permissions named "article_search" - while relying on uniqueness.

All in all, all permissions are fetched once for the lifetime of the script and stored to a cache...

To fix this corner case… There is another function that fetches the results from the cache and returns simply "one" of the rights (getting permission array).

In case you need to get the ID of the other (yes... two identifiers used in the project for permissions - name and ID (auto increment key))...

Let's write another function on top of the function on top of the function.
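For comparison, a minimal sketch of how the merge could look when it is keyed on the one thing that actually is unique, the ID. This is Python rather than the project's PHP, and the `Permission` type and both function names are hypothetical, not the project's real code.

```python
from itertools import chain
from typing import Dict, Iterable, List, NamedTuple


class Permission(NamedTuple):
    id: int    # auto-increment key, the only truly unique identifier
    name: str  # human-readable label, NOT guaranteed to be unique


def effective_permissions(
    group_perms: Iterable[Permission],
    single_perms: Iterable[Permission],
) -> Dict[int, Permission]:
    """Merge group and single permissions into one mapping keyed by ID."""
    merged: Dict[int, Permission] = {}
    for perm in chain(group_perms, single_perms):
        merged[perm.id] = perm  # the same ID seen twice collapses to one entry
    return merged


def ids_for_name(perms: Dict[int, Permission], name: str) -> List[int]:
    """A name may map to several IDs, so return all of them, not 'one'."""
    return sorted(p.id for p in perms.values() if p.name == name)


group = [Permission(1, "article_search"), Permission(2, "article_edit")]
single = [Permission(2, "article_edit"), Permission(7, "article_search")]
merged = effective_permissions(group, single)
print(sorted(merged), ids_for_name(merged, "article_search"))
```

One lookup table and one name-to-IDs helper would cover both cases that currently need a function on top of a function on top of a function.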

My brain is seriously in deep fried mode.

Untangling this mess is basically like getting pumped up with pain killers and trying to solve logic riddles - it just doesn't work....

So... From redesigning and porting to PHP 7 I'm basically rewriting the whole base system to MVC, porting and touching every script, untangling this dumb shit of "functions" / "OOP" [or whatever you call this garbage] and then hoping everything works...

A huge thanks to AURA. http://auraphp.com/

It's incredibly useful in this case, as it has no dependencies and makes it very easy to get a solid ground without writing a whole framework by myself.

Amen.

-

I use the URL box a lot to google things. This is fine, until I need to google a namespaced function, or something similar. I'll try to just google array.splice and it tries to take me to

http://www.array.splice/

I just stare at the connection error for a second, realizing that I deserve what I got. Then I add @google or JavaScript before it and proceed with my day.

The minor annoyances of being a dev.

-

>Download a mod from Nexusmods

>2,1GB

>200kbps connection

>gets to 90% after 3 hours

>fails with http 416 error

>???

>Check file integrity

>Fails horrendously.

>fml.

-

Hi lil puppies what's your problem?

*proxy vomits*

Have you eaten something wrong....

*proxy happily eats requests and answers correctly*

Hm... Seems like you are...

*proxy vomits dozens of requests at once*

... Not okay.

Ok.... What did you get fed, you lil hellspawn.

TLS handshake error.

Thousands. Of. TLS. Handshake. Errors.

*checking autonomous system information*

Yeah... Requests come from same IP or AS. Someone is actively bombing TLS requests on the TLS terminator.

Wrong / outdated TLS requests.

Let's block the IP addresses....

*Pats HAProxy on the head*

*Gets more vomit as a thank you no sir*

I've now added a list of roughly 320 IP addresses in 4 h to an actively running HAProxy in INet as some Chinese fuckers seemingly find it funny to DDoS with TLS 1.0... or Invalid HTTP Requests... Or Upgrade Headers...

Seriously. I want a fucking weekend you bastards. Shove your communism up your arse if you wanna have some illegal fun. ;)

-

About browsers and whole SSL CERT thing...

Most likely everyone here has noticed that an https site with a broken certificate will throw these big red warnings in your face, with so much wording like "ITS NOT SECUREEEE" or "ITS HACKEDDD", almost like it was written by a passionate fanatic.

But when you are on plaintext http, the browser's reaction is like ¯\_(ツ)_/¯

Even if you have a plaintext page with a password field, Chromium for example will only put a small little red thingy there that almost no one notices.

I believe that a broken cert with some error like an invalid date is MORE secure than a plaintext password, yet there is still this hypocrisy with browsers...

I don't say that a broken SSL cert is good or something, I'm just pointing out the contrast of "broken" https vs plain http.... One looks to a casual Joe like the end of the world is coming and the second is barely noticeable. Da fuck?

I disagree with this approach.

-

Just found this HTTP response.

Status Code:

200 - OK

Body:

{
  status: "success",
  response: {
    status: "error",
  }
}

-

I read this in stackoverflow today:

Welcome to every C/C++ programmers bestest friend: Undefined Behavior.

There is a lot that is not specified by the language standard, for a variety of reasons. This is one of them.

In general, whenever you encounter undefined behavior, anything might happen. The application may crash, it may freeze, it may eject your CD-ROM drive or make demons come out of your nose. It may format your harddrive or email all your porn to your grandmother.

source:

http://stackoverflow.com/questions/...

-

I miss the good times when the web was lightweight and efficient.

I miss the times when essential website content was immediately delivered as HTML through the first HTTP request.

I miss the times when I could open a twitter URL and have the tweet text appear on screen in two seconds rather than a useless splash screen followed by some loading spinners.

I miss the times when I could open a YouTube watch page and see the title and description on screen in two seconds rather than in ten.

I miss the times when YouTube comments were readily loaded rather than only starting to load when I scroll down.

JavaScript was lightweight and used for its intended purpose, to enhance the experience by loading content at the page bottom and by allowing interaction such as posting comments without having to reload the entire page, for example.

Now pretty much all popular websites are bloated with heavy JavaScript. Your browser needs to walk through millions of bytes of JavaScript code just to show a tweet worth 200 bytes of text.

The watch page of YouTube (known as "polymer", used since 2017) loads more than eight megabytes of JavaScript last time I checked. In 2012, it was one to two hundred kilobytes of HTML and at most a few hundred kilobytes of JavaScript, mostly for the HTML5 player.

And if one little error dares to occur on a JavaScript-based page, you get a blank page of nothingness.

Sure, computers are more powerful than they used to be. But that does not mean we should deliberately make our new software and websites slower and more bloated.

"Wirth's law is an adage on computer performance which states that software is getting slower more rapidly than hardware is becoming faster."

Source: https://en.wikipedia.org/wiki/...

A presentation by Jake Archibald from 2015, but more valid than ever: https://youtube.com/watch/...

-

Boss: "So I'm taking the next week off. In the meantime, I added some stuff for you to do on Gitlab, we'd need you to pull this Docker image, run it, set up the minimal requirements and play with it until you understand what it does."

Me: "K boss, sounds fun!" (no irony here)

First day: Unable to login to the remote repository. Also, I was given a dude's name to contact if I had troubles, the dude didn't answer his email.

2nd day: The dude answered! Also, I realized that I couldn't reach the repository because the ISP I work for blocks everything on specific ports, and the url I had to reach was ":5443". Yay. However, I still can't login to the repo nor pull the image, the connection gets closed.

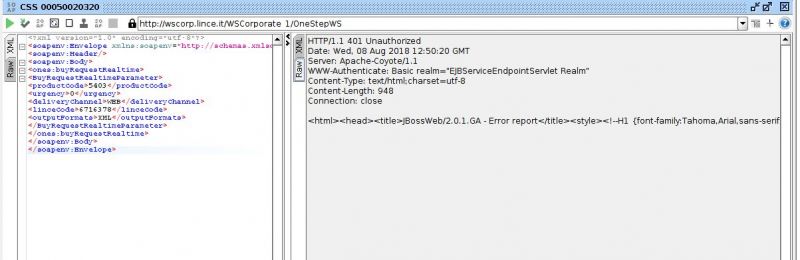

3rd day (today): A colleague suggested that I get off the ISP's network and use my 4G or something. And it worked! Finally!! Now all I need to do is to set that token they gave me, set a first user, a first password and... get a 400 HTTP response. Fuck. FUCK. FUUUUUUUUUUUUUUUUUUUCK!!!

These fuckers display a 401 error, while returning a 400 error in the console log!! And the error says what? "Request failed with status code 401" YES THANK YOU, THIS IS SO HELPFUL! Like fuck yea, I know exactly how to fix this, except that I don't because y'all fuckers don't give any detail on what could be the problem!

4th day (tomorrow): I'm gonna barbecue these sons of a bitch

(bottom note: the dude that answered is actually really cool, I won't barbecue him)

-

"200 Internal Server Error"

Yep, I did that. Because the lousy crapheads I work with were too lazy to handle any other HTTP status so anything else breaks the whole thing. And it's a pain to roll out another release of their part of the backend so "this isn't a priority". Also, they don't feel the need to check the JSON body of the response for the "status":"ok"/"fail" because what could ever go wrong, right? I effectively have no way of conveying to them that there was an error on this end of the API so they show a success toast on the frontend irrespective of what really happened.

-

For once I'm actually relieved Stackoverflow is down, when I opened this page I immediately started reading the code out of habit - for a few seconds I was like "holyshit what the fuck have you gotten yourself into this time!? I was googling syntax...".

The only reason I looked up in the end to realize I'm a few sandwiches short of a picnic is that after a few seconds the color scheme made my eyes bleed and I wanted to know wtf was going on with the font. I think I've had enough internet for one day...

HTTP/1.1 420 "Error: Programmer is too stoned to respond."

-

"It's a reminder that the underlying processes of computers are still made by humans."

https://save418.com

-

I made a functional parsing layer for an API that cleans http body json. The functions return insights about the received object and the result of the parse attempt. Then I wrote validation in the controller to determine if we will reject or accept. If we reject, parse and validation information is included on the error response so that the API consumer knows exactly why it was rejected. The code was super simple to read and maintain.

I demoed to the team and there was one hold out that couldn’t understand my decision to separate parse and validate. He decided to rewrite the two layers plus both the controller and service into one spaghetti layer. The team lead avoided conflict at all cost and told me that even though it was far worse code to “give him this”. We still struggle with the spaghetti code he wrote to this day.

When sugar-coating someone’s engineering inadequacies is more important than good engineering I think about quitting. He was literally the only one on the team that didn’t get it.2 -

> Hi xx team, we are getting 500 errors from your api. Here's http request and resp.

< hi, we are calling yy team's api http://yy.Com?/api/yyy and it does not respond in 3 minutes, so we treat this as an esockettimeout. Please reach out to yy team

> hi yy team, can you assist us?

< hi, please provide request, url and response you're getting from us

> yy, all this info is in this very mailtrail

< hi, I cannot see request nor response anywhere

> [screenshot w/ highlighted "http://yy.Com?/api/yyy" and "and it does not respond in 3 minutes". Please read through this email thread, it has all the info

< hi, I need request and response to investigate this issue. Esockettimeout is not our error

<wtf are you smoking???>7 -

FUCK ANGULAR!

FUCK ANGULAR-ERRORS!

ALL of them are literally like:

"Script error in script.js"

WHAT? WHERE? WHICH MODULE?WHICH APP? WHICH LINE?

OR EVEN BETTER THAN THAT:

"Error initializing module:'http://errors. angular. org/SOME-DUMB -AND-USELESS-THIRDPARTY-SITE-WITH-SOMETIMES-EMPTY-ERROR-DESCRIPTIONS-WHICH-MAKE-OFFLINE-WORK-IMPOSSIBLE-IF-NO-CONNECTION'"4 -

Any enterprise web service which ignores http standards. If you have a Fucking error return 500 not 200 with an error string you f--k
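A minimal sketch of that point in Python, under assumed names (`Result` and `to_http_response` are hypothetical, not part of any framework): derive the status code from the outcome instead of hard-coding 200 and hiding the failure in the body.

```python
import json
from dataclasses import dataclass
from http import HTTPStatus
from typing import Optional, Tuple


@dataclass
class Result:
    """Outcome of a (hypothetical) service call."""
    data: Optional[dict] = None
    error: Optional[str] = None      # human-readable description of the failure
    client_fault: bool = False       # True if the caller sent a bad request


def to_http_response(result: Result) -> Tuple[int, str]:
    """Map an outcome to (status code, JSON body) so the status line tells the truth."""
    if result.error is None:
        return HTTPStatus.OK.value, json.dumps({"data": result.data})
    if result.client_fault:
        status = HTTPStatus.BAD_REQUEST
    else:
        status = HTTPStatus.INTERNAL_SERVER_ERROR
    return status.value, json.dumps({"error": result.error})
```

With this shape a client can branch on the status code alone and only read the body for details.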

-

I just got pissed off when someone on my team asked me how to start a web server on port 8080 (needed for network port testing)

I check the port, get a 404. 🤔🤔🤔

Spent half an hour explaining to them about ports and how there's already a web server running... And that 404 is an HTTP status code.

I'm pretty sure she works on our webapp and maybe even REST APIs...

New grad but still.... Not recognizing a 404?

Maybe those pretty 404 pages these days make it harder to realize that it's a fucking web server error response....

-

In your opinion what is the best programming rant to ever grace the internet?

My submission === programming sucks:

http://stilldinking.org/programming...

(small) excerpt: "You discover that one day, some idiot decided that since another idiot decided that 1/0 should equal infinity, they could just use that as a shorthand for “Infinity” when simplifying their code. Then a non-idiot rightly decided that this was idiotic, which is what the original idiot should have decided, but since he didn’t, the non-idiot decided to be a dick and make this a failing error in his new compiler. Then he decided he wasn’t going to tell anyone that this was an error, because he’s a dick, and now all your snowflakes are urine and you can’t even find the cat."7 -

Unicode support pl0x.

So I had a Windows account with AzureAD, and my real name has "ő" and "ó" in it, and software that did not support Unicode started flipping the fuck out.

I was initially going with junctioning every bullshit corrupted user folder name that showed up in the ENOENTs to my real user folder, but that didn't solve it for a couple of programs.

I was trying to share my drives with Docker, but the same shit occurred. No error message, it just didn't work. I ended up creating a new user account for Docker to share the drive with.

I was trying to use the Travis CLI to set up releases, etc., but it replaced the "ő" with "?". Y U DO THAT?! Common knowledge is that "?" and other special characters cannot be in entity names. SO WHY DO YOU REPLACE THE UNKNOWN CHARACTER IN A PATH WITH THAT? And it wasn't a character not found character either! It was just a straight question mark.

I ended up creating a new user account because I couldn't change the name of the current one because fuck AzureAD, and Windows just decided to FUCKING TRASH MY ACCOUNT. I went over to the new one, copied over some files from the old one, tried to go back to the old one to copy env variables, but I noticed that the account has been purged from the registry... At least the files haven't been deleted.

I ended up reinstalling Windows.

After all my frustration, I recommend all companies with a CLI to visit the following website: http://uplz.skiilaa.me/

Thanks.

-

What Grinds My Gears

I must have broken some taboo with my latest "Cracked" style post because Devrant unhelpfully keeps telling me simply "there was an error creating the rant."

So I'm forced to post it elsewhere. Enjoy.

http://freetexthost.com/nuoohuxw0i

-

If an http request can't perform the requested operation, should server send 500 error code? Or 200 with status and status message in response?

Isn't 500 used only for unhandled exceptions on server side?

-

I actually never felt the need to scream at a co-worker so let's talk about that time a co-worker screamed at me instead.

tl;dr : some asshole boss screamed and threatened me because someone else's project was shit and didn't work.

Context: I was in my third year of school internship (graded) and my experience is C, C++, C#, Python all in systems programming, no web.

I was working as an intern for a shit company that was selling a shit software to hospitals (though not medically critical, thank God) the only tech guy on site was the DBA (cool guy) the product was maintained by a single dev in VB from his house, the dude never showed up to work (you'll understand why) and an other intern who couldn't dev shit.

I was working with the DBA on a piece of software doing statistical analysis of DB exports, worked nice, no problems here if we forget the lack of specs or boundaries (except must work in ieShit).

The other intern was working on something else (don't ask me what it is) I just remember it was in GWT before the community revived it. His webapp was requesting a file from the company http server instead of having one of its Java servlets fetch it (both apps ran on the same server), which caused a lot of shit, especially CORS errors. That guy left (end of contract) and left his shit as is, boss asked me to deploy the app, I fiddled with it to see if it works and when I found out it doesn't, that asshole started screaming at me in front of every other employee present, threatening to burn me in the tech world and have me thrown out of my school for no goddamn reason other than the other dude's project not working.

After the screaming I leave and warn my school immediately.

I guess that's why the other dev never came to work.

I had three weeks of internship left, that I did from home and worked probably less than 2 hours a day so suck it asshole.

Still had a good grade because I was reviewed by the DBA and he was happy with the work I did.

It was only later that I realized that what he did qualifies as harassment (at least in France) and decided that this would never again happen without a response from my lawyer.

-

Oh my, our frontend (FE) dev is amazing! He never ceases surprising me :D

[FTR: I'm building backend (BE).]

Here's a message at Trello I found today:

-----------

Hey @netikras

responseBody e --> Whoops! Lost connection to http://test.application.com/chat/...**************

Do you return this error to FE as a string when FE loses connection to BE?

-----------

I mean.. come on buddy :D Use that gray mass of yours

-

Just started using the Dropbox API. Want to do a simple directory listing of my files. Sends HTTP GET request at https://api.dropboxapi.com/2/files/....

"Error in call to API function "files/list_folder": Your request's HTTP request method is "GET". This function only accepts the HTTP request method "POST"."

What. The. Fuck. Dropbox.

HTTP POST is for creating a new instance of a resource. HTTP GET is for reading. GET guarantees server state is not changed while POST does not. I want to fucking list a directory, not put stuff in it.

-

Hey guys, some of you might know this but for those who do not, there is an April Fools' HTTP error code which is 418, its description is 'I\'m a teapot' #TheMoreYouKnow

(felt the need to escape sorry not sorry)

-

In today's episode of kidding on SystemD, we have a surprise guest star appearance - Apache Foundation HTTPD server, or as we in the Debian ecosystem call it, the Apache webserver!

So, imagine a situation like this - It's Friday afternoon, you have just migrated a bunch of web domains under a new, up to date, system. Everything works just fine, until... You try to generate SSL certificates from Let's Encrypt.

Such a mundane task, done more than a thousand times already... Yet... No matter what you do, nothing works. Apache just returns an HTTP status code 403 - Forbidden.

Of course, what many folk would think of first when it came to a 403 error is - Ooooh, a permission issue somewhere in the directory structure!

So you check it... And re-check it to make sure... And even switch over to the user the webserver runs under, yet... You can access the challenge just fine, what the hell!

So you go deeper... And enable the most verbose level of logging apache is capable of - Trace8. That tells you... Not a whole lot more... Apparently, the webserver was unable to find file specified? But... It's right there, you can see it!

So you go another step deeper and start tracing the process' system calls to see exactly where it calls stat/lstat on the file, and you see that it... Calls lstat and... It... Returns -1? What the hell#2!

So, you compile a custom binary that calls lstat on the first argument given and prints out everything it returns... And... It works fine!

Until now, I chose to omit one important detail that might have given away the issue to the more knowledgeable right away. Our webservers have the URL /.well-known/acme-challenge/, used for ACME challenges, aliased somewhere else on the filesystem - To /tmp/challenges.

See the issue already?

Some *bleep* over at the Debian Package Maintainer group decided that Apache could save very sensitive data into /tmp, so, it would be for the best if they changed something that worked for decades, and enabled a SystemD service unit option "PrivateTmp" for the webserver, by default.

What it does is that a process started with this option enabled gets its own private view of /tmp: anything it reads from or writes to /tmp/* actually goes to a private /tmp/something/tmp/ directory, where something... Appeared as a completely random name, with the "apache2.service" glued at the end.

That was also the only reason why I managed to fix this issue - On the umpteenth time of checking the directory structure, I noticed a "systemd-private-foobarbas-apache2.service-cookie42" directory there... That contained nothing but a "tmp" directory with 777 as its permission, owned by the process' user and group.

Overriding that unit file option finally fixed the issue completely.

I have just one question - Why? Why change something that worked for decades? I understand that, in case you save something into /tmp, it may be read by 3rd parties or programs, but I am of the opinion that, if you did that, it's only and only your fault if you wrote sensitive data into the temporary directory.

And as far as I am aware, by default, Apache does not actually write anything even remotely sensitive into /tmp, so...

Why. WHY!

I wasted 4 hours of my life debugging this! Only to find out it's just another SystemD-enabled "feature" now!

And as much as I love kidding on SystemD, this time, I see it more as a fault of the package maintainers, because... I found no default apache2/httpd service file in the apache repo mirror... So...

-

I explained last week in great detail to a new team member of a dev team (yeah hire or fire part 2) why it is an extremely bad idea to do proactive error handling somewhere down in the stack...

Example

Controller -> Business/Application Logic -> Infrastructure Layer

(shortened)

Now in the infrastructure layer we have a cache that caches an http rest call to another service.

One should not implement retry or some other proactive error handling down in the cache / infra stack, instead propagate the error to the upper layer(s) like application / business logic.

Let them decide what's the course of action, so ...

1) no error is swallowed

2) no unintended side effects like latency spikes / hiccups due to retries or similar techniques happen

3) one can actually understand what the services do - behaviour should either be configured explicitly or passed down as a programmed choice from the upper layer... Not randomly implemented in some services.
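The three points above can be sketched roughly like this in Python. All names (`UpstreamError`, `CachedClient`, `load_profile`) are made up for illustration: the cache in the infrastructure layer neither retries nor swallows anything, and the retry count is an explicit choice of the application layer.

```python
class UpstreamError(Exception):
    """Raised when the remote REST call fails."""


class CachedClient:
    """Infrastructure layer: caches results, performs no retries, swallows nothing."""

    def __init__(self, fetch):
        self._fetch = fetch      # callable(key) -> value, may raise UpstreamError
        self._cache = {}

    def get(self, key):
        if key in self._cache:
            return self._cache[key]
        value = self._fetch(key)  # any UpstreamError propagates to the caller
        self._cache[key] = value
        return value


def load_profile(client, user_id, retries=0):
    """Application layer: the retry policy is decided here, explicitly."""
    last_error = None
    for _ in range(retries + 1):
        try:
            return client.get(user_id)
        except UpstreamError as exc:
            last_error = exc
    raise last_error
```

A caller that cannot afford the extra latency leaves `retries` at 0 and gets the error immediately; nobody further down the stack makes that decision for it.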

The explanation was long and I thought ... Well let's call the recruit like the Gremlin he is... Gizmo got the message.

Today Gizmo presented a new solution.

The solution was to log and swallow all exceptions and just return null everywhere.

Yay... Gizmo. You won the Oscar for bad choices TM.

Thx for not asking whether that brain fart made any sense and wasting 5 days with implementing the worst of it all.

-

Today in Cursed Java error messages, this beauty: `java.net.MalformedURLException: no protocol: "http://knowledgebase-api.development.svc.cluster.local/..."`
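A guess at what is going on, assuming the double quotes shown in the message are literally part of the string handed to the URL parser (say, a quoted value read from a config file): a parser that expects the scheme at the very start of the text will not find one if the text starts with `"`. The same effect can be reproduced with Python's `urllib.parse`; the URL in the usage note is a made-up placeholder, and `scheme_of` and `strip_wrapping_quotes` are hypothetical helpers.

```python
from urllib.parse import urlsplit


def scheme_of(raw: str) -> str:
    """Return the URL scheme, or '' if the parser cannot find one."""
    return urlsplit(raw).scheme


def strip_wrapping_quotes(raw: str) -> str:
    """Remove one pair of surrounding double quotes, e.g. from a quoted config value."""
    if len(raw) >= 2 and raw[0] == '"' and raw[-1] == '"':
        return raw[1:-1]
    return raw
```

For a value such as `'"http://example.invalid/path"'`, `scheme_of` finds no scheme until `strip_wrapping_quotes` has been applied.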

Yes, no protocol. You read that right. There is in fact a protocol there.

-

I used to be a sysadmin and to some extent I still am. But I absolutely fucking hated the software I had to work with, despite server software having a focus on stability and rigid testing instead of new features *cough* bugs.

After ranting about the "do I really have to do everything myself?!" for long enough, I went ahead and did it. Problem is, the list of stuff to do is years upon years long. Off the top of my head, there's this Android application called DAVx5. It's a CalDAV / CardDAV client. Both of those are extensions to WebDAV which in turn is an extension of HTTP. Should be simple enough. Should be! I paid for that godforsaken piece of software, but don't you dare to delete a calendar entry. Don't you dare to update it in one place and expect it to push that change to another device. And despite "server errors" (the client is fucked, face it you piece of trash app!), just keep on trying, trying and trying some more. Error handling be damned! Notifications be damned! One week that piece of shit lasted for, on 2 Android phones. The Radicale server, that's still running. Both phones however are now out of sync and both of them are complaining about "400 I fucked up my request".

Now that is just a simple example. CalDAV and CardDAV are not complicated protocols. In fact you'd be surprised how easy most protocols are. SMTP email? That's 4 commands and spammers still fuck it up. HTTP GET? That's just 1 command. You may have to do it a few times over to request all the JavaScript shit, but still. None of this is hard. Why do people still keep fucking it up? Is reading a fucking RFC when you're implementing a goddamn protocol so damn hard? Correctness be damned, just like the memory? If you're one of those people, kill yourself.

So yeah. I started writing my own implementations out of pure spite. Because I hated the industry so fucking much. And surprisingly, my software does tend to be lightweight and usually reasonably stable. I wonder why! Maybe it's because I care. Maybe people should care more often about their trade, rather than those filthy 6 figures. There's a reason why you're being paid that much. Writing a steaming pile of dogshit shouldn't be one of them.6 -

Am I the only developer in existence who's ever dealt with Git on Windows? What a colossal train wreck.

1. Authentication. Since there is no ssh key/git url support on Windows, you have to retype your git credentials Every Stinking Time you push. I thought Git Credential Manager was supposed to save your credentials? And this was impossible over SSH (see below). The previous developer had used an http git URL with his username and password baked in for authentication. I thought that was a horrific idea so I eventually figured out how to use a Bitbucket App password.

2. Permissions errors

In order to commit and push updates, I have to run Git for Windows as Administrator.

3. No SSH for easy git access

Here's where I confess that this is a Windows Server machine running as some form of production. Please don't slaughter me! I am not the server admin.

So, I convinced the server guy to find and install some sort of ssh service for Windows just for the off times we have to make a hot fix in production. (Don't ask, but more common than it should be.)

Sadly, this ssh access is totally useless as the git colors are all messed up, the line wrap length and window size are just weird (seems about 60 characters wide by 25 lines tall) and worst of all I can't commit/push in git via ssh because Permissions. Extremely aggravating.

4. Git on Windows hangs open and locks the index file

Finally, we manage to have Git for Windows hang quite frequently and lock the git index file, meaning that we can't do anything in git (commit, push, pull) without manually quitting these processes from task manager, then browsing to the directory and deleting the .git/index.lock file.

Putting this all together, here's the process for a pull on this production server:

Launch a VNC session to the server. Close multiple popups from different services. Ask Windows to please not "restart to install updates". Launch git for Windows. Run a git pull. If the commits to be pulled involve deleting files, the pull will fail with a permissions error. Realize you forgot to launch as Administrator. Depending on how many files were deleted in the last update, you may need to quit the application and force close the process rather than answer "n" for every "would you like to try again?" file. Relaunch Git as Administrator. Run Git pull. Finally everything works.

At this point, I'd be grateful for any tips, appreciate any sympathy, and understand any hatred. Windows Server is bad. Git on Windows is bad.

-

FUCK YOU FUCKING AZURE FUCKING FUNCTIONS:

EITHER LIMIT MY NUMBER OF TCP CONNECTIONS (before violently crashing)

or

FORCE ME TO USE THE GODDAMN PORT-PISSING, BARELY-MULTITHREAD-USABLE, SETTINGS-IGNORING EXCUSE OF A PATHETIC BUILT-IN HTTPCLIENT ON FUCKING CRACK (Seriously .net people fix that shit).

But not both... both are not okay!

If your azure function just moderately uses outgoing Http requests you will inevitably be fucked up by the dreaded connection exhaustion error. ESPECIALLY if using consumption plans.

I Swear, every day i am that much closer to permanently swearing off everything cloud based in favor of VM's (OH BUT THEN YOU HAVE TO MAINTAIN THE VM's BOO HOO, I HAVE TO BABYSIT THE GODDAMN CLOUD INFRASTRUCTURE AS WELL AT LEAST I CAN LOG IN TO A VM TO FIX SHIT, fuck that noise)

I am in my happy place today. At least I'm having great success diving into minecraft modding on the side, that shit is FUN!

-

all documentation points to an Invalid auth token being code 400 (ignore the fact that this is a code in the JSON response and not HTTP)

Me: here iz credential. Plz send datas

API: haha fock off and die mate, then credentials you got there aren’t workin’

API: code 998 invalid auth token

Me: *speechless* so that’s why it took me longer than it did to find that error, because YOUR CODE WAS MISSING ALL MY CHECKS FOR CODE 400.
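One way to avoid this trap, sketched in Python under loud assumptions: the field names `code` and `message` and the success value `0` are placeholders, not taken from the API in question. The idea is to allow-list the codes known to mean success and treat everything else as an error, so an undocumented 998 cannot slip past a check written for 400.

```python
import json

SUCCESS_CODES = {0}   # assumption: take the real success code(s) from the API docs


class ApiError(Exception):
    def __init__(self, code, message):
        super().__init__(f"API error {code}: {message}")
        self.code = code


def parse_response(body: str) -> dict:
    """Return the payload, raising ApiError for every code that is not a known success."""
    payload = json.loads(body)
    code = payload.get("code")
    if code not in SUCCESS_CODES:
        raise ApiError(code, payload.get("message", "no message"))
    return payload
```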

Why can’t people design apis properly.

-

SO MAD. Hands are shaking after dealing with this awful API for too long. I just sent this to a contact at JP Morgan Chase.

-------------------

Hello [X],

1. I'm having absolutely no luck logging in to this account to check the Order Abstraction service settings. I was able to log in once earlier this morning, but ever since I've received this frustratingly vague "We are currently unable to complete your request" error message (attached). I even switched IP's via a VPN, and was able to get as far as entering the below Identification Code until I got the same message. Has this account been blocked? Password incorrect? What's the issue?

2. I've been researching the Order Abstraction API for hours as well, attempting to defuddle this gem of an API call response:

error=1&message=Authentication+failure....processing+stopped

NOWHERE in the documentation (last updated 14 months ago) is there any reference to this^^ error or any sort of standardized error-handling description whatsoever - unless you count the detailed error codes outlined for the Hosted Payment responses, which this Order Abstraction service completely ignores. Finally, the HTTP response status code from the Abstraction API is "200 OK", signaling that everything is fine and dandy, which is incorrect. The error message indicates there should be a 400-level status code response, such as 401 Unauthorized, 403 Forbidden or at least 400 Bad Request.

Frankly, I am extremely frustrated and tired of working with poorly documented, poorly designed and poorly maintained developer services which fail to follow basic methodology standardized decades ago. Error messages should be clear and descriptive, including HTTP status codes and a parseable response - preferably JSON or XML.

-----

This whole piece of garbage is junk. If you're big enough to own a bank, you're big enough to provide useful error messages to the developers kind enough to attempt to work with you.
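Until that gets fixed, a client can at least refuse to trust the "200 OK". A rough Python sketch, assuming the body is always form-encoded like the one quoted above and that `error=0` (or a missing `error` field) means success; that convention, `GatewayError` and `check_body` are my own placeholders, not from the documentation.

```python
from urllib.parse import parse_qs


class GatewayError(Exception):
    """The body reported a failure, whatever the HTTP status said."""


def check_body(status: int, body: str) -> dict:
    """Parse a form-encoded body and raise if it carries a non-zero `error` field."""
    fields = {key: values[0] for key, values in parse_qs(body).items()}
    if fields.get("error", "0") != "0":
        raise GatewayError(f"HTTP {status}, but body says: {fields.get('message', '')}")
    return fields
```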

-

Every time I am developing an API (from scratch, not when extending an old one) I try to return the 418 HTTP error code in places that aren't yet developed or mainly when something that shouldn't have happened actually did happen. (example: failed non-essential assert, yes python)
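Roughly what that looks like, as a framework-free Python sketch (`HANDLERS` and `dispatch` are invented for the example, and the route names are placeholders):

```python
TEAPOT = 418   # "I'm a teapot", from the April Fools' RFC 2324

# Routes that already have a real implementation.
HANDLERS = {
    "/users": lambda: (200, "[]"),
}


def dispatch(path: str):
    """Return (status, body); routes without a handler yet answer 418."""
    handler = HANDLERS.get(path)
    if handler is None:
        return TEAPOT, "I'm a teapot"
    return handler()
```

The standard status for an unimplemented feature is 501 Not Implemented; 418 is the joke variant described here.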

So it's always lighter on lungs seeing people running around with wtf.png faces when their browser says "I AM A TEAPOT".

-

The website I'm working on went down because of a 500 HTTP error and brought my company's work to a halt

I should not code in production

-

What the FUCK im fixing integrations on some dumbass's API. Biz wants this in prod on monday. It's fucking saturday. Anyway

Me: why did you give us a 200 even if its an error

Them: thats normal

Me: If it's an error it shouldnt be 200

Them: its a 200 because the api params are correct but differ in value so its not an http error but an api error

lmao

-

Oh BASIC night, the LEDs are brightly glinting;

It is the night of the dear GOSUB’s birth!

Long lay the world in sin and error printing,

Till you appeared and the RAM felt its worth.

Shiver of fear, line numbers do inspire,

For yonder breaks a mostly harmless GOTO.

Fall on your bits, O hear the Visual voices!

O BASIC divine, O BASIC where GOTO was born!

O BASIC, O Holy BASIC, O BASIC, you’re mine!

Some want to say, “GOTO is harmful always,”

But what of them, in their post-modern world.

We PRINT the truth, in the line-numbered goodness,

But Dijkstra appeared, and the faith, it was lost.

A thrill of hope, when .NET BASIC announces,

But Visual BASIC, what kind of thing are you?

Fall on your GUI, O see the old line numbers!

Behold BASICA, O BASIC when DOS was born!

O numbers, O lines, spaghetti divine!

Source: http://changelog.complete.org/archi...

-

With a recent HAProxy update on our reverse proxy VM I decided to enable http/2, disable TLS 1.0 and drop support for non forward-secrecy ciphers.

Tested our sites in Chrome and Firefox, all was well, went to bed.

Next morning a medium-critical havoc broke loose. Our ERP system couldn't create tickets in our ticket system anymore, the ticket system's Outlook AddIn refused to connect, the mobile app we use to access our anti-spam appliance wouldn't connect, although our internal blackboard app still connected over the same load balancer without any issues.

So i declared a 10min maintenance window and disabled HTTP/2, thinking that this was the culprit.

Nope. No dice.

Okay, i thought, enable TLS 1.0 again.

Suddenly the ticket system related stuff starts to work again.

So since both the ERP system and the AddIn run on .NET i dug through the .NET documentation and found out that for some fucking reason even in the newest .NET framework version (4.7.2) you have to explicitly enable TLS 1.1 and 1.2 or else you just get a 'socket reset' error. Why the fuck?!

Okay, now that i had the ticket system out of the way i enabled HTTP/2 and verified that everything still works.

It did, nice.

The anti-spam appliance app still did not work however, so i enabled one non-pfs cipher in the OpenSSL config and tested the app.

Behold, it worked.

I'm currently creating a ticket with them asking politely why the fuck their app has pfs-ciphers disabled.

And I thought disabling DEPRECATED tech wouldn't be an issue... Wrong...

-

The moment you realize that you have successfully beaten reality with your unit-tests...

There are unit-tests for ...

... the api returning a 408 Http StatusCode when an internal request times out.

... the react app taking this status-code and firing an action to display a specific error message for the user.

Every bit of code runs just fine.

Deploy this hell of an app on the server. Dandy Doodle.

Do a smoketest of the new feature.

FAIL!

Chrome starts to crumble during runtime. The api Request freezes.

Firefox takes the 408 api response but fails to interpret it in react app.

So I began to wonder, what the hell is going on.

Actually I recognized that I had the glorious idea to return a clientside error code in a serverside api response.

Glorious stupidity :/

Finally I fixed the whole thingy by returning a 504 (Gateway Timeout) instead of a 408 (Request Timeout, a client-side error code)
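For context: 408 tells the client that the server gave up waiting for the client's own request, and clients are allowed to simply repeat the request, which may be why the browsers behaved so oddly. A timeout of an internal call belongs in the 5xx range. A small Python sketch of that mapping; the `ValueError` convention is an assumption for the example, not anything from the app above.

```python
from http import HTTPStatus


def status_for_failure(exc: Exception) -> int:
    """Pick a status code for a failed request in an API that calls other services."""
    if isinstance(exc, TimeoutError):
        # The upstream call timed out: a server-side condition, so 5xx.
        return HTTPStatus.GATEWAY_TIMEOUT.value
    if isinstance(exc, ValueError):
        # Hypothetical convention: ValueError means the client's input was bad.
        return HTTPStatus.BAD_REQUEST.value
    return HTTPStatus.INTERNAL_SERVER_ERROR.value
```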

Cheers!

-

I can't talk to our management. It's not like they aren't present nor that I wouldn't find them. I'm in front of their office actually.

But apparently their room number of 505 suggests that they don't support a modern protocol version and I disabled my legacy support. This won't work. -

So I just spent 2 hours debugging a script that fetches data from an API. That's all it was supposed to do. HTTP GET some data.

It wasn't working and was giving a "parse error". Worked fine in the browser.

So it turns out the server was using HTTP 0.9 (the first documented HTTP version, defined in 1991) and wasn't sending any headers. And JS can't do no headers...

So yeah, I now have to write a TCP / HTTP 0.9 client in JS. -
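For a sense of how small an HTTP 0.9 client is, here is a rough sketch in Python rather than the JS the rant calls for. All function names are made up for illustration. It assumes the server behaves as HTTP 0.9 describes: the request is a bare `GET <path>` line, and the response is the raw body with no status line or headers, ending when the server closes the connection.

```python
import socket


def build_http09_request(path: str) -> bytes:
    """HTTP 0.9 request: method and path only, no version, no headers."""
    return f"GET {path}\r\n".encode("ascii")


def read_http09_response(sock: socket.socket) -> bytes:
    """Read the headerless body until the peer closes the connection."""
    chunks = []
    while True:
        chunk = sock.recv(4096)
        if not chunk:  # empty read means the server closed the socket
            break
        chunks.append(chunk)
    return b"".join(chunks)


def fetch_http09(host: str, port: int, path: str) -> bytes:
    """Open a plain TCP connection, send the request, return the raw body."""
    with socket.create_connection((host, port), timeout=10) as sock:
        sock.sendall(build_http09_request(path))
        return read_http09_response(sock)
```

Usage would look like `fetch_http09("legacy-box.example", 80, "/data")`, where the host name is a placeholder. Since there is no Content-Length, end-of-connection is the only end-of-body signal, so a server that keeps the socket open will make this block until the timeout.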

*laughing maniacally*

Okidoky you lil fucker where you've been hiding...

*streaming tcpdump via SSH to other box, feeding tshark with input filters*

Finally finding a request with an ominous dissector warning about headers...

Not finding anything with silversearcher / ag in the project...

*getting even more pissed 'cause I've been looking for lil fucker for 2 days*

*generating possible splits of the header name, piping to silversearcher*

*I/O looks like clusterfuck*

Come on, it's just a dozen gigabytes of text, don't choke just because you have to suck on all the sucking projects this company owns... Don't drown now, lil bukkake princess.

*half an hour later*

Oh... Interesting. Bukkake princess survived and even spilled the tea.

Someone was trying to be overly "eager" to avoid magic numbers...

They concatenated a header name out of several const vars which stem from a static class with like... 300? 400? vars of which I can make no fucking sense at all.

Class literally looks like the most braindamaged thing one could imagine.

And yes... Coming back to the network error I've been debugging for 2 days, as it occurs at erratic intervals and of course no one knew why...

One of the devs changed the const value of one of the variables to contain non-ASCII (UTF-8) characters. For "cleaner meaning".

Sometimes I just want to electrocute people ...

The reason this didn't pop up all the time was because the test system triggered one call with the header - whenever said dev pushed changes...

And yeah. Test failures can be ignored.

Why bother? Just continue meddling in shit.

I'm glad for the dev that I'm in home office... :@

TLDR: Dev changed const value without thinking, ignoring test failures, and I had the fun of debugging for 2 days a mysterious HAProxy failure due to HTTP header validation... -
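On the header story above: a guard at the point where the name gets concatenated would have turned two days of tcpdump into one stack trace. This is only a sketch with made-up names (`build_header_name` and its parts are hypothetical, nothing here is from the actual codebase). It relies on the HTTP spec defining a field name as a "token", which is a small set of ASCII characters, so anything non-ASCII is out.

```python
import re

# HTTP "token" characters (tchar): ASCII letters, digits and a few symbols.
_TOKEN = re.compile(r"[!#$%&'*+\-.^_`|~0-9A-Za-z]+")


def is_valid_header_name(name: str) -> bool:
    """True if `name` consists only of HTTP token characters."""
    return _TOKEN.fullmatch(name) is not None


def build_header_name(*parts: str) -> str:
    """Concatenate the parts and fail loudly if the result is not a token."""
    name = "".join(parts)
    if not is_valid_header_name(name):
        raise ValueError(f"invalid HTTP header name: {name!r}")
    return name


print(build_header_name("X-", "Trace", "-Id"))  # X-Trace-Id
```

With this in place, `build_header_name("X-", "Tracé", "-Id")` raises a `ValueError` in the code that built the name instead of surfacing later as a proxy rejecting the request.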

So there I am sitting in front of my laptop, and trying to npm i and I am getting all sorts of sha mismatch errors.

After a lot of debugging I conclude it is coming from the proxy, as it refuses to download and supplies the error page.

It says it's because I'm using the old proxy so they give me the new URL which I set up and it works.

All good until my password expires. I use our bash script to change it. NPM is buggered again, throwing the same errors.

Go to IT, tell them, and the saga begins.

After countless hours of looking at the log files we notice that the npm registry is set to http instead of the standard https (thanks, bash script), so our firewall blocks the download.

Sorted, finally.

Almost. NPM now works fine, but when I go and play around with node and axios, my requests time out. My instinct says it's the bloody proxy again.

So I hit up my trusted WIN Support guy and he confirms that the URL is not blocked. So he starts monitoring what's going on and it turns out that every time I run the node app, node casually ignores the system-wide proxy settings and tries to send the request as the PC rather than as my username.

Since the PCs don't have rights on the proxy, it is being refused...

Thank fuck for the corporate proxies, without them, I could just develop things not ever learning these quirks of node... -

Keeping up the tradition!

https://devrant.com/rants/15030806/...

Now powered by the awesome Claude 4!!!! The latest bleeding edge gem in the LLM trend that by the bold claims of big tech and various youtubers is stunning and will replace programmers/insane/godsmacking

This time, I decided to post because I was on the verge of a mental breakdown and I had a firsthand experience and a bit of free time, so instead of waiting for the rage to boil down, I just took the chance and so we have a fresh AI experience to prove my previous rants.

Problem:

I have an application that manages interactions through a Mediator pattern between Kafka, some HTTP listeners and other stuff (RabbitMQ, Redis (redis = another shit) etc etc)

Kafka handling was already done, I needed to exactly replicate for HTTP what was being done with Kafka. I said, heck, we have AI, it's good at bovine stupid work, why not let it copy the whole structure? They also have agents in Copilot in VS Code, and they rolled out Claude 4, so let's give it faith again.

Big error.

Besides the havoc of the fucking agentic AI not being able to fully copy an already existing pattern and scaffolding, it did half the shit wrong.

One of the most frustrating parts, since today I have an example, was this (summarized version of the prompt because I was very much more specific also giving the AI files and pointers to the code and explaining it):

PROMPT:"I have to return fake http transports, just copy what exists for kafka and adapt it to a new transport type called "Http", this is the structure {json with the class}"

AI: "I need to see the current ITransportsItemResponse interface first to understand the structure, then I'll modify ...... BLAH BLAH"

Of course I had to review the whole code.

PROMPT:"any valid reason why you dumb shithead didn't respect the IGetKafkaConnectionsItemResponse and generic IGetTransportsItemResponse format and you pulled out of your ass a full blown IGetHttpConnectionsItemResponse with extra properties when compared to IGetKafkaConnectionsItemResponse"

AI: "You're absolutely right, I fucked up. Looking at the IGetHttpConnectionsItemResponse interface, it has way more properties than the basic IGetTransportsItemResponse and IGetKafkaConnectionsItemResponse interfaces. BLAH BLAH wall of text reasoning"

Then it modified the api calls to suit the kafka objects and didn't update any class of the Http transports

PROMPT:"So you tought it was a good idea to leave the #sym:IGetHttpConnectionsItemResponse like it is respect to #sym:IGetKafkaConnectionsItemResponse ? Why? And answer the why don't apologize like a little wuss"

AI: "I left them different because they represent different domain concepts... BLAH BLAH EXPLANATIONS WALL OF TEXT"

Now the AI thought it was good to do this and that but....

PROMPT: "In your fucking careful analysis didn't you reallize the "items" object are a basic representation and in the fuckin same folder there is also the object with the full set of properties?"

Literally it missed objects used in the same folder for different scopes and modified stuff without caring.

AI: "You're absolutely right. I missed that there are TWO different response types BLA BLAH"

I won't continue, so this doesn't get any lengthier than it already is, but the point is:

AI IS RETARDED.

People say it will replace programmers.

People say agents are the future.

Sad reality: it's an overglorified broken ball of if/else that can't do shit well besides bovine work.

No amount of tutoring it with careful prompts, explaining the code and whatever else is going to fix it.

I've used GPT since GPT-3 and no model has been up to anything good, not even NLP. They also suck at the sole scope they were invented for.

I tried to ask GPT to make a curriculum based on another, I gave it the example curriculum and another one with the information.

I carefully explained that it must not be a copy of the other, that they are 2 different roles, and to use some imagination to make it look like it was written by 2 different people and to not copy stuff from the other.

Hope lost. It looked like the other curriculum was copied over and some words swapped, lol.

What a fucking joke, lmao, I am studying deep learning and machine learning to get on the bandwagon and make my professional profile more appealing, but I can already feel this is a waste of time. -

tldr: my classmates suck and I hate them

We study cs in school, and my classmates are super dumb.

Here is an example from today:

The task: build an http server in python, using sockets.

My classmates: write everything in the main function, use try-except for everything and every error possible, nothing works, nothing worked after a week.

Me: properly separated into different functions, used goddamn regex to get data from requests, used asyncio to make sure it can handle multiple requests at the same time, everything worked after 2 hours.

But, and here is the problem, after I finish they ask me a bunch of dumb, 'Just Google it dude' questions and they call me condescending because I get mad after the second hour of teaching them the same thing.

Once they told me: "you think you are a better programmer than us" and I just want to say this out loud: I AM A BETTER PROGRAMMER THAN THEM, THEY ARE THE PERFECT EXAMPLE OF HOW YOU SHOULDN'T DO ANYTHING AND I HATE THEM.

That's it, I'm done. I feel much better now.

PS: it's okay to suck at programming, but please stop thinking that everyone who's better than you is condescending. -
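For anyone curious what the "separate functions, regex, asyncio" version of that school task might look like, here is a rough sketch. It is not the poster's code; every name in it is invented for illustration. It uses asyncio's stream API (which sits on top of sockets) rather than raw socket calls, and it only looks at the request line.

```python
import asyncio
import re

# Request line, e.g. "GET /index.html HTTP/1.1"
REQUEST_LINE = re.compile(r"(?P<method>[A-Z]+) (?P<path>\S+) HTTP/(?P<version>\d\.\d)")


def parse_request_line(line: str):
    """Return (method, path, version), or None if the line is malformed."""
    match = REQUEST_LINE.fullmatch(line.strip())
    if match is None:
        return None
    return match.group("method"), match.group("path"), match.group("version")


async def handle_client(reader: asyncio.StreamReader,
                        writer: asyncio.StreamWriter) -> None:
    """Serve one connection: parse the request line, send a tiny response."""
    parsed = parse_request_line((await reader.readline()).decode("latin-1"))
    # Skip the remaining request headers up to the blank line (or EOF).
    while (await reader.readline()) not in (b"\r\n", b"\n", b""):
        pass
    if parsed is None:
        status, body = "400 Bad Request", b"bad request\n"
    else:
        status, body = "200 OK", f"you asked for {parsed[1]}\n".encode()
    head = (f"HTTP/1.1 {status}\r\n"
            f"Content-Length: {len(body)}\r\n"
            "Connection: close\r\n\r\n")
    writer.write(head.encode("ascii") + body)
    await writer.drain()
    writer.close()
    await writer.wait_closed()


async def main(host: str = "127.0.0.1", port: int = 8080) -> None:
    """Accept connections concurrently; each one gets its own handler task."""
    server = await asyncio.start_server(handle_client, host, port)
    async with server:
        await server.serve_forever()
```

Start it with `asyncio.run(main())` and point a browser or curl at port 8080. The host and port defaults are arbitrary. Keeping `parse_request_line` as a plain function means it can be tested without opening a single socket.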

So, today a developer from a web app consuming our services asked us to fix a 429 HTTP error code (Too Many Requests) they are seeing. The request came in an email with our managers cc'ed.

-

Had to extend the platform of a customer. For one part of my task (generating an encrypted string) there already was a class with encryption and decryption methods. This class is used in a gazillion places all over the code, so I thought it might be a good idea to re-use already existing stuff... Until I saw that the encryption method uses basic Java methods (all fine with that) wrapped in a try-catch block, 'cause the Java methods may throw, and returns err.getMessage() in the catch block...

Yeah...sure...makes sense... Instead of throwing an error or returning null just remove the possibility to handle the error.

So I decided to basically copy the methods and return null so I can work with that.

Created a merge request and was told by another dev of that company to remove my own implementation of the encryption method and use the already existing one. I argued, without success, that I'd have no way to prevent my code, which returns a URI containing the encrypted string, from generating something like "http://..../Encryption failed because of null".

So I had to use the already existing crappy code... -

I thought CNN.com must be either down altogether or hobbling under a DDoS when I got a 503 error from http://cnn.com/2010/CRIME/.... But the main page and a recent story worked fine, so the site clearly wasn't overloaded or down. The 503 was just a 404 in disguise! Webmasters, please call a spade a spade.

-

How the fuck is Firebase still a thing? I just spent hours debugging a random "not authorised" error, only to find out you need to enable a deprecated API even if you're only using the new (recommended) one. Do they tell you about it? Fuck no, they keep it disabled by default, they tell you to only use the new API, and they make it pretty much impossible to find the deprecated API you need to enable without a direct link.

And why the fuck does the official SDK send the image URL as { "imageUrl": "http://..." }, when the endpoint expects it to be { "image": "http://..." }? Why the fuck does the documentation mention both options interchangeably, while only the latter one actually works? -

Sentry is a great and also free error tracker. I have hosted it with Docker for our product painlessly.

I love it.

http://sentey.io -

{

"$schema": "http://json-schema.org/draft-04/...#",

"type": "object",

"id": "https://[URL_NAME]/forms/{id}/...#",

"properties": {

"title" : { "type": "string" },

"date" : { "type": "string" },

"content" : { "type": "string" },

"date_start": { "type": "string" },

"date_end" : { "type": "string" },

"status" : {

"type" : "string",

"enum" : ["1", "2", "3", "4", "5"]

}

},

required [

"title",

"date",

"content",

"date_start",

"date_end",

"status"

]

}

See if you can notice the error in this schema. Don't copy and paste it. I changed some formatting to obfuscate the real data naming, but the schema error is still in there

Just wasted 30 minutes staring at this. -

I've read the docs but my tired brain overlooked an important detail.

https://haproxy.com/documentation/...

"By default, HAProxy Enterprise will serve these pages only if it initiated the error itself. For example, it will return the page for a 503 Service Unavailable error if it can't reach any backend servers."