Search - "grep"

-

Me : "Hey, I can't find the comments.js file, do you know where it is stored?"

Co-worker : "Yep, look in the CSS folder"

Me : "OK, thanks!"

5 seconds later..

Me : "Wait, what?"

-

When you stare into git, git stares back.

It's fucking infinite.

Me 2 years ago:

"uh was it git fetch or git pull?"

Me 1 year ago:

"Look, I printed these 5 git commands on a laptop sticker, this is all I need for my workflow! branch, pull, commit, merge, push! Git is easy!"

Me now:

"Hold my beer, I'll just do git format-patch -k --stdout HEAD..feature -- script.js | git am -3 -k to steal that file from your branch, then git rebase master && git rebase -i HEAD~$(git rev-list --count master..HEAD) to clean up the commit messages, and a git branch --merged | grep -v "\*" | xargs -n 1 git branch -d to clean up the branches, oh lets see how many words you've added with git diff --word-diff=porcelain | grep -e '^+[^+]' | wc -w, hmm maybe I should alias some of this stuff..."

Do you have any git tricks/favorites which you use so often that you've aliased them?

-

If you understand -_-

Why programmers like UNIX:

unzip, strip, touch, finger, grep, mount, fsck, more, yes, fsck, fsck, fsck, umount, sleep 😋

-

Every Unix command eventually becomes an internet service.

Grep -> Google

rsync -> Dropbox

man -> stack overflow

cron -> ifttt

-

Storytime!

Manager: Hey fullstackchris, the maps widget on our app stopped working recently...

Dev: (Skeptical, little did he know) Sigh... probably didn't raise quota or something stupid... Logs on to google cloud console to check it out...

Google Dashboard: Your bill.... $5,197 (!!!!!!) Payment method declined (you think?!)

Dev: 😱 WTF!?!?!! (Calls managers) Uh, we have a HUGE problem: charges of $5000+ on our Google account. Did you guys remove the quota limits or not see any limit-reached warnings!?

Managers: Uh, we didn't even know that an API could cost money, besides, we never check that email account!

Dev: 🤦‍♂️ Yeah, obviously you get charged, especially when there have literally been millions of requests. Anyway, the bigger question is where or how our key got leaked. Someone started hammering one of the Google APIs with one of our keys. (Proceeds to hunt for usages of said API key in the codebase)

Dev: (sweating 😰) did I expose an API key somewhere? Man, I hope it's not my fault...

Terminal: grep results in, CMS codebase!

Dev: ah, what do we have here, app.config, seems fine.... wait, why did they expose it to a PUBLIC endpoint?!

Long story short:

The previous consulting goons put our Angular CMS JSON config on a publicly accessible endpoint.

WITH A GOOGLE MAPS API KEY.

JUST CHILLING IN PLAINTEXT.

Though I'm relieved it wasn't my fault, my faith in humanity is still somewhat diminished. 🤷‍♂️

Oh, and it's only Monday. 😎

Cheers!

-

More Unix commands are becoming web services. What else can you think of?

Grep -> Google

rsync -> Dropbox

man -> stack overflow

cron -> ifttt

-

Ah, these classic LINUX commands:

$ unzip; strip; touch; finger; grep; mount; fsck; more; yes; fsck; fsck; umount; clean; sleep

-

Christmas song for UNIX hackers:

better !pout !cry

better watchout

lpr why

santa claus < north pole > town

cat /etc/passwd > list

ncheck list

ncheck list

cat list | grep naughty > nogiftlist

cat list | grep nice > giftlist

santa claus < north pole > town

who | grep sleeping

who | grep awake

who | egrep 'bad|good'

for (goodness sake) {

be good

}

(By Frank Carey, AT&T Bell Laboratories, 1985)

-


So I ran grep to count the occurrences of the word "bitch" in a pretty large project of ours... eleven. ELEVEN.

-

Let me ask you something: why do most people prefer MS Word over a simple plain-text document when writing a manual? Use Markdown!

You can search and index it (grep, ack, etc.).

You don't waste time formatting it.

It's portable across operating systems.

You only need a simple text editor.

You can export it to other formats, like PDF, to print it!

You can use a version control system to version it.

Please stop using those other formats! Make everyone's life easier.

The same applies when sharing tables. Simple CSV files are enough most of the time.

Thank you!!?!

-

Let's start a list of all the important GNU tools, everybody.

I start:

- ls

- cd

- pwd

- cp

- (cd contdir && tar -cf - .) | (cd destdir && tar -xf -)

- man

- grep

- vim

- cat

- tac

-

-

It took forever to get SSH access to our office network computers from outside. Me and other coworkers were often told to "just use teamviewer", but we finally managed to get our way.

But bloody incompetents! There is a machine with SSH listening on port 22, user & root login enabled via password on the personal office computer.

"I CBA to set up a private key. It's useless anyway, who's ever gonna hack this computer? Don't be paranoid, a password is enough!"

A little more than 30 minutes later, I added the following to his .bashrc:

alias cat="eject -T && \cat"

alias cp="eject -T && \cp"

alias find="eject -T && \find"

alias grep="eject -T && \grep"

alias ls="eject -T && \ls"

alias mv="eject -T && \mv"

alias nano="eject -T && \nano"

alias rm="eject -T && \rm"

alias rsync="eject -T && \rsync"

alias ssh="eject -T && \ssh"

alias su="eject -T && \su"

alias sudo="eject -T && \sudo"

alias vboxmanage="eject -T && \vboxmanage"

alias vim="eject -T && \vim"

He's still trying to figure out what is happening.

-

http://mindprod.com/jgloss/...

Skill in writing unmaintainable code

Chapter : The art of naming variables and methods

- Buy a copy of a baby naming book and you’ll never be at a loss for variable names. Fred is a wonderful name and easy to type. If you’re looking for easy-to-type variable names, try adsf or aoeu

- By misspelling in some function and variable names and spelling it correctly in others (such as SetPintleOpening SetPintalClosing) we effectively negate the use of grep or IDE search techniques.

- Use acronyms to keep the code terse. Real men never define acronyms; they understand them genetically.

- Randomly capitalize the first letter of a syllable in the middle of a word. For example: ComputeRasterHistoGram().

- Use accented characters on variable names.

- Randomly intersperse two languages (human or computer). If your boss insists you use his language, tell him you can organise your thoughts better in your own language, or, if that does not work, allege linguistic discrimination and threaten to sue your employers for a vast sum.

and many others :D

-


Me: *kills process*

Linux: 3243 killed.

Me: "sudo netstat -ntlp | grep 3243"

Linux: 3243 running.

* hour later *

Me: *kills process with 3045974th method*

Linux: 3243 killed.

Me: "sudo netstat -ntlp | grep 3243"

Linux: 3243 running.

Me: "Are you absolutely FUCKING kidding me?! What is this fucking thing, the god damn grim reaper? I've done some SKETCHY fucking things at the terminal to kill this BASIC fucking server and it is still running!! WHAT THE FUCK ARE YOU?!"

Manager: *peeks in helpfully* "Did you try the 'kill' command?"

-

What Unix tool would you like to use in the real world? Like a superpower :)

I would choose grep.

- easy ad filtering with -f

- fast search in books

-

colleague: AWS is facing some network issue.

me: But I see you are able to SSH into EC2 instances.

colleague: Well, I am running my nodejs app on 8080 but can't access it over the Internet. Works fine on my laptop though.

me: ec2_prod ~# netstat | grep node

lolol -> 127.0.0.1:8080 node

Turns out it was listening on the localhost IP only. Worked fine on his laptop though.

-

Why devs like Linux/Unix :

unzip, strip, touch, finger, grep, mount, fsck, more, yes, fsck, fsck, fsck, umount, sleep

-


What a lazy fuck.

This so called full-stack developer doesn't know how to use mysql from command line. The only way he can do anything in the database is using phpMyAdmin or MySQL gui.

What? How do you even call yourself a developer when you don't know how to use basic command line tools?

The fucker wants me to find out why a particular feature is not working?

What the fuck are you being paid for? You stupid idiot.

"Can you please grep ... in the server?"

What? Why would I do that for you? How about you ssh the server yourself?

What a waste of time.

-

How could I only name one favorite dev tool? There are a *lot* I could not live without anymore.

# httpie

I have to talk to external API a lot and curl is painful to use. HTTPie is super human friendly and helps bootstrapping or testing calls to unknown endpoints.

https://httpie.org/

# jq

grep|sed|awk for JSON documents. So powerful, so handy. I have to google the specific syntax a lot, but when you have it working, it works like a charm.

https://stedolan.github.io/jq/

# ag-silversearcher

Finding strings in projects has never been easier. It's fast, it has meaningful defaults (no results from vendors and .git directories) and powerful options.

https://github.com/ggreer/...

# git

Lifesaver. Nough said.

And tweak your command line to show the current branch and git to have tab-completion.

# Jetbrains flavored IDE

No matter if the flavor is phpstorm, intellij, webstorm or pycharm, these IDE are really worth their money and have saved me so much time and keystrokes, it's totally awesome. It also has an amazing plugin ecosystem, I adore the symfony and vim-idea plugin.

# vim

Steep learning curve, but it really pays off in the end, and I still consider myself a novice user.

# vimium

Chrome plugin to browse the web with vi keybindings.

https://github.com/philc/vimium

# bash completion

Enable it. Tab-increase your productivity.

# Docker / docker-compose

Even if you aren't pushing Docker images to production, having a Dockerfile that re-creates the live server makes setup easy, and bootstrapping the development process has been a joy. Virtual machines are slow and take up a lot of space. If you can, use alpine-based images as a starting point, reuse the official ones on Docker Hub for common applications, and keep them simple.

# ...

I will post this now and then regret not naming all the tools I didn't mention.

-

My company decided to reinvent the wheel by writing its own queue system instead of using the existing message queue service.

And it uses plain PHP with exec() to run the workers.

Where do we store the jobs? We use MongoDB, which is already used in our existing projects. We can query the collection/table each time the queue service starts, execute the jobs, and let it exit if there are no jobs left. Don't worry, systemd will start the queue service again once it exits.

How to monitor the workers? Yep, we use ps and grep to check if the worker's PID still exists in the OS.

What about error stack traces? Nice question, we redirect the stdout and stderr when exec()-ing into a file.

What about timeout? We don't need it, let's just assume no one is going to write while(true).

It works flawlessly! /s

-

Fuck this Kibana shit and give me back my old grep (or even better: ripgrep). In 2008, I used to find shit in my fucking logfiles. Now I have an ELK stack that smells like liquid shit.

-

With the brand new Microsoft C++ compiler, what you see in the debugger is not always what you get...

-

So we found an interesting thing at work today...

Prod servers had 300GB+ in locked (deleted) files. Some containers marked them for deletion but we think the containers kept these deleted files around.

300 GB of ‘ghost’ space being used and `du` commands were not helping to find the issue.

This is probably a more common issue than I realize, as I’m on the newer side to Linux. But we got it figured out with:

`lsof / | grep deleted`

-
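Why `du` missed the space in the rant above: a file that is deleted while a process still holds it open keeps its disk blocks until the last descriptor is closed, but it no longer has a directory entry for `du` to walk. A minimal sketch of the same check without `lsof`, assuming Linux with `/proc` mounted; the function name `list_deleted_open_files` is made up for illustration:

```shell
# Print every file that process PID still holds open although it has been
# unlinked. Assumes Linux: /proc/PID/fd/N is a symlink whose target ends in
# " (deleted)" once the file has been removed.
list_deleted_open_files() {
  pid=$1
  for fd in /proc/"$pid"/fd/*; do
    target=$(readlink "$fd" 2>/dev/null) || continue
    case $target in
      *' (deleted)') printf '%s\n' "$target" ;;
    esac
  done
}
```

Called as `list_deleted_open_files 1234` for a suspect PID (usually as root for processes you do not own). The space comes back once the process closes the descriptor or exits.

-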

I'm going on vacation next week, and all I need to do before then is finish up my three tickets. Two of them are done save a code review comment that amounts to combining two migrations -- 30 seconds of work. The other amounts to some research, then including some new images and passing it off to QA.

I finish the migrations, and run the fast migration script -- should take 10 minutes. I come back half an hour later, and it's sitting there, frozen. Whatever; I'll kill it and start it again. Failure: database doesn't exist. whatever, `mysql` `create database misery;` rerun. Frozen. FINE. I'll do the proper, longer script. Recreate the db, run the script.... STILL GODDAMN FREEZING.

WHATEVER.

Research time.

I switch branches, follow the code, and look for any reference to the images, asset directory, anything. There are none. I analyze the data we're sending to the third party (Apple); no references there either, yet they appear on-device. I scour the code for references for hours; none except for one ref in google-specific code. I grep every file in the entire codebase for any reference (another half hour) and find only that one ref. I give up. It works, somehow, and the how doesn't matter. I can just replace the images and all should be well. If it isn't, it will be super obvious during QA.

So... I'll just bug product for the new images, add them, and push. No need to run specs if all that's changed is some assets. I ask the lead product goon, and .... Slack shits the bed. The outage lasts for two hours and change.

Meanwhile, I'm still trying to run db migrations. shit keeps hanging.

Slack eventually comes back, and ... Mr. Product is long gone. fine, it's late, and I can't blame him for leaving for the night. I'll just do it tomorrow.

I make a drink. and another.

hard horchata is amazing. Sheelin white chocolate is amazing. Rum and Kahlua and milk is kind of amazing too. I'm on an alcoholic milk kick; sue me.

I randomly decide to switch branches and start the migration script again, because why not? I'm not doing anything else anyway. And while I'm at it, I randomly check Slack again.

Hey, Product dude messaged me. He's totally confused as to what i want, and says "All I created was {exact thing i fucking asked for}". sfjaskfj. He asks for the current images so he can "noodle" on it and ofc realize that they're the same fucking things, and that all he needs to provide is the new "hero" banner. Just like I asked him for. whatever. I comply and send him the archive. he's offline for the night, and won't have the images "compiled" until tomorrow anyway. Back to drinking.

But before then, what about that migration I started? I check on it. it's fucking frozen. Because of course it fucking is.

I HAD FIFTEEN MINUTES OF FUCKING WORK TODAY, AND I WOULD BE DONE FOR NEARLY THREE FUCKING WEEKS.

UGH!

-

Want to make someone's life a misery? Here's how.

Don't base your tech stack on any prior knowledge or what's relevant to the problem.

Instead design it around all the latest trends and badges you want to put on your resume because they're frequent key words on job postings.

Once your data goes in, you'll never get it out again. At best you'll be teased with little crumbs of data but never the whole.

I know, here's a genius idea: instead of putting data into a normal database and then using a cache, let's put it all into the cache, and by the way it's a volatile cache.

Here's an idea. For something as simple as a single log, let's make it use a queue that goes into a queue that goes into another queue that goes into another queue, all of which are black boxes. No rhyme or reason, queues are all the rage.

Have you tried: Let's use a newfangled tangle, trust me it's safe, INSERT BIG NAME HERE uses it.

Finally it all gets flushed down into this subterranean cunt of a sewerage system and good luck getting it all out again. It's like hell except it's all shitty instead of all fiery.

All I want is to export one table, a simple log table with a few GB to CSV or heck whatever generic format it supports, that's it.

So I run the export table to file command and off it goes only less than a minute later for timeout commands to start piling up until it aborts. WTF. So then I set the most obvious timeout setting in the client, no change, then another timeout setting on the client, no change, then i try to put it in the client configuration file, no change, then I set the timeout on the export query, no change, then finally I bump the timeouts in the server config, no change, then I find someone has downloaded it from both tucows and apt, but they're using the tucows version so its real config is in /dev/database.xml (don't even ask). I increase that from seconds to a minute, it's still timing out after a minute.

In the end I have to make my own and this involves working out how to parse non-standard binary formatted data structures. It's the umpteenth time I have had to do this.

These aren't some no name solutions and it really terrifies me. All this is doing is taking some access logs, store them in one place then index by timestamp. These things are all meant to be blazing fast but grep is often faster. How the hell is such a trivial thing turned into a series of one nightmare after another? Things that should take a few minutes take days of screwing around. I don't have access logs any more because I can't access them anymore.

The terror of this isn't that it's so awful, it's that all the little kiddies doing all this jazz for the first time and using all these shit wipe buzzword driven approaches have no fucking clue it's not meant to be this difficult. I'm replacing entire tens of thousands to million line enterprise systems with a few hundred lines of code that's faster, more reliable and better in virtually every measurable way time and time again.

This is constant. It's not one offender, it's not one project, it's not one company, it's not one developer, it's the industry standard. It's all over open source software and all over dev shops. Everything is exponentially becoming more bloated and difficult than it needs to be. I'm seeing people pull up a hundred cloud instances for things that'll be happy at home with a few minutes to a week's optimisation efforts. Queries that are N*N and only take a few minutes to turn to LOG(N) but instead people renting out a fucking off huge ass SQL cluster instead that not only costs gobs of money but takes a ton of time maintaining and configuring which isn't going to be done right either.

I think most people are bullshitting when they say they have impostor syndrome but when the trend in technology is to make every fucking little trivial thing a thousand times more complex than it has to be I can see how they'd feel that way. There's so bloody much you need to do that you don't need to do these days that you either can't get anything done right or the smallest thing takes an age.

I have no idea why some people put up with some of these appliances. If you bought a dish washer that made washing dishes even harder than it was before you'd return it to the store.

Every time I see the terms enterprise, fast, big data, scalable, cloud or anything of the like I bang my head on the table. One of these days I'm going to lose my fucking tits.

-

Every Unix command eventually becomes an internet service.

Grep -> Google

rsync -> Dropbox

man -> stack overflow

cron -> ifttt

Anything more you can think of?

-

Does anyone else use:

cat /path/to/file | grep "blah"

Rather than:

grep "blah" /path/to/file

...when grepping? Or is it just me? Mainly asking because in my half-asleep state I just wrote `tail -f | grep "blah"` by mistake and wondered why it was taking way too long to read the file...

-
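Both forms in the rant above print the same lines; the pipe just adds an extra `cat` process. `tail -f` with no file argument is a different story: it reads standard input, so it sits waiting on the terminal instead of reading a file. A small sketch to check the equivalence on a throwaway temp file (the helper name `same_matches` is hypothetical):

```shell
# Succeed when `cat FILE | grep PATTERN` and `grep PATTERN FILE`
# produce identical output.
same_matches() {
  pattern=$1
  file=$2
  piped=$(cat "$file" | grep -- "$pattern")
  direct=$(grep -- "$pattern" "$file")
  [ "$piped" = "$direct" ]
}

demo=$(mktemp)
printf 'blah one\nnothing here\nblah two\n' > "$demo"
same_matches blah "$demo" && echo "same output"
rm -f "$demo"
```

The two forms do differ once several files are involved: `grep "blah" a.log b.log` prefixes each match with its file name, which the `cat` version cannot do.

-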

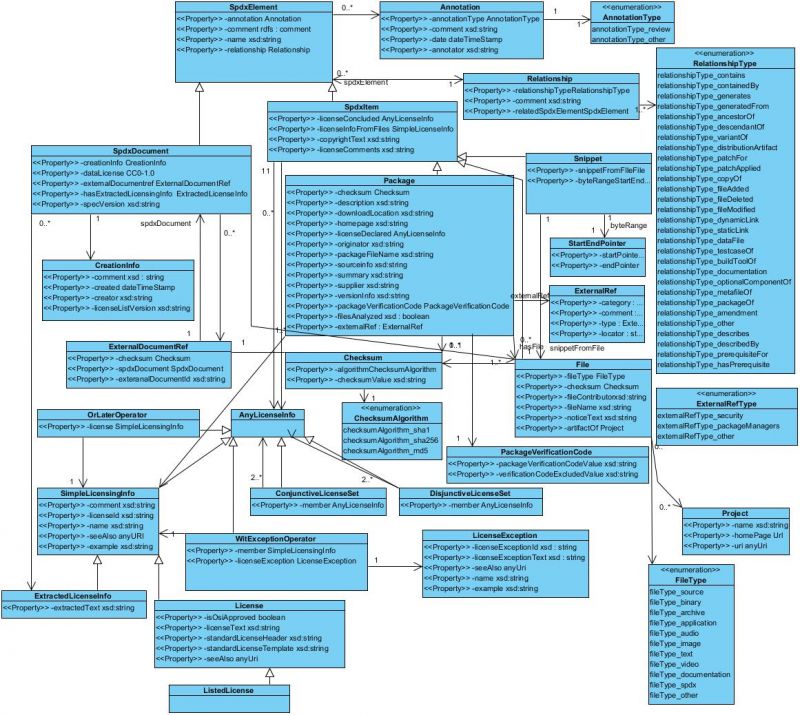

SPDX. Actually a cool idea, you slap one line of comment in your source files that gives the licence. Easy to understand at a glance, and grep friendly. Also no more "huh what exactly does this licence here say, is that MIT, BSD with or without shit or what".

But once you have something simple, you can bet some design committee tries to "improve" it and cover everything imaginable.

The result looks like this (see also screenshot): https://wiki.spdx.org/view/...

Holy shit. What was that about? Simplifying crap? Yeah sure that's totally what it looks like.

-

This evening, out of curiosity, I ran nmap against my Android phone, expecting (like every day) all ports to be closed.

But I see

9080/tcp open glrpc

Wtf??

Let me check something....

$ adb shell

$ cat /proc/net/tcp

And the first line gave me the UID of the app that was listening on port 9080 (2378 in hex)

So let me check which app that is

$ dumpsys package | grep -A1 "userId=*UID*"

And the answer is com.netflix.mediaclient

Wtf??

Why is Netflix running an HTTP server on 9080/tcp on an Android phone??

-
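The lookup in the rant above can be sketched as a small function. `/proc/net/tcp` stores ports in hexadecimal (9080 is `2378`), a state of `0A` means LISTEN, and the eighth whitespace-separated field is the owning UID. The function name `uid_for_port` is made up for illustration, and whether `awk` is available inside `adb shell` depends on the device:

```shell
# Print the UID(s) of sockets listening on a TCP port.
# usage: uid_for_port PORT [TABLE]   (TABLE defaults to /proc/net/tcp)
uid_for_port() {
  port=$1
  table=${2:-/proc/net/tcp}
  hex=$(printf '%04X' "$port")
  # awk fields: 2 = local address as HEXIP:HEXPORT, 4 = state, 8 = uid
  awk -v suffix=":$hex" '
    $4 == "0A" && substr($2, length($2) - 4) == suffix { print $8 }
  ' "$table"
}
```

The printed UID is what goes into the `dumpsys package | grep -A1 "userId=..."` step. IPv6 listeners live in `/proc/net/tcp6`, which can be passed as the second argument.

-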

Github 101 (many of these things pertain to other places, but Github is what I'll focus on)

- Even the best still get their shit closed - PRs, issues, whatever. It's a part of the process; learn from it and move on.

- Not every maintainer is nice. Not every maintainer wants X feature. Not every maintainer will give you the time of day. You will never change this, so don't take it personally.

- Asking questions is okay. The trackers aren't just for bug reports/feature requests/PRs. Some maintainers will point you toward StackOverflow but that's usually code for "I don't have time to help you", not "you did something wrong".

- If you open an issue (or ask a question) and it receives a response and then it's closed, don't be upset - that's just how that works. An open issue means something actionable can still happen. If your question has been answered or issue has been resolved, the issue being closed helps maintainers keep things un-cluttered. It's not a middle finger to the face.

- Further, on especially noisy or popular repositories, locking the issue might happen when it's closed. Again, while it might feel like it, it's not a middle finger. It just prevents certain types of wrongdoing from the less... courteous or common-sense-having users.

- Never assume anything about who you're talking to, ever. Even recently, I made this mistake when correcting someone about calling what I thought was "powerpc" just "power". I told them "hey, it's called powerpc by the way" and they (kindly) let me know it's "power" and why, and also that they're on the Power team. Needless to say, they had the authority in that situation. Some people aren't as nice, but the best way to avoid heated discussion is....

- ... don't assume malice. Often I've come across what I perceived to be a rude or pushy comment. Sometimes, it feels as though the person is demanding something. As a native English speaker, I naturally tried to read between the lines as English speakers love to tuck away hidden meanings and emotions into finely crafted sentences. However, in many cases, it turns out that the other person didn't speak English well enough at all and that the easiest and most accurate way for them to convey something was bluntly and directly in English (since, of course, that's the easiest way). Cultures differ, priorities differ, patience tolerances differ. We're all people after all - so don't assume someone is being mean or is trying to start a fight. Insinuating such might actually make things worse.

- Please, PLEASE, search issues first before you open a new one. Explaining why one of my packages will not be re-written as an ESM module is almost muscle memory at this point.

- If you put in the effort, so will I (as a maintainer). Oftentimes, when you're opening an issue on a repository, the owner hasn't looked at the code in a while. If you give them a lot of hints as to how to solve a problem or answer your question, you're going to make them super, duper happy. Provide stack traces, reproduction cases, links to the source code - even open a PR if you can. I can respond to issues and approve PRs from anywhere, but can't always investigate an issue on a computer as readily. This is especially true when filing bugs - if you don't help me solve it, it simply won't be solved.

- [warning: controversial] Emojis dilute your content. It's not often I see it, but sometimes I see someone use emojis every few words to "accent" the word before it. It's annoying, counterproductive, and makes you look like an idiot. It also makes me want to help you way less.

- Github's code search is awful. If you're really looking for something, clone (--depth=1) the repository into /tmp or something and [rip]grep it yourself. Believe me, it will save you time looking for things that clearly exist but don't show up in the search results (or is buried behind an ocean of test files).

- Thanking a maintainer goes a very long way in making connections, especially when you're interacting somewhat heavily with a repository. It almost never happens and having talked with several very famous OSSers about this in the past it really makes our week when it happens. If you ever feel as though you're being noisy or anxious about interacting with a repository, remember that ending your comment with a quick "btw thanks for a cool repo, it's really helpful" always sets things off on a Good Note.

- If you open an issue or a PR, don't close it if it doesn't receive attention. It's really annoying, causes ambiguity in licensing, and doesn't solve anything. It also makes you look overdramatic. OSS is by and large supported by peoples' free time. Life gets in the way a LOT, especially right now, so it's not unusual for an issue (or even a PR) to go untouched for a few weeks, months, or (in some cases) a year or so. If it's urgent, fork :)

I'll leave it at that. I hear about a lot of people too anxious to contribute or interact on Github, but it really isn't so bad!

-

curl http://devrant.io/api/rants/text |grep -vi "hack facebook"|grep -vi "tcp joke"|grep -vi "udp joke"|grep -vi "app idea"|grep -vi "2 types of people"

-

How a linux sysadmin has sex :

who | grep -i hot && grep -i female | date; cd ~; unzip; touch; strip; finger; mount; gasp; yes; uptime; umount; sleep;

-

Do you know why you should never use grep when working with XML?

$ grep packet/> final.pdml

> *shit, that takes too long, ctrl+c*

$ du final.pdml

> 0 final.pdml

That's why.

-
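What went wrong in the rant above: the shell took the unquoted `>` as an output redirection, so `final.pdml` was truncated to zero bytes before `grep` even started, and `grep` then sat waiting on standard input. Quoting the pattern avoids this. A minimal sketch on a throwaway file (the file contents and the function name `count_packets` are made up):

```shell
# Work in a scratch directory so nothing real can be clobbered.
workdir=$(mktemp -d)
printf '<packet/>\n<proto/>\n<packet/>\n' > "$workdir/final.pdml"

# Quoted: the shell hands "packet/>" to grep as the pattern,
# and the file is only ever opened for reading.
count_packets() {
  grep -c -- 'packet/>' "$1"
}

count_packets "$workdir/final.pdml"
```

With the three sample lines this prints 2, and the file still has its contents afterwards.

-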

WARNING MAJOR SPOILERS FOR AVENGERS INFINITY WAR

hostname > sudo -i

root > su groot

groot > whoami

groot

groot > sudo -i

root > su thanos

thanos > grep -r * / | perl ‘if (rand(1) == 1) { rm -rf $1 }’

-

Man, people have the weirdest fetishes for using the most unreadable acrobatic shell garbage you have ever seen.

Some StackOverflow answers are hilarious, like the question could be something like "how do I capture regex groups and put them in a variable array?".

The answer would be some multiline command using every goddamn character possible, no indentation, no spaces to make sense of the pieces.

Regex in unix is an unholy mess. You have sed (with its modes), awk, grep (and grep -P), egrep.

I'll take JS regex any time of the day.

And every time you need to do one simple single goddamn thing, it's a different broken-ass syntax.

The resulting command that you end up picking is something that you'll probably forget in the next hour.

I like a good challenge, but readability is important too.

Or maybe I have very rudimentary shell skills.

-

#!/bin/bash

while :; do

ps -ef | grep -iq '[s]ymantec' && for i in $(ps -ef | grep -i '[s]ymantec' | awk '{ print $2 }'); do sudo kill -9 "$i"; done

sleep 1

done

-

Every time you use OpenGL in a brand new project you have to go through the ceremonial blindfolded obstacle course that is getting the first damn triangle to show up. Is the shader code right? Did I forget to check an error on this buffer upload? Is my texture incomplete? Am I bad at matrix math? (Spoiler alert: usually yes) Did I not GL enable something? Is my context setup wrong? Did Nvidia release drivers that grep for my window title and refuse to display any geometry in it?

Oh. Needed to glViewport. OK.

-

hey... while you're working on this big project I've given you a stupidly short deadline on... you'll be ok going to make this tiny change on something else right? it'll only take you 10 minutes...

2 hours later still doing that *tiny* change...

-

Browsing various log files because I'm easily distracted. Found this masterpiece after running `grep "commit -m" ~/.zsh_history`

"init commit to GITHABS"

wtf, younger me?

-

This is a story of how I did a hard thing in bash:

I need to extract all files with extension .nco from a disk. I don't want to use the GUI (which only works on windows). And I don't want to install any new programs. NCO files are basically like zip files.

Problem 1: The file headers (or something) are broken and 7zip (7z) can only extract it if it has a .zip extension

Problem 2: The find command gives me paths relative to the disk, starting with . (a dot)

Solution: Use sed to delete dot. Use sed to convert to full path. Save to file. Load lines from file and for each one, cp to ~/Desktop/file.zip then && 7z e ~/Desktop/file.zip -oOutputDir (Extract file to OutputDir).

Problem 3: Most filenames contain a whitespace. cp doesn't work when given the path wrapped in quotes.

Patch: Use bash parameter substitution to change whitespace to \whitespace.

(Note: I found it easier to apply sed one after another than to put it all in one command)

Why the fuck would anyone compress 345 images into their own archive used by an uncommon windows-only paid back-up tool?

Little me (12 years old) knowing nothing about compression or backup or common software decided to use the already installed shitty program.

This is a big deal for me because it's really the first time I string so many cool commands to achieve desired results in bash (been using Ubuntu for half a year now). Funny thing is the images uncompressed are 4.7GB and the raw files are about 1.4GB so I would have been better off not doing anything at all.

Full command:

find -type f -name "*.nco" |

sed 's/^\.//' |

sed 's/.*/\/media\/mitiko\/2011-2014_1&/' > unescaped-paths.txt

cat unescaped-paths.txt | while read line; do echo "${line// /\\ }" >> escaped-paths.txt; done

rm unescaped-paths.txt

cat escaped-paths.txt | while read line; do (echo "$line" | grep -Eq '.*[^db].nco') && echo "$line" >> paths.txt; done

rm escaped-paths.txt

cat paths.txt | while read line; do cp $line ~/Desktop/file.zip && 7z e ~/Desktop/file.zip -oImages >/dev/null; done

-
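For comparison with the pipeline in the rant above, here is a sketch that skips the escaping step entirely: `find` hands the file names straight to a small inline script, and every expansion is quoted, so whitespace in names is not a problem. The function name `copy_nco_as_zip` and the choice to name each copy after the original file are assumptions (two archives with the same base name would overwrite each other), and the `7z` extraction step is left out.

```shell
# Copy every *.nco file under SRC into DEST with a .zip extension.
# usage: copy_nco_as_zip SRC DEST
copy_nco_as_zip() {
  src=$1
  dest=$2
  mkdir -p "$dest"
  # find passes the paths as arguments; no text parsing of file names.
  find "$src" -type f -name '*.nco' -exec sh -c '
    dest=$1
    shift
    for path in "$@"; do
      base=$(basename "$path" .nco)
      cp -- "$path" "$dest/$base.zip"
    done
  ' sh "$dest" {} +
}
```

Something like `copy_nco_as_zip /media/mitiko ~/Desktop/zips` would then leave one `.zip` per archive, ready for a loop over `7z e`.

-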

> grep -c -e '// BAD HACK:' importantproject/src/main.cc

4287

our most important piece of legacy C++ software, with about 44 thousand lines of code and next to no docs aside from inline comments.

-

Meeting with another dev team whose application needs to interface with ours. A few topics and Q&A sessions later, a dreadful feeling started to creep up on me. That moment when you grep'd the other team's architects and technical lead for any combination of common sense and grep returned no results. This is going to be a long day.

-

Hi, I am a Linux/Unix newbie.

Are there any other command-line tools like grep?

I want to take a look at them and decide whether they would be good for a small school project or not.

-

I sometimes feel stupid when my Ubuntu starts acting up on prod: purge this and that, fuck, apache can't start, systemctl that, ps ax | grep in the p#$#@*, damn, apt install nginx-full, good, mysql can't start either, OK, apt install mariadb-client, else percona && apt postgresql, thanks. God, no client noticed...

-

Cleaning up after yourself is so damn important. Even your git repos:

# always be pruning

git fetch -p

# delete your merged branches

git branch --merged | grep -v master | xargs -n 1 git branch -d

# purge remote branches that are merged

git branch -r --merged | grep -v master | sed 's/origin\///' | xargs -n 1 git push --delete origin

-

There are a few email addresses on my domain that I keep on receiving spam on, because I shared them on forums or whatever and crawlers picked it up.

I run Postfix for a mail server in a catch-all configuration. For whatever reason in this setup blacklisting email addresses doesn't work, and given Postfix' complexity I gave up after a few days. Instead I wrote a little bash script called "unspam" to log into the mail server, grep all the emails in the mail directory for those particular email addresses, and move whatever comes up to the .Junk directory.

On SSD it seems reasonably fast, and ZFS caching sure helps a lot too (although limited to 1GB memory max). It could've been a lot slower than it currently is. But I'm not exactly proud of myself for doing that. But hey it works!1 -
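A minimal sketch of what an "unspam" script like the one described above could look like once it is already running on the mail server (the SSH login is left out). The Maildir layout with new/ and cur/ plus a .Junk/cur folder, and the function name, are assumptions and not taken from the original script.

```shell
# Move every message under Maildir $1 that mentions one of the given
# addresses into the .Junk folder.
# Usage: unspam /path/to/Maildir addr1 [addr2 ...]
unspam() {
    maildir=$1
    shift
    mkdir -p "$maildir/.Junk/cur"
    for addr in "$@"; do
        # -r recurse, -l print matching file names only, -i ignore case,
        # -s stay quiet about unreadable files, -F treat the address literally.
        grep -rlisF -- "$addr" "$maildir/new" "$maildir/cur" 2>/dev/null |
        while IFS= read -r msg; do
            mv -- "$msg" "$maildir/.Junk/cur/"
        done
    done
}
```

Using -F matters here because the dots in an email address would otherwise be treated as regex wildcards.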

How seniority gets defined in a corporation is beyond me.

This guy is supposed to be Tier3, literally "advanced technical support", taking care of network boxes, which are more or less Linux servers. He's meant to be the most knowledgeable person on the topic, the one who steps in when Tier1 screws something up and BAU/Tier2 can't fix it.

In the past hour he:

- attempted to 'cd' to a file and wondered why he got an error

- has no idea how to spell 'md5sum'

- syntax for 'cp' command had to be spelled out to him letter by letter

- has only a vague idea how SSH key setup works (can do it only if somebody prepares the commands for him)

- was confused how to 'grep' a string from a logfile

This is not something new and fancy he had no time to learn yet. These things have been the same for the past 20-30 years. I used to feel sorry for US guys getting fired due to their work being outsourced to us but that is no longer the case. Our average IT college drop-out could handle maintenance better than some of these people.9 -

Functional tests are failing.

Expected: 7109

Got: 9000

Grep code-base for 7109. No findings. 0_o

Dig through test setup written by a drunk.

Find:

assert(actualPrice === 1800 * conversionRate)

Goddamnit. You shall not calculate your expected values in a test setup.1 -

When 32 GB RAM is no longer enough...

:~$ ps -eo pid,pcpu,pmem,vsz,rss,args | grep java | sort -nrk3 | head -1

9071 117 74.6 34740516 24338652 java -Xms2g -Xmx24g -Xss40m -DuploadDir=. -jar webapp-runner-8.0.33.4.jar -AconnectionTimeout=3600000 --port 9000 heaphero.war

7 -

To be honest, the majority of my work is just man and grep, and these two things already somehow make me better than the vast majority of my colleagues. Impostor syndrome doesn't think so though.7

-

Create DB connection file in every other place and writing password in all those connection files. 😒👿

Then using grep and sed like a pro to change passwords in one go. 🤷

Scratching their heads as to why the script says DB connection error.... After an hour or so, finding out that the password contains an '@' sign and was inside double quotes. 🤦 -

I'm in the process of developing a tool for a small community of gamers.

That tool will help people in mod making.

Currently you have to use notepad++ in order to modify .json files that contain unit properties.

I downloaded grep for win to check for patterns in those .json files to understand how they work

I ran a simple search and...

Avast decided to freeze my PC for 20 min to check 300 files because winGrep accessed them...

WHY THE FUCK DID YOU DECIDE TO SATURATE MY HDD IO YOU FUCKING PIECE OF SHIT? I HAVEN'T HAD ANY VIRUSES FOR 6 YEARS, YOU ARE USELESS. I WILL UNINSTALL YOU BECAUSE YOU ARE JUST WASTING MY RESOURCES AND MY TIME.

I can't even reboot my laptop because I would lose my code!

Fuck AV's

Fuck slow hdd's

Fuck inefficient programs

Fuck people who thought that installing a bunch of crap on win 10 is a good idea

Fuck people who will try to convince me to switch to linux

Fuck apple

Fuck M$

I love my C hashtag

I might switch to win10 ltsb6 -

1. Keep my job

2. Keep my side job

3. Revive blogging at least 1 post a month

4. Keep focus on what’s important and what are priorities

5. Finish my notes / diary application cause my text files / html pages are now taking up too much space and using cat/grep to search through them is painful ( it can also help with point 3 )

6. Maybe just maybe start writing prototype of table top rpg game scenario, I have a concept in my mind for a long time but it’s also connected to point 5 and 7 and 8

7. Spend twice as much time practicing drawing as I did this year

8. Read / listen to more than 1 book a month

I think that’s it from dev stuff1 -

Once upon a time I had a great idea.

Because I couldn't be bothered to do anything productive, I created a simple app in C# that would look into every .js file (from a game that uses them for the GUI/main menu) and search for "//todo" lines.

I did it mostly for kicks. I got that idea when I encountered one //todo in a file when I was trying to mod that game.

Yes I know grep exists: fuck you.

It would have taken me more time to learn that than to write that 20 line program...

The result? Over 30 lines of //todo with some brilliant pearls like:

>Temp workaround because X

>Workaround for race condition

>Clean that up

>Obsolete

When I return home I will post real quotes. They might be amusing to read...

The game is based on a custom C++ engine. HTML, CSS and JS are used for the main menu and some of the in-game graphical interface.

The most amusing thing is that this inefficient sack of chicken shit is powering one of the biggest (not playerbase-wise but unit, world and gameplay-wise) RTS games that I have ever played.

But still, in spite of a dead community, a GUI that's buggy as shit and other problems, I love this game and a lot of other people love it too. It is a great game when it works correctly.

To the interested: the JS portion uses jQuery and the Knockout lib.14 -

We are currently in home office because of the current corona situation. Since yesterday we have been experiencing internet problems in this region. So I constantly check ping to see how bad it is.

Let's see how long it takes for my girlfriend to rage:

while true; do if (( $(ping -c1 1.1.1.1 | grep "bytes from" | cut -d "=" -f4 | cut -d " " -f1) > 40 ));then espeak "fuck" ;fi;sleep 1;done3 -

FOR THE LOVE OF GOD STOP OVERUSING STYLES.XML IN ANDROID.

It is NOT A GOOD IDEA to create a new style for each and every layout and then RANDOMLY reuse those styles in RANDOM places.

Grep is working around the clock to solve this fucking hot mess you've made.

FFS -

who | grep -i blonde | date; cd ~; unzip; touch; strip; finger; mount; gasp; yes; uptime; umount; sleep

-

How the fuck do you actually tail log files?

Like i want the whole log. Not only from today, not *.log.1 and *.log, i want to run the *whole* log ever done, even the gzipped shit, through tail | grep.11 -
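One way to get the whole log from the rant above, as a minimal sketch. It assumes logrotate-style names (app.log, app.log.1, app.log.2.gz, ...) where a higher number means older, a sort that supports -V (GNU sort does), and file names without newlines; the function name is made up.

```shell
# Print every generation of a rotated log to stdout, oldest first.
# $1 is the path of the live log, e.g. /var/log/app.log (hypothetical).
whole_log() {
    printf '%s\n' "$1"* | sort -rV | while IFS= read -r f; do
        # gzip -cdf decompresses .gz files and copies plain files unchanged.
        gzip -cdf -- "$f"
    done
}
```

Then `whole_log /var/log/app.log | grep something` searches every generation in order, and a separate `tail -f` on the live file covers whatever arrives afterwards.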

Client be like:

Pls, could you give the new Postgres user the same perms as this one other user?

Me:

Uh... Sure.

Then I find out that, for whatever reason, all of their user accounts have disabled inheritance... So, wtf.

Postgres doesn't really allow you to *copy* perms of a role A to role B. You can only grant role A to role B, but for the perms of A to carry over, B has to have inheritance allowed... Which... It doesn't.

So... After a bit of manual GRANT bla ON DATABASE foo TO user, I ping back that it is done and breathe a sigh of relief.

Oooooonly... They ping back like -- Could you also copy the perms of A on all the existing objects in the schema to B???

Ugh. More work. Lets see... List all permissions in a schema and... Holy shit! That's thousands of tables and sequences, how tf am I ever gonna copy over all that???

Maybe I could... Disable the pager of psql, and pipe the list into a file, parse it by the magic of regex... And somehow generate a fuckload of GRANT statements? Uuuugh, but that'd kill so much time. Not to mention I'd need to find out what the individual permission letters in the output mean... And... Ugh, ye, no, too much work. Lets see if SO knows a solution!

And, surprise surprise, it did! The easiest, simplest to understand way, was to make a schema-only dump of the database, grep it for user A, substitute their name with B, and then input it back.

What I didn't expect is for the resulting filtered and altered grant list to be over 6800 LINES LONG. WHAT THE FUCK.

...And, shortly after I apply the insane number of grants... I get another ping. Turns out the customer's already figured out a way to grant all the necessary perms themselves, and I... No longer have to do anything :|

Joy. Utter, indescribable joy.

Is there any actual security reason for disabling inheritance in Postgres? (14.x) I'd think that if an account got compromised, it doesn't matter if it has the perms inherited or not, cuz you can just SET ROLE yourself to the granted role with the actual perms and go ham...3 -
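The dump, grep and substitute trick from the Postgres rant above can be sketched roughly like this. The database and role names are placeholders, the role names are assumed to be plain identifiers without quoting or regex metacharacters, and statements that don't start with GRANT (such as ALTER DEFAULT PRIVILEGES) are not covered.

```shell
# Read a schema-only dump on stdin, keep only the GRANT statements issued
# to role $1 and print them re-targeted at role $2.
copy_grants() {
    sed -nE "s/^(GRANT .* TO )${1}(( WITH GRANT OPTION)?;)\$/\\1${2}\\2/p"
}

# Possible usage with placeholder names (not run here):
#   pg_dump --schema-only mydb | copy_grants role_a role_b > grants.sql
#   psql mydb -f grants.sql
```

Writing the result to a file first leaves room to read through the few thousand lines before applying them.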

linux rant number 23094823094

Linux is a marvel of modern engineering. Well, at least in a sense that it somehow manages to work despite its design.

Basic commands like ls, grep, rm, cp (think busybox) do work predictably. Given that some of them are older than you and me combined (grep is 51 years old), it would've been weird if they didn't.

Yet, when comes the time to configure linux itself, there is no right way to do it — there are 5 or 6 wrong ones.

The wrong way number 1 to configure linux is to use predictable commands and their combinations. You know, cat + grep some config files, then awk to change them… If you do it, you will instantly break your system to the point that you'll have to back up your data, reinstall everything and put your data back.

All the other wrong ways are wrong because each of them will break your system in their own unique way. All of them kinda-sorta do what you want, at the expense of messing up some other things that have nothing to do with what you were trying to fix. The worst way to use them is combining one wrong way with some other one, like configuring xorg directly and then using ubuntu-specific userland config tools. This will instantly break your system too.

You'll have to google/chatgpt your way through historic quirks that are somehow still there in 2025. Remember: the worse the shell command looks, the more likely it is that it's the right way (or at least the least wrong way) to do things in linux.

Some minimal distros like alpine are a notable exception, in a way that they're more predictable, but they will become useless the moment you try to get some actual work done. I've used alpine as my desktop os for quite a while. I know what I'm talking about.

If you want so much as to install a browser, you'll have to use flatpak. But flatpak will only bail you out so many times. Your colleagues, and people that write tools that your colleagues use, are using macos, windows or ubuntu. You'll have to use whatever they're using, and if it uses glibc/is not in flatpak, well, tough cookies.

sudo apt install fuse breaks ubuntu instantly — it won't boot into graphical desktop anymore until you reinstall everything, including systemd, and do initramfs. Why does it do that in 2025? who knows.7 -

I just want to shoot myself. This happened to me today. I will replace the name of the person for privacy reasons. I joined this company a week ago.

my question:

"hey [co worker name].

How can I install a tool on my sandbox? I'm not in the sudoers file. Have you used "ag"? It's awesome for searching code and nicer than grep

https://github.com/ggreer/...

is actually available as a centos package in the repo.

the_silver_searcher.x86_64 : Super-fast text searching tool (ag)

but i don't have permission to install it

my co worker's response.

For that you would need first to create a presentation and show it to the team, explaining the benefits of that tool over what we have right now

That presentation you would show it to the team and from there we can do corrections and any other verifications in order to have a meeting with Jorge and DevOps to show them the presentation2 -

How to bring your zsh start-up time from 7s to 0.2s on macOS:

1. Don't call "brew info", piped to grep, piped to awk

2. Don't dynamically detect the current version of brew-installed packages

3. Don't call java_home

4. Actually don't do anything dynamically. Just symlink shit as they get updated

There you go. Don't be like me. Use the "brew --prefix" command and put its output in your .zshrc, instead of running it every time -

It's 2022 and mobile web browsers still lack basic export options.

Without root access, the bookmarks, session, history, and possibly saved pages are locked in. There is no way to create an external backup or search them using external tools such as grep.

Sure, it is possible to manually copy and paste individual bookmarks and tabs into a text file. However, obviously, that takes lots of annoying repetitive effort.

Exporting is a basic feature. One might want to clean up the bookmarks or start a new session, but have a snapshot of the previous state so anything needed in future can be retrieved from there.

Without the ability to export these things, it becomes difficult to find web resources one might need in future. Due to the abundance of new incoming Internet posts and videos, the existing ones tend to drown in the search results and become very difficult to find after some time. Or they might be taken down and one might end up spending time searching for something that does not exist anymore. It's better to find out immediately it is no longer available than a futile search.

----

Some mobile web browsers such as Chrome (to Google's credit) thankfully store saved pages as MHTML files into the common Download folder, where they can be backed up and moved elsewhere using a file manager or an external computer. However, other browsers like Kiwi browser and Samsung Internet incorrectly store saved pages into their respective locked directories inside "/data/". Without root access, those files are locked in there and can only be accessed through that one web browser for the lifespan of that one device.

For tabs, there are some services like Firefox Sync. However, in order to create a text file of the opened tabs, one needs an external computer and needs to create an account on the service. For something that is technically possible in one second directly on the phone. The service can also have outages or be discontinued. This is the danger of vendor lock-in: if something is no longer supported, it can lead to data loss.

For Chrome, there is a "remote debugging" feature on the developer tools of the desktop edition that is supposedly able to get a list of the tabs ( https://android.stackexchange.com/q... ). However, I tried it and it did not work. No connection could be established. And it should not be necessary in first place.7 -

I'm rewriting the wrapper I've been using for a couple years to connect to Lord of the Rings Online, a windows app that runs great in wine/dxvk, but has a pretty labyrinthine set of configs to pull down from various endpoints to craft the actual connection command. The replacement I'm writing uses proper XML parsing rather than the existing spaghetti-farm of sed/grep/awk/etc. I'm enjoying it quite a bit.1

-

Made this one-liner today:

hostname $(curl nsanamegenerator.com | grep body | sed -e 's/<.*;//g' | sed -e 's/<.*>//g')

and added it to my laptop's crontab...4 -

Is there an SSH app like Putty that can login to multiple servers at a time and execute the same command?

We have multiple servers and are trying to locate a request in the logs. The structure of all the machines is the same, so I want to just type each command like cd, grep, etc. one time and see the output from all of them.

Rather than logging in one by one.21 -

Spent last 2 days trying to get an upstream data file loaded. I've now concluded it's just corrupted during transfer beyond repair... But I got to practice lots of Linux commands trying to figure out what the issue was and fix it (xml parser was throwing some error about nulls originally)

vi, grep, head, tail, sed, tr, wc, nohup, gzip, gunzip, input output redirection -

Want to make yourself feel good as a programmer? Casually run this command on your friend's computer:

grep -r -l ";;" ~/

-

'cat file | grep foo' .... For some unknown reasons, too. It sends shivers down my spine all the time

-

Been working on trying to get JMdict (relatively comprehensive Japanese dictionary file) into a database so I can do some analysis on the data therein, and it's been a bit of a pain. The KANJIDIC XML file had me thinking it'd be fairly straightforward, but this thing uses just about every trick possible to complicate what one would think would be a straightforward dictionary file:

* Readings and Spellings/Kanji usage are done in a many-to-many manner, with the only thing tying them together being an arbitrary ID. Not everything is related, however, as there can be certain readings that only apply to specific spellings within the group and vice versa. In short, there's no way to really meaningfully establish a headword for a given entry.

* Definitions are buried within broader Sense groups, which clumsily attach metadata and have the same many-to-many (except when not) structure as the readings/spellings.

Suffice to say, this has made coming up with a logical database schema for it a bit more interesting than usual.

It's at least an improvement over the original format, however, which had a couple different ways of setting up the headword section and could splatter tagging information across any part of a given entry. Fine if you're going to grep the flat file, but annoying if you're looking for something more nuanced.

Was looking online last night to see if anyone had a PHP class written to handle entries and didn't turn anything up, but *did* find this amusing exchange from a while back where the creator basically said, "I like my idiosyncratic format and it works for me. Deal with it!": https://sci.lang.japan.narkive.com/...

Grateful to the creator for producing the dictionary I've used most in my studies over the years, but still...3 -

Good times: Migrating a Jenkins build pipeline patched together out of groovy, python, bash, awk, perl scripts and God knows what else since I have only scratched the surface so far, from Maven to Gradle while not breaking day-to-day builds, integrations and deployments of features, hotfixes and releases. I'm actually enjoying the challenge but it's taking forever due to several issues:

- Jenkins breaks/hangs randomly because it's Jenkins

- Gradle can't handle sets of version ranges but Maven can

- Maven can't handle Gradle style version ranges

- Gradle doesn't have a concept of parent poms, you need to write a plugin and apply stuff programmatically. But plug-ins being part of the buildscript{} don't fall under dependency management rules :clap:

- Meme incompatibility issues of BSD vs GNU versions of CLI tools like sed, grep etc1 -

I hate the feeling when the processes maxing out all my cpu cores are processes I thought were long since terminated. I guess even when I rm -f I don't really let go and still have the tar.gz in the back of my mind somewhere, and somehow zcat pipes those seemingly tidy archives all over my cwd at the worst possible times like some systemd transient timer that I can't recall the syntax to check... This is when the shell becomes unresponsive and I can't cd away, or even ps aux | grep -i 'the bad thoughts' to get their pid to figure out why this is happening again. Is it really time to hold down the power button? I'm so afraid of losing unsynced data, I'll wait a little longer...

-

I've been developing some software so an entire debian OS gets bootstrapped and installed with all the desired software with the help of puppetlabs software... I need to prepare a server that can handle virtualization and be fast at it. So all the goodies a decent server needs, the apt, caching, networking, firewall, everything checks out... I want to test kvm virtualization... Doesn't work. Wtf? Spend a decent amount of time figuring out what the hell is wrong... I finally decide to think 'what if my buddy accidentally gave me a bad mobo'...

$ grep -E '(vmx|svm)' /proc/cpuinfo

Nothing...

I feel so stupid to not check the mobo virtualization capabilities.3 -

Ended up doing an internship for my school (not really an internship, more along the lines of formal volunteering, but whatever) helping set up laptops for a statewide standardized assessment.

I made a program that logs the machine's identifying info (Serial, MAC addresses, etc), renames it, joins it to the school's Active Directory, and takes notes on machines, which get dumped into a csv file.

Made the classic rookie mistake of backing things up occasionally, but not often enough. Accidentally nuked the flash drive with the data on it, and spent a good while learning data recovery and how grep works.

Lesson Learned? Back up frequently and back up everything -

Ansible be like:

[DEPRECATION WARNING]: The sudo command line option has been deprecated in favor of the "become" command line arguments. Yadda yadda yadda yadda yadda...

Me:

# grep -rnw . -e 'sudo'

#

Then why the fuck do you keep yelling at my face?10 -

Typo3.

Especially when it comes to debugging third party (usually outsourced) plugins and implementations..

It's the vile wild west over there, you never know where something is defined, but more often than not it's some obscure TypoScript file.

Never have I been so grateful for xdebug & grep / awk combined with regular expressions.. -

Well Django, I think I've fucking HAD IT WITH YOUR STUPID FUCKING SHIT ALREADY.

./manage.py shell

In [1]: from inventory.models import ProductLine

In [2]: ProductLine

Out[2]: inventory.models.ProductLine

In [3]: ProductLine.objects

Out[3]: <django.db.models.manager.Manager at 0x7f03e23017b8>

SO WHY IN THE FUCKING FUCK DO I GET

"""

, in ProductLineViewSet

queryset = ProductLine.objects.all()

AttributeError: type object 'ProductLine' has no attribute 'objects'

"""

FUCK ME

I hope I just FORGET I am a programmer, wake up tomorrow free to go work at fucking McDonalds and die in mediocrity anyway. FINALLY get to catch up on fucking work and I have to diagnose this inane fucking django model problem that I don't fucking see anywhere on google, SO, etc right now

Best I can find are all like "You've probably defined something else called <model class name> in that file." But Grep and I sure as fucking tits can't find it!!!!!

Time to fucking make an exact copy of everything but change it to ProductLine2 and watch it all work perfectly fucking hell am I really this stupid or am I going to eventually find a bug after hours of GETTING FUCKING NOWHERE ON THE STUPIDEST FUCKING SHIT I'VE EVER SEEN FUCK ME7 -

After coming back to my desk I cannot unlock my screen. So again I have to go to my Mac or even Windows to google my shitty Linux problem. Nothing particular turns up. So I switch to another tty and rummage through the process list. Kill some java that took 11GB of RAM and Firefox that always keeps some zombies. Nothing.

Grep the processes: oh let's nuke "light-locker". Bingo.

The only downside of this brutal unlock: I cannot lock the screen again. So in any case another reboot? Wasn't this the standard repair method of that other OS that should not be named?3 -

#!/bin/bash

# An ideal work day

# Wake up naturally, keep sleeping until I won't wake up as a zombie

TIMETOWAKEUP=$(while ps -eo state,pid,cmd | grep "^Z"; do sleep 1; done)

# Work, between 9AM - 5PM, weekdays only!

TIMETOWORK=$(while [ $(date +%H) -gt 09 -a $(date +%H) -lt 17 -a $(date +%u) -le 5 ];

# Do cool work and get paid, every second.

do $COOLWORK && $GETPAID; sleep 1; done)

# Home

TIMETOCHILL=$(while $ATHOME;

# Do cool work, without getting paid, and spend money made from $TIMEATWORK

do $COOLWORK && $SPENDSPENDSPEND; sleep 28800; done)

$TIMETOWAKEUP; $TIMETOWORK; $TIMETOCHILL

# I don't get out much -

Scala is hard to learn (not saying that it sucks). I didn't even want to learn the language; I just wanted to use some functions for a hobby project, and a project I found on the internet had everything I needed, so I planned to translate it to Python. I pasted the functions I needed into an online Scala compiler aaaand error, it wouldn't compile, because the functions used some extra things that weren't defined in the same file. They seem to check for null values(?). I went looking through the project for where these "keywords" come from and couldn't find them, but after some grepping through the project files I found the "keyword" and was able to compile. Another weird thing about this language is that the code never uses a return keyword (the last expression is the result)

So yes I find this lang not that intuitive (for me at least)4 -

O great devs that know grep: I have a log that I took from a local company's router that got DoSed yesterday (they sell very nice sandwiches), and I want to know how I can pull only the IPs out of the log so that I can take action against the users (contacting the abuse desk of the ISP)10
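A minimal sketch for pulling the IPs out, assuming the router log is plain text with IPv4 addresses somewhere on each line (the real log format isn't shown, and the function name is made up). The pattern is deliberately loose and would also accept nonsense like 999.999.999.999.

```shell
# Print each IPv4-looking string found in log file $1 with its hit count,
# busiest address first.
top_ips() {
    # -o prints only the matched part of each line, -E enables {1,3} and ().
    grep -oE '([0-9]{1,3}\.){3}[0-9]{1,3}' "$1" | sort | uniq -c | sort -rn
}
```

If the lines also carry the router's own address, it will show up at the top of the list and needs to be filtered out by hand.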

-

Ok, can someone explain to me why the old-Unix overlords decided to use -v in 'grep -v' as the flag to invert the result?

It's not fucking intuitive.

Other normal utils will use -v as abbreviation of --verbose or --volume ...

I spent some time digging around this particular script, scratching my head over why it's not working.

🤔2 -

Found this in a shell script. Instead of just one regex, why not use grep and sed, even though you could have just done it all with sed!

IMAGE_TAG=`grep defproject project.clj | sed -e 's/^.* \"//' -e 's/\"//'`2 -
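For comparison with the IMAGE_TAG line above, a sed-only sketch. It assumes the defproject line looks like the usual `(defproject name "version"` with one quoted string on it; the function name is made up.

```shell
# On the line containing "defproject", keep only the text between the
# double quotes and print it; every other line is suppressed by -n.
image_tag() {
    sed -n '/defproject/s/^.* "\([^"]*\)".*$/\1/p' "$1"
}
```

With that, `IMAGE_TAG=$(image_tag project.clj)` does the job in one process instead of two.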

OK here's lang, very easy.

set lines, (split "\n", orc fname). ; ORC -- open, write, close

map lines, strip.

grep lines, filter blanks.

map lines, # lineproc:

- on line match, qr~^# \s* (?<name> [\a]+) \((?<type> [\a+])\) \s*:\s*~x.

· · * have type or default type.

· · * set dst, name or new type name.

- on line match, qr~^\- (?<name> .*)~x.

· · * dst->cur eq dst->[name] eq new list name.

- on line match qr~^\* (?<item> .*)~x.

· · * push dst->cur, item.

- else

· · * cat dst->cur, line or throw fuk.

I'm skipping over a couple edge cases (no dst/cur, I be throw fuk for brevity) but you get the gist of it maybe.

Anyway what's this for? Lists, like so:

```rol

# dst (type):

- attr:

· · * hi im item one in list

· · · still item one lmao.

· · * hi im item two in list.

```

That gives `dst { attr: [item_1, item_2] }`. There's another bit I'm omitting to make this recursive so as to allow for nested dicts, but nevermind that it's a tree you get it right.

So what it's lame. Yes. Let's smoke some crack now I can add preprocessor in subclass:

```

# dst (cracktype):

$:%fn args;>

(text)

$:/fn;>

```

That will call `fn text,args` to process text __before__ lineproc, `fn` is just callback from callback table in Nebraska maybe.

$:peso;> syntax is just so text can contain funstuff OK.

I like <fn args /> better, and $:this;> is just stuff no one ever writes so it's safe to use.

Want to reference object in text too, what? `{$obj [fn args]}` anywhere in text to make call, now can do database lookup so naming be important. Have import mechanism to fetch collections, can't bother showing.

Anyway what's the point I dunno, just copying and pasting from local library to pack entire app in single html file. Why? Can't remember; doesn't matter.

Also can convert to json but I prefer my own version of it.

Called jargon.

Same thing but no quotes just because so `obj {attr:[(value), (value)]}`.

Now eat baguettes.

Have a nice wallop.1 -

TypeScript! Why you default compiler option "pretty" to false!? Why would anyone want this as false? This is such an amazing feature disabled out of the box! GNARF!

I USED TO GREP FOR ERROR TO GET ERROR HIGHLIGHTING!

:/ -

Mood:

echo do I care? >> seeifIcare.txt; echo currentResponse : no >> seeifIcare.txt; grep -n currentResponse seeifIcare.txt

Output:

2:currentResponse : no -

Fuck you Linux! I thought user password validation would be a piece of cake, like bash one liner. How wrong could I be!

Yeah, it's already ugly to grep hash and salt from /etc/shadow, but I could accept that. But then give me a friggin' tool to generate the hash. And of course the distro I chose has the wrong makepswd, OpenSSL is too old to have the new SHA-512 built in, as it should be a minimal installation I don't want to use perl or python...

And the stupid crypto function that would do me the job is even included in glibc. So it's only one line of C-code to give me all I want, but there is no package that would provide me this dull binary? Instead I will have to compile it myself and then again remove the compiler to keep image small?5 -

During these interesting times it has certainly been a productive one for me. But after this fuckup I need to take a break. Also came to the realisation that I rely too much on Ctrl-r in the terminal. I just needed to find that one long weird rsync thingy that I use once a quarter...

:~$ history -c | grep rsync | grep...

I need a break. I royally fucked up now and i cannot be bothered right now to type that 25 lines of escaped backslashed one-liner rsync thing...3 -

Just found a few methods in the same file that do the same task, written by different devs just because they did not know a method already existed in the first place. How tough is it for them to do a grep on the file?

-

Just these little things that can drive you insane: TCP should guarantee that the order of packets is preserved, but somehow, through a splitting of the message, I get the files mangled. OK, might be our own fault, but then I just do a simple grep on the log file, and it won't display anything if I escape the f** dot.

Google it. No I didn't do it wrong, try different quotes. Nothing. Why then does it display the thing if I delete the dot?

Beginning to question my sanity. Grep just. has. to. work.

And that very moment the blinds of the window automatically go up, so the blazing sun blinds us, which as management told us, is not a bug but a feature, protection from freezing bla bla - and the control of the blinds gives me static shocks but refuses to shut them down again.. *sigh*

Just these little things. - Don't know, but I am convinced at the right time, a little mispunctuation or a glitch in a UI could drive a programmer mad. -

I was passed someone else's homework which included describing what certain Bash commands do. I've a fair bit of experience and I can't imagine why this one would be useful, can you think of any use for it?

(find -type d) | grep public | tr './p' ' '

-

> trying to kill Windows Update to let anything else use my HDD (as I re-enable it occasionally then forget to disable it before shutting down)

> Task Manager shows 2 instances of CMD, grep from temp, 4 instances of net and "Microsoft Windows Netcode Generator"

yeah i've gotten bit by something9 -

Okay, summary of previous episodes:

1. Worked out a simple syntax to convert markdown into hashes/dictionaries, which is useful for say writing the data in a readable format and then generating a structured representation from it, like say JSON.

2. Added a preprocessor so I could declare and insert variables in the text, and soon enough realized that this was kinda useful for writing code, not just data. I went a little crazy on it and wound up assembling a simple app from this, just a bunch of stuff I wanted to share with friends, all packed into a single output html file so they could just run it from the browser with no setup.

3. I figured I might as well go all the way and turn this into a full-blown RPG for shits and giggles. First step was testing if I could do some simple sprites with SVG to see how far I could realistically get in the graphics department.

Now, the big problem with the last point is that using Inkscape to convert spritesheets into SVG was a bit of trouble, mostly because I am not very good at Inkscape. But I'm just doing very basic pixel art, so my thought process was maybe I can do this myself -- have a small tool handle the spritesheet to SVG conversion. And well... I did just that ;>

# pixel-to-svg:

- Input path-to-image, size.

- grep non-transparent pixels.

- Group pixels into 'islands' when they are horizontally or vertically adjacent.

- For each island, convert each pixel into *four* points because blocks:

· * (px*2+0, py*2+0), (px*2+1, py*2+0), (px*2+1, py*2+1), (px*2+0, py*2+1).

· * Each of the four generated coordinates gets saved to a hash unique to that island, where {coord: index}.

- Now walk that quad-ified output, and for each point, determine whether it is a corner. This is very wordy, but actually quite simple:

· * If the point immediately above (px, py-1) or below (px, py+1) this point doesn't exist in the coord hash, then you know it's on either the top or bottom side. You can determine whether it is on the right (px+1, py) or left (px-1, py) side the same way.

· * A point is an outer corner if (top || bottom) && (left || right).

· * A point is an inner corner if it sits on no side at all, i.e. ! (top || bottom || left || right), AND there is at least _one_ empty diagonal (TR, TL, BR, BL) adjacent to it, eg: (px+1, py+1) is not in the coord hash.

· * We take note of which direction (top, left, bottom, right) every outer or inner corner has, and every other point is discarded. Yes.
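The steps above could be sketched in Python roughly like this. It is a minimal sketch, not the actual tool: the function names are made up, the input is assumed to already be a set of (x, y) non-transparent pixels (no image loading), and the inner-corner test assumes "not on any side" rather than merely "not an outer corner", since a plain edge point would otherwise qualify.

```python
from collections import deque


def find_islands(pixels):
    """Group pixels into islands of horizontally/vertically adjacent pixels."""
    remaining = set(pixels)
    islands = []
    while remaining:
        start = remaining.pop()
        island, queue = {start}, deque([start])
        while queue:
            x, y = queue.popleft()
            for n in ((x + 1, y), (x - 1, y), (x, y + 1), (x, y - 1)):
                if n in remaining:
                    remaining.remove(n)
                    island.add(n)
                    queue.append(n)
        islands.append(island)
    return islands


def quadify(island):
    """Turn each pixel into four points; returns the {coord: index} hash."""
    coords = {}
    for px, py in sorted(island):
        for q in ((px * 2, py * 2), (px * 2 + 1, py * 2),
                  (px * 2 + 1, py * 2 + 1), (px * 2, py * 2 + 1)):
            coords[q] = len(coords)
    return coords


def classify(coords):
    """Return {coord: 'outer' | 'inner'}; non-corner points are dropped."""
    corners = {}
    for x, y in coords:
        top = (x, y - 1) not in coords
        bottom = (x, y + 1) not in coords
        left = (x - 1, y) not in coords
        right = (x + 1, y) not in coords
        if (top or bottom) and (left or right):
            corners[(x, y)] = "outer"
        elif not (top or bottom or left or right):
            # Assumption: inner corners have all four neighbours present.
            diagonals = ((x + 1, y + 1), (x - 1, y + 1),
                         (x + 1, y - 1), (x - 1, y - 1))
            if any(d not in coords for d in diagonals):
                corners[(x, y)] = "inner"
    return corners
```

On an L-shaped island of three pixels this yields five outer corners and one inner corner, which matches the six vertices of the outline.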

Finally, we connect the corners of each island to make a series of SVG paths:

- Get starting point, remember starting position. Keep the point in the coord hash, we want to check against it.

- Convert (px, py) back to non-quadrupled coords. Remember how I made four points from each pixel?

· * {px = px*0.5 + (px & 1)*0.5} will transform the coords from quadruple back to actual pixel space.

· * We do this for all coordinates we emit to the SVG path.

- We're on the first point of a shape, so emit "M${px} ${py}" or "m${dx} ${dy}", depending on whether absolute or relative positioning would take up fewer characters.

· * Delta (dx, dy) is just (point - last_position).

- We walk from the starting point towards the next:

· * Each corner has only two possible directions because corners.

· * We always begin with clockwise direction, and invert it if it would make us go backwards.

· * Iter in given direction until you find next corner.

· * Get new point, delete it from the coord hash, then get delta (new_point - last_position).

· * Emit "v${dy}" OR "h${dx}", depending on which direction we moved in.

· * Repeat until we arrive back at the start, at which point you just emit 'Z' to close the shape.

· * If there are still points in the coord hash, then just get the first one and keep going in the __inverse__ direction, else stop.
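The emit side of this can be sketched in Python as below. This is a simplified sketch under assumptions: it skips the walk itself and takes an already ordered loop of corners in the doubled coordinate space, and the function names are hypothetical. Deltas are computed as new point minus last position, which is what relative SVG commands expect.

```python
def to_pixel_space(q):
    """Integer form of q*0.5 + (q & 1)*0.5: map a doubled coord back."""
    return (q + (q & 1)) // 2


def fmt_move(point, last=None):
    """Emit absolute 'M' or relative 'm', whichever takes fewer characters."""
    absolute = f"M{point[0]} {point[1]}"
    if last is None:
        return absolute
    relative = f"m{point[0] - last[0]} {point[1] - last[1]}"
    return relative if len(relative) < len(absolute) else absolute


def emit_path(corners, last=None):
    """Build path data from an ordered loop of doubled-space corners."""
    pts = [(to_pixel_space(x), to_pixel_space(y)) for x, y in corners]
    out = [fmt_move(pts[0], last)]
    cur = pts[0]
    for nxt in pts[1:]:
        dx, dy = nxt[0] - cur[0], nxt[1] - cur[1]
        if dx and dy:
            raise ValueError("consecutive corners must share a row or column")
        if dx:
            out.append(f"h{dx}")
        elif dy:
            out.append(f"v{dy}")
        cur = nxt
    out.append("Z")  # Z draws the last segment back to the start
    return "".join(out)


# Clockwise corners of an L-shaped island, in doubled coordinates.
print(emit_path([(0, 0), (1, 0), (1, 2), (3, 2), (3, 3), (0, 3)]))
```

Leaving the final segment to 'Z' saves one command per shape, since closing the path draws the line back to the starting point anyway.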

I'm simplifying here and there for the sake of """brevity""", but hopefully you get the picture: this fills out the `d` (for path 'data') of a <path/>. Been testing this a bit, likely I've missed certain edge cases but it seems to be working alright for the spritesheets I had, so me is happiee.

Elephant: this only works with bitmaps -- my entire idea was just adding cute little icons and calling it a day, but now... well, now I'm actually invested. I can _probably_ support full color, I'm just not sure what would be a somewhat efficient way to go about it... but it *is* possible.

Anyway, here's first output for retoori maybe uuuh mystery svg tag what could it be?? <svg viewBox="0 0 8 8" height="16" width="16"><path d="M0 2h1v-1h2v1h2v-1h2v1h1v3h-1v1h-1v1h-1v1h-2v-1h-1v-1h-1v-1h-1Z" fill="#B01030" stroke="#101010" stroke-width="0.2" paint-order="stroke"/></svg>3 -

I JUST WANT TO FUCKING EXCLUDE A DIRECTORY....

I run the code cleaner tool, OH CHRIST it's trying to sanitise the automatically generated code, I don't want this.

I try to exclude... takes ages to work out that while specifying the dirs is absolute, you can only exclude relative, but relative to what? I want to block a/b but not a/c/b, but no, you can only block all b, be it a/b, b/b, c/b, a/c/b, etc.

I google for other solutions, nothing but trash, docs are trash, here's some examples but we don't tell you the actual behaviour. All I want is to get everything in /home/hilldog/emails but not /home/hilldog/emails/topsekret, how hard can it be?

I use the source but what's this, BeefJerkyIteratorIteratorBananaSpliterator all over the shop how much convolution and LOC does it take to provide a basic find facility?

Screw this...

$finder->in(explode("\n",trim(exec('find '.escape_args(...$good).' -type d ' . implode(' -o ', prefix('\! -wholename', escape_args(...$bad))) . ' -etc | grep -vETC \'pretty_patterns\''))))
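For comparison, the "everything under X except this one absolute path" behaviour can be sketched in a few lines of Python. This is only an illustration of the idea, not what the finder library does; `find_dirs` is a made-up name.

```python
import os


def find_dirs(roots, excluded):
    """Yield every directory under `roots`, skipping exact paths in `excluded`."""
    blocked = {os.path.abspath(p) for p in excluded}
    for root in roots:
        root = os.path.abspath(root)
        if root in blocked:
            continue
        for current, dirnames, _files in os.walk(root):
            # Editing dirnames in place stops os.walk from descending further.
            dirnames[:] = [d for d in dirnames
                           if os.path.join(current, d) not in blocked]
            yield current


for d in find_dirs(["/home/hilldog/emails"], ["/home/hilldog/emails/topsekret"]):
    print(d)
```

Because the exclusion is matched on the full path, blocking a/b leaves a/c/b alone.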

3 -

The CLI is my $HOME, the place where I feel most comfortable. Yes, I despise bash syntax, all the more if it's used for actual production code (with #LOC>10³), like we do. But the pipes, grep, awk - it's usually a breeze.

BUT yesterday my illusion of the superiority of my CLI just got shattered badly: There was some zip with a core file I wanted to investigate, but gunzip and zcat just were unable to decompress it, while with a simple double click I could open the freaking folder.