Search - "search api"

-

Last year I built the platform 'Tindex'. It was an index of Tinder profiles so people could search by name, gender and age.

We scraped the Tinder profiles through a Tinder API that had been discontinued not long before, but weirdly enough it was still intact, and one of my friends who was also working on it found out how to get API keys (somewhere in the network tab at Tinder Online).

Besides name, gender and age we also got 3 distances, so we could calculate each user's location, then save the location every 15 minutes and put the coordinates on a map so users of Tindex could easily see the current location of a specific Tinder user.

Fun note: we also got the Spotify data of each Tinder user, so we could actually know at which time and location a user listened to a specific Spotify track.

Later on we started building it out: a chatbot which connected to Tinder so Tindex users could automatically send a pick-up line to their new matches (it was kinda buggy, sometimes it sent 3 pick-up lines at once).

Right when we started building a revenue model we stopped the entire project because a friend of ours had found out that we basically violated almost all terms.

Was a great project, learned a lot from it and actually had me thinking twice or more about online dating platforms.

Below is an image of the user overview design I prototyped. The data is mock data.

-

So a friend of mine asked me to check their mail server because some emails got lost or arrived with a funny signature.

Mails were sent from Outlook so ok let's do this.

I go create a dummy account, and send/receive a few emails. All were coming in except one and some had a link appended. The link was randomly generated and was always some kind of referral.

Ok, let's check the mail server.

Nothing.

Let's check the mail header. Nothing.

Face -> wall

Fml I want to cry.

Now I want to search for a pattern and write a script which sends a bunch of mails on my laptop.

Fuck this : no WLAN and no LAN Ports available. Fine let's hotspot the phone and send a few fucking mails.

Guess what? Fucking cockmagic, no funny mails appear!

At that moment I went out and was like chainsmoking 5 cigarettes.

BAM!

It hit me! A feeling like a unicorn vomiting rainbows all over my face.

I go check their firewall. Shit redirected all email ports from within the network to another server.

Yay nobody got credentials because nobody knew it existed. Damn boy.

Hook on to the host machine, power down the VM, start it and hack yourself a root account before shit boots. Luckily I just forgot the credentials to a test VM some time ago so I know that shit. Lesson learned: fucking learn from your mistakes, might be useful sometimes!

Ok fucker what in the world are you doing.

Do some terminal magic and see that it listens on the email ports.

Holy cockriders of the galaxy.

Turns out their former IT guy made a script which caught all mails from the server, injected all kinds of bullshit and then sent them to the real web server. And the reason why some mails weren't received was that said guy was too dumb to handle Unicode and some mails just broke his script.

That fucker even implemented an API to pull all those bullshit refs.

I know your name "Matthias" and I know where you live and what you've done... And to fuck you back for that misery I took your accounts and since you used the same fucking password for everything I took your mail, Facebook and steam account too.

Git gut shithead! You better get a lawyer.

-

So, you start with a PHP website.

Nah, no hating on PHP here, this is not about language design or performance or strict type systems...

This is about architecture.

No backend web framework, just "plain PHP".

Well, I can deal with that. As long as there is some consistency, I wouldn't even mind maintaining a PHP4 site with Y2K-era HTML4 and zero Javascript.

That sounds like fucking paradise to me right now. 😍

But no, of course it was updated to PHP7, using Laravel, and a main.js file was created. GREAT.... right? Yes. Sure. Totally cool. Gotta stay with the times. But there are still remnants of that ancient framework-less website underneath. So we enter an era of Laravel + Blade templates, with a little sprinkle of raw imported PHP files here and there.

Fine. Ancient PHP + Laravel + Blade + main.js + bootstrap.css. Whatever. I can still handle this. 🤨

But then the Frontend hipsters swoosh back their shawls, sip from their caramel lattes, and start whining: "We want React! We want SPA! No more BootstrapCSS, we're going to launch our own suite of SASS styles! IT'S BETTER".

OK, so we create REST endpoints, and the little monkeys who spend their time animating spinners to cover up all the XHR fuckups are satisfied. But they only care about the top most visited pages, so we ALSO need to keep our Blade templated HTML. We now have about 200 SPA/REST routes, and about 350 classic PHP/Blade pages.

So we enter the Era of Ancient PHP + Laravel + Blade + main.js + bootstrap.css + hipster.sass + REST + React + SPA 😑

Now the Backend grizzlies wake from their hibernation, growling: We have nearly 25 million lines of PHP! Monoliths are evil! Did you know Netflix uses microservices? If we break everything into tiny chunks of code, all our problems will be solved! Let's use DDD! Let's use messaging pipelines! Let's use caching! Let's use big data! Let's use search indexes!... Good right? Sure. Whatever.

OK, so we enter the Era of Ancient PHP + Laravel + Blade + main.js + bootstrap.css + hipster.sass + REST + React + SPA + Redis + RabbitMQ + Cassandra + Elastic 😫

Our monolith starts pooping out little microservices. Some polished pieces turn into pretty little gems... but the obese monolith keeps swelling as well, while simultaneously pooping out more and more little ugly turds at an ever faster rate.

Management rushes in: "Forget about frontend and microservices! We need a desktop app! We need mobile apps! I read in a magazine that the era of the web is over!"

OK, so we enter the Era of Ancient PHP + Laravel + Blade + main.js + bootstrap.css + hipster.sass + REST + GraphQL + React + SPA + Redis + RabbitMQ + Google pub/sub + Neo4J + Cassandra + Elastic + UWP + Android + iOS 😠

"Do you have a monolith or microservices" -- "Yes"

"Which database do you use" -- "Yes"

"Which API standard do you follow" -- "Yes"

"Do you use a CI/building service?" -- "Yes, 3"

"Which Laravel version do you use?" -- "Nine" -- "What, Laravel 9, that isn't even out yet?" -- "No, nine different versions, depends on the services"

"Besides PHP, do you use any Python, Ruby, NodeJS, C#, Golang, or Java?" -- "Not OR, AND. So that's a yes. And bash. Oh and Perl. Oh... and a bit of LUA I think?"

2% of pages are still served by raw, framework-less PHP.

-

I’m surrounded by idiots.

I’m continually reminded of that fact, but today I found something that really drives that point home.

Gather ‘round, everybody, it’s story time!

While working on a slow query ticket, I perused the code, finding several causes, and decided to run git blame on the files to see what dummy authored the mental diarrhea currently befouling my screen. As it turns out, the entire feature was written by mister legendary Apple golden boy “Finder’s Keeper” dev himself.

To give you the full scope of this mess, let me start at the frontend and work my way backward.

He wrote a javascript method that tracks whatever row was/is under the mouse in a table and dynamically removes/adds a “.row_selected” class on it. At least the js uses events (jQuery…) instead of a `setTimeout()` so it could be worse. But still, has he never heard of :hover? The function literally does nothing else, and the `selectedRow` var he stores the element reference in isn’t used elsewhere.

This function allows the user to better see the rows in the API Calls table, for which there is also a search feature, the very thing I’m tasked with fixing.

It’s worth noting that above the search feature are two inputs for a date range, with some helpful links like “last week” and “last month” … and “All”. It’s also worth noting that this table is for displaying search results of all the API requests and their responses for a given merchant… this table is enormous.

The search field for this table queries the backend on every character the user types. There’s no debouncing, no submit event, etc., so it triggers on every keystroke. The actual request runs through a layer of abstraction to parse out and log the user-entered date range, figure out where the request came from, and to map out some column names or add additional ones. It also does some hard-to-follow (and amazingly not injectable) ORM condition building. It’s a mess of functional ugly.
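A rough illustration of what debouncing would buy here, in Python rather than the page's JavaScript. The 300 ms quiet period, the function name and the sample keystrokes are all assumptions made for this sketch, not anything from the actual codebase. It just replays timestamped keystrokes and reports which ones would still reach the backend:

```python
def debounced_queries(keystrokes, quiet_ms=300):
    """Return the search strings that would actually be sent to the backend.

    keystrokes: list of (timestamp_ms, text_in_box) tuples in time order.
    A query fires only if no further keystroke arrives within quiet_ms.
    """
    fired = []
    for i, (ts, text) in enumerate(keystrokes):
        is_last = i == len(keystrokes) - 1
        # Fire when the user paused long enough (or stopped typing entirely).
        if is_last or keystrokes[i + 1][0] - ts >= quiet_ms:
            fired.append(text)
    return fired


# Hypothetical typing session: five keystrokes with one pause in the middle.
typing = [(0, "c"), (80, "cr"), (150, "cre"), (900, "crea"), (960, "creat")]
print(debounced_queries(typing))
```

With these made-up timings, five keystrokes turn into two backend queries instead of five.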

The important columns in the table this query ultimately searches are not indexed, despite it only looking for “create_order” records — the largest of twenty-some types in the table. It also uses partial text matching (again: on. every. single. keystroke.) across two varchar(255)s that only ever hold <16 chars — and of which users only ever care about one at a time. After all of this, it filters the results based on some uncommented regexes, and worst of all: instead of fetching only one page’s worth of results like you’d expect, it fetches all of them at once and then discards what isn’t included by the paginator. So not only is this a guaranteed full table scan with partial text matching for every query (over millions to hundreds of millions of records), it’s that same full table scan for every single keystroke while the user types, and all but 25 records (user-selectable) get discarded — and then requeried when the user looks at the next page of results.
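A minimal sketch of a saner version, assuming a SQLite table with made-up names (`api_calls`, `call_type`, `order_ref`). It indexes the columns being searched, matches on a prefix instead of an arbitrary substring (a swapped-in technique, since a leading wildcard generally cannot use a B-tree index), and lets the database return only one page:

```python
import sqlite3

# Hypothetical schema and data; the real table and column names are unknown.
conn = sqlite3.connect(":memory:")
conn.execute(
    "CREATE TABLE api_calls (id INTEGER PRIMARY KEY, call_type TEXT, order_ref TEXT)"
)
conn.executemany(
    "INSERT INTO api_calls (call_type, order_ref) VALUES (?, ?)",
    [("create_order", f"ORD{n:05d}") for n in range(1000)]
    + [("refund", f"REF{n:05d}") for n in range(200)],
)
# Composite index so the type filter and the prefix range can both use it.
conn.execute("CREATE INDEX idx_calls_type_ref ON api_calls (call_type, order_ref)")


def search_page(conn, prefix, page, page_size=25):
    """Fetch one page of create_order refs starting with prefix (page is 0-based)."""
    upper = prefix + "\uffff"  # upper bound of the prefix range for plain-text refs
    rows = conn.execute(
        "SELECT order_ref FROM api_calls "
        "WHERE call_type = 'create_order' AND order_ref >= ? AND order_ref < ? "
        "ORDER BY order_ref LIMIT ? OFFSET ?",
        (prefix, upper, page_size, page * page_size),
    )
    return [ref for (ref,) in rows]


first_page = search_page(conn, "ORD000", page=0)
print(len(first_page), first_page[0])
```

`LIMIT`/`OFFSET` is the simplest form of paging; for very deep pages, keyset pagination (filtering on the last seen `order_ref`) tends to scale better.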

What the bloody fucking hell? I’d swear this idiot is an intern, but his code does (amazingly) actually work.

No wonder this search field nearly crashed one of the servers when someone actually tried using it.

Asdfajsdfk.

-

I made a web app that utilizes the Geolocation API and is used by search and rescue services in a couple of countries to locate missing and/or injured people “in the wild”. Over a few years, hundreds of people have been found thanks to this tool, and some of them would probably not have survived without it! I made the first prototype myself, then two other devs joined in.

Open source, and the SaaS is offered free of charge to the rescue services. :)

-

I'm trying to sign up for insurance benefits at work.

Step 1: Trying to find the website link -- it's non-existent. I don't know where I found it, but I saved it in keepassxc so I wouldn't have to search again. Time wasted: 30 minutes.

Step 2: Trying to log in. Ostensibly, this uses my work account. It does not. Time wasted: 10 minutes.

Step 3: Creating an account. Username and password requirements are stupid, and the page doesn't show all of them. The username must be /[A-Za-z0-9]{8,60}/. The maximum password length is VARCHAR(20), and must include upper/lower case, number, special symbol, etc. and cannot include "password", repeated characters, your username, etc. There is also a (required!) hint with /[A-Za-z0-9 ]{8,60}/ validation. Want to type a sentence? Better not use any punctuation!

I find it hilarious that both my username and password hint can be three times longer than my actual password -- and can contain the password. Such brilliant security.

My typical username is less than 8 characters. All of my typical password formats are >25 characters. Trying to figure out memorable credentials and figuring out the hidden complexity/validation requirements for all of these and the hint... Time wasted: 30 minutes.
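For reference, the rules quoted in Step 3 can be written down in a few lines. This is only a sketch of the validation as described above, assuming the patterns are meant to match the whole field; the function name and messages are made up, and the site's hidden complexity rules are left out because they were never shown:

```python
import re

# Patterns as quoted in the rant; whole-field matching is an assumption.
USERNAME_RE = re.compile(r"[A-Za-z0-9]{8,60}")
HINT_RE = re.compile(r"[A-Za-z0-9 ]{8,60}")
MAX_PASSWORD_LEN = 20


def check_signup(username, password, hint):
    """Return a list of the fields that break the stated rules."""
    problems = []
    if not USERNAME_RE.fullmatch(username):
        problems.append("username")
    if len(password) > MAX_PASSWORD_LEN:
        problems.append("password longer than 20 characters")
    if not HINT_RE.fullmatch(hint):
        problems.append("hint")
    return problems


# A 26-character password and a hint with punctuation both get rejected.
print(check_signup("root1234", "x" * 26, "Better not use punctuation!"))
```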

Step 4: Post-login. The website, post-login, does not work in Firefox. I assumed it was one of my many ad/tracker/header/etc. blockers, and systematically disabled every one of them. After enabling ad and tracker networks, more and more of the site loaded, but it always failed. After disabling bloody everything, the site still refused to work. Why? It was fetching deeply-nested markup, plus styling and JavaScript, encoded in XML, via API. And that XML wasn't valid XML (missing root element). The failure wasn't due to blocking a vitally-important ad or tracker (as apparently they're all vital and the site chain-loads them off one another before loading content), it was due to shoddy development and lack of testing. Matches the rest of the site perfectly. Anyway, I eventually managed to get the site to load in Safari, of all browsers, on a different computer. Time wasted: 40 minutes.
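The "missing root element" problem is easy to reproduce with a strict parser. A small sketch using Python's standard `xml.etree.ElementTree`; the payloads are invented stand-ins, not the site's actual response:

```python
import xml.etree.ElementTree as ET


def is_well_formed(payload: str) -> bool:
    """True if payload parses as a single-rooted XML document."""
    try:
        ET.fromstring(payload)
        return True
    except ET.ParseError:
        return False


# Two top-level elements and no single root: a strict parser rejects this.
broken = "<div>markup</div><script>code()</script>"
# The same content wrapped in one root element parses fine.
fixed = "<page><div>markup</div><script>code()</script></page>"

print(is_well_formed(broken), is_well_formed(fixed))
```

A browser that parses the response strictly would trip over the first payload in much the same way, which would be consistent with the site failing in one browser and limping along in another.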

Step 5: Contact info. After getting the site to work, I clicked the [Enroll] button. "Please allow about 10 minutes to enroll," it says. I'm up to an hour and 50 minutes by now. The first thing it asks for is contact info, such as email, phone, address, etc. It gives me a warning next to phone, saying I'm not set up for notifications yet. I think that's great. I select "change" next to the email, and try to give it my work email. There are two "preferred" radio buttons, one next to "Work email," one next to "Personal email" -- but there is only one textbox. Fine, I select the "Work" preferred button, sign up for a faux-personal tutanota email for work, and type it in. The site complains that I selected "Work" but only entered a personal email. Seriously serious. Out of curiosity, I select the "change" next to the phone number, and see that it gives me four options (home, work, cell, personal?), but only one set of inputs -- next to personal. Yep. That's amazing. Time spent: 10 minutes.

Step 6: Ranting. I started going through the benefits, realized it would take an hour+ to add dependents, research the various options, pick which benefits I want, etc. I'm already up to two hours by now, so instead I decided to stop and rant about how ridiculous this entire thing is. While typing this up, the site (unsurprisingly) automatically logged me out. Fine, I'll just log in again... and get an error saying my credentials are invalid. Okay... I very carefully type them in again. error: invalid credentials. sajfkasdjf.

Step 7 is going to be: Try to figure out how to log in again. Ugh.

"Please allow about 10 minutes" it said. Where's that facepalm emoji?

But like, seriously. How does someone even build a website THIS bad?

-

Google sucks!

No, not as e-mail or for privacy reasons. Sure, that too, but it comes with "free" stuff.

It sucks because it's breaking every possible record in the worst, shittiest, most insanely stupid APIs and integrations out there on the entire fucking planet!

It is comically stupid!

Aside from their LOVE of hard-deprecating APIs every few months, requiring constant, time consuming maintenance of every tool that integrates deeply with Google services, some of their APIs, for expensive stuff, look like they've been written by Bobby McFartface from 7th grade.

Take a look at DoubleClick Search (their ad performance reporting tool, that sure does sound like one). To upload custom, additional data, you must pass in a ton of parameters, and they REQUIRE some of them to have a specific, hardcoded value. What's the point in passing that parameter then, you dickheads?!

But fine, so you uploaded some stuff using the API. Now you want to delete everything and try again after you fixed a bug - well you fucking CAN'T! You can't delete stuff, you can only mark them as "deleted" using an update call.

Bulk operations? Fuck no!

Can I just add on top? Well of course not! That will raise a ton of exceptions. Same message should be transmitted using the PUT, not POST request, in order to edit.

Can I send everything to PUT? Of course not! You can't edit something that's not there, dummy!

Can I see what's there so that I can update it, and add what's missing?

Well of course not! Why on Earth would you need to see what information is in there after you uploaded it? Who needs that anyway?

Simply send, pray, and hope that everything will be fine (it will not).

Like holy fucking crap, it can't get any more stupid!

Google is a huge pile of idiots who feed on only a single cow - the search engine.

It's times like these when I think that Google right now is the worst thing that exists for everyone in tech. It's dragging everyone down with their monopolies everywhere and complete idiocy in managing them.

-

I had to open the desktop app to write this because I could never write a rant this long on the app.

This will be a well-informed rebuttal to the "arrays start at 1 in Lua" complaint. If you have ever said or thought that, I guarantee you will learn a lot from this rant and probably enjoy it quite a bit as well.

Just a tiny bit of background information on me: I have a very intimate understanding of Lua and its C API. I have used this language for years and love it dearly.

[START RANT]

"arrays start at 1 in Lua" is factually incorrect because Lua does not have arrays. From their documentation, section 11.1 ("Arrays"), "We implement arrays in Lua simply by indexing tables with integers."

From chapter 2 of the Lua docs, we know there are only 8 types of data in Lua: nil, boolean, number, string, userdata, function, thread, and table

The only unfamiliar thing here might be userdata. "A userdatum offers a raw memory area with no predefined operations in Lua" (section 26.1). Essentially, it's for the API to interact with Lua scripts. The point is, this isn't a fancy term for array.

The misinformation comes from the table type. Let's first explore, at a low level, what an array is. An array, in programming, is a collection of data items all in a line in memory (The OS may not actually put them in a line, but they act as if they are). In most syntaxes, you access an array element similar to:

array[index]

Let's look at C, so we have some solid reference. "array" would be the name of the array, but what it really does is keep track of the starting location in memory of the array. Memory in computers acts like a number. In a very basic sense, the first sector of your RAM is memory location (referred to as an address) 0. "array" would be, for example, address 543745. This is where your data starts. Arrays can only be made up of one type, so that each element in that array is EXACTLY the same size. So, this is how indexing an array works. If you know where your array starts, and you know how large each element is, you can find the element at index 6 by starting at the start of the array and adding 6 times the size of the data in that array.

Tables are incredibly different. The elements of a table are NOT in a line in memory; they're all over the place depending on when you created them (and a lot of other things). Therefore, an array-style index is useless, because you cannot apply the above formula. In the case of a table, you need to perform a lookup: search through all of the elements in the table to find the right one. In Lua, you can do:

a = {1, 5, 9};

a["hello_world"] = "whatever";

a is a table holding 4 entries (the 4th is "hello_world" with the value "whatever"), but a[4] is nil because even though there are 4 items in the table, it looks for something "named" 4, not the 4th element of the table.

This is the difference between indexing and lookups. But you may say,

"Algo! If I do this:

a = {"first", "second", "third"};

print(a[1]);

...then "first" appears in my console!"

Yes, that's correct, in terms of computer science. Lua, because it is a nice language, makes keys in tables optional by automatically giving them an integer value key. This starts at 1. Why? Let's look at that formula for arrays again:

Given array "arr", size of data type "sz", and index "i", find the desired element ("el"):

el = arr + (sz * i)

This NEEDS to start at 0 and not 1 because otherwise, "sz" would always be added to the start address of the array and the first element would ALWAYS be skipped. But in tables, this is not the case, because tables do not have a defined data type size, and this formula is never used. This is why actual arrays are incredibly performant no matter the size, and the larger a table gets, the slower it is.
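The address formula can be tried out directly. A small sketch in Python using `ctypes` to get at a real contiguous C array; the variable names mirror the formula above, and the sample values are arbitrary:

```python
import ctypes

# A real C array of five 32-bit ints, laid out contiguously in memory.
values = (ctypes.c_int32 * 5)(10, 20, 30, 40, 50)

arr = ctypes.addressof(values)        # start address of the array
sz = ctypes.sizeof(ctypes.c_int32)    # size of one element in bytes


def element_at(i):
    """Read element i by computing its address: el = arr + (sz * i)."""
    el = arr + (sz * i)
    return ctypes.c_int32.from_address(el).value


# Index 0 adds nothing to the start address, so it lands on the first element.
print(element_at(0), element_at(3))
```

Starting at index 1 with the same formula would skip the first element, which is the point being made above.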

That felt good to get off my chest. Yes, Lua could start the auto-key at 0, but that might confuse people into thinking tables are arrays... well, I guess there's no avoiding that either way.

-

When you start a new job as a Senior Developer, and start asking questions about the code, and you have these collections of conversations with other front-end people:

Exhibit 1:

Me: Ahh so I see the filtering and pagination is all done with Javascript in the front end...

Random dev: No, it's done with Angular.

Exhibit 2:

Me: I think we should add frontend pagination to this page. There will be too many elements on it if you're a customer with 2000 servers.

Random dev: Don't bother, there's no pagination in the API call... So that will not gain any performance.

Me: But it wouldn't take long to implement and it would improve the user experience, why would you want to show ALL the elements, when you have an option not to... Also, it WILL be a major performance hit, especially on mobile.

Random dev: People will use search anyway.

😥🔪
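For scale, client-side pagination over an already-fetched list is a handful of lines. A sketch in Python rather than the project's Angular code, with a made-up page size of 50 and invented server names:

```python
from math import ceil


def paginate(items, page, page_size=50):
    """Return (items on the requested 1-based page, total number of pages)."""
    total_pages = max(1, ceil(len(items) / page_size))
    page = min(max(page, 1), total_pages)  # clamp out-of-range page numbers
    start = (page - 1) * page_size
    return items[start:start + page_size], total_pages


# Hypothetical customer with 2000 servers, all returned by one API call.
servers = [f"server-{n:04d}" for n in range(1, 2001)]
visible, pages = paginate(servers, page=1)
print(len(visible), pages)
```

The API call still returns everything, but the page only has to render 50 elements at a time instead of 2000.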

Also, there are no coding standards, every file looks different, and my opinion is being disregarded in everything, and I thought my last job was bad...

Seriously how are some people hired as front-enders?

Since I just took this job, I feel obligated to stay a couple of months... But hey, don't cry for me, I might have more rants for you. 😂

Sorry for the long rant, here's cake: 🍰

-

Me: *Demoed my search API which supports multiple database implementations at the backend*
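For context, a search API that is independent of its database might be shaped roughly like this. It is a sketch under assumptions, not the API that was demoed: the class names, the movie titles and the two backends (a plain list and an in-memory SQLite table) are all invented for illustration:

```python
import sqlite3
from typing import Protocol


class SearchBackend(Protocol):
    """Anything with a search(query) method can serve as a backend."""

    def search(self, query: str) -> list[str]: ...


class ListBackend:
    """Backend that searches a plain in-memory list."""

    def __init__(self, titles):
        self._titles = list(titles)

    def search(self, query):
        needle = query.lower()
        return sorted(t for t in self._titles if needle in t.lower())


class SqliteBackend:
    """Backend that searches an in-memory SQLite table."""

    def __init__(self, titles):
        self._conn = sqlite3.connect(":memory:")
        self._conn.execute("CREATE TABLE movies (title TEXT)")
        self._conn.executemany("INSERT INTO movies VALUES (?)", [(t,) for t in titles])

    def search(self, query):
        rows = self._conn.execute(
            "SELECT title FROM movies WHERE title LIKE ? ORDER BY title",
            (f"%{query}%",),
        )
        return [title for (title,) in rows]


class SearchAPI:
    """Callers only ever see search(); the backend is chosen at construction."""

    def __init__(self, backend: SearchBackend):
        self._backend = backend

    def search(self, query):
        return self._backend.search(query)


titles = ["Alien", "Aliens", "Blade Runner"]
for backend in (ListBackend(titles), SqliteBackend(titles)):
    print(SearchAPI(backend).search("alien"))
```

The caller gets the same results either way and never names a database, which is the whole idea.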

My Manager: Great!! Is the API independent of DB? Can you plug this API to any DB?

Me: Yes

My Manager: How can the user specify the DB at runtime?

Me: Why would the user be interested in the DB used at the backend? They will just query the API for data.

My Manager: Let's just assume he wants to select a database at runtime.

Me: While searching for a movie on Netflix, do you specify which DB you wanna stream the movie from?

My Manager: *Confused and pissed*

-

Not a rant about anything in particular. Just a summary of some feelings stored in the hateful part of my heart.

Developing for Android: Add this third-party library to your Gradle build. Use (this) built-in Android class to make the thing work.

*Clicks link

Deprecated since API version SUCKMYDICK-7. Use (this) instead

*Clicks link

Deprecated since API version LICKMYBALLS-32. Use...

Developing for Windows: Please use (this) API call. It was literally already available before Bill Gates was born. Carbon dating has placed this item to older than the universe itself and it is likely the entry point for the big bang. It is also still the best way to accomplish (task).

Developing for Linux: "Hmm, I wonder how to use this"

> > > Some shitty mailing list in small blue monospace font tells you to reference a man page that is three versions behind but the only version available.

What? Those three sentences didn't explain it enough? Well, maybe you aren't cut out for this type of thing.

JavaScript: you know how it is.

SQL: You expect a decent-quality answer from stack overflow but you always get an outdated and hacky response and it's using syntax from Microsoft SQL. You need MySQL.

C#: A surprising number of Microsoft forum results ranking high on Google. You click on one in hopes that it will be of any sort of quality. You quickly close the tab and wonder why you ever even had hope.

Literally any REST API: Is it "query" or "q"? "UserID" or "user_id"? Oh, fuck, where's the docs again?

You thought you escaped JavaScript, but it was a trick!: Some bullshit library you downloaded to make your other library work redefined one of the global variables in the project you inherited. Now you get 347 "<x> is not a function" errors in your console. Good luck, asshole.

FontAwesome/ Material fonts/ Any icon font pack: You search "Close" for a close button icon. No results. You search "Simplified railroad crossing sign without the railroad". You get a close icon.

I think that's all of my pent-up rage. Each of them was too small for an individual rant so I had to do this essay.

-

FUCK THE RECRUITERS WHO ASK US TO MAKE AN ENTIRE PROJECT AS A CODE TEST.

Oh you need to scrape this website and then store the data in some DB. Apply sentiment analysis on the data set. On the UI, the user should be able to search the fields that were scraped from the website. Upon clicking it should consume a REST API which you have to create as well. Oh and also deploy it somewhere... Oh I almost forgot, make the UI look good. If you could submit it in one week, we will move towards further rounds if we find you fit enough.

YOU KNOW WHAT, FUCK YOU!

I can apply to 10 other companies in one week and get hired with half the effort of making this whole project for you, which you are going to use on your website, YOU SADIST MOTHERFUCK

I CURSE YOUR COMPANY WITH THE ETERNITY OF JS CALLBACK HELL 😡😤😣

-

So… I released v2.0.0 of devRant UWP a few weeks ago.

Then I got a lot of reports of problems on Windows 10 Mobile and older (than 1809) versions of Windows 10 on Desktop.

I decided to resubmit v2.0.0-beta16 to the store, and try to find the issue in the update… I didn't find it.

The code seems the same as the working version (at least the part I try to test is 100% equal).

So it seems I fucked up the vs project.

This means that to find the issue I can either spend weeks searching for it over and over inside the latest project (using shitty emulators of older Windows 10 builds to debug it), or I could just restore it to the old v2.0.0-beta16 (released in August) and implement again every single new feature and fix (something like 5 new features, dozens of improvements, changes and bug fixes).

In any case, this will require a lot of time (which I don't have at this moment).

I'm really sorry for this inconvenience, I know some of you use my client daily (~3.000 users I guess), I'm really glad someone likes it, and thanks a lot for the awesome reviews and feedback, but stable v2 (v2.1.0 at this point) will be available not earlier than in February.

Probably some of you have already downloaded v2.0.0 while it was available in the store, and maybe it works on your device (please let me know in the comments below if you did, how it is going, and also if you like the new features and improvements).

After this epic fail, and more than 1 year (way too much) of v2 public beta, I want to throw the current project in the trash, and start it from scratch.

Which means I will start to work on v3 as soon as you see v2.1.0 in the store, making it faster, lighter and with better support for the latest Windows 10 (Fluent Design and not) features, dropping the support for the very old UWP API.

Thanks for your attention.

Have a good day (or night)!

-

I've recently received another invitation to Google's Foobar challenges.

A while ago someone here on devRant (who I believe works at Google, and whose support I deeply appreciate) sent me a couple of links to it too. Unfortunately back then I didn't take the time to learn the programming languages (Python or Java) that Google requires for these challenges. This time I'm putting everything on Python, as it's the easiest language to learn when coming from Bash.

But at the end of the day.. I am a sysadmin, not a developer. I don't know a single thing about either of these languages. Yet I can't take these challenges as the sysadmin I am. Instead, I have to learn a new language which chances are I'll never need again outside of some HR dickhead's interview with lateral thinking questions and whiteboard programming, probably prohibited from using Google search like every sane programmer and/or sysadmin would for practical challenges that actually occur in real life.

I don't want to do that. Google is a once in a lifetime opportunity, I get that. Many people would probably even steal that foobar link from me if they could. But I don't think that for me it's the right thing to do. Google has made a serious difference by actually challenging developers with practical scenarios, and that's vastly superior to whatever a HR person at any other company could cobble together for an interview. But there's one thing that they don't seem to realize. A company like Google consists of more than just developers. Not only that, it probably consists - even within their developer circles - of more than just Python and Java developers. If any company would know about languages that are more optimized such as C, it would be Google that has to leverage this performance in order to be able to deliver their services.

I'll be frank here. Foobar has its own issues that I don't like. But if Google were a nice company, I'd go for it all the way nonetheless - after all, they are arguably the single biggest tech company in the world, and the tech industry itself is one of the biggest ones in the world nowadays. It's safe to say that there's likely no opportunity like working at Google. But I don't think it's the right thing. Even if I did know Python or Java... Even if I did. I don't like Google's business decisions.

I've recently flashed my OnePlus 6T with LineageOS. It's now completely Google-free, except for a stock Yalp account (that I'm too afraid to replace with my actual Google account because oh dear, third-party app stores, oh dear that could damage our business and has to be made highly illegal!1!). My contacts on that phone are all gone. They're all stored on a Google server somewhere (except for some like @linuxxx' that I consciously stored on device storage and thus lost a while back), waiting for me to log back in and sync them back. I've never asked for this. If Google had explicitly told me that they'd sync all my contacts to my Google account and offered feasible alternatives, I'd probably have given more priority to building a CalDAV and CardDAV server of my own. Because I do have the skills and desire to maintain that myself. I don't want Google to do this for me.

Move fast and break things. I've even got a special Termux script on my home screen, aptly named Unfuck-Google-Play. Every other day I have to use it. Google Search. When I open it on my Nexus 6P, which was Google's foray into hardware and in which they failed quite spectacularly - I've even almost bent and killed it tonight, after cursing at that piece of shit every goddamn day - the Google app opens, I type some text into it.. and then it just jumps back to the beginning of whatever I was typing. A preloader of sorts. The app is a fucking web page parser, or heck probably even just an API parser. How does that in any way justify such shitty preloaders? How does that in any way justify such crappy performance on anything but the most recent flagships? I could go on about this all day... I used to run modern Linux on a 15 year old laptop, smoothly. So don't you Google tell me that a - probably trillion dollar - company can't do that shit right. When there's (commercialized) community projects like DuckDuckGo that do things a million times better than you do - yet they can't compete with you due to your shit being preloaded on every phone and tablet and impossible to remove without rooting - that you Google can't do that and a lot more. You've got fucking Google Assistant for fucks sake! Yet you can't make a decent search app - the goddamn thing that your company started with in the first place!?

I'm sorry. I'd love to work at Google and taste the diversity that this company has to offer. But there's *a lot* wrong with it at the business end too. That is something that - in that state - I don't think I want to contribute to, despite it being pretty much a lottery ticket that I've been fortunate enough to draw twice.

Maybe I should just start my own company.

-

I give MS a lot of cr@p for terrible API documentation, but even Google's API docs are pretty terrible to read through.

Seriously, guys... Your docs shouldn't read like an endless page of search results.

-

While trying to integrate a third-party service:

Their Android SDK accepts almost anything as a UID, even floats and doubles. Which is odd, who uses those as UIDs? I pass an Integer instead. No errors. Seems like it's working. User shows up on their dashboard.

Next let's move on to using their data import API. Plug in everything just like I did on mobile. Whoa, got an error. "UIDs must be a string". What. Uh, but the SDK accepts everything with no error. Ok fine. Change both the SDK and API calls to send the UID as a string. No errors returned after changing the UIDs.

Check dashboard for user via UID. Uh, properties haven't been updating. Check search properties. Find out that UIDs can only be looked up as Integers. What? Why do you ask me to send it as a string via the API then? Contact support. Find out it created two distinct records with the UID, one as a string and the other as an Integer.
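One way to avoid ending up with two records like this is to normalize the UID in a single place before it reaches either the SDK or the import API. A minimal sketch, assuming the string form is the one you settle on; `normalize_uid` is a hypothetical helper, not part of the vendor's SDK:

```python
def normalize_uid(uid) -> str:
    """Return one canonical string form for a user ID.

    Hypothetical helper: route every call into the SDK or the import API
    through it, so 42, 42.0, "42" and " 42 " cannot become distinct records.
    """
    if uid is None or isinstance(uid, bool):
        raise TypeError(f"unsupported UID type: {type(uid).__name__}")
    if isinstance(uid, float):
        # Accept 42.0 but refuse 42.5, which has no sensible ID form.
        if not uid.is_integer():
            raise ValueError(f"non-integral UID: {uid!r}")
        uid = int(uid)
    text = str(uid).strip()
    if not text:
        raise ValueError("empty UID")
    return text
```

Failing loudly on odd input is a deliberate choice here: a silent coercion is what produced the duplicate records in the first place.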

GFG.

-

I'M BACK TO MY WEBDEV ADVENTURES GUYS! IT TOOK ME LIKE 4 MONTHS TO STOP BEING SO FUCKING DEPRESSED SO I CAN ACTUALLY STAND TO WORK ON IT AGAIN

I learned that the linear gradient looks cool as FUCK. Honestly not too fond of the colors I have right now, but I just wanted to have something there cause I can change it later. The page has evolved a bunch from my original concept.

My original concept was the bar in the middle just being a URL bar and having links on the sides. If I had kept that, it would have taken me a few hours to get done. But as time went on when I was working on it, my idea kept changing. Added the weather (had a forecast for a while but the code was gross and I never looked at the next days anyways, so I got rid of it and kept the current data). I wanted to attempt an RSS reader, but yesterday I was about to start writing the JavaScript to parse the feeds, then decided "nah", ended up making the space into a todo list.

The URL bar changed into a full command bar (writing the functions for the commands now, also used to config smaller things, such as the user@hostname part, maybe colors, weather data for city and API key, etc.). It can also open URLs and subreddits (that part works flawlessly). The bar uses a regex to detect if it's a legit URL (even added shit so I don't need http:// or https://), and if it's not, it just searches using duckduckgo (maybe I'll add a config option there too for search engines).
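The URL-or-search decision can be sketched roughly like this. It's written in Python rather than the page's JavaScript, and the regex is a deliberately loose guess, not the one from the actual page; `resolve` is a made-up name:

```python
import re
from urllib.parse import quote_plus

# Loose on purpose: optional scheme, a dotted hostname, optional port and path.
URL_RE = re.compile(
    r"^(?:https?://)?"                 # optional scheme
    r"(?:[a-z0-9-]+\.)+[a-z]{2,}"      # host such as example.com
    r"(?::\d+)?"                       # optional port
    r"(?:/\S*)?$",                     # optional path, no spaces allowed
    re.IGNORECASE,
)


def resolve(text: str) -> str:
    """Return the URL to open for whatever was typed into the bar."""
    text = text.strip()
    if URL_RE.match(text):
        # Add a scheme so "example.com" works without typing https://.
        if not re.match(r"^https?://", text, re.IGNORECASE):
            text = "https://" + text
        return text
    # Anything else is treated as a search query.
    return "https://duckduckgo.com/?q=" + quote_plus(text)
```

For example, `resolve("example.com")` gives `https://example.com`, while `resolve("how to parse rss")` falls through to the search URL.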

At this very moment it doesn't even take a second to fully load. It fetches weather data from openweathermap, parses it, and displays it, then displays the "user" name grabbing a localstorage value.

I'm considering adding a sidebar with links (configurable obviously, I want everything to be dynamic, so someone else could use my page if they wanted), but I'm not too sure about it.

It's not on git yet because I was waiting until I get some shit finished today before I commit. From the picture, I want to know if anyone has any suggestions for it. Also note that I am NOT a designer. I can't design for shit.

-

Did a bunch more cowboy coding today as I call it (coding in vi on production). Gather 'round kiddies, uncle Logan's got a story fer ya…

First things first, disclaimer: I'm no sysadmin. I respect sysadmins and the work they do, but I'm the first to admit my strengths definitely lie more in writing programs rather than running servers.

Anyhow, I recently inherited someone else's codebase (the story of my professional career, but I digress) and let me tell you this thing has amateur hour written all over it. It's written in PHP and JavaScript by a self-taught programmer who apparently discovered procedural programming and decided there was nothing left to learn and stopped there (no disrespect to self-taught programmers).

I could rant for days about the various problems this codebase has, but today I have a very specific story to tell. A story about errors and logs.

And it all started when I noticed the disk space on our server was gradually decreasing.

So today I logged onto our API server (Ubuntu running Apache/PHP) and did a df -h to check the disk space, and was surprised to see that it had noticeably decreased since the last time I'd checked when everything was running smoothly. But seeing as this server does not store any persistent customer data (we have a separate db server) and purely hosts the stateless API, it should NOT be consuming disk space over time at all.

The only thing I could think of was the logs, but the logs were very quiet, just the odd benign message that was fully expected. Just to be sure I did an ls -Sh to check the size of the logs, and while some of them were a little big, nothing over a few megs. Nothing to account for gigabytes of disk space gradually disappearing.

What could it be? I wondered.

cd ../..

du . | sort --sort=numeric

What's this? 2671132 K in some log folder buried in the api source code? I cd into it and it turns out there are separate PHP log files in there, split up by customer, so that each customer of ours (we have 120) has their own respective error log! (Why??)
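For anyone without a shell handy, the same hunt for the biggest directories can be sketched in Python. Note that this sums apparent file sizes rather than disk blocks, so the numbers won't match `du` exactly; `dir_sizes` and `largest` are made-up names:

```python
import os


def dir_sizes(root: str) -> dict[str, int]:
    """Map each directory under root to the total bytes of the files below it."""
    sizes: dict[str, int] = {}
    # Walk bottom-up so every child total exists before its parent is summed.
    for path, dirnames, filenames in os.walk(root, topdown=False):
        total = 0
        for name in filenames:
            full = os.path.join(path, name)
            if not os.path.islink(full):
                total += os.path.getsize(full)
        for name in dirnames:
            # Symlinked directories are not walked, so they count as 0.
            total += sizes.get(os.path.join(path, name), 0)
        sizes[path] = total
    return sizes


def largest(root: str, count: int = 10) -> list[tuple[str, int]]:
    """Return the `count` biggest directories, largest first."""
    ranked = sorted(dir_sizes(root).items(), key=lambda kv: kv[1], reverse=True)
    return ranked[:count]
```

Calling `largest(".")` from the server root would surface a runaway log folder the same way the sorted `du` output did.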

Armed with this newfound piece of (still rather unbelievable) evidence I perform a mad scramble to search the codebase for where this extra logging is happening and sure enough I find a custom PHP error handler that is capturing (most) errors and redirecting them to these individualized log files.

Conveniently enough, not ALL errors were being absorbed though, so I still knew the main error_log was working (and any time I explicitly error_logged it would go there, so I was none the wiser that this other error-catching was even happening).

Needless to say I removed the code as quickly as I found it, tail -f'd the error_log and to my dismay it was being absolutely flooded with syntax errors, runtime PHP exceptions, warnings galore, and all sorts of other things.

My jaw almost hit the floor. I've been with this company for 6 months and had no idea these errors were even happening!

The sad thing was how easy to fix all the errors ended up being. Most of them were "undefined index" errors that could have been completely avoided with a simple isset() check, but instead ended up throwing an exception, nullifying any code that came after it.

Anyway kids, the moral of the story is don't split up your log files. It makes absolutely no sense and can end up obscuring easily fixable bugs for half a year or more!

Happy coding.

-

Fuck me in the ass, but do it harder than this api just so I can feel some love 😖

it's one of those days where you have to migrate from soap to rest, only the rest api doesn't have the same structure or search parameters as the soap api, so there's this entire fucking application sending requests at a brick wall, and expecting a purple throbbing 12 inch cock of xml to be pushed into a multidimensional array and pushed through to the views to derange the mess, only you have to create that fucking 12 inch cock from several 2 inch dipsticks that have a different hierarchy, different field names, and merge the shit together with a glue gun...

good thing it's only an unexpected prod problem... right? 🤷♂️

Ah, the woes of a Monday on the legacy app adventures.

rant bullshit applications over engineered using a view to build a data set from hell adopt a piece of shit day

-

Fuck this

I get to work with an API where you CAN authenticate with username/password and get a token

But you CAN'T get user info from token (auth response contains ONLY token)

So what I have to do:

1. Get token

2. Request ALL FUCKING USERS and load them into my DB

3. Search through local DB by username and, yeah, here I go

Now I need to have a cron job to update the user DB once or twice a day
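The workaround boils down to a local index that gets rebuilt on a schedule. A minimal sketch, assuming each user record carries a `username` field; `fetch_all_users` stands in for whatever call returns the full user list, and every name here is hypothetical:

```python
from typing import Callable, Iterable, Optional

User = dict  # assumed shape: {"username": ..., plus whatever the API returns}


class UserDirectory:
    """Local lookup table for an API that cannot resolve a user from a token."""

    def __init__(self, fetch_all_users: Callable[[str], Iterable[User]]):
        # fetch_all_users(token) is a stand-in for the "request ALL users" call.
        self._fetch = fetch_all_users
        self._by_username: dict = {}

    def refresh(self, token: str) -> int:
        """Rebuild the index; meant to be called from the cron job."""
        fresh = {user["username"]: user for user in self._fetch(token)}
        # Swap only after the whole fetch succeeded, so a failed run
        # leaves the previous data in place.
        self._by_username = fresh
        return len(fresh)

    def lookup(self, username: str) -> Optional[User]:
        return self._by_username.get(username)
```

An in-memory dict is used here only to keep the sketch short; the rant's version stores the same data in a database.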

I can't think of ANY reason not to allow this

-

!rant

Here's a tip someone showed me a while back; thought I'd share it here in case somebody didn't know. It works with browser bookmarks and keywords that you assign.

Use-case:

typing "java: String" into the search bar will show search results in Google that only include pages from the Java API about Strings.

Steps:

1. Search for "https://docs.oracle.com/javase/7/...: %s" in Google.

2. Bookmark it

3. Edit the Bookmark and assign the Keyword "java: "

4. ??? (Search "java: String". duh)

5. Profit!!1!1

Use-case:

Or typing "stack: help" will search for help in stack overflow.

Steps:

Search %s in SO, bookmark and assign a keyword.
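What the browser does with such a bookmark can be sketched as a plain substitution: split off the keyword, URL-encode the rest, and drop it into the `%s` slot. This is only an illustration of the idea, not how any browser actually implements it, and the keyword table below is an assumption:

```python
from urllib.parse import quote_plus

# Hypothetical keyword table mirroring the "stack:" example.
KEYWORDS = {
    "stack:": "https://stackoverflow.com/search?q=%s",
}


def expand(entry: str, keywords=KEYWORDS):
    """Turn 'keyword query' into a URL, or None if the keyword is unknown."""
    keyword, _, query = entry.partition(" ")
    template = keywords.get(keyword)
    if template is None or not query:
        return None
    # The typed query replaces the %s placeholder from the bookmark.
    return template.replace("%s", quote_plus(query))
```

So `expand("stack: help")` yields the Stack Overflow search URL for "help", and anything with an unknown keyword returns `None` and would go to the default search engine instead.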

As far as I know this works in FF and Chrome. Cheers

-

Received $1000 bill from google because my navigation app used google maps and the places search/autocomplete API to allow users to search for a place.

Switching to mapbox maps and places api.

#%$£€@&?

-

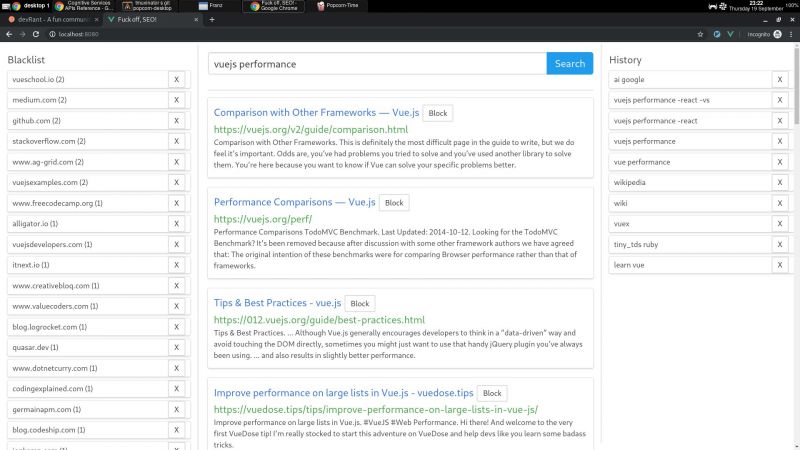

I wrote a node + vue web app that consumes bing api and lets you block specific hosts with a click, and I have some thoughts I need to post somewhere.

My main motivation for this is that the search results I've been getting with the big search engines are lacking a lot of quality. The SEO situation right now is very complex but the bottom line is that there is a lot of white hat SEO abuse.

Commercial companies are fucking up the internet very hard. Search results have become way too profit oriented and thus not neutral. Personal blogs are becoming very rare. Information is losing quality and sites are losing identity. The internet is consolidating.

So, I decided to write something to help me give this situation the middle finger.

I wrote this because I consider the ability to block specific sites a basic universal right. If you were ripped off by a website or you just don't like it, then you should be able to block said site from your search results. It's not rocket science.

Google used to have this feature integrated but they removed it in 2013. They also had an extension that did this client side, but they removed it in 2018 too. We're years past the time where Google forgot their "Don't be evil" motto.

AFAIK, the only search engine on earth that lets you block sites is millionshort.com, but if you block too many sites, the performance degrades. And the company that runs it is a for profit too.

There is a third party extension that blocks sites called uBlacklist. The problem is that it only works on google. I wrote my app so as to escape google's tracking clutches, ads and their annoying products showing up in between my results.

But aside from that, uBlacklist does the same thing as my app, including the limitation that this isn't an actual search engine, it's just filtering search results after they are generated.

This is far from ideal because filtering before the results are generated would be much preferable.

But developing a search engine, which means both indexing and ranking pages, is prohibitively expensive for a single person. Which is sad, but can't do much about it.

I'm also thinking of implementing the ability to promote certain sites, the opposite of blocking, so these promoted sites would get more priority within the results.

I guess I would have to move the promoted sites between all pages I fetched to the first page/s, but client side.

But this is suboptimal compared to having actual access to the rank algorithm, where you could promote sites in a smarter way, but again, I can't build a search engine by myself.
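The block-then-promote step, applied after the results come back, could look roughly like this. It's a Python sketch of the idea rather than the node code from the app, and it assumes each result is a dict with a `url` key; `host_of` and `curate` are made-up names:

```python
from urllib.parse import urlsplit


def host_of(url: str) -> str:
    """Hostname of a result URL, lowercased and without a leading 'www.'."""
    host = urlsplit(url).hostname or ""
    return host[4:] if host.startswith("www.") else host


def curate(results, blocked, promoted):
    """Drop results from blocked hosts, then float promoted hosts to the top."""
    kept = [r for r in results if host_of(r["url"]) not in blocked]
    # sorted() is stable, so the engine's own order survives within each
    # group; False (promoted) sorts before True (everything else).
    return sorted(kept, key=lambda r: host_of(r["url"]) not in promoted)
```

Because the sort is stable, promotion only reorders groups and never reshuffles the engine's ranking inside them, which is about as much as you can do without access to the ranking itself.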

I'm using mongo to cache the results, so with a click of a button I can retrieve the results of a previous query without hitting bing. So far a couple of queries don't seem to bring any real performance or space issues.

On using bing: bing is basically the only reliable API option I could find that was hobby cost worthy. Most microsoft products are usually my last choice.

Bing is giving me a 7 day free trial of their search API until I register a CC. They offer a free tier, but I'm not sure if that's only for these 7 days. Otherwise, I'm gonna need to pay like $5.

Paying or not, having to use a CC to use this software I wrote sucks balls.

So far the usage of this app has resulted in me becoming more critical of sites and finding sites of better quality. I think overall it helps me to become a better programmer, all the while having better protection of my privacy.

One downside is that I'm the only one curating for myself, whereas I could benefit from the block/promote lists of other people that I trust.

I will git push it somewhere at some point, but it does require some more work:

I would want to add a docker-compose script to make it easy to start, and I didn't write any tests unfortunately (I did use eslint for both apps, though).

The performance is not excellent (the app has not experienced blocks so far, but it does make the coolers spin after a bit) because the algorithms I wrote were very POC.

But it took me some time to write it, and I need to catch some breath.

There are other more open efforts that seem to be more ethical, but they are usually hard to use or just incomplete.

commoncrawl.org is a free index of the web. one problem I found is that it doesn't seem to index everything (for example, it doesn't seem to index the blog of a friend I know that has been writing for years and is indexed by google).

it also requires knowledge on reading warc files, which will surely require some time investment to learn.

it also seems kinda slow for responses,

it is also generated only once a month, and I would still have little idea on how to implement a pagerank algorithm, let alone code it.

-

!Rant

Is this what we've all been waiting for?

CodeCorrect finds solutions to common errors in your code

"The hack works by inserting a piece of JavaScript in your web code that reroutes uncaught exceptions to a local node.js web server. From there, the code sends a request to StackOverflow's API to search for error messages and return the highest-ranked solutions to user-submitted questions. Answers are extracted from the StackOverflow, and if they can automatically be converted into instructions, changes will be made to the original code."

https://techcrunch.com/2017/05/...

-

My god the wall looks really punchable right now. Let me tell you why.

So I’m working on a data mining project, and I’m trying to get data from google trends. Unfortunately, there have been a lot of roadblocks for what should have been an easy task.

First it won’t give a raw search volume, only relative “interest”.

Fortunately it lets me compare search terms, which would work for my needs; however, it will only let me compare a few at a time. I need to compare 300.

So my solution is simple: compare all the terms relative to one term. Simple enough, but it would be time consuming so I figured I’d write a program to get the data.
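The "everything relative to one term" trick can be sketched as follows: every small comparison includes the same anchor term, and each value is divided by the anchor's value from the same comparison. The input shape (one dict of term to interest per batch) is an assumption for the sketch, not the format the module returns:

```python
def rescale(batches, anchor):
    """Put terms from separate comparisons on one scale, anchor = 1.0.

    Each batch is assumed to map term -> relative interest and to contain
    the anchor term, since values are only comparable within one batch.
    """
    combined = {anchor: 1.0}
    for batch in batches:
        base = batch[anchor]
        if base == 0:
            # An anchor that rounds to 0 makes the batch unusable.
            raise ValueError("anchor has zero interest in this batch")
        for term, value in batch.items():
            if term != anchor:
                combined[term] = value / base
    return combined
```

Since the interest values come back rounded, the ratios are approximate, and an anchor that is far more or far less popular than the other terms will lose precision.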

But then I learned that they don’t have an official api. There’s a node module for this very thing based on a python module that reverse engineers the api endpoints. I thought as long as it works I’d use it.

It does work... But then I discovered that google heavily rate limits the endpoints.

So... I figured I’d build a system to route the requests through different tor nodes to get around the rate limit. Good solution right? Well like a slap to the face, after spending way too much time getting requests through tor working, I discovered that THEY FUCKING BLOCKED TOR IPS.

So I gave up, and resigned myself to waiting 5 hours for my program to get the data... 1 comparison at a time... 60s interval between requests. They, of course, don’t tell you the rate limit threshold, so this is more or less a guess (I verified that 30s interval was too short and another person using the module suggested 60s).

Remember when I said the discovery that they blocked tor came like a slap to the face? This came as a sledge hammer to the face: for some reason my program didn’t dump the data at the end. I waited 5 fucking hours to get nothing.
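A way to make a 5 hour run survivable is to write each result to disk as soon as it arrives instead of dumping everything at the end. A minimal sketch; `fetch` stands in for whatever call gets one comparison, and the JSON Lines output format is just a convenient assumption:

```python
import json
import time


def collect(terms, fetch, out_path, interval=60.0, sleep=time.sleep):
    """Fetch one term at a time and append each result to disk immediately."""
    done = 0
    with open(out_path, "a", encoding="utf-8") as out:
        for index, term in enumerate(terms):
            if index:
                # Wait between requests to stay under the guessed rate limit.
                sleep(interval)
            record = {"term": term, "data": fetch(term)}
            # One JSON object per line; flush so a crash loses at most
            # the request currently in flight.
            out.write(json.dumps(record) + "\n")
            out.flush()
            done += 1
    return done
```

Because the file is opened in append mode, a restarted run can skip the terms already present in the file rather than starting from zero.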

I am so mad right now. I am so fucking mad.

-

Doing a full rewrite from some DIY spaghetti framework: when it can't find a search query it returns "false" with the status code 200; the same php file responsible for querying an external api is put into all sorts of named folders, so e.g. a user that is on results page X can continue searching on the same URL, instead of doing proper url rewrites or ajax calls to the one in the root directory; html is thrown into every other php line; there's a DIY sort function for a numbers array that fails to sort 0 before 1. And that is all from just a 10 minute review, can't wait to see the rest.

-

Discovered pro tip of my life :

Never trust your code

Achievements unlocked :

Successfully running a C++ GPU accelerated offscreen rendering engine, with texture loading code that had a faulty validation bug, for over a year in production for more than 1.5M daily active Android users without any issues.

History : Recently I was writing a new rendering engine that uses our GPU pipeline engine.. and our prototype android app benchmark test always fails with a black rendering frame detection assertion.

Practice:

Spend more than a month to debug a GPU pipeline system based on directed acyclic graph based rendering algorithm.

New abilities added :

Able to debug OpenGL ES code on Android using print statements placed in the source code via binary search.

But why?

I was aware of the issue for over a month and just ignored it thinking it's a driver bug in my android device.. but when the api was used by one of the Android devs, he reported the same issue. That same night at 2:59AM ....

Satan came to me and told me that " ok listen man, here is what I am gonna do with you today, your new code will be going production in a week, and the renderer will give you just one black frame after random time, and after today 3AM, your code will not show GL Errors if you debug or trace. Buhahahaha ahhaha haahha..... Puffff"

And he was gone..

Thanks satan for not killing me.. I will not trust stable production code anymore even though every line is documented and peer reviewed.

-

I like js and node in general.

But there's this thing I hate about NodeJs...

The blogs. The goddamn blogs.

Every goddamn blog post. Is code. Dozens of lines of code.

Oh, so you want X feature? Just copy paste this shit.

I swear to god, blog posts are the source versioning system to these people.

What they should do instead is

a) Create a package.

b) Add tests to it.

c) Present the package to the reader with some minimal code.

But I'm getting a strong impression that node blog writers want you to copy the code in their post, paste it in your project, and be happy with it.

Now, I'm not assuming that every person posting in medium.com is a software engineer (and by engineer I mean an engineer, not some fuckwad who begs for github stars on dev communities).

The problem to me is that they fucking SATURATE the goddamn search results.

The same goes for finding an npm package for your need, because there are so many low quality packages it's saturated too, you have to plow through this stinking pile of projects that have very low quality,

and there's not a really good npm finder out there. Half of them are dead, some look and load like shit, and npm search has a low barrier for good code.

Me on rails, OTOH "ok, I need this thing", I google that and I swear to [-∞,+∞] I find GOOD packages, well designed, no cookie cutter bullshit, no obscure marketing shit on the README.md, it is very clear what this shit does, and the api is designed for HUMANS.

and it actually takes very little time to know if there's no such package.

I don't have to read dozens of fucking my-fuck-blog.io (jesus christ, the io domain has become such a fucking joke, it got fucking abused to death, there are some cool sites out there using it, but my god, James H. Marketing likes to just absorb everything he can, and the internet was not going to be a fucking exception)

does all of this make sense?

-

So I just spent the last few hours trying to get an intro of given Wikipedia articles into my Telegram bot. It turns out that Wikipedia does have an API! But unfortunately it's born as a retard.

First I looked at https://www.mediawiki.org/wiki/API and almost thought that that was a Wikipedia article about API's. I almost skipped right over it on the search results (and it turns out that I should've). Upon opening and reading that, I found a shitload of endpoints that frankly I didn't give a shit about. Come on Wikipedia, just give me the fucking data to read out.

Ctrl-F in that page and I find a tiny little link to https://mediawiki.org/wiki/... which is basically what I needed. There's an example that.. gets the data in XML form. Because JSON is clearly too much to ask for. Are you fucking braindead Wikipedia? If my application was able to parse XML/HTML/whatevers, that would be called a browser. With all due respect but I'm not gonna embed a fucking web browser in a bot. I'll leave that to the Electron "devs" that prefer raping my RAM instead.

OK so after that I found on third-party documentation (always a good sign when that's more useful, isn't it) that it does support JSON. Retardpedia just doesn't use it by default. In fact in the example query that was a parameter that wasn't even in there. Not including something crucial like that surely is a good way to let people know the feature is there. Massive kudos to you Wikipedia.. but not really. But a parameter that was in there - for fucking CORS - that was in there by default and broke the whole goddamn thing unless I REMOVED it. Yeah because CORS is so useful in a goddamn fucking API.

So I finally get to a functioning JSON response, now all that's left is parsing it. Again, I only care about the content on the page. So I curl the endpoint and trim off the bits I don't need with jq... I was left with this monstrosity.

curl "https://en.wikipedia.org/w/api.php/...=*" | jq -r '.query.pages[0].revisions[0].slots.main.content'

Just how far can you nest your JSON Wikipedia? Are you trying to find the limits of jq or something here?!
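For the bot itself, the same path as the jq filter above can be walked in Python once the response is parsed. A small sketch that only mirrors that path and returns `None` when any level is missing; it assumes the response has been decoded into plain dicts and lists, and the function name is made up:

```python
def first_revision_content(payload):
    """Follow .query.pages[0].revisions[0].slots.main.content, or return None."""
    try:
        page = payload["query"]["pages"][0]
        return page["revisions"][0]["slots"]["main"]["content"]
    except (KeyError, IndexError, TypeError):
        # Missing page, no revisions, or an unexpected response shape.
        return None
```

What comes back is still Wikitext, so this only solves the nesting part, not the markup.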

And THEN.. as an icing on the cake, the result doesn't quite look like JSON, nor does it really look like XML, but it has elements of both. I had no idea what to make of this, especially before I had a chance to look at the exact structured output of that command above (if you just pipe into jq without arguments it's much less readable).

Then a friend of mine mentioned Wikitext. Turns out that Wikipedia's API is not only retarded, even the goddamn output is. What the fuck is Wikitext even? It's the Apple of wikis apparently. Only Wikipedia uses it.

And apparently I'm not the only one who found Wikipedia's API.. irritating to say the least. See e.g. https://utcc.utoronto.ca/~cks/...

Needless to say, my bot will not be getting Wikipedia integration at this point. I've seen enough. How about you make your API not retarded first Wikipedia? And hopefully this rant saves someone else the time required to wade through this clusterfuck.12 -

Boss"So, we need to get some data about the users using the APIs from this list of sites."

Me"Alright, sounds feasible enough"

Navigating to first site.

M"Hold on, where's the API?"

B"What do you mean? You're looking at it."

M"This is a website with a search bar, not an API"

B"Same thing. Get to scraping that data."

M"I-It's written in a JS framework to be reactive in a half-assed way."

B"We need that data"

M"The data is not even consistent!"

B"That's why we need to join it with all these different sources."

The API was a lie. None of the sites had anything remotely similar to an API.

Having to use bloody selenium with chrome driver to scrape all the information because of course, it has to be done programmatically every week from now on.

I just hope no captcha of any kind is installed before I finish this project.

-

React router is shit

I have never seen more retarded library.

Not only do those suckers change 100% of the API every fucking update for no reason, they also have the most fucked up documentation ever.

No search in the docs!!! Fucking bullshit examples with no such easy things like how to create nested routes.

Please, stop using this piece of shit, I'm tired of working with this fucking abomination. Hope they will delete their shit repo one day.

-

Chrome. Hit F12 and start typing. Those keystrokes used to go into the console, right? I'm not imagining things...

And then some giant free-standing penis decided that instead, the initial focus should be in the search box.

So you type, nothing appears in the console, you focus the console, and carry on.

Then you're wondering why your api calls aren't in the network tab. Caching issues? Event handler crapping out? No, it's because that command you tried to enter ten minutes ago is still in the search box and being used as a filter.

Because someone decided to change the default focus.

As a wise man once said: "who the fuck was that? Who's the slimy little communist shit twinkle-toed cocksucker who just signed his own death warrant?"

Why didn't anyone stop him? In the meeting where he suggested that, why didn't his colleagues grab him by the testicles and drag him out of the building?

Why?

Fuckers.

-

Received feedback on a task I made for a job interview (I didn't get a technical interview).

The task was easy, with nothing special about it, which made me think that if that's what the job is like, I don't want to work there. It was a simple web page with search functionality. I did the task anyway.

The feedback I got was useless. It said that I made a complex and over-engineered solution.

What I made, mind you, was a one endpoint API and a single Vue.js component instead of using jQuery to update the results. That's it. OVER-ENGINEERED!

Complete waste of time.

-

Why Gmail. Why the fuck do your search parameters, especially your date filters, not work anywhere near as expected.

You make me have to query and test, query and test, just, randomly fucking guessing because, fuck it, right?

With a good 10 second refresh time. I love twiddling my thumbs and pulling my hair out.

after:2018/11/1 should produce emails from Nov 1st onward.

Not TODAY ONLY if no other parameters are specified.

If there's a from: parameter, now we want to do after Nov 1st, right?

And also, don't show me how to sort in reverse order, either. Not without a complete rewrite of my class there, which clearly I'm too lazy to do right now.

Fuck the Gmail Api, responsible for weeks of wasted dev time... or more aptly put, "fuck devs using our gmail api" says the maniacal, sociopath devils that created it

fuckers.

-

That's it, where do I send the bill, to Microsoft? Orange highlight in image is my own. As in only way to see that something wasn't right. Oh but - Wait, I am on Linux, so I guess I will assume that I need to be on internet explorer to use anything on microsoft.com - is that on the site somewhere maybe? Cause it looks like hell when rendered from Chrome on Ubuntu. Yes I use Ubuntu while developing, eat it haters. FUCK.

This is ridiculous - I actually WANT to use Bing Web Search API. I actually TRIED giving up my email address and phone number to MS. If you fail the I'm not a robot, or if you pass it, who knows, it disappears and says something about being human. I'm human. Give me free API Key. Or shit, I'll pay. Client wants to use Bing so I am using BING GODDAMN YOU.

Why am I so mad? BECAUSE THIS. Oauth through github, great alternative since apparently I am not human according to microsoft. Common theme w them, amiright?

So yeah. Let them see all my githubs. Whatever. Just GO so I can RELAX. Rate limit fuck shit workaround dumb client requirements google can eat me. Whats this, I need to show my email publicly? Verification? Sure just go. But really MS, this looks terrible. If I boot up IE will it look any better? I doubt it but who knows I am not looking at MS CSS. I am going into my github, making it public. Then trying again. Then waiting. Then verifying my email is shown. Great it is hello everyone. COME ON MS. Send me an email. Do something.

I am trying to be patient, but after a few minutes, I revoke access. Must have been a glitch. Go through it again, with public email. Same ugly almost invisible message. Approaching a billable hour in which I made 0 progress. So, lets just see, NO EMAIL from MS, Yes it appears in my GitHub, but I have no way to log into MS. Email doesnt work. OAuth isn't picking it up I guess, I don't even care to think this through.

The whole point is, the error message was hard to discover, seems to be inaccurate, and I can't believe the IRONY or the STUPIDITY (me, me stupid. Me stupid thinking I could get working doing same dumb thing over and over like caveman and rock).

Longer rant made shorter, I cant come up with a single fucking way to get a free BING API Key. So forget it MS. Maybe you'll email me tomorrow. Maybe Github was pretending to be Gitlab for a few minutes.

Maybe I will send this image to my client and tell him "If we use Bing, get used to seeing hard to read error messages like this one". I mean that's why this is so frustrating anyhow - I thought the Google CSE worked FINE for us :/

-

Alrighty, saturday morning rant time!

I just received a mail from one of my not-so-much-loved colleagues.

Now Background first: I work in IT-Support. We provide services for other companies. One of those services is monitoring servers and clients for various things. I recently took over the project (was assigned to do it) and restructured everything, wrote new scripts to test more stuff, successfully tested it internally and rolled it out over the last 2 weeks.

Now one of these scripts hooks into the Windows Update API and looks at the update history. It filters for known Windows Update Agent strings (UpdateOrchestrator, AutomaticUpdates and AutomaticUpdatesWuApp in case you also want to do something like this) and then looks for installation errors over the last 24 hours and whether there have even been any successful updates over the last one and a half months.
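The decision logic of such a check can be sketched independently of the Windows Update API. The sketch below works on an already-extracted history, where each entry is a hypothetical dict with `agent`, `succeeded` and `date` keys; it does not talk to Windows at all, and 45 days is my reading of "one and a half months":

```python
from datetime import datetime, timedelta

# Agent strings taken from the description above.
AGENTS = {"UpdateOrchestrator", "AutomaticUpdates", "AutomaticUpdatesWuApp"}


def assess(history, now,
           error_window=timedelta(hours=24),
           stale_after=timedelta(days=45)):
    """Report recent install errors and whether the machine looks out of date."""
    relevant = [entry for entry in history if entry["agent"] in AGENTS]
    recent_errors = [
        entry for entry in relevant
        if not entry["succeeded"] and now - entry["date"] <= error_window
    ]
    has_recent_success = any(
        entry["succeeded"] and now - entry["date"] <= stale_after
        for entry in relevant
    )
    return {"recent_errors": recent_errors, "out_of_date": not has_recent_success}
```

With a last successful update almost 50 days back, `out_of_date` comes out `True`, which matches the ticket in this story.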

Back to that mail.

My colleague sent me this lovely mail about a ticket i opened about his customer's servers being all out-of-date on updates.

"This is all wrong, everything's fine. I disabled the checks."

...

It's on bitch.

So i logged on to my work PC via TeamViewer, opened my script, connected to the customer and was ready to debug the shit out of my script, knowing i probably won't even need to.

I looked at the update history via Windows Update itself and behold: 1st April. That's almost 50 days in the past.

So the script works, go figure.

Great, so search for new Updates then.

>None found.

Hm. What could it be? Did my super special colleague forget to care about his very special totally-needs-WSUS-customer WSUS again?

Yup.

Online-Search finds a ton of new Updates.

Screenshot, write pissed mail to colleague, re-enable checks, breakfast.

-

Disclaimer: I hold no grudges or prejudices toward [CENSORED] company. I love the concept of the business model and the perks they pay their employees. Unfortunately, the company is very petty, and negligence is the core of the management. I got into an interview for the position, of Senior Software Engineer, and the interview wouldn't take place if wasn't for me to follow up with the person in charge countless times a day. The Vice President of Engineering was the most confused person ever encountered. Instead of asking challenging questions that plausibly could explain and portray how well I can manage a team, the methodology of working with various technology, and my problem-solving skills. They asked me questions that possibly indicated they don't even know what they need or questions that can easily get from a Google Search. I was given 40 hours to build a demo application whereby I had to send them a copy of the source code and the binary file. The person who contacted me don't even bother with what I told her that it is not a good practice to place the binary in cloud storage (Google Drive, OneDrive, etc) and I request extra time to complete the demo application. Since I got the requirement to hand them the repository of the codebase, it is common practice to place the binary in the release section in the Git Platform (Jire, Azure DevOps, Github, Gitlab, etc). Which he surprisingly doesn't know what that is. There's the API key I place locally in .env hidden from the codebase (it's not good practice to place credentials in the codebase), I got a request that not only subscript to an API is necessary but I have to place them in the codebase. I succeed to pass the source code on time with the quality of 40 hours, I told him that I could have done it better, clearer and cleaner if I was given more grace of time. (Because they are not the only company asking me to write a demo application prior to the assessment. Extra grace was I needed)

So, long story short: when it was my turn to ask questions, I asked him what it is like working at [CENSORED] company. I was told that the "environment is friendly, diverse". But out of sheer curiosity, I contacted several former employees (Software Engineers) on LinkedIn, and they told me that the company has high turnover, despises diversity, and that the nepotism is intense. Most favours are handed out based on how well you create the illusion of working for them and how close you are to upper management. I asked those former employees for evidence to substantiate what they told me. Seeing the evidence of how they manage projects, their method of communication, and how biased upper management actually is led me to withdraw my application. Honestly, I wouldn't want to work for a company where the majority can't communicate. -

So I finished a 6-month-long frontend course and the school proposed an internship at one of the best local coding companies. I got their test, basically to write an 'API-based internet app with any of the JS frameworks'.

Me: 'Hooray!!!'. A couple of days later, app delivered. Made with jQuery (because this is the only JS framework the fucking coding school taught me). A very long, very personal cover letter sent along with it.

They: 'We are sorry, but we will not consider anything written with jQuery'.

Me: 'OK'. Learning ReactJS by myself for two weeks, 8-10 hours daily. Another two weeks - another project delivered. News aggregator, fetching from 3 APIs and merging news based on publication time. News categories, news search - all the bells and whistles. Made 100% myself - not some clone from a Udemy workshop or YouTube.
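The "merging news based on publication time" part can be sketched roughly like this. It is written in Python rather than the ReactJS of the actual project, and the `Article` type and `merge_feeds` function are hypothetical names, not anything from the rant.

```python
import heapq
from dataclasses import dataclass
from datetime import datetime
from typing import List


@dataclass(frozen=True)
class Article:
    title: str
    source: str
    published: datetime


def merge_feeds(*feeds: List[Article]) -> List[Article]:
    """Merge any number of feeds into one list, newest article first."""
    # heapq.merge needs every input already sorted in the target order.
    ordered = [sorted(feed, key=lambda a: a.published, reverse=True) for feed in feeds]
    return list(heapq.merge(*ordered, key=lambda a: a.published, reverse=True))
```

Sorting each feed first and then merging keeps the combined list ordered without re-sorting everything whenever one source is refreshed.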

They: 'Sorry, your project isn't good enough'.

Me (silently): Fuck you too, stupid HR manager. If you aren't able to see the motivation and dedication in a person, shove a dildo up your ass. -

-Rant-

How do you (not) secure your REST-based web service?

1. Chain it to a shady organic authentication system built by a horde of monkeys high on Tequila.

2. Have secret keys that get copy-pasted into flat config files, and index them on your code search engine.

3. Make the onboarding so platform-specific that you need 500 environment variables, 50 scripts, 5 fancy device presses and a tap dance to make a GET call to the service.

4. Fish through 500 rotating log files that the authentication system generates for each API call made.

5. Leave traces all over the host so if you have to start over, you should sudo rm -rf / and set fire to your computer. -

TL;DR: I was editing the wrong file, let's go to bed.

We have this huge system that receives data from an API endpoint, does a whole bunch of stuff, going through three other servers, and then via some calculation based on the data received from the UI, and data received from the endpoint, it finally sends the calculated fields to the UI via websocket.

Poor me sitting for over 4 hours debugging and changing values in the logic file trying to understand why one of the fields ends up being null.

Of course every change needs a reboot of all 4 servers involved, and a hard refresh of the UI.

I even tried to search for the word null in that file, but to no avail.

After scattering hundreds of console logs, and pulling my hair out, I found out that I was editing the wrong file.

I guess it's time for some sleep. -

¡rant|rant

Nice to do some refactoring of the whole data access layer of our core logistics software. Let me tell a story.

The project is around 80k lines of code, with a lot of integrations with an ERP system and an sql database.

The ERP system is old, with a shitty API to match: only static methods through a wrapper around a C++ library.

Imagine an order table.

To access an order, you would first need to open the database by calling Api.Open(...file paths) (yes, it's a fucking flat-file type database).

Now the database is open, now you would open the orders table with method Api.Table(int tableId) and in return you would get an integer value, the pointer.

Now for the actual order. First you need to search for it by setting the search parameter on the column ID of the order number, while checking all calls for some BS error code.

Api.SetInt(int pointer, int column, int queryValue)

Then call the find method.

Api.Find(int pointer)

Then, to top off this shitcake of an API: if it doesn't find your shit, it will use the "close enough" method of search.

And now to read a single string 😑

First you will look in the outdated and incorrect documentation given to you from the devil himself and look for the column ID to find the length of the column.

Then you create a string variable with ALL FUCKING SPACES.

Now you call the Api.GetStr(int pointer, int column, ref string emptyString, int length)

Now you have passed your poor string to the api's demon orgy by reference.

Then some more BS error code checking.

Now you have read a string value 😀

Now keep in mind to repeat these steps for all 300+ columns in the order table.
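To make the dance above concrete, here is a rough Python sketch. Everything in it is hypothetical: `FakeErpApi` is an in-memory stand-in that only mimics the call shapes described (Table, SetInt, Find, GetStr), the real wrapper is not Python, Api.Open is left out, and the "close enough" rule used here (land on the next key at or above the query) is a guess.

```python
from typing import Dict, List, Optional


class FakeErpApi:
    """Hypothetical in-memory stand-in for the static ERP API in the rant."""

    OK = 0
    NOT_FOUND = 1

    def __init__(self, tables: Dict[int, List[dict]]):
        self._tables = tables   # table id -> rows, each row keyed by column id
        self._cursors = {}      # pointer -> cursor state

    def table(self, table_id: int) -> int:
        pointer = len(self._cursors) + 1
        self._cursors[pointer] = {"rows": self._tables[table_id], "query": None, "row": None}
        return pointer

    def set_int(self, pointer: int, column: int, value: int) -> int:
        self._cursors[pointer]["query"] = (column, value)
        return self.OK

    def find(self, pointer: int) -> int:
        cursor = self._cursors[pointer]
        column, value = cursor["query"]
        # Assumed "close enough" behaviour: land on the next key >= the query.
        matches = sorted((r for r in cursor["rows"] if r[column] >= value),
                         key=lambda r: r[column])
        if not matches:
            return self.NOT_FOUND
        cursor["row"] = matches[0]
        return self.OK

    def get_str(self, pointer: int, column: int, buffer: bytearray, length: int) -> int:
        # Fill the caller's buffer in place, padded with spaces to `length`.
        text = str(self._cursors[pointer]["row"][column])[:length].ljust(length)
        buffer[:length] = text.encode("ascii", errors="replace")
        return self.OK


def read_string(api: FakeErpApi, pointer: int, column: int, length: int) -> str:
    buffer = bytearray(b" " * length)   # the "string with ALL SPACES"
    if api.get_str(pointer, column, buffer, length) != api.OK:
        raise RuntimeError("GetStr failed")
    return buffer.decode("ascii").rstrip()


def read_order_string(api: FakeErpApi, table_id: int, key_column: int,
                      order_number: int, column: int, length: int) -> Optional[str]:
    pointer = api.table(table_id)
    if api.set_int(pointer, key_column, order_number) != api.OK:
        raise RuntimeError("SetInt failed")
    if api.find(pointer) != api.OK:
        return None
    # Guard against a "close enough" hit by re-reading the key column.
    if read_string(api, pointer, key_column, 10) != str(order_number):
        return None
    return read_string(api, pointer, column, length)
```

Wrapping the steps in one helper at least keeps the error-code checks and the "close enough" guard in a single place instead of repeating them per column.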

News from the creators: SQL Server? Yes, SQL is good, so everything will be better?

Now imagine the poor developers that got tasked with converting this shitcake to use an MS SQL server, which they did.

Now I can honestly say that I found the best SQL Server benchmark tool. This sucker churns out just above 105K SQL statements per second at peak, and ~15K per second for 1.5 seconds to read an order. 1.5 seconds to read less than 4 fucking kilobytes!

Right at that moment I realized that our software would grind to a fucking halt before even thinking about starting it. And that me, myself and I would be tasked with fixing it.

4 months later and two weeks until functional beta, here I am. We created our own api with the SQL server 😀

And the outcome of all this...

Fixes bugs older than a year. Forces rewriting part of the code base. Forces removal of dirty fixes. Allows proper unit and integration testing, and even database testing with the snapshot feature.

The whole ERP system could be replaced with ~10 lines of code (provided same relational structure) on the application while adding it to our own API library.

Best part is probably the performance improvements 😀. Up to 4500 times faster and 60 times less memory usage, with only managed memory. -

Am I crazy?

Right now we have an API which returns a full planning for a week for 300 employees with indicators (like "late", "may be postponed", etc.) in 4 seconds.

I'm under pressure, with people telling me it's not fast enough.

I honestly think it is fast.

In terms of data, it's around 100 MB of JSON. AND you can do actions on the whole set if needed.

Long story short, I think 4 seconds to get all that data is pretty great. Customers think they should have it instantly.

(Never mind the whole filtering system at their disposal, they literally only load the full set and then MANUALLY scroll (yes, there is a quick search box).)

What more can I do? Cache that? I can. But they also expect that any changed value is reflected.

And we fucking do it. While you are on the page, a SignalR connection is created and notified when any of the data changes, and it updates the front end. Takes around 500 ms.
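One way to square "cache that" with "any changed value is reflected" is to let the change notifications patch the cached copy instead of throwing it away. A minimal Python sketch of that idea, under assumptions: `PlanningCache`, `load_week` and the data shape (week key, then employee id, then fields) are all invented here, and this is not SignalR code.

```python
from typing import Callable, Dict


class PlanningCache:
    """Caches one full planning per week and patches it on change events."""

    def __init__(self, load_week: Callable[[str], dict]):
        self._load_week = load_week        # the slow 4-second call
        self._weeks: Dict[str, dict] = {}  # week key -> {employee id -> fields}

    def get(self, week: str) -> dict:
        # Only the first request for a week pays for the full load.
        if week not in self._weeks:
            self._weeks[week] = self._load_week(week)
        return self._weeks[week]

    def apply_change(self, week: str, employee_id: str, fields: dict) -> None:
        # Called from the change notification; patch in place, never reload.
        cached = self._weeks.get(week)
        if cached is not None:
            cached.setdefault(employee_id, {}).update(fields)
```

Whether this helps depends on where the 4 seconds actually go: if most of it is shipping 100 MB of JSON to the browser, a server-side cache alone won't fix that.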

Apparently "too slow".

I honestly don't see what more we can do with our small 4-dev team.

Give me 56 developers and I can do something, but right now I'm proud of the result. -

Microsoft: "Let's publish APIs that all developers want and not tell them how to access it, making them waste hours on researching it...."

https://developer.linkedin.com/docs...

Microsoft: "That's a good one! Let's also not answer any of the stack overflows"

https://google.com/search/...

Microsoft: "That'll show 'em!" -

PSA: Cloudflare had a bug in their system where they were dumping random pieces of memory in the body of HTML responses, things like passwords, API tokens, personal information, chats, hotel bookings, in plain text, unencrypted. Once discovered they were able to fix it pretty quickly, but it could have been out in the wild as early as September of last year. The major issue with this is that many of those results were cached by search engines. The bug itself was discovered when people found this stuff on the Google search results page.

It's not quite the end of the world, but it's much worse than Heartbleed.

Now excuse me this weekend as I have to go change all of my passwords. -

So, for almost a week I've been trying to get familiar with Algolia, which was to be used as the API for the search feature in our app. But now we are going with Elasticsearch.

-

Working on an app to sync data between our ticketing system and an API a vendor made for us to interact with their ticketing system. I put off working on it for months, mostly because I had mountains of other "urgent" things that jumped in my face, but also because I needed to design the whole thing, and I really have to get into the right frame of mind for that kind of creative organization.

Today I dove into it. I built the JSON to submit, given whatever variables are necessary, and figured out after a while that the smartest way to handle this is not to search for an existing internal ticket, but to have the creation of the internal ticket set a flag for an automated sync process to check when it runs.
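The flag idea could look something like this in Python. It is a sketch under assumptions only: the `Ticket` fields, the payload keys, `VendorError` and the `push_to_vendor` callable are invented for illustration and say nothing about the vendor's real API.

```python
import json
from dataclasses import dataclass
from typing import Callable, Iterable, Optional


class VendorError(Exception):
    """Hypothetical error raised when the vendor API rejects a request."""


@dataclass
class Ticket:
    ticket_id: int
    summary: str
    needs_sync: bool = True          # set when the internal ticket is created
    vendor_id: Optional[str] = None  # filled in after a successful sync


def build_payload(ticket: Ticket) -> str:
    """Build the JSON body to submit; the field names are made up."""
    return json.dumps({"external_ref": ticket.ticket_id, "summary": ticket.summary})


def run_sync(tickets: Iterable[Ticket], push_to_vendor: Callable[[str], str]) -> int:
    """Push every flagged ticket and return how many were synced."""
    synced = 0
    for ticket in tickets:
        if not ticket.needs_sync:
            continue
        try:
            ticket.vendor_id = push_to_vendor(build_payload(ticket))
        except VendorError:
            continue  # leave the flag set so the next run retries
        ticket.needs_sync = False
        synced += 1
    return synced
```

Because the flag is only cleared after a successful push, a failed run just means the ticket gets picked up again next time, with no searching for existing tickets.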

It's going to be much easier when I get that built, but now, knowing that, I'm daunted enough that I'm procrastinating. Think of something, chart it out with notes in a text editor, procrastinate. That is probably like 95% of the time I spend in "development." -

Context: New to TypeScript. Writing a thing, doing it for work, good opportunity to stretch my dev legs. Using a proprietary lib, alternatives not an option.

Rant begin:

SOOOO, who the fuck thought THIS was a good idea:

1. Lib has minified react in dev (because closed source) meaning no downstream errors AND the entire premise of the lib is that a widget is a react component, so I'm writing typescript react the entire time without downstream errors

2. SHIT docs. By that, I mean there's an API reference page that's so sparse there's literally a set of CRUCIAL interfaces that only say the word 'Interface' on them. That's it. That's what I get. It's an interface. NO FUCKING SHIT SHERLOCK, what the fuck is it though? What's its purpose? Is it an interface for a dog? A dog that has a 'shit' property? Or a cat? Or a cat eating dog shit? Nobody fucking knows - the docs sure as fuck don't care.

3. No syntax highlighting - editors, IDEs (I've tried a few) can't even find the lib inside this environment, so Code and everything else thinks I'm importing shit that doesn't even exist - so no error prediction, no code completion based on the syntax of the library, none of that.

4. There are some EXTREMELY basic samples - these samples exclusively use React classes - no function components, no hooks, nada - just classes and even perfect replicas of the sample code display erratic behavior like errors about missing props, so that's mostly FUCKING USELESS

5. And this... this is where the straw breaks the fucking camel's back... there's no... there's no hot reloading... Do you know what that (in conjunction with the previous 4 fuckups) means?

When I write anything or I fuck up (which of course I'm doing every time I write half a line because how the fuck?) I have to restart the client and server EVERY FUCKING TIME and manually test to see if the error (THAT ONLY GETS REPORTED IN THE LOCAL UI) is gone or different.