Search - "faster speed"

I absolutely HATE "web developers" who call you in to fix their FooBar'd mess, yet can't stop themselves from dictating what you should and shouldn't do, especially when they have no idea what they're doing.

So I get called in to a job improving the performance of a Magento site (and let's just say I have no love for Magento for a number of reasons) because this "developer" enabled Redis and expected everything to be lightning fast. Maybe he thought "Redis" was the name of a magical sorcerer living in the server. A master conjurer capable of weaving mystical time-altering spells to inexplicably improve the performance. Who knows?

This guy claims he spent "months" trying to figure out why the website couldn't load faster than 7 seconds at best, and his employer is demanding a resolution so he stops losing conversions. I usually try to avoid Magento because of all the headaches that come with it, but I figured "sure, why not?" I mean, he built the website less than a year ago, so how bad can it really be? Well...let's see how fast you all can facepalm:

1.) The website was built brand new on Magento 1.9.2.4...what? I mean, if this were built a few years back, that would be a different story, but building a fresh Magento website in 2017 in 1.x? I asked him why he did that...his answer absolutely floored me: "because PHP 5.5 was the best choice at the time for speed and performance..." What?!

2.) The ONLY optimization done on the website was Redis cache being enabled. No merged CSS/JS, no use of a CDN, no image optimization, no gzip, no expires rules. Just Redis...

3.) To say the website was poorly coded would be an understatement. This wasn't the worst coding I've seen, but it was far from acceptable. There was no organization whatsoever. Templates and skin assets were being called from 12 different locations on the server, which made tracking down a snippet to fix downright annoying.

But not only that, the home page itself had 83 custom database queries to load the products on the page. He said this was so he could load products from several different categories and custom tables to show on the page. I asked him why he didn't just call a few join queries, and he had no idea what I was talking about.

4.) Almost every image on the website was a .PNG file, 2000x2000 px and lossless. The home page alone was 22MB just from images.
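On point 3, the gap between one query per category and a join can be sketched roughly as follows. This is a minimal illustration in Python with an in-memory SQLite database, not Magento's actual schema: the `categories` and `products` tables, their columns, and both function names are assumptions made up for the example.

```python
import sqlite3

# Hypothetical toy schema; the real site's tables are not shown in the post.
conn = sqlite3.connect(":memory:")
conn.executescript("""
    CREATE TABLE categories (id INTEGER PRIMARY KEY, name TEXT);
    CREATE TABLE products (id INTEGER PRIMARY KEY, category_id INTEGER, name TEXT);
    INSERT INTO categories VALUES (1, 'shoes'), (2, 'hats'), (3, 'bags');
    INSERT INTO products VALUES
        (1, 1, 'sneaker'), (2, 1, 'boot'), (3, 2, 'cap'), (4, 3, 'tote');
""")


def one_query_per_category(conn, category_ids):
    """Fetch products category by category; query count grows with the list."""
    rows, queries = [], 0
    for cid in category_ids:
        (cat_name,) = conn.execute(
            "SELECT name FROM categories WHERE id = ?", (cid,)
        ).fetchone()
        queries += 1
        for (prod_name,) in conn.execute(
            "SELECT name FROM products WHERE category_id = ? ORDER BY id", (cid,)
        ):
            rows.append((cat_name, prod_name))
        queries += 1
    return rows, queries


def single_join(conn, category_ids):
    """Fetch the same rows with one JOIN, so the database does the matching."""
    placeholders = ",".join("?" * len(category_ids))
    sql = (
        "SELECT c.name, p.name FROM products AS p "
        "JOIN categories AS c ON c.id = p.category_id "
        f"WHERE c.id IN ({placeholders}) ORDER BY c.id, p.id"
    )
    return conn.execute(sql, list(category_ids)).fetchall(), 1
```

Both functions return the same rows for the same category list; the second one just gets there in a single round trip instead of two per category.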

There were several other issues, but those 4 should be enough to paint a good picture. The client wanted this all done in a week for less than $500. We laughed. But we agreed on the price only because of a long relationship and because they have some referrals they got us in the door with. But we told them it would get done on our time, not theirs. So I copied the website to our server as a test bed and got to work.

After numerous hours of bug fixes, recoding queries, disabling Redis and opting for a higher InnoDB cache (more on that later), image optimization, JS/CSS/HTML combining, render-unblocking and minification, lazy-loading images, tweaking Magento to work with PHP 7, installing OPcache and setting up basic .htaccess optimizations, we smashed the loading time down to 1.2 seconds total, and most of that time was for external JavaScript plugins deemed "necessary". Time to First Byte went from a staggering 2.2 seconds to about 45ms. Needless to say, we kicked its ass.

So I show their developer the changes and he's stunned. He says he'll tell the hosting provider to create a new server setup to migrate the optimized site to and cut over to, because taking the live website down for maintenance for even an hour or two in the middle of the night is "unacceptable".

So trying to be cool about it, I tell him I'd be happy to configure the server to the exact specifications needed. He says "we can't do that". I look at him confused. "What do you mean we 'can't'?" He tells me that even though this is a dedicated server, the provider doesn't allow any access other than a jailed shell account and cPanel access. What?! This is a company averaging 3 million+ per year in revenue. Why don't they have an IT manager overseeing everything? Apparently for them, they're too cheap for that, so they went with a "managed dedicated server", "managed" apparently meaning "you only get to use it like a shared host".

So after countless phone calls arguing with the hosting provider, they agree to make our changes. Then the client's developer starts getting nasty out of nowhere. He says my optimizations are not acceptable because I'm not using Redis cache, and now the client is threatening to walk away without paying us.

So I guess the overall message from this rant is not so much about the situation, but the developer and countless others like him that are clueless, but try to speak from a position of authority.

If we as developers stop challenging each other in a measuring contest and learn to let go when we need help, we can get a lot more done and prevent losing clients. </rant>

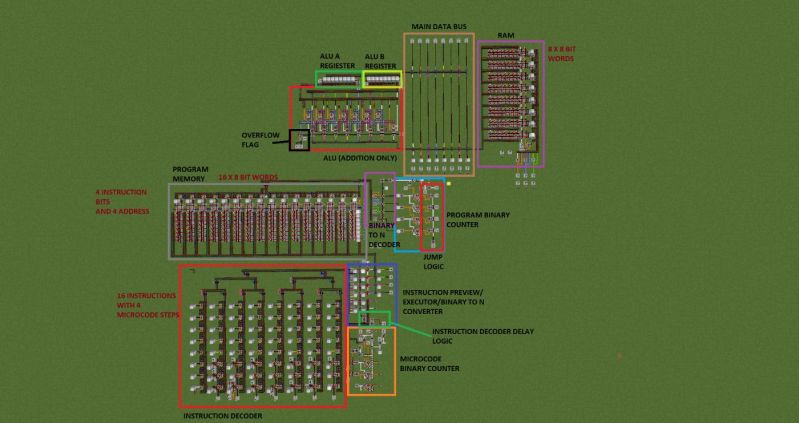

There it is. My latest creation. An 8bit microcontroller made in Minecraft.

Features:

(1.0 version without control room)

-8bit full adder + overflow flag

-8x8bit RAM

-16x8 program memory (4bit instruction, 4bit address)

-64 possible microinstructions (16 instructions with 4 steps each)

-unconditional and if-overflow jumps (target determined by the address written with the instruction)

-1/3Hz clock speed 😨
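For anyone wondering what the 8bit full adder + overflow flag boils down to, here is a rough software sketch of a ripple-carry adder. It assumes the overflow flag is simply the carry out of the top bit; the post does not say how the flag is actually wired in the build, and the function names are made up for illustration.

```python
def full_adder(a, b, carry_in):
    """One-bit full adder built from XOR/AND/OR, like a single redstone cell."""
    total = a ^ b ^ carry_in
    carry_out = (a & b) | (carry_in & (a ^ b))
    return total, carry_out


def add8(x, y):
    """Chain eight full adders; return (8-bit sum, overflow flag)."""
    carry = 0
    result = 0
    for bit in range(8):
        s, carry = full_adder((x >> bit) & 1, (y >> bit) & 1, carry)
        result |= s << bit
    # Assumption: the overflow flag is the carry out of bit 7.
    return result, carry
```

An if-overflow jump would then just test the second value returned by `add8` before loading the jump address.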

New working version (2.0) has 1Hz clock and new faster instruction decoder.

In 3.0, in addition to that, the useless bus was replaced with a 16x8bit "hardware" stack that can store addresses and data. The clock is going to be yeeted out because it is unnecessary #clocklessisbetter (WIP tho)

Might add more documentation and post it as a learning model for CS wannabes 🤔. What do you think?

Picture: Old working version 1.0

(the only one with fancy diagram)

Newer version screenshots in comments.

My parents have never had a router faster than 10Mb/s. When our most recent one finally bit the dust, I urged them to buy a decent one, because everyone uses the internet now. LOOK AT THE SPEED WE'VE BEEN MISSING.

Navy story time again. Grab that coffee and fire up Kali, the theme is security.

So, when I got promoted to Lieutenant Jr. I had to attend a 1-year school inside my nostalgic Naval Academy... BUT! I was wiser, I was older... and I was bored. Like, really bored. What could go wrong? Well, all my fellow officers were bored too, so they started downloading/streaming/torrenting like crazy, and I had to wait for hours for the Kali updates to download, so...

mdk3 wlan0mon -d

I had this external wifi atheros card with two antennae and kicked all of them off the wifi. Some slightly smarter ones plugged cables on the net, and kept going, enjoying much faster speeds. I had to go to the bathroom, and once I returned they had unplugged the card. That kind of pissed me off, since they also thought it would be funny to hide it, along with the mouse.

But, oh boy, they had no idea what supreme asshole I can be when I am irked.

So, arpspoof it is. Turns out, there were no subnetworks, and the broadcast domain was ALL of the academy. That means I shut EVERYONE off, except me. Hardware was returned in 1 minute with the requested apologies, but fuck it, I kept the whole academy off the net for 6 hours. The sysadmin ran around like crazy, because nothing was working. Not even the servers.

I finally took pity on the guy (he had gotten the duties of sysadmin when the previous sysad died, so think about that) and he almost assaulted me when I told him. As it turned out, the guy never had any training or knowledge on security, so I had to show him a few things, and point him to where he could study about the rest. But still, some selective arp poison on select douchebags was in order...

Needless to say, people were VERY polite to me after that. And the net speed was up again, so I got bored. Again. So I started scanning the net.

To be continued...

So. A while ago I was on OkCupid, trying to find the Pierre to my Marie Curie (without the whole brain getting crushed under a horse carriage wheel obviously) and I decided the best way was to have my profile lead with my passion for technology. It turned out pretty unique, if I do say so myself.

At the end of it, I amassed some interesting and unique messages:

- A Java pickup line (that I never responded to. Yes I'm a very basic Devranter)

- A request to turn the man's software into hardware (to which I politely informed him that this was scientifically impossible unless a reader proves me wrong)

- Another impossible request to turn his floppy disk into a hard drive (how outdated too, why not HDD to SSD for faster speed amirite? That was awful don't mind me)

- A sincere request to help troubleshoot a laptop (Honestly I would've helped with help requests but this is a dating site...)

- A sincere request to help debug a student project followed with a link to a GitHub repo

- Another sincere request with studying for a computer exam

- And lastly, my favourite: a sincere job offer by a guy who went from flirtatious to desperate for a programmer in a minute. He was looking for *insert python, big data, buzzwords here* and asked me for a LinkedIn. I proceeded to inquire exactly what he wanted me to do. He then asks me to WRITE a Python tutorial and that he would pay a few cents per word written so he could publish it. Literally no programming involved.

Needless to say I went to look elsewhere.

About two years ago I got roped into something when someone requested an $8000 laptop to run a "program" that they wrote in Excel to pull data from our mainframe.

In reality they were using our normal application that interacts with the mainframe and screen scraping it to populate several Excel spreadsheets.

So this guy kept saying that he needed the expensive laptop because he needed the extra RAM and processing power for his application. At the time we only supported 32 bit Windows 7 so even though I told him ten times that the OS wouldn't recognize more than 3.5 GB of RAM he kept saying that increasing the RAM would fix his problem. I also explained that even if we installed the 64 bit OS we didn't have approval for the 64 bit applications.

So we looked at the code and we found that rather than reusing the same workbook he was opening a new instance of a workbook during each iteration of his loop and then not closing or disposing of them. So he was running out of memory due to never disposing of anything.
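The pattern looked roughly like the sketch below. This is Python rather than the original Excel code, and `Workbook` is a hypothetical stand-in class that only counts live instances, so take it as an illustration of the leak and not the actual macro.

```python
class Workbook:
    """Hypothetical stand-in for a spreadsheet handle; counts open instances."""

    open_count = 0

    def __init__(self):
        Workbook.open_count += 1
        self.rows = []

    def close(self):
        Workbook.open_count -= 1

    def __enter__(self):
        return self

    def __exit__(self, *exc):
        self.close()
        return False


def leaky(records):
    """Opens a new workbook on every iteration and never closes any of them."""
    for record in records:
        wb = Workbook()
        wb.rows.append(record)
    return Workbook.open_count


def reused(records):
    """Opens one workbook, reuses it for every record, closes it at the end."""
    with Workbook() as wb:
        for record in records:
            wb.rows.append(record)
        peak = Workbook.open_count
    return peak, Workbook.open_count
```

With the leaky version the number of open handles grows with the number of iterations, which no amount of extra RAM fixes for long.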

Even better than all of that, he wanted a faster processor to speed up the processing, but he had about 5 seconds of thread sleeps in each loop so that the place he was screen scraping from would have time to load. So it wouldn't matter how fast the processor was, in the end there were sleeps and waits in there hard coded to slow down the app. And the guy didn't understand that a faster processor wouldn't have made a difference.
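A quick back-of-the-envelope check shows why. The 5 seconds of sleep per loop comes from the code we reviewed; the 0.05 seconds of real CPU work per loop and the 1,000 iterations are made-up numbers for illustration only.

```python
def loop_seconds(iterations, cpu_seconds, sleep_seconds, cpu_speedup=1.0):
    """Total run time when each iteration does some CPU work plus a fixed sleep."""
    return iterations * (cpu_seconds / cpu_speedup + sleep_seconds)


# Assumed workload: 1,000 iterations, 0.05 s of CPU work, 5 s of sleep each.
baseline = loop_seconds(1000, cpu_seconds=0.05, sleep_seconds=5.0)
twice_as_fast_cpu = loop_seconds(1000, cpu_seconds=0.05, sleep_seconds=5.0, cpu_speedup=2.0)
saved_fraction = (baseline - twice_as_fast_cpu) / baseline

print(f"baseline: {baseline:.0f} s, with 2x CPU: {twice_as_fast_cpu:.0f} s")
print(f"time saved: {saved_fraction:.1%}")
```

Under those assumptions, doubling the CPU speed shaves off well under one percent of the run time, because nearly all of it is spent sleeping.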

The worst thing is a "dev" that thinks they know what they are doing but they don't have a clue.

> Open Quora

> Read highly upvoted answer that in essence says "ping -t localhost to make internet speed faster by 'sucking the wifi'"

> Realize that senior at office has upvoted it

> Jump out of the window

When your internet download speed is faster than your system copy speed. Damn! I need a new laptop!!

Yesterday my mother had problems with Office365 and called a servicedesk. They said her computer was slow and that an SD could improve the speed. My mother said that there already was an SSD in there, to which he replied yes, but that is for RAM, to make opening things faster you need an SD like in your phone...

Where is the world going?

I hate people who think that building software is all about one click away and generating things. I got told to complete the task faster than the speed of light.

Fancy me some rant time? Let's name that cunt, "Bob".

"

Hey Bob, I got questions for you. Are you sure you were in your mum's womb for 8-9 months? Are you the kind of twat who honks at people as soon as the traffic light's turning green?

Building software takes time, the CI/CD takes time, TestFlight takes time, approvals from the Google Play store take time, approvals from Apple App Store connect take time, Unit testing takes time and every fucking thing you can name takes time!

It's just like sex, nobody wants to be with someone who can only last in bed for 0.000000000001 nanoseconds, the longer, the better, (but not too long).

It is also like building houses, which takes months, not hours. From my experience so far, something tells me that you are not the kind of person who would understand how to build a house, only a sand castle, which takes mere hours to build.

You relentlessly bombarded me with a pile of bollocks, and a pile of nonsense is not going to speed up the compilation of the software.

"

Screw the German Telekom!

I recently got a new home without internet so naturally, I went to an ISP, Telekom. I went there a few weeks ago and was pleasantly surprised by the staff and the general competence. He told me they would send a technician to check my cable. So I thought great and went home. A week passes, nobody shows up. I then went back to the shop and asked (someone different). He basically told me that such a service must be specifically asked for and a contract has to be signed. I then told him his colleague told me no such thing, and that the technician should have checked up on my connection last week. He excused himself and I signed the thingy.

Now you would imagine that this would have worked.

but.

NOOOoooo.

A week came and went and I got pissed. So I went back to the shop, and the guy from the first try was there. I asked what happened, he types on his computer. and. and. and. nothing. Apparently, the previous guy forgot, fucking forgot, to enter my request into their bloody system.

Now I asked if I can just become a customer.

Guy: Sure, what speed is available in your region?

Me: I don't know...

Guy: Let me check

/Type/ /Type/

Guy: I can't see your speed the technician should have checked.

Me: Um, so, can he check?

Guy: Clearly you don't know what you want

Me:???

Guy:*leaves table*

(shortened, but you get the idea)

At this point, I really wanted to change ISP so I went to Vodafone.

Lady comes up to me, asks me a bunch of stuff, and I explain I would want to change my phone, internet, TV, mobile and my friend's mobile (I lost a bet once ^~^) to Vodafone.

What happened next I can't really explain, but she talked to her boss and "cheated" (as she calls it) on Vodafone and got me an AMAZING deal: it is cheaper than Telekom's, has waay more mobile data, faster internet, and I got a new phone :D.

And guess what, she could fucking check, fucking check from her computer, my max internet speed.

I can only hope that the lady got a big fat commission for what she has done.

Biggest challenge I overcame as dev? One of many.

Avoiding a life sentence when the 'powers that be' targeted one of my libraries for the root cause of system performance issues and I didn't correct that accusation with a flame thrower.

What was the accusation? What I named the library. Yep. The *name* was causing every single problem in the system.

Panorama (a very, very expensive APM system at the time) identified my library in its analysis: the calls to/from SQL Server were the bottleneck.

We had one of Panorama's engineers on-site and he asked what (not the actual name) MyLibrary was and (I'll preface this by saying I did not know about, and was not involved in, any of the so-called 'research') a crack team of developers+managers researched the system thoroughly and found MyLibrary was used in just about every project. I wrote the .Net 1.1 MyLibrary as a mini-ORM to simplify the execution of database code (stored procs, etc) and gracefully handle+log database exceptions (auto-logged details such as the target db, stored procedure name, parameter values, etc, everything you'd need to troubleshoot database errors). This was before Dapper and the other fancy tools used by kids these days.

By the time the news got to me, there was a team cobbled together whose only focus was to remove any/every trace of MyLibrary from the code base. Using Waterfall, they calculated it would take at least a year to remove+replace MyLibrary with the equivalent ADO.Net plumbing.

In a department wide meeting:

DeptMgr: "This day forward, no one is to use MyLibrary to access the database! It's slow, unprofessionally named, and the root cause of all the database issues."

Me: "What about MyLibrary is slow? It's executing the standard ADO.Net code. The only extra bit of code is the exception handling to capture the details when the exception is logged."

DeptMgr: "We've spent the last 6 weeks with the Panorama engineer and he's identified MyLibrary as the cause. Company has spent over $100,000 on this software and we have to make fact based decisions. Look at this slide ... "

<DeptMgr shows a histogram of the stacktrace, showing MyLibrary as the slowest>

Me: "You do realize that the execution time is the database call itself, not the code. In that example, the invoice call, it's the stored procedure that's taking 5 seconds, not MyLibrary."

<at this point, DeptMgr is getting red-face mad>

AreaMgr: "Yes...yes...but if we stopped using MyLibrary, removing the unnecessary layers would make the code run faster."

<typical head-nodders nod their heads in agreement>

Dev01: "The loading of MyLibrary takes CPU cycles away from code that supports our customers. Every CPU cycle counts."

<head-nodding continues>

Me: "I'm really confused. Maybe I'm looking at the data wrong. On the slide where you highlighted all the bottlenecks, the histogram shows the latency is the database, I mean...it's right there, in red. Am I looking at it wrong?"

<this was meeting with 20+ other devs, mgrs, a VP, the Panorama engineer>

DeptMgr: "Yes you are! I know MyLibrary is your baby. You need to check your ego at the door and face the facts. Your MyLibrary is a failed experiment and needs to be exterminated from this system!"

Fast forward 9 months, with maybe 50% of the projects updated, and I come across the documentation left by the Panorama engineer. Even after the removal of MyLibrary, there were zero increases in performance. The engineer recommended the DBAs start optimizing their indexes and addressing the N+1 problems discovered. I decide to ask the developer who led the re-write.

Me: "I see that removing MyLibrary did nothing to improve performance."

Dev: "Yes, DeptMgr was pissed. He was ready to throw the Panorama engineer out a window when he said the problems were in the database all along. Didn't you say that?"

Me: "Um, so is this re-write project dead?"

Dev: "No. Removing MyLibrary introduced all kinds of bugs. All the boilerplate ADO.Net code caused a lot of unhandled exceptions, then we had to go back and write exception handling code."

Me: "What a failure. What dipshit would think writing more code leads to less bugs?"

Dev: "I know, I know. We're so far behind schedule. We had to come up with something. I ended up writing a library to make replacing MyLibrary easier. I called it KnightRider. Like the TV show. Everyone is excited to speed up their code with KnightRider. Same method names, same exception handling. All we have to do is replace MyLibrary with KnightRider and we're done."

Me: "Won't the bottlenecks then point to KnightRider?"

Dev: "Meh, not my problem. Panorama meets primarily with the DBAs and the networking team now. I doubt we ever use Panorama to look at our C# code."

Needless to say, I was (still) pissed that they had used MyLibrary as a dirty word and a scapegoat for months when they *knew* where the problems were. Pissed enough for a flamethrower? Maybe.
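The point about the histogram can be shown with a toy timing sketch. It is Python, not the original .Net code: `slow_stored_procedure` is a hypothetical stand-in that fakes a slow database call with a sleep, and `my_library_execute` only mimics the idea of a thin wrapper with exception handling.

```python
import time


def slow_stored_procedure():
    """Hypothetical stand-in for a slow database call, simulated with a sleep."""
    time.sleep(0.05)
    return "invoice"


def my_library_execute(call):
    """Thin wrapper: run the call, add context on failure, time the call itself."""
    started = time.perf_counter()
    try:
        result = call()
    except Exception as exc:
        raise RuntimeError(f"database call failed: {exc}") from exc
    inner_seconds = time.perf_counter() - started
    return result, inner_seconds


def profile():
    """Return (result, total time inside the wrapper, time inside the call)."""
    started = time.perf_counter()
    result, inner_seconds = my_library_execute(slow_stored_procedure)
    total_seconds = time.perf_counter() - started
    return result, total_seconds, inner_seconds
```

A profiler that only attributes time to the outermost frame would blame the wrapper for the full total, even though nearly all of it is spent inside the call it wraps. Renaming the wrapper changes nothing about that.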

When I started my current job 6 years ago I was given a desk phone with a 100mb port. Speed didn't matter at the time as everyone was given laptops for our desks. I changed positions in the company where I'm going to be provisioning servers and whatnot over the network. Started using a desktop that didn't have a Wi-Fi adapter. I requested a new phone with a Gig switch port, if possible, so doing file transfers on the network wouldn't be limited. IT had a couple of questions...

IT-Have you noticed slowness when downloading/uploading?

Me-No, but it's a 100mb port...so.

IT-Well I just did a speed test and we're getting 60up/5down. Your phone is over that.

(Working from home? Our fiber was way faster than that I thought.)

Me-That's fine, but this will be for internal network transfers. Not going out to the internet. We have gigabit switches on campus correct?

IT-Yes but you shouldn't notice a speed difference.

Me, now lost-If you can't change out the phone that's fine. I'll figure out something.

IT-Now now, lets troubleshoot your issue. Can you plug your phone in?

Me-Yes I have it, but I'm remote today. There is no way for it to reach the call manager.

IT-Let's give it a try.

40 min of provisioning later he gave up and said maybe it is broke. Got a "working" one the next week.

PS first post, and writing on phone. Yay insomnia!
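For the record, the difference on internal transfers is easy to estimate. The sketch below ignores protocol overhead and disk speed, and the 10 GB file size is a made-up example, not something from the actual job.

```python
def transfer_seconds(size_gigabytes, link_megabits_per_s):
    """Best-case transfer time for a file over a link, ignoring all overhead."""
    bits = size_gigabytes * 8 * 1000**3
    return bits / (link_megabits_per_s * 1000**2)


# Assumed example: a 10 GB server image over the phone's port vs a gigabit port.
through_phone = transfer_seconds(10, 100)
through_gigabit = transfer_seconds(10, 1000)

print(f"100 Mb/s: {through_phone / 60:.1f} min, 1 Gb/s: {through_gigabit / 60:.1f} min")
```

The internet speed test IT ran says nothing about this, since the traffic never leaves the campus network.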

Got fed up with having to use the mouse/trackpad while editing code or using the terminal, so I decided to (finally) learn proper vim keybindings and tmux.

Boooooy oh boy, this certainly changes things.

I think I'm in love with tmux. Damn that piece of software is so sexy. Disabled the mouse, propped up my dotfiles and installed tmux + my conf on all machines I use. It's so useful, so fast and so pretty...

Spent some time with vimtutor too. Finally getting faster with the keybindings. Installed neovim, got some plug-ins (nerdtree, fzf etc), disabled the mouse and arrow keys, and made it pretty. It's actually pretty nice, but I'm not at the "buff gorilla who took speed and pressed 24 keys in a microsecond" typing level yet. One day though.

Also I'm using the Nord color scheme on everything. Overall pretty satisfied with the end result. Still not as productive as I was with VS Code, but I think I'll eventually surpass my previous productivity levels.

If anyone has any tips for vim/nvim or tmux, feel free to share!

There was a meme that was going around a couple of years ago, it was picture of the System32 folder in Windows and underneath it was a text that said "If you want to make your internet download speed faster, delete this folder". I had shared it on Facebook, thinking nobody could fall for this.

Surely enough, about half an hour later came the surge of messages saying that they're not able to delete the folder and wanted help. Most of them were my classmates in college.

Just as I wait for my train, some advertisers from a utility company here approached me. Asking what my company is etc..

Me: "well I'm making my own company..."

*Looks at their pamphlet*

"Oh, utility company you mean. My apartment building has solar panels."

Them: "oh you know about electricity right... And F-16, the fighter jets that fly at 3000km/h"

(My neighbor is a former aerospace technician who mentioned that previously, should be about right)

Them: "they fly faster than electricity!"

Me: "but um.. electricity travels at the speed of light..."

Them: *avoid subject*

Them: "yeah it travels 7 times around the globe in 1 second"

Me: *recalls ping to my servers in Italy*

"Yeah to Italy my ping is about 300ms if memory serves me right... So that'd make sense"

(Turned out to be 40ms.. close enough though, right 🙃)

Them: "don't travel too much at light speed, alright!"

*They pack up and leave*

Meanwhile me, thinking: but guys.. all I wanted to do was smoke a cigarette before my train comes. Why did you waste my time with this? And uninformed time wastage at that.

Advertisers are the worst 😶
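For what it's worth, the "7 times around the globe in 1 second" figure is about right for light in a vacuum, and the same arithmetic gives a floor for ping times. A small sketch, where the 1,200 km distance to the server and the 2/3 velocity factor for fiber are assumptions picked for illustration:

```python
SPEED_OF_LIGHT_KM_S = 299_792.458   # exact, by definition of the metre
EARTH_CIRCUMFERENCE_KM = 40_075.0   # approximate equatorial circumference


def laps_per_second():
    """How many times light in a vacuum could circle the equator in one second."""
    return SPEED_OF_LIGHT_KM_S / EARTH_CIRCUMFERENCE_KM


def min_round_trip_ms(distance_km, velocity_factor=1.0):
    """Lower bound on round-trip time; velocity_factor scales c for the medium."""
    one_way_seconds = distance_km / (SPEED_OF_LIGHT_KM_S * velocity_factor)
    return 2 * one_way_seconds * 1000


print(f"{laps_per_second():.2f} laps per second")
print(f"{min_round_trip_ms(1200):.1f} ms at c over an assumed 1,200 km")
print(f"{min_round_trip_ms(1200, 2 / 3):.1f} ms at an assumed 2/3 c in fiber")
```

A real ping comes out higher than this floor because of routing, queuing and the fact that cables do not run in a straight line.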

TIP:

1.1.1.1: the fastest, privacy-first consumer DNS service

I switched to faster DNS,

And believe it or not, it improved my internet speed.

Just add this DNS and you're gonna experience faster browsing

DNS1: 1.1.1.1

DNS2: 1.0.0.1

Comment below if you experience it.

Static HTML pages are better than "web apps".

Static HTML pages are more lightweight and destroy "web apps" in performance, and also have superior compatibility. I see pretty much no benefit in a "web app" over a static HTML page. "Web apps" appear like an overhyped trend that is empty inside.

During my web browsing experience, static HTML pages have consistently loaded faster and more reliably, since the browser is immediately served with content useful for consumption, whereas on JavaScript-based web "apps", the useful content comes in **last**, after the browser has worked its way through a pile of script.

For example, an average-sized Wikipedia article (30 KB wikitext) appears on screen in roughly two seconds, since MediaWiki uses static HTML. Everipedia, in comparison, is a ReactJS app. Guess how long that one needs. Upwards of three times as long!

Making a page JavaScript-based also makes it fragile. If an exception occurs in the JavaScript, the user might end up with a blank page or an endless splash screen, whereas static HTML-based pages still show useful content.

The legacy (2014-2020) HTML-based Twitter.com loaded a user profile in under four seconds. The new React-based web app not only takes twice as long, but sometimes fails to load at all, showing the error "Oops something went wrong! But don't fret – it's not your fault." This could not happen on a static HTML page.

The new JavaScript-based "polymer" YouTube front end that is default since August 2017 also loads slower. While the earlier HTML-based one was already playing the video, the new one has just reached its oh-so-fancy skeleton screen.

It would once have been unthinkable to have a website that does not work at all without JavaScript, but now, pretty much all popular social media sites are JavaScript-dependent. The last time one could view Twitter without JavaScript and tweet from devices with non-sophisticated browsers like Nintendo 3DS was December 2020, when they got rid of the lightweight "M2" mobile website.

Sometimes, web developers break a site in older browser versions by using a JavaScript feature that they do not support, or using a dependency (like Plyr.js) that breaks the site. Static HTML is immune against this failure.

Static HTML pages also let users maximize speed and battery life by deactivating JavaScript. This obviously will disable more sophisticated site features, but the core part, the text, is ready for consumption.

Not to mention, single-page sites and fancy animations can be implemented with JavaScript on top of static HTML, as GitHub.com and the 2018 Reddit redesign do, and Twitter's 2014-2020 desktop front end did.

From the beginning, JavaScript was intended as a tool to complement, not to replace HTML and CSS. It appears to me that the sole "benefit" of having a "web app" is that it appears slightly more "modern" and distinguished from classic web sites due to use of splash screens and lack of the browser's loading animation when navigating, while having oh-so-fancy loading animations and skeleton screens inside the website. Sorry, I prefer seeing content quickly over the app-like appearance of fancy loading screens.

Arguably, another supposed benefit of "web apps" is that there is no blank page when navigating between pages, but in pretty much all major browsers of the last five years, the last page observably remains on screen until the next navigated page is rendered sufficiently for viewing. This is also known as "paint holding".

On any site, whenever I am greeted with content, I feel pleased. Whenever I am greeted with a loading animation, splash screen, or skeleton screen, be it ever so fancy (e.g. fading in and out, moving gradient waves), I think "do they really believe they make me like their site more due to their fancy loading screens?! I am not here for the loading screens!".

To make a page dependent on JavaScript and sacrifice lots of performance for a slight visual benefit does not seem worth it.

Quote:

> "Yeah, but I'm building a webapp, not a website" - I hear this a lot and it isn't an excuse. I challenge you to define the difference between a webapp and a website that isn't just a vague list of best practices that "apps" are for some reason allowed to disregard. Jeremy Keith makes this point brilliantly.

>

> For example, is Wikipedia an app? What about when I edit an article? What about when I search for an article?

>

> Whether you label your web page as a "site", "app", "microsite", whatever, it doesn't make it exempt from accessibility, performance, browser support and so on.

>

> If you need to excuse yourself from progressive enhancement, you need a better excuse.

– Jake Archibald, 2013

Me and the unofficial CTO (3rd party's CTO) are interviewing an ios dev. (I'm an android dev, btw)

CTO: In your view, would you find developing an ios app would be faster or at the same speed as an android dev?

Me: *shock*

Candidate: I've never developed on Android before, but I'd think it would be the same speed. We both need to make screens, views, and type code.

I was out sick the day an urgent ETL job I was building would be due, so it got reassigned. When I return, I find most of my code commented out and replaced.

The first step was rewritten, with a comment that reads "Made changes to run faster." What used to be a single execution lasting 30 seconds was now a 4 step process taking 5 minutes, and yielding identical results.

Being a one-time execution (not a recurring job), I'm left wondering why they thought execution speed was even an issue, let alone what about their redesign they felt was an improvement...

[Update: https://devrant.com/rants/4425480/...]

So had a 1:1 with my manager today followed by 1:1 with lead.

I did bring up the topic that I felt a little insecure about being sacked.

Both of them reassured me multiple times that losing my job would be the last of the last things. We have so much work and are going through a resource crunch to keep up with the pace.

There are still many things I have to learn here. I am glad that my proactiveness has always helped me learn faster and better. This way, I was also able to offer a helping hand to my manager by saying that if they need any help with the transition, I am willing to take extra on my plate until we have a replacement.

A bumpy ride ahead for some time, but surely the manager is impressed with the speed at which I ramped up and my willingness to go beyond.

Overall, I see this as a good opportunity to step into the limelight, build an amazing product from scratch in a publicly traded company, and a good chance to relocate to the EU when I show them good results with my performance.

Overall, the sky looks brighter but the sea will be a little rough for some time.

Never lose your sense of wonder when it comes to working with clients. Client berated us saying her data were outdated. Ok. Check the file the third party that generated the data is sending us.

Outline all stated discrepancies in the data back to the client, showing that everything lines up with what we are receiving.

Client is frustrated. Contact the third party on their behalf.

Third party support: “oh yea, client had us start sending data to your competitor like a month ago”

Bruh. Bruh. Bruh.

Fortunately the client wants to stay with us and is getting their data pointed back, but how in the hell do you forget that? The reason the client went looking at the competition (at least guessing from previous call records) was to get faster processing of the data coming from the third party. How are you gonna forget you turned off the sending when you are so worried about speed?! Most of our clients are running 7-8 figure businesses, by the way.

Does anyone practise code reading and comprehension? If so, are you able to share your idea?

I try to find how to read code faster with retention and comprehension. It is much like speed reading, but I am reading code.

Here is my journey so far:

Stage 1:

When reading code, I literally wrote out each word in the line as a comment. I thought it would help me understand better. It did, but the retention was not strong enough.

Stage 2:

After reading each line, I would close my eyes for 1-2 seconds and reflect on what I had just read. Better understanding, but comprehension was still not good.

Stage 3:

After reading each line, I use my own words to describe what it does and write that down as a comment. I found that I have better comprehension.

Stage 4:

I constantly remind myself to describe the code in my own words. This speeds up the reading and understanding.

To be honest, I am still trying.

-

We were documenting a feature which has a system-wide effect. We’ll be delivering it to the customer on Monday.

So we asked the colleague who worked on it how it works, and asked a few follow-up questions that arose during the documenting. All was good.

Come Friday, I had a question because some things didn’t add up, so I checked the source. To my surprise, the very core operation the colleague explained to us works in the exact opposite way. I kid you not, in 50% of the documentation we ramble about why it was implemented this way since it is faster/safer, best practices, bla bla.

Moreover we’ve already had some exchange with the customer and we informed(misinformed) them about this core operation...

Also changing the behavior will reduce the overall speed as it will cause extra branchings. Other option is to rewrite the documentation and inform(re-convince) the customer. If it was me I wouldn’t trust us anymore but we’ll see.

I really don’t know what to say about this fucker. Why would you say something if you’re not sure of it, and why the fuck didn’t you confirm it in the last 3 weeks....

Anyway, we have a meeting on Monday morning to discuss how to proceed. That’s gonna be fun!

-

Imagine you work in a mechanic’s shop. You just got trained today on a new part install, including all the task-specific tools it takes to install it.

Some are standard tools, like a screwdriver, that most people know how to use. Others are complicated, single-purpose tools that only work to install this one part.

It takes you a couple of hours compared to other techs who learned quicker than you and can do it in 20 minutes. You go to bed that night thinking “I’ve got this. I’ll remember how this works tomorrow and I’ll be twice as fast tomorrow as I was today.”

The next morning, you wake up retaining a working, useful memory of only about 5% on how to use the specialized tools and installation of the part.

You retrain that day as a review, but your install time still suffers in comparison. You again feel confident by the end of the day that you understand and go to bed thinking you’ll at least get within 10-20 minutes of the faster techs in your install.

The next morning, you wake up retaining a working, useful memory of only 10% on how to use the specialized tools.

Repeat until you reach 100% mastery and match the other techs in speed and efficiency.

Oops! Scratch that! We are no longer using those tools or that part. We’re switching to this other thing that somehow everyone already knows or understands quickly. Start over.

This has been my entire development career. I’m so tired.

-

Ok, first rant, about my struggles getting reliable internet over the past 6 years. It's not too interesting of a topic, but here we go:

I'm living in a more rural part of Germany and internet here is shit. I pay more than 50 bucks a month for 700kb/s downstream (let's just not talk about upstream...), which is meh by itself but it gets worse. Before this I had roughly 230kb/s downstream using DSL. My provider came out with a new oh-so-fucking-fancy solution for giving people faster internet without upgrading their lame ass fucking backbone and POS infrastructure from 70 years ago: they sell you hybrid internet which combines your shit DSL and an LTE connection using TCP Multicast. Not only do I get only 6 of my promised (and paid for) 50 Mbit, no, it's also a fucking piece of nonworking shit!!!

Let me illustrate:

You constantly have problems with web content (or any remote content) not loading because the host server does not support TCP Multicast. It either refuses connection altogether or it takes about 30-50 seconds to establish a connection. Think about your life when it takes two or three fucking minutes to load 5 YouTube thumbnails or load new tweets at the bottom of the Twitter page! Also, you never know if you a) have an error in your implementation of a new API or if b) the remote host doesn't support TCPMC (there's never an error for that! Fuck you!), your SSH sessions ALWAYS drop in the most inopportune fucking moments because the LTE thing lost connection, you always have to turn on a VPN if you want to visit specific websites (for example your school's website) and so on....

Oh and also, my provider started throttling specific services again these days with Netflix and YouTube struggling to display 240p, fucking 240p video without buffering when I get 600kbit down on steam (ofc the steam download is paused when watching videos). When using a VPN, YouTube 720p and Netflix HD work like a charm again. Fucking Telekom bastards

Then there is the problem with VPNs. The good thing about them is that they solve all the TCP Multicast problems. Yay. Now for the bad things:

First of all, as soon as I use a VPN, access times to remote go up by like fucking 500%. A fucking DNS lookup takes 8-15 seconds!!! The bandwidth is there but it takes forever.. because reasons I guess. Then the speed drops to DSL speeds after a while because the router turns off my LTE connection when it is unused and it does not detect VPN traffic as traffic (again because... Reasons?) And also, the VPN just dies after an hour and you have to manually reconnect (with every VPN provider so far)

And as if that wasn't enough, now the lan is dying on me, too, with the router (the fucking expensive hybrid piece of shit, 230 bucks..) not providing DHCP service anymore or completely refusing all wifi connections or randomly dropping 5Ghz devices, or.....

You get the point.

The worst thing is, they recently laid down 400mbit fiber in my neighborhood. Guess where the FUCKING PIECE OF SHIT CABLE ENDS??? YEAH, RIGHT IN FRONT OF MY NEIGHBOR'S HOUSE. STREET NUMBER 19 IS SERVED WITH 400MBIT AND MY HOME, THE 20, IS NOT IN THEIR FUCKING SERVICE REGION. Even though there is a fucking cable with the cable company's name on it on my property, even leading up to my house! They still refuse to acknowledge it! FUCK YOU!!!!

Well anyways thanks for reading. Any of you got the same problems? :/

-

!dev

Guys, we need to talk raw performance for a second.

Fair disclaimer - if you are for some reason an Intel employee, you may feel offended.

I have one fucking question.

What's the point of fucking ultra-low-power-extreme-potato CPUs like intel atoms?

Okay, okay. Power usage. Sure. So that's one.

Now tell me, why in the fucking world anyone would prefer to wait 5-10 times more for same action to happen while indeed consuming also 5-10 times less power?

Can't you just tune down a "big" core and call it a day? It would be around.. a fuckton faster. I have an i7-7820HK CPU, and if I dial it back to 1.2GHz, my WINDOWS machine with a lot of background tasks works fucking faster than the Atom-powered freaking LUBUNTU that has only Firefox open.

Tested i7-7820HK vs Atom x5-Z8350.

Opening a new tab and navigating to Google took under 1 second on my i7 machine, and the Atom took almost 1.5 seconds, while having a higher clock (turbo boost).

Guys, 7820hk dialled down to 1.2 ghz; 0.81v

Seriously.

I felt everything was lagging. but OS was much more responsive than atom machine...

What the fuck, Intel. It's pointless. I think I'm not only one who would gladly pay a little bit more for such difference.

i7 had clear disadvantages here, linux vs windows, clear background vs quite a few processes in background, and it had higher f***ng clock speed.

TL;DR

Intel atom processors use less power but waste a lot of time, while a little bit more power used on bigger cpu would complete task faster, thus atoms are just plain pointless garbage.
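The reasoning behind that TL;DR is plain energy arithmetic: energy per task is power multiplied by time. Here is a minimal sketch of it. The wattages and durations are made-up illustrative numbers, not measurements of either chip, and it ignores idle draw, the display and everything else in the machine.

```python
def energy_joules(power_watts: float, seconds: float) -> float:
    """Energy spent on one task: power multiplied by time."""
    return power_watts * seconds


# Hypothetical figures only: a "big" core drawing 10 W that finishes in 1 s,
# versus a low-power core drawing 5x less (2 W) but taking 5x longer (5 s).
big_core = energy_joules(power_watts=10.0, seconds=1.0)
small_core = energy_joules(power_watts=2.0, seconds=5.0)

print(big_core, small_core)
```

Under those assumed numbers both chips burn the same energy per task, so the slower one only costs you time. Whether real parts behave like that depends on their actual power curves.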

PS.

Tested in frustration at work, apparently they bought 3 craptops for presentations or some shit like that and they have mental problems because the cheapest shit on the market is more shitty than they anticipated ;-;

fucking seriously ;-;

-

Client just said to me "we'll give you a 'speed' payment to deliver the website faster, you just charge us what you think is right". WTF!

Blank cheque time... :)

-

Ok here goes me trying to explain some logic here, I apologise in advance!

I've been using an axis-based movement system for my games for a while now, but always had the issue of characters moving faster diagonally because the movement shape would form a square, meaning things would move at about 1.41 times (√2) the speed along the diagonal.

Only now thought 'hang on, directions act as circles when given a radius..'

Suddenly everything works perfectly fine and all it took was 3 lines of code... Well done Alex you tool.
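For anyone hitting the same problem, one common way to do the square-to-circle fix looks roughly like this. It is a minimal sketch, not necessarily the three lines used above; `movement_vector` and its parameters are names made up for illustration.

```python
import math


def movement_vector(input_x: float, input_y: float, speed: float) -> tuple[float, float]:
    """Scale raw axis input so the resulting step never exceeds `speed`."""
    length = math.hypot(input_x, input_y)
    # Only shrink inputs longer than 1, so a half-tilted analog stick
    # still produces a slower step instead of being stretched to full speed.
    scale = speed / max(length, 1.0)
    return (input_x * scale, input_y * scale)


# Diagonal input (1, 1) has length sqrt(2); after scaling, the step length
# equals `speed`, the same as for a straight input like (1, 0).
dx, dy = movement_vector(1, 1, speed=5.0)
print(math.hypot(dx, dy))
```

Clamping the input to the unit circle rather than the unit square is what keeps the speed the same in every direction.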

-

Fun fact:

The gradual speed increase in the descent pattern of the aliens in Space Invaders was actually a bug, due to the number of aliens on the screen.

The more you kill, the faster they get.

-

During one of our visits to Konza City, Machakos County in Kenya, my team and I encountered a big problem accessing viable water. Most times we asked for water, we were handed a bottle of bought water. For a day or a few days this would be affordable for some, but over the lifetime of a middle-income person it would be far too expensive. Of ten people we encountered, 8 complained of the lack of a proper mechanism to access viable water. To us this was a very demanding problem that needed to be sorted out immediately. The majority of the people were unable to conduct income-generating activities such as farming because of the nature of the water and its scarcity.

Such a scenario demands an immediate way to solve this problem. Various approaches have been put into practice to ensure sustainable water conservation and management. However, most of them have been futile on the aspect of sustainability. As part of our research we also checked the formal mechanisms put in place to ensure proper acquisition of water. One of them was tree planting, which was not sustainable at all. Some piped water was also being transported over very long distances, but this did not solve the immediate needs of the people. We found out that the area has a large body of salty water which was not viable for any constructive activity. This was hint enough to help us find a way to curb this demanding challenge. The presence of salty water was the first step of our solution.

SOLUTION

We came up with an IoT-based system to help curb this problem. Our system purifies the salty water through electrolysis. The device is placed at the area where the body of water is located; it drills to a suitable depth and allows the salty water to flow into it. Various sets of tanks and valves are situated next to it, and these tanks hold the salty water temporarily. A high power source is then connected to each tank, which enables the separation of chlorine ions from hydrogen ions by electrolysis. Salt is then separated and allowed to flow from the lower chamber of the tanks, allowing clean water to flow to the preceding tanks, which contain various chemicals to remove any remaining impurities. The whole process is managed by sensors. Water alkalinity, turbidity and pH are monitored and relayed to a mobile phone; a predictive analysis of the stored data history then decides whether to increase or decrease the flow of water through the valves. This being a heat-prone area, we opted to maximize the harnessing of solar power, whose availability is almost perfect for providing at least a constant 440V supply to facilitate faster electrolysis of the salty water.
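To make the valve decision concrete, here is a minimal sketch of how the stored sensor history could drive the flow. Everything in it is an assumption for illustration: a simple moving average stands in for the "predictive analysis", and the function name, target, tolerance and window size are all hypothetical.

```python
from statistics import mean


def valve_adjustment(turbidity_history, target=5.0, tolerance=1.0, window=5):
    """Return -1 (reduce flow), 0 (hold) or +1 (increase flow).

    Hypothetical rule: compare the average of the most recent readings
    with a target band. All thresholds here are illustrative placeholders.
    """
    recent = turbidity_history[-window:]
    if not recent:
        return 0  # no data yet, leave the valve alone
    average = mean(recent)
    if average > target + tolerance:
        return -1  # water too cloudy: slow the flow so treatment has more time
    if average < target - tolerance:
        return 1  # water comfortably clear: the flow can be increased
    return 0


print(valve_adjustment([9.0, 8.5, 9.2]))
```

The same shape would work for pH or alkalinity readings; a real deployment would need calibrated thresholds per sensor.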

Being a drought-prone area, it was key that the outlet water be cold and comfortable for consumers to use, so we also coupled our output chamber with cooling tanks. These tanks are managed via our mobile application, to which their temperature and humidity readings are sent. This information is key in helping us produce water at optimum states, enabling us to fully manage supply and input of the water from the water bodies.

By the use of natural language processing, we are able to automatically control the flow and feeding of the valves using voice. One could say “The output water is too hot”, and the system would respond by increasing the speed of the fans and making the tanks provide very cold water. In addition to this system, we have prepared short video tutorials and documents enlightening people on how to conserve water and maintain the optimum state of the green economy.

IBM/OPEN SOURCE TECHNOLOGIES

For a start, we have implemented our project using ESP8266 microcontrollers, sensors, transducers and low payload containers to demonstrate our project. Previously we used Google’s Firebase cloud platform to relay data to and from the mobile in real time. This has proven workable for most cases, whether on a small or large scale. However, we met challenges such as changes in the fingerprint keys that render our device unworkable; we intend to overcome this problem by moving to the IBM Bluemix platform.

We use the C++ programming language for our microcontrollers and sensor communication; in some cases we use Python to process neural networks for our microcontrollers.

Any feedback concerning this project, please?

-

FUCKING MOZILLA!

>Quantum

>over9999 times faster

-Ughm, okay, but what is the trick?..

-THE TRICK IS THAT NONE OF YOUR FAVORITE ADDONS WILL WORK!!!

Seriously this is fucking insane! Several of my addons which were essential for me to stay sane are gone and I don't even know how to live without them. And all this bullshit is happening because these idiots at Mozilla decided to enforce the use of WebExtensions. For those who are unaware, WebExtensions is a much, much poorer framework, and many cool addons are simply unable to work under WebExtensions because of its limitations.

Are you the one who loved the speed and joy of using mouse gestures? GUESS WHAT — IT'S GONE.

Maybe you made your browsing activity super efficient with use of the “Tab Groups” addon that used to allow you to group your browser sessions? SAY BYE-BYE TO IT!

And many more!

Most importantly, I cannot understand why would a company enforce use of a framework that will decrease functionality of a product.

Anyway, it seems like Firefox is not a browser for addon enthusiasts like me anymore; got to find a new one, any recommendations?

-

I decided to upgrade my IntelliJ Ultimate from 2019.3 to 2020.2 and I saw there is an update button.

I clicked on it.

As I expected it didn’t work and it was 30 minutes waiting looking at progress bar going back and forth couple of times before I decided just to download latest version and drag and drop it to applications folder ( took me 5 minutes) - I use mac so it replaces all crap ( I think ).

I cleared the old cache that had grown to 2 gigabytes, leaving some configuration files.

Next as always crash on startup cause of incompatible plugins with long java stacktrace - at least I could click the close button or popup closed itself I can’t remember ( one version I remember this button couldn’t be clicked cause it was off the screen and you need to do some cheating to launch ide )

The font has changed and I see that it at least work a little faster - that is nice. Indexing is finally fixed after all those years - probably thanks to visual studio code intellisense pushing those lazy bastards to deal with this.

But the preloader on first logo disappears so I think they decided to remove it cause it’s so fast - no it loads the same time or maybe little longer when I launch it on my old macbook.

After that as always I looked at plugins to see if there’s something interesting, so to find ability to scroll over whole plugins I needed to click couple of times. I think they assume I remember all the nice plugins in their marketplace and I only type search.

Maybe I should be type of user who reads best 2020 plugins for your best ide crap articles filled with advertising or even waste more time to watch all of this great videos about ide ( are there any kind of this stuff ? )

After a few operations I unfortunately clicked apply instead of restart ide and it hanged up on uninstalling some plugin I’m no longer interested in for 5 minutes so I decided to use always working ‘kill -9’ from command line.

Launched again and this time success.

Fortunately indexing finished for this workspace and I can work.

I’m intellij ultimate subscriber for 7+ years and I see those craps are not changing from like forever.

What’s the point of automate something that you can’t regression test ?

I started thinking that now when most people are facebook wall scrolling zombies companies assume that when new software comes out everyone is installing it right away and if not they’re probably not our customers cause they’re dead.

What a surprise they have when I pay for another year I can only imagine ( to be fair probably they even don’t know who I am ).

Yeah for sure I am subscribed to newsletters and I have JetBrains as a start page cause I shit myself with money and have nothing better to do than be a groupie ( are corporate groupies already a big community? )

Well I am a guy who likes to spend some time when installing anything and especially software that is responsible for my main source of income and productivity speed up.

Anyway I decided to upgrade cause editing ES7 and TypeScript got to be a pain in the ass and I see it’s working fine now. I don’t know if I like the font, but at least the editor is working the same or maybe faster than the original, which is a huge improvement as developers lose most of their time between keyboard and screen communication protocol.

I’m not writing this to disparage IntelliJ, as it’s a great independent IDE that I have loved and supported for such a long time, but they should focus on the code editor and developer efficiency, not on things that don’t make sense.

Congratulations if you reached this point of this meaningless post.

Now I started thinking that maybe it’s working faster cause I removed 2 gigs of crap from it.

Well we’ll see.

-

Tabs, or No Tabs? I did the same as this commenter 2 years ago. I can code so quickly now because of this simple switch. Here's why:

(source, Laracasts.com)

Ben Smith

"I think the most beneficial tip was to do away with tabs. Although it took a while to get used to and on many occasions in the first few days I almost switched them back on, it has done wonders for my workflow.

I find it keeps my brain more engaged with the task at hand due to keeping the editor (and my mind) clutter free. Before when I had to refer to a class, I would have opened it in a new tab and then I might have left it open to make it easier to get to again. This would quickly result in a bar full of tabs and navigation around the editor would become slow and my brain would get bogged down keeping track of what was open and which tab it was in. With the removal of the tab bar I'm now able to keep only the key information in my mind and with the ability to quickly switch between recently opened files, I find I haven't lost any of the speed which I initially thought I might.

In fact this is something I have noticed in all areas of writing code, the more proficient I have become with an editor the better the code I have been writing. Any time spent actually writing your code is time in which your brain is disconnected from the problem you are trying to solve. The quicker you are able to implement your ideas in code, the smaller the disconnect becomes. For example, I have recently been learning how to do unit testing and to do so I have been rewriting an old project with tests included. The ability to so quickly refactor has meant that whereas before I might have taken 30 seconds shuffling code around, now I can spend maybe 5 seconds allowing my mind to focus much better on how best to refactor, not on the actual process of doing so."

jeff_way Mod

"Yeah - it takes a little while to get used to the idea of having no tabs. But, I wouldn't go back at this point. It's all about forcing yourself into a faster workflow. If you keep the tabs and the sidebar open, you won't use the keyboard."

-

My Vega56 gpu is mining a bit faster than usual after the last reset. Not by a huge amount, but a little faster. Enough for it to be noticeable.

I don't wanna touch it. I just wanna let it sit there and happily chew away those hashes.

But I will have to touch it eventually, if I wanna turn my monitor off. If I wanna go to sleep.

Doing that will trigger a restart of the miner.

And I will never know if it will have that extra lil speed bump ever again.

Why is life so cruel

-

Swapped workplaces as the previous one wanted to get rid of me, the new one so far feels even worse.

Teammates are too busy to help, the codebase makes spaghetti code look like a compliment (and it takes forever to compile), and my manager somehow believes I’m Superman, supposed to finish everything faster than the speed of light.

I’m miserable.

-

I moved to another ISP today. Now my download speed is 40Mbps and upload 20Mbps. I can browse devRant faster and download porn much faster via IDM.

-

End of my rant about 35 day recruitment process for anyone interested to hear the ending.

Just got rejected by a company (name Swenson He) for a remote Android Engineer SWE role after wasting 35 days for the recruitment process.

First intro interview went well, then I did an assignment that had a 72-hour deadline (I asked for a couple of days longer to do this task and did it in around 40 hours; it was purely an assignment, not free work. I could have done it in a day, but I chose to overengineer it so I could use the project as a portfolio piece. No regrets there, and I learned some new things). After that it took them 2 weeks just to organize the technical interview.

2 days after the technical interview I received an offer from a second company with 1 week to decide. I immediately informed the Swenson He about it and politely asked whether they could speed up their decision process.

Now I know that I could just sign with the second company, and if the big Swenson He decided to bless me with an offer I could jump ship. But out of principle I have never done that in the 7 years of my career. If I'm in a situation with multiple offers I always inform all parties (it's kind of a test for them, so I can see which one is more serious and wants me to work for them more). Swenson He didn't pass the test.

6 days of silence. I pinged the tech lead I interviewed with on LinkedIn about my situation; he assured me that he'd ask his hiring team to get back to me with feedback that day.

2 days of silence. I had to decide to sign or not to sign with a second company. I pinged the techlead again regarding their decision and 10min after received a rejection letter. There was no feedback.

I guess they got pissed off or something. Idk what were they thinking, maybe something along the lines of "Candidate trying to force our 35day recruitment process to go faster? Pinging us so much? Has another offer? What an asshole! ".

Didn't even receive any feedback in the end. Pinged their tech lead regarding that, but no response. Anyways, fuck them. I felt during the entire process that they were disrespecting me; I just wanted to see how it ends. The tech lead was cool and knowledgeable, but the recruitment team was incompetent and couldn't even stay in touch properly during the entire process. Had I not forced their hand, I bet they would have just ghosted me like they did with others, according to their Glassdoor reviews.

Glad I continued to interview for other places; tomorrow I'm signing an offer. Fuck Swenson He.

P.S. From my experience as a remote B2B contractor, if a company is serious the entire process shouldn't take longer than max 2 weeks. Anything outside of that is pure incompetence. Even more serious companies can organize the required 2-3 meetings in a week if they have to and if they are interested. The hiring process shouldn't take longer than MAX 3 weeks unless you are applying for some fancy slow picky corporate company or a FAANG, which is off topic for this post.

-

Question to the frontend devs: I'm currently trying to optimize part of our JS. How do sourcemaps influence loading/rendering speed in the browser?

In the `webpack.config.js` we use uglify as the minimizer for JS. It has the option `sourceMap` set to `true`. Now as I understand it, this causes it to inline a sourcemap inside the JS file (which leads to a slightly bigger file size and thus a longer load time for the generated file...).

My question: should sourcemaps be enabled for prod (minified) files? Why yes, why no? Do they help the browser parse the JS faster?

-

It's really annoying when you're watching a tutorial video and the person speaks much faster than he can type.

1.5x speed and the guy manages to type a single line of <p> tag and has like spoken 30000 sentences.

-

Dear Microsoft: I don't care if Edge or any other of your in-house developed software is faster than the alternatives, it's about quality not speed.

If you design quality products people will use them, just because they are "faster" doesn't mean shit.

-

So I'm going to wait a bit longer to actually buy the phone since I want to have at least had my S7 for a year before I buy a new one, but for those who saw my other rant about buying a new phone, I've made a decision.

I'll be buying a One Plus 5. It's just... How can you even say there's a better phone out there? So far the only phone faster than it is the Note 8, and eventually iPhone 8. The only difference is that those phones are $1000, and the 1+5 is only just over $500. (Don't believe me? Go watch the phonebuff speed tests with it. It actually beat an iPhone 7+. The first phone to do that in a couple years)

Sure, it doesn't have any of that great screen tech in the S8. But it's still got a great AMOLED screen, and its battery lasts much longer than most of its competition. And Dash Charge is much faster than Samsung's fast charging. Did I mention it's only $500? Selling my phone would make that $350! How tf is it even that cheap?

Look, I'm not saying other phones out there are bad. Not at all. Hell, I love Samsung's phones. But the 1+5 is just better than the S8 or any other current flagship.

-

After learning a bit about alife I was able to write another one. It took some false starts to understand the problem, but afterward I was able to refactor the problem into a sort of alife that measured and carefully tweaked various variables in the simulator, as the algorithm explored the parameter space. After a few hours of letting the thing run, it successfully returned a remainder of zero on 41.4% of semiprimes tested.

This is the bad boy right here:

tracks[14]

[15, 2731, 52, 144, 41.4]

As they say, "he ain't there yet, but he got the spirit."

A 'track' here is just a collection of critical values and a fitness score that was found given a few million runs. These variables are used as input to a factoring algorithm, attempting to factor any number you give it. These parameters tune or configure the algorithm to try slightly different things. After some trial runs, the results are stored in the last entry in the list, and the whole process is repeated with slightly different numbers, ones that have been modified and mutated so we can explore the space of possible parameters.

Naturally this is a bit of a hodgepodge, but the critical thing is that for each configuration of numbers representing a track (and its results), I chose the lowest fitness of three runs.

Meaning hypothetically there's room for improvement with a tweak of the core algorithm, or even modifications or mutations to the track variables. I have no clue if this scales up to very large semiprime products, so that would be one of the next steps to test.
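For readers trying to picture the loop, here is a minimal sketch of the mutate-and-score cycle described above: a track is a list of parameters with its fitness in the last slot, and each candidate is scored by the lowest of three runs. The `evaluate` function is a made-up placeholder and not the factoring algorithm; the accept rule (keep a candidate only if it scores higher) and the fixed seed are assumptions too.

```python
import random


def evaluate(params, rng):
    """Hypothetical stand-in for one trial run; returns a fitness in percent."""
    # Placeholder score: best when every parameter is near 50, plus some noise.
    error = sum(abs(p - 50) for p in params) / len(params)
    return max(0.0, 100.0 - error + rng.uniform(-5.0, 5.0))


def score_track(params, rng, runs=3):
    """Pessimistic score: the lowest fitness seen across `runs` trials."""
    return min(evaluate(params, rng) for _ in range(runs))


def mutate(params, rng, step=5):
    """Return a copy with each parameter nudged by a small random amount."""
    return [max(1, p + rng.randint(-step, step)) for p in params]


def search(initial, generations, seed=0):
    """Repeatedly mutate the best track and keep any candidate that scores higher."""
    rng = random.Random(seed)
    best = list(initial) + [score_track(initial, rng)]  # parameters + fitness
    for _ in range(generations):
        candidate = mutate(best[:-1], rng)
        fitness = score_track(candidate, rng)
        if fitness > best[-1]:
            best = candidate + [fitness]
    return best


print(search([15, 200, 52, 144], generations=200))
```

Taking the minimum of three runs makes a lucky single run less likely to promote a bad track, at the cost of three times the evaluation work.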

Fitness also doesn't account for return speed. Some of these may have a lower overall fitness, but might in fact have a lower basis (the value of 'i' that needs to be found in order for the algorithm to return rem%a == 0) for correctly factoring a semiprime.

The key thing here is that because all the entries generated here are dependent on an outer loop that specifies [i] must never be greater than a/4 (for whatever the lowest factor generated in this run is), we can potentially push down the value of i further with some modification.

The entire exercise took 2.1735 billion iterations (3-4 hours, wasn't paying attention) to find this particular configuration of variables for the current algorithm, but as before, I suspect I can probably push the fitness value (percentage of semiprimes covered) higher, either with a few additional parameters, or a modification of the algorithm itself (with a necessary rerun to find another track of equivalent or greater fitness).

I'm starting to bump up to the limit of my resources, I keep hitting the ceiling in my RAD-style write->test->repeat development loop.

I'm primarily using the limited number of identities I know, my gut intuition, combined with looking at the numbers themselves, to deduce relationships as I improve these and other algorithms, instead of relying strictly on memorizing identities like most mathematicians do.

I'm thinking if I want to keep that rapid write->eval loop I'm gonna have to upgrade, or go to a server environment to keep things snappy.

I did find that "jiggling" the parameters after each trial helped to explore the parameter space better, so I wrote some methods to do just that. But what I wouldn't mind doing is taking this a bit of a step further, and writing some code to optimize the variables of the jiggle method itself, by automating the observation of real-time track fitness, and discarding those changes that lead to the system tending to find tracks with lower fitness.

I'd also like to break up the entire regime into a training vs test set, but for now the results are pretty promising.

I knew if I kept researching I'd likely find extensions like this. Of course tested on billions of semiprimes, instead of simply millions, or tested on very large semiprimes, the effect might disappear, though the more I've tested, and the larger the numbers I've given it, the more the effect has become prevalent.

Hitko suggested in the earlier thread, based on a simplification, that the original algorithm was a tautology, but something told me for a change that I got one correct. Without that initial challenge I might have chalked this up to another false start instead of pushing through and making further breakthroughs.

I'd also like to thank all those who followed along, helped, or cheered on the madness:

In no particular order: demolishun, scor, root, iiii, karlisk, netikras, fast-nop, hazarth, chonky-quiche, Midnight-shcode, nanobot, c0d4, jilano, kescherrant, electrineer, nomad, vintprox, sariel, lensflare, jeeper.

The original write up for the ideas behind the concept can be found at:

https://devrant.com/rants/7650612/...

If I left your name out, you better speak up, there's only so many invitations to the orgy.

Firecode already says we're past max capacity!

-

Sooo as of January of this year, I have a new boss, this dude basically acted as my “mentor” for the last year so he’s already tried micromanaging me but bc he wasn’t my boss, I could push back.

Long story short, he is now my manager, he’s the global marketing leader and I’m the marketing director for the Americas (been doing this role for two years) yet he treats me like I’m an idiot, in his words he wants to make sure I’m in control of my team before he lets me lead fully while simultaneously telling me that I need to step up and lead.

I politely asked him to let me lead and stop attending all my team meetings, stop delegating tasks to my team directly and instead consult w me so then I can delegate, and basically to respect the fact that clearly I’ve been successfully doing the job for the last two years.

He said no, that he won’t leave my meetings until he feels I have full control of my team, continues to over involve himself in all my projects, pulling my team in a bunch of directions w new projects and ideas left and right, and burning us all out.

To add insult to injury, he sent me a very “helpful” email detailing how I need to work better and faster and how he expects me and my team at full speed, my team is made up of me, two new hires that are a month old, my marketing manager, and I’m currently hiring for another team member. (This after he led a company restructure of my previous team that resulted in me losing 4 team members in December so I’m rebuilding my team).

I’m already overwhelmed and demotivated, pretty sure he wants me to quit and he has a proven history of bullying his staff, he was actually fired from our parent company for this exact reason a few years ago, he also happens to be European so not sure how rules work over there, but he was rehired by my company. My European colleagues hate him too, but they’re too scared to speak up.

I used to love my job and now I dread it, I drink every day after work and I get anxiety every time he emails me, which is at all hours of the day. Is it worth it documenting his bullshit for HR or should I just cut my losses and leave?

Appreciate the advice!

-

!dev

I wanted to take a small loan from the bank I have been a loyal customer of for 15 years, to speed things up by a month. I decided to pay money for it.

They have some online form for it and I filled it.

So what happened next ?

I got a call to confirm every input I filled in (heard keyboard typing every time I answered a question).

I asked how long I would wait and got the response that it will take a couple of hours, max 2 days.

Just received another call 10 days later that they need documents to prove my income.

They've got 15 years of history of every operation and it looks like it means nothing.

I told the person I will earn this money faster than I will get it from them, so at this point this conversation is just a waste of my time.

It’s 10 days left till the end of the month and I think it will be easier to just wait or ask a friend for a favor.

Yet another reason to say fuck banks.

Time is money.

-

I never understood the programming language discussion thing. In modern programming, all you do is glue APIs together, no matter if they're third party or built-in. My JS code is literally 95% fetch() followed by querySelector.

All built-in APIs that do something useful are just linking C++ modules. If you're not doing stupid shit, Python is exactly as fast as C++ or Rust.

The only scenario where speed matters is algos. Guess what? You should write your algos in C and link them to your Node/Python/Go/whatever code. And don't even get me started on reinventing algos. Do you really think you can write an algo in one evening that will be more efficient than the one from the guy whose PhD thesis it was a part of?

Just because some engine parts require the precision only a million-dollar CNC machine can provide, doesn't mean you have to cut the whole car out of a solid block of high-performance engine block alloy.

Remember kids, sorting an array in Python is always faster than sorting an array in C, because Python's sort was written by someone else who's smarter than you, went through years of scrutiny and iteration, and doesn't have stupid novice algo complexity errors.
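A rough way to see the "novice algo complexity" half of that point is to time a hand-rolled quadratic sort against the built-in one. This sketch stays entirely in Python, so it says nothing about C itself; `naive_sort` and `compare` are hypothetical names made up for the illustration.

```python
import random
import timeit


def naive_sort(items):
    """Hand-rolled O(n^2) exchange sort, the kind of thing written in one evening."""
    out = list(items)
    for i in range(len(out)):
        for j in range(i + 1, len(out)):
            if out[j] < out[i]:
                out[i], out[j] = out[j], out[i]
    return out


def compare(n=2000, seed=0):
    """Return (naive_seconds, builtin_seconds) for one sort of n random ints."""
    rng = random.Random(seed)
    data = [rng.randrange(1_000_000) for _ in range(n)]
    assert naive_sort(data) == sorted(data)  # same answer, different cost
    naive = timeit.timeit(lambda: naive_sort(data), number=1)
    builtin = timeit.timeit(lambda: sorted(data), number=1)
    return naive, builtin


if __name__ == "__main__":
    naive, builtin = compare()
    print("naive: %.4fs  builtin: %.4fs" % (naive, builtin))
```

Both functions return the same list; only the cost differs, and the gap widens as `n` grows because the hand-rolled version does quadratic work.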

Grow up.

-

Meanwhile in a parallel universe composer update runs faster than the speed at which universe expands.

-

It was a simple Tuesday morning, got to work, turned on my laptop. And hell began. First call, my co-worker asked me to come over. Got shouted at: why did I buy this piece of shit printer? Why is it printing so slowly? She asked me to bring back the old one because it's faster. But before I switched printers, I got a strange and funny question: "why does paper come out hotter from this printer and not from the older one?" I was speechless, and left her without an answer. OK, I changed the printer. Went to take a tea break (hate coffee). Got asked by the same woman to bring the original power cord that came with the printer, because the one connected somehow slowed the printing speed. The fuck? Too hot paper, now the power cord? Why? How? Those were the stupidest things I ever heard.

P.S. The slow printing problem was with her computer: bad drivers, or something wrong with the computer or OS. I need to change her computer pretty soon anyway.

-

!rant

Ever find something that's just faster than something else, but when you try to break it down and analyze it, you can't find out why?

PyPy.

I decided I'd test it with a typical discord bot-style workload (decoding a JSON theoretically from an API, checking if it contains stuff, formatting and then returning it). It was... 1.73x the speed of python.

(Though, granted, this code is more network dependent than anything else.)

Mean +- std dev: [kitsu-python] 62.4 us +- 2.7 us -> [kitsu-pypy] 36.1 us +- 9.2 us: 1.73x faster (-42%)

Me: Whoa, how?!

So, I proceeded to write microbenches for every step. Except the JSON decoding (1.7x faster), everything was at least twice as slow (in one case, one hundred times slower) when tested individually.

The combination of them was faster. Huh.
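For reference, a microbench of that kind of workload could look roughly like the sketch below. The payload shape, `handle`, and `bench` are all assumptions made up for illustration (the actual bot code and API response are not shown above), and it uses the standard library `timeit` rather than whatever tool produced the output above. Running the same file under both CPython and PyPy gives the two numbers to compare.

```python
import json
import timeit

# Hypothetical payload standing in for an API response.
PAYLOAD = json.dumps({"title": "Example", "episodes": 12, "tags": ["a", "b"]})


def handle(raw):
    """Decode the JSON, check that it contains what we need, format a reply."""
    data = json.loads(raw)
    if "title" not in data:
        return "not found"
    return "{} ({} episodes)".format(data["title"], data.get("episodes", "?"))


def bench(func, arg, number=10000):
    """Return the mean time per call in microseconds."""
    total = timeit.timeit(lambda: func(arg), number=number)
    return total / number * 1e6


if __name__ == "__main__":
    print("whole pipeline: %.2f us per call" % bench(handle, PAYLOAD))
    print("json.loads only: %.2f us per call" % bench(json.loads, PAYLOAD))
```

Timing each step in its own tiny loop, as with `json.loads` here, is one place where whole-pipeline and per-step results can disagree: a JIT needs warm-up and enough surrounding code to optimize, so very short isolated snippets may not show the same speedup.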

By this point, I was all "sign me up!", but... asyncpg (the only sane PostgreSQL driver for python IMO, using prepared statements by default and such) has some of its functionality written in C, for performance reasons. Not Cython, actual C that links to CPython. That means no PyPy support.

Okay then.

-

Velocity? You want to talk about velocity while it is you steering us towards full speed reverse over and over again?

What's faster, armageddon or the apocalypse?

NOBODY FUCKING CARES MATE 🤦♂️

-

This shit is long story of my computer experience over my lifetime.

When I was young I got my first PC with Windows, and it was not so bad. It required a safe shutdown of its FAT32 partition. From time to time I needed to reinstall it because of slowdowns, but I got used to it; I was only a gamer.

Time passed and I got more curious about computers and about this Linux. Everything worked there, but installing anything was complete madness and none of the Windows programs worked well, and I wanted to play games and be productive, so I stuck with Windows.

I once bought an HP laptop with an Nvidia card; it kept overheating and broke. So I bought a Toshiba, and all I told the seller was that I wanted an ATI card. It took me 5 minutes, and I was faster than my friend buying a pack of cigarettes, because I was earning money using a computer.

Then I grew up, running my small one-person programming business, and I wanted to run and compile every fucking program in this world. I wanted Linux shell commands. I wanted a package manager, and I wanted my OS to be simple, because I wasn’t earning money by using my OS but by programming. So after getting my paycheck I bought a Mac. I can run Windows and Linux in a VM if I need to. I try not to steal someone's work, so I didn’t want to run a hackintosh. I have been using this Mac for some time.

Also I use playstation for gaming. Because I only want to run and play game I am not excited about graphics but gameplay. I think I am pragmatic person.

I can tell you something about my mac.

When I close the lid it goes to sleep; when I open it, it wakes up instantly. I never need to wonder if I want to hibernate or shut down or sleep and drain the battery. It is fucking simple.

When I want to run or open something it doesn’t make me wait, it just gives me my IntelliJ or terminal or another browser or whatever I search for. Yeah, search is something that works.

Even though it's only got 8 gigs of RAM, I can run whatever number of programs I want at the same speed. The speed is not very fast sometimes, but it’s consistently fast.

I have a keychain so my passwords are in one place. I can slow down shared internet speed, I can put my wifi in monitor mode, and I don’t need to install some 3rd party software.

And now I updated my mac to high sierra, cause it’s free and I want to play with ios compilation. Before I did it I didn’t even backup whole work. I just used time machine and regular backups. And guess what, it still works at the same speed and all I did was click to run update and cook something to eat.

When I get bored I close the lid; when I get an idea I open the lid and code shit, not waiting for a fucking wakeup or fucking updates.

I wanted to rant about the Apple products I use, but they work, and they've got fucking updates all along at the same time. And all of the updates are optional.

I cannot tell that about all apple products but about products I use.

I think I just got old and started to value my limited time in this world. Not being excited about new crap. When I buy something I choose wisely. I bought an iPhone. I could buy the latest iPhone X but I bought an iPhone 7 cause it’s made from fucking metal. And I know that metal is harder than glass, so why the fuck did Apple forget about it? I don’t know.

I know that I am clumsy and drop stuff. Dropped my phone at least 100 times and nothing.

I am not an Apple fanboy. I won’t buy a Mac with this glowing shit above the keyboard that would make me blind at night.

I buy something when I know that it can save my time in this world. I try to buy things that make me productive and don’t break after a year.

So now a piece of advice: stop wasting your time, buy and update wisely, wait a week or a month or a year until more people buy shit, and buy what’s not broken. And if something’s broken, rant about this shit so the next customer can be smarter.

Cheers

-

I have been using Ubuntu for two years now, and for me it is faster than Windows. To get more speed, what’s next!?

-

>making bruteforce MD5 collision engine in Python 2 (requires MD5 and size of original data, partial-file bruteforce coming soon)

>actually going well, in the ballpark of 8500 urandom-filled tries/sec for 10 bytes (because urandom may find it faster than a zero-to-FF fill due to in-practice files not having many 00 bytes)

>never resolves

>SOMEHOW manages to cut off the first 2 chars of all generated MD5 hashes

>fuck, fixed

>implemented tries/sec counter at either successful collision or KeyboardInterrupt

>implemented "wasted roll" (duplicate urandom rolls) counter at either collision success or KeyboardInterrupt

>...wait

>wasted roll counter is always at either 0% or 99%

>spend 2 hours fucking up a simple percentage calculation

>finally fixed

>implement pre-bruteforce calculation of maximum try count assuming 5% wasted rolls (after a couple hours of work for one equation because factorials)

>takes longer than the bruteforce itself for 10 bytes
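The core loop described above can be sketched as follows. This is a Python 3 illustration rather than the original Python 2 engine, `brute_force_md5` and its `max_tries` cap are hypothetical, and strictly speaking it is a preimage search: it looks for any input of the given size whose MD5 matches the target.

```python
import hashlib
import os
import time


def brute_force_md5(target_hex, size, max_tries=1_000_000):
    """Try urandom-filled candidates of `size` bytes until one hashes to target_hex."""
    seen = set()   # candidates already rolled, used to count wasted rolls
    wasted = 0
    tries = 0
    found = None
    start = time.perf_counter()
    try:
        while tries < max_tries:
            tries += 1
            candidate = os.urandom(size)
            if candidate in seen:
                wasted += 1  # duplicate urandom roll
                continue
            seen.add(candidate)
            if hashlib.md5(candidate).hexdigest() == target_hex:
                found = candidate
                break
    except KeyboardInterrupt:
        pass  # fall through and report the counters anyway
    elapsed = time.perf_counter() - start
    stats = {
        "tries": tries,
        "tries_per_sec": tries / elapsed if elapsed > 0 else 0.0,
        "wasted_pct": 100.0 * wasted / tries if tries else 0.0,
    }
    return found, stats


if __name__ == "__main__":
    target = hashlib.md5(b"\x2a").hexdigest()
    print(brute_force_md5(target, 1))
```

With `size=1` there are only 256 possible inputs, so the demo finishes almost immediately; at 10 bytes the search space is 256**10, and the `seen` set would also grow without bound, so a real run would need a cheaper way to track duplicates.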

this has been a rollercoaster but damn it's looking decent so far. Next is trying to further speed things up using Cython! (owait no, MicroPeni$ paywalled me from Visual Studio fucking 2010)

-

The l33t h4ck3r experience: making a js bookmark that adjusts the video speed on an online LMS that reeally doesn't want you to watch at the same speed that you process information.

The trick is to continuously change the playback rate faster than the site can change it back to painfully slow.

-

Sonic the Hedgehog is the kind of developer who smashes bugs and goes through stages at the speed of sound... But at the end, Eggman is faster than him.

-

I'm never committing to finishing another PC game ever again... And sticking to arcade, short games only.

Playing Ni no Kuni 2 for the last 3 days... 25hrs and counting... Now at the final boss... I think, and I can't get infinite HP in Skirmish... So I keep getting my entire force wiped out in one hit by his laser beam, which keeps getting stronger....

It's like a fly vs us. In order to win it's gotta slowly chip away at u, but it can die in an instant at any time...

Oh and the 25hrs includes massive cheating and speed hacking to move faster.

-

So apparently my code went to prod more or less all right. Phew, the deadline was this weekend. This project has been sitting there for months; they gave me the technical requirements and never bothered to ask the stakeholders about them. When the contract came in, they started to freak out that it wasn't usable.

The thing is, this project had way more moving parts, and trying to treat video in the frontend is not the easiest. But now is REFACTOR TIME.