Search - "interpreter"

-

Customer: I found a 40 line python script on Stackoverflow to do that.

Dev team: ok, now... how many lines do you think we will need to put the python interpreter, libraries and your 40 line script inside Android and iOS apps with legacy code?3 -

Anyone looking for something interesting to do???

Step 1) understand how basic circuitry works on a breadboard, nothing too fancy (implement NAND, AND, ADDER, SUBTRACTOR)

Step 2) learn about microprocessors and how an OS works

Step 3) learn assembly

Step 4) write a basic assembler and understand how loaders and linkers work!

Step 5) write a kernel with very basic features like memory management and process management and some drivers for IO

Step 6) write an emulator for some simple system, e.g. CHIP-8.

Step 7) read about compiler theory and automata

Step 8) write a basic Python compiler (not an interpreter) that compiles to native assembly.

Step 9) implement a TCP stack.

Step 10) learn as much as you can about complexity measurement, data structures and algorithms using C or C++; it's very important (familiarity with pointers and thus computer memory)

Step 11) learn any high-level language of choice like Python or Ruby.

Step 12) stop debating over tabs vs spaces, emacs vs vim, angular vs vue, php vs Python, OOP vs procedural vs functional (just know about all of them and when to use them, but don't fucking debate over which one is superior).

Step 13) live happily and be healthy.30 -
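For anyone who wants to try the first step of the list above without a breadboard, here is a minimal sketch in Python: every gate is built from NAND alone, then chained into a ripple-carry adder. The function names (`nand`, `full_adder`, `add_bits`) and the 8-bit default width are made up for illustration, they are not from the rant.

```python
def nand(a: int, b: int) -> int:
    """The only primitive gate: output is 0 only when both inputs are 1."""
    return 0 if (a and b) else 1


def not_(a: int) -> int:
    return nand(a, a)


def and_(a: int, b: int) -> int:
    return not_(nand(a, b))


def or_(a: int, b: int) -> int:
    return nand(not_(a), not_(b))


def xor(a: int, b: int) -> int:
    n = nand(a, b)
    return nand(nand(a, n), nand(b, n))


def full_adder(a: int, b: int, carry_in: int) -> tuple[int, int]:
    """Add three bits, return (sum_bit, carry_out)."""
    partial = xor(a, b)
    sum_bit = xor(partial, carry_in)
    carry_out = or_(and_(a, b), and_(partial, carry_in))
    return sum_bit, carry_out


def add_bits(x: int, y: int, width: int = 8) -> int:
    """Ripple-carry adder: chain one full adder per bit, lowest bit first."""
    carry = 0
    result = 0
    for i in range(width):
        bit, carry = full_adder((x >> i) & 1, (y >> i) & 1, carry)
        result |= bit << i
    return result  # the final carry is dropped, so the sum wraps at 2**width
```

A subtractor can reuse the same adder by feeding in the two's complement of the second operand.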

– “It doesn’t work. I don’t know how to run this.”

– “Ok, did you install the Python interpreter?”

– “No, what’s that?”

– “You have to download it from www.python.org. Get the 2.7 version.”

– “Yeah, it’s ok. I’ll just use something else.”9 -

Me talking to a recruiter (even though I am not looking for a job)

Me: If I walk into an interview, and they ask me to reverse a binary tree for a frontend React or Vue position or something along those lines, I will end the call and/or walk away from it.

Him: I get similar feelings from other programmers, I don't quite understand why the notion is so common

Me: Because it is fucking useless, it serves no purpose to a dev to know about that when building frontends with React. I link my GitHub profile, where they can find advanced backend-frontend related projects, compiler and interpreter projects, plus the title I currently have at my workplace and a bunch of other shit. I am not interviewing for a teaching position at an institute, but an actual place of work, and if they want to know about DS and A they can review my profile, which has a repo of DS and A in about 5 different languages including plain C++. I do not need to be offended by such notions since they serve no purpose on the frontend, and neither do other devs. If anything it should be a casual conversation during the interview, not a basis for employment.

Recruiter: .........thank you for explaining this to me, I am sure I can bring it up to the agencies doing the reviews and interviews. Are you still interested?

Me: Are they going to give me a coding assignment for a project or a bs question like what I mentioned?

Him: I don't know

Me: then I am not interested12 -

My teacher told me not to do a project. He said it was much too hard.

I did it.

2 months pass

And then I come to him with another project idea.

Same thing goes, nobody believes in me, but I still succeed.

In the aftermath, I was able to create an interpreter AND a compiler in about 1 year... 😁22 -

Me reviewing some high school level exams after an Excel course.

"hmmm the next question is 'what does the symbol $ mean when found inside a FORMULA in Excel' ... Let's see what they answered..."

* "it's the symbol for DOLLARS" <-- well, he tried

* "I don't know" <-- mmh ok, he doesn't know

* "it can be either a plus or a minus" <-- mmmh maybe the interpreter will just figure out the correct one

* "it's used to keep an index fixed when you copy/drag the formula" <-- nice, someone who actually followed the lesson or at least knows how to google things when the teacher doesn't see

* "it's the symbol for POUNDS" <-- WTF!! Wait a moment: POUNDS???? Have you ever lived a single moment in this world? -

Books and command lines.

I don't like teachers.

I think it's because my learning process is very async and chaotic. When I see a snippet in Golang, I relate it to PHP, Rust and Haskell. I jump to resolving the problem in other languages, trying to find out which approaches work in Go.

Then I read about some computer science concept on Wikipedia and get lost in that while my hunger for knowledge and food increases. After a while I look up a recipe for a pasta salad, and while cutting bell peppers, I see the recipe in terms of typed morphisms, I sprinkle and intersperse ingredients through mapping functions, then decide to write an interpreter for the esoteric "Chef" language in Go so I can interpret my salad recipe while eating it.

Voila, I'm learning Go.

I have no patience for linear mentoring, and others have no patience for mentoring me.

But that's OK.1 -

Got home from work and found this Python at my doorstep, I cringed initially but remembered I write Python, so I said "Hey! Where's your interpreter?"5

-

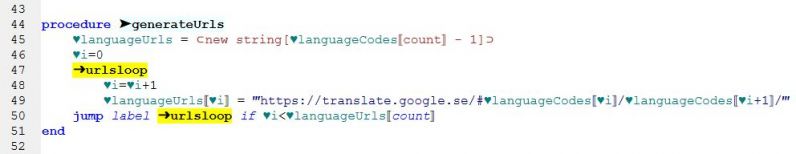

I'm working on a programming language with a "bytecode" interpreter and a compiler that translates source code to said bytecode and... it sort of actually works!

I want to recreate an Erlang-style environment, currently you can write functions, call C++ functions via wrappers, have immutable-only values, and it has no explicit control structure apart from statement sequencing and the if-expression because I want to make it as functional as possible. Next thing on the list is to add a green threads implementation and ability to spawn and send messages to processes.

Still a WIP and heck even design-in-progress.

Now for the rant:

I'm using CMake for building C++ (interpreter) and Stack for Haskell (compiler) and I've been trying to get them to talk to each other for hours because I want CMake to manage the Stack build too and shove all the executables into one place. CMake documentation is weird and Stack isn't too helpful either, so I guess I'll just spend another few hours trying to get Stack to fuckin reveal its build directory to CMake and/or build to a given directory. Ugh. 8

8 -

Bonus points if you also wrote it in a language entirely new to you, or even in entirely different paradigm. (In my case Haskell.)

11

11 -

My first job was a paid internship.

My first couple weeks on the job I was supposed to be working on the same machine with another dev to get the gist of the process and everything. Kind of pair programming mixed with mentorship. Sounds cool?

Yeah... Problem is my fellow dev was more interested in spending around 80% of her time chatting around with her boyfriend and friends on Microsoft Chat.

Anyway, I soon got bored of having to look to the other side all the time, and went to our boss and asked for some other stuff to do "because I'm better learning by doing than by example".

Almost 20 years later, I'm still in touch with this dev... But she soon left the job and pursued a career as a translator and interpreter. She was always more interested in talking than programming 😃1 -

I've caught the efficiency bug.

I recently started a minimum wage job to get my life back in order after a failed 2 year project (post mortem: next time bring more cash for a longer runway)

I've noticed this thing I do at every job, where I see inefficiency and I think "how can I use technology to automate myself out of this job?"

My first ever application was in C++ for college (a BASIC interpreter) and it's been so long I've since forgotten the language.

But after a while every language starts to look like every other language, and you start to wonder if maybe the reason you never seriously went anywhere as a programmer was because you never really were cut out for it.

Code monkey, sure. Programmer? Dunno, maybe I just suffer from imposter syndrome.

So a few years back I worked at a retail chain. Nothing as big as Walmart, but they have well over 10k store locations. They had two IBM handscanners per store, old grungy ugly things, and one of these machines would inevitably be broken, lost or in need of upgrade/replacement about once a year, per location. The district manager, who I hit it off with, and made a point of building rapport with, told me they were paying something like $1500 apiece.

After a programming dry spell, I picked up 'coding' with MIT app inventor. Built a 'mostly complete' inventory management app over the course of a month, and waited for the right time.

The day of a big store audit, (and the day before a multi-regional meeting), I made sure I was in-store at the same time as my district manager, so he could 'stumble upon' me working, scanning in and pricing items into the app.

Naturally he asked about it, and I had the numbers, the printouts, and the app itself to show him. He seemed impressed by what amounted to a code monkey's 'non-code' solution for a problem they had.

Long story short, he does what I expected, runs it by the other regionals and middle executives at the meeting, and six months later they had invested in a full blown in house app, cutting IBM out of the mix I presume.

From what I understand they now use the app throughout the entire store chain.

So if you work at IBM, sorry, that contract you lost for handscanners at 10k+ stores? Yeah that was my fault (and MIT app inventor).

They say software is 'eating the world' but it really goes to show, for a lot of 'almost coders' and 'code monkeys' half our problem is dealing with setup and platform boilerplate. I think in the future that a lot of jobs are either going to be created or destroyed thanks to better 'low code' solutions, and it seems to be a big potential future market.

In the meanwhile I've realized, while working on side projects, that maybe I can do this after all, and taken up Kotlin. I want to do a couple of apps for efficiency and store tracking at my current employer to see if I'm capable and not just an MIT App Inventor code monkey after all.

I'm hoping, by demonstrating what I can do, I can use that as a springboard into an internal programming position at my current gig (which seems to be a company that's moving towards a more tech oriented approach to efficiency and management). Also watching money walk out the door due to inefficiency kinda pisses me off, and the thought of fixing those issues sounds really interesting. At the end of the day I just like learning new technologies, and maybe this is all just an excuse to pick up something new after spending so long on less serious work.

I still have a ways to go, but the prospect of working on B2B, and being able to offer technological solutions to common and recurring business needs excites the hell out of me..as cringy and over-repeated as that may sound.7 -

A LOT of this article makes me fairly upset. (Second screenshot in comments). Sure, Java is difficult, especially as an introductory language, but fuck me, replace it with ANYTHING OTHER THAN JAVASCRIPT PLEASE. JavaScript is not a good language to learn from - it is cheaty and makes script kiddies, not programmers. Fuck, they went from a strong-typed, verbose language to a shit show where you can turn an integer into a function without so much as a peep from the interpreter.

And fUCK ME WHY NOT PYTHON?? It's a strongly typed but dynamic language that FORCES good indentation and actually has ACCESS TO THE FILE SYSTEM instead of just the web APIs that don't let you do SHIT compared to what you SHOULD learn.

OH AND TO PUT THE ICING ON THE CAKE, the article was comparing hello worlds, and they did the whole Java thing right but used ALERT instead of CONSOLE.LOG for JavaScript??? Sure, you can communicate with the user that way too but if you're comparing the languages, write text to the console in both languages, don't write text to the console in Java and use the alert api in JavaScript.

Fuck you Stanford, I expected better you shitty cockmunchers. 31

31 -

ChatGPT was asked to write a script for benchmarking some SQL and plotting the resulting data.

Not only was it able to do it, but, without further prompting, it realized it had made an error, explained what it 'thought' the error was and fixed it.

Excuse me, I need to go get my asshole sewn up because I'm hemorrhaging to death from the brick I just shat.

source:

https://simonwillison.net/2023/Apr/...6 -

Today I learned that someone wrote a Python interpreter in JavaScript called Brython. So now you can include a framework to write shitty code in a buggy framework and tell your users to throw more hardware at it.

The guy I heard this from also believed that his code would somehow be "compiled", rather than what's essentially a framework being loaded and then executing code in a language not native to the browser...

So now you can write JavaScript where it doesn't belong in Node and write Python where it doesn't belong through a framework. Frontend and backend are so passé, we might as well start calling it fluid instead.

FULLSCHTAK!!!

🙂🔫21 -

EEEEEEEEEEEE Some fAcking languages!! Actually barfs while using this trashdump!

The gist: new job, position required adv C# knowledge (like f yea, one of my fav languages), we are working with RPA (using software robots to automate stuff), and we are using some new robot still in beta phase, but robot has its own prog lang.

The problem:

- this language is kind of like ASM (i think so, I'm venting here, it's ASM OK), with syntax that burns your eyes

- no function return values, but I can live with that, at least they have some sort of functions

- emojis for identifiers (like php's $var, but they only aim for shitty features so you use a heart.. ♥var)

- only jump and jumpif for control flow

- no foopin variable scopes at all (if you run multiple scripts at the same time they even share variables *pukes*)

- weird alt characters everywhere. define strings with regular quotes? nah let's be [some mental illness] and use prime quotes (‴ U+2034), and like ⟦ ⟧ for array indexing, but only sometimes!

- super slow interpreter, ex a regular loop to count to 10 (using jumps because yea no actual loops) takes more than 20 seconds to execute, approx 700ms to run 1 code row.

- it supports c# snippets (defined with these stupid characters: ⊂ ⊃) and I guess that's the only c# I get to write with this job :^}

- on top of that, outdated documentation, because yea it's beta, but so crappin tedious with this trial n error to check how every feature works

The question: why in the living fartfaces yolk would you even make a new language when it's so easy nowadays to embed compilers!?! the robot is apparently made in c#, so it should be no funcking problem at all to add a damn lua compiler or something. having a tcp api would even be easier got dammit!!! And what in the world made our company think this robot was a plausible choice?! Did they do a full fubbing analysis of the different software robots out there and accidentally sorted by ease of use in reverse order?? 'cause that's the only explanation i can imagine

Frillin stupid shitpile of a language!!! AAAAAHHH

see the attached screenshot of production code we've developed at the company for reference.

Disclaimer: I do not stand responsible for any eventual headaches or gouged eyes caused by the named image.

(for those interested, the robot is G1ANT.Robot, https://beta.g1ant.com/) 4

4 -

Hey everyone! I've been writing a programming language for a while now and wanted to share with you all 😊.

I call it Ethereal and have created a medium post for it giving an overview of my experience here: https://link.medium.com/SEg39iB9xZ

GitHub link is: https://github.com/Electrux/...

Hope you guys enjoy it 😁😁7 -

The myth about the missing semicolon. I don't get it, if your editor doesn't pick it up, the compiler or interpreter will. How is this a common problem?4

-

When I was about 10 I tried to make a basic midi sequencer/synthesiser using just the python standard library.

The only sound production there was was winsound.Beep, which played a tone at the frequency given.

I realised that if I put enough really short beeps together I could make some mildly convincing instruments - I remember an electric piano, acoustic guitar, some kind of bass synth, and maybe more?

Then I put them together to make a song. The problem was though that you can't play multiple notes together as winsound.Beep was blocking (though I didn't understand that at the time).

I had no knowledge of threading or async so I opened multiple python interpreter instances to play multiple channels. That's how I learnt about command-line arguments!

But I really struggled to get the sounds to be in time because python is not exactly rapid.

I made a kind of note sequencer using a library called easygui, based on tkinter (TCL wrapper), and I remember being told off at school for bringing in a usb stick with the exe of my program that I made with py2exe.

So many old technologies and fond memories...2 -
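For the curious, the threading approach the rant says it didn't know about at the time could look roughly like this. It's only a sketch under assumptions: `time.sleep` stands in for the blocking `winsound.Beep(frequency, duration_ms)` call so it runs on any OS, and `play_channel` / `play_song` are hypothetical names, not from the original program.

```python
import threading
import time


def play_channel(name, notes, log):
    """Play one channel note by note; every note blocks until it is done."""
    for frequency, seconds in notes:
        # Stand-in for the blocking call winsound.Beep(frequency, int(seconds * 1000)).
        time.sleep(seconds)
        log.append((name, frequency))


def play_song(channels):
    """Start one thread per channel so the channels overlap in time."""
    log = []
    threads = [
        threading.Thread(target=play_channel, args=(name, notes, log))
        for name, notes in channels.items()
    ]
    for thread in threads:
        thread.start()
    for thread in threads:
        thread.join()  # wait until every channel has finished
    return log


if __name__ == "__main__":
    song = {
        "melody": [(440, 0.2), (494, 0.2), (523, 0.4)],
        "bass": [(110, 0.4), (131, 0.4)],
    }
    print(play_song(song))
```

One thread per channel replaces the one-interpreter-per-channel trick, though keeping the channels in time is still up to the scheduler.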

The year was 1983. My best friend and neighbour at the time invited me over to see an amazing device that his father had brought home from work, an IBM PC. We played a game called Track & Field, and I was amazed that the machine remembered my name once I had entered it. (Up until then the only machines with any kind of memory that I had come in touch with were arcade games and my cousin's video game console, which was also the first electronic gaming device I ever played, back in 1978.)

In the early 1980s, computers were anything but commonplace in the Åland Islands, but I think that it was in 1983 that people became aware of them, and there was a budding interest to buy one, at least among us kids. It was my sister who wished for a home computer for Christmas, so the same year Santa gave us a ZX Spectrum. It came with a game called Thro' the Wall, an Arkanoid clone (that has inspired me to make my own clone "Wall" for all the different home computers I've had, ranging from Commodore 16 and Canon V-20 to Amiga 500 and Amiga 1200). Unfortunately, we only managed to load the game (delivered on a C cassette) like once or twice after several attempts. It turned out that the hardware was faulty and dad got a refund after first having had to complain a lot at the dealer (which went out of business some ten years ago), and then bought the Commodore the next Christmas.

Anyway, I wrote my first code on the ZX Spectrum. It doesn't really count as programming, as all I did was type in examples and run them. I do recall altering one example though, a program drawing the Swedish flag on the screen, by adding an inner red cross, thus turning it into the Åland flag. But with the Commodore 16 (which had an excellent Basic interpreter) I got started with programming almost immediately, and by the end of 1984 I had written my very first own Basic programs. In 1996 I got my first IT job, and am still a dev. So, what became of my childhood friend and neighbour?
He runs a successful computer dealership :)

-

Was forced to do some work on Windows this week (CAD tools that run only on Windows). I spent a few days just setting up the tools. There were quite a few things I realized I forgot about Windows (as compared to Linux).

1) Installation times are downright horrific. What exactly is the installer doing for 10 minutes?

2) .NET is a cluster fuck. Not even Microsoft's repair tool can fix it, but rather just hangs. I ended up using another tool to nuke it and reinstall.

3) Windows binary installs are insanely huge, and thus take forever to download.

4) The registry is a pointless database that must have been written in hell with the single intent of destroying users' will to live. The sole existence of the registry is another proof that completely incompetent engineers designed Windows.

5) Rebooting is the only way to solve many problems. This is another sure sign of a fundamentally fucked up OS design.

6) What the heck is wrong with the GUI designers? The control panel must be the worst design ever. There are so many levels to get to a particular setting I'm getting dizzy. The illogical organisation doesn't make anything better.

7) Windows networking. A perversion of the TCP/IP stack that makes it virtually impossible to understand a damn thing about the current network configuration. There are at least 3 different places that affect the settings.

8) Windows command prompt. Why did they even bother to leave it in? The interpreter is as intelligent as a retarded donut. You can't do anything with it, except type "exit" and Google for another solution.

9) Updates. Why does it take hundreds of updates per month to keep that thing safe?

10) Despite all the updates that are flying out of Redmond like confetti, it is still necessary to install antivirus to keep the damn thing safe. That costs extra money, and further costs you by degrading the performance of your hardware.

11) Windows performance. Software runs like it was swimming in molasses. The final stab in the back on your hardware investment, and pretty much sends performance on your hardware back a few hundred bucks more.

12) Closed source is evil. If something crashes consistently, you might find a forum that addresses the issues you have. Otherwise you're out of luck. On the other hand, it might be for the better. I imagine reading the code for Windows can lead to severe depression.

I'm lucky to be a Linux dev, and should probably not complain too much... But really, Windows, go get yourself hit by a truck and die. I won't miss you.14 -

Fuck fuck fuck fuck fuck fuck fuck fuck fuck fuck fuck fuck fuck fuck fuck fuck fuck fuck fuck fuck fuck fuck fuck fuck fuck fucking python interpreter versions !

I feel better now.1 -

So, this is probably somewhat esoteric but...

While studying at university I had a "programming paradigms" module, dunno why they called it that, it was more like "introduction to functional programming".

So, it's kinda mind bending, we'd only really started to get our heads around classical object oriented programming and they throw functional programming at us.

It's worse than that though, for did they use an established language, like lisp/scheme, functional Python, or even Haskell?

No, of course they didn't. They taught us Oz.

You probably won't have heard of it, but this language is burned into the back of my brain, along with a vague understanding of the n-queens problem we had to solve graphically (using qTk, which I dunno if someone took qt and tk and blended them, I stopped asking questions after a while).

To top it off did this language (at the time) have a stand alone interpreter? Did it buggery! It was coupled to the Mozart programming system, which is just Emacs (which has a bloody lisp built into it,so close, yet so far 😭).

It gets worse, though, oh does it get worse, for pause dear reader and consider: have you ever heard of Mozart/Oz before? I'd put money on most of you not having heard of it until today.

For, you see, I believe at the time of writing, one, yes, ONE text book exists on this language. When I was doing my assignment there were merely some published conference notes and language design documents.

That's not all, I was not the only one experiencing difficulties with this language, someone in the class ended up poring through the mailing lists and found the very tutor teaching the class struggling at first to understand the language.

I had to repeat that year. The functional programming class was one semester.

When I retook that year, it was a whole year long. However, halfway through the year, original tutor was fired and a new tutor was hired to teach the language.

He was, understandably, just as confused as we were.

There was a Starbucks and a pub equidistant from the lecture hall, though in opposite directions. From lecture to lecture we had no idea which one we'd end up in.

I have reason to believe Mozart/Oz is some sort of otherworldly abomination designed to give students the occasional nightmare flashback, long after they've left.

My room had post it notes, sheets of paper, print outs, diagrams, doodles and pens, just stuck to the wall, I looked like a raving lunatic three hours away from being institutionalised. There was string connecting one diagram to the next and images of a chess queen all over, as I attempted to solve the n-queens problem.

Madman's knowledge, I call it. I can never unlearn all that, in fact it seeps into much of the code I write. Such information was not meant for the mind of a simple country bumpkin such as myself...

Mozart/Oz... I wouldn't be the programmer I am today without it, and that's frankly terrifying...10 -

So.... yeah, making a Scratch clone (with more features) is frustrating and super hard.

Major problems include

- Drag&Drop from listbox to usercontrol - stress level : 3/10

- connect blocks when two blocks are close to each other - stress level : 10/10

- generate live code when there was a change in blocks editor - stress level : 9/10

- write a compiler or some interpreter that converts block code to real c# code - stress level : 10/10

- generate output by calling csc.exe - stress level : 1/10

- make code at least readable - stress level : 7/1014 -

Our CEO had a virtual town hall using Zoom and they now have a sign language interpreter box as a regular feature... To go along with all the Inclusion stuff...

The most immediate problem though is they didn't turn on auto-captions...

I don't know sign but am deaf so needed the captions which it turns out you can get using the Google Recorder app on Pixels. (This is literally like a fuck you to non-Pixel users and Zoom which disables Live Captions in conferences and recording full transcripts).

Anyway I left it on and near the end, a speaker was like "we're getting a lot of likes and positive feedback about the interpreter box! See how small changes make such a big difference?!"

And well of course in my mind I'm going "uh.... No."

I'll just go back to not caring about anything that isn't related to how much I make.2 -

Python sucks so hard!! Slow Interpreter, Broken Multithreading. It really sucks. Overrated language.10

-

So after exactly 30 minutes of building a basic interpreter (yes I'm back on the interpreter wagon) in python, I have once again come to the conclusion that python can go fuck itself with its backwards and inconsistent syntax with needlessly over worked and backwards data structures...

Would rather work in fucking COBOL at this stage...3 -

So, first time ranting, sorry if I mess anything up.

When I first started my current job and got introduced to the system we were coding in, something seemed a little fishy to me. Didn't like the system anyway, but at least the language is a compiled language, so it runs quite quickly, right?

In theory, yeah. If the lead dev liked the IDE that came with it. But he has to REALLY fucking hate it, because rather than using it, he codes in plaintext. No syntax highlighting, no auto-indent, nothing. And he's built the entire damn system around doing that. Sadly the compiler is only integrated into the IDE, so what do we do there? Copy the code from the plaintext file to the IDE to compile it there? No no, why would you. The language has a function you can use to compile some code at runtime.

And so he does. Every. Single. Fucking. Script. There's a single main script that runs and finds the correct textfile to then runtime-compile and execute. So we effectively made a compiled language into a massively unoptimized interpreted language.

I even mentioned that this might be a problem, but I was completely dismissed, so at that point it's not my problem anymore and I have then switched to a different system anyway.

Couple weeks later I heard the same guy complaining that the scripts were running almost the whole night so we'd probably need some better hardware or something.

Well if only there was a really obvious solution that would improve the performance by probably about a factor of 20 or so...12 -

My best dev-memories of 2016??

First, in February I won a competition (Jugend forscht) in which I had programmed an interpreter. And then, the first time my selfmade compiler actually printed hello world... -

I didn't leave, I just got busy working 60 hour weeks in between studying.

I found a new method called matrix decomposition (not the known method of the same name).

Premise is that you break a semiprime down into its component numbers and magnitudes, let's say 697 for example. It becomes 600, 90, and 7.

Then you break each of those down into their prime factorizations (with exponents).

So you get something like

>>> decon(697)

offset: 3, exp: [[Decimal('2'), Decimal('3')], [Decimal('3'), Decimal('1')], [Decimal('5'), Decimal('2')]]

offset: 2, exp: [[Decimal('2'), Decimal('1')], [Decimal('3'), Decimal('2')], [Decimal('5'), Decimal('1')]]

offset: 1, exp: [[Decimal('7'), Decimal('1')]]
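The source of `decon` isn't posted, so what follows is only a guess at its shape: a reconstruction in Python that reproduces the three lines printed above for 697. It uses plain ints instead of `Decimal`, returns the rows rather than printing them, and skipping zero digits is my own assumption.

```python
def prime_factors(n):
    """Return [[prime, exponent], ...] for n, by trial division."""
    factors = []
    p = 2
    while p * p <= n:
        exponent = 0
        while n % p == 0:
            n //= p
            exponent += 1
        if exponent:
            factors.append([p, exponent])
        p += 1
    if n > 1:
        factors.append([n, 1])  # whatever is left over is itself prime
    return factors


def decon(n):
    """Split n into its decimal components (697 -> 600, 90, 7) and factor each one."""
    digits = str(n)
    rows = []
    for i, ch in enumerate(digits):
        offset = len(digits) - i  # 1 = ones, 2 = tens, 3 = hundreds, ...
        component = int(ch) * 10 ** (offset - 1)
        if component:  # assumption: zero digits contribute nothing and are skipped
            rows.append((offset, component, prime_factors(component)))
    return rows


if __name__ == "__main__":
    for offset, component, exp in decon(697):
        print(f"offset: {offset}, component: {component}, exp: {exp}")
```

For 697 this gives 600 = 2^3 * 3 * 5^2, 90 = 2 * 3^2 * 5 and 7 = 7, matching the listing.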

And it turns out that in larger numbers there are distinct patterns that act as maps at each offset (or magnitude) of the product, mapping to the respective magnitudes and digits of the factors.

For example I can pretty reliably predict from a product, where the '8's are in its factors.

Apparently theres a whole host of rules like this.

So what I've done is gone and started writing an interpreter with some pseudo-assembly I defined. This has been ongoing for maybe a month, and I've had very little time to work on it in between shifts at my job (which I'm about to be late for here if I don't start getting ready, lol).

Anyway, long and the short of it, the plan is to generate a large data set of primes and their products, and then write a rules engine to generate sets of my custom assembly language, and then fitness test and validate them, winnowing what doesn't work.

The end product should be a function that lets me map from the digits of a product to all the digits of its factors.

It technically already works, like I've printed out a ton of products and eyeballed patterns to derive custom rules, it's just not the complete set yet. And instead of spending months or years doing that I'm just gonna finish the system to automatically derive them for me. The rules I found so far have tested out successfully every time, and whether or not the engine finds those will be the test case for if the broader system is viable, but everything looks legit.

I wouldn't have pursued this except when I realized the production of semiprimes *must* be non-Eulerian (long story), it occurred to me that there must be rich internal representations mapping products to factors, that we were simply missing.

I'll go into more details in a later post, maybe not today, because I'm working till close tonight (won't be back till 3 am), but after 4 1/2 years the work is bearing fruit.

Also, its good to see you all again. I fucking missed you guys.9 -

Compiling software on Linux:

Python interpreter? Easy peasy, just some dependencies here and there. Make does a good job.

Linux kernel? Piece of cake, 20 years of development will be freshly served on your machine after one hour compiling (I have a pretty powerful computer).

Tensorflow? Fuck this shit I am outta.

What is your story with self-built software? Which piece of code has the most terrible dependency hell?5 -

1. Build a language interpreter from scratch, without any tool like GNU Bison

2. Get comfortable with modern C++

3. Find an internship related to operating systems or other similar areas. -

Hey everyone!

I've created an interpreter that I am very proud of :)

It's based off the variation of Scheme (I think, maybe just Lisp) called Lel.

So my language is like a variation of a variation of Lisp... Written in an interpreter (Python).

I've got a wiki, and source code on GitHub:

https://github.com/coolq1000/...

Thanks for any feedback. And help appreciated!11 -

Suicide Linux:

You know how sometimes if you mistype a filename in Bash, it corrects your spelling and runs the command anyway? Such as when changing directory, or opening a file.

I have invented Suicide Linux. Any time - any time - you type any remotely incorrect command, the interpreter creatively resolves it into rm -rf / and wipes your hard drive.

It's a game. Like walking a tightrope. You have to see how long you can continue to use the operating system before losing all your data.

Please install: https://qntm.org/suicide -

Last night, while under the full belief that I could write a very simple Lisp interpreter, I was awake until stupid o'clock, and to my credit I got the tokenizer working and producing output from parsed code. It's really basic, but I was pleased to have gotten anything correctly parsed at that time.
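For anyone curious what a "really basic" tokenizer plus parser for a Lisp can look like, here is a rough Python sketch. It is not the code from this post (which isn't shown); the names `tokenize`, `parse` and `atom` are made up for the example, and it ignores strings, comments and quoting.

```python
def tokenize(source):
    """Split Lisp source into tokens by padding parentheses with spaces."""
    return source.replace("(", " ( ").replace(")", " ) ").split()


def atom(token):
    """Turn a token into an int or float if possible, else keep it as a symbol."""
    for convert in (int, float):
        try:
            return convert(token)
        except ValueError:
            pass
    return token


def parse(tokens):
    """Consume tokens from the front and build a nested list."""
    if not tokens:
        raise SyntaxError("unexpected end of input")
    token = tokens.pop(0)
    if token == "(":
        items = []
        while tokens and tokens[0] != ")":
            items.append(parse(tokens))
        if not tokens:
            raise SyntaxError("missing closing parenthesis")
        tokens.pop(0)  # drop the ")"
        return items
    if token == ")":
        raise SyntaxError("unexpected closing parenthesis")
    return atom(token)


tree = parse(tokenize("(+ 1 (* 2 3.5))"))
```

Here `tree` ends up as the nested list `["+", 1, ["*", 2, 3.5]]`, which is already enough structure to hang an evaluator on.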

But I'm also sitting outside my apartment waiting for a locksmith because lack of sleep left me unprepared to function correctly today and I'm now locked out...

Well done me! -

Sometimes I think "I should write a meaningful error message for this exception" and then I remember how useless 99% of the error messages of every compiler / interpreter I've ever had to use are, and decide "msg ='something went wrong'" is good enough.

-

Be me at work, 12h night shift, 4th day like that

Following online course on machine learning, instructor says we'll use Python 3.x as the interpreter for the project, boot personal laptop and start PyCharm, create the file, choose right interpreter, no big deal

pip install the modules I need for the course - done, try to import them.

Doesn't work, first reboot, still not working, browsing Internet for answers, no ideas, reboot again (you never know), reload PyCharm, browse Internet again, find out the modules only work on Python 2.7.

Wasted 45 minutes for this shit

Feels good bro.2 -

!rant Eeeeeeeeee!!!! The interpreter can now handle floats and integers! And accept the power operator! And modulo! (Sorry, absurdly excited)2

-

Over the summer I was recruited to be a supplement instructor for a data structures course. As a result of that I was asked (separately by the professor) to be a grader for the course. Because of pay limitations I've mostly been grading homework project assignments. In any case, it's a great job to get my foot into the department and get recognized.

Over the course of the semester I've had this one person, OSX, named after their operating system of choice, who has been giving me awkward submissions. On the first assignment they asked the professor for extra time for some reason or the other, and that's perfectly fine.

So I finally receive OSX's submission, and it's a .py file, as is par for the course. So I pop up a terminal in the working directory and type "python OSX_hw1.py". I get some error spit out about the file not being the right encoding. I know that I can tell python to read it in a different encoding, so I open it up in a text editor. To my surprise it's totally not a text file, but rather a .zip file!

I've seen weirder things done before, so no big deal. I rename the file extension, and open it up to extract the files when I see that there's no python files. "Okay, what's goin on here OSX..." I think to myself.

Poking around in the files it appears to be some sort of meta-data. To what, I had no clue, but what I did find was picture files containing what appeared to be some auto-generated screenshots of incomplete code. Since I'm one to give people the benefit of doubt even when they've long exhausted other peoples', I thought that it must be some fluke, and emailed OSX along with the professor detailing my issue.

I got back a rather standard reply, one of which was so un-notable I could not remember it if my life depended on it. However, that also meant I didn't have to worry about that anymore. Which when you're juggling 50 bazillion things is quite a relief. Tragically, this relief was short lived with the introduction of assignment 2.

Assignment 2 comes around, and I get the same type of submission from OSX. At this time I also notice that all their submissions are *very* close to the due time of 11:59pm (which I don't care about as long as it's in before people start waking up the next morning). I email OSX and the professor again, and receive a similar response. I also get an email from OSX worried about points being deducted. I reply, "No issue. You know what's wrong. Go and submit the right file on $CentralGradingCenter. Just submit over your old assignment".

To my frustration OSX claimed to not know how to do this. I write up a quick response explaining the process, and email it. In response OSX then asks if I can show them if they come to my supplemental lesson. I tell OSX that if they are the only person, sure, otherwise no because it would not be a fair use of time to the other students.

OSX ends up showing up before anyone else, so I guide them through the process. It's pretty easy, so I'm surprised that they were having issues. Another person then shows up, so I go through relevant material and ask them if they have any questions about recent material in class. That said, afterwards OSX was being somewhat awkward and pushy trying to shake my hand a lot to the point of making me uncomfortable and telling them that there's no reason to be so formal.

Despite that chat, I still did not see a resubmission of either of those two assignments, and assignment 3 began to show its head. Obviously, this time, as one might expect after all those conversations, I get another broken submission in the same format. Finally pissed off, I document exactly how everything looks on my end, how the file fails to run, how it's actually a zip file, etc, all with screenshots. That then gets emailed to the professor and OSX.

In response, I get an email from OSX panicking asking me how to submit it right, etc, etc. However, they also removed the professor from the CC field. In response I state that I do not know how to use whatever editor they are using, and that they should refer to the documentation in order to get a proper runnable file. I also re-CC the professor, making sure OSX's email to me is included in my reply.

OSX then shows up for one of my lessons, and since no one had shown up yet, I reiterate through what I had sent in the email. OSX's response was astonished that they could ever screw up that bad, but also admits that they had yet to install python(!!!). Obviously, the next thing that comes from my mouth is asking OSX how they write their code. Their response was that they use a website that lets them run python code.

At this point I'm honestly baffled and explain that a lot of websites like those can have limitations which might make code run differently than it should (maybe it's a simple interpreter written in JavaScript, or maybe it is real python, but how are you supposed to do file I/O?).

After that I finally get a submission for assignment 1! -

For the PHP pros: Is there a way of turning notices and warnings into exceptions thrown in the scope of occurrence without hacking the interpreter?

The answer most likely is "No!" - but if there is another way I certainly would like to know it...8 -

I do not understand people who critique Python for using indentation to mark code blocks. I've seen multiple posts being like "Uhh in Python a missing space breaks everything and it's so hard to find it". Yeah so hard when the interpreter throws a parse error and tells you exactly which line it's on. Let me go back to a bracefest language, misplace a brace halfway through the file, and get told there's a syntax error at the end, and spend a whole two minutes finding it.
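To make the "tells you exactly which line" point concrete, here's a small sketch using Python's built-in `compile`. The broken snippet and the helper name `find_indent_error` are just for illustration.

```python
# A function whose last line dedents to a level that matches nothing above it.
source = (
    "def f():\n"
    "    x = 1\n"
    "  return x\n"
)


def find_indent_error(src):
    """Return (line number, message) if src has an indentation error, else None."""
    try:
        compile(src, "<example>", "exec")
    except IndentationError as err:
        return err.lineno, err.msg
    return None


result = find_indent_error(source)
```

`result` carries the number of the offending line (the third one here) along with the parser's message, so there's no hunting through the file.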

-

When it comes to the idea of programming and magic, or the comparison of software developers/engineers, computer scientists etc. to magicians or wizards, nothing brings the idea closer to heart than the C programming language.

A while ago I read the R.A. Salvatore books concerning Drizzt, the dark elf. I loved the books, have not continued reading them but I remember them vividly. There was one book in which a human magician came about wielding extremely explosive magic; humans were capable of channeling large amounts of it through explosive and unwieldy ends.

This is the same feeling I get from C

Consider:

int items[] = {1, 2, 3};

printf("Third : %i\n", 2[items]);

and fuck me if shit like the above is not dangerous. It makes sense: the array name decays to a pointer to its first element, and a[i] is just *(a + i), so 2[items] is the same as items[2] and returns the third element of the array of 3 (index 3 would read past the end, and C will not stop you). But holy shit if I don't think this is dangerous and interesting as fuck

there are many more examples I have that I am finding through me fucking around with: language development (compiler, interpreter), kernel programming as well as net sec. C is the most powerful and devastating thing we have in our hands indeed.7 -

I recently started learning Erlang. This is the story of how I got trapped into it.

When I code, I usually use my trusty text editor and a terminal to either compile my code or run tests in the language interpreter. The interpreter, erl, works fine, but when I wanted to close it, I ran into a small issue.

Because I never know what the command is to close an interpreter, I usually use the EOF character (^D), which is widely recognized. Except erl does not react to it, not even a tiny message saying it won't close or doesn't recognize the input.

Alright then, let's try quit. That's an atom, it does not behave how I want.

quit() is an undefined shell command, exit() terminates the shell process but the interpreter automatically starts a new one...

But I get the welcome message, telling me to abort with ^G! Some progress, finally... except ^G redirects from Erlang interpreter to user switch command. Damn, another interpreter...

I ended up killing the process from another terminal.4 -

This afternoon, I was sitting in on a class, not really paying attention to what the teacher was saying, when all of a sudden out of nowhere the teacher shoots me with the question "what is 1260 divided by 30?" Thankfully I was messing with my python interpreter, so I just typed in 1260/30 and yelled (as if I knew the answer) "42!"

Genius.4 -

Good evening programmers, IT's, devranters and memeians.

I would like to use a little bit of your collective consciousness - the hive mind if you will.

I've been working on my automation system for quite a while and I've received some exposure from non-programmers - which resulted in more questions than suggestions.

I would like to ask you guys to give me some suggestions as to what I could add to my system.. that is, if you have time..

The program in short (if you don't want to read the readme file) is an automation system scriptable in pure Lua.

It utilizes Selenium for web automations, NAudio for audio operations and Moonsharp as an interpreter.

While my tester friends say that they use it for the actual testing, I myself found it very useful in writing bots (for browser games for example).

Here's the github link: https://bit.ly/2GDu92g

Thanks a ton!

PS. Here's an unrelated image to draw your attention. -

Why do people use Python for OS scripting? What’s the point in forcing me to download a proper interpreter to run a script when the same result can be achieved with a more portable and cleaner Bash/PowerShell script?!?!

-

Writing a full interpreter for a pseudo-assembly language.

It's kinda fun but the thing's a bit of a cunt of a problem because I only did this once before, almost a decade and a half ago.

Getting just the right format and deciding on the syntax is a slog. Just need something that's quick and sufficiently expressive because I'm not writing the assembly myself, I'm generating the assembly code that runs through the interpreter, so it has to be valid under a lot of conditions. -
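On the pseudo-assembly interpreter: as a rough idea of why generated code forces the interpreter to be defensive, here's a toy Python sketch. The instruction set (`LOAD`, `ADD`, `MUL`, `MOD`, `JNZ`, `HALT`), the register count and the step budget are all invented for this example and have nothing to do with the actual language in the post.

```python
# Hypothetical opcodes mapped to the number of operands each one takes.
OPS = {"LOAD": 2, "ADD": 2, "MUL": 2, "MOD": 2, "JNZ": 2, "HALT": 0}


def is_valid(program, num_regs=4):
    """Reject malformed generated programs before running them."""
    for instr in program:
        if not instr or instr[0] not in OPS or len(instr) - 1 != OPS[instr[0]]:
            return False
        op, args = instr[0], instr[1:]
        if not all(isinstance(a, int) for a in args):
            return False
        if op == "HALT":
            continue
        if not 0 <= args[0] < num_regs:
            return False
        if op in ("ADD", "MUL", "MOD") and not 0 <= args[1] < num_regs:
            return False
        if op == "JNZ" and not 0 <= args[1] < len(program):
            return False
    return True


def run(program, num_regs=4, max_steps=1000):
    """Run a validated program; return registers, or None on a fault or timeout."""
    regs = [0] * num_regs
    pc = 0
    for _ in range(max_steps):
        if pc >= len(program):
            return regs
        op, *args = program[pc]
        pc += 1
        if op == "HALT":
            return regs
        if op == "LOAD":
            regs[args[0]] = args[1]
        elif op == "ADD":
            regs[args[0]] += regs[args[1]]
        elif op == "MUL":
            regs[args[0]] *= regs[args[1]]
        elif op == "MOD":
            if regs[args[1]] == 0:
                return None  # modulo by zero counts as a fault
            regs[args[0]] %= regs[args[1]]
        elif op == "JNZ" and regs[args[0]] != 0:
            pc = args[1]
    return None  # step budget exhausted, probably an infinite loop
```

The step budget matters most when programs are machine-generated: a random `JNZ` can easily loop forever, and the fitness test needs an answer either way.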

If you've ever tried using Go plugins raise your hand.

If you've ever tried doing plugins in Go, raise your hand.

If you think that the following rant will be interesting, raise your hand.

If you raised your hand, press [Read More]:

This is a tale of pain and sorrow, the sorrow of discovering that what could be a wonderful feature is woefully incomplete, and won't be for a very long time...

Go plugins are a cool feature: dynamically load pre-compiled code, and interact with it in a useful and relatively performant way (e.g. for dynamically extending the capabilities of your program). So far it sounds great, I know right?

Now let me list off some issues (in order of me remembering them):

1. You can't unload them (due to some bs about dlopen), so you need to restart the application...

2. They bundle the stdlib like a regular Go binary, despite the fact that they're meant to be dynamic!

3. #2 wouldn't be so bad if they didn't also require identical versions of all dependencies in both binaries (meaning you'd need to vendor the dependencies, and also hope you are using the right Go version).

4. You need to use -trimpath or everything dies...

All in all, they are broken and no one is rushing to fix it (literally, the Go team said they aren't really supporting it currently...).

So what other options are there for making plugins in Go?

There's the Hashicorp method of using RPC, where you have two separate applications, one the plugin, one the plugin server, and they communicate over RPC. I don't like it. Why? Because it feels like a hack, it's not really efficient and it carries a fear of a limitation that I don't like...

Then we come to a somewhat more clever approach: using Lua (or any other scripting language), it's well known, it's what everyone uses (at least in games...). But, it simply is too hard to use, all the Go Lua VMs I could find were simply too hard to set up...

Now we come to the most creative option I've seen yet: WASM. Now you ask "WASM!? But that's a web thing, how are you gonna make that work?" Indeed, my son, it is a web thing, but that doesn't mean I can't use it! Someone made a WASM VM for Go, and the pros are that you can use any WASM supporting language (i.e. any/all of them). Problem: it's inefficient, a PITA to use, and also suffers from the same issues that were preventing me from using Lua.

Enter Yaegi, a Go interpreter created by the same guys who made (and named) Traefik. Yes, you heard me right, an INTERPRETER (i.e. like python) so while it's not super performant (and possibly suffering from large inefficiency issues), it's very easy to set up, and it means that my plugins can still be written in Go (yay)! However, don't think this method doesn't have its own issues, there's still the problem of effectively abstracting different types of plugins without requiring too much boilerplate (a hard problem that I'm actively working on, commits coming soon). However, this still feels to be the best option.

As you can see, doing plugins in Go is a very hard problem. In the coming weeks (hopefully), I'm going to (attempt to at least) benchmark all the different options, as well as publish a library that should help make using Yaegi based plugins easier. All of this stuff will go (see what I did there 😉) in a nice blog post that better explains the issues and solutions. But until then I have some coding to do...

Have a good night(/day)!13 -

Watching the small interpreter that I am building compile and run as I want it to is my big highlight, I am working on a project that a lot of people will hate really (I am trying to bring back VBScript for the web, but adding a ton of shit to it to make it a proper PHP alternative, this is a side project really)

But before that? Understanding the neckbeard rants in hacker news, legit, I used to browse there trying to find perspective of what experts would think, would not understand shit, eventually, skills came (and so did the degree) and I was able to fully understand them and even interact with them.

That also squashed all notions of impostor syndrome.2 -

Does anyone else get triggered when you use the python socket .recv() and the server does not return anything so the program just stays there indefinitely? For me, I can't even Ctrl+C it so I have to close the entire window. It's especially annoying when I start a server in the interpreter (quite a few lines) and I have to rewrite it afterwards.
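One way around the hang, sketched in Python: put a timeout on the socket so `.recv()` gives up instead of blocking forever. `settimeout` and `socket.timeout` are part of the standard `socket` module; the helper name `recv_with_timeout` is made up for the example.

```python
import socket


def recv_with_timeout(sock, nbytes, timeout_s):
    """Return received bytes, or None if nothing arrives within timeout_s seconds."""
    sock.settimeout(timeout_s)
    try:
        return sock.recv(nbytes)
    except socket.timeout:
        return None
```

With this, a silent server just produces `None` after the timeout and the interpreter session (and the server typed into it) survives.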

-

So decided to take a step back and build an interpreter for a custom version of BASIC for the cosmos kernel and a way to learn python but can we just take a moment to admire how BASIC is designed...

It is such a well designed, open and malleable language to design for specific needs...

Except visual basic, fuck visual basic, fuck it with a rake...4 -

Lua users, have you used moonscript?

It's a little language that has its own interpreter or can be compiled down to Lua and it's absolutely lovely (currently using it with Love2d).

Of course, as with most things, what I love about it also royally pisses me off sometimes.

For starters local has to be declared for variables, unlike lua.

Otherwise the variable goes to _

Also note, that some tutorials literally tell you the opposite.

all variables are local by default

unless you don't declare them

then they go to _ (throwaway)

Some tutorials get this wrong too.

all variables have to be declared local

except tables. failure to declare a table WITHOUT a local will cause things like

table.insert to fail with "nil" values for no god damn reason.

No tutorial I could find mentioned this.

Did you know we call methods with '\'?

By the way, we call methods with '\'.

Why? Who the fuck knows.

Does make writing web routes more natural though.

Variables in the parameters of new are declared and bound for you. Would have loved to know this beforehand instead of trying to bind to them like a fucking idiot.

Fat arrows are used to pass in self for methods.

Unless you're calling a method. Then you use backwards slash. This fact is unhelpful when you're a beginner and dealing with the differences between the *other* arrow, the backslash, the fat arrow, and the fact that functions can be called with or WITHOUT parenthesis.

And on that note..

While learning all this other shit, don't forget parenthesis are optional!

Except when they're not!

..Like when you have a function call among your arguments and have to disambiguate which args belong to the outer call and to the inner call! Why not just be fucking consistent?

But on the plus side, ":" is now used for what it should have been used for in the fucking beginning: binding values to keys.

And on the downside, it's in a language that's built on top of another language that uses it for fucking *method calls*, a completely different fucking usage.

And better still, to add to that brainfuckery that's lost in the mental translational noise like static on a fucking dialup modem, you define methods with the fat arrow. Wait, was that the single arrow or fat one? Yeah the fat one. Fuck. But not before you do THIS shit..

someShit: =>

yeah, you STILL include the god damn colon just so when you're coming from lua you can do a mental double take. "Why am I passing self twice? Oh right, because fuck me, I decided to use moonscript." It's consistent on that front but it also pisses me off.

A lot of these are actually quality of life improvements disguised as gotchas, but when you're two beers in to a 30 minute headscratcher it sure doesn't fucking feel like it.

Nevertheless, once I moved beyond the gotchas, it was like night and day. Sure moonscript takes a giant steaming dump all over the lua output, like a schizophrenic alcoholic athena from the head of zeus, but god damn, when it works it just WORKS.

Locals that act like locals? Check.

Sane OOP? Check.

Classes, constructors, easy access to class methods, iterators? Check, check, check, check, check.

I fucking hate ceremony. Configuration over convention is for cunts. And moonscript goes a long ways toward making lua less cunty.

If you've ever felt this way while using lua, please, give moonscript a try.

You'll regret it, but in a good way!6 -

So I'm gonna finish my interpreter. No matter how much fucking work it is to implement a proper cross platform terminal library, because all the existing ones suck. I'm gonna write a debugger for it.

And then I'm gonna learn to play the ukulele.

And I'm going to start a new project that may actually make me a good sum of money.

This is the plan. If I don't do this then literally fuck my life, I might as well jump off a bridge because I can't fucking do anything -

!rant...

...i am actually scared about posting this one... because... well, i've mentioned that language idea that i've been mucking around with "designing"... and... i have grand ideas, but no idea if i understand stuff and dev needs and stuff well enough to be doing what i'm doing right now in trying to put it into lang design....

...and posting it here is throwing myself into lion's den with almost nothing, and risking shame when someone who knows this stuff looks at it and laughs at me, realizing that it's utter bullshit that has no idea what it's doing, a perfect dunning-kruger example...

...and this fear is reinforced by the fact that the whole thing is still (about 5 years after i've been mucking around with it mildly) very much in flux containing lots of things i'm not sure about, undecided about, don't know enough about, don't realize the implications of, etc etc...

but... let's try it.

let's link this thing and let you probably tear me to shreds =D

(ignore the c# project, that's the example of what i was talking about regarding the parser, bullshit that kinda spins out into self-referential circles because although i understand the parser and interpreter theory, I wasn't able to transform any of it into practice yet)

https://github.com/sh-code/AsmOs44 -

Anyone able to link me to some good reading material on compilers, interpreters, emulation and CPU design?

Keen to actually get some of this knowledge under my belt, don't mind if it requires a little investment as long as it isn't written in (fucking) python, preferably C if anyone knows of anything.

Thanks guys! :-D9 -

Last year, I made an application of the A* maze-solving algorithm in class. I used a linked list and my friends used arrays. Their algorithms were way faster than mine (I remade it later :p).

OK I understand that accessing memory by address is way faster than accessing by iteration, but I also see that python lists or C# lists are really fast. How is it possible to make a list performance-proof like this? Does the python interpreter do a realloc each time you append or pop a value? -
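On the realloc question in the A* post: in CPython a list is a dynamic array that over-allocates, so most appends don't trigger a reallocation. A small sketch to see that for yourself; the helper name is made up, and `sys.getsizeof` reporting the allocated size is a CPython implementation detail, so treat this as an experiment rather than a guarantee.

```python
import sys


def count_resizes(n):
    """Append n items and count how often the list's allocated size changes."""
    items = []
    last_size = sys.getsizeof(items)
    resizes = 0
    for i in range(n):
        items.append(i)
        size = sys.getsizeof(items)
        if size != last_size:
            resizes += 1
            last_size = size
    return resizes


resizes = count_resizes(10_000)
```

On CPython `resizes` comes out far smaller than the number of appends, which is why appending is amortized constant time while indexing stays a plain address computation.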

I've ranted about this before, but here we go again:

Go Plugins.

I was racking my brains trying to figure out how one could possibly implement plugins easily in Go.

I had a look at using RPC, which requires far too much boilerplate to be realistic. I looked at using Lua, but there doesn't seem to be a straightforward way of using it. I was even about to go with using WASM (yes, WASM). But then I came across Yaegi ("Yet another elegant Go interpreter", you heard right: "interpreter"). Yaegi is also very easy to use.

There are a few issues (including some I haven't solved yet), including flexibility (multiple types of plugins), module support, etc. Fortunately, Traefik just released their plugin system which is based on Yaegi (same company), and I got to learn a few tricks from them.

Here's how module loading works: The developer vendors their dependencies and pushes them to a repo. The user downloads the repo as a zip and saves it to the plugins folder. I hash the zip, unzip it to a cache, and set the GOPATH for the interpreter to be that extracted folder. I then load the module (which is defined by a config file in the folder), and save it for later. This is the relatively easy part.
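The hash-then-unzip-to-cache step can be sketched like this. Note the sketch is in Python rather than Go, purely to illustrate the idea; the function name, the choice of SHA-256 and the cache layout are assumptions, not how the actual project does it.

```python
import hashlib
import zipfile
from pathlib import Path


def extract_plugin(zip_path, cache_root):
    """Unzip a plugin archive into a cache folder named after the archive's hash."""
    digest = hashlib.sha256(Path(zip_path).read_bytes()).hexdigest()
    target = Path(cache_root) / digest
    if not target.exists():
        # Only extract when this exact archive hasn't been seen before.
        with zipfile.ZipFile(zip_path) as archive:
            archive.extractall(target)
    return target
```

Keying the cache on the hash means an updated zip lands in a fresh folder, while an unchanged one is reused without extracting again. The returned folder is what would then be handed to the interpreter as its GOPATH.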

The hard part is allowing for different types of plugins. It looks easy, but Go has a strict typing system, which makes things complicated. I'm in the process of solving this problem, and so far it should go like this: Check that the plugin fits an arbitrary interface, and if it does, we're good to go. I will just have to apply the returned plugin to that interface. I don't like this method for a few reasons, but hopefully with generics it will become a bit more clean. -

i adore compiler based programmers. yesterday i changed a bit of python code without being able to run it only to find out later i wasn't even able to spell True and False correctly.7

-

How meta will it be when I get close to finishing the interpreter for the language I'm building, and I use my OWN language for all my future projects?2

-

the one that exists (c#) seems underused compared to where it could (or even should) be used. and the place that uses it the most (enterprise) butchers and mangles its use, just as enterprise tends to do with everything.

the one that i'm designing... the fact that it doesn't exist yet, and that even as i'm zeroing in on syntax and philosophy that i'm very much starting to be proud of, i still don't have a proper idea of how to implement even the most basic parser/interpreter for it, not because it's in any way difficult or unusual, but just because... i've never done that before, so i get into weird circular thought paths that produce weird nonsensical code...

... on top of that, i still only have a very, very fuzzy idea of how will it (sometime in extremely distant future) actually implement the most interesting and core feature - event-based continuous (partial) re-parsing of the source code and the fact that traversing the tokens at the leaf level of the syntax tree should result in valid machine code (or at least assembly) that is the "compiled" program.

i *know* it's possible, i just don't yet know enough to have a concrete idea how exactly to achieve it.

but imagine - a programming language where interactive programming is basically the default way of working, and basically the same as normal programming in it, except the act of parsing is also the (in-memory) compilation at the same time, so it's running directly on the hardware instead of via interpreter/vm/any of that overhead crap.

also then kinda open-source by definition.

and then to "only" write an OS in that, and voilà! a smalltalk-like environment with non-exotic, c-family syntax and actual native performance!

ahhh... <3

* a man can dream *2 -

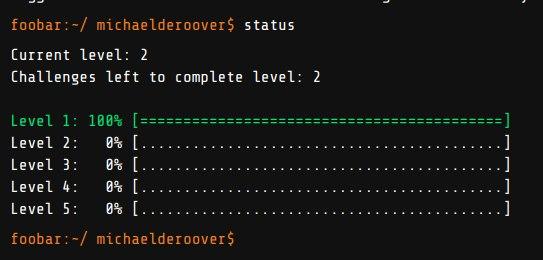

Just remembered that I still had a foobar invite link in my email inbox 😋

The challenges are odd though, first challenge was super easy (basically an idiot check), but while I was able to convert 3 cans of energy drink into a functional solution in half an hour, the verification utility is not very verbose at all. So in Python 3.7.3 in my Debian box it worked just fine, yet the testing suite in Foobar was failing the whole time. After sending an email to my friend that gave the link (several years ago now, sorry about that! 😅) asking if he knew the problem, I found out that Google is still using Python 2.7.13 for some reason. Even Debian's Python is newer, at 2.7.16. To be fair it does still default to Python 2 too. But why.. why on Earth would you use Python 2.7 in a developer oriented set of challenges from a massive company, in 2020 when Python 2 has already been dead for almost a whole year?

But hey now that it's clear that it's Python 2.7, at least the next challenges should be a bit easier. Kind of my first time developing in SnekLang regardless actually, while the language doesn't have everything I'd expect (such as integer square root, at least not in Debian or the foobar challenge's interpreter), its math expressions are a lot cleaner than bash's (either expr or bc). So far I kinda like the language. 2-headed snake though and there's so much garbage for this language online, a lot more than there is for bash. I hate that. Half the stuff flat out doesn't work because it was written by someone who requires assistance to breathe.
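On the missing integer square root: `math.isqrt` only arrived in Python 3.8, so neither 2.7 nor 3.7 has it. A hand-rolled version using Newton's method on integers is short enough. This is a generic sketch (the name `isqrt` is just chosen to mirror the later standard-library function), and it avoids floats so it stays exact for huge numbers.

```python
def isqrt(n):
    """Return the largest integer r with r * r <= n, using integer Newton steps."""
    if n < 0:
        raise ValueError("isqrt() argument must be non-negative")
    if n == 0:
        return 0
    # Start from a power of two that is guaranteed to be >= sqrt(n).
    x = 1 << ((n.bit_length() + 1) // 2)
    while True:
        y = (x + n // x) // 2
        if y >= x:
            return x
        x = y
```

It only uses `//` and `int.bit_length`, both of which exist in Python 2.7 as well, so it should drop into either environment.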

Meh, here's to hoping that the next challenges will be smooth sailing :) after all most of the time spent on the first one (17.5 hours) was bottling up a solution for half an hour, tearing my hair out for a few hours on why Google's bloody verification tool wouldn't accept my functioning code (I wrote it for Python 3, assuming that that's what Google would be using), and 10 hours of sleep because no Google, I'm not scrubbing toilets for 48 hours. It's fair to warn people but no, I'm not gonna work for you as a cleaning lady! 😅

Other than the issues that the environment has, it's very fun to solve the challenges though. Fuck the theoretical questions with the whiteboard, all hiring processes should be like this! -

Ahhh i don't even want to remember... Dynamic text interpreter that will translate a PDF+excel to a dynamic data structure that will accommodate any changes... Where is my gun again?!

-

Shit bathed and stack smashing ass loads of fuck.

I wrote a virtual machine, and just to fuck myself harder, I make the decision of applying some fancy dumbass theories of mine. This translates to a piece of shit modular design that works exactly as intended, but constantly gives me vietnam flashbacks to the horrifying, multiple concurrent instances of my younger mind being incessantly turbo-raped by the dozen object-obsessed pedophiles that I initially studied under.

Now, were they *actual* pedophiles? No, of course not. But I have to make fun of the acronym somehow and that's what came to mind, leaking horse dung all over the walls, floor, curtains and carpets.

Anyway, I feel so smart after this traumatic experience I just have to keep doing it to relive the terror once again. Find me in the corner, laying down in the fetal position, sobbing until the tears build up and drown me in this well of despair, or rather this finely shit painted portrait of a toilet in a lonely and stinking unisex public bathroom stall.

But let me squeeze these fucking tits a little bit harder, because that's my actual day job. That's right. I get PAID for slapping around mammary glands, it's not much but it's an honest living.

So where was I? Ah, yes, absolute degeneration. I'm truly the Max Wright of programming, mostly for smoking crack and having unprotected sex with homeless people, but also for keeping alien life forms in my basement that go out at night to hunt for sweet feline delight.

But as I keep going, I decide I want a language for the machine so I don't have to punch bits by hand all fucking day like an idiot, so alright let's make a small assembler for this shit... oh, right, except it's not small, because gently suckle the bile out the lips of my fucking butthole.

I may redefine a load of shit two months down the line, so I have to make everything perfectly encapsulated and easily fucked with -- which in my licking vomit off the floor of a porn theater travesty of a case means I'm generating half the code and scrambling as hard as I can to glue everything together.

Does it work? Of course it works, I'm Max Wright bitch. I can redefine the ISA all I want, anytime I want without breaking anything because of my pristine crackhead encapsulation. And to credit the scrambled eggs I have for fucking brains, it's not even *that* complex.

The problem is I keep forgetting shit, not how it works, just that it's there. So I forget that I have a virtual machine, and I forget that I have an assembler, and so I spend an entire day trying to figure out how the fuck I'm going to handle a loop inside an unrelated interpreter.

By the time I manage to remind the drooling undead jackass that is this husk that my irredeemably demonic self inhabits, that we can easily solve this by using the tools we've already built, it's so late and we're so tired there's not much we can do. All this time, WASTED.

Which circles back to crack. Are you tired of blowing your babysitter for cash? Have you considered suicide by a thousand used trojan condoms? Is your roommate possessed by the forces of Avernum, and now seeking all-destructive vengeance against your rectum?

Try no other than Soul Excision, the treatment that will neuter your being and curse it to the TRUEST form of eternal damnation! Through Soul Excision, you will be CUT OFF from the very essence of the universe, and turned into an astral prostitute that offers their EVERY orifice to the BUTTLOADS of maggots that devour their mind and body, all for the pleasure of some rich and powerful wankers that *deeply* enjoy watching questionable erotic tapes from nightmarish outer dimensions!

Use my promo code SLUTSKANK for 20% OFF in your very LAST purchase on this earth! And once you surrender your BODILY holes to cosmic oblivion, remember: when it comes to your ASS, we're ALWAYS open for business!

Thanks to Soul Excision for sponsoring this DDDDDDDDDDDDDDDDDDDDD$$$$$"2402"$$?"="$0"?¿"=¿?40'0"$="¿¿=$¿"?=4¿?"$="?¿$="¿?$0¿?"=$¡'0$"¿?$=::::::

:~%4 -

Today I watched "the birth and death of js":

https://destroyallsoftware.com/talk...

Here Gary Bernhardt talks about compiling executables to asm.js and about running the compiled files using a js interpreter that can be included in the kernel.

Eventually, some responsibility can be moved from the kernel to this interpreter, responsibility like virtual memory and trap management.

This speech aims to be fun, so not everything should be taken seriously...

but...

but...

(Forgive me)

...this trick seems to be a nice idea, and projects like Node OS work likewise.

So now, would you even consider this? Or is it nothing more than the craziness of a madman?1 -

I had the idea that part of the problem of NN and ML research is we all use the same standard loss and nonlinear functions. In theory most NN architectures are universal approximators. But there's a big gap between symbolic and numeric computation.

But some of our bigger leaps in improvement weren't just from new architectures, but entire new approaches to how data is transformed, and how we calculate loss, for example KL divergence.
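Since KL divergence came up, here's the discrete form as a tiny sketch, D_KL(P || Q) = sum over i of p_i * log(p_i / q_i). The function name and the handling of zero probabilities are my own choices for this example, not from any particular library.

```python
import math


def kl_divergence(p, q):
    """Discrete KL divergence D_KL(P || Q) in nats for two probability vectors."""
    if len(p) != len(q):
        raise ValueError("p and q must have the same length")
    total = 0.0
    for p_i, q_i in zip(p, q):
        if p_i == 0.0:
            continue  # by convention, 0 * log(0 / q) contributes nothing
        if q_i == 0.0:
            return math.inf  # P puts mass where Q puts none
        total += p_i * math.log(p_i / q_i)
    return total


p = [0.5, 0.5]
q = [0.25, 0.75]
print(kl_divergence(p, q), kl_divergence(q, p))
```

Note it isn't symmetric: swapping `p` and `q` gives a different number, which is part of why it behaves differently from a plain distance-style loss.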

And it occurred to me all we really need is training/test/validation data and with the right approach we can let the system discover the architecture (been done before), but also the nonlinear and loss functions itself, and see what pops out the other side as a result.

If a network can instrument its own code as it were, maybe it'd find new and useful nonlinear functions and losses. Networks wouldn't just specify a conv layer here, or a maxpool there, but derive implementations of these all on their own.

More importantly with a little pruning, we could even use successful examples for bootstrapping smaller more efficient algorithms, all within the graph itself, and use genetic algorithms to mix and match nodes at training time to discover what works or doesn't, or do training, testing, and validation in batches, to anneal a network in the correct direction.

By generating variations of successful nodes and graphs, and using substitution, we can use comparison to minimize error (for some measure of error over accuracy and precision), and select the best graph variations, without strictly having to do much point mutation within any given node, minimizing deleterious effects, sort of like how gene expression leads to unexpected but fitness-improving results for an entire organism, while point-mutations typically cause disease.

It might seem like this wouldn't work out the gate, just on the basis of intuition, but I think the benefit of working through node substitutions or entire subgraph substitution, is that we can check test/validation loss before training is even complete.
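To make the substitution idea concrete, here's a toy sketch: one "node" whose nonlinearity gets swapped for each candidate, scored on validation data, and the best variant kept. Everything here (the candidate set, the squared-error measure, the names) is an assumption for illustration. The real thing would substitute whole subgraphs, not a single function.

```python
import math

# Hypothetical pool of candidate nonlinearities a node could be swapped to.
CANDIDATES = {
    "relu": lambda x: max(0.0, x),
    "tanh": math.tanh,
    "identity": lambda x: x,
    "square": lambda x: x * x,
}


def validation_error(fn, data):
    """Mean squared error of a candidate function on (input, target) pairs."""
    return sum((fn(x) - y) ** 2 for x, y in data) / len(data)


def best_substitution(candidates, data):
    """Score every candidate by substitution and return the best name plus all scores."""
    scores = {name: validation_error(fn, data) for name, fn in candidates.items()}
    best = min(scores, key=scores.get)
    return best, scores


# Toy validation set whose targets happen to follow max(0, x).
validation = [(x / 2.0, max(0.0, x / 2.0)) for x in range(-6, 7)]
best_name, scores = best_substitution(CANDIDATES, validation)
print(best_name, scores)
```

No point mutation inside any node here, just whole-node swaps compared on held-out data, which is the part that can be checked before training finishes.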

If we train a network to specify a known loss, we can even have that evaluate the networks themselves, and run variations on our network loss node to find better losses during training time, and at some point let nodes refer to these same loss calculation graphs, within themselves, switching between them dynamically via variation and substitution.

I could even envision probabilistic lists of jump addresses, or mappings of value ranges to jump addresses, or having await() style opcodes on some nodes that upon being encountered, queue-up ticks from upstream nodes whose calculations the await()ed node relies on, to do things like emergent convolution.
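A rough sketch of the two jump flavours, purely hypothetical and not taken from the pastebin: a range-mapped jump that picks an address from where a value falls, and a probabilistic jump that samples an address from weights. The function names and the address numbers are made up.

```python
import random
from bisect import bisect_right


def make_range_jump(boundaries, addresses):
    """Map value ranges to jump addresses.

    boundaries must be sorted; addresses needs one more entry than boundaries.
    A value v jumps to addresses[k], where k counts the boundaries <= v.
    """
    if len(addresses) != len(boundaries) + 1:
        raise ValueError("need exactly one more address than boundaries")

    def jump(value):
        return addresses[bisect_right(boundaries, value)]

    return jump


def probabilistic_jump(addresses, weights, rng):
    """Sample one jump address according to the given weights."""
    return rng.choices(addresses, weights=weights, k=1)[0]


range_jump = make_range_jump([0.0, 1.0], [10, 20, 30])
print(range_jump(-0.5), range_jump(0.5), range_jump(2.0))

rng = random.Random(0)  # seeded so runs are repeatable
print(probabilistic_jump([10, 20], [0.9, 0.1], rng))
```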

I've written all the classes and started on the interpreter itself, just a few things that need to be fleshed out now.

Here's my shitty little partial sketch of the opcodes and ideas.

https://pastebin.com/5yDTaApS

I think I'll teach it to do convolution, color recognition, maybe try mnist, or teach it step by step how to do sequence masking and prediction, dunno yet.6 -

!rant

...so I started learning F#... again, second attempt, first one failed like 3 years ago because I had no real usecase appropriate for the language, so I didn't get it.

...but now I'm trying to make my own language, meaning also (wanting) parser/interpreter/compiler, and I found a lecture where a dude shows off THREE he wrote (within 24 hours) for three different languages...

...and it showed me that doing my parser/interpreter/compiler in F#, using pattern matching, is going to be incredibly awesome, as opposed to doing it as string parsing in C#...6 -

"Programming is a craft. At its simplest, it comes down to getting a computer to do what you want it to do (or what your user wants it to do). As a programmer, you are part listener, part advisor, part interpreter, and part dictator. You try to capture elusive requirements and find a way of expressing them so that a mere machine can do them justice. You try to document your work so that others can understand it, and you try to engineer your work so that others can build on it. What's more, you try to do all this against the relentless ticking of the project clock. You work small miracles every day.

It's a difficult job."

- The Pragmatic Programmer -

I'm studying atm and I survived Haskell, SKI, ... now, in the second semester we started with Python (yeay ♡) and Java (that's fine).

One of the first exercises is about installing Jython ('cause it's good, right? /sarcasm off), using the lecturer's module and writing some code for it. It's about painting some shitty graphics *gasp*...

I use PyCharm (not really necessary for these crappy exercises) and programming on Windows and/or Linux.

Downloaded Jython, installed it, set it as interpreter - works fine (win10, pycharm).

Some students got weird errors using linux - for me it's the same but meh Idc.

Today I tried using Jython on my notebook, too (win10, pycharm). Downloaded it from the Jython Project website. Can't update pip, can't run modules - error is about fckin charsets...

Some other student figured out - wrong version of Jython. The newer version has some bug fixes.

2.7.1 is the one and only - the download section of their website offers 2.7.0 as latest release...
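If you want to check which interpreter and version you actually ended up with, something like this sketch should do. It sticks to syntax that also runs on Python 2.7, so it ought to work under Jython as well, though I've only reasoned about that and not tried it there. The helper names are made up.

```python
import platform
import sys


def interpreter_info():
    """Return (implementation name, (major, minor, micro)) of the running interpreter."""
    return platform.python_implementation(), tuple(sys.version_info[:3])


def is_at_least(required, actual=None):
    """True if the given (or the running) version tuple is >= the required one."""
    if actual is None:
        actual = tuple(sys.version_info[:3])
    return tuple(actual) >= tuple(required)


name, version = interpreter_info()
print("%s %d.%d.%d" % ((name,) + version))
print("at least 2.7.1: %s" % is_at_least((2, 7, 1)))
```

`platform.python_implementation()` reports the implementation name, so it tells you whether the IDE is really running Jython or quietly fell back to something else.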

So - how to know there is a version 2.7.1?

#1 version control website = Wikipedia

So... there is a blog, guy's writing about this release - this installer is hosted at maven central. Yeay. Obvious. Thanks.

Can't describe such stupidity - maybe it's the user again 😂 -

A: [technology] really sucks. For example, it does this thing where [shitty thing].

B: So just because [shitty thing], you throw out the entire [technology]?

No you dimwit. It's because of all the other things that led up to this point. This new bullshit is just being tossed on top of the mountain of other bullshit.

I'm not going to list off all 5000 reasons why Cobol-interpreter-using-C-preprocessor is absolute garbage when "it's cobol implemented in the C preprocessor" is what I'm ranting about *now*.

Pedantic twats.1 -

What's the strangest Assembler or Pseudo-Assembler you've ever encountered?

I wrote a Z-machine (Infocom's virtual text adventure interpreter) and it was quite an interesting interpretation of bytes:

- The first 3 bits of an instruction tell you the opcode category, the rest are the instruction

- The 2nd (and maybe 3rd) byte tell you in 2-Bit chunks the operand types.

- text is encoded in 5-Bit chunks, with special characters for CapsLock that double function as padding (if your text doesn't align with the 3 letters per 2 bytes).

- and of course there are 5 different versions that all work slightly differently (as in CapsLock becomes Shift or "this special character isn't in use anymore")3 -
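For the curious, a stripped-down sketch of the two bit-fiddly parts above: unpacking the 5-bit text chunks (three per 2-byte word, with the top bit of a word marking the end of the string) and splitting an operand-type byte into 2-bit fields. It assumes the later-version behaviour where characters 4 and 5 are one-shot shifts, only maps the lowercase and uppercase alphabets (anything from the third alphabet comes out as "?"), and skips abbreviations entirely, so treat it as an illustration and not a working decoder.

```python
A0 = "abcdefghijklmnopqrstuvwxyz"  # default alphabet, Z-chars 6..31
A1 = A0.upper()                    # selected by shift character 4

OPERAND_TYPES = {
    0b00: "large constant",
    0b01: "small constant",
    0b10: "variable",
    0b11: "omitted",
}


def unpack_zchars(data):
    """Split 2-byte words into three 5-bit Z-characters each; top bit ends the text."""
    zchars = []
    for i in range(0, len(data) - 1, 2):
        word = (data[i] << 8) | data[i + 1]
        zchars.extend([(word >> 10) & 0x1F, (word >> 5) & 0x1F, word & 0x1F])
        if word & 0x8000:  # end-of-string marker
            break
    return zchars


def decode_letters(zchars):
    """Simplified decoding: space, letters and one-shot shifts only."""
    out = []
    alphabet = A0
    for z in zchars:
        if z == 4:
            alphabet = A1    # next character is uppercase
            continue
        if z == 5:
            alphabet = None  # third alphabet is not modelled in this sketch
            continue
        if z == 0:
            out.append(" ")
        elif z >= 6:
            out.append(alphabet[z - 6] if alphabet else "?")
        # Z-chars 1..3 (abbreviations) are ignored here
        alphabet = A0        # shifts only affect one character
    return "".join(out)


def operand_types(type_byte):
    """Read operand types in 2-bit chunks from the top; 'omitted' ends the list."""
    types = []
    for shift in (6, 4, 2, 0):
        kind = (type_byte >> shift) & 0b11
        if kind == 0b11:
            break
        types.append(OPERAND_TYPES[kind])
    return types


encoded = bytes([0x35, 0x51, 0xC6, 0x85])  # "hello" plus one padding 5
print(unpack_zchars(encoded))
print(decode_letters(unpack_zchars(encoded)))
print(operand_types(0b01101111))
```

The trailing 5 in the second word is exactly the "shift doubles as padding" trick: five letters don't fill two words, so the last slot gets a shift that never applies to anything.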

I am some Kind of angry right now.

Some of you may know the App "Jodel" (for those who don't: it is an app which lets you talk to strangers in your city/near your location)

I am in an informatics-Channel and I feel a bit annoyed.

There is a groundless hate against JavaScript or Java, it seems because... People feel cool? It reminds me of the PHP-Hate. Clueless people are talking shit, even if the web is not even their programming-field of activity.

Someone just said that in js you can do any shit and it works.

- you can leave out semicolons. wow.

Another one said that one problem is the illogical backwards-compatibility. "You have to look if the script is running on the browsers and on your engine."

- Isn't that part of any programming language? To see if it works?

I don't know what to say right now.

#ilovejs

Uhm btw.: Can someone explain to me what he meant by "engine"? I mean there is an interpreter, but "engine"?!10 -

My work product: Or why I learned to get twitchy around Java...

I maintain a Java-based test system that tests a raster image processor. The client is a Java Swing project that contains CORBA bindings to the internal API of the raster image processor. It also has custom-written UI elements and duplicates functionality that became available in later versions of Java. Some of the third-party tools we use don't work with later versions of Java for some reason, so it's not possible to upgrade Java to gain things as simple as recursive directory deletion. Yes, the version of Java we have to use does not support something as simple as that, and custom code had to be written to support it.

Because of the requirement to build the API bindings along with the client the whole application must be built with the raster image processor build chain, which is a heavily customised jam build system. So an ant task calls out to execute a jam task and jam does about 90% of the heavy lifting.

In addition to the Java code there's code for interpreting PostScript files, as these can be used to alter the behaviour of the raster image processor during testing.

As if that weren't enough, there's a beanshell interface to allow users to script the test system, but none of the users know Java well enough to feel confident writing interpreted Java scripts (and that's too close to JavaScript for my comfort). I once tried swapping this out for the Rhino JavaScript interpreter and got all the verbal support in the world but no developer time to design an API that'd work for all the departments.

The server isn't much better though. It's a Tomcat-based application that was written by someone who had never built a Tomcat application before, or any web application for that matter. It uses raw SQL strings instead of an ORM, it doesn't use MVC in any way, and an insane amount of functionality is dumped into the JSP files.

It too interacts with a raster image processor to create difference masks of the output, running PostScript as needed. It spawns off multiple threads and can spend days processing hundreds of gigabytes of image output (depending on the size of the tests).

We're stuck on Tomcat seven because we can't upgrade beyond Java 6, which brings all manner of security issues, but that eager little Java updater will break the tool chain if it gets its way.

Between these two components we have the Java RMI server (sometimes) working to help generate image data on the client side, before all images are pulled across a UNC network path onto the server that processes test jobs (in PDF format). The server reads into the xref table of said PDF, finds the embedded image data (the test files our server consumes are just flate-encoded TIFF files wrapped in just enough PDF to make them valid) and uses a tool to create a difference mask of two images.

This tool is very error prone, it can't difference images of different sizes, colour spaces, orientations or pixel depths, but it's the best we have.
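To illustrate why mismatched sizes are a hard stop, here's a toy sketch of a per-pixel difference mask. This is not how our tool works internally, just the simplest version of the idea, with images as nested lists of pixel values and a made-up function name.

```python
def difference_mask(image_a, image_b):
    """Return a mask with 1 where the pixels differ and 0 where they match.

    Both images are lists of rows of comparable pixel values and must have
    exactly the same dimensions, otherwise there is nothing to line up.
    """
    same_height = len(image_a) == len(image_b)
    same_widths = same_height and all(
        len(row_a) == len(row_b) for row_a, row_b in zip(image_a, image_b)
    )
    if not same_widths:
        raise ValueError("images must have identical dimensions")
    return [
        [1 if pixel_a != pixel_b else 0 for pixel_a, pixel_b in zip(row_a, row_b)]
        for row_a, row_b in zip(image_a, image_b)
    ]


expected = [[0, 255], [10, 10]]
actual = [[0, 254], [10, 10]]
print(difference_mask(expected, actual))
```

A pixel-by-pixel comparison has no answer for "which pixel in B corresponds to this pixel in A" once sizes, orientations or depths differ, so something has to normalise the images first or the comparison has to bail out.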

The tool is installed on both the client and the server. If the client can generate images, it'll ask the server which ones it needs to; if it can't, the server will use the tool itself.