Search - "scraping"

A client wanted me to make a website that compared the user's face to that of a wrestler. We had done a lot of work, but now he wants to switch it...

to pornstars.

So, I guess the next week of my work is gonna be scraping porn websites. NSFW, for work.

Example #1 of ??? Explaining why I dislike my coworkers.

[Legend]

VP: VP of Engineering; my boss’s boss. Founded the company, picked the CEO, etc.

LD: Lead dev; literally wrote the first line of code at the company, and has been here ever since.

CISO: Chief Information Security Officer — my boss when I’m doing security work.

Three weeks ago (private Zoom call):

> VP to me: I want you to know that anything you say, while wearing your security hat, goes. You can even override me. If you need to hold a release for whatever reason, you have that power. If I happen to disagree with a security issue you bring up, that’s okay. You are in charge of release security. I won’t be mad or hold it against you. I just want you to do your job well.

Last week (engineering-wide meeting):

> CISO: From now on we should only use external IDs in urls to prevent a malicious actor from scraping data or automating attacks.

> LD: That’s great, and we should only use normal IDs in logging so they differ. Sounds more secure, right?

> CISO: Absolutely. That way they’re orthogonal.

> VP: Good idea, I think we should do this going forward.

Last weekend (in the security channel):

> LD: We should ONLY use external IDs in urls, and ONLY normal IDs in logging — in other words, orthogonal.

> VP: I agree. It’s better in every way.

Today (in the same security channel):

> Me: I found an instance of a plain ID being used in a URL that cancels a payment. A malicious user who has, or gains, access to <user_role> could very easily abuse this to cause substantial damage. Please change this instance and others to use external IDs.

> LD: Whoa, that goes way beyond <user_role>

> VP: You can’t make that decision, that’s engineering-wide!

Not only is this sane security practice, you literally. just. agreed. with this on three separate occasions in the past week, and your own head of security proposed this before I even brought it up! And need I remind you that it is still standard security practice!?

But nooo, I’m overstepping my boundaries by doing my job.

Fucking hell, I hate dealing with these people.
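For reference, the rule the CISO proposed (opaque external IDs in URLs, internal IDs only in logs) can be sketched roughly like this in Python. It's a minimal in-memory illustration, not anyone's actual code: `IdMapper`, `cancel_payment_url`, `log_line` and the `/payments/.../cancel` route are all hypothetical, and a real system would persist the mapping in its database.

```python
import secrets


class IdMapper:
    """Hypothetical in-memory map between internal integer IDs and opaque external IDs."""

    def __init__(self) -> None:
        self._to_external: dict[int, str] = {}
        self._to_internal: dict[str, int] = {}

    def external_id(self, internal_id: int) -> str:
        # Issue a random, unguessable token the first time an internal ID is exposed,
        # then keep returning the same token for that ID.
        if internal_id not in self._to_external:
            token = secrets.token_urlsafe(16)
            self._to_external[internal_id] = token
            self._to_internal[token] = internal_id
        return self._to_external[internal_id]

    def internal_id(self, external_id: str) -> int:
        # Unknown or guessed tokens raise KeyError instead of resolving to a record.
        return self._to_internal[external_id]


def cancel_payment_url(mapper: IdMapper, payment_id: int) -> str:
    # URLs only ever carry the external token (hypothetical route).
    return f"/payments/{mapper.external_id(payment_id)}/cancel"


def log_line(payment_id: int) -> str:
    # Logs only ever carry the internal ID, so the two never line up.
    return f"cancel requested for payment {payment_id}"


if __name__ == "__main__":
    mapper = IdMapper()
    url = cancel_payment_url(mapper, 42)
    token = url.split("/")[2]
    print(url)
    print(log_line(mapper.internal_id(token)))
```

Opaque IDs only make enumeration and scripted abuse harder; the cancel endpoint still needs a real authorization check on every request.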

I was offered work at a startup in August last year. It involved building an online platform with video calling capabilities.

I told them it would be on a learn-and-implement basis, as I didn't know a lot of the web tech. I learnt all of it and kept implementing side by side.

I was promised a share in the company at formation, but wasn't given it at the time of formation because of some issues with the documents.

Yes, I did miss feature delivery dates at times. It was my first web app, and I had no prior experience. I did the entire stack myself, from handling servers and domains to the entire front end. All of it was done by me alone.

Later, I also set up a proxy server to extend the platform with a forum on a new server.

And yesterday, after a month of no communication from their side, I was told they are scrapping the old site for a new one. As I had all the credentials for the servers except the domain registration control, they transferred the domain to a new registrar and pointed it to a new server. I have one last meeting with them. I have decided to never work with them again, and I know they aren't going to give me my share as promised.

I'm still in the 3rd year of college here in India. I flunked two subjects last semester, for the first time in my life. And after 8 months of work, being scammed is the end result. I love fitness, but I loved this more, so I dropped all fitness activities for the time being. All that work day and night got me nothing of what I expected.

Even though they don't have any of my code, the credentials to the server, or their user base, they got the new website up very fast.

I had no contract with them. Just did work on the basis of trust. A lesson learnt for sure.

Still, I did learn to build websites completely on my own, and I can do that for anyone. I'm happy that I have those skills now.

Since they are still in the startup phase and don't have a lot of clients, I'm planning to partner with a trusted person and release my code with a different design and branding. Basically the same idea. How does that sound to you guys?

I learned that:

. No matter what happens, never ignore your health for anybody or any reason.

. Never trust anyone in business without solid security.

. Web is fun.

. Self-learning is the best form of learning.

. Take business as business, don't let anyone cheat you.

My client is trying to force me to sign an ethics agreement that would allow them to sue me if found in breach of it. At the same time they are scraping eBay's data without their consent and refuse to sign the licence agreement. Apparently they don't understand irony.

At one of my previous companies, there was a guy, let's call him X.

X was the ideal employee.

X used to come to the office at 8.

X used to sleep in the AC office.

X used to wake up at 10 when everyone started coming in.

X used to play Uno and Pokemon Go till 6.

X was a master in Uno and Pokemon Go.

X used to wait till 8 to get the free cab service.

X didn't do one single productive piece of shit the whole day.

My boss loved X because he came early and left late.

My boss didn't give a damn if that person even switched on his laptop or not.

My boss didn't care about productivity.

I didn't come in on time or leave on time (I travelled in non-traffic hours).

I slogged my ass off because I really wanted to learn.

My boss scolded me, asked to be like X.

This was the last straw.

I resigned the next day.

I never wanted to be like X. Seeing him daily motivated me so much.

When I worked, I focussed on it, I didn't keep checking the clock waiting for it to hit 5 pm.

I aimed for productivity, set realistic targets and always achieved them, no matter what.

My boss was an a--hole. I met X and Boss recently. Both are still in the same role, just scraping through.

Felt really good that I worked hard and have achieved something in life ^_^

When you're reworking a bot because they've realised you're scraping their site and you spot this: GAME ON MF'ers

Client : I have a scraping project for you...

Me : Yeah tell me which site you want me to scrape and what data from it?

Client : I want you to scrape data from 500 sites

Me : 500 sites...are you serious?

Client : Yeah 500 sites...can you do the job?

Me : ok...for 500 sites...the charge will be $500...

Client : Are you out of your mind? $500 for just 500 sites...I can only give you $50

I always feel guilty saying that I'm a Data Scientist when I'm actually spending weeks scraping and annotating data into a CSV file.

me: “Realistically, the only way to pull in this data without replicating and without an API feed is to scrape it from the site”

manager -> to the client: “basically he’s got to hack your system to do it”

This rant is inspired by another rant about automated HR emails like "we appreciate your interest [bla bla] you got rejected [bla bla]". (Please bear with me.)

I live in an underdeveloped country. I graduated in September, did machine learning for my thesis (I will soon publish a paper about it), loved it, and wanted to work as an ML/data science engineer. Among all the job postings I found, only one was related. I sent my resume and they didn't answer; a couple of months later, that company posted that they wanted a full-stack web dev with knowledge of mobile dev and ML, basically an all-in-one person, for the salary of a junior dev.

- Another company posted an opening for a Python/web scraping developer. I had the experience, so I got in touch. They sent me a test that took me 3 days; one of the questions alone took 2 days. I found an unanswered SO question with the exact wording from 6 months earlier, solved it, sent my answers, and never heard back from them again.

- One company wasn't really hiring, but I got in touch asking if they had a position. They sent a test, I did it, they liked it, and they scheduled an interview. The interviewer was arrogant, paid no attention to what I was saying, and kept asking in-depth questions that even an expert might struggle to answer. In the end they said they weren't really hiring, they just interview people to see what they can find. Basically they were looking for experts, even though I had mentioned from the very beginning that I'm freshly graduated.

- Over 1000 applications for different positions on LinkedIn across the whole world, the same automated rejection email every time, but at least they didn't keep me waiting.

- I lost hope. I found a job posting near me for a Python/Django dev. In the interview they asked about frontend (React/Vue.js) and Flutter; I said I don't have experience with those and I'm not interested. They asked about databases, C, Java and other stuff I do have experience in, and they hired me at an insulting salary (really insulting) because they knew I was hopeless, to fill 2 positions: Python dev and tech support for an app built in the 90s with C/Java and sorcery... A week into the job, while I was still learning about the app I was supposed to support, the guy called me into the office. "Here's the thing," he said, "someone else is already working on Python. I want you to learn either React, Vue.js or Flutter." I was in shock and didn't know what to say, so I said I'd think about it. The next week I said I'd learn React, and I spent the week acting like I was learning React while scrolling FB and LinkedIn (I'm bad, I know).

- Over the weekend, a foreign company I had applied to a few weeks earlier got in touch. We had some interviews and I got hired as a DevOps/MLOps engineer. It's been a month and I'm loving it; the salary is decent and I love what I do.

Conclusion: don't lose hope.

Client : We need real time analysis.

Me : But we can't just scrape thousands of results and process them on the user's click.

Client : Don't do that. Real-time analysis is scraping it once and processing it every time the user demands.

Me : Okay

WHAT THE FUCK !!!!!

A manager, a mechanical engineer, and a software analyst are driving back from a convention through the mountains. Suddenly, as they crest a hill, the brakes on the car go out and they fly careening down the mountain. After scraping against numerous guardrails, they come to a stop in the ditch. Everyone gets out of the car to assess the damage.

The manager says, "Let's form a group to collaborate ideas on how we can solve this issue."

The mechanical engineer suggests, "We should disassemble the car and analyze each part for failure."

The software analyst says, "Let's push it back up the hill and see if it does it again."

So our class had this assignment in Python where we had to code up a simple web scraper that extracts data on the best-selling books on Amazon. My code was ~100 lines long (for a complete newbie in Python, guess the amount of sweat it took) and was able to handle most error scenarios, like random HTTP 503 errors, and had different methods to extract the same piece of data from divs with different IDs. The code was decently fast.

All was fine until I found out that the rest of the class averaged ~60 lines. None of the others implemented the things I did, like error handling and extracting from different places in the DOM. Now I'm not sure whether I overcomplicated my code or made it kind of "fail proof".

Thoughts?
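On the "complicated vs. fail proof" question: the two features described above (retrying transient HTTP 503 responses and trying several locations for the same field) don't have to cost many lines. A rough Python sketch using only the standard library follows. The `fetch` hook, the retry/backoff numbers and the div IDs `productTitle` and `title` are assumptions for illustration, not Amazon's real markup, and the regex matching is only a stand-in for a proper HTML parser (it breaks on nested divs).

```python
import re
import time
import urllib.error
import urllib.request
from typing import Callable, Optional


def default_fetch(url: str) -> bytes:
    # Plain standard-library GET with a timeout.
    with urllib.request.urlopen(url, timeout=10) as resp:
        return resp.read()


def fetch_with_retry(
    url: str,
    fetch: Callable[[str], bytes] = default_fetch,
    retries: int = 3,
    backoff: float = 1.0,
    sleep: Callable[[float], None] = time.sleep,
) -> bytes:
    """Retry only on HTTP 503, waiting backoff * 2**attempt seconds between tries."""
    for attempt in range(retries + 1):
        try:
            return fetch(url)
        except urllib.error.HTTPError as err:
            if err.code != 503 or attempt == retries:
                raise  # other status codes, or out of retries: give up
            sleep(backoff * 2 ** attempt)
    raise ValueError("retries must be non-negative")


def by_div_id(div_id: str) -> Callable[[str], Optional[str]]:
    # Build an extractor for the text inside <div id="..."> (regex stand-in for a parser).
    pattern = re.compile(rf'<div id="{re.escape(div_id)}"[^>]*>(.*?)</div>', re.S)

    def extract(html: str) -> Optional[str]:
        match = pattern.search(html)
        return match.group(1).strip() if match else None

    return extract


def first_match(html: str, extractors: list[Callable[[str], Optional[str]]]) -> Optional[str]:
    # Try each location in order and return the first non-empty value.
    for extract in extractors:
        value = extract(html)
        if value:
            return value
    return None


# Hypothetical fallbacks: the same field can live in differently named divs.
TITLE_EXTRACTORS = [by_div_id("productTitle"), by_div_id("title")]
```

Injecting `fetch` and `sleep` keeps the retry logic testable without touching the network.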

I've been a part of this industry for over two decades, scraping and clawing my way up, and recently left a high-paying position to create my own company, in an attempt to fix the things I feel are severely broken in the ones I've worked for in the past.

Sometimes, we are challenged in ways we never thought we would be. And it should always result in the improvement of something we never thought would be possible to improve.

There's a certain beauty in hitting a personal impasse, because it allows you to choose a better path for yourself, which is a key element in accepting and conquering any one of life's many challenges.

So just remember, we are, by nature, problem solvers. So what the fuck would we do without a problem to solve?

OK, going to rant about other developers this time.

Can you please stop doing just the minimal amount of work on your games/apps?!

I understand you may not have the time to go through it with a fine-tooth comb, but then just delay it. Delay it and finish the product to a state that doesn't feel half-assed and broken right from the get-go.

A small note: what triggered me this time is Android devs. With Google requiring you to support adaptive icons and a newer SDK, so many devs are just scraping by and putting no effort into bringing things up to date (also, put more effort into adaptive icons than just putting your old square icon on a white background).

This shit is just leading to everything being in 'early access' or a constant 'beta' stage with the promise of polish later.

Don't be that guy, put the extra few days of polish in... Just please...

So one year ago, when I was in my second year of college and my first year of coding, I took this fun math class called Topics in Data Science (don't ask why it's a math class).

Anyway, for this class we needed to do a final project. I teamed up with a freshman, a junior and a senior. While we talked about project ideas I had some random thoughts, and one of them was to test one of the myths of Wikipedia: if you keep clicking the first link in the main paragraph (not the pronunciation), you will eventually get to the Philosophy page.

The team thought it was a good idea, so we started working.

The process was hard since none of us knew web scraping at the time, and the senior and the junior? They basically didn't do shit, so it was me and the freshman.

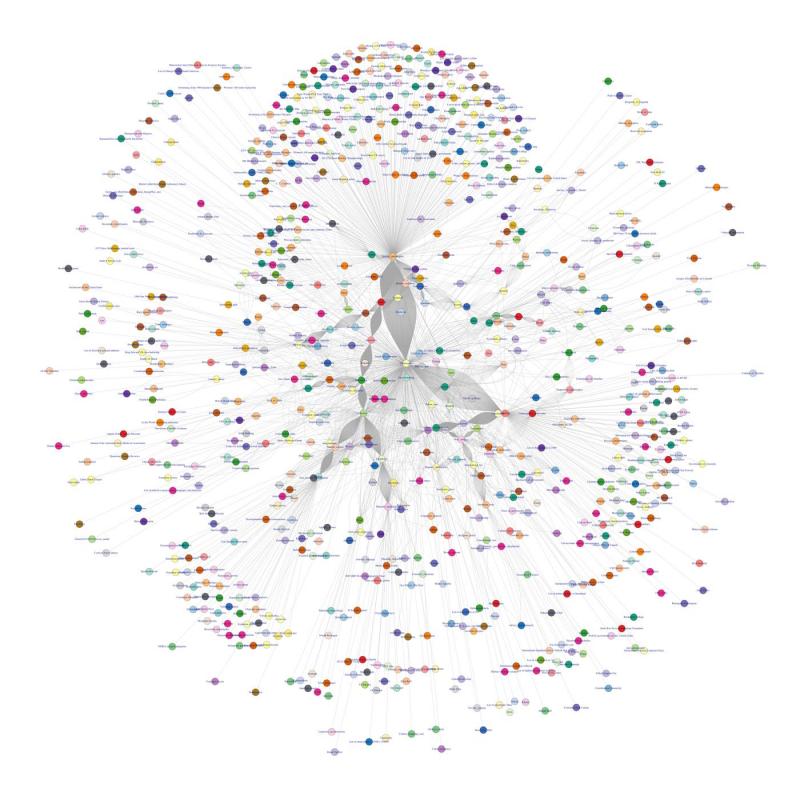

In the end, we had 20000 page links and tested their paths to Philosophy. The attached picture is a visualization of the project: every node is a page name and every line means the two pages are connected.
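The first-link walk itself is simple once each page's first link has been scraped; the scraping (skipping pronunciations and parenthesised text) is the hard part and is left out here. A minimal Python sketch, assuming a pre-built `first_link` dictionary (hypothetical data) that maps each page title to the title its first link points to:

```python
from collections import Counter


def path_to_philosophy(
    start: str,
    first_link: dict[str, str],
    target: str = "Philosophy",
    max_steps: int = 100,
) -> tuple[list[str], str]:
    """Follow first links from `start` and report how the walk ended.

    Outcomes: "reached" (hit target), "cycle" (revisited a page),
    "dead end" (no known first link) or "gave up" (step limit hit).
    """
    path = [start]
    seen = {start}
    page = start
    for _ in range(max_steps):
        if page == target:
            return path, "reached"
        nxt = first_link.get(page)
        if nxt is None:
            return path, "dead end"
        path.append(nxt)
        if nxt in seen:
            return path, "cycle"
        seen.add(nxt)
        page = nxt
    return path, ("reached" if page == target else "gave up")


def summarize(starts: list[str], first_link: dict[str, str]) -> Counter:
    # Tally outcomes over many starting pages, e.g. the 20000 scraped ones.
    return Counter(path_to_philosophy(s, first_link)[1] for s in starts)
```

Tracking `seen` matters because some first-link chains loop between a few pages and would otherwise never terminate.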

This is the first open project and the first Python project that I have ever done. Idk if it's good enough to put on my resume, but I'm definitely proud of it.

PS: If you recognize the picture, you probably know me. If you were the senior or the junior on the team, I'm not sorry for saying you didn't do shit, because that's the truth. If you were the freshman, I am very happy to have had you as a teammate.

So I just launched a website where you can create web scrapers with just the click of a button:

http://scrape.host

In the past 48 hours, my partner must have asked me 50 times to create an "AI" that can get the data we need off of Wikipedia.

Background: I am in AP Computer Science AB, but I have been programming long enough that this class is a joke. We were paired up and given the task of creating a search engine that finds information on Wikipedia ("which is dumb because that's what the search tool is for"), so I created a Java web-scraping program in probably 30 minutes and showed my partner. He told me I was completely wrong because it would be "cool" to incorporate machine learning into the assignment.

Do I even tell him what machine learning is, or should I just let him figure it out?

TL;DR: it's a long introduction

Hi Ranters,

I've been on this app for quite a while now. As a shy cat watching from a distance and reading all kinds of rants. Anywho I feel comfortable enough to crawl out of my shell and introduce myself. Since I feel you guys together made such a pleasant and safe community, I'm really happy to be a part of it!

Anyway, I'm Sam, 24 years old, from the Netherlands. My favorite color is green, mostly the green you can find in nature, the one that calms you down :). I'm a very introverted person but always very curious and eager to learn new things.

I started to program when I was 12. I did assembly and C++ because I liked making cheats for online games. Later I learned C#, Java and Python, mostly for web stuff, scraping, services etc., but also chatbots (for Skype, for example).

Currently I'm 2 years in as a data scientist, mostly working in Python.

But on the side as a hobby and with an ambition I have a basic understanding of full stack development.

Mostly Node.js, Express, MongoDB, and frontend with no frameworks.

(I will later ask you guys some more questions about that! I could really use some advice!)

Anyway, enough about me! Tell me a bit about yourselves! Happy to get to know you all a little better!

My biggest regret is the same as my best decision ever made.

The company I work for specializes in performing integrations and migrations that are supposed to be near impossible.

This means a documented API is a rare sight. We are generally happy if there even IS an (internal) API. Frequently we resort to front-end scraping, custom server-side extensions and reverse-engineered clients.

When you’re in the correct mindset it’s an extreme rush to fix issues that cannot be fixed and help clients who have lost most hope. However, if your personal life is rough at the moment or you are not in a perfect mental state for a while it can be a really tough job.

Been here for 3+ years and counting. Love and hate have rarely been so close to each other.

So I may have got a little fed up with people complaining about problems at work... Apologies to @dfox. I'll stop scraping your website now 😬

When you're a hardcore web developer, the only 'action' you .get() is when you're writing a login form scraper for your three-legged OAuth flow in Python

OK, so to recap: we had a shit beginning. We couldn't find a client for about 3 months, and thank god we agreed not to register the firm right away. If we had, we would have been broke a long time ago.

We found our first client, and he wanted us to build some scrapers with a GUI. Being the backend developer, I created an API for scraping (boring), my friend created a website for that API, and I created a GUI that displays that site. The project was about $1200, and since there are 3 of us, we split it into 3 x $400.

After that it was really hard to find clients again. We thought of quitting and just going to uni or something, but we really didn't want to, and anyway we needed to earn money for uni ourselves if we wanted to go.

So we decided that since we weren't paying anything and weren't losing money, we would continue as long as we could.

Eventually we got the hang of it, and now we have 2 clients, with 2 more lined up after we finish them.

So I think the most important thing is that you help your coworkers. My friend who finds clients had a rough time at the beginning, as I mentioned. So all 3 of us got together and started spamming people for a few weeks. That's how we found our first client.

So now we are running. Not a million-dollar company, but we are happy that we are doing what we love and making money doing it. We aim higher, but we don't want to hurry and screw things up, as we are still young.

Also, thank you for showing interest after 300 days :)

So a client hired us to rebuild their website, because their current website is being held hostage at their current provider. The provider locked them out of WordPress and says they will shut down the website at the end of the month.

The client wants us to hack into the website and get the files. I told them "no chance in hell", but that their current website will be at our host later today.

What I didn't tell them is that I just scraped their website pages to flat HTML.

I’m going through the book Automate the Boring Stuff, and I’m working on the web scraping chapter right now.

Well, I wanted to just count all of the comic links in the xkcd archive as a small exercise to help me get used to and better learn web scraping.

I went through hell trying to do this, but more than a few hours later I finally did it: I returned every link of ONLY the comics, so it was time to start counting them.

I implemented the counting. The total number as of today is 2279, but my code counted 2278, and I started to lose it.

So I went through this motherfucker manually to see where my loop’s count and the numbers on the tags started to differ. I found it: whoever made it went from 403 to 405. The euphoria I felt over this incredibly small task was incredible. (I still hadn’t pieced it together yet.)

I found the email of the guy who I assume owns the site and I started writing an email that basically said “hey the count of your comics is off by one and you made me rethink existence trying to figure out why, you skipped number 404-”

I looked at the gap between 403 and 405. Then the words “Error 404 Not Found” popped into my head. I proceeded to scream for a second, stopped writing the email, and now I’m trying to come to terms with this.

TL;DR: the guy who runs xkcd trolled me with a simple 404 error joke
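In hindsight, listing which numbers are absent, rather than only comparing totals, points straight at the gap. A small Python sketch; the `href="/403/"` link format in `comic_numbers` is an assumption about the archive page's markup and should be checked against the real HTML.

```python
import re


def comic_numbers(archive_html: str) -> list[int]:
    # Assumes each archive entry links like <a href="/403/">; verify against the real page.
    return [int(n) for n in re.findall(r'href="/(\d+)/"', archive_html)]


def missing_numbers(numbers: list[int]) -> list[int]:
    # Every integer from 1 up to the highest number seen that never appears.
    if not numbers:
        return []
    present = set(numbers)
    return [n for n in range(1, max(present) + 1) if n not in present]
```

With 2278 comics numbered up to 2279, as in the rant, `missing_numbers` returns the single skipped number instead of just an off-by-one total.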

Me a month ago: "I'm done with full-time corporate work. I'm going to shift to freelance only so I can enjoy my life and not be in a grind, bowing and scraping to a manager all day."

*Recruiter finds me and offers me a full-time opportunity*

Me now: "Well, I guess I can look into it..."

Me 9 months from now: "I'm done with full-time corporate work. I'm going to shift to freelance only so I can enjoy my life and not be in a grind, bowing and scraping to a manager all day."

Repeat until dead.

So after our original idea got scrapped during a hackathon this week, we created a Slack bot to fetch the most relevant answers from StackOverflow based on the user's input. All the user had to do was enter a few words; the bot handled typos, links etc. and returned the link as well as the most upvoted or accepted answer after scraping it from the website.

The average time to find an answer was around 2 seconds, and we also mentioned that we were planning to use Flask to deploy a web application for the same thing.

After the presentation, one of the judge-guys called me and told me that "It isn't good enough, will not be used widely" and "Its similar to Quora".

Never have I wanted to punch a son of a bitch in the balls so badly.

I ran a script on production to scrape ~1000 sites continuously and update our ~50,000 products from the data, on the same server our site was running on. Needless to say, between the traffic and the scraping, our server sat at almost 100% CPU and RAM usage for 2 weeks until I realised my fuckup.

So here's my problem. I've been employed at my current company for the last 12 months (next week is my 1 year anniversary) and I've never been as miserable in a development job as this.

I feel so upset and depressed about working in this company that getting out of bed and into the car to come here is soul draining. I used to spend hours in the evenings studying ways to improve my code, and was insanely passionate about the product, but all of this has been exterminated due to the following reasons.

Here's my problems with this place:

1 - Come May 2019 I'm relocating to Edinburgh, Scotland and my current workplace would not allow remote working despite working here for the past year in an office on my own with little interaction with anyone else in the company.

2 - There is zero professionalism in terms of work here, with there being no testing, no planning, no market research of ideas for revenue generation – nothing. This makes life incredibly stressful. This has led to countless situations where product A was expected, but product B was delivered (which then failed to generate revenue) as well as a huge amount of development time being wasted.

3 - I can’t work in a business that lives paycheck to paycheck. I’ve never been somewhere where the salary payment had to be delayed due to someone not paying us on time. My last paycheck was 4 days late.

4 - The management style is far too aggressive and emotion driven for me to be able to express my opinions without some sort of backlash.

5 - My opinions are usually completely smashed down and ignored, and no apology is offered when it turns out that they’re 100% correct in the coming months.

6 - I am due a substantial pay rise due to the increase of my skills, increase of experience, and the time of being in the company, and I think if the business cannot afford to pay £8 per month for email signatures, then I know it cannot afford to give me a pay rise.

7 - Despite having continuously delivered successful web development projects/tasks which have increased revenue, I never receive any form of thanks or recognition. It makes me feel like I am not cared about in this business in the slightest.

8 - The business fails to see the potential and growth of its employees, and instead criticises them based on past behaviour. 'Josh' (fake name) is a fine example of this. He was always slated by 'Tom' and 'Jerry' as worthless and lazy. I trained him in 2 weeks to perform some basic web development tasks using HTML, CSS, Git and SCSS, and he immediately saw his value outside this company and left, achieving a 5k pay rise in the process. He now works in an environment where he is constantly challenged and has monthly reviews with his line manager praising his excellent work and diverse set of skills. This is not rocket science. This is how you keep employees motivated and happy.

9 - People in the business with the least or zero technical understanding or experience seem to be endlessly defining technical deadlines. This will always result in things going wrong. Before our mobile app development agency agreed on the user stories, they spent DAYS going through the specification with their developers to ensure they’re not going to over promise and under deliver.

10 - The fact that the concept of ‘stealing data’ from someone else’s website by scraping it daily for the information is not something this company is afraid to do, only further bolsters the fact that I do not want to work in such an unethical, pathetic organisation.

11 - I've been told that the MD of the company heard me on the phone to an agency (as a developer, I get calls almost every week), and that if I do it again, that the MD apparently said he would dock my pay for the time that I’m on the phone. Are you serious?! In what world is it okay for the MD of a company to threaten to punish their employees for thinking about leaving?! Why not make an attempt at nurturing them and trying to find out why they’re upset, and try to retain the talent.

Now... I REALLY want to leave immediately. Hand my notice in and fly off. I'll have 4 weeks notice to find a new role, and I'll be on garden leave effective immediately, but it's scary knowing that I may not find a role.

My situation is difficult as I can't start a new role unless it's remote or a local short-term contract, because of my move in May, and as a junior to mid-level developer, this isn't the easiest thing to do on the planet.

I've got a few interviews lined up (one of which was a final interview which I completed on Friday) but its still scary knowing that I may not find a new role within 4 weeks.

Advice? Thoughts? Criticisms?

Love you DevRant <3

Hi! I'm new to freelancing. I've created a program that scrapes data from a website, parses it, runs DB queries, and emails the prepared data to the customer I built it for. The whole program is written in PHP and uses a MySQL table. There's almost no front end; it's an automated background process that runs as a cron job. I bought and set up a domain and hosting for them (my customer paid for all of it). I got the core of the program running after ~2 days, and it took me about a week to complete the project, including adding features and testing. Now I'd like to know: how much does this kind of project cost? The business operates in Silicon Valley.

tldr: maintainers can be assholes

So there's this Python package + CLI tool that I found interesting while browsing GitHub and thought of contributing to. The repo has around 2000 issues and multiple open PRs, so it seemed like a good start.

So I submit 2 PRs implementing similar features for different sites (it's a scraping repo). This douche of a maintainer comments that the code conventions aren't being followed, without specifying which ones. I had mentioned that I was new to this repo and would need his help (I guess that's one of the reviewer's jobs). This piece of shit comments on the PR with one- or two-word replies like "again", "wtf" and the occasional psychopathic remark. That son of a bitch can't tell me what's wrong. Like wtf dude, instead of having a long discussion in the comments section of the fucking PR, why can't you just point out exactly what's wrong and I'll happily fix that shit? But no, you have to be a douche about it and employ sarcasm. Well, FUCK YOU TOO.

I just found out google web dev tools let you copy a request as curl command!

Time to scrape some websites baby!

While scraping web sources to build datasets, has legality ever been a concern?

Is there a standard practice for checking whether a site prohibits scraping?
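On the second question: a common first check (not a legal answer; the site's terms of service and local law still matter) is the site's robots.txt. Python's standard library can evaluate it with `urllib.robotparser.RobotFileParser`. The sketch below parses a robots.txt body you have already downloaded; the user-agent string `my-dataset-bot` and the sample rules in the usage are made up.

```python
from typing import Optional
from urllib.robotparser import RobotFileParser


def _parser(robots_txt: str) -> RobotFileParser:
    # Parse robots.txt text that was fetched separately (no network access here).
    parser = RobotFileParser()
    parser.parse(robots_txt.splitlines())
    return parser


def is_allowed(robots_txt: str, url: str, user_agent: str = "my-dataset-bot") -> bool:
    """True if the robots.txt rules permit user_agent to fetch url."""
    return _parser(robots_txt).can_fetch(user_agent, url)


def crawl_delay_for(robots_txt: str, user_agent: str = "my-dataset-bot") -> Optional[int]:
    # Seconds the site asks crawlers to wait between requests, or None if unspecified.
    return _parser(robots_txt).crawl_delay(user_agent)
```

For example, with the rules `User-agent: *`, `Disallow: /private/`, `Crawl-delay: 5`, pages under `/private/` are disallowed and the requested delay is 5 seconds.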

Me: I've not done this before, so any guess would be pure assumption.

Client: Okay, but still, you would have some idea, right?

Me: It might get done in 3 days or may take even 30.

After 3 days:

Client: But you said that it would be done in 3 days. Now you are saying the MVP is not ready. Do you even know that your part is the most critical one in the project? We believed in you. We trusted you. This is insane. It was the wrong decision to choose you.

Me (in my head): Didn't I say, this is the first time I am trying to scrape Coles? It might take time?

Me (in actual): I understand it is getting delayed. I'm trying to get this up ASAP....

Anyone else experienced toxic clients but still kept their cool?

That feeling when you’re scraping a website to build an API and your script downloads 4049/6170 pages before failing, and you have to rewrite it so that Puppeteer hits the next button 4049 times before resuming the scrape. 😅

This database is so frustrating.

I hate this website (the one I’m scraping).

It’s going to be so satisfying when this is finished.
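One way to avoid starting over after a crash like that is to write each page's result to disk as soon as it is scraped and skip the pages already saved on restart. The Python sketch below assumes each page can be reached directly by its index (for example via a URL parameter), which the site in the rant may not allow; `scrape_page` is a hypothetical stand-in for the Puppeteer logic, and results go to a JSON Lines file, one record per line.

```python
import json
from pathlib import Path
from typing import Callable


def scrape_with_checkpoint(
    total_pages: int,
    scrape_page: Callable[[int], dict],
    out_path: Path,
) -> int:
    """Scrape pages [done, total_pages), appending one JSON line per page.

    Returns the number of pages scraped during this run.
    """
    done = 0
    if out_path.exists():
        # Each saved line is one finished page, so the line count is the resume point.
        with out_path.open() as f:
            done = sum(1 for line in f if line.strip())
    scraped = 0
    with out_path.open("a") as f:
        for page in range(done, total_pages):
            record = scrape_page(page)
            f.write(json.dumps(record) + "\n")
            f.flush()  # finished pages stay on disk even if a later page crashes
            scraped += 1
    return scraped
```

If the crash happens at page 4049, rerunning the same call picks up at page 4049 instead of page 0.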

I suspected that our storage appliances were prematurely pulling disks out of their pools because of heavy I/O from triggered maintenance we've been asked to automate. So I built an application that pulls entries from the event consoles in each site, from queries it makes to their APIs. It then correlates various kinds of data, reformats them for general consumption, and produces a CSV.

From this point, I am completely useless. I was able to make some graphs with Gnumeric, LibreOffice Calc, and (after scraping out all the identifying info) Google Sheets, but the sad truth is that I'm just really bad at desktop office document apps. I wound up just sending the CSV to my boss so he can make it pretty.
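The correlation step described above could look something like this in Python. Everything here is an assumption for illustration: the event field names (`site`, `disk`, `time`, `name`, `start`), the 30-minute window, and the rule that a maintenance job "matches" a disk eviction if it started at the same site within the window before it. The output is plain CSV, so any of the spreadsheet apps mentioned can chart it.

```python
import csv
import io
from datetime import datetime, timedelta

FIELDS = ["site", "disk", "evicted_at", "job", "job_started"]


def correlate(evictions: list[dict], maintenance: list[dict],
              window: timedelta = timedelta(minutes=30)) -> list[dict]:
    """Pair each disk eviction with maintenance jobs at the same site that
    started no more than `window` before it."""
    rows = []
    for ev in evictions:
        for job in maintenance:
            lag = ev["time"] - job["start"]
            if job["site"] == ev["site"] and timedelta(0) <= lag <= window:
                rows.append({
                    "site": ev["site"],
                    "disk": ev["disk"],
                    "evicted_at": ev["time"].isoformat(),
                    "job": job["name"],
                    "job_started": job["start"].isoformat(),
                })
    return rows


def to_csv(rows: list[dict]) -> str:
    # Render the correlated rows as CSV text with a header line.
    buf = io.StringIO()
    writer = csv.DictWriter(buf, fieldnames=FIELDS)
    writer.writeheader()
    writer.writerows(rows)
    return buf.getvalue()


if __name__ == "__main__":
    t0 = datetime(2020, 1, 1, 12, 0)
    evictions = [{"site": "a", "disk": "d1", "time": t0 + timedelta(minutes=10)}]
    jobs = [{"site": "a", "name": "scrub", "start": t0}]
    print(to_csv(correlate(evictions, jobs)))
```

The nested loop is quadratic; for large event logs, sorting both lists by time and sweeping would scale better.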

Just found out I can bypass CORS / Same-Origin Policy with proxy services like anyorigin or crossorigin in JavaScript.

Now I can easily scrape motivational quotes, hell yeah.

(BTW, I am building a random quote generator, but I want to get the quotes via web scraping.)

The company that I work for has recently recruited a team for Web Development, so they don't have to pay a monthly fee to the previous team who designed their website.

They have over 3000 products on the old website and no straightforward way to import them into the new one. The old team was asking for $300 to give them an API that would return the product details in XML format.

Obviously, paying that amount of money wasn't logical for a dying website, so the manager decided to hire someone to manually copy the content from the old admin panel to the new one, that is until I stopped him.

My solution? Write a simple web scraper to log in to the old panel and collect the data. Boom! $300 saved from going to waste.

Now, the old team found out about this, and as happy as my manager was, they were quite angry. So they added a Google reCAPTCHA to prevent my bot from scraping the old panel.

I spent about 20 minutes, and found out once you're logged in to the old panel, the session is saved in a cookie and you are no longer greeted by a Captcha.

So I rewrote a small portion of my bot, and boom! Instant karma from the manager. We finished publishing the new site and notified the old team, only to see the precious looks on their faces. Poor guy, he thought I was a wizard or something 😂😂

That's what you get for overcharging people!

TL;DR: Company's old website team wanted to overcharge us for writing an API to fetch 3000+ records.

Wrote a basic web scraper to do the same job in less than an hour.
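The trick in this story is that the CAPTCHA only guards the login form, while the logged-in session lives in a cookie. The post doesn't say what the bot was written in; this is a minimal Python sketch using only the standard library, assuming the site keeps you logged in through cookies. The cookie file path is hypothetical and the login request itself is not shown.

```python
import http.cookiejar
import os
import urllib.request

COOKIE_FILE = "session_cookies.txt"  # hypothetical path


def make_session_opener(cookie_file: str = COOKIE_FILE):
    """Return (opener, jar); the jar is preloaded from disk if a saved session exists."""
    jar = http.cookiejar.MozillaCookieJar(cookie_file)
    if os.path.exists(cookie_file):
        # ignore_discard=True keeps session cookies, which have no expiry date
        jar.load(ignore_discard=True, ignore_expires=True)
    opener = urllib.request.build_opener(urllib.request.HTTPCookieProcessor(jar))
    return opener, jar


def save_session(jar: http.cookiejar.MozillaCookieJar) -> None:
    """Write the current cookies (including session cookies) back to disk."""
    jar.save(ignore_discard=True, ignore_expires=True)
```

The intended flow is: log in once (solving the CAPTCHA by hand), call `save_session(jar)`, and on later runs use the opener from `make_session_opener()` so requests carry the stored session cookie until the server expires it.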

I'm at the point of deep depression again as a CS student. The last few weeks and the next few are busy ones, with a lot of team projects and research. As always, team projects never go as smoothly as I expect: I'm always the one who works on the project, and the rest of the team doesn't even care what the project does.

Also, in a few weeks there's a Leadership Training, and I just quit it. Why? Because I need sleep. Why again? BECAUSE I AM THE *ONLY* ONE WHO WORKS ON THE PROJECT, YOU FUCKING DIPSHIT. I'm the one who can't sleep, working on the project every day, squeezed between the deadline and class hours.

Why do I drop something important (Leadership Training) just to keep from losing sleep and to keep the project afloat while the team disregards me? Am I being too humble, even though I just ranted about "don't be too humble"?

..i...i just... I just can't take it anymore. :( god help me

I had a wonderful run-in with corporate security at a credit card processing company last year (I won't name them this time).

I was asked to design an application that allowed users in a secure room to receive instructions for putting gift cards into envelopes, print labels and send the envelopes to the post. There were all sorts of rules about what combinations of cards could go in which envelopes etc etc, but that wasn't the hard part.

These folks had a dedicated label printer for printing the address labels, in their secure room.

The address data was in a database in the server room.

On separate networks.

And there was absolutely no way that the corporate security folks would let an application that had access to a printer that was on a different network also have access to the address data.

So I took a look at the legacy application to see what they did, to hopefully use as a precedent.

They had an unsecured web page (no, not an API, a web page) that listed the addresses to be printed. And a Windows application running on the users' PC that was quietly scraping that page to print the labels.

Luckily, it ceased to be an issue for me, as the whole IT department suddenly got outsourced to India, so it became some Indian's problem to solve.

Just successfully converted my entire app from web scraping to calling their private API directly, which I found by reverse-engineering their Android app.

App is running much faster and more stable now, feels good

✓ running server on Windows 10

✓ running PostgreSQL server

✓ running ngrok server

✓ running Android Studio

✓ running 15 Chrome tabs

✓ running Ubuntu VirtualBox

✓ scraping a website with Python in Ubuntu

✓ laptop freezes

✓ gently slam the laptop so it can go to sleep mode

✓ try to login so it can unfreeze

✓ get a purple screen of death

✓ system crashed and has to restart

✓ get a blue screen of death for memory diagnostic tool

✓ all unsaved work is lost

✓ GTX 1060

✓ i7

✓ DDR4

✓ 8 GB RAM

✓ $2400 Acer laptop

✓ regret buying Acer laptop

When I was in college I was approached by an entrepreneur whose "search engine" idea consisted of scraping Google's search results and passing them off as his own (after a little shuffling and filtering). Needless to say, I declined.

When you're web scraping and the site suddenly redirects its URL to its second site, so your code becomes useless.

So here's how the story goes.

I was in my academic writing class the other day and we were learning about APA formatting for our argumentative essays. We have a blackboard, whiteboard, projector connected to a pc and even a lovely projector screen to present with in the classroom.

I sit at the front right of the room, closest to the window (it's behind me, as all the desks face inward).

The professor walks up to the front of the class and says we are going to learn how to format our typed essays properly.

Awesome, I thought. I pulled out my XPS laptop and fired it up. As I was creating a new Word document, I heard scratching. I looked up and the professor was writing with CHALK on the BLACKBOARD. I was astonished. To make matters worse, she started from the far left of the board, where the glare from the window was the greatest. I could not see anything. From that point on I knew this class was going to be abysmal.

What was so depressing was that my professor never once touched the projector. Scraping and erasing, over and over. I couldn't tell whether it was a period or a comma after the first initial.

My eyes were never so dry from squinting, rolling my eyes, and face-palming over and over. After an hour-and-15-minute class, I was not far from drowning my XPS in my tears.

Backstory:

The webpage for (basically) the only movie theater chain is slow. The app, goddamn, is worse.

So I made an app to scrape the data and save it in a SQLite db for my use. However, there is one theater which doesn't belong to the same company. So I decided to also include it in the app.

But it sucks! I still have to find a way to automatically get the data from their shitty site.
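Storing both chains' showtimes in one SQLite table only needs a column identifying the theater. A minimal Python sketch using the standard `sqlite3` module; the schema is a hypothetical example rather than the author's, and `starts_at` is assumed to be an ISO-8601 string.

```python
import sqlite3


def save_showtimes(conn: sqlite3.Connection, rows: list[tuple[str, str, str]]) -> int:
    """Insert (theater, movie, starts_at) rows, skipping duplicates; return the row count."""
    conn.execute(
        """CREATE TABLE IF NOT EXISTS showtimes (
               theater   TEXT NOT NULL,
               movie     TEXT NOT NULL,
               starts_at TEXT NOT NULL,
               PRIMARY KEY (theater, movie, starts_at)
           )"""
    )
    # INSERT OR IGNORE plus the composite primary key makes re-running the scraper idempotent
    conn.executemany("INSERT OR IGNORE INTO showtimes VALUES (?, ?, ?)", rows)
    conn.commit()
    return conn.execute("SELECT COUNT(*) FROM showtimes").fetchone()[0]
```

Each site gets its own scraper function, but both feed the same `save_showtimes` call, so the independent theater slots in without schema changes.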

Fucking Power Apps and Automate/Flow:

You want to make an app?, great!

- Easy UI and editor, you can make a decent app in a day

- Best data integration in MS space bar none, connect to anything under the planet no problem.

- Deployment on mobile and desktop instantly and at scale, you better believe it.

- Wanna take data from SharePoint, manipulate it and throw it at XRM? We gotchu.

- Source control? FUCK YOU FOR ASKING GO DIE IN A FIRE.

- Proper permission system? Yep, based on O365 and Azure AD.

- Just let me get the source code please?: BURN IN HELL MOTHERFUCKER

- Integrated AI, indeed we have it. And chatbot frameworks on top of it, no problem at all

- ...

As a tool it is aimed at non-technical people, not by making it beginner-friendly, but by making it developer-hostile. And whenever you hit a weird quirk in the editor, you wish you could just go edit the source code (WHICH YOU CAN TOTALLY SEE SNIPPETS OF), but you are never allowed to touch it.

I am so very tempted to make a version control layer on top of it myself, scraping it via scripts and doing the reverse on upload, but it would be janky as fuck.

Niceeee.

Been receiving packages every day, but today I got good shit..

Ideas for me to try?

4-relay module, 4-MOSFET board, finally the gears for the motors I'm scraping, and more MOSFETs. My mom is saying that I have the mailman all to myself lol

That moment when you assume a local company's site doesn't use APIs, so you collect the data manually by scraping the HTML, only to learn months later that they DO in fact use APIs.

🙃

Does anybody have any great tutorials on web scraping with Python? The data science courses I took only covered manipulating and visualizing data, not getting it.

I want to love and support PONS for providing a good dictionary for free, but the API is literally worse than just scraping the website...

Hm... Apparently I've been doing TDD all along... it's just that I don't save the tests in a separate project.

I just keep editing Main() to test whatever I'm working on (each class).

Also the NJTransit site is sneaky as ****. It seems the devs know a bit about how to prevent site scraping by checking Headers and Client information...

Took all afternoon to get this test to pass....

it works in Chrome but not in my code... and even after I spoofed all the headers... including GZIP.... it wouldn't work for multiple requests...

I need to create a new WebClient for each request.... no idea how it knows the difference or why it cares... maybe it's a WebClient bug...

And this is only the test app. Originally it was supposed to be built in React Native, but that has its own problems...

Books are too old, the examples don't work with the latest...

But I guess this also has an upside... learning TDD and React rather than just React... hopefully I can finish this week...

I'm actually on vacation... yeah... I still code like it's a work day... 10AM - 8PM....
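The post's workaround is a fresh `WebClient` per request with browser-like headers and GZIP handling. A rough Python analogue using `urllib`, offered as a sketch: the header values are examples (what the NJTransit site actually checks is unknown), and `urllib` does not decompress gzip on its own, so that step is done by hand.

```python
import gzip
import urllib.request
from typing import Optional

# Example browser-like headers; the exact values the site inspects are unknown.
BROWSER_HEADERS = {
    "User-Agent": "Mozilla/5.0 (Windows NT 10.0; Win64; x64)",
    "Accept": "text/html,application/xhtml+xml",
    "Accept-Encoding": "gzip",
}


def decode_body(raw: bytes, content_encoding: Optional[str]) -> str:
    """urllib leaves gzip-compressed bodies as-is, so decompress them manually."""
    if content_encoding and content_encoding.lower() == "gzip":
        raw = gzip.decompress(raw)
    return raw.decode("utf-8", errors="replace")


def fetch(url: str) -> str:
    """Build a brand-new opener and Request for every call, so nothing is shared
    between requests (the Python counterpart of a new WebClient per request)."""
    opener = urllib.request.build_opener()
    req = urllib.request.Request(url, headers=BROWSER_HEADERS)
    with opener.open(req, timeout=30) as resp:
        return decode_body(resp.read(), resp.headers.get("Content-Encoding"))
```

Keeping `decode_body` separate from the network call also makes it easy to unit-test without hitting the site.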

Upside, I guess: if your website's content is generated by JavaScript, I'm too lazy to find an emulator to scrape it off you.

Granted, I'm sure this website's business would've been much better had they made it scrapable, since I'm quite literally trying to retrieve the capabilities listed on one of their help pages and give myself alerts when they gain new features.

But no, I guess.
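The alerting idea boils down to diffing two snapshots of the help page's feature list. A minimal Python sketch, assuming the capabilities appear as flat `<li>` items with explicit closing tags in HTML you can actually fetch (server-rendered, or a snapshot saved from a real browser, since the post notes the live content is JavaScript-generated). The parser names are hypothetical.

```python
from html.parser import HTMLParser


class ListItemCollector(HTMLParser):
    """Collect the whitespace-normalized text of every flat <li> element."""

    def __init__(self) -> None:
        super().__init__()
        self.items: list[str] = []
        self._in_li = False
        self._buf: list[str] = []

    def handle_starttag(self, tag, attrs):
        if tag == "li":
            self._in_li, self._buf = True, []

    def handle_endtag(self, tag):
        if tag == "li" and self._in_li:
            self.items.append(" ".join("".join(self._buf).split()))
            self._in_li = False

    def handle_data(self, data):
        if self._in_li:
            self._buf.append(data)


def new_capabilities(old_html: str, new_html: str) -> list[str]:
    """Return list items present in new_html but not in old_html, sorted."""
    def items(html: str) -> set[str]:
        parser = ListItemCollector()
        parser.feed(html)
        parser.close()
        return set(parser.items)
    return sorted(items(new_html) - items(old_html))
```

Run it on a schedule against the previously saved copy; a non-empty result is the alert.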

Has anyone here worked on news scraping?

I am currently doing my academic project, where I need to scrape news headlines. I have built scrapers for some news sources using their native APIs. I also tried using newsapi.org, but it returns only 10 results.

If anyone has worked on similar projects or knows of any, some advice would be highly appreciated.

Went to visit a friend at a junkyard where I worked for 6 months and brought back some stuff I found there...

I knew there was a DC motor in this piece... And what a goodie. Too bad I only brought 3 hehehe

I was assigned a project that was previously done by another fresher; the project used Angular and Bootstrap. That fucker wrote custom styles for the fucking Bootstrap classes!!! Every time I use "btn-primary" the button doesn't become blue, it becomes white!! Fuck! He even wrote his own fucking styles for the grid classes!!

I was so frustrated that I had a discussion with my CTO. He told me that after 3 months, we'll be scrapping this and moving to a new frontend. So I'm stuck in this hell for 3 months.

Automate this!

I'm an aspiring coder working some crappy administrator job just to pay the bills for now. My boss found out that I may actually be more computer literate than I let on.

Boss: "I want you to make X happen automatically if I click here on this spreadsheet"

Me: "X!? That means processing data from 4 different spreadsheets that aren't consistently named and scraping comparison info from the frontend of the web CMS we're using"

Boss: "if you say so.. Can you do it?"

Me: "maybe.. Can I install python?"

Boss: "No..."

Me: "what about node.js or ruby?"

Boss: "no.. I don't know what you're talking about but you're not installing anything, just get it done"

Me: "Errm Ok.."

So here I am now, way over my head, loving the fact that I'm unofficially a dev and coding my first something in PowerShell and VB that will be used in business :)

Sucks that I still have to keep my regular work on target while doing this, though!

50 build minutes per month? What is this, 1990? It's not like Atlassian is scraping around for money either....

sheesh

and the rich get richer...

WELCOME CIRCLE CI! (almost TOO generous in my opinion)

Having developer skills sometimes comes in handy.

In my case I visited a new website but first I had to choose their cookies.. but.. it was a list of about 150 radio buttons (150 advertisers), I shit you not.

And so I was like: "No, I refuse to click each one of them". I kept thinking.. hm.. how am I going to do a mass-toggle-off? And then it hit me: if the button "toggle all" toggles all buttons.. then that means if I invert the logic of the call, it means I will turn them all off! And it worked.. it was something like: "toggleAll(!-1)" and I did "toggleAll(0)".

That sure saved me some time! Oh yeah, and there are of course other situations where you don't want to write a scraper for getting all the... I don't know... menu links out of a page. Console > import jQuery > select all 'a' elements and call text() on them! It can be done with native JavaScript as well (document.querySelectorAll('a')), but yeah, there are plenty of examples.

Hooray for being a developer!
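The same "grab every menu link" trick works offline on a saved page, without jQuery. A minimal Python sketch using the standard `html.parser` module; it is an analogue of the console approach above, not a port of it, and the class name is hypothetical. Relative hrefs are resolved against a base URL you supply.

```python
from html.parser import HTMLParser
from typing import Optional
from urllib.parse import urljoin


class LinkCollector(HTMLParser):
    """Collect (absolute href, link text) pairs for every <a href=...> on a page."""

    def __init__(self, base_url: str) -> None:
        super().__init__()
        self.base_url = base_url
        self.links: list[tuple[str, str]] = []
        self._href: Optional[str] = None
        self._text: list[str] = []

    def handle_starttag(self, tag, attrs):
        href = dict(attrs).get("href")
        if tag == "a" and href:
            self._href, self._text = urljoin(self.base_url, href), []

    def handle_data(self, data):
        if self._href is not None:  # only keep text that sits inside a link
            self._text.append(data)

    def handle_endtag(self, tag):
        if tag == "a" and self._href is not None:
            self.links.append((self._href, " ".join("".join(self._text).split())))
            self._href = None
```

Feed it the page HTML with `collector.feed(html)` and read `collector.links`; anchors without an `href` are skipped.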

Question Time:

What technologies would you suggest for a web-based project that'll do some data scraping and data preprocessing and also incorporate a few ML models?

I've done data scraping in php but now I want to move on and try something new....

Planning to use AWS to host it.

Thanks in advance :)

As we are all aware, no two programmers are identical with regard to personal preferences, pet peeves, coding style, indenting with spaces or tabs, etc.

Confession:

I have a somewhat strong fascination with SVG files/elements. Particularly icons, logos, illustrations, animations, etc. The main points of intrigue for me are the most obvious: lossless quality when scaling and usage versatility. However, it goes beyond simply appreciating the format and using it frequently. I will sit at my PC for a few hours sometimes, just "harvesting" SVG elements from websites that are rich with vector icons, et al. There is just something about SVG that gets my blood and creativity flowing. I have thousands of various SVG files from all over the web and I thoroughly enjoy using Figma to inspect and/or modify them, and to create my own designs, icons, mockups, etc.

Unrelated to SVG, but I also find myself formatting code by hand every now and then. Not like massive, obfuscated WordPress bundle/chunk files and whatnot, but just a smaller HTML page I'm working on, JSON export data, etc. I only do it until it becomes more consciously tedious, but up to that point, I find it quite therapeutic.

Question:

So, I'm just curious if there are others out there who have any similar interests, fascinations or urges, behaviours, etc.

*** NOTE: I am not a professional programmer/developer, as I do not do it for a living, but because it is my primary hobby and I am very passionate about it. So, for those who may be speculating on just what kind of a shitty abomination of a coworker I must be, fret not. Haha.

Also, if anyone happens to know more "bare-bones" methods of scraping SVG elements from web pages, apps, etc. and feels inclined to share, I would love to hear your thoughts. Thank you! :)
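For a genuinely bare-bones approach, two regular expressions over a page's HTML already catch most icons: inline `<svg>...</svg>` markup, and `src`/`href` attributes pointing at `.svg` files. A minimal Python sketch with the standard `re` module; it is deliberately naive (a nested `<svg>` gets cut short at the first `</svg>`, and SVGs injected later by JavaScript or set in CSS are missed), so treat it as a quick harvesting tool rather than a parser.

```python
import re

# From an opening <svg ...> to the first closing </svg>; nested <svg> elements are cut short.
SVG_RE = re.compile(r"<svg\b.*?</svg\s*>", re.IGNORECASE | re.DOTALL)
# src="..." or href="..." attributes whose value ends in .svg (optionally with a query string).
SVG_URL_RE = re.compile(r"""(?:src|href)\s*=\s*["']([^"']+\.svg(?:\?[^"']*)?)["']""", re.IGNORECASE)


def harvest_svgs(html: str) -> tuple[list[str], list[str]]:
    """Return (inline <svg> markup snippets, URLs of referenced .svg files)."""
    return SVG_RE.findall(html), SVG_URL_RE.findall(html)
```

Each inline snippet can be written straight to a `.svg` file and opened in Figma; the URLs still need to be resolved against the page address and downloaded.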

Wonderful experience today

I'm scraping data from an old system and saving it as JSON; my next step is transforming the data and pushing it to an API (thank god the new system has an API).

Now I stumbled upon an issue: it was a bit hard to retrieve a file with the scraper library I'm using, and it was also quite difficult to set the specific headers needed to download the file instead of navigating to the website's index. Then I tried a built-in language function to retrieve the files I needed during the scrape, but no luck, because I had to log in to the website first.

I didn't want to use a different library since I worked so hard and got so far.

My quick solution: perform a GET request to the website, borrow the session ID cookie, and then use the built-in function's HTTP headers functionality to retrieve the file.

Luckily this is a throwaway script, so being dirty this once is OK. It works now :)
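The "borrow the session ID" step can be sketched in Python with the standard library: pull the cookie out of the raw `Set-Cookie` headers of the first response, then attach it to a separate download request. The post doesn't name its language or library, and the cookie name used below (`PHPSESSID`) is a hypothetical example.

```python
import urllib.request
from http.cookies import SimpleCookie
from typing import Optional


def session_cookie_header(set_cookie_values: list[str], name: str) -> Optional[str]:
    """Find cookie `name` among raw Set-Cookie values and format it for a Cookie header."""
    for raw in set_cookie_values:
        jar = SimpleCookie()
        jar.load(raw)  # parses "NAME=value; Path=/; HttpOnly" style strings
        if name in jar:
            return f"{name}={jar[name].value}"
    return None


def download_request(url: str, cookie_header: str) -> urllib.request.Request:
    """Build a download request that reuses the borrowed session cookie."""
    return urllib.request.Request(url, headers={"Cookie": cookie_header})
```

With a live response from `urllib.request.urlopen`, the raw values come from `resp.headers.get_all("Set-Cookie")`.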

Scraping websites gave me some insights into different design patterns, and OH MY GOSH THE HORRORS I'VE SEEN!

Some are so inconsistent, as if they had been designed and saved with MS Word. Another loads the whole site's content on every request as a bloated object. I'm just an amateur developer, but these seemed unprofessional or overengineered. Not what I would expect from major companies.

I feel like a fucking god now!

We run a webshop and we are in contract with the national post office. Every time there is an update to their program I fear ahead of time what will be fucked up again.

After today's update we weren't able to open any shipment list; we just saw a mile-long error message. Customer care couldn't figure out the problem, their suggested solution might take up to 2 days, and it is basically only a new customer file, so I fired up my good old friend the SQLite viewer to check if I was lucky...

Guess what! That shit is using unsecured SQLite DBs, so I had no problem examining and even rewriting the values. By checking the logs and scraping the DB, I found the problem.

Apparently some asshole thought that deleting a service but keeping all of its references scattered around other tables was a good fucking idea. And get this, customer care: the new customer file won't fix shit, because the problem was in the global DB. I swear I'm getting more familiar with that piece of garbage than the ones who made it.

On top of that, customer care told us that if we couldn't manage to send the shipment list with the program, we wouldn't be eligible for our contractual prices.

It's not enough that I had to fix their fucking shit program, they also "would like to charge us" because their program isn't working. What a fucking great service. (At least the lady on the telephone was friendly.)
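Dangling references like the deleted service can be found with a `LEFT JOIN` that keeps rows whose referenced parent is missing. A minimal Python sketch with `sqlite3`; the table and column names (`services`, `shipment_items`, `service_id`) are hypothetical stand-ins, since the vendor's schema isn't shown. If the schema declares foreign keys, SQLite's `PRAGMA foreign_key_check` reports the same kind of violations.

```python
import sqlite3


def find_orphans(conn: sqlite3.Connection) -> list[tuple[int, int]]:
    """Return (row id, missing service_id) for rows that point at a deleted service."""
    return conn.execute(
        """SELECT sh.id, sh.service_id
             FROM shipment_items AS sh
             LEFT JOIN services AS sv ON sv.id = sh.service_id
            WHERE sh.service_id IS NOT NULL  -- rows with no service at all are fine
              AND sv.id IS NULL              -- the referenced service no longer exists
            ORDER BY sh.id"""
    ).fetchall()
```

Running this on a copy of the DB first is the safer way to see what a fix would touch before rewriting any values.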

I'm about to graduate and I have no idea what I'm doing. I tried learning the basics and even went through a lot of extra stuff. I can only say I dabbled in scripting, web scraping and a little bit of software development. However, when I compare myself to my peers, I feel so out of place. I can't confidently say I know even the concepts I practiced. I am really interested in the field, but I feel like I'm way behind and this is constantly nagging me. Is this normal, or is there anything I can do about it?

Getting a CodinGame puzzle's description without scraping the page.

I spent hours playing with different endpoints and changing values in Postman, all to no avail. The most promising endpoint also returned user progress, which requires authentication, which requires a dummy account, which is against their ToS (reverse engineering the API is allowed, though).

Turns out you just had to submit “null” for your user ID and it would remove the progress field.

Why is this tagged bad design?

["puzzle-id-string", user-id-as-int]

For almost anything, you POST JSON arrays...

Send help.
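The request shape from the post, a JSON array of `["puzzle-id-string", user-id-as-int]` with `null` in the user slot, can be built like this. A minimal Python sketch; the endpoint URL below is a hypothetical placeholder, not CodinGame's real one, and only the body format comes from the post.

```python
import json
import urllib.request
from typing import Optional

API_URL = "https://example.invalid/puzzle-endpoint"  # hypothetical placeholder


def puzzle_request(puzzle_id: str, user_id: Optional[int] = None) -> urllib.request.Request:
    """Build a POST whose body is the JSON array [puzzle_id, user_id].

    Passing user_id=None serializes to JSON null, which (per the post) makes the
    endpoint omit the user-progress field.
    """
    body = json.dumps([puzzle_id, user_id]).encode("utf-8")
    return urllib.request.Request(
        API_URL,
        data=body,
        headers={"Content-Type": "application/json"},
        method="POST",
    )
```

Sending it is then a plain `urllib.request.urlopen(puzzle_request("some-puzzle"))` against the real endpoint.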

I really love freelancing, and I'm getting a few projects here and there, maybe once every few months. I'm currently studying, so those few projects do reward me financially a bit. Is anyone here freelancing? Do you have any tips for getting more projects? My projects are usually full Django applications and deployment, web scraping scripts, WordPress sites (yeah, fml, but some people want WordPress), and full websites with various other CMSs.

me: thinking about scraping for a webapp

Random Guy: walks past me staring me dead in the eyes.

Me, out loud: "how do i scrape"

After all this time I’m still confused: why was Cambridge Analytica such a huge deal? I feel like a lot of people knew in years prior that Facebook/Google were scraping user data and activity to build personal profiles and hence more targeted ad placement. Stuff like Ghostery, Privacy Badger, Disconnect, AdNauseam (RIP its Chrome plug-in), etc. all focused on not allowing these same trackers to get information, so it's not like this case magically busted the doors wide open, screaming that all the websites you visited are now in Facebook’s database and no one knew.

I just can’t quite understand why everyone got up in arms after this.

Do you prefer audiobooks? Are you an active Medium reader? Do you want audio for the Medium articles you read? Are you out of free Medium articles?😢 My Scrapy is here to the rescue.💸

This is a simple application of web scraping: it scrapes Medium articles and lets you read or listen to them. If you use it on a computer, there are several accents to choose from.

The audio feature is provided only to premium Medium users, so here comes My Scrapy to save you $5/month. 💸

.

Tech Stack used :

Python, Beautiful Soup, Django, speech synthesis

PS: This application was built for educational purposes.

Fun fact: You can still read any Medium article when it asks you to upgrade. Wondering how? Copy the article's link and open it in incognito mode in any browser, or sign out and read it.😂🤣

GitHub link:

https://github.com/globefire/...

demo link:

https://youtube.com/watch/...

instagram link:

https://instagram.com/p/...

Today I wrote my first small python application as an exercise:

Scraping all past EuroJackpot draws from a website, saving them in a database, sorting them, running some checks and generating some combinations. Everything quite clean in classes and functions.

And the "application" is just 100 lines long. I love how much can be accomplished with just a few lines.
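The "checks and combinations" part of an exercise like this fits in a few lines of standard-library Python. A minimal sketch, assuming each draw has already been loaded from the database as a list of main numbers; the functions are hypothetical examples of such checks, not the author's code.

```python
from collections import Counter
from itertools import combinations


def most_common_numbers(draws: list[list[int]], top: int = 5) -> list[int]:
    """Return the `top` most frequently drawn numbers (ties broken by the smaller number)."""
    counts = Counter(n for draw in draws for n in draw)
    ranked = sorted(counts, key=lambda n: (-counts[n], n))
    return ranked[:top]


def candidate_pairs(numbers: list[int]) -> list[tuple[int, int]]:
    """All unordered pairs from the given numbers, in sorted order."""
    return list(combinations(sorted(numbers), 2))
```

For example, `candidate_pairs(most_common_numbers(draws))` lists every pair among the five most frequent numbers.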

OK so here's that App I wrote for scraping recently added Prime Videos info...

It's really pre-alpha and there are lots of things to clean up, but... it works... for me...

https://github.com/allanx2000/...

You need to relink some of the references... You can download the DLLs here. I haven't cleaned it up yet, and it doesn't need EntityFramework.

https://github.com/allanx2000/...

Now why am I posting the source code, you ask?!!! Well, you see, writing an app that tells me which new movies were added so I can add them to my watchlist is honestly a poor investment...

I probably invested 10 hrs writing it, and all it does is add more movies to my watchlist. Watching these movies, even at 2x speed, still takes 1 hour...

I could/should be doing better things...

A few years ago, if someone had mentioned "JavaScript" and "backend" in one sentence, I would have burst out laughing. With some quick internet scraping, I see it's becoming a reality. Quo vadis, orbis?

Can someone give me any ideas on sites that have a lot of textual data worth scraping in mass quantities? I'm trying to scratch a few itches.

My current ideas are scraping Amazon, Indeed, and Twitter. But I'd like to scrape more, and maybe not so many FAANG-related companies.

I just finished up a blog post on using debugging tools and Python to download HLS videos:

https://battlepenguin.com/tech/...

Is there a way to dynamically change your IP address while scraping a website so that you don't get blocked constantly?

I have to download 500 images from Goodreads to help a friend out. I thought I'd use this opportunity to learn about web scraping rather than downloading the images by hand, which would be a plain and long waste of time. I've got a list of books and author names; the process I want to automate is putting the book name and author name into the search bar, clicking search, and downloading the first image that appears on the new webpage. I'm planning to use Selenium, BeautifulSoup and requests for this project. Is that the right way to go?

I am actually proud of my achievement: a scraping download robot with decent logging, a structured setup file, a small auto-login for password-protected areas, and fancy CLI options. Because I'm the only one on this sofa who can do this. :) This day has been kind to me.

Just a legal question here.

Is web scraping legal in USA? I am asking here for the sole reason that I am sure that someone might have developed projects with web scraping.

I've heard that Walmart does it a lot.

I hate fucking Upwork for having so many fucking scrapers

Most of the jobs are fucking scraping-related

Looks like it's the only useful thing they can do for their projects or advertisements...

This is a question with a bit of backstory. Bear with me.

Firstly, I’m back (again😂), now pursuing my software engineer goal at a university.

I had a group project course this spring, and my group and I produced a kinda half-assed product that could help sports teams, for a customer at the university (won’t go into details). After the course ended I couldn’t go home to northern Sweden and stayed in my student accommodation for the summer. So I took the chance and offered to continue working on the product this summer to make it more usable and functional, and they hired me (first real dev job!🥰)! Now, when I look into the parts of the code that I did not write (our team communication was bad), I realize I don’t fully understand how they work, and therefore I feel it’s better for me (also to learn more) to rewrite some parts my old group produced, and to actually make them easier to improve. Now, finally, the question: how do you feel about taking on a product, scrapping some parts to rewrite them, and (from your perspective) improving them?

How do you write tests for a scraping script, given that the website's DOM may change at any time, so testing against the live site isn't an option?
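A common answer is to test the parsing logic against a saved snapshot (fixture) of the page instead of the live site, and to keep the network fetch as a thin, separate layer. A minimal Python sketch with `unittest` and `html.parser`; the page structure, class names, and fixture location are hypothetical, and the parser assumes each target element has exactly one class value.

```python
import unittest
from html.parser import HTMLParser
from typing import Optional

# A saved copy of the page, trimmed to what the scraper needs. In practice this
# would live in a file such as tests/fixtures/product.html (hypothetical path).
FIXTURE_HTML = """
<html><body>
  <h1 class="title">Blue Widget</h1>
  <span class="price">19.99</span>
</body></html>
"""


class ProductParser(HTMLParser):
    """Example scraper logic under test: grab the title and price by class name."""

    def __init__(self) -> None:
        super().__init__()
        self.fields: dict[str, str] = {}
        self._current: Optional[str] = None

    def handle_starttag(self, tag, attrs):
        cls = dict(attrs).get("class")
        if cls in ("title", "price"):
            self._current = cls

    def handle_data(self, data):
        if self._current and data.strip():
            self.fields[self._current] = data.strip()
            self._current = None


def parse_product(html: str) -> dict[str, str]:
    parser = ProductParser()
    parser.feed(html)
    return parser.fields


class ParseProductTest(unittest.TestCase):
    def test_fixture(self):
        self.assertEqual(parse_product(FIXTURE_HTML), {"title": "Blue Widget", "price": "19.99"})

    def test_missing_price_is_detected(self):
        # Simulates a DOM change: the parser must not invent a price
        self.assertNotIn("price", parse_product("<h1 class='title'>X</h1>"))
```

Run it with `python -m unittest`. When the real site changes, you refresh the fixture from a fresh download and the failing test tells you exactly which field broke; a separate, occasional smoke test against the live site can catch that drift.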

Trying to optimize web scraping has been one of my greatest failures in life. 4 hours later, all my data is finally gathered. :(
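Scraping is usually network-bound, so the biggest speedup often comes from overlapping the waits on many requests rather than from optimizing the parsing. A minimal Python sketch using a bounded `ThreadPoolExecutor`; `fetch` stands for whatever single-page download function you already have (hypothetical here), and `workers` should stay small so the target site isn't hammered.

```python
from concurrent.futures import ThreadPoolExecutor
from typing import Callable


def fetch_all(urls: list[str], fetch: Callable[[str], str], workers: int = 8) -> dict[str, str]:
    """Fetch many URLs concurrently with a bounded thread pool.

    Threads spend most of their time waiting on responses, so a handful of them
    can overlap those waits. Keep `workers` modest to stay polite to the site.
    """
    with ThreadPoolExecutor(max_workers=workers) as pool:
        # pool.map preserves input order, so zip pairs each URL with its own result
        return dict(zip(urls, pool.map(fetch, urls)))
```

If `fetch` raises for one URL, the exception resurfaces while the results are collected, so wrap it with your own retry or error handling if partial results matter.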

Are you out of free Medium articles?😢 My Scrapy is here to the rescue.💸

This is a simple application of web scraping: it scrapes Medium articles and lets you read or listen to them. If you use it on a computer, there are several accents to choose from.

The audio feature is provided only to premium Medium users, so here comes My Scrapy to save you $5/month. 💸

.

Tech Stack used :

Python, Beautiful Soup, Django, speech synthesis

.

PS: This application was built for educational purposes, and its source code is not open-sourced anywhere.

Fun fact: You can still read any Medium article when it asks you to upgrade. Wondering how? Copy the article's link and open it in incognito mode in any browser.😂🤣

Try the app and lemme know if you like it:

https://mymediumscraper.herokuapp.com/...

Webscraper progress delayed for several weeks due to tLinkType and tWildCard. Apparently it's spelled tWildcard.

So, noob question: is automated web scraping a thing? What would you do if you wanted to grab the same information off similar sites and store it in a table that can be manipulated later? All you would have to do is enter the website link after you finished coding it. I've used Chrome web scraping extensions but want a more automated solution.

// Rant 1

---

I'm literally laughing and crying rn

I tried to deploy a backend on AWS Fargate for the first time. Never used Fargate until now.

After several days of brainwrecking trial and error

After fucking around to find out

After multiple failures to deploy the backend app on AWS Fargate

After multiple rounds of deleting the whole infrastructure and redoing everything again

After trying to create the infrastructure through Terraform, where 60% of it worked but the remaining parts failed

After then scrapping Terraform and doing everything manually via the AWS UI dashboard, because I'm that desperate now and just want to see my fucking backend work on AWS, and I don't care how it gets done anymore

I have finally deployed the backend, successfully

I am still unsure what the fuck is going on. I followed an article. Basically, I deployed the backend using:

- RDS

- ECS

- ECR

- VPC

- ALB

You may wonder: am I fucking retarded to fail this hard at just deploying a backend to AWS?

No. It's much deeper than you think. I deployed it the way a real-world, production-ready app would be deployed.

- VPC with 2 public and 2 private subnets. Private subnets used only for RDS. Public for ALB.

- Everything is very well done and secure. 3 security groups: 1 for the ALB (port 80), 1 for Fargate (port 8080, the one the backend is running on), 1 for RDS Postgres (port 5432). Each one stacked on top of the previous and chained

- custom domain name + SSL certificate so I can have a clean URL for the fully working backend, such as https://api.shitstain.com

- custom ECS cluster

- custom target groups

- task definitions

Etc.

Right now I'm unsure how all of this is glued together. I have no idea why this works and why my backend is secure and reachable. Well, I do know to some extent, but not everything.

To know everything, I'll now ask some dumbass questions:

1. What is ECS used for?

2. What is a task definition and why do I need it?

3. What does Fargate do exactly? As far as I understood, it's an on-demand use of a backend. Almost like a serverless backend? Like I get billed only when the backend is used by someone?

4. What is a target group and why do I need it?

5. I've read somewhere there's a difference between using Fargate and... ECS (or is it something else)? What's the difference?

Everything else I understand well enough.

In the meantime, I'll start analyzing, researching and deeply understanding what happened here and why this works. I'll also turn all of this into Terraform. I'll also build a custom GitLab CI/CD pipeline to automate all of this shit and deploy to the Fargate prod app.

// Rant 2

---

I'm pissing and shitting a lot today. I piss so much and I only drink coffee. But the bigger problem is I can barely manage to hold my piss. It feels like I need to piss ASAP or I'm gonna piss myself. I used to be able to easily hold it for hours; now I can barely do it for seconds. While I was sleeping with my gf @retoor, I woke up pissing on myself in her bed right next to her! The heavy warmth of my piss woke me up. It was so embarrassing. But she was hardcore sleeping and didn't notice. I immediately got out of bed to take a shower like the walking dead. I thought I was dreaming. I was half conscious and could barely see, only to find out it wasn't a dream and I really did piss on myself in her bed! What the fuck! What's next, uncontrollably shitting on her bed while sleeping?! Hopefully I didn't get some infection. I feel healthy. But maybe all of this is one giant dream I'm having and all of you are not real

I have a platform idea, I need feedback

Problem statement: it’s hard to find researchers in a specific area, which discourages students from even starting to look for research opportunities. The reason is that people often look only within their own academic circle, and the resources available there are simply not enough.

Solution: by scraping Google Scholar, generate detailed sub-area tags for each professor, and build a search system over them that displays a researcher's most important works and what they are working on recently. If possible, invite researchers to use the platform to add tags for the traits they are looking for in students.

I have quite polarized feedback right now: one side says the sub-area tagging is really useful and the academic circle is a real problem; the other says this is completely useless.

Please let me know what you think.

I've been trying to scrape a site for a long time now. It's too frustrating to see how Google is detecting the bot faster than before. If you have any hacks, please share.

Using Python for it.

Helo everyone , I am planning on learning some new tools and languages side by side working on this project which would be an application for creating or managing some lists for any tasks or some web series and categorize them as on going or completed or planning for now and . I want to web scrap information about that task with some pictures and text information whenever you add an entry to make it catchy and informative.

For example if I add any series name like FRIENDS and label it as on going so to make that entry not look boring my app will add some pictures and texts from google using web scraping.

So which languages and tools would be helpful for developing such an application?

Thank you.

PS: I am pursuing an undergraduate degree in computer science engineering.

I know the basics of Python and C++, some data structures, and the basics of MySQL databases.

I am planning to start web or Android development by learning Kotlin or JavaScript.

-

Yo, DevRat! Python is basically the rockstar of programming languages. Here's why it's so dope:

1. **Readability Rules**: Python's code is like super neat handwriting; you don't need a decoder ring. Forget those curly braces and semicolons – Python uses indents to keep things tidy.

2. **Zen Vibes**: Python has its own philosophy called "The Zen of Python." It's like Python's personal horoscope, telling you to keep it simple and readable. Can't argue with cosmic coding wisdom, right?

3. **Tools Galore**: Python's got this massive toolbox with tools for everything – web scraping, AI, web development, you name it. It's like a programming Swiss Army knife.

4. **Party with the Community**: Python peeps are like the coolest party crew. Stuck on a problem? Hit up Stack Overflow. Wanna hang out? GitHub's where it's at. PyCon? It's like the Woodstock of coding, man!

5. **All-in-One Language**: Python isn't a one-trick pony. You can code websites, automate stuff, do data science, make games, and even boss around robots. Talk about versatility!

6. **Learn It in Your Sleep**: Python's like that subject in school that's just a breeze. It's beginner-friendly, but it also scales up for the big stuff.
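To make point 1 concrete, here is a tiny illustrative function (the name `classify` is just an example) where indentation alone marks where each block starts and ends, with no braces or semicolons. For point 2, typing `import this` in a Python interpreter prints The Zen of Python.

```python
def classify(numbers):
    """Split numbers into evens and odds; indentation delimits every block."""
    evens, odds = [], []
    for n in numbers:
        if n % 2 == 0:
            evens.append(n)
        else:
            odds.append(n)
    return evens, odds


print(classify([1, 2, 3, 4, 5]))  # ([2, 4], [1, 3, 5])
```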

So, DevRat, Python's the way to go – it's like the coolest buddy in the coding world. Time to rock and code! 🚀🐍💻

-

Hi there! So I am one of those guys who started learning to code, applied for a couple of jobs and didn't succeed, then spent almost a year doing nothing, but I am kinda happy with it. I want to jump back into coding and am thinking about learning Python. I started with scraping (web scraping, reading blogs and articles from big websites like https://www.dataquest.io/, https://www.scrapingbee.com/, and https://finddatalab.com/; they help me a lot), and of course YouTube is even better, I think, because of the visuals. I wanted to ask: which people, articles, or blogs do you read, listen to, or watch? Can you give a short description of some famous influencers in this area, like who gives better explanations of specific terms? I'd be very thankful!

-

So one day I had an idea of making an HN client in the terminal using Go. When I tried it, I got stuck at the scraping part (the very first part of this project). The scraping works, but it has one problem: the first submission's data is duped (duplicated) with the last submission's data. That problem is why I ended this (potential) project. The more I tried to fix it, the more insane I got, and that shit is still there, never fixed. So I thought "fuck this shit" and removed the username part and the points part of the data. Eventually I ended the project.

-

Should I switch to Chrome Headless/Puppeteer for webpage header+footer scraping or stick with Express+Cheerio?

-

Fuck spam, email harvesters and fuck moderators too.

I got tired of getting spam in my inbox, sent to an email address that I published on my website.

The bots and email harvesters were scraping / harvesting my email address from my website and sending me tons of unwanted spam.

I decided to create a free tool that protects people's email addresses behind a form captcha, so that it knows the person reading it is indeed human and not a bot or spammer.
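For comparison, a much simpler (and weaker) trick than a captcha gate is to HTML-encode the address so it never appears as plain `name@domain` text in the page source. This sketch is not how Veilmail.io works (its implementation isn't described here); it only illustrates the general idea. Harvesters that decode HTML entities will still find the address, which is exactly why a captcha is the stronger approach.

```python
def obfuscate_email(address):
    """Encode every character as an HTML decimal character reference.

    Browsers render the result as normal text, but naive harvesters that
    grep the raw HTML for "name@domain" patterns won't match it.
    """
    return "".join(f"&#{ord(ch)};" for ch in address)


def mailto_link(address):
    """Build an <a> tag whose href and visible text are both entity-encoded."""
    encoded = obfuscate_email(address)
    # "mailto:" is encoded too, so the raw href contains no plain address.
    return f'<a href="{obfuscate_email("mailto:")}{encoded}">{encoded}</a>'


print(obfuscate_email("a@b.c"))  # &#97;&#64;&#98;&#46;&#99;
```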

I decided to post to Reddit to get the word out, and the post got flagged. Really? What gives?

It's a free tool to stop spam, for chrissakes. I am not trying to make money.

Anyhoo, I will post the link here. Hope you guys and gals are friendlier and will share the link.

The link is Veilmail.io (can someone post the link, please?)

RANT OVER

-

Has anyone seen a good use case for robotic process automation (RPA)? It seems to me like a new buzzword for '90s screen-scraping technologies?!

-

Hi guys!

Is anyone here working in deployment or operations, i.e. the people who set up build pipelines and CI/CD? My question for you is: what motivated you to move away from development (or start out in operations)? What base knowledge did you have that helped you succeed in this field? Do you regret making the switch to operations?

I'm at the start of my career and have been doing development for about a year and a half, but my heart is not really in it. I like setting up tools, learning their capabilities, and writing scripts to automate things a lot more than figuring out the client's twisted requirements and then scraping together a solution for them.

-

Recently my teachers have started hassling us to put our ‘better’ projects on GitHub as a ‘sorta’ portfolio.

I have a simple C# script I wrote for a class assignment many months ago.

Inside that script I call an exe, built with PyInstaller from a simple Python program, that grabs info from the web related to the script’s purpose. I did it just to see how PyInstaller and web scraping work.
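A C# program calling a PyInstaller-built exe is easiest to maintain when the two sides share a plain contract, such as "arguments in, JSON on stdout out". This is a minimal sketch of what the Python side could look like under that assumption. The argument format, the JSON shape, and extracting the page `<title>` are all hypothetical stand-ins for whatever the real program grabs. The same contract works whether both parts live in one repository or two.

```python
import json
import sys
import urllib.request
from html.parser import HTMLParser


class TitleParser(HTMLParser):
    """Collects the text inside the page's <title> element."""

    def __init__(self):
        super().__init__()
        self._in_title = False
        self.title = ""

    def handle_starttag(self, tag, attrs):
        if tag == "title":
            self._in_title = True

    def handle_endtag(self, tag):
        if tag == "title":
            self._in_title = False

    def handle_data(self, data):
        if self._in_title:
            self.title += data


def extract(html_text):
    """Pull the (hypothetical) info of interest out of one HTML page."""
    parser = TitleParser()
    parser.feed(html_text)
    return {"title": parser.title.strip()}


def main(urls):
    """Fetch each URL and print one JSON object per line for the C# caller."""
    for url in urls:
        with urllib.request.urlopen(url, timeout=10) as resp:
            html_text = resp.read().decode("utf-8", errors="replace")
        print(json.dumps({"url": url, **extract(html_text)}))


if __name__ == "__main__":
    # With no arguments this does nothing; the C# side would pass URLs.
    main(sys.argv[1:])
```

On the C# side, the script would read the process's standard output line by line and deserialize each JSON object.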

If I put this on GitHub, should it be two separate repositories, or one with the Python stuff in its own folder???

Thank you in advance