
Search - "parsing"

-

Got this from a recruiter:

We are looking for a **Senior Android Developer/Lead** at Philadelphia PA

Hiring Mode: Contract

Must have skills:

· 10-12 years mobile experience in developing Android applications

· Solid understanding of Android SDK on frameworks such as: UIKit, CoreData, CoreFoundation, Network Programming, etc.

· Good Knowledge on REST Ful API and JSON Parsing

· Good knowledge on multi-threaded environment and grand central dispatch

· Advanced object-oriented programming and knowledge of design patterns

· Ability to write clean, well-documented, object-oriented code

· Ability to work independently

· Experience with Agile Driven Development

· Up to date with the latest mobile technology and development trends

· Passion for software development- embracing every challenge with a drive to solve it

· Engaging communication skills

My response:

I am terribly sorry but I am completely not interested in working for anyone who might think that this is a job description for an Android engineer.

1. Android was released in September 2008, so anyone with 10 years of experience by now would have to be a Google engineer.

2. UIKit, CoreData, CoreFoundation are all iOS frameworks

3. Grand Central Dispatch is an iOS mechanism for multithreading and is not in Android

4. There are JSON parsing frameworks, no one does that by hand anymore

Please delete me from your emailing list.

-

Oh, man, I just realized I haven't ranted one of my best stories on here!

So, here goes!

A few years back the company I work for was contacted by an older client regarding a new project.

The guy was now pitching to build the website for the Parliament of another country (not gonna name it, NDAs and stuff), and was planning on outsourcing the development, as he had no team and he was only aiming on taking care of the client service/project management side of the project.

Out of principle (and also to preserve our mental integrity), we had purposely avoided working with government bodies of any kind, in any country, but he was a friend of our CEO and pleaded until we signed on.

Now, the project itself was way bigger than we expected, as they wanted more of an internal CRM, centralized document archive, event management, internal planning, multi-interface, role-based, access-restricted monster of an administration interface, complete with a regular user website, also packed with all kinds of features, dashboards and so on.

Long story short, a lot bigger than what we were expecting based on the initial brief.

The development period was hell. New features were coming in on a weekly basis. Already implemented functionality was constantly being changed or redefined. No requests we ever made about clarifications and/or materials or information were ever answered on time.

They also somehow bullied the guy that brought us the project into also including the data migration from the old website into the new one we were building and we somehow ended up having to extract meaningful, formatted, sanitized content parsing static HTML files and connecting them to download-able files (almost every page in the old website had files available to download) we needed to also include in a sane way.

Now, don't think the files were simple URL paths we can trace to a folder/file path, oh no!!! The links were some form of hash combination that had to be exploded and tested against some kind of database relationship tables that only had hashed indexes relating to other tables, that also only had hashed indexes relating to some other tables that kept a database of the website pages HTML file naming. So what we had to do is identify the files based on a combination of hashed indexes and re-hashed HTML file names that in the end would give us a filename for a real file that we had to then search for inside a list of over 20 folders not related to one another.

So we did this. Created a script that processed the hell out of over 10000 HTML files, database entries and files and re-indexed and re-named all this shit into a meaningful database of sane data and well organized files.

So, with this we were nearing the finish line for the project, which by now had exceeded the estimated time by over two times.

We test everything, retest it all again for good measure, pack everything up for deployment, simulate on a staging environment, give the final client access to the staging version, get them to accept that all requirements are met, finish writing the documentation for the codebase, write detailed deployment procedure, include some automation and testing tools also for good measure, recommend production setup, hardware specs, software versions, server side optimization like caching, load balancing and all that we could think would ever be useful, all with more documentation and instructions.

As the project was built on PHP/MySQL (as requested), we recommended a Linux environment for production. Oh, I forgot to tell you that over the development period they kept asking us to also include steps for Windows procedures along with our regular documentation. Was a bit strange, but we added it in there just so we can finish and close the damn project.

So, we send them all the above and go get drunk as fuck in celebration of getting rid of them once and for all...

Next day: hung over, I get to the office, open my laptop and see one new email. I only had the one new mail, so I open it to see what it's about.

Lo and behold! The fuckers over in the other country that called themselves "IT guys", and were the ones making all the changes and additions to our requirements, were not capable enough to follow step by step instructions in order to deploy the project on their servers!!!

[Continues in the comments]

-

Dev: what do I call this file ?

Me: just name it something meaningful so other dev's know what it is

Two days pass

Me: time to do code review .. oh look a new file ..

Git comment : new file for sax parsing , architecture gave the ok.

File name : SomethingMeaningful.java

-

Started being a Teaching Assistant for Intro to Programming at the uni I study at a while ago and, although it's not entirely my piece of cake, here are some "highlights":

* students were asked to use functions, so someone was ingenious (laughed my ass off for this one):

def all_lines(input):
    all_lines = input
    return all_lines

* "you need to use functions" part 2

*moves the whole code from main to a function*

* for Math-related coding assignments, someone was always reading the input as a string and parsing it, instead of reading it as numbers, and was incredibly surprised that he can do the latter "I always thought you can't read numbers! Technology has gone so far!"

* for an assignment requiring a class with 3 private variables, someone actually declared each variable needed as a vector and was handling all these 3 vectors as 3D matrices

* because the lecturer specified that the length of the program does not matter, as long as it does its job and is well-written, someone wrote a 100-lines program on one single line

* someone was spamming me with emails to tell me that the grade I gave them was unfair (on the reason that it was directly crashing when run), because it was running on their machine (they included pictures), but was not running on mine, because "my Python version was expired". They sent at least 20 emails in less than 2h

* "But if it works, why do I still have to make it look better and more understandable?"

* "can't we assume the input is always going to be correct? Who'd want to type in garbage?"

* *writes 10 if-statements that could be basically replaced by one for-loop*

"okay, here, you can use a for-loop"

*writes the for loop, includes all the if-statements from before, one for each of the 10 values the for-loop variable gets*

* this picture

N.B.: depending on how many others I remember, I may include them in the comments afterwards

-

this.title = "gg Microsoft"

this.metadata = {
    rant: true,
    long: true,
    super_long: true,
    has_summary: true
}

// Also:

let microsoft = "dead" // please?

tl;dr: Windows' MAX_PATH is the devil, and it basically does not allow you to copy files with paths that exceed this length. No matter what. Even with official fixes and workarounds.

Long story:

So, I haven't had actual gainful employ in quite awhile. I've been earning just enough to get behind on bills and go without all but basic groceries. Because of this, our electronics have been ... in need of upgrading for quite awhile. In particular, we've needed new drives. (We've been down a server for two years now because its drive died!)

Anyway, I originally bought my external drive just for backup, but due to the above, I eventually began using it for everyday things. including Steam. over USB. Terrible, right? So, I decided to mount it as an internal drive to lower the read/write times. Finding SATA cables was difficult, the motherboard's SATA plugs are in a terrible spot, and my tiny case (and 2yo) made everything soo much worse. It was a miserable experience, but I finally got it installed.

However! It turns out the Seagate external drives use some custom drive header, or custom driver to access the drive, so Windows couldn't read the bare drive. ffs. So, I took it out again (joy) and put it back in the enclosure, and began copying the files off.

The drive I'm copying it to is smaller, so I enabled compression to allow storing a bit more of the data, and excluded a couple of directories so I could copy those elsewhere. I (barely) managed to fit everything with some pretty tight shuffling.

but. that external drive is connected via USB, remember? and for some reason, even over USB3, I was only getting ~20mb/s transfer rate, so the process took 20some hours! In the interim, I worked on some projects, watched netflix, etc., then locked my computer, and went to bed. (I also made sure to turn my monitors and keyboard light off so it wouldn't be enticing to my 2yo.) Cue dramatic music ~

Come morning, I go to check on the progress... and find that the computer is off! What the hell! I turn it on and check the logs... and found that it lost power around 9:16am. aslkjdfhaslkjashdasfjhasd. My 2yo had apparently been playing with the power strip and its enticing glowing red on/off switch. So. It didn't finish copying.

aslkjdfhaslkjashdasfjhasd x2

Anyway, finding the missing files was easy, but what about any that didn't finish? Filesizes don't match, so writing a script to check doesn't work. and using a visual utility like windirstat won't work either because of the excluded folders. Friggin' hell.

Also -- and rather the point of this rant:

It turns out that some of the files (70 in total, as I eventually found out) have paths exceeding Windows' MAX_PATH length (260 chars). So I couldn't copy those.

After some research, I learned that there's a Microsoft hotfix that patches this specific issue! for my specific version! woo! It's like. totally perfect. So, I installed that, restarted as per its wishes... tried again (via both drag and `copy`)... and Lo! It did not work.

After installing the hotfix. to fix this specific issue. on my specific os. the issue remained. gg Microsoft?

Further research.

I then learned (well, learned more about) the unicode path prefix `\\?\`, which tells the Win32 API to skip its usual path parsing and pass the path more or less straight through to the file system, thereby indirectly allowing ~32k path lengths. I tried this with the native `copy` command; no luck. I tried this with `robocopy` and cygwin's `cp`; they likewise failed. I tried it with cygwin's `rsync`, but it sees `\\?\` as denoting a remote path, and therefore fails.

However, `dir \\?\C:\` works just fine?

So, apparently, Microsoft's own workaround for long pathnames doesn't work with its own utilities. unless the paths are shorter than MAX_PATH? gg Microsoft.
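For anyone wanting to poke at the prefix themselves, here is a minimal sketch of building an extended-length path string. `to_extended_length` is a made-up helper name, it assumes the caller already has an absolute path, and it says nothing about whether a given tool will actually honor the result (which is rather the point of the rant). The path is normalized first because paths carrying the prefix are not normalized by the Win32 API, so forward slashes and `..` segments have to be cleaned up beforehand.

```python
import ntpath

# The literal four characters \\?\ that mark an extended-length path.
EXTENDED_PREFIX = "\\\\?\\"


def to_extended_length(path: str) -> str:
    """Return `path` with the extended-length prefix added.

    Hypothetical helper: assumes `path` is an absolute Windows path,
    either drive-based (C:\\...) or UNC (\\\\server\\share\\...).
    """
    if path.startswith(EXTENDED_PREFIX):
        return path  # already prefixed, leave untouched

    # Collapse "..", ".", and forward slashes up front, because
    # prefixed paths are passed along without normalization.
    normalized = ntpath.normpath(path)

    if normalized.startswith("\\\\"):
        # UNC paths use the form \\?\UNC\server\share\...
        return EXTENDED_PREFIX + "UNC\\" + normalized[2:]
    return EXTENDED_PREFIX + normalized


print(to_extended_length("C:/Users/me/../me/file.txt"))
print(to_extended_length("\\\\server\\share\\dir\\file.txt"))
```

`ntpath` is used instead of `os.path` so the string handling behaves the same on any OS; no file system calls are made.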

At this point, I was sorely tempted to write my own copy utility that calls the internal Windows APIs that support unicode paths. but as I lack a C compiler, and haven't coded in C in like 15 years, I figured I'd try a few last desperate ideas first.

For the hell of it, I tried making an archive of the offending files with winRAR. Unsurprisingly, it failed to access the files.

... and for completeness's sake -- mostly to say I tried it -- I did the same with 7zip. I took one of the offending files and made a 7z archive of it in the destination folder -- and, much to my surprise, it worked perfectly! I could even extract the file! Hell, I could even work with paths >340 characters!

So... I'm going through all of the 70 missing files and copying them. with 7zip. because it's the only bloody thing that works. ffs

Third-party utilities work better than Microsoft's official fixes. gg.

...

On a related note, I totally feel like that person from http://xkcd.com/763 right now ;;

-

PineScript is absolute garbage.

It's TradingView's scripting language. It works, but it's worse than any language I have ever seen for shoddy parsing. Its naming conventions are pretty terrible, too:

transparency? no, "transp"

sum? no, cum. seriously. cum(array) is its "cumulative sum."

There are other terrible names, but the parser is what really pisses me off.

1) If you break up a long line for readability (e.g. a chained ternary), each fragment needs to be indented by more than its parent... but never by a multiple of 4 spaces because then it isn't a fragment anymore, but its own statement.

2) line fragments also cannot end in comments because comments are considered to be separate lines.

3) Lambdas can only be global. They're just fancy function declarations. Someone really liked the "blah(x,y,z) =>" syntax

4) blocks to `if`s must be on separate lines, meaning `if (x) y:=z` is illegal. And no, there are no curly braces, only whitespace.

There are plenty more, but the one that really got me furious is:

98) You cannot call `plot()`, `plotshape()`, etc. if they're indented! So if you're using non-trivial logic to optionally plot things like indicators, fuck you.

Whoever wrote this language and/or parser needs to commit seppuku.

-

I'm convinced code addiction is a real problem and can lead to mental illness.

Dev: "Thanks for helping me with the splunk API. Already spent two weeks and was spinning my wheels."

Me: "I sent you the example over a month ago, I guess you could have used it to save time."

Dev: "I didn't understand it. I tried getting help from NetworkAdmin-Dan, SystemAdmin-Jake, they didn't understand what you sent me either."

Me: "I thought it was pretty simple. Pass it a query, get results back. That's it"

Dev: "The results were not in a standard JSON format. I was so confused."

Me: "Yea, it's sort-of JSON. Splunk streams the result as individual JSON records. You only have to deserialize each record into your object. I sent you the code sample."

Dev: "Your code didn't work. Dan and Jake were confused too. The data I have to process uses a very different result set. I guess I could have used it if you wrote the class more generically and had unit tests."

<oh frack...he's been going behind my back and telling people smack about my code again>

Me: "My code wouldn't have worked for you, because I'm serializing the objects I need and I do have unit tests, but they are only for the internal logic."

Dev:"I don't know, it confused me. Once I figured out the JSON problem and wrote unit tests, I really started to make progress. I used a tuple for this ... functional parameters for that...added a custom event for ... Took me a few weeks, but it's all covered by unit tests."

Me: "Wow. The way you explained the project was; get data from splunk and populate data in SQLServer. With the code I sent you, sounded like a 15 minute project."

Dev: "Oooh nooo...its waaay more complicated than that. I have this very complex splunk query, which I don't understand, and then I have to perform all this parsing, update a database...which I have no idea how it works. Its really...really complicated."

Me: "The splunk query returns what..4 fields...and DBA-Joe provided the upsert stored procedure..sounds like a 15 minute project."

Dev: "Maybe for you...we're all not super geniuses that crank out code. I hope to be at your level some day."

<frack you ... condescending a-hole ...you've got the same seniority here as I do>

Me: "No seriously, the code I sent would have got you 90% done. Write your deserializer for those 4 fields, execute the stored procedure, and call it a day. I don't think the effort justifies the outcome. Isn't the data for a report they'll only run every few months?"

Dev: "Yea, but Mgr-Nick wanted unit tests and I have to follow orders. I tried to explain the situation, but you know how he is."

<fracking liar..Nick doesn't know the difference between a unit test and breathalyzer test. I know exactly what you told Nick>

Dev: "Thanks again for your help. Gotta get back to it. I put a due date of April for this project and time's running out."

APRIL?!! Good Lord he's going to drag this intern-level project for another month!
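For reference, the "deserialize each record into your object" step mentioned above can be sketched in a few lines. This is not the code from the rant and not Splunk's actual response format: it assumes one JSON object per line, and the `LoginEvent` class and its four field names are invented for illustration.

```python
import json
from dataclasses import dataclass
from typing import List


@dataclass
class LoginEvent:
    """Hypothetical 4-field record; the real field names are unknown."""
    host: str
    user: str
    status: str
    count: int


def parse_records(stream_text: str) -> List[LoginEvent]:
    """Parse a stream of JSON records, assumed to be one object per line."""
    events = []
    for line in stream_text.splitlines():
        line = line.strip()
        if not line:
            continue  # tolerate blank lines between records
        record = json.loads(line)
        events.append(
            LoginEvent(
                host=record["host"],
                user=record["user"],
                status=record["status"],
                count=int(record["count"]),
            )
        )
    return events


sample = (
    '{"host": "web01", "user": "alice", "status": "ok", "count": "3"}\n'
    '{"host": "web02", "user": "bob", "status": "fail", "count": "1"}\n'
)
for event in parse_records(sample):
    print(event)
```

Each parsed object would then be handed to whatever performs the database upsert.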

After he left, I dug around and found the splunk query, the upsert stored proc, and yep, in about 15 minutes I was done.

-

The time my Java EE technology stack disappointed me most was when I noticed some embarrassing OutOfMemoryError in the log of a server which was already in production. When I analyzed the garbage collector logs I got really scared seeing the heap usage was constantly increasing. After some days of debugging I discovered that the terrible memory leak was caused by a bug inside one of the Java EE core libraries (Jersey Client), while parsing a stupid XML response. The library was shipped with the application server, so it couldn't be replaced (unless installing a different server). I rewrote my code using the Restlet Client API and the memory leak disappeared. What a terrible week!

-

I send a PR to your GitHub repo.

You close it without a word.

I tell you that your lib crashes because you're trying to parse JavaScript with a (bad) regex, but you keep insisting that no, there exist no problem, and even if you barely know what "parsing" means, you keep denying in front of the evidence.

Well fuck you and your shitty project. I'll keep using my fucking fork.

And if you're reading this, well, fuck you twice. Moron.

-

Wasting some time on codewars.com

~~~~

3 kyu challenge:

Given a string with mathematical operations like this: ‘3+5*7*(10-45)’, compute the result

~~~~~

*Does a quick and easy one liner in python using eval()*

*sees people actually writing some 100 lines parsing the string and calculating using priority of operation*

Poor them...
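There is a middle ground between the `eval()` one-liner and a 100-line hand-written parser: let Python's own `ast` module do the parsing and only walk the node types that arithmetic needs. The sketch below is an illustration of that approach, not anyone's actual submission; the `evaluate` name and the set of allowed operators are choices made here.

```python
import ast
import operator

# Only these operators are accepted; anything else is rejected.
_BINARY = {
    ast.Add: operator.add,
    ast.Sub: operator.sub,
    ast.Mult: operator.mul,
    ast.Div: operator.truediv,
}
_UNARY = {ast.UAdd: operator.pos, ast.USub: operator.neg}


def evaluate(expression: str):
    """Evaluate +, -, *, / and parentheses without calling eval()."""

    def walk(node):
        if isinstance(node, ast.Expression):
            return walk(node.body)
        if (
            isinstance(node, ast.Constant)
            and isinstance(node.value, (int, float))
            and not isinstance(node.value, bool)
        ):
            return node.value
        if isinstance(node, ast.BinOp) and type(node.op) in _BINARY:
            return _BINARY[type(node.op)](walk(node.left), walk(node.right))
        if isinstance(node, ast.UnaryOp) and type(node.op) in _UNARY:
            return _UNARY[type(node.op)](walk(node.operand))
        # Names, calls, attributes, etc. all end up here.
        raise ValueError("unsupported expression")

    return walk(ast.parse(expression, mode="eval"))


print(evaluate("3+5*7*(10-45)"))  # -1222
```

Operator precedence comes for free from the parser, and input such as a function call raises `ValueError` instead of being executed.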

(Btw, passed to lvl 4 kyu thx to this)

-

Imagine, you get employed to restart a software project. They tell you, but first we should get this old software running. It's 'almost finished'.

A WPF application running on a soc ... with a 10" touchscreen on win10, an embedded solution, to control a machine, which has been already sold to customers. You think, 'ok, WTF, why is this happening'?

You open the old software - it crashes immediately.

You open it again but now you are so clever to copy an xml file manually to the root folder and see all of its beauty for the first time (after waiting for the frozen GUI to become responsive):

* a static logo of the company, taking about 1/5 of the screen horizontally

* circle buttons

* and a navigation interface made in the early 90's from a child

So you click a button and - it crashes.

You restart the software.

You type something like 'abc' in a 'numberfield' - it crashes.

OK ... now you start the application again and try to navigate to another view - and? of course it crashes again.

You are excited to finally open the source code of this masterpiece.

Thank you jesus, the 'dev' who did this, didn't forget to write every business logic in the code behind of the views.

He even managed to put 6 views into one and put all their logic in the code behind!

He doesn't know what binding is or a pattern like MVVM.

But hey, there is also no validation of anything, not even checks for null.

He was so clever to use the GUI as his place to save data and there is a lot of parsing going on here, every time a value changes.

A thread must be something he never heard about - so that's why the GUI always freezes.

You tell them: It would be faster to rewrite the whole thing, because you wouldn't call it even an alpha. Nobody listens.

Time passes by, new features must be implemented in this abomination, you try to make the cripple walk and everyone keeps asking: 'When we can start the new software?' and the guy who wrote this piece of shit in the first place, tries to give you good advice in coding and is telling you again: 'It was almost finished.' *facepalm*

And you? You would like to do him and humanity a big favour by hitting him hard in the face and breaking his hands, so he can never lay a hand on any keyboard again, to produce something no one serious would ever call code.

-

Ok, rant incoming.

Dates. Frigging dates. Apparently we as a species are so bloody incompetent we cannot even decide on one format for how to write today. No, instead we have one for every language and framework, because every moron thinks they know better how to write the date. All of them equivalent and all of them different enough to make me start lactating out of frustration trying to parse this garbage... And when you finally manage to parse it on one platform it turns out that your ORM just decided to use the less common version of the date, and have fun converting one to the other. I hope that every time someone comes up with a new date format, they get hit in the face with a red hot frying pan until they give up programming in favour of growing cactuses.

-

Less a rant, more just a sad story.

Our company recently acquired its sister company, and everyone has been focused on improving and migrating their projects over to our stack.

There's a ton of material there, but this one little story summarizes the whole very accurately, I think. (Edit: two stories. I couldn't resist.)

There's a 3-reel novelty slot machine game with cards instead of the usual symbols, and winnings based on poker-like rules (straights and/or flushes, 2-3 of a kind, etc.) The machine is over a hundred times slower than the other slot machines because on every spin it runs each payline against a winnings table that exhaustively lists every winning possibility, and I really do mean exhaustively. It lists every type of win, for every card, every segment for straights, in every order, of every suit. Absolutely everything.

And this logic has been totally acceptable for just. so. long. When I saw someone complaining in dev chat about how much slower it is, i made the bloody obvious suggestion of parsing the cards and applying some minimal logic to see if it's a winning combination. Nobody cared.

Ten minutes later, someone from the original project was like "Hey, I have an idea, why don't we do it algorithmically to not have a 4k line rewards table?"

He seriously tried stealing a really bloody obvious idea -- that he hadn't had for years prior -- and passing it off as his own. In the same chat. Eight messages below mine. What a derpballoon.

I called him out on it, and he was like "Oh, is that what you meant by parsing?" 🙄

Someone else leaped in to defend the ~128x slower approach, saying: "That's the tech we had." You really didn't have a for loop and a handful of if statements? Oh wait, you did, because that's how you're checking your exhaustive list. gfj. Abysmal decisions like this are exactly why most of you got fired. (Seriously: these same people were making devops decisions. They were hemorrhaging money.)
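To make "parse the cards and apply some minimal logic" concrete, here is a rough sketch of classifying one 3-card payline. The actual game rules are not given in the rant, so the card notation (`"9H"` = nine of hearts), the hand names, and their ordering are all assumptions made for the example.

```python
from collections import Counter

RANKS = "23456789TJQKA"  # assumed rank order, ace high only


def classify(cards):
    """Classify a 3-card payline such as ["9H", "TH", "JH"].

    Hypothetical rules: each card is a rank character followed by a
    suit character; the hand names below are invented for this sketch.
    """
    ranks = sorted(RANKS.index(card[0]) for card in cards)
    suits = {card[1] for card in cards}
    most_common = max(Counter(ranks).values())

    flush = len(suits) == 1
    # Three distinct sorted ranks spanning exactly 2 are consecutive.
    straight = most_common == 1 and ranks[2] - ranks[0] == 2

    if straight and flush:
        return "straight flush"
    if most_common == 3:
        return "three of a kind"
    if straight:
        return "straight"
    if flush:
        return "flush"
    if most_common == 2:
        return "pair"
    return "nothing"


print(classify(["9H", "TH", "JH"]))
print(classify(["7C", "7D", "AS"]))
```

A payout lookup keyed on the returned hand name would then replace the exhaustive table.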

But regardless, the quality of bloody everything from that sister company is like this. One of the other fiascos involved pulling data from Facebook -- which they didn't ever even use -- and instead of failing on error/unexpected data, it just instantly repeated. So when Facebook changed permissions on friends context... you can see where this is going. Instead of their baseline of like 1400 errors per day, which is amazingly high, it spiked to EIGHTEEN BLOODY MILLION PER DAY. And they didn't even care until they noticed (like four days later) that it was killing their other online features because quite literally no other request could make it out. More reasons they got fired. I'm not even kidding: no single api request ever left the users' devices apart from the facebook checks.

So.

That's absolutely amazing.

-

When I found out my JSON didn't parse because I used single instead of double quotes after two hours
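A quick way to shorten those two hours: JSON requires double quotes around strings and keys, and the parser's error message says where it gave up. A minimal sketch using Python's standard `json` module (the `try_parse` helper name is made up here):

```python
import json


def try_parse(text):
    """Return the parsed value, or a short description of the parse error."""
    try:
        return json.loads(text)
    except json.JSONDecodeError as exc:
        return f"line {exc.lineno}, column {exc.colno}: {exc.msg}"


print(try_parse('{"name": "rant"}'))   # parses fine
print(try_parse("{'name': 'rant'}"))   # reports where parsing failed
```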

-

Interviewer : So what frameworks and library you usually use?

Me : i use volley for networking, gson for parsing, livedata/architecture components for architecture and observability , room for database and java for app development

I : ok so make this sample app using retrofit for networking, moshi for parsing, mvrx for architecture , rx for observability , sqldelight for db, dagger2 and kotlin for app dev. You have 8 hours

Me :(wtf?) But i never used those libs or language!

I : we just want to check how easily you adapt to different surroundings.

Me : -_-

Honestly i don't know if it was a great experience or a bad one. I was stressed the whole time but was able to adapt to almost all of those libraries and frameworks.

At the end i got selected but decided not to go for those ppl. That was just a lucrative opening of a venus fly trap, they would have stressed the hell out of me

-

Recently saw a piece of code left by a former coworker where he converted an int to a long, by converting it to a string, then parsing the string.

-

Wrote a rant yesterday (or recently?) in which I explained that I needed to rewrite the core of something I'm writing in order to make it more extendable/flexible/modular.

Finished the rewrite yesterday and started to write a module (it consists of modules and one can write a specific module for a specific kind of task) for another use case that has shown itself in the past few weeks.

Fun thing is that part of the core stayed the same and I hardly made changes to the libraries which the core uses a lot but the modules are, except for a few similarities (like one default invoke methods), completely different but do use the libraries to make sure they've got all functions needed to properly fulfill their task.

Ran a rule (what I call something in the project) hoping that everything works together the way it's supposed to and that the config files are interpreted well by the parsing 'engine' (pretty much switch cases and if-elses 😅).

FUCKING BAM IT FUCKING WORKS 😍

-

No comments allowed in JSON pisses me off so much.

Sure, I get all the arguments of "it's supposed to be a data-only format for machines", "there are alternatives which support comments", and "you can add comments and then minify the file before parsing"
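The third argument, stripping comments before parsing, can look roughly like the sketch below. It only handles `//` line comments, it is not part of any JSON standard or of Python's `json` module, and `strip_line_comments` is a name invented here. The one subtlety is not touching `//` inside string values such as URLs.

```python
import json


def strip_line_comments(text: str) -> str:
    """Remove // comments that sit outside JSON string literals."""
    out = []
    in_string = False
    escaped = False
    i = 0
    while i < len(text):
        ch = text[i]
        if in_string:
            out.append(ch)
            if escaped:
                escaped = False      # this character was escaped, skip checks
            elif ch == "\\":
                escaped = True       # next character is escaped
            elif ch == '"':
                in_string = False    # closing quote
            i += 1
        elif ch == '"':
            in_string = True
            out.append(ch)
            i += 1
        elif text.startswith("//", i):
            # Drop everything up to (not including) the end of the line.
            newline = text.find("\n", i)
            i = len(text) if newline == -1 else newline
        else:
            out.append(ch)
            i += 1
    return "".join(out)


config = """{
  // pinned: newer versions break the legacy build
  "left-pad": "1.3.0",
  "homepage": "https://example.com"
}"""
print(json.loads(strip_line_comments(config)))
```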

But right now, when I just need to put a quick note inside a super confusing legacy package manager config about why certain dependencies need to be frozen at a specific version, IT FUCKING PISSES ME OFF THAT I CAN'T JUST ADD A FUCKING COMMENT.

-

Lead dev: Hey boss, you really do like Python right?

Me: No

Lead dev: Well it's cuz I was think....wait what? WTF do you mean no, you have automated a fuckload of BS with Python and we are still using it, why tf would you use Python if you don't like it?

Me: I like it enough for the automation scripts that we have and for parsing documents or generating glue scripts, its already installed in every server that we have, so testing bs in dev and then using them in prod is cake, it doesn't mean I LOVE python, I like it for what we use it.

Lead dev: Well ain't already bash and perl installed as well?

Me: Do you know bash and or perl?

Lead dev: No, don't you?....

Me: No......

L Dev: (using a Jim Carrey impersonation) WELLL ALLRIGTHY THEN! What is the other language that you used for X project?

Me: Clojure, do you remember that one?

* he said paren paren paren paren yes paren i space paren do close paren close paren etc etc

L Dev: (((((((yes (i (do)))))))) and nevermind, I'll get back to working more with Python

Me: das what I fucking thought esse

-

full stack web development in 2017: mvvm model, api for backend, parsing on frontend

me: <?php echo "<div>hello".$name."</div>" ?>

-

I fucking hate it when developers don’t respect user locale. My phone language is UK English, my Discord app language is UK English and my region is UK.

Then why the fuck is Discord showing me MM/DD/YYYY date format? How hard is it to pass locale when parsing time?

-

TLDR; Wrote an awesome piece of code, but there's no one capable of understanding how hard it is.

I spent an entire night building an insanely complicated automation script, that picks out certain configuration files (in javascript fml), does some crazy parsing to pick out strings, passes them into a free translation API, translates them, and does more insane parsing to insert those strings as javascript objects.

Spent 3 hours on the bloody parsing algorithm alone.

Manager: Oh this language is really nice. Good of you to discover it can do that.

I didn't "DISCOVER" it ffs! Its a product of my head! I built the damn algo from scratch.

Seriously, screw non devs who trivialize the complexities of writing a good program. Its NOT as simple as opening notepad and typing in

import {insanelyComplexSolution} from 'daveOnTheInternet'

-

the first program ive written in my own programming language

schreibe

{

hallo welt

}

i didnt know about parsing back then, so everything was its own line

-

Do you wanna build a Program?

Come on it can be in C

Doesn't compile anymore

bugs to report

Perhaps a parsing tree

We used to be peer buddies

And now we're not

I wish you would tell me why!-

Do you wanna build a Program?

It doesn't have to be in C.

Stackoverflow, Anna

Okay, bye

-

Super excited to get my first ML project out; I've been working on this on and off for a couple of years. Now that I had some time to spare, I could get it completed :) And along the way I learned a lot of things as well :)

Let me know what you guys think.

https://github.com/Prabandham/...

https://github.com/Prabandham/...

A lot of thanks to this Repo that helped in parsing the image to string.

https://github.com/BenNG/...

Of course, PRs and suggestions are really welcome and appreciated.

-

Dear external developer dumbass from hell.

We bought your company under the assumption you had a borderline functioning product and/or dev team. Ideally both

For future reference, expect that "file path" arguments can contain backslashes and perhaps even the '.' character. It ain't that hard. Maybe try using the damn built in path parsing capabilities every halfway decent programming environment has had since before you figured out how to smash your head against the keyboard hard enough that your shitty excuse of a compiler stops arguing and gives in.

I am fixing your shit by completely removing it with one line of code calling the framework and you better not reject this.
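The rant does not say which framework or language was involved, so purely as an illustration of what "built in path parsing" buys you, here is the Python standard library version. `describe` is a made-up wrapper and the sample path is invented.

```python
from pathlib import PureWindowsPath


def describe(raw_path: str) -> dict:
    """Split a Windows-style path using the standard library only."""
    path = PureWindowsPath(raw_path)
    return {
        "folder": str(path.parent),
        "name": path.name,
        "stem": path.stem,        # file name without the last extension
        "extension": path.suffix,  # last extension, including the dot
    }


# Backslashes and dots in directory and file names are handled as-is.
print(describe(r"C:\builds\v1.2\report.final.csv"))
```

`PureWindowsPath` does no file system access, so the same parsing works on any OS.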

This is not a pull request ITS A GOD DAMN PULL COMMAND.

- Is what i would _like_ to say right now... you know if i wouldn't be promptly fired for doing so :p

How's your Friday going, guys?

-

Woke up at 5am with the realization that I could use regular expressions to parse the string representation of regular expressions to build this program to parse regular expressions into more human-readable English.

I am so tired.5 -

A dev team has been spending the past couple of weeks working on a 'generic rule engine' to validate a marketing process. The “Buy 5, get 10% off” kind of promotions.

The UI has all the great bits, drop-downs, various data lookups, etc etc..

What the dev is storing in the database is the actual string representation FieldA=“Buy 5, get 10% off” that is “built” from the UI.

Might be OK, but now they want to apply that string to an actual order. Extract ‘5’, the word ‘Buy’ to apply to the purchase quantity rule, ‘10%’ and the word ‘off’ to subtract from the total.

Dev asked me:

Dev: “How can I use reflection to parse the string and determine what are integers, decimals, and percents?”

Me: “That sounds complicated. Why would you do that?”

Dev: “It’s only a string. Parsing it was easy. First we need to know how to extract numbers and be able to compare them.”

Me: “I’ve seen the data structures, wouldn’t it be easier to serialize the objects to JSON and store the string in the database? When you deserialize, you won’t have to parse or do any kind of reflection. You should try to keep the rule behavior as simple as possible. Developing your own tokenizer that relies on reflection and hoping the UI doesn’t change isn’t going to be reliable.”

Dev: “Tokens!...yea…tokens…that’s what we want. I’ll come up with a tokenizing algorithm that can utilize recursion and reflection to extract all the comparable data structures.”

Me: “Wow…uh…no, don’t do that. The UI already has to map the data, just make it easy on yourself and serialize that object. It’s like one line of code to serialize and deserialize.”

Dev: “I don’t know…sounds like magic. Using tokens seems like the more straightforward O-O approach. Thanks anyway.”

I'm probably getting too old to keep up with these kids, I have no idea what the frack he was talking about. Not sure if they are too smart or I’m too stupid/lazy. Either way, I'm keeping my name as far away from that project as possible.4 -
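A rough sketch of the "serialize the object instead of parsing the display string" suggestion, in Python. The `PromotionRule` class and its field names are assumptions for illustration; the rant doesn't show the team's real data structures or language.

```python
import json
from dataclasses import dataclass, asdict


@dataclass
class PromotionRule:
    # Hypothetical fields standing in for whatever the UI already maps.
    min_quantity: int
    discount_percent: float

    def apply(self, quantity, total):
        """Return the order total after applying the rule, if it qualifies."""
        if quantity >= self.min_quantity:
            return round(total * (1 - self.discount_percent / 100), 2)
        return total


rule = PromotionRule(min_quantity=5, discount_percent=10.0)

stored = json.dumps(asdict(rule))              # what goes into the database
loaded = PromotionRule(**json.loads(stored))   # what comes back out

print(stored)
print(loaded.apply(quantity=5, total=100.0))
```

The numbers stay numbers the whole way through, so nothing has to guess whether "10%" is an integer, a decimal, or a percent.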

I’m on this ticket, right? It’s adding some functionality to some payment file parser. The code is atrocious, but it’s getting replaced with a microservice definitely-not-soon-enough, so i don’t need to rewrite it or anything, but looking at this monstrosity of mental diarrhea … fucking UGH. The code stink is noxious.

The damn thing reads each line of a csv file, keeping track of some metadata (blah blah) and the line number (which somehow has TWO off-by-one errors, so it starts on fucking 2 — and yes, the goddamn column headers on line #0 are recorded as line #2), does the same setup shit on every goddamned iteration, then calls a *second* parser on that line. That second parser in turn stores its line state, the line number, the batch number (…which is actually a huge object…), and a whole host of other large objects on itself, and uses exception throwing to communicate, catches and re-raises those exceptions as needed (instead of using, you know, if blocks to skip like 5 lines), and then writes the results of parsing that one single line to the database, and returns. The original calling parser then reads the data BACK OUT OF THE DATABASE, branches on that, and does more shit before reading the next line out of the file and calling that line-parser again.

JESUS CHRIST WHAT THE FUCK

And that’s not including the lesser crimes like duplicated code, misleading var names, and shit like defining class instance constants but … first checking to see if they’re defined yet? They obviously aren’t because they aren’t anywhere else in the fucking file!

Whoever wrote this pile of fetid muck must have been retroactively aborted for their previous crimes against intelligence, somehow survived the attempt, and is now worse off and re-offending.

Just.

Asdkfljasdklfhgasdfdah26 -
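For contrast, a minimal sketch of line-numbered CSV reading with no off-by-one bookkeeping, in Python. This is not the parser from the rant (its language and file layout aren't shown); the function name and sample data are made up.

```python
import csv
import io


def numbered_rows(text):
    """Yield (line_number, row_dict) pairs, counting the header as line 1."""
    reader = csv.reader(io.StringIO(text))
    header = next(reader)  # line 1: column headers, read exactly once
    for line_no, row in enumerate(reader, start=2):
        # Plain data in, plain data out: no state stored on the parser,
        # no exceptions used for control flow, no database round trip.
        yield line_no, dict(zip(header, row))


sample = "id,amount\n1,9.99\n2,5.00\n"
rows = list(numbered_rows(sample))
print(rows)
```

One caveat under this sketch's assumptions: a quoted field containing a newline spans several physical lines, so the counter here is a record number offset by the header rather than a true file line number.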

I am much too tired to go into details, probably because I left the office at 11:15pm, but I finally finished a feature. It doesn't even sound like a particularly large or complicated feature. It sounds like a simple, 1-2 day feature until you look at it closely.

It took me an entire fucking week. and all the while I was coaching a junior dev who had just picked up Rails and was building something very similar.

It's the model, controller, and UI for creating a parent object along with 0-n child objects, with default children suggestions, a fancy ui including the ability to dynamically add/remove children via buttons. and have the entire happy family save nicely and atomically on the backend. Plus a detailed-but-simple listing for non-technicals including some absolutely nontrivial css acrobatics.

After getting about 90% of everything built and working and beautiful, I learned that Rails does quite a bit of this for you, through `accepts_nested_attributes_for :collection`. But that requires very specific form input namespacing, and building that out correctly is flipping difficult. It's not like I could find good examples anywhere, either. I looked for hours. I finally found a rails tutorial video linked from a comment on a SO answer from five years ago, and mashed its oversimplified and dated examples with the newer documentation, and worked around the issues that of course arose from that disastrous pairing.

like.

I needed to store a template of the child object markup somewhere, yeah? The video had me trying to store all of the markup in a `data-fields=" "` attrib. wth? I tried storing it as a string and injecting it into javascript, but that didn't work either. parsing errors! yay! good job, you two.

So I ended up storing the markup (rendered from a rails partial) in an html comment of all things, and pulling the markup out of the comment and gsubbing its IDs on document load. This has the annoying effect of preventing me from using html comments in that partial (not that i really use them anyway, but.)

Just.

Every step of the way on building this was another mountain climb.

* singular vs plural naming and routing, and named routes. and dealing with issues arising from existing incorrect pluralization.

* reverse polymorphic relation (child -> x parent)

* The testing suite is incompatible with the new rails6. There is no fix. None. I checked. Nope. Not happening.

* Rails6 randomly and constantly crashes and/or caches random things (including arbitrary code changes) in development mode (and only development mode) when working with multiple databases.

* nested form builders

* styling a fucking checkbox

* Making that checkbox (rather, its label and container div) into a sexy animated slider

* passing data and locals to and between partials

* misleading documentation

* building the partials to be self-contained and reusable

* coercing form builders into namespacing nested html inputs the way Rails expects

* input namespacing redux, now with nested form builders too!

* Figuring out how to generate markup for an empty child when I'm no longer rendering the children myself

* Figuring out where the fuck to put the blank child template markup so it's accessible, has the right namespacing, and is not submitted with everything else

* Figuring out how the fuck to read an html comment with JS

* nested strong params

* nested strong params

* nested fucking strong params

* caching parsed children's data on parent when the whole thing is bloody atomic.

* Converting datetimes from/to milliseconds on save/load

* CSS and bootstrap collisions

* CSS and bootstrap stupidity

* Reinventing the entire multi-child / nested params / atomic creating/updating/deleting feature on my own before discovering Rails can do that for you.

Just.

I am so glad it's working.

I don't even feel relieved. I just feel exhausted.

But it's done.

finally.

and it's done well. It's all self-contained and reusable, it's easy to read, has separate styling and reusable partials, etc. It's a two line copy/paste drop-in for any other model that needs it. Two lines and it just works, and even tells you if you screwed up.

I'm incredibly proud of everything that went into this.

But mostly I'm just incredibly tired.

Time for some well-deserved sleep.7 -

Definitely Rust, and a bit Haskell.

Rust has made me much more conscious of data ownership through a program, to the point that any C/C++ function I wrote that takes a pointer nowadays gets a comment on ownership.

I wish it was a bit less pedantic about generics sometimes, which is why I've started working on a "less pedantic rust", where generics are done through multiple dispatch à la Julia, but still monomorphising everything I can. I've only started this week, but I already have a tokenizer and most of the type inference system (an SLD tree) ready. Next up is the borrow checker and parsing the tokenized input to whatever the type inference and borrow checker need to work with, and of course actual code generation...

Haskell is my first FP language, and introduced me to some FP patterns which, turns out, are super useful even with less FP languages. -

[Begin Rant] When you show your senior manager your REST Web Service and he says "Oh no nooo... I don't wanna see no code"... Me: Code?? That ain't code you fat silly fucker it's the command line output data which I spent a week parsing, batch processing, and storing into the database! [End Rant] :[4

-

When you're parsing SO's questions from your cellphone, you find one that's easy, you answer it, then when it's done, you read the question again to realize you totally missed the point.

When you realize that the StackExchange app doesn't allow you to delete your own comment, so you have to rush to the nearest computer to delete your answer before anyone downvotes it.

When you sit back again to resume your breakfast, the adrenaline rush slowly fading away.2 -

Should I actually look into getting a dev job..?

*I have a high school diploma (graduated three years early)

*College dropout (3-4 months, Computer Science - Personal Reasons)

*No prior work experience.

*Good textual communication skills, poor verbal communication skills.

*Currently unemployed. (NEET :P)

*I have extensive personal experience with Java, and Python. Some Lua. Knowledge of data generation, parsing, Linux, Windows, Terminal(cmd & bash), & Encryption(Ciphers).

*Math, but very little algebra/geometry (though, could easily improve these).

*Work best under pressure.

Remote only.

Think anyone would hire me..?13 -

Many of you who have a Windows computer may be familiar with robocopy, xcopy, or move.

These functions? Programs? Whatever they may be, were interesting to me because they were the first things that got me really into batch scripting in the first place.

What was really interesting to me was how I could run multiples of these scripts at a time.

<storytime>

It was a warm spring day in the year of 2007, and my Science teacher at the time needed a way to get files from the school computer to the hard-drive faster. The amount of time that the computer was suggesting was 2 hours. Far too long for her. I told her I’d build her something that could work faster than that. And so started the program that would take up more of my time than the AI I had created back in 2009.

</storytime>

This program would scan the entirety of the computer's file system, and create an xcopy batch file for each of these directories. After parsing these files, it would then run all the batch files at once. Multithreading, as it were? Looking back on it, the throughput probably wasn't any better than the default copying program Windows already had, but the amount of time that it took was less. Instead of 2 hours to finish the task it took 45 minutes. My justification for this program was: instead of giving all the paperwork to one man, split it among many men. So, while a large file is being copied, many smaller files could be copied during that time.

After that day I really couldn't keep my hands off this program. As my knowledge of programming increased, so did my likelihood of editing a piece of the code in this program.

The sheer number of updates that this program has gone through is amazing. At version 6.25 it now sits as a standalone batch file. It used to consist of 6 files and however many xcopy batch files that it created for the file migration, now it's just 1 file and dirt simple to run (well, the front-end anyways; the back-end is a masterpiece of weirdness, honestly), and it automates adding all the necessary directories and files. Oh, and the name is Latin for Imitate, figured it's a reasonable name for a copying program.

I was 14, so my creativity lacked in the naming department >_<

1 -

Imagine saving Integers and Floats in a MySQL table as strings containing locale-based thousand separators...

man... that is seriously fucked up!

Wait, there's more!

Imagine storing a field containing list of object data as a CSV in a single table column instead of using JSON format or a separate DB table.... and later parsing it by splitting the CSV string on ";"...6 -
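A small sketch of why both habits bite, in Python. The sample record, the German-style number format, and the helper name are assumptions for illustration; the rant doesn't show the real table.

```python
import json

# Made-up record: a name that happens to contain ";" and a number stored
# as a string with German-style separators ("." thousands, "," decimal).
items = [{"name": "Widget; large", "price": "1.234,50"}]

# The approach from the rant: join with ";" on write, split on ";" on read.
flat = ";".join(items[0].values())
broken = flat.split(";")  # the ";" inside the name creates an extra field


def parse_german_number(text):
    """Undo the locale formatting; only valid for this one assumed locale."""
    return float(text.replace(".", "").replace(",", "."))


# Storing real numbers as JSON keeps both the structure and the types.
clean = [{"name": i["name"], "price": parse_german_number(i["price"])} for i in items]
restored = json.loads(json.dumps(clean))

print(broken)
print(restored)
```

The split gives back three fields for a two-field record, while the JSON round trip returns the name intact and the price as an actual number.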

I spent over a decade of my life working with Ada. I've spent almost the same amount of time working with C# and VisualBasic. And I've spent almost six years now with F#. I consider all of these great languages for various reasons, each with their respective problems. As these are mostly mature languages some of the problems were only knowable in hindsight. But Ada was always sort of my baby. I don't really mind extra typing, as at least what I do, reading happens much more than writing, and tab completion has most things only being 3-4 key presses irl. But I'm no zealot, and have been fully aware of deficiencies in the language, just like any language would have. I've had similar feelings of all languages I've worked with, and the .NET/C#/VB/F# guys are excellent with taking suggestions and feedback.

This is not the case with Ada, and this will be my story, since I've decided anonymity is no longer necessary.

First few years learning the language I did what anyone does: you write shit that already exists just to learn. Kept refining it over time, sometimes needing to do entire rewrites. Eventually a few of these wound up being good. Not novel, just good stuff that already existed. Outperforming the leading Ada company in benchmarks kind of good. At the time I was really gung-ho about the language. Would have loved to make Ada development a career. Eventually build up enough of this, as well as a working, but very bad performing compiler, and decide to try to apply for a job at this company. I wasn't worried about the quality of the compiler, as anyone who's seriously worked with Ada knows, the language is remarkably complex with some bizarre rules in dark corners, so a compiler which passes the standards test indicates a very intimate knowledge of the language few can attest to.

I get told they didn't think I would be a good fit for the job, and that they didn't think I should be doing development.

A few months of rapid cycling between hatred and self loathing passes, and then a suicide attempt. I've got past problems which contributed more so than the actual job denial.

So I get better and start working even harder on my shit. Get the performance of my stuff up even better. Don't bother even trying to fix up the compiler, and start researching about text parsing. Do tons of small programs to test things, and wind up learning a lot. I'm starting to notice a lot of languages really surpassing Ada in _quality of life_, with things like package managers and repositories for those, as well as social media presence and exhaustive tutorials from the community.

At the time I didn't really get programming language specific package managers (I do now), but I still brought this up to the community. Don't do that. They don't like new ideas. Odd for a language which at the time was so innovative. But social media presence did eventually happen with a Twitter account that is most definitely run by a specific Ada company masquerading as a general Ada advocate. It did occasionally draw interest to neat things from the community, so that's cool.

Since I've been using both VisualStudio and an IDE this Ada company provides, I saw a very jarring quality difference over the years. I'm not gonna say VS is perfect, it's not. But this piece of shit made VS look like a polished streamlined bug free race car designed by expert UX people. It. Was. Bad. Very few features, with little added over the years. Fast forwarding several years, I can find about ten bugs in five minutes each update, and I can't find bugs in the video games I play, so I'm no bug finder. It's just that bad. This from a company providing software for "highly reliable systems"...

So I decide to take a crack at writing an editor extension for VS Code, which I had never even used. It actually went well, and as of this writing it has over 24k downloads, and I've received some great comments from some people over on Twitter about how detailed the highlighting is. Plenty of bespoke advertising the entire time in development, of course.

Never a single word from the community about me.

Around this time I had also started a YouTube channel to provide educational content about the language, since there's very little, except large textbooks which aren't right for everyone. Now keep in mind I had written a compiler which at least was passing the language standards test, so I definitely know the language very well. This is a standard the programmers at these companies will admit very few people understand. YouTube channel met with hate from the community, and overwhelming thanks from newcomers. Never a shout out from the "community" Twitter account. The hate went as far as things like how nothing I say should be listened to because I'm a degenerate Irishman, to things like how the world would have been a better place if I was successful in killing myself (I don't talk much about my mental illness, but it shows up).

I'm strictly a .NET developer now. All code ported.5 -

My Unreal project right now:

FVector

std::vector

Eigen::Vector

KDL::Vector

....

*sigh*

And people ask me why I like Lisp. A Vector is a Vector goddamit! Half my project is now just parsing the same variable to the different functions. sigh....I hate this.6 -

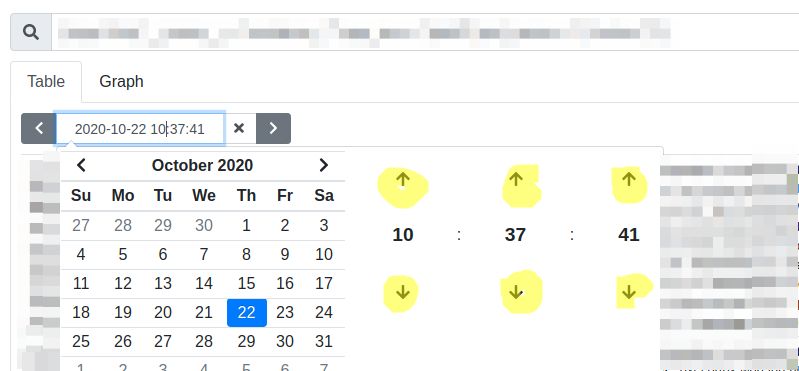

Let's talk about input forms.

Please don't do that!

Setting time with the arrow buttons in the UI.

Each time change causes the page to re-fetch all the records. This is fetching, parsing, rendering tens of thousands of entries on every single click. And I want to set a very specific time, so there's gonna be a lot of gigs of traffic wasted for /dev/null.

Do I hear you say "just type the date manually you dumbass!"? I would indeed be a dumbass if I didn't try that. You know what? Typing the date in manually does nothing. Apparently, the handler is not triggered if I type it in manually/remove the focus/hit enter/try to jump on 1 leg/draw a blue triangle in my notebook/pray

3 -

Today's project was answering the question: "Can I update tables in a Microsoft Word document programmatically?"

(spoiler: YES)

My coworker got the ball rolling by showing that the docx file is just a zip archive with a bunch of XML in it.

The thing I needed to update were a pair of tables. Not knowing anything about Word's XML schema, I investigated things like:

- what tag is the table declared with?

- is the table paginated within the table?

- where is the cell background color specified?

Fortunately this wasn't too cumbersome.

For the data, CSV was the obvious choice. And I quickly confirmed that I could use OpenCSV easily within gradle.

The Word XML segments were far too verbose to put into constants, so I made a series of templates with tokens to use for replacement.

In creating the templates, I had to analyze the word xml to see what changed between cells (thankfully, very little). This then informed the design of the CSV parsing loops to make sure the dynamic stuff got injected properly.

I got my proof of concept working in less than a day. Have some more polishing to do, but I'm pretty happy with the initial results!6 -
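The "docx is just a zip archive with a bunch of XML in it" observation is easy to poke at. Below is a minimal sketch in Python rather than the Java/gradle setup from the post. It assumes the usual layout where the main body lives in `word/document.xml` and tables are `w:tbl` elements; the helper names and the stand-in archive are made up, and the stand-in is not a valid Word file.

```python
import io
import zipfile
import xml.etree.ElementTree as ET

# WordprocessingML main namespace (the "w:" prefix in the XML).
W_NS = "http://schemas.openxmlformats.org/wordprocessingml/2006/main"


def count_tables(docx_bytes):
    """Open the archive, parse the main document part, count w:tbl elements."""
    with zipfile.ZipFile(io.BytesIO(docx_bytes)) as archive:
        root = ET.fromstring(archive.read("word/document.xml"))
    return len(root.findall(f".//{{{W_NS}}}tbl"))


def make_stand_in_docx():
    """Build a tiny in-memory archive with two empty tables (not a real docx)."""
    body = (
        f'<w:document xmlns:w="{W_NS}">'
        "<w:body><w:tbl/><w:tbl/></w:body>"
        "</w:document>"
    )
    buffer = io.BytesIO()
    with zipfile.ZipFile(buffer, "w") as archive:
        archive.writestr("word/document.xml", body)
    return buffer.getvalue()


print(count_tables(make_stand_in_docx()))
```

Pointing `count_tables` at the bytes of a real document is the quickest way to answer the "what tag is the table declared with?" question before writing any templates.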

I made a functional parsing layer for an API that cleans http body json. The functions return insights about the received object and the result of the parse attempt. Then I wrote validation in the controller to determine if we will reject or accept. If we reject, parse and validation information is included on the error response so that the API consumer knows exactly why it was rejected. The code was super simple to read and maintain.

I demoed to the team and there was one holdout who couldn’t understand my decision to separate parse and validate. He decided to rewrite the two layers plus both the controller and service into one spaghetti layer. The team lead avoided conflict at all costs and told me, even though it was far worse code, to “give him this”. We still struggle with the spaghetti code he wrote to this day.

When sugar-coating someone’s engineering inadequacies is more important than good engineering I think about quitting. He was literally the only one on the team that didn’t get it.2 -
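A minimal sketch of what keeping parse and validate as separate steps might look like, in Python. It is not the code from the post; the names, the cleaning step, and the single "name is required" rule are all assumptions for illustration.

```python
import json
from dataclasses import dataclass, field
from typing import Optional


@dataclass
class ParseResult:
    value: Optional[dict]                        # cleaned body, or None on failure
    errors: list = field(default_factory=list)   # insights about the parse attempt


def parse_body(raw):
    """Parse layer: turn raw text into a cleaned object, never decide accept/reject."""
    try:
        data = json.loads(raw)
    except json.JSONDecodeError as exc:
        return ParseResult(None, [f"invalid JSON: {exc.msg}"])
    if not isinstance(data, dict):
        return ParseResult(None, ["body must be a JSON object"])
    cleaned = {k: v.strip() if isinstance(v, str) else v for k, v in data.items()}
    return ParseResult(cleaned)


def validate(parsed):
    """Validation layer: business rules only, applied to the parse result."""
    problems = list(parsed.errors)
    if parsed.value is not None and not parsed.value.get("name"):
        problems.append("'name' is required")
    return problems


def handle(raw):
    """Controller: accept or reject, and tell the consumer exactly why."""
    parsed = parse_body(raw)
    problems = validate(parsed)
    if problems:
        return 400, {"errors": problems}
    return 200, parsed.value


print(handle('{"name": " Ada "}'))
print(handle('{"name": ""}'))
```

Each function can be tested on its own, which is most of the argument for not folding them into one layer.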

"So Alecx, how did you solve the issues with the data provided to you by hr for <X> application?"

Said the VP of my institution in charge of my department.

"It was complex sir, I could not figure out much of the general ideas of the data schema since it came from a bunch of people not trained in I.T (HR) and as such I had to do some experiments in the data to find the relationships with the data, this brought about 4 different relations in the data, the program determined them for me based on the most common type of data, the model deemed it a "user", from that I just extracted the information that I needed, and generated the tables through Golang's gorm"

VP nodding and listening intently...."how did you make those relationships?" me "I started a simple pattern recognition module through supervised mach..." VP: Machine learning, that sounds like A.I

Me: "Yes sir, it was, but the problem was fairly easy for the schema to determ.." VP: A.I, at our institution, back in my day it was a dream to have such technology, you are the director of web tech, what is it to you to know of this?"

Me: "I just like to experiment with new stuff, it was the easiest route to determine these things, I just felt that I should use it if I can"

VP: "This is amazing, I'll go by your office later"

Dude speaks wonders of me. The idea was simple, read through the CSV that was provided to me, have the parsing done in a notebook, make it determine the relationships in the data and spout out a bunch of JSON that I could use. Hook it up to a simple gorm golang script and generate the tables for that. Much simpler than the bullshit that we have in php. I used this to create a new database since the previous application had issues. The app will still have a php frontend and backend, but now I don't leave the parsing of the data to php, which quite frankly, php sucks for imho. The Python codebase will then create the json files through the predictive modeling (98% accuracy) and then the go program will populate the db for me.

There are also some node scripts that help test the data since the data is json.

All in all a good day of work. The VP seems scared since he knows no one on this side of town knows about this kind of tech. Me? I am just happy I get to experiment. Y'all should have seen his face when I showed him a rather large app written in Clojure, the man just went 0.0 when he saw Lisp code.

I think I scare him.12 -

Writing a function to take a string of delimited entities, parse each character to find the separators, capture the characters in between separators, and return an array of entities.

I used this for about a year before I learned about String.split()

Yeah.1 -
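For the curious, here is roughly what that hand-rolled function does next to the built-in, sketched in Python (the post's `String.split()` suggests Java or JavaScript, so this is a swapped-in language, and the function name is made up).

```python
def split_by_hand(text, sep):
    """Walk every character, cut at each separator, collect the pieces."""
    entities, current = [], []
    for ch in text:
        if ch == sep:
            entities.append("".join(current))
            current = []
        else:
            current.append(ch)
    entities.append("".join(current))  # whatever follows the last separator
    return entities


sample = "a,b,,c"
print(split_by_hand(sample, ","))
print(sample.split(","))  # the one-liner that replaces all of the above
```

The hand-rolled version only handles a single-character separator; the built-in takes separators of any length.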

"I don't have any issues with that (OS, code push, software, etc), so I'm not sure why you are having issues." I love reading comments like that, as if that solves the issue. The fuck does it matter if you don't have issues, they are having issues you moron. For a place for developers you'd think they'd be able to think logically, maybe they're in the wrong line of business. Maybe that software had an error parsing some file because some bit got flipped when writing to the HDD because there's an issue with the drive and the ECC failed for some reason, who cares. There's an issue and you saying it works for you makes you sound like a fucking moron... There's an error/crash, it happens, that's software.4

-

Me, the only iOS dev at work one day, and colleague (who we'll call AndroidBoy), the only Android dev at work that same day (he's been working with us for less than two months). There was a change in one of the jsons we received from the server: instead of receiving a list, we now received a dictionary with strings as keys and lists as values. My iOS colleague had already made this modification on our parse function the day before.

AndroidBoy: "Hey what happened with the json?"

Me: "Oh, well instead of parsing a list, we'll parse a dictionary and get the list from each key. You basically have to do the same thing, only this time the lists are organized into categories."

AndroidBoy: "Oh, ok. But I don't know how to parse a dictionary while using Retrofit." (Context: Retrofit is a framework for request handling - correct me if I am mistaken, that's just what I've been told)

Me: "Sucks, dude, can't help ya. I've never worked with that and don't have that much exp. with Android."

I go out for a cigarette break. When I return, AndroidBoy is nowhere to be seen and suddenly I can't seem to get that data in my app. AndroidBoy comes in from the room where the backend colleagues work.

AndroidBoy: "Solved it!"

Me: "Solved what?"

AndroidBoy: "I told them to change back to a list and just put the key inside the objects of the list."

... he used the precious time of the backend colleagues to change the thing back just because he was too lazy to search how to parse a dictionary. I was so amazed by his answer, that I didn't know whether to laugh, scream at him or punch him in the face. Not to mention the fact that now I had to revert just so he could avoid that extra work.5 -
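The change described above (a dictionary of lists instead of one list) is a small one on the parsing side. Here is a sketch in Python with a made-up payload; it says nothing about how Retrofit itself should be configured, which the post doesn't cover.

```python
import json

# Hypothetical payload: category names as keys, lists of objects as values.
payload = '{"fruit": [{"id": 1}, {"id": 2}], "veg": [{"id": 3}]}'

by_category = json.loads(payload)

# If the rest of the app still wants one flat list, rebuild it client-side
# and keep the category on each item.
flat = [
    dict(item, category=category)
    for category, items in by_category.items()
    for item in items
]

print(flat)
```

That last comprehension is the same "put the key inside the objects" reshaping, done on the client instead of asking the backend to undo its change.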

I have to write an xml configuration parser for an in-house data acquisition system that I've been tasked with developing.

I hate doing string parsing in C++... Blegh!

16 -

Kinda all other devs equate incompetence with a lack of knowledge

i would go with not being able to recognize one's own lack of knowledge

Story 1:

once we had a developer, who was given the task to try out a REST/Json API using Java

after a week he presented his solution,

2 Classes with actual code and a micro-framework for parsing and generating JSON

so i asked him why he didn't use a framework like jackson or gson; during the presentation he felt pretty offended by this question

a couple of weeks later i met him and he was full of thanks for me, because i showed him that there are frameworks like that, and he even said sorry for feeling offended

- no incompetence here -

Story 2:

once i had a lead dev, who was so self-confident, he refactored (for no reason but refactoring itself) half the app and committed without trying to compile/run tests

but not only once, but on a regular basis

as you may imagine, he broke the application multiple times and blamed the other devs

- incompetence warning -

Story 3:

once i had a dev, who wanted to stay up with the latest versions of his libraries

npm update && commit without trying to compile/testing, multiple times

- incompetence warning -

Story 4:

once i had a cto

* thought email-marketing is cutting edge

* removed test-systems completely to reduce costs

* liked wordpress

* sets vm to sleep without letting anyone know

- i guess incompetent alert -2 -

I spent four days doing a rewrite for a possible performance boost that yielded nothing.

I spent an hour this morning implementing something that boosted parsing of massive files by 22% and eliminated memory allocations during parsing.

Work effort does not translate into gains.17 -

I'm not much a fan of JavaScript. In fact, I am not very fond of any dynamic language, but JavaScript is one of my least favorites.

But this isn't about that. I use NodeJS for all of my web serving. Why would I do that? Am I a masochist? Yes.

But this isn't about that. I use NodeJS because having the same language on client and server side is something that web has never really seen before, not in this scale. Something I really really love with NodeJS is socket connections. There's no JSON parsing, no annoying conversion of data types. You can get network data and use it AS IS. If you transmit over socket using JSON, as soon as that data arrives on the server, it is available to use. It gets me so hard.

JavaScript is built to be single-threaded, and this is rooted deep into the language. NodeJS knows this isn't gonna work. And while there's still no way to multi-thread, they still try their best and allow certain operations (Usually IO) to run async as if you were using ajax.

With modern versions of the language, the server and client side can share scripts! With the inclusion of the import keyword, for the first time I have ever seen, client and server can use the same fucking code. That is mindblowing.

Syntax is still fluffy and data types are still mushy but the ability to use the same language on both sides is respectable. Can't wait for WebASM to go mainstream and open this opportunity up to more languages!10 -

A cache - related bug that gets triggered only at high loads, 10k parallel sessions or so.

Parsing 30GB of logs, trying to find something to work with....

yippee......5 -

Am i the only one who doesnt see how graphql is useful? Yeah you can cut back on data transfer and let the server do the parsing but like, you can do the same if you spend 3 minutes properly designing the backend?6

-

need advice on integrating .t3d file support in c++ engine

hey, been stuck trying to figure out how to get .t3d file support working in our custom game engine, which is all written in c++. it's turning out to be a lot trickier than expected and i'm kinda hitting a wall here. the documentation on .t3d itself is pretty much nonexistent and what's out there is either outdated or too vague to be of any real use. i've tried a few things based on general file parsing logic in c++ but keep running into issues with either reading the files correctly or integrating the data into the engine in a way that actually works. it feels like i'm missing something fundamental but can't pin down what it is. has anyone here gone through this process before or has any experience with .t3d files? i could really use some advice on how to approach this, or even just some resources that explain the format in detail. also, if there are any common pitfalls or things to watch out for when adding new file format support to a game engine, i'd appreciate the heads up. thanks in advance for any help, feeling pretty stuck and any guidance would be a huge help.11 -

I have come across the most frustrating error i have ever dealt with.

Im trying to parse an XML doc and I keep getting UnauthorizedAccessException when trying to load the doc. I have full permissions to the directory and file, its not read only, i cant see anything immediately wrong as to why i wouldnt be able to access the file.

I searched around for hours yesterday trying a bunch of different solutions that helped other people, none of them working for me.

I posted my issue on StackOverflow yesterday with some details, hoping for some help or a "youre an idiot, Its because of this" type of comment but NO.

No answers.

This is the first time Ive really needed help with something, and the first time i havent gotten any response to a post.

Do i keep trying to fix this before the deadline on Sunday? Do i say fuck it and rewrite the xml in C# to meet my needs? Is there another option that i dont even know about yet?

I need a dev duck of some sort :/

-

This is meant as a follow-up on my story about how I'm no longer an Ada developer and everything leading up to that. The tldr is that despite over a decade of FOSS work, code that could regularly outperform a leading Ada vendor, and much needed educational media, I was rejected from a job at that vendor, as well as at a testing company centered around Ada, and was regularly met with hostility from the community.

The past few months I have been working on a "pattern combinator" engine for text parsing that works in C#, VB, and F#. I won't explain it here, but the performance is wonderful and there are substantial advantages.

From there, I've started a small project to write a domain specific language for easily defining grammars and parsing it using this engine.
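For readers who have not met the idea, here is a minimal sketch of combinator-style parsing in general. It is not the engine described above (that one targets .NET and is not shown in the post); every name here (`literal`, `sequence`, `either`, `many`) is made up for illustration.

```python
from typing import Callable, Optional, Tuple

# A parser takes (text, position) and returns (value, new_position), or None on failure.
Result = Optional[Tuple[object, int]]
Parser = Callable[[str, int], Result]


def literal(expected: str) -> Parser:
    """Match an exact string."""
    def parse(text: str, pos: int) -> Result:
        if text.startswith(expected, pos):
            return expected, pos + len(expected)
        return None
    return parse


def sequence(*parsers: Parser) -> Parser:
    """Match every parser in order; fail if any one of them fails."""
    def parse(text: str, pos: int) -> Result:
        values = []
        for p in parsers:
            result = p(text, pos)
            if result is None:
                return None
            value, pos = result
            values.append(value)
        return values, pos
    return parse


def either(*parsers: Parser) -> Parser:
    """Return the result of the first alternative that succeeds."""
    def parse(text: str, pos: int) -> Result:
        for p in parsers:
            result = p(text, pos)
            if result is not None:
                return result
        return None
    return parse


def many(parser: Parser) -> Parser:
    """Match zero or more repetitions."""
    def parse(text: str, pos: int) -> Result:
        values = []
        while True:
            result = parser(text, pos)
            if result is None or result[1] == pos:  # stop on failure or no progress
                return values, pos
            value, pos = result
            values.append(value)
    return parse


# A toy grammar: one or more "ab" or "cd" pairs, e.g. "abcdab".
pair = either(literal("ab"), literal("cd"))
pairs = sequence(pair, many(pair))
print(pairs("abcdab", 0))
```

The point of the style is that small parsers are ordinary values, so a grammar is built by composing them rather than by writing one large hand-rolled loop.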

Microsoft's Visual Studio team has reached out and offered help and advice for implementing the extensions and other integrations I want.

That Ada vendor regularly copied things I had worked on, "introducing" seven things after I had originally been working on them.

In my almost equally long experience with .NET, I've rarely encountered hostility, and the closest thing to a problem I've had has been a few, resolved, misunderstandings.

Microsoft is a pretty damn good company. And it's great to actually be welcomed/included.

-

So I just spent the last few hours trying to get an intro of given Wikipedia articles into my Telegram bot. It turns out that Wikipedia does have an API! But unfortunately it's born as a retard.

First I looked at https://www.mediawiki.org/wiki/API and almost thought that that was a Wikipedia article about APIs. I almost skipped right over it in the search results (and it turns out that I should've). Upon opening and reading that, I found a shitload of endpoints that frankly I didn't give a shit about. Come on Wikipedia, just give me the fucking data to read out.

Ctrl-F in that page and I find a tiny little link to https://mediawiki.org/wiki/... which is basically what I needed. There's an example that.. gets the data in XML form. Because JSON is clearly too much to ask for. Are you fucking braindead Wikipedia? If my application was able to parse XML/HTML/whatevers, that would be called a browser. With all due respect but I'm not gonna embed a fucking web browser in a bot. I'll leave that to the Electron "devs" that prefer raping my RAM instead.

OK so after that I found on third-party documentation (always a good sign when that's more useful, isn't it) that it does support JSON. Retardpedia just doesn't use it by default. In fact in the example query that was a parameter that wasn't even in there. Not including something crucial like that surely is a good way to let people know the feature is there. Massive kudos to you Wikipedia.. but not really. But a parameter that was in there - for fucking CORS - that was in there by default and broke the whole goddamn thing unless I REMOVED it. Yeah because CORS is so useful in a goddamn fucking API.

So I finally get to a functioning JSON response, now all that's left is parsing it. Again, I only care about the content on the page. So I curl the endpoint and trim off the bits I don't need with jq... I was left with this monstrosity.

curl "https://en.wikipedia.org/w/api.php/...=*" | jq -r '.query.pages[0].revisions[0].slots.main.content'

Just how far can you nest your JSON Wikipedia? Are you trying to find the limits of jq or something here?!
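For comparison, the same lookup as the jq filter can be sketched in Python with a small helper that walks a path of keys and indexes and returns a default instead of crashing when a level is missing. The helper name `dig` and the trimmed sample payload are made up for illustration; only the nesting mirrors the filter above.

```python
from typing import Any, Sequence, Union

Key = Union[str, int]


def dig(data: Any, path: Sequence[Key], default: Any = None) -> Any:
    """Follow a path of dict keys and list indexes, returning `default` on a miss."""
    current = data
    for key in path:
        try:
            current = current[key]
        except (KeyError, IndexError, TypeError):
            return default
    return current


# Hypothetical, heavily trimmed response with the same nesting as the jq filter.
sample = {
    "query": {
        "pages": [
            {"revisions": [{"slots": {"main": {"content": "'''Example''' text"}}}]}
        ]
    }
}

CONTENT_PATH = ("query", "pages", 0, "revisions", 0, "slots", "main", "content")
print(dig(sample, CONTENT_PATH))
```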

And THEN.. as an icing on the cake, the result doesn't quite look like JSON, nor does it really look like XML, but it has elements of both. I had no idea what to make of this, especially before I had a chance to look at the exact structured output of that command above (if you just pipe into jq without arguments it's much less readable).

Then a friend of mine mentioned Wikitext. Turns out that Wikipedia's API is not only retarded, even the goddamn output is. What the fuck is Wikitext even? It's the Apple of wikis apparently. Only Wikipedia uses it.
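If only the article introduction is needed, the API's `prop=extracts` module (from the TextExtracts extension, which Wikipedia has enabled as far as I know) can return it as plain text instead of Wikitext. A minimal sketch under that assumption follows; it only builds the URL and reads a response that has already been decoded, the function names are made up, and the sample payload in the usage lines is hypothetical.

```python
from typing import Optional
from urllib.parse import urlencode

API_URL = "https://en.wikipedia.org/w/api.php"


def intro_query_url(title: str) -> str:
    """Build a query URL asking for the plain-text intro of one article."""
    params = {
        "action": "query",
        "prop": "extracts",   # rendered text instead of raw Wikitext
        "exintro": 1,         # only the section before the first heading
        "explaintext": 1,     # plain text rather than HTML
        "titles": title,
        "format": "json",
        "formatversion": 2,   # "pages" comes back as a list
    }
    return f"{API_URL}?{urlencode(params)}"


def intro_from_response(payload: dict) -> Optional[str]:
    """Pull the extract of the first page out of a decoded JSON response."""
    pages = payload.get("query", {}).get("pages", [])
    if not pages:
        return None
    return pages[0].get("extract")


# Usage with a made-up, trimmed response body.
print(intro_query_url("Alan Turing"))
print(intro_from_response({"query": {"pages": [{"title": "X", "extract": "Hello"}]}}))
```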

And apparently I'm not the only one who found Wikipedia's API.. irritating to say the least. See e.g. https://utcc.utoronto.ca/~cks/...

Needless to say, my bot will not be getting Wikipedia integration at this point. I've seen enough. How about you make your API not retarded first Wikipedia? And hopefully this rant saves someone else the time required to wade through this clusterfuck.

-

Fuck my life! I have been given a task to extract text (with proper formatting) from Docx files.

They look good on the outside but it is absolute hell parsing these files. Add human error to this shitty XML and you get a dev's worst nightmare.

I wrote a simple function to extract text written in 'heading(0-9)' paragraph style and got all sorts of shit.
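A minimal sketch of that kind of function, using only the standard library: a .docx is a zip archive, the body lives in `word/document.xml`, and a paragraph's style sits in `w:pPr/w:pStyle`. It assumes the heading style ids start with "Heading" (as in an English Word install; localized or custom templates may differ), and the function name is made up.

```python
import io
import zipfile
import xml.etree.ElementTree as ET

W = "http://schemas.openxmlformats.org/wordprocessingml/2006/main"
NS = {"w": W}


def heading_paragraphs(docx_bytes: bytes) -> list:
    """Return (style_id, text) for every paragraph whose style id starts with 'Heading'."""
    with zipfile.ZipFile(io.BytesIO(docx_bytes)) as archive:
        root = ET.fromstring(archive.read("word/document.xml"))

    found = []
    # iter() also reaches paragraphs nested inside table cells.
    for para in root.iter(f"{{{W}}}p"):
        style = para.find("w:pPr/w:pStyle", NS)
        style_id = style.get(f"{{{W}}}val", "") if style is not None else ""
        if not style_id.startswith("Heading"):
            continue
        # A paragraph's text is split across runs; join every w:t inside it.
        text = "".join(t.text or "" for t in para.iter(f"{{{W}}}t"))
        found.append((style_id, text))
    return found
```

Text that only looks like a heading (bold, large font, or parked in a table cell with default style) will not be found this way, which is exactly the human-error problem described above.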

One guy used a table with borders colored white to write text so that he didn't have to use tabs. It is absolute bullshit.

-

I have quite a few of these so I'm doing a series.

(2 of 3) Flexi Lexi

A backend developer was tired of building data for the templates. So he created a macro/filter for our in-house template lexer. This filter let the web designers (we didn't really call them front end devs yet back then) just add an SQL statement in the templates.

The macro had no safe argument parsing, and the designers knew basic SQL but did not know about SQL injection, so they used string concatenation to insert all kinds of user and request data into the queries.
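The difference between concatenating input into a query and binding it as a parameter can be sketched as follows. This uses Python's `sqlite3` purely for illustration; the in-house macro is not shown in the story, so the table, the function names and the payload are all made up.

```python
import sqlite3

conn = sqlite3.connect(":memory:")
conn.execute("CREATE TABLE users (id INTEGER PRIMARY KEY, name TEXT)")
conn.executemany("INSERT INTO users (name) VALUES (?)", [("alice",), ("bob",)])


def find_unsafe(name: str) -> list:
    # Vulnerable: the input becomes part of the SQL text itself.
    return conn.execute(f"SELECT name FROM users WHERE name = '{name}'").fetchall()


def find_safe(name: str) -> list:
    # The ? placeholder sends the input as data, never as SQL.
    return conn.execute("SELECT name FROM users WHERE name = ?", (name,)).fetchall()


payload = "' OR '1'='1"
print(len(find_unsafe(payload)))  # the injected condition matches every row
print(len(find_safe(payload)))    # no user has that literal name
```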

Two months after this novel feature was introduced we had SQL injections all over the place whenever some piece of input was missing, but worse, the whole product was riddled with SQLi vulnerabilities.

-

That feeling when you’re scraping a website to build an API and your script downloads 4049/6170 pages before failing and you have to rewrite it so that puppeteer hits the next button 4049 times before executing the script. 😅
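One way to make a long scrape resumable is to record the last page that finished after every page, so a crash costs one page rather than the whole run. A minimal sketch, assuming a hypothetical `fetch_page(n)` callable that downloads and stores page n; if the site only offers a next button, the clicking to get back to page n still has to happen inside that callable.

```python
import json
from pathlib import Path
from typing import Callable


def scrape_with_checkpoint(
    total_pages: int,
    fetch_page: Callable[[int], object],
    checkpoint: Path,
) -> int:
    """Fetch pages 1..total_pages, resuming after the last page recorded in `checkpoint`.

    Returns the number of pages fetched during this run.
    """
    done = 0
    if checkpoint.exists():
        done = json.loads(checkpoint.read_text())["last_page"]

    fetched = 0
    for page in range(done + 1, total_pages + 1):
        fetch_page(page)  # may raise; the checkpoint still points at the last good page
        checkpoint.write_text(json.dumps({"last_page": page}))
        fetched += 1
    return fetched
```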

This database is so frustrating.

I hate this website (the one I’m scraping).

It’s going to be so satisfying when this is finished.

-

>About to create Helperclass for JSON parsing and writing

>Realises there's the GSON-library by Google

>shamefully and silently like a fart deletes 1 hour of work

>repeatedly bangs head against desktop

-

Fuck Workday! Fuck any company that uses Workday! Fucking stop parsing resumes if you’re bad at it! Fucking stop asking me to fill in the same information that's in the resume I just uploaded!

-

I had to implement an internal tool in C++ which parses a file and converts the content into another format.

It took hundreds of lines of code to get it working; file handling and parsing data in C/C++ is terrible.

I'd rather have done it in some scripting language, and I additionally implemented it in Python as a side project (in less than an hour and < 20 lines of code, BTW), but it had to be C++ "Because that is how we do it here".

In the end the tool was only used for a few weeks, because someone had an idea for how to completely avoid the need for that converted data.

-

Sorry, need to vent.

In my current project I'm using two main libraries [slack client and k8s client], both official. And they both suck!

Okay, okay, their code doesn't really suck [apart from k8s severely violating Liskov's principle!]. The sucky part is not really their fault. It's the commonly used 3rd-party library that's fucked up.

Okhttp3

yeah yeah, here come all the booos. Let them all out.

1. In websockets it hard-caps frame size to 16mb w/o an ability to change it. So.. Forget about unchunked file transfers there... What's even worse - they close the websocket if the frame size exceeds that limit. Yep, instead of failing to send it kills the conn.

2. In websockets they are writing data completely async. Without any control handles.. No clue when the write starts, completes or fails. No callbacks, no promises, no other feedback of any kind

3. In http requests they are splitting my request into multiple buffers. This fucks up the slack client, as I cannot post messages over 4050 chars in size. Thanks to the okhttp these long texts get split into multiple messages. Which effectively fucks up formatting [bold, italic, codeblocks, links,...], as the formatted blocks get torn apart. [didn't investigate this deeper: it's friday evening and it's kotlin, not java, so I saved myself from the trouble of parsing yet unknown syntax]
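A possible workaround for the third point is to split long messages yourself before sending, at line boundaries, so that the place where a message gets cut is at least under your control. A rough sketch in Python follows; the 4000-character default is an arbitrary value below the 4050 mentioned above, and the function name is made up. It keeps single lines intact but does not know about multi-line code blocks, which would still need extra handling.

```python
def split_message(text: str, limit: int = 4000) -> list:
    """Split `text` into chunks of at most `limit` characters, breaking at line ends."""
    chunks, current = [], ""
    for line in text.splitlines(keepends=True):
        # A single line longer than the limit has to be cut hard.
        while len(line) > limit:
            if current:
                chunks.append(current)
                current = ""
            chunks.append(line[:limit])
            line = line[limit:]
        # Start a new chunk when this line would overflow the current one.
        if len(current) + len(line) > limit:
            chunks.append(current)
            current = ""
        current += line
    if current:
        chunks.append(current)
    return chunks


# Joining the chunks always gives back the original text.
parts = split_message("aaaa\nbbbb\ncccc\n", limit=10)
print(parts)
```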

yes, okhttp is probably a good library for the most part. Yes, people like it, but hell, these corner cases and weird design decisions drive me mad!

And it's not like I could swap it with another lib.. I don't depend on it -- other libs I need do!

-

Had a bad day at work :( They gave me this code for some obscure streaming job and asked me to complete it. Only after 3 days did I realize that the LLD given to me was incorrect as the data model was updated. After another 2 days, I was able to debug the code and run it successfully: I was able to parse the tables and generate the required frame but not able to stream it back to the output topic as per the LLD. That’s where I needed help but none of my emails/messages were replied to. The main guy, who is pretty technical, scheduled a code review session with me. I expected that I would run the code and he would spot something I might’ve missed and why my streaming function isn’t working. Instead, what happened was that he grilled me on each and every line of the code (which had some obscure tables queried) and then got super mad at me saying “Why are we having this code review session if your code is not complete?”. I’m like bruh, you asked for it, and yes, the main parsing logic is done and I’m just having this issue in the last part. And he’s like “Why didn’t you tell me earlier?”. Wtf?! I left at least 5 emails and a dozen messages. He’s like this has to go live on Monday, and I’m like Ok, I’ll work on the weekend. And he’s like “Don’t tell me all these things! You’re not doing me a favor by working on weekends! How am I to ask my colleagues to connect with you separately on Saturday/Sunday? You should have done this on the weekdays itself. What were you doing this whole week?”. Bruh, I was running the code multiple times and debugging it using print statements. All while you were ignoring my attempts to reach out to you. SMH 🤦‍♂️ I can go on and on about this whole saga.

-

Should I be excited or concerned?

Newbie dev (babydev) who just learned string vs int and the word "boolean" is SUPER into data parsing, extrapolation and recursion... without knowing what any of those terms mean.

2 ½ hrs later. still nothing... assuming he was confused, I set up a 'quick' call... near 3 hrs later I think he got that it was only meant so I could see if/where he didn't understand... not dive into building extensive data arch... hopefully.

So, we need some basic af PHP forms for some public-provided input into a mySQL db. I figured I'd have him look up mySQL variables/fields, teach him a bit about proper db/field setup and give him something to practice on his currently untouched linux container I just set up so he could have a static ipv4 and cli on our new block (yea... he's spoiled, but has no clue).

I asked him to list some traits of X that he thinks could be relevant. Then to briefly explain the logic for deciding/returning the values/how to store them in the db... essentially basic conditionals and for loops... which are also quite new to him.

I love databases; I know I'm not in the majority... I assumed he'd get a couple traits in his mind and exhaust himself breaking them down. I was wrong. He was/likely is in his sleep now, overcomplicating something that was just meant to be basic af.

Fyi, the company is currently weighted towards more autistics (him and myself included) than neurotypicals.

I know I was(still am) extremely abnormal, especially when it comes to things like data.

So, should I be concerned/have him focus elsewhere for a bit?... I don't want to have him burn out before he even gets to installing mySQL

-

For some project, I wrote this algorithm in Java to parse a lot of XML files and save the data to a database. It needed some tricks and optimisations to run in acceptable time. I did it, and on average it ran for 8-10 minutes.
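The post does not say which tricks were used. Two that typically matter for this kind of job are streaming the XML instead of loading whole documents, and inserting rows in batches inside one transaction. A minimal sketch of both, in Python with `sqlite3` for illustration; the element names, table and function name are all made up.

```python
import io
import sqlite3
import xml.etree.ElementTree as ET


def load_items(xml_stream, conn: sqlite3.Connection, batch_size: int = 1000) -> int:
    """Stream <item> elements into the `items` table, committing once at the end."""
    insert = "INSERT INTO items (id, name) VALUES (?, ?)"
    batch, total = [], 0
    for _event, elem in ET.iterparse(xml_stream, events=("end",)):
        if elem.tag != "item":
            continue
        batch.append((elem.get("id"), elem.findtext("name")))
        elem.clear()  # drop the element's children so memory use stays flat
        if len(batch) >= batch_size:
            conn.executemany(insert, batch)
            total += len(batch)
            batch.clear()
    if batch:  # flush the final partial batch
        conn.executemany(insert, batch)
        total += len(batch)
    conn.commit()
    return total


# Usage with a tiny in-memory document.
conn = sqlite3.connect(":memory:")
conn.execute("CREATE TABLE items (id TEXT, name TEXT)")
sample = io.BytesIO(
    b'<items><item id="1"><name>a</name></item><item id="2"><name>b</name></item></items>'
)
print(load_items(sample, conn, batch_size=1))
```

Row-by-row inserts with a commit after each one are a common reason a naive rewrite of such a job ends up orders of magnitude slower, though whether that is what happened here is not stated.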

After I left the company they got a new guy. He didn't know Java, so they switched to PHP and rewrote the whole project. He did the algorithm his way. After the rewrite it ran for around 8 hours.

I was really proud of myself and shocked they considered it acceptable.

-

Is your code green?

I've been thinking a lot about this for the past year. There was recently an article on this on slashdot.

I like optimising things to a reasonable degree and avoid bloat. What are some signs of code that isn't green?

* Use of technology that says it's fast without real expert review and measurement. Lots of tech out there claims to be fast but actually isn't, or gets there by saturating resources while being inefficient.