Search - "cluster"

-

I hired a woman for senior quality assurance two weeks ago. Impressive resume, great interview, but I was met with some pseudo-sexist puzzled looks from the dev team.

Meeting today. Boss: "Why is the database cluster not working properly?"

Team devs: "We've tried diagnosing the problem, but we can't really find it. It keeps being under high load."

New QA: "It might have something to do with the way you developers write queries".

She pulls up a bunch of code examples with dozens of joins and orderings on unindexed columns, explains that you shouldn't call queries from within looping constructs, that it's smart to limit the data with constraints and aggregations, hints at where to actually place indexes, and shows how not to drag the whole DB to the frontend and process it in VueJS, etc.

New QA: "I've already put the tasks for refactoring the queries in Asana"

I'm grinning, because finally... finally I'm not alone in my crusade anymore.

Boss: "Yeah but that's just that code quality nonsense Bittersweet always keeps nagging about. Why is the database not working? Can't we just add more thingies to the cluster? That would be easier than rewriting the code, right?"

Dev team: "Yes... yes. We could try a few more of these AWS RDS db.m4.10xlarge thingies. That will solve it."

QA looks pissed off, stands up: "No. These queries... they touch the database in so many places, and so violently, that it has to go to therapy. That's why it's down. It just can't take the abuse anymore. You could add more little brothers and sisters to the equation, but damn that would be cruel right? Not to mention that therapy isn't exactly cheap!"

Dev team looks annoyed at me. My boss looks even more annoyed at me. "You hired this one?"

I keep grinning, and I nod.

"I might have offered her a permanent contract."

-

Hey everyone,

First off, a Merry Christmas to everyone who celebrates, happy holidays to everyone, and happy almost-new-year!

Tim and I are very happy with the year devRant has had, and thinking back, there are a lot of 2017 highlights to recap. Here are just a few of the ones that come to mind (this list is not exhaustive and I'm definitely forgetting stuff!):

- We introduced the devRant supporter program (devRant++)! (https://devrant.com/rants/638594/...). Thank you so much to everyone who has embraced devRant++! This program has helped us significantly, and it's made it possible for us to maintain our current infrastructure and not have to cut down on servers or sacrifice app performance and stability.

- We added avatar pets (https://devrant.com/rants/455860/...)

- We finally got the domain devrant.com thanks to @wiardvanrij (https://devrant.com/rants/938509/...)

- The first international devRant meetup (Dutch) was organized by @linuxxx and was a huge success (https://devrant.com/rants/937319/... + https://devrant.com/rants/935713/...)

- We reached 50,000 downloads on Android (https://devrant.com/rants/728421/...)

- We introduced notif tabs (https://devrant.com/rants/1037456/...), which make it easy to filter your in-app notifications by type

- @AlexDeLarge became the first devRant user to hit 50,000++ (https://devrant.com/rants/885432/...), and @linuxxx became the first to hit 75,000++

- We made an April Fools joke that got a lot of people mad at us and hopefully got some laughs too (https://devrant.com/rants/506740/...)

- We launched devDucks!! (https://devducks.com)

- We got rid of the drawer menu in our mobile apps and switched to a tab layout

- We added the ability to subscribe to any user's rants (https://devrant.com/rants/538170/...)

- Introduced the post type selector (https://devrant.com/rants/850978/...) (which will be used for filtering - more details below)

- Started a bug/feature tracker GitHub repo (https://github.com/devRant/devRant)

- We did our first ever live stream (https://youtube.com/watch/...)

- Added an awesome all-black theme (devRant++) (https://devrant.com/rants/850978/...)

- We created an "active discussions" screen within the app so you can easily find rants with booming discussions!

- Thanks to the suggestion of many community members, we added "scroll to bottom" functionality to rants with long comment threads to make those rants more usable

- We improved our app stability and set our personal record for uptime, and we also cut request times in half with some database cluster upgrades

- Awesome new community projects: https://devrant.com/projects (more will be added to the list soon, sorry for the delay!)

- A new landing page for web (https://devrant.com), which was the first phase of our upcoming web overhaul (see below)

Even after all of this stuff, Tim and I both know there is a ton of work to do going forward and we want to continue to make devRant as good as it can be. We rely on your feedback to make that happen and we encourage everyone to keep submitting and discussing ideas in the bug/feature tracker (https://github.com/devRant/devRant).

We only have a little bit of the roadmap right now, but here are some things 2018 will bring:

- A brand new devRant web app: we've heard the feedback loud and clear. This is our top priority right now, and we're happy to say the completely redesigned/overhauled devRant web experience is almost done and will be released in early 2018. We think everyone will really like it.

- Functionality to filter rants by type: this feature has been planned ever since we introduced post types, and it will soon be implemented. The post type filter will allow you to select the types of rants you want to see for any of the sorting methods.

- App stability and usability: we want to dedicate a little time to making sure we don't forget to fix some long-standing bugs with our iOS/Android apps. This includes UI issues, push notification problems on Android, and many other small but annoying problems. We know the stability and usability of devRant is very important to the community, so it's important for us to give it the attention it deserves.

- Improved profiles/avatars: we can't reveal a ton here yet, but we've got some pretty cool ideas that we think everyone will enjoy.

- Private messaging: we think a PM system can add a lot to the app and make it much more intuitive to reach out to people privately. However, Tim and I believe in only launching carefully developed features, so rest assured that a lot of thought will be going into the system to maximize privacy, provide settings that make it easy to turn off, and provide security features that make it very difficult for abuse to take place. We're also open to any ideas here, so just let us know what you might be thinking.

There will be many more additions, but those are just a few we have in mind right now.

We've had a great year, and we really can't thank every member of the devRant community enough. We've always gotten amazingly positive feedback from the community, and we really do appreciate it. One of the most awesome things is when someone compliments the kindness of the devRant community itself, which we hear a lot. It really is such a welcoming community, and we love seeing devs of all kinds and from all geographic locations welcomed with open arms.

2018 will be an important year for devRant as we continue to grow and we will need to continue the momentum. We think the ideas we have right now and the ones that will come from community feedback going forward will allow us to make this a big year and continue to improve the devRant community.

Thanks everyone, and thanks for your amazing contributions to the devRant community!

Looking forward to 2018,

- David and Tim

-

Hey everyone!

devRant will be going down on Friday, July 7th around 10:30pm EDT so we can do some database maintenance and restructuring of our cluster. It hopefully won't be down for more than about 30 minutes or so, and during that time you should see our "down for maintenance" message.

If you usually use devRant while you're on the toilet (we know many do!), we apologize and suggest you try to schedule around this!

Please let me know if you have any questions, and apologies for the inconvenience.

-

rant? rant!

I work for a company that develops a variety of software solutions for companies of varying sizes. The company has three people in charge, and small teams that each work on a certain project. Nine months ago I joined the company as a junior developer, and coincidentally, we also started working on our biggest project so far: an online platform for buying groceries from a variety of vendors/merchants and having them delivered to your doorstep on the same day (this hadn't been done at this scale in Estonia yet). One of the people from management joined the team working on it. The company that ordered this is, coincidentally, run by one of the richest men in Estonia. The platform included the actual website for customers, a logistics system for routing between the merchants, the warehouse, and the customers, and a bunch of mobile apps for the couriers, warehouse personnel, etc. It was built on Node.js with Hapi (for the backend), Angular 2 (for all the UIs, including the apps, which run inside a WebView wrapper), and PostgreSQL (for the database). We (read: the management) gave them a short deadline for the MVP, and we finished it in about 7 months with a team of five.

The hours were insane: from 10 AM to 10 PM if we were lucky. When we weren't lucky (which was half of the time, if not more), we had to work until anywhere from midnight to 3 AM, sometimes even through the whole night. The weekends weren't any better; most of the time we had to put in even more extra hours on the weekends. Luckily, we were paid extra for them, but the salary was nowhere near fair (most of the team earned about 1000€/mo after taxes in a country where junior developers usually earn 1500€/month). Also, because of the short deadline given to us, we skipped all the important parts like writing tests, doing CI, code reviews, feature branching/PRs, etc. I tried pushing the team and the management to at least write tests and make feature branches/PRs, but the management always told me that there wasn't enough time to coordinate and work on all that, and that we'd do it after launching the MVP. We basically just wrote features, tested them by hand, and pushed into the "test" branch, which would later get tested and merged into master.

During development, one of the other juniors managed to write the worst kind of Angular code you could imagine: enormous amounts of duplication, no reusable components (every view contained everything used in that view, so popups and other parts that should logically be reusable were duplicated in every view separately), and fuck, even the HTML was broken (the most memorable for me were the "table > tr > div > td" ones, but that's barely scratching the surface). He left a few months into the project, and we had to build upon his shit, ever so slightly trying to fix it as we went. This could definitely have been avoided if we had done code reviews.

A month after launching the MVP for internal testing, the guy working on the logistics system had burned out and left the company (he's earning more than twice the salary he got here, happy for him, he is a great coder and an even better team player). This could have been avoided if this project had been planned better, but I can't really blame them, since it was the first project they had at this scale (even though they had given longer deadlines for projects way smaller than this).

After we finished and launched the MVP, the second guy from management joined, because he saw we needed extra help. Again I tried to push us into investing the time to write tests for the system (because at this point we had created an unstable cluster fuck of a codebase), but again to no avail. The same "no time, just test it manually for now, we'll do that later when we have time" bullshit from management.

Now, a few weeks ago, the third guy from management joined. He saw what a disaster our whole project was. Him joining was simply a blessing from the skies. He started off by writing migrations using Sequelize. I talked to him about writing tests and everything, and he actually listened. He told me that I'm gonna be the one writing them, and also talked to the rest of management about it. I was overjoyed. I could actually hear the bitterness in the voices of the rest of management when they told me how to write the tests, what to test, etc. But I didn't give a flying rat's ass, I was hapi.

I was told to start off by writing a smoke test for the whole client flow using Puppeteer. I got even happier, since I was finally able to again learn new things (this stopped at about 4 or 5 months into the project).

I'm using Jest as the framework and started writing the tests in TypeScript. Later I found a library called jest-extended, but it didn't have type defs, so I decided to write them and, for the first time in my life, contribute to the open source community.

-

--- GitHub 24-hour outage post mortem ---

As many of you will remember, GitHub fell over earlier this month and cracked its head on the counter top on the way down. For more or less a full 24 hours, the repo-wrangling behemoth presented inconsistent data to users, with slow response times and failing requests during common user actions such as reporting issues and questioning your career choice in code reviews.

It's been revealed in a post-mortem of the incident (link at the end of the article) that DB replication was the root cause of the chaos after a failing 100G network link was replaced during routine maintenance. I don't pretend to be a rockstar-ninja-wizard DBA, but after speaking with colleagues who went a shade whiter when the term "replication" was used, it's clear that it's hard to predict where a design decision will bite back and leave you untangling the web of lies and misinformation reported by the databases for weeks, if not months, after everything's gone a tad sideways.

When the link was yanked out of the east coast DC undergoing maintenance, GitHub's "Orchestrator" software did exactly what it was meant to do: it hit the "ohshi" button and failed over to another DC that wasn't reporting any issues. The hitch in the master plan was that when connectivity came back up at the east coast DC, Orchestrator was unable to fail back to the east coast DC, because each cluster contained data the other didn't have.

At this point it's reasonable to assume that pants were turning funny colours. Monitoring systems across the board started squealing, firing off messages to engineers demanding they rouse from the land of nod and snap back to a reality that was a bit more "on-fire" than usual. A quick call to Orchestrator's API returned a result set that only contained database servers from the west coast; none of the east coast servers had responded.

Come 11pm UTC (about 10 minutes after the initial pant re-colouring), engineers realised they were well and truly backed into a corner; the site was flipped into "Yellow" status and internal mechanisms for deployments were locked out. 5 minutes later an Incident Co-ordinator was dragged from their lair by the status change and almost immediately flipped the site into "Red" status, a move I can only hope was accompanied by all the lights going red and klaxons sounding.

Even more engineers were roused from their slumber to help with the recovery effort. By this point hair was turning grey in real time: the fail-over DB cluster had been processing user data for nearly 40 minutes, and every second that passed made the inevitable untangling process exponentially more difficult. Not long after this, GitHub made the call to pause webhooks and GitHub Pages builds in an attempt to prevent further data loss, causing disruption to those of us using GitHub as a way of kicking off our deployment processes (myself included; I had to SSH in and run a git pull myself like some kind of savage).

Glossing over several more "And then things were still broken" sections of the post mortem: clever engineers with their heads screwed on the right way successfully executed what I can only imagine was a large, complex and risky plan to untangle the mess and restore functionality. GitHub was picked up off the kitchen floor and promptly placed in a comfy chair with a sweet tea to recover. The enormous backlog of webhooks and Pages builds was caught up with, and everything was more or less back to normal.

It goes to show that even the best laid plan rarely survives first contact with the enemy; in this case, a failing 100G network link somewhere inside an east coast data center.

Link to the post mortem: https://blog.github.com/2018-10-30-...

-

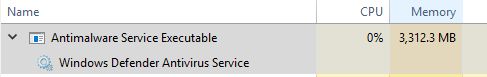

Finished my Raspberry Pi Zero cluster... complete with Kubernetes, etc.

... I still don't know what I'm going to run on this. Suggestions in the comments welcome.

-

Never have I been so furious at work as yesterday. I am still super pissed about going back today, but knowing it's only for another few weeks makes it bearable.

I have been the lead developer on a project for the last ~3 months, and our CTO is the product owner. So every now and then he decides to just work on a feature he is interested in; fair enough, I guess. But every time, I have to go and clean up his horrendous code. Everything he writes is an absolute joke; it's like he is constantly in hackathon mode: "let's just copy and paste some code here, hardcode shit there, and forget about separation of code, it all goes in 1 file".

So yesterday he added an application to the project, and instead of reusing the shared data access layer, he added an entirely new ORM, nearly identical to the existing one, just for this one application.

Being anal about these things, the first thing I did was delete his shit, simply reference the shared library, and then refactor a little code to make it compatible.

WELL!! I certainly hit a nerve. He went crazy spamming messages on Slack demanding I revert, as it broke ONE SINGLE QUERY that he hadn't checked in (he does 1 huge commit for every 10 of everyone else's). I stuck to my principles and explained that both ORMs are similar and that we only needed one; the second would fragment the codebase for no benefit whatsoever.

The lead dev was then forced to come and convince me to revert; again I refused and called out the shit quality of their code. The battle raged on in the public Slack group, and I could hear colleagues enjoying the heated debate; new users even started joining the group just to get in on the CTO's and my difference of opinion.

I even offered to fix his code for him if he were to commit it, obviously that was not taken well ;).

Once I finally got a look at the cluster fuck of shit he had written, it took me around 5 minutes to fix, and I even improved performance. Regardless, he was having none of it, and the demands to revert continued.

I left the office steaming after long discussions with the lead Dev caught in the middle.

Fortunately, my day was salvaged by a positive technical discussion that evening with a company from which I had a job offer.

I really hate burning bridges and have never left a company on bad terms, but this dictator is making me look forward to breaking the news today that I will be gone in 4 weeks.

-

!rant

So last weekend I started collecting hardware for a small scale cluster at home to test scalability of my software. Making some decent progress.

Tomorrow I will replace the switch, and this weekend I will set up storage so I can start my first application.

-

Data scientist: we need to whitelist a pod to connect to a database

Me: Whitelist? We don't use whitelists on private databases

DS: It's the new data warehouse database

Me: is it on <X> VPC?

DS: I'm not sure what that means but its ip is <real world ipv4>

Me: Are you hosting a publicly accessible database with all our end users information?!

DS: ...

Me: There goes our SOC2 audit controls...

DS: How long until you can whitelist it?

Me: I won't be whitelisting it. You need to put it on a private VPC and peer with the cluster, you'll have to rebuild all the Terraform and redeploy

DS: We didn't use Terraform because it takes too long, just whitelist the pod's IP.

Me: No. I'm contacting the CISO and CTO...

-

So, some time ago, I was working for a complete puckered anus of a cosmetics company on their ecommerce product. Won't name names, but they're shitty and known for MLM. If you're clever, go you ;)

Anyways, over the course of years they brought in a competent firm to implement their service layer. I'd even worked with them in the past, and it was designed to handle a frankly ridiculous-scale load. After they got 1.0 released, the manager was replaced with some absolutely talentless, chauvinist cuntrag from a phone company that is well known for having 99% Indian devs and not being able to be heard now. He of course brought in his number two, worked on making life miserable and running everyone on the team off; inside of a year the entire team was ex-said-phone-company.

Watching the decay of this product was a sheer joy. They cratered the database numerous times during peak-load periods, caused $20M in Redis cluster cost overrun, ended up submitting hundreds of erroneous and duplicate orders, and mailed almost $40K worth of product to a random guy in Outer Mongolia who is, we can only hope, now enjoying his new life as an Instagram influencer. They even terminally broke the automatic metadata, and hired THIRTY PEOPLE to sit there and do nothing but edit Swagger. And it was still both wrong and unusable.

Over the course of two years, I ended up rewriting large portions of their infra surrounding the centralized service cancer to do things like, "implement security," as well as cut memory usage and runtimes down by quite literally 100x in the worst cases.

It was during this time I discovered a rather critical flaw. This is the story of what, how and how can you fucking even be that stupid. The issue relates to users and their reports and their ability to order.

I first found this issue looking at some erroneous data for a low value order and went, "There's no fucking way, they're fucking stupid, but this is borderline criminal." It was easy to miss, but someone in a top down reporting chain had submitted an order for someone else in a different org. Shouldn't be possible, but here was that order staring me in the face.

So I set to work seeing if we'd pwned ourselves as an org. I spend a few hours poring over logs from the log service and Dynatrace, trying to recreate what happened. I first test whether I can get a user, not something that was usually done because auth identity was pervasive. I discover the user IDs used when requesting from the API are INCREMENTAL int values straight from the database, so naturally I have a full list of users and their titles and relative positions, as well as reports and descendants, in about 10 minutes.

I try the happy path of setting values for random, known payment methods and org structures similar to the impossible order, and submitting as a normal user, no dice. Several more tries and I'm confident this isn't the vector.

Exhausting that option, I look at the protocol for a type of order in the system that allowed higher-level people to impersonate people below them and use their own payment info for descendant report orders. I see that all of the data for this transaction is stored in a cookie. A few tests later, I discover the UI has no forgery checks, hashing, etc., and just fucking trusts whatever is present in that cookie.
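A minimal sketch of the missing forgery check, assuming the impersonation data lives in a cookie as described: the server signs the cookie body with HMAC-SHA256 under a server-side secret and rejects anything whose tag doesn't match. SECRET_KEY and the payload fields are hypothetical. Signing only stops tampering; the cookie is still readable by the client, and the server must still check that the acting user is actually allowed to order for that report.

```python
import base64
import hashlib
import hmac
import json
from typing import Optional

# Hypothetical server-side secret; load it from secret storage, never from the client.
SECRET_KEY = b"replace-me-with-a-real-secret"


def _tag(body: str) -> str:
    return hmac.new(SECRET_KEY, body.encode("utf-8"), hashlib.sha256).hexdigest()


def sign_cookie(payload: dict) -> str:
    """Serialize the payload and append an HMAC-SHA256 tag: '<base64 body>.<hex tag>'."""
    body = base64.urlsafe_b64encode(json.dumps(payload, sort_keys=True).encode()).decode("ascii")
    return f"{body}.{_tag(body)}"


def verify_cookie(cookie: str) -> Optional[dict]:
    """Return the payload only if the tag matches; otherwise treat the cookie as forged."""
    body, sep, tag = cookie.rpartition(".")
    if not sep:
        return None
    expected = _tag(body)
    # Constant-time comparison so the tag can't be recovered byte by byte via timing.
    if not hmac.compare_digest(tag.encode("utf-8"), expected.encode("ascii")):
        return None
    return json.loads(base64.urlsafe_b64decode(body.encode("ascii")))
```

In this sketch, sign_cookie runs when the server issues the impersonation cookie, and every request that reads the cookie goes through verify_cookie first.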

An hour of tweaking later, I'm impersonating a director as a bottom rung employee. Score. So I fill a cart with a bunch of test items and proceed to checkout. There, in all its glory are the director's payment options. I select one and am presented with:

"please reenter card number to validate."

Bupkiss. Dead end.

OR SO YOU WOULD THINK.

One unimportant detail I noticed during my log investigations, which the shit-slinging GUI monkeys who butchered the system didn't, was that on a failed attempt to submit payment to the DB, the logs were filled with messages like:

"Failed to submit order for [userid] with credit card id [id], number [FULL CREDIT CARD NUMBER]"
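A minimal sketch of a last line of defence against log lines like that, assuming the messages pass through Python code before being written: a regex that masks anything that looks like a 13 to 19 digit card number down to its last four digits. The real fix is to never log the number at all (the card id is already in the message); this pattern is a rough heuristic and will also mask other long digit runs.

```python
import re

# Rough heuristic: 13-19 digits, optionally separated by single spaces or dashes.
_PAN_RE = re.compile(r"\b(?:\d[ -]?){12,18}\d\b")


def mask_pan(text: str) -> str:
    """Replace anything that looks like a card number with asterisks plus its last four digits."""
    def _mask(match: "re.Match[str]") -> str:
        digits = re.sub(r"\D", "", match.group())
        return "*" * (len(digits) - 4) + digits[-4:]

    return _PAN_RE.sub(_mask, text)
```

Short numbers such as user ids and card ids fall below the 13-digit threshold and are left alone.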

One submit click later and the user's credit card number drops into lnav like a gacha prize. I dutifully rerun the checkout and get an email-send notification in the logs for a successful transfer to fulfillment. Order placed. Some continued experimentation later, the truth is evident:

With an authenticated user of any privilege, you could place any order, as anyone, using anyone's payment methods, and have it sent anywhere.

So naturally, I pack the crucifixion-worthy body of evidence up and walk it into the IT director's office. I show him the defect, and he turns sheet fucking white. He knows there's no recovering from it, and there's no way his shitstick service team can handle fixing it. Somewhere in his tiny little grinchly manager's heart he knew they'd caused it, and that he was to blame for being a shit captain of the SS Failboat. He replies quietly, "You will never speak of this to anyone. Fix this discreetly." Straight-up Hitler's bunker meme rage.

-

A Developer is desperate: his Java application servers are unresponsive, thousands of dead zombie threads are sucking up all the CPUs, memory is leaking everywhere, the garbage collector has gone crazy, the cluster sessions are fucked...

The Developer goes to the closest bridge, ties a stone to his neck and gets ready to jump.

Suddenly a bearded old man with a fiery look runs toward him, yelling:

- stop stop!!!! Your application is not scaling and misconfigured, your servers are melting, cpu usage is not sustainable anymore, but don't despair

The Developer, puzzled, looks at him:

-I've never seen you...how do you know...

- Hey, man, I'm the Devil. I know everything. All your problems are solved. I'll give you magic functions. They are called Lambda.

You'll never have to worry about your servers, scalability, security, configuration and shit.

The Developer seems astonished but relieved:

- Ok, sounds great! let's try it - suddenly suspicion creeps in - hmmmm but you are the Devil....so...you want something back, don't you?

(the Devil nods lightly with a diabolic smile)

- ...and...you want my soul, I guess...

- Your soul??? Come on!!! - the Devil bursts out laughing - we are in 2019. I don't care about your soul. I want your ass.

- What!???!!!?

- yes, I want to fuck your ass

The Developer quickly evaluates the situation.

A few moments of pain or slight discomfort (?) in exchange for magic Lambda. It could be worth it. He accepts.

After a while of rough anal fucking, the devil asks

- Hey, how old are you anyway?

- 45, why?

- Oh jeeez... 45!!!??? And you still believe in the devil?

-

We are moving to kubernetes.

Nothing much has changed except we now get to say

it works on my cluster.

instead of

it works on my machine.

-

Reasons I hate the US

1. It's fucking 2 in the morning there and folks are checking their Slack when they wake up for pee breaks or after their sex sessions.

Nearly 90% of the team is on and off checking Slack.

2. Culture fucks have only 10 holidays, and hence they align the rest of the world to their calendar and only give 10 holidays. India, Europe, and the entire world can easily get 15 holidays per year outside their leave quota.

What a cluster fuck of a country it is.

-

A dude just DROPPED the whole Fu**ing MongoDB cluster. Like, right now.

Multiple databases, spanning multiple projects.

Fortunately in dev, but dunno how much data is recoverable.

-

Some days I just want to shoot myself.

I get why... someone might do this, but sweet mother of god!

-

CEO - So... We'll have a new side project, it's a small thing, I spoke with a guy, he needs just a small thing...

Me - Ok....... So, what do you need me for?

CEO - Not much, this guy started a project but wants your help on a small part, and you already did something similar for us, so it should be fast, just copy and paste and change a little bit. You probably know better than me.

Me - *Sigh* ... So `friend`, what do you need from me?

Friend - So, I made a crawler that is storing some information on a local file, I just need to add multiprocessing and multithreading, a producer-consumer system with a queue so I can automatically add new links every now and then, a failback system, so when a process doesn't finish, it should be re-queued and crawled later, store all the information on a database cluster (that is not set up), [......]
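A minimal sketch of the producer-consumer part of that wish list, using only Python's standard library: worker threads pull URLs from a shared queue.Queue, and a URL whose fetch raises is put back on the queue until it has failed max_attempts times. fetch is a hypothetical caller-supplied function, threads stand in for the multiprocessing part (reasonable for I/O-bound crawling), and the database cluster is left out entirely.

```python
import queue
import threading
from typing import Callable, Dict, List, Optional, Tuple


def crawl_all(
    urls: List[str],
    fetch: Callable[[str], str],
    workers: int = 4,
    max_attempts: int = 3,
) -> Dict[str, str]:
    """Producer-consumer crawl with re-queueing of failed URLs (a sketch)."""
    work: "queue.Queue[Optional[Tuple[str, int]]]" = queue.Queue()
    results: Dict[str, str] = {}
    results_lock = threading.Lock()

    # Producer side: seed the queue. Each item carries its attempt number.
    for url in urls:
        work.put((url, 1))

    def worker() -> None:
        while True:
            item = work.get()
            if item is None:  # sentinel: no more work for this thread
                work.task_done()
                return
            url, attempt = item
            try:
                page = fetch(url)
                with results_lock:
                    results[url] = page
            except Exception:
                # The "failback" from the request: re-queue for a later retry.
                if attempt < max_attempts:
                    work.put((url, attempt + 1))
            finally:
                work.task_done()

    threads = [threading.Thread(target=worker, daemon=True) for _ in range(workers)]
    for t in threads:
        t.start()
    work.join()  # returns once every item, including retries, is marked done
    for _ in threads:
        work.put(None)
    for t in threads:
        t.join()
    return results
```

Because a failed URL is re-queued before its task_done call, work.join() cannot return while a retry is still pending. URLs that exhaust their attempts are simply dropped here; a real crawler would probably record them somewhere.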

Me - And when is this supposed to be up and running?

Friend - Your CEO told me you could do this by the weekend. Can we finish this by Friday?

Me - *facepalm* FML

-

Inception.

Today I needed to check something on a remote server; this was the easiest way:

1: TeamViewer to my home PC from university

2: started a VM on that machine with a VPN connection to my work office

3: RDP to a Windows Server VM

4: SSH to a VM on our hosting cluster

5: from there, SSH to the server that I needed access to

-

Yep. So the dev team's boss says it's fine to run a production environment on a single Windows instance with the DB on that same instance, which they had already totally lost once after a reboot from an auto-update, before I came along tasked with fixing the cluster fuck they created.

This from a man who somehow runs a dev team while using Gmail via the web because he can't use an email client, uses email to track tasks but can't because they get lost amongst his 3000+ unread emails, has a screen dirtier than a hooker's vag on half-priced Tuesday, and got a new laptop but had to get his daughter to set it up and transfer his data because he couldn't.

But OK... you have a degree, you must know what you're doing.

It's ok though, I'll keep covering your incompetent ass while you keep raping the company because no one listens.

People's ignorance and arrogance astound me.

-

I am running a small, but growing, Ceph cluster at work, since it is fun and our storage demand is growing each day.

Today, it was time to bring another node online and add another 12TB to the cluster.

Installation of the OS went fine, network settings fine, drives look fine.

Now, time to add it into the cluster.... BAM

Every Dell machine in the cluster: dead.

The two HP machines are online and running, but the Dell machines just died.

WAT!?

-

Went in to the therapist today. Their impression is I'm likely okay. Wait until I show them the cluster fuck that is Java Enterprise. 🙃

-

We are building a big-data engine for a client's product, for which we were using a cluster on GCP, and they were billed ~$1,100 for last month's usage.

The CTO, the CHIEF FUCKING TECHNOLOGY OFFICER, told us to hook up 5-6 laptops in our server room and create our own cluster because they cannot afford such a bill.

-

"Let's create a docker-swarm cluster thingy for this application with horizontal scaling to learn docker and run this application better!"

This stuff is so overwhelming and I don't understand half of how to possibly set this up 😅

-

Manager: "Can we get an accurate report on how many containers we have on the Kubernetes cluster?"

Me: "Well not really since Kubernetes is designed to be dynamic and agile with the number of resources and containers being created and deleted being subject to change at a moment's notice."

Manager: "I want numbers"

Me: "Okay, well, if we look at a simple moving average over time, we can see how the number of containers changes and then grab a rough answer from that."

Manager: "These numbers look a little round, are you sure these are exact?"

I'm going to throw myself into a pile of used heroin needles and hope I get stuck with whatever the hell this guy has to somehow be a manager while also being this retarded.
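The "simple moving average" offered above is just the mean of the last N samples, which is why it can never give the exact count the manager wanted. A minimal Python sketch, assuming container counts were sampled at a fixed interval (the sample values and the function name are made up for illustration, not taken from any real cluster):

```python
from __future__ import annotations

from collections import deque


def simple_moving_average(samples: list[int], window: int) -> list[float]:
    """Mean of each run of `window` consecutive samples (one value per full window)."""
    if window <= 0:
        raise ValueError("window must be positive")
    recent: deque[int] = deque()
    running_total = 0
    averages: list[float] = []
    for count in samples:
        recent.append(count)
        running_total += count
        if len(recent) > window:
            # Drop the oldest sample so the window slides forward by one.
            running_total -= recent.popleft()
        if len(recent) == window:
            averages.append(running_total / window)
    return averages


# Hypothetical container counts, e.g. sampled once per minute.
counts = [40, 42, 47, 45, 39, 41]
print(simple_moving_average(counts, window=3))
```

The result is a smoothed estimate of a number that keeps changing, not an inventory, so "round-looking" values are exactly what it should produce.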

Today we presented our project in Embedded Systems. We made our so-called "Blinkdiagnosegerät" (blink diagnosis device), which is used to read error codes from older vehicles that use the check engine light to output the error. (for reference: http://up.picr.de/7461761jwd.jpg ) This was common for vehicles without OBD.

We made our own PCB, made a small database for 2 vehicles, and used a Suzuki Samurai instrument cluster for the presentation (hooked up to an Arduino UNO and a relay for emulating some error codes).

Got a 1.0 (A) for the project. Feel proud of the first project done in C++ and of making our own PCB. So no rant, just a good day after all the stress of the last weeks doing all the assignments and presentations.

Next week we hopefully finish our inverted pendulum in Simulink, and then the exams are close. :D

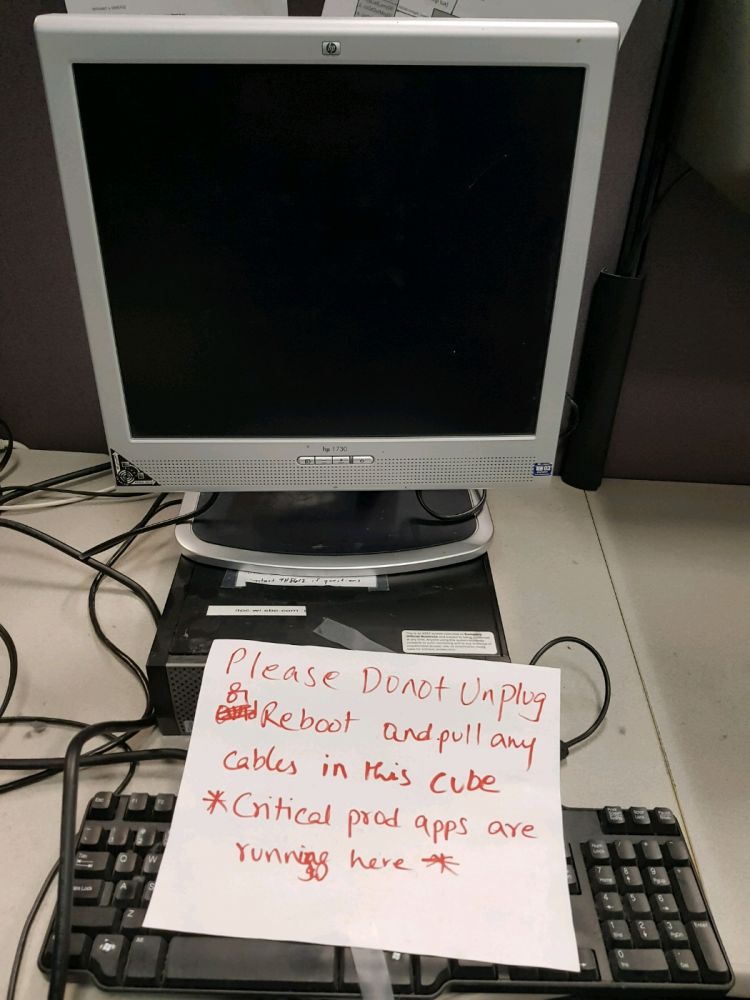

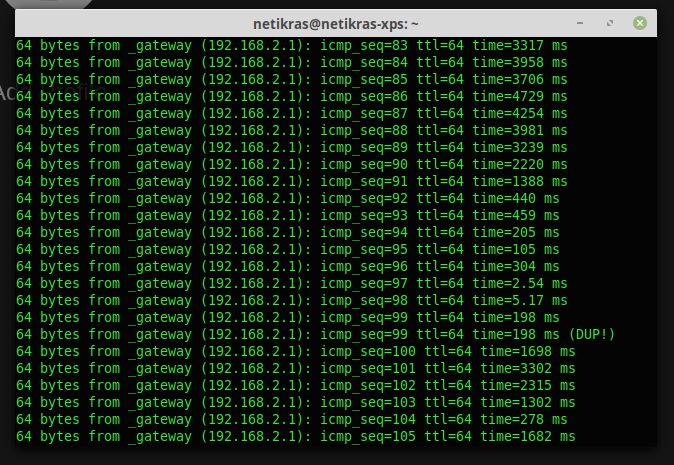

> Monitoring: Load Average of 57!! ALERT!!!!

> me: What? That's not possible?

> *Monitoring froze 14 hours ago*

> *sshs into server*

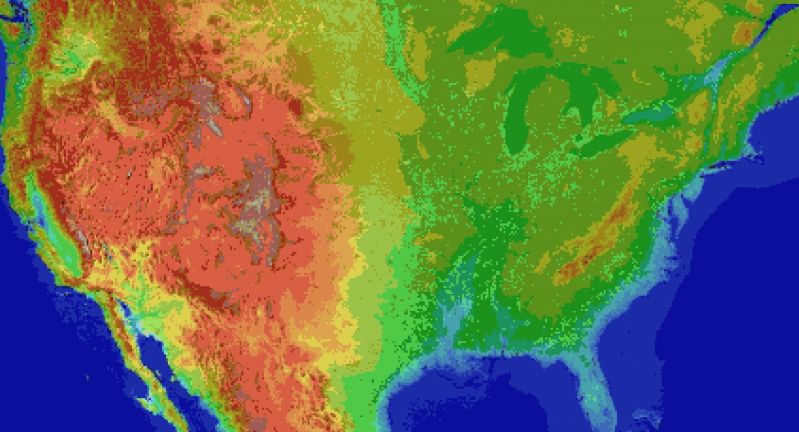

> *see attached image*

The issue was ~1200 df processes that had been issued by our monitoring system, none of which finished because the external cluster we had mounted on that server died a few minutes before. Just re-mounting the cluster fixed it, but it was still a funny sight!
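One way to keep a dead mount from turning into ~1200 hung processes is to never start a check while the previous one is still running. Below is a minimal Python sketch of that guard; the class name `NonOverlappingCheck`, the `tick()` hook and the mount path are hypothetical, not the monitoring system from the story. It skips rather than kills, because a process blocked on a dead network mount may not respond to signals until the I/O returns.

```python
from __future__ import annotations

import subprocess
from typing import Optional


class NonOverlappingCheck:
    """Runs a check command, but never starts a new run while the previous one is alive."""

    def __init__(self, argv: list[str]) -> None:
        self.argv = argv
        self.proc: Optional[subprocess.Popen] = None
        self.skipped = 0

    def tick(self) -> str:
        """Call once per monitoring interval; returns what happened this interval."""
        if self.proc is not None and self.proc.poll() is None:
            # Previous run still hasn't exited (e.g. df blocked on a dead mount):
            # count it and skip instead of spawning yet another process.
            self.skipped += 1
            return "skipped"
        self.proc = subprocess.Popen(
            self.argv, stdout=subprocess.DEVNULL, stderr=subprocess.DEVNULL
        )
        return "started"


# Hypothetical usage: the scheduler would call disk_check.tick() every interval.
disk_check = NonOverlappingCheck(["df", "-P", "/mnt/external"])
```

A growing `skipped` counter is itself a useful alert: it says "this check is stuck", which is a much clearer signal than a load average of 57.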

Got laid off last week with the rest of the dev team, except one full-stack Laravel dev. Investors' money is drying up, and the clowns can't figure out how to sell what we have.

I was all of DevOps and cloud infra. Had a nice k8s cluster, all Terraform and GitOps. The only dev left is being asked to migrate all of it to Laravel Forge: 7 ML microservices, a monolith web app, HashiCorp Vault, Prefect, MLflow, Kubecost, Rancher, and some other random services.

The genius asked the dev to move everything to a single AWS account and deploy it publicly with Laravel Forge... while adding more features. The VP of engineering just finished his 3-year plan for the 5 months of runway they have left.

I already have another job offer for 50k more a year. I'm out of here!

Personally, the coolest was the program I built for my father to use at his job.

It was my first program to be used commercially in the real world.

That was a very big thing: I was 17 at the time and used Turbo Pascal 5.5. He used it to compute how well all the machinery was doing. They rented out diggers and other construction equipment to construction sites, and computing this manually with a calculator took up to three days. (This was 1987, so there weren't many ready-made programs for business; you often had to build your own.)

With this program he had it done in around 30 minutes.

The next best was recently, when I got my Raft distributed consensus cluster server working. It's a little bit like ZooKeeper.

Building that purely from the research paper was rewarding, but a bit of a challenge.

My company provides cloud infrastructure to our customers. For research purposes we are running a little OpenStack cloud in our laboratory datacenter, where we can test stuff before implementing it in the production environment.

Last week the manager asked me to shut down the cluster over night and only power on the servers when we need it. (about twice a week)

The reason: it produces too much heat.

My answer was: No.

First off, that's not how cloud infrastructure works, and how about proper climate control?

Sometimes I ask myself which parallel universe our managers live in 😑

So I'm sitting on the swings, minding my own business, seeing how best I could destroy this cluster of servers, when suddenly I notice SOMEONE IS COMING FOR MY COFFEE

"hi neighbour! What have you got there?"

Finally got ceph up and running, so far it does look really great!

At the moment we have 45 TB in the cluster, but we are able to expand it extremely easily.

Two servers with 256 GB RAM and 24 cores, three more with 32 GB and 12 cores.

Dedicated gigabit WAN connection.

The project is going forward strong, I'll let you know when it is time for beta testing.

Between containers, cluster managers and virtual machines, we've lost track of where our code even is.

IT is so violent and disturbing when it comes to technical terms in relation to clusters:

- master / slave

- master / master / witness (crime scene?)

- splitbrain (how does that look in reality?)

- stonith (shoot the other node in the head)

- deadtime (no heartbeat)

- slicing (wtf?)

- shard (do they kill with that?)

- active / passive (sex scene?)

- single point of failure (at least they know who is to blame)

- share nothing / shared all (dictators vs communism)

😯

I once found a MongoDB cluster open to the internet with no authentication and nearly a terabyte of data; it backed a CRM service whose customers included Microsoft and Adobe, to name a few.

OMFG! Whose bright, fucking horrible, stupid-ass idea was it to mix Ajax with PHP (PHP deciding the Ajax paths), with random JS outputting HTML inside random fucking static divs found nowhere near the logical route of the content?

Trying to add a simple fucking status to a gigantic cluster fuck of a legacy project is just FUCK.

If I could, I would burn this bitch to the ground and start again. But no, it’s needed.

Someone kill me before I break the shit out of this thing; I would take a WordPress project right now instead.

I received a job offer as a web-app developer, and in order to get to the interview, I had to do an online IQ test.

I log in to the website and I see an ugly cluster of links, missing information, errors on receiving the data I just entered, a lot of bugs, broken links, unavailable images, blank pages and so on.

I'm not sure I want to work there anymore

So you want full stack engineers to: design, do UX, create front end, build backend and deploy it in your mono repo stupid manual deployment "kubernetes cluster", add monitoring alerting manually, review others PR, QA our own apps and features, manually sync to Production, use VPN otherwise we cannot connect to anything, 2factor auth, do SRE, architecture diagrams, demo, run agile ceremonies, and learn a legacy coding language which was never mentioned in the job description. Did I miss anything?

So we have an API that my team is supposed to send messages to in a fire-and-forget kind of style.

We are dependent on it. If it fails there is some annoying manual labor involved to clean that mess up. (If it even can be cleaned up, as sometimes it is also time-sensitive.)

Yet once in a while, that endpoint just crashes and lets the request vanish. No response, no error, nothing, it is just gone.

Digging through the log files of that API, nothing pops up. Then I realize the size of the log files: about 30 GB of good old plain-text log files.

It turns out that API has taken the LOG EVERYTHING approach so much to heart that it logs to the point of its own death.

Is circular logging such a bleeding edge technology? It's not like there are external solutions for it like loggly or kibana. But oh, one might have to pay for them. Just dump it to the disk :/
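If the API in question happened to be written in Python (an assumption; the rant doesn't say), the standard library already ships size-based rotation, which gives the bounded, "circular" behaviour asked for here: `logging.handlers.RotatingFileHandler`. A minimal sketch; the logger name, path and size limits are illustrative, not taken from the story:

```python
import logging
from logging.handlers import RotatingFileHandler


def build_rotating_logger(
    name: str,
    path: str,
    max_bytes: int = 100 * 1024 * 1024,
    backup_count: int = 5,
) -> logging.Logger:
    """Logger whose files on disk are capped at roughly (backup_count + 1) * max_bytes."""
    logger = logging.getLogger(name)
    logger.setLevel(logging.INFO)
    logger.propagate = False  # keep these records out of the root logger's handlers
    # When writing a record would push the active file to max_bytes, it is renamed
    # to path.1 (path.1 -> path.2, ...) and the oldest backup is discarded.
    handler = RotatingFileHandler(path, maxBytes=max_bytes, backupCount=backup_count)
    handler.setFormatter(logging.Formatter("%(asctime)s %(levelname)s %(message)s"))
    logger.addHandler(handler)
    return logger


# Hypothetical usage: 100 MB per file and 5 backups, so at most about 600 MB of logs.
# log = build_rotating_logger("api", "/var/log/api/api.log")
```

With limits like these, even a "log everything" service stays far below a 100 GB disk instead of filling it.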

This is again a combination of developers thinking "I don't need to care about space! It's cheap!" and managers thinking "100 GB should be enough for that server cluster. Let's restrict its HDD to 100GB, save some money!"

And then, here I stand, trying to keep my sanity :/

We have a 15-machine cluster that went down last night because one machine in the cluster went down. Apparently having a cluster for redundancy is just a nice idea and doesn't actually work in practice.

Also, I shouldn't have to go to a vendor's forums to find out that the bug causing my cluster to go down is fixed in a future version. It should be in the goddamn patch notes!!!

Should’ve posted this after it happened, but it requires a bit of background anyway.

There’s this guy who oversees our OpenStack environment. My team often makes jokes and groans about him in private because he’s so overbearing. A few months back, he had to take us to our data center to show us our new racks, and he kept saying stupid stuff like “you break this and it costs me $30,000” as if he owns everything. He’s just... one of THOSE people. Always speaks in such a condescending way. We joke that he is our “best friend”.

Our company is shifting most of our products to the cloud in response to the coronavirus (trying to make it an opportunity for “innovation”). This has involved some structural and responsibility changes in our department, and long story short, I’m now heading the OpenStack environment alongside other projects.

This means going through grueling 1-on-1 meetings with our “best friend”. It’s not too bad, I can be pretty patient with people, so I didn’t mind too much at first. Then a few things happened.

1. He sent a shared folder that he owned containing info related to the environments. Several documents were outdated and incomplete, so I downloaded them, corrected them, and then uploaded the documents to my team’s file share, as I was supposed to, since we now own the projects.

2. Several files were missing, and when I asked about them, he said “Oh, did you refresh the browser?”. I told him no, that I had downloaded them locally and republished them to my team’s server, because he was supposed to hand everything off to us at once. He says “Well, silly, how are you going to get updates if you’re looking at them locally?” and kind of chuckles at me like I’m stupid.

3. He insists on training me to remote into one of the servers to check on cluster space, which in itself is fine. I understand others wanting to make sure things will be done right by the people who come after them. But he tells me to download SuperPutty. I tell him, “oh no, that’s alright. I don’t need PuTTY”. He says “oh cool, what tool do you use for ssh?”. I answer “Just Git. If I want to, I can use a CentOS bash terminal too, because we have WSL installed”. He responds “You can’t ssh through Git”.

I was actually a little shocked. I didn’t know if he was serious or not, so I was silent for a few seconds before hesitantly saying “yes you can”. He says “this is news to me”, so I tell him “every single one of our build jobs fetches code from Git with ssh”, and he seemed genuinely shocked and surprised by that.... So then it occurs to me to show him that you can ssh in PowerShell, and that REALLY blew his mind. He would not shut up about it for several minutes. I was amused until it just got annoying.

Needless to say, my team had previously been teasing me about having to work with him, so they found it hilarious when I told them afterwards.

Once upon a time, one or two jobs ago, a really awesome engineer specced out a distributed search application in response to a business need. This company was managed pretty oldschool and required a ton of paperwork and approvals.

The engineer spent many weeks running tests and optimizing the hell out of this app cluster. It flew, and he had the data to prove it could handle production workloads (think hundreds of terabytes of data being processed every single day).

Part of the way he achieved this was having RAID0 on all of the servers to maximize I/O throughput. He didn't care much about data loss, since the application itself was fault tolerant on a much more granular level.

Management, hearing about this, absolutely flipped their shit and demanded RAID6 instead. This was despite the engineer's conclusive data proving that RAID6 couldn't keep up.

He more or less got told to STFU.

This was even despite the fact that a RAID restripe would actually take many times longer than rebuilding the failed node from scratch (a process that took about 30 minutes by hand, and could probably be automated to take less than five), meaning a longer exposure to actual data loss throughout the days-long array rebuild.

The ill-thought-out requirement added about 50% to the cost of the project (*many* more hard drives now required), beyond the original budget, and the subsequent bureaucratic wrangling resulted in a late product launch.

6 months or so later, after real customers were using this product, the app was buckling under around half of its expected workload. A friend of the engineer suggested to management to try RAID0. Sure enough, that resolved the I/O bottleneck.

This rage-inducing story has a happy ending, though! Said engineer left the company not long after this incident, citing it as a reason for his departure. He was immediately hired by another company, making integer multiples of his prior salary.

The product the company botched the launch of by ignoring his spec? It died a few months later. Maybe the poor customer experience was to blame? Maybe the late launch? Maybe it was another reason entirely.

Either way, millions of dollars of hardware now sat fallow. This was a black eye on the company all the way up to the C-level.

tl;dr: Listen to your engineers. You hired them for their expertise.

"four million dollars"

TL;DR. Seriously, It's way too long.

That's all the management really cares about, apparently.

It all started when there were heated, war-faced discussions with a major client this weekend (coonts, I tell ye), and it was decided that a stupid, out-of-context customisation POC, hacked together by the "customisation and delivery" team (who know how to do neither), needed to be merged into the product (a hot, lumpy cluster fuck, made in a technology so old that even its great creators (namely Goo-fucking-gle) decided it was their worst mistake ever and stopped supporting it (or even acknowledging its existence at this point)).

This morning, my manager calls me and announces that I'm the lucky fuck who gets to do this shit.

Now, being the de facto git admin for our team (after the last lead left, I was the only one with adequate experience), I suggested to my manager: "boss, here's a light bulb. Why don't we just create a new branch for the fuckers and ask them to merge their shite with our shite, and then all we'll have to do is build the mixed-up shite to create an even smellier pile of shite and feed it to the customer".

"I agree with you mahaDev (when haven't you said that, coont), but the thing is <insert random manager talk here>, so we're the ones who'll have to do it (again, when haven't you said that, coont)"

I said fine. Send me the details. He forwarded me a mail, which contained context not amounting to half a syllable of the word "context". I pinged the guy who developed the hack. He gave me nothing but a link to his code repo. I said give me details. He simply said "I've sent the repo details, what else do you require?"

1st motherfucker.

Dafuq? Dude, gimme some spice. Dafuq you done? Dafuq libraries you used? Dafuq APIs you used? Where Dafuq did you get this old ass checkout on which you've made these changes? AND DAFUQ IS THIS TOOL SUPPOSED TO DO AND HOW DOES IT AFFECT MY PRODUCT?

Anyway, since I didn't get a lot of info, I set about trying to just merge the code blindly and fix all conflicts, assuming that no new libraries/APIs have been used and the code is compatible with our master code base.

Enter delivery head. 2nd motherfucker.

This coont neither has technical knowledge nor the common sense to ask someone who knows his shit to help out with the technical stuff.

I find out that this was the half-assed moron who agreed to a 3-day timeline (and our build takes around 13 hours to complete, end to end). Because fuck testing. They validated their tool, we've tested our product. There's no way it can fail when we make a hybrid cocktail that will make the Elephant's Foot look like a frikkin mojito!

Anywho, he comes by every half-mother fucking-hour and asks whether the build has been triggered.

Bitch. I have no clue what is going on and your people apparently don't have the time to give a fuck. How in the world do you expect me to finish this in 5 minutes?

Anyway, after I compile for the first time after merging, I see enough compilation errors to last a frikkin lifetime. I kid you not, I scrolled for a complete minute before reaching the last one.

Again, my assumption was that there were no library or dependency changes, nor did I know that the dude had used completely different libraries altogether in some places.

Now I know it's my fault for not checking myself, but I was already having a bad day.

I then proceeded to have a little tantrum. In the middle of the floor, because I DIDN'T HAVE A CLUE WHAT CHANGES WERE MADE AND NOBODY CARED ENOUGH TO GIVE A FUCKING FUCK ABOUT THE DAMN FUCK.

Lo and behold, everyone's at my service now. I get everything clarified; it takes around an hour and a half of my time (it could have been done in 20 minutes had someone given me the complete info) to find out all I need to know, and I proceed to remove all the compilation problems.

Hurrah. In my frustration, I forgot to push some changes, and because of some weird shit in our build framework, the build failed in Jenkins. Multiple times. Even though the exact same code was working on my local setup (cliche, I know).

In any case, it was sometime while sorting out this mess that I came to know why the 2nd motherfucker accepted the 3-day deadline: the total bill being slapped on the customer is four fucking million USD.

Greed. Wow. The fucker just sacrificed everyone's day and night (his team's and the next) for 4 mil. And my manager and director agreed. Four fucking million dollars. I don't get to see a penny of it. I work for peanut shells, for 15 hours; you'll get bonuses and commissions; the fucking junior dev earns more than me; and although my manager says I'm the MVP of the team, all I get is a thanks and a bad rating for this hike cycle.

4 mil USD, I learnt today, is enough to make you lick the smelly, hairy balls of a Neanderthal, even though the money isn't truly yours.

5 of us working for a larger team were tasked with doing some R&D, we blew everyone away and were given funding to start a new team and hire people to make the project come to life.

One of the high level sales / product managers we were reporting to, secretly had another team work on a similar idea because he needed it quicker (i.e. no time for research, just build it).

After forming new team, we were asked to work on his project instead because it was further along. 4 months later, big knob comes to a meeting and basically says "You know what, this doesn't look like we have enough features, we need more, but I don't know what".

Project blew up 2 months later, head of the unit kicked up a shit storm saying how badly everything was planned and canned everything. Now one of our clients is building nearly the same thing we were originally working on, the team no longer exists and I'm back on the R&D team.

Don't get me wrong, I LOVE the R&D team, actually didn't want to leave in the first place but was told I had to. But the sheer anger and frustration of seeing that walking cluster fuck strutting around like his shit doesn't stink, derailing entire teams, while we can't hire new staff due to lack of funding!

Here's an idea: fire the fucktards bleeding us dry... then we'll have lots of funding.

Was forced to do some work on Windows this week (CAD tools that run only on Windows). I spent a few days just setting up the tools. There were quite a few things I realized I'd forgotten about Windows (compared to Linux).

1) Installation times are downright horrific. What exactly is the installer doing for 10 minutes?

2) .NET is a cluster fuck. Not even Microsoft's repair tool can fix it; it just hangs. I ended up using another tool to nuke it and reinstall.

3) Windows binary installers are insanely huge and thus take forever to download.

4) The registry is a pointless database that must have been written in hell with the single intent of destroying users' will to live. The mere existence of the registry is further proof that completely incompetent engineers designed Windows.

5) Rebooting is the only way to solve many problems. This is another sure sign of a fundamentally fucked up OS design.

6) What the heck is wrong with the GUI designers? The control panel must be the worst design ever. There are so many levels to get to a particular setting that I'm getting dizzy. The illogical organisation doesn't help either.

7) Windows networking. A perversion of the TCP/IP stack that makes it virtually impossible to understand a damn thing about the current network configuration. There are at least 3 different places that affect the settings.

8) Windows command prompt. Why did they even bother to leave it in? The interpreter is as intelligent as a retarded donut. You can't do anything with it except type "exit" and google for another solution.

9) Updates. Why does it take hundreds of updates per month to keep that thing safe?

10) Despite all the updates flying out of Redmond like confetti, it is still necessary to install antivirus to keep the damn thing safe. That costs extra money, and further costs you by degrading the performance of your hardware.

11) Windows performance. Software runs like it's swimming in molasses. The final stab in the back on your hardware investment, and it pretty much sets your hardware's performance back a few hundred bucks more.

12) Closed source is evil. If something crashes consistently, you might find a forum that addresses the issue you have. Otherwise you're out of luck. On the other hand, it might be for the better. I imagine reading the code for Windows can lead to severe depression.

I'm lucky to be a Linux dev, and should probably not complain too much... But really, Windows, go get yourself hit by a truck and die. I won't miss you.

Just let me be a programmer. Why do I have to learn yaml and deploy an app with 100 lines of code to a kubernetes cluster with literally 0 users?

Scale sucks.

REDIS: Great for cloud, will fuck up your local disk if too many write operations per second.

DynamoDB: WTF 10Mb should not be "too large for a single record"!!

SPARK: NEVER CONNECT IT TO A DATABASE! Wasted A LOT of cluster time. Also, can you be LESS specific on exactly what are the bugs in my code? 'cause I don't think it's possible.

NPM: can't install a package for shit. Tried it waaaay too many times.

Makefiles: Just fuck you.

WSL1: breaks more often than a glass hammer.

Python >= 3.6: FUCK ENCODINGS!!

Jupyter: STOP MESSING UP WHILE SAVING!

Living is to collect bugs, it seems.

I had spent the last year working on an online store powered by WooCommerce with over 100k products from various suppliers. This online store used a custom API that took the various formats suppliers offer their inventory in and made them consistent. Everything was going swimmingly at first, but then I began adding more and more products using a plugin called WP All Import. I reached around 100k products and the site would take up to an entire minute to load, sometimes timing out. I got desperate and installed several caching plugins, but to no avail. The site was originally only supposed to take three to four months but ended up taking an entire year. Then, just yesterday, I found out what went wrong and why this WooCommerce website, with all of these optimizations, was still taking anywhere from 60 to 90 seconds to load, or just timing out entirely. I had initially thought that I needed a beefier server, so I moved it to a high-CPU DigitalOcean VM. While this did help a little bit, the site was still very slow, and now I had very high CPU usage, RAM usage and disk I/O. I was seriously stumped: the Apache process was using a high amount of CPU and I/O, and so was MySQL. It wasn't until I started digging deeper into the database that I actually found out what the issue was. While loading the site, I would run 'SHOW PROCESSLIST' in the SQL terminal, and I began to notice a very significant load time for one of the tables, so I went to check it out. I ran a select-all query on that particular table just to see how full it was, and MySQL returned an error saying that I had exceeded the maximum packet size. So I was like, okay, what the fuck...

So I exited MySQL and re-entered it, this time with a higher packet size. I ran a query to count how many rows were in this particular table, and the number came out in the millions. I was surprised, and what's worse, this table belonged to a plugin that I had tried early in the development process to cache the site. The plugin was deactivated, but apparently it had left PHP files within the wp-content directory, outside of the actual plugin directory, so it was still executing scripts even though the plugin itself was disabled. Basically, every time I changed anything on the site, it would recache the whole thing, and it never deleted any old records. So 100k+ products recached on every save with no garbage collection... You do the math, it's gonna be a heavy-ass database. Not only that, but it was serialized data, so when it pulled this metric shit-ton of spaghetti from the database, PHP then had to deserialize it. Hence the high-ass CPU load. I had caching enabled on the MySQL end of things, so that ate the RAM. I was really desperate to get this thing running.

Honest to God, the main reason this website took so long was that the load times made it miserable to work on. I just thought the hardware I had the site on was inadequate. I had initially started development on a small Linux VM, which apparently wasn't enough, which is why I moved it to DigitalOcean, which also seemed not to be enough, so from there I moved to a dedicated server, which still didn't seem to be enough. I was probably a few more 60-second waits or timeouts away from recommending a server cluster to my client, who I know would not be willing to pay for it. The client to whom I had promised this site in 3 months and who has waited a year. Seriously, I would tell people about the struggles I went through with this particular site, and they would tell me to just drop it; just take the money, just take the loss. I refused to: this was really the only thing that was kicking my ass. I present myself as this high-and-mighty developer, like I'm really good at what I do, but then I have this WordPress site that's been beating the shit out of me for a year. It was a very big learning experience and also very humbling; it made me realize that I really don't know as much as I think I do. It was evidence that there is still so much more to learn out there. I did learn a lot from that experience, especially about optimizing websites and the different methods for doing so, particularly on the server side, and I'll be able to use this knowledge in the future.

I guess the moral of the story is: never really give up. Things might get so bad that you're running on hopes and dreams, and those experiences are generally the most humbling. Now I can finally present the site, which I'm basically a year late on, to the client, who will be so happy that I did not give up on the project entirely. I'll experience a feeling of pure euphoria and help the small business grow its revenue significantly. Helping others is very fulfilling for me, even at my own expense.

Anyways, gonna stop ranting. Running out of characters. If you're still here... Ty for reading :')

I do like Windows, it is quite a good OS nowadays, but for FUCK'S SAKE, what does it take to fix that CLUSTER FUCK that you call search? Don't you have enough people, MS, or what? Just show me the BLOODY ITEMS that actually contain the words I typed in!!! While you're at it, WOULD YOU MIND LEAVING ME THE FUCK ALONE WITH THE FUCKING WEB RESULTS???

So I was wondering, what is you guys' 'goal' in the long run? What do you really want to be able to do or what do you really want to achieve in 5 to 10 years? Are you working primarily for an income or are there more prominent reasons for doing what you do?

I'm in my last years of university and have a job at a software development company, not because I need the money but because of all the amazing technologies I come in touch with and the amazing things I learn, mostly about servers and devops tools.

I currently have 2 goals in mind: Creating an .io game together with a frontend developer and setting up a kubernetes cluster and using it. I personally consider kubernetes an end-game thing when it comes to running apps and am experimenting with it on three servers that I got from school.

So I was wondering, what about you? 😀

This begs for a rant... [too bad I can't post actual screenshots :/ ]

Me: Hey k8s team! We're having trouble with our k8s cluster. After scaling up and running h/c and sanity tests, the environment was confirmed as healthy and stable. But once we started our load tests, the k8s cluster went out for a walk: most of the replicas got stopped and restarted, and I cannot find in the events log WHY that happened. Could you please have a look?

k8s team [india]: Hello, thank you for reaching out to k8s support. We will check and let you know.

Me: Oh, you're welcome! I'll be just sitting here quietly and eagerly waiting for your reply. TIA! :slightly_smiling_face:

<5 minutes later>

k8s team India: Hi. Could you give me a list of replicas that were failing?

Me: I gave you a Grafana link with a timeframe filter. Look there: almost all apps show instability at the k8s layer. For instance, APP_1 and APP_2 were OK, but APP_3, APP_4 and APP_5 were crashing all over the place.

k8s team India: ok I will check.

<My shift ended. The k8s team works in a different timezone. I opened Slack this morning>

k8s team India: HI. APP_1 and APP_2 are fine. I don't even see any errors from logs, no restarts. All response codes are 200.

Me: 🤦‍♂️ .... Man, isn't that what I said? ... 🤦‍♂️

EoS1: This is the continuation of my previous rant, "The Ballad of The Six Witchers and The Undocumented Java Tool". Catch the first part here: https://devrant.com/rants/5009817/...

The Undocumented Java Tool, created by Those Who Came Before to fight the great battles of the past, is a swift beast. It reaches systems unknown and impacts many processes, unbeknownst even to said processes' masters. All from within its lair, a foggy Windows Server swamp of moldy data streams and boggy flows.

One of The Six Witchers, the Wild One, scouted ahead to map the input and output data streams of the Unmapped Data Swamp. Accompanied only by his animal familiars, NetCat and WireShark.

Two others, bold and adventurous, raised their decompiling blades against the Undocumented Java Tool beast itself, to uncover its data processing secrets.

Another of the witchers, of dark complexion and smooth speech, followed the data upstream to find where the fuck the limited Excel sheets that feed The Beast come from, since its handlers only know that "every other day a new one appears on this shared active directory location". WTF, why do people so often have NPC levels of unawareness about their own fucking jobs?!?!

The other witchers left to tend to the Burn-Rate Bonfire, for The Sprint is dark and full of terrors, and some bigwigs always manage to shoehorn their whims/unrelated stories into an otherwise lean sprint.

At the dawn of the new year, the witchers reconvened. "The Beast breathes a currency conversion API" - said The Wild One - "And its claws and fangs strike mostly at two independent JIRA clusters, sometimes upserting issues. It uses a company-deprecated API to send emails. We're in deep shit."

"I've found The Source of Fucking Excel Sheets" - said the smooth witcher - "It is The Temple of Cash-Flow, where the priests weave the Tapestry of Transactions. Our Fucking Excel Sheets are but a snapshot of the latest updates on the balance of some billing accounts. I spoke with one of the priestesses, and she told me that The Oracle (DB) would be able to provide us with The Data directly, if we were to learn the way of the ODBC and the Query"

"We struck at the beast" - said the bold and adventurous witchers, now deserving of the bragging rights to be called The Butchers of Jarfile - "It is actually fewer than twenty classes and modules. Most are API drivers. And less than 40% of the code is ever even fucking used! We found fucking JIRA API tokens and URIs hard-coded. And it is all synchronous and monolithic - no wonder it takes almost 20 hours to run a single fucking Excel sheet."

Together, the witchers figured out that each new billing account was morphed by The Beast into a new JIRA issue, if none was open yet for it. Transactions were used to update the outstanding balance on the issues regarding the billing accounts. The currency conversion API was used too often, and its purpose was only to give a rough estimate of the total balance in each JIRA issue in USD, since each issue could have transactions in several currencies. The Beast would consume the Excel sheet, do some cryptic transformations on it, and for each resulting line access the currency API and upsert a JIRA issue. The secrets of those transformations were still hidden from the witchers. When and why The Beast would send emails was still a mystery.

As the Witchers' Council drew to an end, all of them armed with knowledge and information, they decided on the next steps.
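One plausible reading of "the currency conversion API was used too often" is a rate lookup for every resulting line. A minimal sketch of the obvious remedy, under stated assumptions: the row shape `(billing_account, currency, amount)` is hypothetical, and `fetch_rate` is a hypothetical callable standing in for the currency API (or the Redis connector planned below). Each currency's rate is fetched once, and a rough USD balance is accumulated per billing account.

```python
from collections import defaultdict
from typing import Callable, Dict, Iterable, Tuple

# Hypothetical shape of one transformed line: (billing_account, currency, amount).
Row = Tuple[str, str, float]


def usd_estimates(rows: Iterable[Row], fetch_rate: Callable[[str], float]) -> Dict[str, float]:
    """Rough USD balance per billing account, calling fetch_rate once per currency."""
    rate_cache: Dict[str, float] = {}
    totals: Dict[str, float] = defaultdict(float)
    for account, currency, amount in rows:
        if currency not in rate_cache:
            # Only the first line in a given currency triggers a lookup.
            rate_cache[currency] = fetch_rate(currency)
        totals[account] += amount * rate_cache[currency]
    return dict(totals)
```

With thousands of lines but only a handful of currencies, the number of lookups drops from one per line to one per distinct currency.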

The Wild Witcher, known in every tavern in the land and by the sea, would create a connector to The Red Port of Redis, where every currency conversion is already updated by other processes and can be quickly retrieved inside the VPC. The Greenhorn Witcher is to follow him and build an offline process to update balances in JIRA issues.

The Butchers of Jarfile were to build The Juggler, an automation that should be able to receive a parquet file with an insertion plan and asynchronously update the JIRA API with scores of concurrent requests.
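The Juggler's core idea, many concurrent requests without flooding the JIRA API, can be sketched as bounded concurrency with `asyncio`. This is a minimal sketch under assumptions: the parquet insertion plan is taken to be already loaded into an iterable of rows, `upsert` is a hypothetical coroutine that would send one JIRA request, and `limit` is an arbitrary cap on in-flight requests.

```python
import asyncio
from typing import Awaitable, Callable, Iterable, List, TypeVar

T = TypeVar("T")
R = TypeVar("R")


async def run_insertion_plan(
    plan_rows: Iterable[T],
    upsert: Callable[[T], Awaitable[R]],
    limit: int = 20,
) -> List[R]:
    """Run upsert for every row, with at most `limit` calls in flight at once."""
    semaphore = asyncio.Semaphore(limit)

    async def guarded(row: T) -> R:
        # Each task waits for a free slot before issuing its request.
        async with semaphore:
            return await upsert(row)

    # gather keeps results in the same order as plan_rows.
    return await asyncio.gather(*(guarded(row) for row in plan_rows))
```

A semaphore keeps "scores of concurrent requests" from becoming thousands, which matters if the API enforces rate limits.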

The Smooth Witcher, proud of his new lead, was to build The Oracle Watch, an order that would guard the Oracle (DB) at the Temple of Cash-Flow and report every qualifying transaction to parquet files in AWS S3. The Data would then be pushed to cross The Event Bridge into The Cluster of Sparks and Storms.

This Witcher Who Writes is to ride the Elephant of Hadoop into The Cluster of Sparks and Storms, to weave the signs of Map and Reduce and with speed and precision transform The Data into The Insertion Plan.

However, exactly how The Data is to be transformed is not yet known.

Will the Witchers be able to build The Data's New Path? Will they figure out the mysterious transformation? Will they discover the Undocumented Java Tool's secrets on notifying customers and aggregating data?

This story is still afoot. Only the future will tell, and I will keep you posted.

A new head of operations joins a small company.

— Okay guys, I’m planning for the long run. I need 500 warehouses across the country — we might need that capacity. We will build them rather than renting them — Amazon does the same thing, so we should too. We also need our own shipping fleet — FedEx has that too, so it’s a battle-tested approach. We might need that capacity. We need a future-proof solution.

— Uh… That’s kind of dumb. Are you kidding me?

A new head of engineering joins a small company.

— Okay guys, I’m planning for the long run. I need an AWS cluster running Kubernetes deploying microservices built with Docker. We might need autoscaling. Frontend should be Next.js + TypeScript — everyone does that now, plus we can develop a React Native app more easily if need be. We need a future-proof solution.

— Wow! That’s what I call a good manager. You really know what you’re talking about. You’re promoted!

Want to make someone's life a misery? Here's how.

Don't base your tech stack on any prior knowledge or what's relevant to the problem.

Instead, design it around all the latest trends and badges you want to put on your resume because they're frequent keywords in job postings.

Once your data goes in, you'll never get it out again. At best you'll be teased with little crumbs of data but never the whole.

I know, here's a genius idea: instead of putting data into a normal database and then using a cache, let's put it all into the cache. And by the way, it's a volatile cache.

Here's an idea. For something as simple as a single log, let's make it use a queue that goes into a queue that goes into another queue that goes into another queue, all of which are black boxes. No rhyme or reason; queues are all the rage.

Have you tried: Let's use a newfangled tangle, trust me it's safe, INSERT BIG NAME HERE uses it.

Finally it all gets flushed down into this subterranean cunt of a sewerage system and good luck getting it all out again. It's like hell except it's all shitty instead of all fiery.

All I want is to export one table, a simple log table of a few GB, to CSV or, heck, whatever generic format it supports. That's it.

So I run the export-table-to-file command and off it goes, only for timeout errors to start piling up less than a minute later until it aborts. WTF. So then I set the most obvious timeout setting in the client: no change. Then another timeout setting on the client: no change. Then I try putting it in the client configuration file: no change. Then I set the timeout on the export query: no change. Then finally I bump the timeouts in the server config: no change. Then I find that someone has downloaded it from both tucows and apt, but they're using the tucows version, so its real config is in /dev/database.xml (don't even ask). I increase that from seconds to a minute, and it's still timing out after a minute.

In the end I have to make my own, and this involves working out how to parse non-standard binary-formatted data structures. It's the umpteenth time I've had to do this.

These aren't some no-name solutions, and that really terrifies me. All this is doing is taking some access logs, storing them in one place and indexing them by timestamp. These things are all meant to be blazing fast, but grep is often faster. How the hell is such a trivial thing turned into one nightmare after another? Things that should take a few minutes take days of screwing around. I don't have access logs any more because I can't access them anymore.

The terror of this isn't that it's so awful; it's that all the little kiddies doing all this jazz for the first time and using all these shit-wipe, buzzword-driven approaches have no fucking clue it's not meant to be this difficult. Time and time again, I'm replacing entire enterprise systems of tens of thousands to millions of lines with a few hundred lines of code that's faster, more reliable and better in virtually every measurable way.

This is constant. It's not one offender, it's not one project, it's not one company, it's not one developer, it's the industry standard. It's all over open source software and all over dev shops. Everything is exponentially becoming more bloated and difficult than it needs to be. I'm seeing people spin up a hundred cloud instances for things that would be happy at home after anywhere from a few minutes to a week of optimisation. Queries are N*N when a few minutes of work would turn them into LOG(N), but instead people rent a fucking huge-ass SQL cluster that not only costs gobs of money but takes a ton of time to maintain and configure, which isn't going to be done right either.
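To make the N*N versus LOG(N) point concrete, here is a small sketch in Python. The rant names no language or database, so the function names and the in-memory list standing in for a log table are hypothetical. Counting log entries per time window by rescanning every row costs O(N) per window; sorting the timestamps once, roughly what an index on the timestamp column buys you, lets each window be answered with two binary searches in O(log N).

```python
from bisect import bisect_left, bisect_right
from typing import List, Sequence, Tuple

Window = Tuple[int, int]  # inclusive (start, end) timestamps


def count_in_windows_scan(timestamps: Sequence[int], windows: Sequence[Window]) -> List[int]:
    # O(N * M): every window rescans every timestamp.
    return [sum(1 for t in timestamps if start <= t <= end) for start, end in windows]


def count_in_windows_indexed(timestamps: Sequence[int], windows: Sequence[Window]) -> List[int]:
    # Sort once (the "index"), then answer each window with two O(log N) searches.
    index = sorted(timestamps)
    return [bisect_right(index, end) - bisect_left(index, start) for start, end in windows]
```

Both functions return the same counts; only the second stays cheap as the number of windows grows.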

I think most people are bullshitting when they say they have impostor syndrome but when the trend in technology is to make every fucking little trivial thing a thousand times more complex than it has to be I can see how they'd feel that way. There's so bloody much you need to do that you don't need to do these days that you either can't get anything done right or the smallest thing takes an age.

I have no idea why some people put up with some of these appliances. If you bought a dishwasher that made washing dishes even harder than it was before, you'd return it to the store.

Every time I see the terms enterprise, fast, big data, scalable, cloud or anything of the like I bang my head on the table. One of these days I'm going to lose my fucking tits.

Some "engineers" seem to have entire jobs that consist only of enforcing ridiculous bureaucracy in multinational companies.

I'm not going to get specific, the flow is basically:

- A developer who actually has to write code and build functionality is given a task and needs X to do it: a Jenkins job, a small k8s cluster, etc.

- The developer needs to get permission from some highly placed "engineer" who hasn't touched a Docker image or opened a PR in the last 2 years.

- The developer sends concise documentation on what needs to be built, why X is needed, etc.

Now we enter the land of needless bureaucracy. Everything gets questioned by people who put near 0 effort into actually understanding why X is needed.

They are already so much more experienced than you, so why would they need to fucking read anything you send them?

They want to arrange public meetings where they can flaunt their "knowledge" and beat on whatever you're building publicly while they still have nearly 0 grasp of what it actually is.

I hold a strong suspicion that they use these meetings simply as a way to publicly show their "impact", as they'll always make sure enough important people are invited. X will 99% of the time get approved eventually anyway, and the people approving it just know the boxes are being ticked while still not understanding it.

Just sick of dealing with people like this. Engineers that don't code can be great, reasonable people. I've had brilliant Product Owners, Architects, etc. But some of them are a fucking nightmare to deal with.

I'm a python fanboy, not gonna lie.

I love everything about it: its clean syntax, its ready-to-use out-of-the-box-ness, its convenient built-in functions.

The one thing I hate is the official documentation. It's ugly, hard to navigate and a cluster fuck.

But it has proper information, so it's fine I guess. tsch

Too many night shifts.

But it's done.

After the last migrations my emotional state is... Questionable.

VM migrations between different CPU vendors and generations, leading to segfaults because of unsupported x86 extensions.... Thx for doing that at 23 o'clock after 8 hours of work....

Forgetting a left over NIC in a virtual machine, creating a routing loop, leading to very erratic behaviour and fun things.

Someone forgot to check the "Unique" box, mass-spawning a cluster of VMs with the same MAC addresses....

DNS fuckery, since someone thought that a reboot would flush the cache of a DNS server.... Nope, most DNS servers have persistent caches. You'll have to flush manually.

And let's not forget the joy of the 12-plus pages of when and where to move VMs, hard drives and VLAN configurations.

Oh migrations are such a festival of joy.

Finally done with that shit -.-

Holy fcuk! Can anyone here help me understand how this domain is possible?

WARNING: obviously it's a spam site. Take the necessary security precautions if you are going to visit.

the following domain opens a cluster fuck domain name! >> secret.ɢoogle.com

That ɢ is not what it looks like. How can such domains exist? Even more surprising, how is this subdomain-ception possible?
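The short answer is Internationalized Domain Names (IDN): a domain label may contain non-ASCII characters, and DNS stores it as an ASCII "xn--" Punycode label. The ɢ here is U+0262 LATIN LETTER SMALL CAPITAL G, so ɢoogle.com is an entirely separate registered domain from google.com, and whoever owns it can create any subdomain they like, including "secret". A minimal sketch using Python's built-in "idna" codec (which follows the older IDNA 2003 rules; the helper names are hypothetical) shows the ASCII form a resolver actually looks up and flags the lookalike label:

```python
from typing import List


def to_ascii_hostname(hostname: str) -> str:
    # The built-in "idna" codec converts each non-ASCII label to its
    # "xn--" Punycode form; plain ASCII labels pass through unchanged.
    return hostname.encode("idna").decode("ascii")


def non_ascii_labels(hostname: str) -> List[str]:
    # Labels with any non-ASCII character are candidates for homograph spoofing.
    return [label for label in hostname.split(".") if not label.isascii()]


print(to_ascii_hostname("secret.ɢoogle.com"))
print(non_ascii_labels("secret.ɢoogle.com"))  # ['ɢoogle']
```

The first line printed starts with `secret.xn--oogle-`, which makes it obvious that this is not google.com.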


This just happened....

Tester: My cluster is not working properly!!!

Me: What's wrong?

Tester: I don't know. I've checked all the logs available on the entire cluster. All I know is that nodes 1 and 7 are broken.

*ssh into the cluster*

node1

# less /var/log/<affected application log>.log

*no errors here everything is working properly*

node7

# less /var/log/<affected application log>.log

*goes down to the bottom and scrolls up a few lines*

<insert massive error here>

Checked all the logs, eh?

Building my own router was a great idea. It solved almost all of my problems.

Almost.

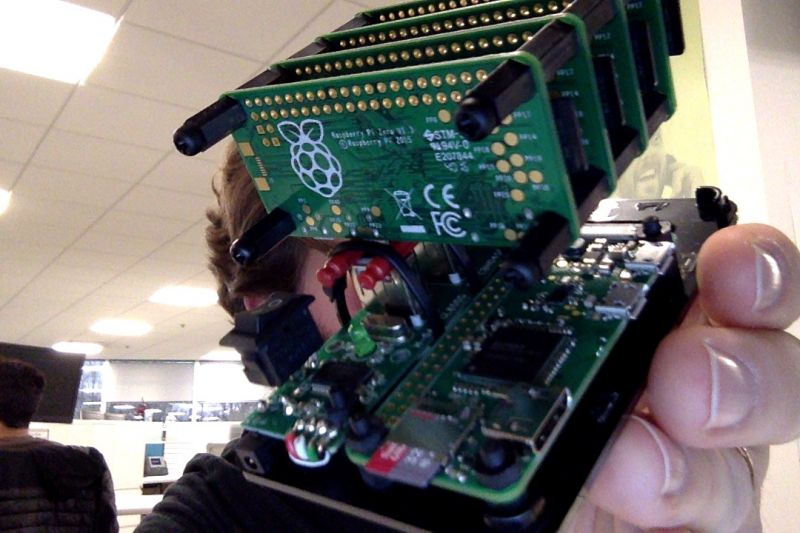

I only recently started building a GL CI pipeline for my project. >100 jobs for each commit - quite a bundle. Naturally, I used up all my free runners' time after a few commits, so I had to build myself a runner. "My old i7 should do well," I thought to myself, and deployed the GL runner on my local k8s cluster.

And my router is my k8s master.

And this is the ping to my router (via wifi) every time after I push to GL :)

DAMN IT!

P.S. At least I have Noctua all over that PC - I can't hear a sound out of it while all the CPUs are at 100%.

So, it's 22:40 here and I'm sat on a bench staring out at a pond, because my stress and anxiety are at an all-time high after a couple of weeks of hellish arguments at work and in my personal life. Since we're all developers here to some degree, let me convey my fucking thoughts.

If you care more about maintaining your fucking superiority complex over writing good clean efficient code then get the fuck out of the industry.