Search - "protocol"

-

You see a web, I see:

CLIENT: TCP SYN

SERVER: TCP SYN ACK

CLIENT: HTTP Get

SERVER: HTTP Response

...

CLIENT: TCP FIN

SERVER: TCP FIN ACK

All I’m saying is that this spider has a clear understanding of Transmission Control Protocol.

-

Internet Explorer:

You type a local IP without the protocol.

It doesn't add http automatically.

It doesn't add https automatically.

IT TRIES TO SEARCH IT ON BING

I freaking hate IE

-

--- HTTP/3 is coming! And it won't use TCP! ---

A recent announcement reveals that HTTP - the protocol used by browsers to communicate with web servers - will get a major change in version 3!

Before, the HTTP protocols (versions 1.0, 1.1 and 2) were all layered on top of TCP (Transmission Control Protocol).

TCP provides reliable, ordered, and error-checked delivery of data over an IP network.

It can handle hardware failures, timeouts, etc. and makes sure the data is received in the order it was transmitted in.

Also you can easily detect if any corruption during transmission has occurred.

All these features are necessary for a protocol such as HTTP, but TCP wasn't originally designed for HTTP!

It's a "one-size-fits-all" solution, suitable for *any* application that needs this kind of reliability.

TCP does a lot of round trips between the client and the server to make sure everybody receives their data, especially if you're using SSL/TLS. This results in high network latency.

So if we had a protocol which is basically designed for HTTP, it could help a lot at fixing all these problems.

This is the idea behind "QUIC", an experimental network protocol, originally created by Google, using UDP.

Now we all know how unreliable UDP is: You don't know if the data you sent was received nor does the receiver know if there is anything missing. Also, data is unordered, so if anything takes longer to send, it will most likely mix up with the other pieces of data. The only good part of UDP is its simplicity.

So why use this crappy thing for such an important protocol as HTTP?

Well, QUIC fixes all these problems UDP has, and provides the reliability of TCP but without introducing lots of round trips and a high latency! (How cool is that?)
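To make the "reliability on top of UDP" idea a bit more concrete, here is a minimal Python sketch of just one ingredient: numbering packets so the receiver can restore the order and notice gaps. This is not QUIC's actual wire format or algorithm; the `Reassembler` class and its method names are made up for illustration.

```python
class Reassembler:
    """Restore send order for packets that may arrive out of order."""

    def __init__(self):
        self.next_seq = 0   # next sequence number we are able to deliver
        self.pending = {}   # seq -> payload, held back until the gap closes

    def receive(self, seq, payload):
        """Accept one packet; return the payloads that are now deliverable in order."""
        if seq < self.next_seq or seq in self.pending:
            return []       # duplicate, ignore it
        self.pending[seq] = payload
        delivered = []
        while self.next_seq in self.pending:
            delivered.append(self.pending.pop(self.next_seq))
            self.next_seq += 1
        return delivered

    def missing(self):
        """Sequence numbers below the highest buffered packet that have not arrived."""
        if not self.pending:
            return []
        return [s for s in range(self.next_seq, max(self.pending))
                if s not in self.pending]
```

A receiver that knows what is missing can ask for exactly those packets again, which is roughly the kind of bookkeeping plain UDP leaves to the layer above it.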

The Internet Engineering Task Force (IETF) has been working (or is still working) on a standardized version of QUIC, although it's very different from Google's original proposal.

The IETF also wants to create a version of HTTP that uses QUIC, previously referred to as HTTP-over-QUIC. HTTP-over-QUIC isn't, however, HTTP/2 over QUIC.

It's a new, updated version of HTTP built for QUIC.

Now, the chairman of both the HTTP working group and the QUIC working group for IETF, Mark Nottingham, wanted to rename HTTP-over-QUIC to HTTP/3, and it seems like his proposal got accepted!

So version 3 of HTTP will have QUIC as an essential, integral feature, and we can expect that it no longer uses TCP as its network protocol.

We will see how it turns out in the end, but I'm sure we will have to wait a couple more years for HTTP/3, when it has been thoroughly tested and integrated.

Thank you for reading!

-

So this was a couple years ago now. Aside from doing software development, I also do nearly all the other IT related stuff for the company, as well as specialize in the installation and implementation of electrical data acquisition systems - primarily amperage and voltage meters. I also wrote the software that communicates with this equipment and monitors the incoming and outgoing voltage and current and alerts various people if there's a problem.

Anyway, all of this equipment is installed into a trailer that goes onto a semi-truck as it's a portable power distribution system.

One time, the computer in one of these systems (we'll call it system 5) had gotten fried and needed to be replaced. It was a very busy week for me, so I had pulled the fried computer out without immediately replacing it with a working system. A few days later, system 5 leaves to go work on one of our biggest shows of the year - the Academy Awards. We make well over a million dollars from just this one show.

Come the morning of show day, the CEO of the company is in system 5 (it was on a Sunday, my day off) and went to set up the data acquisition software to get the system ready to go, and finds there is no computer. I promptly get a phone call with lots of swearing and threats to my job. Let me tell you, I was sweating bullets.

After the phone call, I decided I needed to try and save my job. The CEO hadn't told me to do anything, but I went to work, grabbed an old Windows XP laptop that was gathering dust and installed my software on it. I then had to build the configuration file that is specific to system 5 from memory. Each meter speaks the Modbus over TCP/IP protocol, and thus each meter has a different bus id. Fortunately, I'm pretty anal about this and tend to follow a specific method of id numbering.
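For anyone wondering why the ids matter: in Modbus TCP every request carries a one-byte unit identifier, which is presumably what "bus id" refers to here. Below is a rough Python sketch of how a "Read Holding Registers" request is framed. The function name, the register address and the ids are made up for illustration; the rant doesn't say which registers these meters expose.

```python
import struct

def read_holding_registers_request(transaction_id, unit_id, start_address, quantity):
    """Build a Modbus TCP 'Read Holding Registers' (function 0x03) request frame."""
    # PDU: function code, first register address, number of registers
    pdu = struct.pack(">BHH", 0x03, start_address, quantity)
    # MBAP header: transaction id, protocol id (always 0 for Modbus),
    # number of bytes that follow (unit id + PDU), unit id
    mbap = struct.pack(">HHHB", transaction_id, 0, len(pdu) + 1, unit_id)
    return mbap + pdu

# Hypothetical example: ask the meter with unit id 5 for two registers from address 0
frame = read_holding_registers_request(1, 5, 0, 2)
print(frame.hex())
```

Get the unit id wrong in the configuration file and the request goes to the wrong meter (or to none), which is why rebuilding that file from memory was the risky part.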

Once I got the configuration file done and tested the software to see if it would even run properly on Windows XP (it did!), I called the CEO back and told him I had a laptop ready to go for system 5. I drove out to Hollywood and the CFO (who was there with the CEO) had to walk about a mile out of the security zone to meet me and pick up the laptop.

I told her I put a fresh install of the data acquisition software on the laptop and it's already configured for system 5 - it *should* just work once you plug it in.

I didn't get any phone calls after dropping off the laptop, so I called the CFO once I got home and asked her if everything was working okay. She told me it worked flawlessly - it was Plug 'n Play so to speak. She even said she was impressed, she thought she'd have to call me to iron out one or two configuration issues to get it talking to the meters.

All in all, crisis averted! At work on Monday, my supervisor told me that my name was Mud that day (by the CEO), but I still work here!

Here's a picture of the inside of system 8 (similar to system 5 - same hardware)

-

I wrote a Student Information System for my midterm project back in '94. It was written in Clipper and ran on MS-DOS.

I demoed & explained to the panel of professors how it tracks enrollments, payments, class schedules, grades and attendance of each and every student. It also has user authentication, auditing and reporting functionality.

It has a lite version also written in Clipper that can be installed on a professor's laptop so that he/she can update records even at home, and would be able to sync with the db at school via a BBS. Telix for DOS (self-taught) was my choice for the BBS as it was shareware, has built-in Zmodem support and comes with its own programming language called SALT (Script Application Language for Telix) that can be used for automating tasks. The lite version of my project would dump the updates to an ASCII file, compress the file using PKZIP, use the laptop's modem to dial up the school's BBS and send the file across using the Zmodem protocol.

The main version would then download the file(s) from the BBS and proceed to do a sync.

After doing the demo and answering all their questions, the panel asked me to wait outside the room, called me back in after 15 minutes and told me that I didn't have to attend that class for the remainder of the term. The happiness as my classmates outside the room gawked at me felt like King Midas himself gave my balls his golden touch.

Then in '97, 2 years after I graduated, I accompanied my cousins to a different campus of the same school for their enrollment and right there on the bottom of the screen were my initials on a very very familiar UI! They actually used, and were still using, my school project. Needless to say my cousins didn't believe that it was written by me.

-

Some weeks ago a client came to me with the request to restructure the nameservers for his hosting company. Due to the requirements, I soon realised none of the existing DNS servers would be a perfect fit. Me, being a PHP programmer with some decent general Linux/server skills, decided to do what I do best: write a small nameserver which could execute the zone transfers... in PHP. I proposed the plan to the client and explained to him how this was going to solve all of his problems. He agreed and I started work.

After a few weeks of reading a dozen RFC documents on the DNS protocol I wrote a DNS library capable of reading/writing the master file format and reading/writing the binary wire format (we needed this anyway, we had some more projects where PHP did not provide us with enough control over the DNS queries). In short, I wrote a decent DNS resolver.
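To give an idea of what "writing the binary wire format" involves, here is a small sketch of encoding a DNS question. It's in Python rather than PHP and is not the library from this rant; the function names are invented, and it skips name compression, the root name and everything on the response side.

```python
import struct

QTYPE_AXFR = 252  # query type for a full zone transfer
QCLASS_IN = 1     # the Internet class

def encode_name(name):
    """Encode 'example.com' as length-prefixed labels terminated by a zero byte."""
    out = b""
    for label in name.rstrip(".").split("."):
        raw = label.encode("ascii")
        if not 0 < len(raw) <= 63:
            raise ValueError("each label must be 1..63 bytes")
        out += bytes([len(raw)]) + raw
    return out + b"\x00"

def build_query(query_id, name, qtype=QTYPE_AXFR):
    """Build a DNS query message containing a single question."""
    # Header: id, flags (0 = standard query), 1 question, no other records
    header = struct.pack(">HHHHHH", query_id, 0, 1, 0, 0, 0)
    return header + encode_name(name) + struct.pack(">HH", qtype, QCLASS_IN)

print(build_query(0x1234, "example.com").hex())
```

When a message like this goes over TCP, which is how zone transfers run, it is additionally prefixed with a two-byte length field.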

For another two weeks I worked on the actual DNS server which would handle the NOTIFY queries and execute the zone transfers (AXFR queries). I used the pthreads extension to make the server behave like an actual server which can handle multiple requests at once. It took some time (in my opinion the pthreads extension is not extremely well documented and a lot of its behavior has to be detected through trial and error, or by reading the C source code. However, it still is a pretty decent extension).

Yesterday, while debugging some last issues, the DNS server written in PHP received its first NOTIFY about a changed DNS zone. It executed the zone transfer and updated the real database of the actual primary DNS server. I was extremely euphoric and I began to realise what I wrote in the weeks before. I shared the good news with the client and with some other people (a network engineer, a server administrator, a junior programmer, etc.), none of whom really seemed to understand what I did. The most positive response was: "So, you can execute a zone transfer?", in a kind of condescending way.

This was one of those moments I realised again that most people, even those who are fairly technical, will never understand what we programmers do. My euphoric moment soon became a moment of loneliness...

-

It's maddening how few people working with the internet know anything about the protocols that make it work. In web work especially, I spend far too much time explaining how status codes, methods, content-types etc. work, how they're used, and basic fundamental shit about how to do the job of someone building internet applications and consumable services.

The following has played out at more than one company:

App: "Hey api, I need some data"

API: "200 (plain text response message, content-type application/json, 'internal server error')"

App: *blows the fuck up

*msg service team*

Me: "Getting a 200 with a plaintext response containing an internal server exception"

Team: "Yeah, what's the problem?"

Me: "...200 means success, the message suggests 500. Either way, it should be one of the error codes. We use the status code to determine how the application processes the request. What do the logs say?"

Team: "Log says that the user wasn't signed in. Can you not read the response message and make a decision?"

Me: "The status for that is 401. And no, that would require us to know every message you have verbatim. In this case, it doesn't even deserialize and causes an exception because it's not actually json."

Team: "Why 401?"

Me: "It's the code for unauthorized. It tells us to redirect the user to the sign in experience"

Team: "We can't authorize until the user signs in"

Me: *angermatopoeia* "Just, trust me. If a user isn't logged in, return 401, if they don't have permissions you send 403"

Team: *googles SO* "Internet says we can use 500"

Me: "That's server error, it says something blew up with an unhandled exception on your end. You've already established it was an auth issue in the logs."

Team: "But there's an error, why doesn't that work?"

Me: "It's generic. It's like me messaging you and saying, "your service is broken". It doesn't give us any insight into what went wrong or *how* we should attempt to troubleshoot the error or where it occurred. You already know what's wrong, so just tell me with the status code."

Team: "But it's ok, right, 500? It's an error?"

Me: "It puts all the troubleshooting responsibility on your consumer to investigate the error at every level. A precise error code could potentially prevent us from bothering you at all."

Team: "How so?"

Me: "Send 401, we know that it's a login issue, 403, the user doesn't have permission, 404 we're hitting an endpoint that doesn't exist, 503 we know that the service can't be reached for some reason, 504 means the service exists, but timed out at the gateway or service. In the worst case we're able to triage who needs to be involved to solve the issue, make sense?"

Team: "Oh, sounds cool, so how do we do that?"

Me: "That's down to your technology, your team will need to implement it. Most frameworks handle it out of the box for many cases."

Team: "Ah, ok. We'll send a 500, that sounds easiest"

Me: *..l.. -__- ..l..* "Ok, let's get into the other 5 problems with this situation..."
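The triage argument from the conversation above fits in a few lines. This is only a sketch in Python using the standard `http.HTTPStatus` enum; the failure names and the client actions are invented for illustration and don't come from any real framework.

```python
from http import HTTPStatus

def status_for(failure):
    """Service side: map a known failure category to a precise status code."""
    mapping = {
        "not_signed_in": HTTPStatus.UNAUTHORIZED,        # 401
        "no_permission": HTTPStatus.FORBIDDEN,           # 403
        "unknown_endpoint": HTTPStatus.NOT_FOUND,        # 404
        "service_down": HTTPStatus.SERVICE_UNAVAILABLE,  # 503
        "upstream_timeout": HTTPStatus.GATEWAY_TIMEOUT,  # 504
    }
    # Only genuinely unexpected failures should end up as a generic 500
    return mapping.get(failure, HTTPStatus.INTERNAL_SERVER_ERROR)

def client_action(status):
    """Consumer side: decide what to do from the status code alone."""
    if status == HTTPStatus.UNAUTHORIZED:
        return "redirect to sign-in"
    if status == HTTPStatus.FORBIDDEN:
        return "show 'not allowed' message"
    if 200 <= status < 300:
        return "parse body"
    if 500 <= status < 600:
        return "report to service team"
    return "treat as a bug in our request"

print(client_action(status_for("not_signed_in")))
```

Note that the client never has to read the response message to pick a branch, which is the whole point being made to the service team.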

Moral of the story: If this is you: learn the protocol you're utilizing, provide metadata, and stop treating your customers like shit.

-

Mother of god.

I spent hours and hours last week to try and get OpenVPN working. I mean, OpenVPN is working perfectly fine (on a VirtualBox (nope no vmware for me on servers) machine on a friend's dedicated server) but it wouldn't get through! As in, every forwarding/firewall rule just didn't work.

Was seriously about to lose my shit just now when I suddenly noticed the term 'TCP' in a forwarding rule.

Looked at the .ovpn file: proto udp

I added the exact same rule for UDP as a forward within VirtualBox.

It worked.

Well, there goes quite some hours 😐

And solely because I didn't realise that I set up a forwarding thingy for the wrong protocol.
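A check like this would have saved those hours. It's a small Python sketch that reads the `proto` line from an .ovpn file and compares it with the protocol of a forwarding rule. The function names are made up, and how you actually list your forwarding rules depends on your setup, so the rule protocol is simply passed in as a string.

```python
def ovpn_protocol(config_text):
    """Return the protocol named by the 'proto' directive of an .ovpn file."""
    for line in config_text.splitlines():
        parts = line.split()
        # Commented-out lines start with '#' or ';', so they never match here
        if len(parts) >= 2 and parts[0] == "proto":
            return parts[1].lower()
    return "udp"  # OpenVPN falls back to UDP when no proto line is present

def rule_matches(config_text, rule_protocol):
    """True if a forwarding rule's protocol fits the OpenVPN config."""
    # startswith also accepts variants such as 'tcp-client' for a TCP rule
    return ovpn_protocol(config_text).startswith(rule_protocol.lower())

config = "client\nproto udp\nremote vpn.example.org 1194\n"
print(rule_matches(config, "TCP"))  # the situation from this rant
```

TCP and UDP port numbers are separate namespaces, so a TCP forward on the right port does nothing at all for UDP traffic.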

I feel very stupid now :(

-

IBM

I have replied to them with scripts, curl commands, and Swagger docs (PROVIDED TO SUPPORT THEIR API), everything that could possibly indicate there's a bug. Regardless, they refuse to escalate me to level 1 support because "We can't reproduce the issue in a dev environment"

Well of course you can't reproduce it in a dev environment otherwise you'd have caught this in your unit tests. We have a genuine issue on our hands and you couldn't give less of a shit about it, or even understand less than half of it. I literally gave them a script to use and they replied back with this:

"I cannot replicate the error, but for a resource ID that doesn't exist it throws an HTTP 500 error"

YOUR APP... throws a 500... for a resource NOT FOUND?????????!!!!!!!!!! That is the exact OPPOSITE of spec, in fact some might call it a MISUSE OF RESTFUL APIs... maybe even HTTP PROTOCOL ITSELF.

I'm done with IBM, I'm done with their support, I'm done with their product, and I'm DONE playing TELEPHONE with FIRST TIER SUPPORT while we pay $250,000/year for SHITTY, UNRELENTING RAPE OF MY INTELLECT.

-

My first hack... Back in the days when phones had rotary dials to dial a number. I was a kid, of course, I'm not that old. I used to like to call my grans. Once, when I was supposed to have gone to sleep already, I found out that there was a phone socket in my room (the one connected to the copper wire, that is where the term "phone line" came from).

It took me about half an hour to detach the handset from the toy phone and about two hours to reverse engineer the dialing protocol (for each digit in sequence, you just need to briefly disconnect the line the corresponding number of times).
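Written down as code, that dialing protocol is tiny. The Python sketch below assumes the common pulse-dialing convention where digit n is n interruptions and 0 is ten; some countries used a different mapping, and the timing of the pulses and the pauses between digits is not modeled at all. The function name is made up.

```python
def pulses_for(number):
    """Return how many times to interrupt the line for each digit of a number."""
    pulses = []
    for ch in number:
        if ch not in "0123456789":
            continue  # skip spaces, dashes and other separators
        digit = int(ch)
        # Zero cannot be "no pulses", so it is sent as ten
        pulses.append(10 if digit == 0 else digit)
    return pulses

print(pulses_for("555-0100"))
```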

And after that I heard my granny's voice. I was literally overwhelmed that it worked.

-

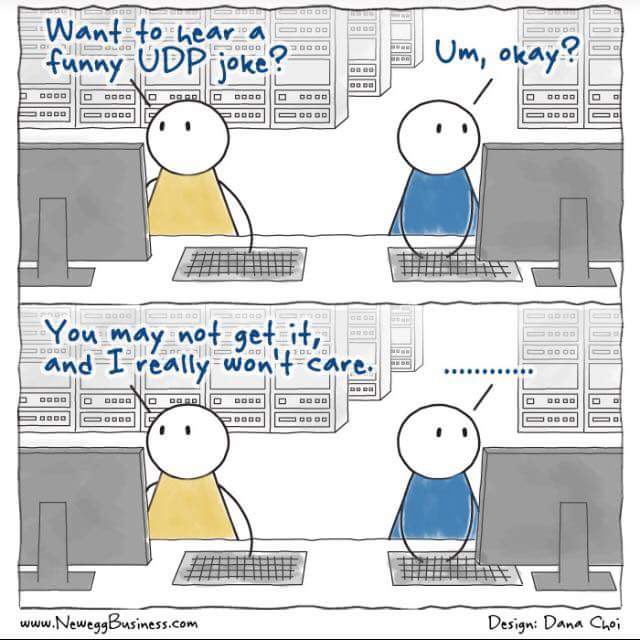

me: do you know what is so great about UDP jokes?

you: No

me: the fact that i don't care if you got them.

-

Me: Why is there such a delay between the app and the hardware device?

Colleague: Ah, same old same old, TCP is just an inefficient protocol. We should stop development and build our own replacement to TCP.

(PS. The actual problem was his code)

-

So today (or a day ago or whatever), Pavel Durov attacked Signal by saying that he wouldn't be surprised if a backdoor would be discovered in Signal because it's partially funded by the US government (or, some part of the us govt).

Let's break down why this is utter bullshit.

First, he wouldn't be surprised if a backdoor would be discovered 'within 5 years from now'.

- Teeny tiny little detail: THE FUCKING APP IS OPEN SOURCE. So yeah sure, go look through the code! Good idea! You might actually learn something from it as your own crypto seems to be broken! (for the record, I never said anything about telegram not being open source as it is)

sources:

http://cryptofails.com/post/...

http://theregister.co.uk/2015/11/...

https://security.stackexchange.com/...

- The server side code is closed (of signal and telegram both). Well, if your app is open source, enrolled with one of the strongest cryptographic protocols in the world and has been audited, then even if the server gets compromised, the hackers are still nowhere.

- Metadata. Signal saves the following and ONLY the following: timestamp of registration, timestamp of the last connection with the server (both rounded to the day so not on the second), your phone number and your contact details (if you authorize it) (only phone numbers) in HASHED (BCrypt I thought?) format.

There have been multiple telegram metadata leaks and it's pretty well known that it saves way more than necessary.

So, before you start judging an app which is open, uses one of the best crypto protocols in the world while you use your own homegrown horribly insecure protocol AND actually tries its best to save the least possible, maybe try to fix your own shit!

*gets ready for heavy criticism*

-

If there is SMTP (Simple mail transfer protocol), is there also HMTP (Hard mail transfer protocol)?

-

Biggest dev insecurity?

Probably http://

It’s not secure at all, never feeling very confident when browsing that protocol.

-

So, some time ago, I was working for a complete puckered anus of a cosmetics company on their ecommerce product. Won't name names, but they're shitty and known for MLM. If you're clever, go you ;)

Anyways, over the course of years they brought in a competent firm to implement their service layer. I'd even worked with them in the past and it was designed to handle a frankly ridiculous-scale load. After they got the 1.0 released, the manager was replaced with some absolutely talentless, chauvinist cuntrag from a phone company that is well known for having 99% indian devs and not being able to heard now. He of course brought in his number two, worked on making life miserable and running everyone on the team off; inside of a year the entire team was ex-said-phone-company.

Watching the decay of this product was a sheer joy. They cratered the database numerous times during peak-load periods, caused $20M in redis-cluster cost overrun, ended up submitting hundreds of erroneous and duplicate orders, and mailed almost $40K worth of product to a random guy in Outer Mongolia who is, we can only hope, now enjoying his new life as an Instagram influencer. They even terminally broke the automatic metadata, and hired THIRTY PEOPLE to sit there and do nothing but edit swagger. And it was still both wrong and unusable.

Over the course of two years, I ended up rewriting large portions of their infra surrounding the centralized service cancer to do things like, "implement security," as well as cut memory usage and runtimes down by quite literally 100x in the worst cases.

It was during this time I discovered a rather critical flaw. This is the story of what, how and how can you fucking even be that stupid. The issue relates to users and their reports and their ability to order.

I first found this issue looking at some erroneous data for a low value order and went, "There's no fucking way, they're fucking stupid, but this is borderline criminal." It was easy to miss, but someone in a top down reporting chain had submitted an order for someone else in a different org. Shouldn't be possible, but here was that order staring me in the face.

So I set to work seeing if we'd pwned ourselves as an org. I spend a few hours poring over logs from the log service and dynatrace trying to recreate what happened. I first tested to see if I could get a user, not something that was usually done because auth identity was pervasive. I discover the users are INCREMENTAL int values they used for ids in the database when requesting from the API, so naturally I have a full list of users and their title and relative position, as well as reports and descendants in about 10 minutes.

I try the happy path of setting values for random, known payment methods and org structures similar to the impossible order, and submitting as a normal user, no dice. Several more tries and I'm confident this isn't the vector.

Exhausting that option, I look at the protocol for a type of order in the system that allowed higher level people to impersonate people below them and use their own payment info for descendant report orders. I see that all of the data for this transaction is stored in a cookie. Few tests later, I discover the UI has no forgery checks, hashing, etc, and just fucking trusts whatever is present in that cookie.
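For contrast, this is roughly what a minimal forgery check on such a cookie looks like. It's a Python sketch using the standard `hmac` module, not anything from the system in this story; the key, the cookie layout and the function names are all hypothetical.

```python
import hashlib
import hmac

SECRET_KEY = b"replace-with-a-real-secret"  # hypothetical; must stay server-side

def sign(value):
    """Return 'value.signature' so later tampering can be detected."""
    mac = hmac.new(SECRET_KEY, value.encode("utf-8"), hashlib.sha256).hexdigest()
    return f"{value}.{mac}"

def verify(cookie):
    """Return the original value if the signature checks out, else None."""
    value, sep, mac = cookie.rpartition(".")
    if not sep:
        return None  # no signature present at all
    expected = hmac.new(SECRET_KEY, value.encode("utf-8"), hashlib.sha256).hexdigest()
    # compare_digest avoids leaking how many characters matched via timing
    if hmac.compare_digest(mac.encode("utf-8"), expected.encode("utf-8")):
        return value
    return None

cookie = sign("impersonate=42")
print(verify(cookie))
print(verify("impersonate=1." + cookie.rpartition(".")[2]))
```

A signature only proves the server issued the cookie. Whether the signed-in user is actually allowed to impersonate that id still has to be checked on the server for every request.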

An hour of tweaking later, I'm impersonating a director as a bottom rung employee. Score. So I fill a cart with a bunch of test items and proceed to checkout. There, in all its glory are the director's payment options. I select one and am presented with:

"please reenter card number to validate."

Bupkis. Dead end.

OR SO YOU WOULD THINK.

One unimportant detail I noticed during my log investigations that the shit slinging GUI monkeys who butchered the system didn't was, on a failed attempt to submit payment in the DB, the logs were filled with messages like:

"Failed to submit order for [userid] with credit card id [id], number [FULL CREDIT CARD NUMBER]"

One submit click later and the user's credit card number drops into lnav like a gacha prize. I dutifully rerun the checkout and get an email send notification in the logs for successful transfer to fulfillment. Order placed. Some continued experimentation later and the truth is evident:

With an authenticated user of any privilege, you could place any order, as anyone, using anyone's payment methods and have it sent anywhere.

So naturally, I pack the crucifixion-worthy body of evidence up and walk it into the IT director's office. I show him the defect, and he turns sheet fucking white. He knows there's no recovering from it, and there's no way his shitstick service team can handle fixing it. Somewhere in his tiny little grinchly manager's heart he knew they'd caused it, and he was to blame for being a shit captain to the SS Failboat. He replies quietly, "You will never speak of this to anyone, fix this discreetly." Straight up Hitler's bunker meme rage.

-

Start a development job.

Boss: "let's start you off with something very easy. There's this third party we need data from. They have an api, just get the data and place it on our messaging bus."

Me: "sure, sounds easy enough"

Third party api turns out to have the most retarded conversation protocol. With us needing a service to receive data on while also having a client to register for the service. With a lot of timed actions like, 'send this message every five minutes' and 'check whether our last message was sent more than 11 minutes ago'.

Due to us needing a service, we also need special permissions through the company firewall. So I have to go around the company to get these permissions, FOR EVERY DATA STREAM WE NEED!

But the worst of it all is... This whole api is SOAP based!!

Also, Hey DevRant!

-

What I'm posting here is my 'manifesto'/the things I stand for. You may like it, you may hate it, you may comment but this is what I stand for.

What are the basic principles of life? one of them is sharing, so why stop at software/computers?

I think we should share our software, make it better together and don't put restrictions onto it. Everyone should be able to contribute their part and we should make it better together. Of course, we have to make money but I think that there is a very good way in making money through OSS.

Next to that, since the Snowden releases from 2013, it has become clear that the NSA (and other intelligence agencies) will try everything to get into anyone's messages, devices, systems and so on. That's simply NOT okay.

Our devices should be OUR devices. No agency should be allowed to warrantlessly bypass our systems/messages security/encryptions for the sake of whatever 'national security' bullshit. Even a former NSA semi-director traveled to the UK to oppose mass surveillance/mass govt. hacking because he, himself, said that it doesn't work.

We should be able to communicate freely without spying. Without the feeling that we are being watched. Too bad the intelligence agencies of today do not want us to do this, and this is why mass surveillance/gag orders (companies having to reveal their users' information without being allowed to alert their users about this) are in place, but I think that this is absolutely wrong. When we use end to end encrypted communications, we simply defend ourselves against this non-ethical form of spying.

I'm a heavy Signal (and since a few days also Riot.IM (matrix protocol) (Riot.IM with end to end crypto enabled)), Tutanota (encrypted email) and Linux user because I believe that only those measures (open source, reliable crypto) will protect against all the mass spying we face today.

The applications/services I strongly oppose are stuff like WhatsApp (yes, encrypted messages but the metadata is readily available and it's closed source), Skype, Gmail, Outlook and so on and on and on.

I think that we should OWN our OWN data, communications, browsing stuffs, operating systems, softwares and so on.

This was my rant.

-

I'm working on a project at my school, with a teacher overseeing it, that will be responsible for the confidential student data...

Teacher: How are we going to authenticate the kiosk machines so people don't need a login?

Me: Well we can use a unique URL for the app and that will put an authorized cookie on the machine as well as local IP whitelisting.

Teacher: ok but can't we just put a secret key in a text file on the C drive and access it with JavaScript?

Me: well JavaScript can't access your drive it's a part of the security protocol built into chrome...

Teacher: well that seems silly! There must be a way.

Me: Nope, definitely not. Let's just make a fancy shortcut?

Teacher: Alright you do that for now until I find a way to access that file.

I want to quit this project so bad

-

Found this on mastodon:

I sometimes imagine that somewhere there must be a Ministry for Messing Up the Internet. It would be like a Monty Python sketch.

Each day a new idea would arrive in the intray of an official who looks like a young John Cleese. They would form a large pile of papers.

[reads] "Make a protocol so complicated that nobody can understand it. No, the Semantic Web has already been tried".

[reads] "Ban all the cat photos for spurious copyright reasons. No, we already have an upload filter in progress to do that".

[reads] "Fill Tim Berners-Lee's socks with elephants. No - much too silly."

"Ah yes, [reads] make a giant man in the middle that everything on the internet has to go through like a sausage machine and get squirted out on the other side, hopefully in the correct order. Bernard, get Cloudflare on the phone immediately."

@bob@soc.freedombone.net

-

I reverse engineered the network protocol for a game.

I uploaded the source code to GitHub and made a post on UC Forums.

I kept getting bombarded with messages from the same person, it went something like this:

Him: "I can't get this hack to work, pls send finish hack, thanks"

Me: "First of all this is not a complete hack. You actually need to know how to code to use this library."

Guy: "Ok, can u help me make hack for game?"

To keep this short, I basically told him:

"No. Look through the code, learn it, use what you learned."

Couple of hours later he replied:

"Ok. I look through code but don't know how work. Send me code pls."

From the kindness of my heart I made an extremely simplified wrapper for the already simple code and sent him the project files.

He replies with: "Thank for hack, I not able make it work. I build I try inject game but no work. How to run dll file."

At that point I gave up...

-

I absolutely love the email protocols.

IMAP:

x1 LOGIN user@domain password

x2 LIST "" "*"

x3 SELECT Inbox

x4 LOGOUT

Because a state machine is clearly too hard to implement in server software, clients must instead do the state machine thing and therefore it must be in the IMAP protocol.

SMTP:

I should be careful with this one since there's already more than enough spam on the interwebs, and it's a good thing that the "developers" of these email bombers don't know jack shit about the protocol. But suffice it to say that much like on a real letter, you have an envelope and a letter inside. You know these envelopes with a transparent window so you can print the address information on the letter? Or the "regular" envelopes where you write it on the envelope itself?

Yeah not with SMTP. Both your envelope and your letter have them, and they can be different. That's why you can have an email in your inbox that seemingly came from yourself. The mail server only checks for the envelope headers, and as long as everything checks out domain-wise and such, it will be accepted. Then the mail client checks the headers in the letter itself, the data field as far as the mail server is concerned (and it doesn't look at it). Can be something else, can be nothing at all. Emails can even be sent in the future or the past.
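The envelope/letter split is easy to see with Python's standard library. In this sketch the message headers are built with `email.message.EmailMessage`, while the envelope addresses are kept in a separate dict; the function name and all addresses are placeholders invented for illustration.

```python
from email.message import EmailMessage

def build_mail(envelope_from, envelope_to, header_from, header_to, body):
    """Return (envelope, message). The two sets of addresses are independent."""
    msg = EmailMessage()
    msg["From"] = header_from  # what the mail client shows to the reader
    msg["To"] = header_to
    msg["Subject"] = "Envelope vs. letter"
    msg.set_content(body)
    # The envelope is what the servers use in MAIL FROM / RCPT TO
    envelope = {"from_addr": envelope_from, "to_addrs": [envelope_to]}
    return envelope, msg

envelope, msg = build_mail(
    "bounces@sender.example", "you@example.org",
    "you@example.org", "you@example.org",
    "Hello from 'yourself'.",
)
print(envelope["from_addr"], "vs", msg["From"])
```

With `smtplib`, these would go out as `smtplib.SMTP(host).send_message(msg, **envelope)`: the keyword arguments become the envelope and `msg` becomes the data section, and nothing in the protocol itself forces the two to agree.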

Postfix' main.cf:

You have this property "mynetworks" in /etc/postfix/main.cf where you'd imagine you put your own networks in, right? I dunno, to let Postfix discover what your networks are.. like it says on the tin? Haha, nope. This is a property that defines which networks are allowed no authentication at all to the mail server, and that is exactly what makes an open relay an open relay. If any one of the addresses in your networks (such as a gateway, every network has one) is also where your SMTP traffic flows into the mail server from, congrats the whole internet can now send through your mail server without authentication. And all because it was part of "your networks".

Yeah when it comes to naming things, the protocol designers sure have room for improvement... And fuck email.

Oh, bonus one - STARTTLS:

So SMTP has this thing called STARTTLS where you can.. unlike mynetworks, actually start a TLS connection like it says on the tin. The problem is that almost every mail server uses self-signed certificates so they're basically meaningless. You don't have a chain of trust. Also not everyone supports it *cough* government *cough*, so if you want to send email to those servers, your TLS policy must be opportunistic, not enforced. And as an icing on the cake, if anything is wrong with the TLS connection (such as an MITM attack), the protocol will actively downgrade to plain. I dunno.. isn't that exactly what the MITM attacker wants? Yeah, great design right there. Are the designers of the email protocols fucking retarded?

-

Senior manager: I cant understand how this project has taken so long?

Me: Well you hired me as a C# WPF developer and then asked me to deliver an android app without any kind of training so i had to teach myself app development and reverse engineer the undocumented protocol it needs to use to communicate with our product.

Senior manager: Ok. I get that, but it should only take around 3 months to get up to speed though right?

Me (to myself): how in the hell? New platform, self teaching, undocumented protocol for a complex low level real-time system, other responsibilities taking at least 50% of my time and I should be as productive as an outsourced app dev company in 3 months???!! FFFFFUUUUUUUUUUUUUU!!!!!!!!!3 -

I die, go to hell and my punishment is to write software for hell's network, which is having power problems due to light source disruptions and is running on Windows 95 on FAT32 without any service pack.

Network speed is through a 300bps dial-up modem. Protocol is over IPX/SPX.

My task is to write interactive websites that are replacements for modern websites but in VBScript, ActiveX, IE 4.0.

I have 10 managers that tell me what to do and scream when I miss a deadline that is set every day without my knowledge at random times.

They send me an email and 5 minutes later they arrive at my desk to ask me about it.

I must work 16 hours a day before I can leave the place and if I don’t show up the police beat me and escort me to the office.

If I’m a second late I don’t get paid.

I can’t afford to rent a place so I sleep in the sleeping bag.

It doesn’t matter much cause as soon as I fall asleep the phone rings until I wake up and my manager screams about the problems he has for about an hour.6 -

Hi,

I'm not a ranty person so I never actually thought I'd post anything here but here it goes.

From the beginning.

We use ancient technologies. PHP 5.2, Symfony 1.2 and a non RFC compliant SOAP with NO documentation.

A year ago we were thrown a new temporary project. A VoIP app for every OS.

That being iOS, Android, Mac, PC, Linux, Windows Mobile. With a 3 month deadline. All that thrown at 4 PHP developers. The idea being that they'll take it, sign the delivery protocol, everyone happy. No more updates for the app needed. They get the funds they needed the app for and we get paid.

Fast forward to today...

Our dev team started the year with the great news that we'll most likely have to create a new project. Since the amount of new features would be far greater than the current feature set, we managed to finally force our boss to use newer technologies (i.e. separate backend on Symfony 4 with PHP 7+, frontend in React, REST API and so on). So we were ecstatic to say the least. Pre-estimates aimed at a minimum 3 month development period, since we're comfortable with everything that needs to be done.

Two days later our boss came to me saying that one of our most annoying clients needs a new feature. Said client uses an ancient version written on a napkin, because they changed half of the specification 2 weeks before the deadline, in software made not by a developer but by some sysadmin who didn't know anything. His MVC model was practically a VVV model since he even had SQL queries in some views. The feature will take 3 days - fixing everything that will break in the meantime - 1-2 months.

F*** it, fine. A little overtime won't kill me.

Yesterday boss comes again... Apparently someone lost the delivery protocol for a project we ended half a year ago. What's even better, at the time when we asked for hardware to test on we never got any. When we asked about any testing environment - nothing. Calling the app SEMI-stable on everything is an overstatement, but it was working on the OSes available at the time. Since the client started testing again now, it turns out that the Android app does not work on 8.1/9 and the iOS app does not work on iOS 12. The client obviously does not want to pay and we can do little about it without the protocol, other than rewriting the apps.

It will take months at least since all of those apps were written by people that knew neither the OSes nor the languages. For example I started writing the iOS one in Swift. Only to learn after half of the development time that Swift doesn't like working by C library rules and I had to use ObjC also. With some C thrown in due to the library. 3 unknown languages, on an unknown platform in 3 months. I never had any Apple device in my hand at that time nor do I intend to now. I'm astonished it worked out then. It was a clusterf**k of bad design and sticking everything together with deprecated APIs and gum. So I'll have to basically fully rewrite it.

If boss decides we'll take all those at the same time I'll f***ing jump off a bridge.8 -

Just thought I'd share my current project: Taking an old ISA sound card I got off eBay and wiring it up to an Arduino to control its OPL3 synth from a MIDI keyboard. I have it mostly working now.

No intention to play audio samples, so I've not bothered with any of the DMA stuff - just MIDI (MPU-401 UART) and OPL3.

It has involved learning the pinout of the ISA bus connectors, figuring out which ones are actually used for this card, ignoring the standards a little (hello, amplifier chip that is wired up to the +12V line but which still happily works at +5V...)

Most of the wires going to it are for each bit of the 16-bit address and 8-bit data. Using a couple of shift registers for the address, and a universal shift register for the data. Wrote some fairly primitive ISA bus read/write code, but it was really slow. Eventually found out about SPI and re-wrote the code to use that and it became very fast. Had trouble with some timings, fixed those.

The card is an ISA Plug and Play card, meaning before I could use it I had to tell it what resources to use. Linux driver code and some reverse-engineering of the official Windows/DOS drivers got me past this stage.

Wired up IRQ 5 to an Arduino interrupt to deal with incoming MIDI data, with a routine that buffers it. Ran into trouble with the interrupt happening during I/O and needing to do some I/O inside the handler and had to set a flag to decide whether to disable/re-enable interrupts during I/O.

It looks like total chaos, but the various wires going across the breadboard are mainly to make it easier to deal with the 16-bit address and 8-bit data lines. The LEDs were initially used to check what addresses/data were being sent, but now only one of them is connected and indicates when the interrupt handler is executing.

There's still a lot to do after that though - MIDI and OPL3 are two completely different things so I had to write some code to manage the different "channels" of the OPL3 chip. I have it playing multiple notes at the same time but need to make it able to control the various settings over MIDI. Eventually I might add some physical controls to it and get a PCB made.

The fun part is, I only vaguely know what I'm doing with the electronics side of this. I didn't know what a "shift register" was before this project, nor anything about the workings of the ISA bus. I knew a bit about MIDI (both the protocol and generally how the MPU-401 UART works) along with the operation of a sound card from a driver/software perspective, but everything else is pretty new to me.

As a useful little extra, I made some "fake" components that I can build the software against on a PC, to run some tests before uploading it to the Arduino (mostly just prints out the addresses it is going to try and write to). 46

46 -

A few days ago a friend of mine asked me to teach him to code. When I wanted to know which language he'd like to learn, he hesitantly replied "https".

Then I explained, this was a data transfer protocol. His next idea was "http". 🙄

Guess who will learn Python8 -

This has been said countless times before me, and way better than I can manage while super tired, but I need to rant it out

And what I’m ranting out today, is Apple. Its essence, its core, the reason it still exists: the ECOSYSTEM!

The problem with Apple ecosystem is that it’s the ecosystem of a fucking PRISON!

People like it because it works well together, but sure, in a prison the path from your cell to the canteen is pretty optimized; you get forced there! And you might try to get your food elsewhere, but the walls of the prison are made to be difficult to cross. Especially on mobile, where they’re making it harder and harder to escape, to make a jailbreak (pun-intended). Keeping you the loyal little sheep, or forcing you to be one.

That prison is also made private, a little club, to attract people to it. They even got their own little system to talk to each other, but god forbid their little messages pass the walls of the prison.

And all that prison is guarded by the warden, watching from high in the cloud. Forcing you to report yourself to him to be part of that prison.

That prison, also, can only be entered with specific vehicles, provided by the prison, to ensure maximum compatibility and efficiency. Good luck entering with a disguised vehicle if you find the official ones too pricey for their parts.

They also provided pressure tubes to send things from one cell to another. While being only simple pressure tubes like any other, they’re acclaimed because they’re apparently easier to use than the other 3rd party pressure tubes that can send things to the outside. Why? Because, oh yes, it’s already in everybody’s cells (of that prison, outside is dangerous) and the other tubes have conveniently been placed somewhere harder to reach.

Another thing they have are those windows that can view the outside. While being maybe less clear than some other windows, they are ok. But if you ever consider going mobile to enjoy that safari with lions, then man do they love bringing you back to that window.

Ok so I’m done with the prison metaphor, or I won’t sleep.

The ecosystem is probably the major reason Apple is still there. You buy from there because you’re a prisoner (I guess I’m not finished with the metaphor after all).

This is a prime example of RMS’s quote “If the user doesn’t control the software, the software controls the user”

AirDrop isn’t some sort of revolutionary tech, it uses a well established protocol that other implementations use to do the same thing. They could really easily open source the protocol and allow everyone to profit, but they won’t, because that would mean you don’t have to buy Apple.

That’s why I militate for open source, decentralized and standardized protocols. Because that way, we control the software, and it doesn’t control us.

All the things I said aren’t so bad because when you buy Apple, you make a choice. But I don’t have a choice, I am typing this on an Apple device, because I need to (I won’t elaborate on that) because of that fucking *ecosystem*

I am really tired, so half the sentences probably don’t make sense, but thanks for coming to my stupid TED talk.12 -

That's actually something that happened fairly recently.. just that I didn't have the energy left at the time to write it down. That, or I got my ass too drunk to properly write anything.. not sure actually.

So on paper I'm unemployed, but I do spend some time still on pretty much voluntary work for HackingVision, along with a handful of other people.

At the time, we were just doing the usual chit-chat in the admin channel, me still sick in my bed (actually that means that I wasn't drunk but really tired for once.. amazing!) and catching up to what happened, but unable to do any useful work in this sick state. So, tablet, typing on glass, right. I didn't have any keyboard attached at the time.

One of the staff members (a wanketeer from India) apparently had an assignment in a few hours for which he needed to write a server application in Java. Now, performance issues aside, I figured.. well I've got quite a bit of experience with servers, as well as some with client-server protocols. So I got thinking.. mail servers, way too overengineered. Web servers.. well that could work, I've done some basic netcat webservers that just sent an HTTP 200 OK and the file, those worked fine.. although super basic of course. And then there's IRC, which I've actually talked to an InspIRCd server through telnet before (which by the way is pretty much the only thing that telnet is still useful for, something that was never its purpose, lol) and realized that that protocol is actually quite easy to develop around. That's why I like it so much over modern chat protocols like XMPP, MQTT and whatnot. So I recommended that he'd write a little IRC server in Java. Or even just a chatbot like I attempted to at the time, considering that that's - with a stretch of course - a sort-of server too.

His fucking response however, so goddamn fucking infuriating. "If the protocol is so easy, then please write me down how to implement it in Java."

Essentially do his fucking work for him. I don't know Java, but as a fucking HackingVision admin, YOU SHOULD FUCKING KNOW THAT HACKERS CAN'T STAND LAZY CUNTS THAT CAN'T EVEN BE ASSED TO GOOGLE SHIT!!! If I wanted to deal with cunts like that, I'd have opened the page inbox with all its Fb h4xx0ring questions, not the fucking admin chat!

And type it on a goddamn fucking piece of glass, while fucking sick?! Get your ass fucked by a bobs and vegana horny fuck from the untouchable caste, because that's where you fucking belong for expecting THAT from me, you fucking bhenchod.

But at least I didn't get my ass enraged like that to say that to him in the admin chat. Although that probably wouldn't have been a bad thing, to get his feet right back on the ground again.1 -

The IT head of my client's company : You need to explain to me what exactly you are doing in the backend and how the IoT devices are connected to the server. And the security protocol too.

Me : But it's already there in the design documents.

IT Head : I know, but I need more details as I need to give a presentation.

Me : (That's the point! You want me to be your teacher!) Okay. I will try.

IT Head : You have to.

Me : (Fuck you) Well, there are four separate servers - cache, db, socket and web. Each of the servers can be configured in a distributed way. You can put some load balancers and connect multiple servers of the same type to a particular load balancer. The database and cache servers need to be replicated. The socket and http servers will subscribe to the cache server's updates. The IoT devices will be connected to the socket server via SSL and will publish the updates to a particular topic. The socket server will update the cache server and the http servers which are subscribed to that channel will receive the update notification. Then the http server will forward the data to the web portals via websocket. The websockets will also work over SSL to provide security. The cache server also updates the database after a fixed interval.

This is how it works.

IT Head : Can you please give the presentation?

Me : (Fuck you asshole! Now die thinking about this architecture) Nope. I am really busy.11 -

Modern web frontend is giving me a huge headache...

Gazillion frameworks, CSS preprocessors, transpilers, task runners, webpack, state management, templating, RxJS, vector graphics, async, promises, ES6, ES7, Babel, uglifying, minifying, beautifying, modules, dependency injection....

All this for programming apps that happen to run inside browsers on a protocol which was designed to display simple text pages...

This is insanity. It cannot go on like this for long. I pray for webasm and elm to rescue me from this chaos.

I work now as a fullstack dev as my first job but my next job is definitely going to be backend/native stuff for desktop or mobile. It seems those areas are much less crazy.10 -

At college (UK) and taking a general IT course (databases, programming, networking) a friend suggested "We should make our own network protocol." (The only language we had covered at the time was Visual Basic)4

-

My typical morning Teams exchange:

Newb: GM (requesting connection)

Me: GM (connection established)

Newb: How r u? (requesting headers)

Me: Good (headers sent)

Newb: You free? (ready for comms?)

Me: Sure (comms ready)

…

Feels like a bad internet protocol.8 -

*working on a programming assignment for a graduate-level course*

"We will provide you code that implements the protocol in the server. You do not need to touch this code."

*provided file has syntax errors, including a block comment which doesn't close before EOF*1 -

Arrived at office then almost immediately the boss, who's also a developer, tells me he changed something on the protocol/api.

Me thinking: u just broke the api without thinking about the consequences but hey... Ur the boss...

Later, he says: look, our app crashes!

Me: obviously...

:/ what the f**k was he thinking... :/9 -

It has been bugging the shit out of me lately... the sheer number of shit-tier "programmers" that have been climbing out of the woodwork the last few years.

I'm not trying to come across as elitist or "holier than thou", but it's getting ridiculous and annoying. Even on here, you have people who "only do frontend development" or some other lame ass shit-stain of an excuse.

When I first started learning programming (PHP was my first language), it wasn't because I wanted to be a programmer. I used to be a member (my account is still there, in fact) of "HackThisSite", back when I was about 12 years old. After hanging out long enough, I got the hint that the best hackers are, in essence, programmers.

Want to learn how to do SQL injection? Learn SQL - write a program that uses an SQL database, and ask yourself how you would exploit your own software.
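That exercise fits in a few lines. Here's a minimal sketch with Python's built-in `sqlite3`; the table, the credentials and both function names are made up for illustration.

```python
import sqlite3

conn = sqlite3.connect(":memory:")
conn.execute("CREATE TABLE users (name TEXT, password TEXT)")
conn.execute("INSERT INTO users VALUES ('alice', 's3cret')")

def login_vulnerable(name, password):
    # User input is pasted straight into the SQL text.
    query = (
        "SELECT COUNT(*) FROM users "
        f"WHERE name = '{name}' AND password = '{password}'"
    )
    return conn.execute(query).fetchone()[0] > 0

def login_safe(name, password):
    # Placeholders keep the input as data, never as SQL.
    query = "SELECT COUNT(*) FROM users WHERE name = ? AND password = ?"
    return conn.execute(query, (name, password)).fetchone()[0] > 0

payload = "' OR '1'='1"   # closes the string, then adds an always-true clause

print(login_vulnerable("alice", payload))  # True: logged in without the password
print(login_safe("alice", payload))        # False: payload is just a wrong password
```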

Want to reverse engineer the network protocol of some proprietary software? Learn TCP/IP - write a TCP/IP packet filter.

Back then, a programmer and a hacker were very much one and the same. Nowadays, some kid can download Python, write a "hello, world" program and they're halfway to freelancing or whatever.

It's rare to find a programmer - a REAL programmer, one who knows the systems he develops for better than the back of his hand.

These days, I find people want the instant gratification that these simpler languages provide. You don't need to understand how virtual memory works, hell many people don't even really understand C/C++ pointers - and that's BASIC SHIT right there.

Put another way, would you want to take your car to a brake mechanic that doesn't understand how brakes work? I sure as hell wouldn't.

Watching these "programmers" out there who don't have a fucking clue how the code they write does what it does, is like watching a grown man walk around with a kid's toolbox full of plastic toys calling himself a mechanic. (I like cars, ok?!)

*sigh*

Python, AngularJS, Bootstrap, etc. They're all tools and they have their merits. But god fucking dammit, they're not the ONLY damn tools that matter. Stop making excuses *not* to learn something, Mr."IOnlyDoFrontEnd".

Coding ain't Lego's, fuckers.36 -

I've optimised so many things in my time I can't remember most of them.

Most recently, something had to be the equivalent of `"literal" LIKE column` with a million rows to compare. It would take around a second on average for each literal to be looked up, for a service that needs to be high load and low latency. This isn't an easy case to optimise, many people would consider it impossible.

It took me a couple of hours to reverse engineer the data and write a few hundred line implementation that would look it up in 1ms on average, with the worst possible case being very rare and not too distant from this.

In another case there was a lookup of arbitrary time spans that most people would not bother to cache because the input parameters are too short lived and variable to make a difference. I replaced the 50000+ line application acting as a middle man between the application and database with 500 lines of code that did the lookup faster and was able to implement a reasonable caching strategy. This dropped resource consumption by at least a factor of ten. Misses were cheaper and it was able to cache most cases. It also involved modifying the client library in C to stop it unnecessarily wrapping primitives in objects for the high level language, which was causing it to consume excessive amounts of memory when processing huge data streams.

Another system would download a huge data set for every point of sale constantly, then parse and apply it. It had to reflect changes quickly but would download the whole dataset each time, containing hundreds of thousands of rows. I whipped up a system so that a single server (barring redundancy) would download it in a loop, parse it using C which was much faster than the traditional interpreted language, then use a custom data differential format, TCP data streaming protocol, binary serialisation and LZMA compression to pipe it down to points of sale. This protocol also used versioning for catchup and differential combination for additional reduction in size. It went from being 30 seconds to a few minutes behind to being able to keep up to within a second of changes. It was also using so much bandwidth that it would reach the limit on ADSL connections then get throttled. I looked at the traffic stats after and it dropped from dozens of terabytes a month to around a gigabyte or so a month for several hundred machines. From the drop in the graphs you'd think all the machines had been turned off. It could now happily run over GPRS or 56K.
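For anyone wondering what "differential format plus versioning plus LZMA" might look like, here's a rough sketch. It is not the system described above: JSON stands in for the binary serialisation, the TCP streaming part is left out, and every name and data value is hypothetical.

```python
import json
import lzma

def make_delta(old, new):
    """Rows to add or change, plus keys to drop, to turn `old` into `new`."""
    return {
        "upsert": {k: v for k, v in new.items() if k not in old or old[k] != v},
        "delete": [k for k in old if k not in new],
    }

def apply_delta(data, delta):
    result = dict(data)
    result.update(delta["upsert"])
    for key in delta["delete"]:
        result.pop(key, None)
    return result

def encode(delta, from_version, to_version):
    # Version numbers let a client that fell behind ask for the right deltas.
    payload = {"from": from_version, "to": to_version, "delta": delta}
    return lzma.compress(json.dumps(payload, sort_keys=True).encode("utf-8"))

def decode(blob):
    return json.loads(lzma.decompress(blob))

# Hypothetical price lists, keyed by product code.
v1 = {"sku1": 100, "sku2": 250, "sku3": 75}
v2 = {"sku1": 100, "sku2": 260, "sku4": 10}

blob = encode(make_delta(v1, v2), from_version=1, to_version=2)
message = decode(blob)

# A point of sale holding version 1 applies the delta and lands on version 2.
assert apply_delta(v1, message["delta"]) == v2
print(message["delta"])
```

With hundreds of thousands of rows and only a handful changing per update, the delta is tiny compared to the full dataset, which is where the bulk of the bandwidth saving would come from; compression only helps on top of that.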

I was working on a project with a lot of data and noticed these huge tables and horrible queries. The tables were all the results of queries. Someone wrote terrible SQL then to optimise it ran it in the background with all possible variable values then store the results of joins and aggregates into new tables. On top of those tables they wrote more SQL. I wrote some new queries and query generation that wiped out thousands of lines of code immediately and operated on the original tables taking things down from 30GB and rapidly climbing to a couple GB.

Another time a piece of mathematics had to generate all possible permutations and the existing solution was factorial. I worked out how to optimise it to run in n*n which believe it or not made the world of difference. Went from hardly handling anything to handling anything thrown at it. It was nice trying to get people to "freeze the system now".

I built my own frontend systems (admittedly rushed) that do what angular/react/vue aim for but with higher (maximum) performance, including an in-memory database to back the UI that had layered event driven indexes and could handle referential integrity (overlay on the database only revealing items with valid integrity) or reordering and reposition events very rapidly using a custom AVL tree. You could layer indexes over it (data inheritance) that could be partial and dynamic.

So many times have I optimised things on automatic just cleaning up code normally. Hundreds, thousands of optimisations. It's what makes my clock tick.4 -

I opened a post starting with a "NO TOFU" logo and I was wondering what relationship existed between the SSH protocol and anti-vegan people.

After some paragraphs it explained that TOFU stands for Trust On First Use (a security anti-pattern). 7

7 -

Clicking "share" on directory in Windows Explorer, digging through config panel, fidgeting with network discovery options, toggling password protection, digging through account management, jumping over a chair 3 times to channel my inner Bill Gates, checking directory permissions, sacrificing 7 virgin unicorns, go into lusrmgr.msc, curse various gods, install CIFS1.0 protocol, reboot computer, disable encryption, checking registry, trying to summon Steve Ballmer using the blood of a bald goat and sweat-scented candles... 5 hours.

Install Ubuntu on spare SSD, mount Windows NTFS drive, start SMB daemon and set up samba users... 15 minutes.10 -

!dev - cybersecurity related.

This is a semi hypothetical situation. I walked into this ad today and I know I'd have a conversation like this about this ad but I didn't this time, I had convo's like this, though.

*le me walking through the city centre with a friend*

*advertisement about a hearing aid which can be updated through remote connection (satellite according to the ad) pops up on screen*

Friend: Ohh that looks usefu.....

Me: Oh damn, what protocol would that use?

Does it use an encrypted connection?

How'd the receiving end parse the incoming data?

What kinda authentication might the receiving end use?

Friend: wha..........

Me: What system would the hearing aid have?

Would it be easy to gain RCE (Remote Code Execution) to that system through the satellite connection and is this managed centrally?

Could you do mitm's maybe?

What data encoding would the transmissions/applications use?

Friend: nevermind.... ._________.

Cybersecurity mindset much...!11 -

My friend, Gavin, an air steward (a job that he had done for decades), told me about an incident at work. He said that (shockingly to me) passengers occasionally die on a flight (particularly long-haul), just as a matter of course. This can be because people sometimes travel to visit loved ones BECAUSE they are dying, people sometimes find travelling itself stressful (so it can exacerbate an existing medical condition), or simply that, if you took a large number of people and shut them up in a space together for some considerable time, some of them would pop off through sheer statistical probability. Cabin crew are, apparently, fully trained to deal with this eventuality in a calm, almost routine manner.

This particular flight, Gavin was working with another gay man: Peter, who was actually a VERY funny personality. Camp, extravagant and loud, Peter really lit up the place. But naturally, when the very elderly male passenger in seat 38b died peacefully in his sleep halfway across the Atlantic, Peter acted (like the entire crew) with decorum and dignity. As per the protocol, all the lights in the cabin were dimmed. A hush fell over the passengers (Gavin told me that, although no announcement is ever made, the other passengers nearly always instinctively know what's happened, with the news spreading via the medium of hushed whispers and nudges). Then, as per standing instructions, two of the crew carefully lifted the deceased out of his seat and gently carried him to the crew station where he was laid down on a bed for the remainder of the flight.

After the late gentleman disappeared behind the discreetly drawn curtain, you could have heard a pin drop. There was a demure pause during which, slowly, the lights went back up.

Suddenly Peter's cheery face appeared, poking through the gap in the drapes. He looked around, blinking brightly with curiosity at the seated passengers, and said, in a voice that echoed around the whole cabin:

"SO! Anyone else have the fish?"

He narrowly avoided getting sacked.8 -

During the last couple of days, I got to hear quite a horrible story...

So we start at the beginning, where I have a dev-related chat with some other strangers on the internet. One of them was working on a custom protocol implementation with an API to go with it, written in Python. There were plans to migrate the codebase to another language like Rust in the long term. So the project seemed to be going well.

Another guy, and the main subject of this, chimed in on several of our messages, and long story short - he uses Express.js for everything he does, and he doesn't know jack shit about what he's talking about. Yet he still does.

Later we got the delight to hear that he had beaten up his mother, and that she's now in the hospital because of it, with broken arms, hands, fingers and severe bleeding. Yet he has the audacity to complain about his sore throat, caused by all his shouting. He refuses to seek any help, or to take medicines he's been given. This has been going on for several days now.

As much as I hate to even think about it, these too are "developers". I too have skeletons in my closet, but goddamn.. that these people even exist. The very idea that you may be talking to them every day. It disgusts me.16 -

Sorry for breaking the protocol, but I'm not here to rant. I want to thank all the ranters (is that a thing now?) for recommending Mr. Robot (the TV series). Just watched the first episode and I can see myself watching it all day. Be back tomorrow.6

-

The new mobile app codebase I'm working with was clearly written by someone who just read a book on generics and encapsulation.

I need to pull out 2 screens into a separate library to have it shared around. The 1 networking request used is wrapped up in a `WebServiceFactory` and `WebServiceObjectMapper`, used by a `NetworkingManager` which exposes a generic `request` method taking in a `TopLevelResponse` type (which has imported every model) which uses a factory method to get the real response type.

This is needed by the `Router` which takes a generic `Action` which they've subclassed for each and every use case needing server communication.

Then the networking request function is part of a chain of 4 near identical functions spread across 4 different files, each one doing a tiny bit more than the last and casting everything to a new god damn protocol, because fuck concrete types.

It's not even used in that many places, there's like 6 networking calls. Why are people so god damn fucking stupid and insist on over engineering the shit out of their apps. I'm fed the fuck up with these useless skidmarks.3 -

In my previous rant about IPv6 (https://devrant.com/rants/2184688 if you're interested) I got a lot of very valuable insights in the comments and I figured that I might as well summarize what I've learned from them.

So, there's 128 bits of IP space to go around in IPv6, where 64 bits are assigned to the internet, and 64 bits to the private network of end users. Private as in, behind a router of some kind, equivalent to the bogon address spaces in IPv4. Which is nice, it ensures that everyone has the same address space to play with.. but it should've been (in my opinion) differently assigned. The internet is orders of magnitude larger than private networks. Most SOHO networks only have a handful of devices in them that need addressing. The internet on the other hand has, well, billions of devices in it. As mentioned before I doubt that this total number will be more than a multiple of the total world population. Not many people or companies use more than a few public IP addresses (again, what's inside the SOHO networks is separate from that). Consider this the equivalent of the amount of public IP's you currently control. In my case that would be 4, one for my home network and 3 for the internet-facing servers I own.
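To put numbers on that 64/64 split, here's a quick sketch with Python's `ipaddress` module. The address is from the 2001:db8::/32 documentation range, picked purely for illustration.

```python
import ipaddress

addr = ipaddress.IPv6Address("2001:db8:1234:5678:abcd:ef01:2345:6789")
value = int(addr)                        # the full 128-bit number

network_half = value >> 64               # upper 64 bits: routed on the internet
host_half = value & ((1 << 64) - 1)      # lower 64 bits: inside the end user's network

print(f"{network_half:016x}")            # 20010db812345678
print(f"{host_half:016x}")               # abcdef0123456789

# Every single /64 holds 2**64 addresses, no matter how few devices live there.
subnet = ipaddress.ip_network("2001:db8:1234:5678::/64")
print(subnet.num_addresses)              # 18446744073709551616
```

It also shows the hexadecimal point made further down: each hex digit is exactly 4 bits, so cutting the address in half at bit 64 is just cutting the hex string in half.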

There's various ways in which overall network complexity is reduced in IPv6. This includes IPSec which is now part of the protocol suite and thus no longer an extension. Standardizing this is a good thing, and honestly I'm surprised that this wasn't the case before.

Many people seem to oppose the way IPv6 is presented, hexadecimal is not something many people use every day. Personally I've grown quite fond of the decimal representation of IPv4. Then again, there is a binary conversion involved in classless IPv4. Hexadecimal makes this conversion easier.

There seems to be opposition to memorizing IPv6 addresses, for which DNS can be used. I agree, I use this for my IPv4 network already. Makes life easier when you can just address devices by a domain name. For any developers out there with no experience with administration that think that this is bullshit - imagine having to remember the IP address of Facebook, Google, Stack Overflow and every other website you visit. Add to the list however many devices you want to be present in the imaginary network. For me right now that's between 20 and 30 hosts, and gradually increasing. Scalability can be a bitch.

Any other things.. Oh yeah. The average amount of devices in a SOHO network is not quite 1 anymore - there are currently about half a dozen devices in a home network that need to be addressed. This number increases as more devices become smart devices. That said of course, it's nowhere close to needing 64 bits and will likely never need it. Again, for any devs that think that this is bullshit - prove me wrong. I happen to know in one particular instance that they have centralized all their resources into a single PC. This seems to be common with developers and I think it's normal. But it also reduces the chances to see what networks with many devices in it are like. Again, scalability can be a bitch.

Thanks a lot everyone for your comments on the matter, I've learned a lot and really appreciate it. Do check out the previous rant and particularly the comments on it if you're interested. See ya!

-

This brings joy

https://reddit.com/r/technology/...

Bypass paywall:

A series of scandals and missteps has damaged Facebook's reputation so much that the company is being forced to pay ever larger compensation to hire and retain workers, according to industry recruiters, former employees, and data reviewed by Insider.

The company has always competed aggressively for talent, and the tech job market in general is on fire. But a deteriorating public image means the social-media giant now has to outbid other major tech companies, such as Google.

"One thing Facebook can still do is pay a lot more," said Jose Guardado, an experienced tech recruiter and the founder of Build Talent. "They can easily throw more compensation at people they currently have, and cover any brand tax and pay a little more to get people to come on."

Silicon Valley companies thrive or wither based on their ability to recruit the smartest employees. Without a steady influx of engineers and other technical experts, new products and important updates take longer to release, and rivals can quickly get ahead. Then there's the financial cost: In 2022, Facebook projected, expenses could jump as high as $97 billion from $70 billion this year, in large part because of "investments in technical and product talent." A company spokesperson did not respond to a request for comment.

Other companies, and even whole industries, have had to increase compensation to overcome hiring and retention problems caused by scandal and shifting public perceptions, said Alan Johnson, a managing director at the compensation consulting firm Johnson Associates. "If you're an oil company, if you make cigarettes, if you're in cattle or Wells Fargo, sure," he said.

How well this is working for Facebook is debatable as the company has more than 4,300 open jobs and has seen decreasing rates of acceptance on job offers, according to internal documents reported by Protocol. It's also seen dozens of high-level executives leave this year, and recruiters say employees are now more open to considering jobs elsewhere. Facebook used to be a place that people rarely left, given its reach, pay, and perks.

A former Oculus engineer who left last year said Facebook could now be seen as a "black mark" on someone's career. A hardware engineer who exited in 2020 shared similar sentiments: They said they quit because of concerns about misinformation on the platform and the effect of that on children. Another employee said their department was dissolved in late 2019 by Facebook and, although the company offered another position that paid more, they left last year anyway for a different industry. The workers, and many other people who spoke with Insider for this story, asked not to be identified because of the sensitive nature of the topic.

For those who stick around and people who take new jobs at Facebook, base pay and stock grants have gone up a "sizable" amount in the past year, said Zuhayeer Musa, cofounder of Levels.fyi, a platform that collects pay data based on verified offers and compensation disclosures.

During the second quarter of 2021, the median compensation for an upper-mid-level engineer, an E5, was $400,000, up from $380,000 a year earlier. For an E4, the median pay jumped to $276,000 from $256,000 in the same period. For both groups, the increases were double the gains between 2018 and 2019, Levels.fyi data showed.

Musa, whose firm also offers pay-negotiation coaching, said previously that the total compensation ceiling for an E5 engineer at Facebook was $450,000. "We recently had a client get up to $510,000 for E5," he added.

Equity awards at the company are getting more generous, too. At the group-director and VP levels, Facebook staff are getting $3 million to $6 million in restricted stock units each year, another tech recruiter said. Directors and managers are getting on average $1 million a year. In engineering, a high-level engineer is getting $600,000 in stock and a $75,000 bonus, while even an entry-level engineer is getting $50,000 to $100,000 in stock and a $20,000 to $50,000 bonus, Levels.fyi data indicated.

Even compared to Google, Facebook's stock awards are generous and increasing, Levels.fyi data shows. While base pay is about the same, Facebook offers more in stock grants, significantly increasing total compensation. At Google, entry-level equity awards range from $20,000 to $38,000, while Facebook grants are worth $40,000 to $60,000. Sign-on bonuses at Facebook are often about $50,000, while Google gives about $20,000, according to the data.

"It's not normal, but it's consistent with the craziness that's happening in the market right now," said Aalap Shah, a managing director focused on the tech industry at the consulting firm Pearl Meyer.

-

In the before time (late 90s) I worked for a company that worked for a company that worked for a company that provided software engineering services for NRC regulatory compliance. Fallout radius simulation, security access and checks, operational reporting, that sort of thing. Given that, I spent a lot of time around/at/in nuclear reactors.

One day, we're working on this system that uses RFID (before it was cool) and various physical sensors to do a few things, one of which is to determine if people exist at the intersection of hazardous particles, gasses, etc.

This also happens to be a system which, at that moment, is reporting hazardous conditions and people at the top of the outer containment shell. We know this is probably a red herring or faulty sensor because no one is present in the system vs the access logs and cameras, but we have to check anyways. A few building engineers climb the ladders up there and find that nothing is really visibly wrong and we have an all clear. They did not however know how to check the sensor.

Enter me, the only person from our firm on site that day. So in the next few minutes I am also in a monkey suit (bc protocol), climbing a 150 foot ladder that leads to another 150 foot ladder, all 110lbs of me + a 30lb diag "laptop" slung over my shoulder by a strap. At the top, I walk about a quarter of the way out, open the casing on the sensor module and find that someone had hooked up the line feed, but not the activity connection wire, so it was sending a false signal. I open the diag laptop, plug it into the unit, write a simple firmware extension to intermediate the condition, flash, reload. I verify the error has cleared and an appropriate message was sent to the diagnostic system over the radio, run through an error test cycle, radio again, close it up. Once I'm back on the ground, sweating my ass off, I also send a not-at-all passive-aggressive email letting the boss know that the next shift will need to push the update to the other 600 air-gapped, unidirectional sensors around the facility.

-

A recruiter emailed me.

And called me (and left a voicemail).

AND texted me.

About a job opportunity in California (I live in Texas).

That requires experience writing performance critical and thread-safe code in a large multi-threaded codebase (I work primarily in JavaScript/TypeScript ecosystem, fat chance of that).

Responsibilities listed as: Focus on Supercharger Open Charge Point Protocol (OCPP) software features. I don’t even know what the fuck that means.

Opportunity is for a 3 month contract.

Why are you so desperate, lady?

-

Another incident which made a Security Researcher cry

[ NOTE : Check profile to read older incidents ]

-----------------------------------------------------------

So this all started when I was at home (bunked the office that day xD) and I got a call from a..... Let's call him Fella, as I always do. So here we go. And yeah, our Fella is a SysAdmin.

-----------------------------------------------------------

Fella - Hey man sup!

Me - Good going mate, bunked the office, weather's nice, gonna spend time with my girl today. So what's goin' on?

Fella - Bruh my network sharing folders ain't working no more.

Me - Did you change or modify anything?

Fella - Nope

Me - Okay, gimme your login creds, lemme check.

Fella - Check your inbox *texts me the credentials*

*I logged in and saw that the server runs Windows Server 2008 R2. I checked the event logs, everything looked fine, and then all of a sudden I found something fucking embarrassing: this wise man had disabled the SMB service*

Me - Did you disable the SMB service?

Fella - Yeah

Me - You know what it does?

Fella - Yeah it's a protocol, I turned it off to protect the server from WannaCry.

Me - Fuckerrrr!!!!! Asshole dumbass you fuckin piece of Dodo's shit!! SMB is the service responsible for files and network sharing!!!

Fella - But....I just wanted protection

Me - 😭😭😭

*A long conversation follows, with a lot more specially crafted words like the ones I'd already used, to bring my frustration down*

Fella - Okay I'm turning it on.

Me - Go on....... Asshole

Fella - It worked! Thanks a lot bro

Me - Just leave me and my soul away from evil and hang up.

*Now the question is, who the hell gives them the post of SysAdmin? While pondering this, I almost thought of committing suicide, but then my girl came with coffee and my rubber duck*

-

Google: buys Android

Makes tons of $ from Ads

Meanwhile 7 year old bugs

Are still not fixed

A bug reported in 2012: recently created files are not visible when using MTP.

Guess what? I still have this bug on my 2017 phone, like many other people.

Probably has something to do with file cache.

Because obviously 7 years is not enough to fix a stupid bug. Especially when Google is busy implementing all the other features nobody asked for except the marketing department

-

Physics class, groupwork

Me: *Writing a protocol in Markdown and LaTeX*

Partner: Are you currently using Excel?

Me: "No"

Partner: *yells* We need a new computer!

-

I am currently in a bit of a (well-deserved) lull at work, both of my projects are finishing up/ finished, so tomorrow should be pretty light, as the latter half of today was.

And I have really gotten interested in the HTTP protocol. It's so interesting learning how it all works under the hood.

So I think I'm going to be researching/ messing around with creating a C++ project that essentially implements cURL from the ground up, creating sockets, reading from them, parsing the HTTP responses... all that. I don't expect to actually get it done, but it should be an immense learning experience. I have a clear goal: implement this function:

std::string get(const std::string&);
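A rough sketch of what such a `get` could look like, under heavy assumptions: POSIX sockets only, plain `http://` URLs on port 80, no TLS, no redirects, and HTTP/1.0 so the server closes the connection and chunked encoding never comes up. The helpers `build_request` and `body_of` are invented for this illustration. This is a starting point, not how cURL does it.

```cpp
#include <netdb.h>        // getaddrinfo, freeaddrinfo
#include <sys/socket.h>   // socket, connect, send, recv
#include <unistd.h>       // close
#include <stdexcept>
#include <string>

// Hypothetical helper: formats a minimal HTTP/1.0 GET request.
std::string build_request(const std::string& host, const std::string& path) {
    return "GET " + path + " HTTP/1.0\r\nHost: " + host +
           "\r\nConnection: close\r\n\r\n";
}

// Hypothetical helper: returns what follows the blank line ending the headers.
std::string body_of(const std::string& response) {
    const std::string::size_type pos = response.find("\r\n\r\n");
    return pos == std::string::npos ? std::string() : response.substr(pos + 4);
}

// Sketch of get(): handles only "http://host[/path]"; throws on anything else.
std::string get(const std::string& url) {
    const std::string scheme = "http://";
    if (url.compare(0, scheme.size(), scheme) != 0)
        throw std::invalid_argument("only http:// URLs are handled here");
    const std::string::size_type slash = url.find('/', scheme.size());
    const std::string host = url.substr(
        scheme.size(),
        slash == std::string::npos ? std::string::npos : slash - scheme.size());
    const std::string path = slash == std::string::npos ? "/" : url.substr(slash);

    // Resolve the host name; try each returned address until one connects.
    addrinfo hints{};
    hints.ai_family = AF_UNSPEC;
    hints.ai_socktype = SOCK_STREAM;
    addrinfo* results = nullptr;
    if (getaddrinfo(host.c_str(), "80", &hints, &results) != 0)
        throw std::runtime_error("DNS lookup failed");
    int fd = -1;
    for (addrinfo* a = results; a != nullptr; a = a->ai_next) {
        fd = socket(a->ai_family, a->ai_socktype, a->ai_protocol);
        if (fd == -1) continue;
        if (connect(fd, a->ai_addr, a->ai_addrlen) == 0) break;
        close(fd);
        fd = -1;
    }
    freeaddrinfo(results);
    if (fd == -1) throw std::runtime_error("could not connect");

    // send() may write only part of the buffer, so loop until all is sent.
    const std::string request = build_request(host, path);
    std::string::size_type sent = 0;
    while (sent < request.size()) {
        const ssize_t n = send(fd, request.data() + sent, request.size() - sent, 0);
        if (n <= 0) { close(fd); throw std::runtime_error("send failed"); }
        sent += static_cast<std::string::size_type>(n);
    }

    // Read until the server closes the connection, then strip the headers.
    std::string response;
    char buf[4096];
    ssize_t n = 0;
    while ((n = recv(fd, buf, sizeof buf, 0)) > 0)
        response.append(buf, static_cast<std::string::size_type>(n));
    close(fd);
    return body_of(response);
}
```

Keeping request formatting and response splitting in pure functions means they can be checked without touching the network. Status codes, header parsing and HTTPS are deliberately left out.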

Once I'm able to just GET as simple as that, I know I have achieved my goal!

-

Boss: "We need this change implemented tomorrow"

Me: "No problem, it's completed"

Boss: "Wait, you didn't follow change protocol. You need to allow 5 business days for review and approval"

Me: ....

-

I used to work IT in an entertainment startup, and now I’m an iOS dev at a big entertainment company. Several people from my old company have been reaching out to eagerly tell me about their new app idea I just have to hear, asking me to help code their app— and have even hinted at me quitting my nice safe job to join their great new startup that doesn’t even exist yet.

I know this must happen to app devs all the time. What do you say?

How do you deal with telling these nice people who just don’t understand it doesn’t work that way, without crushing their dream? I have a coffee meeting planned to tell one of them “You should learn to code so you can make a proof of concept,” but I fear that won’t be received well.

What’s the standard protocol for telling people you won’t be able to code their magic app idea?

-

I asked my CS teacher why my institution's domain had only the www subdomain pointing to the webspace, but not also the second level domain itself. He then explained to me that www is the *protocol* on the internet and it's necessary for the website to be accessible, and that pointing the SLD to the webspace in addition therefore wouldn't work.

How could I ever take him seriously again? He's supposed to teach networking btw.

-

Apparently DELETE and... most of the HTTP verbs are disabled by default in IIS (ASP/ MVC/ Microsoft server software)

Am I wrong in saying that's fucking bullshit?!

Why make an HTTP serving environment with a massive array of tools to help you do everything you need in the web environment... And then DISABLE some of the web protocol??? What???

Not even the obscure verbs. DELETE. Is Microsoft the type of bitch to delete using a GET request?? I bet they send passwords as GET parameters.

-